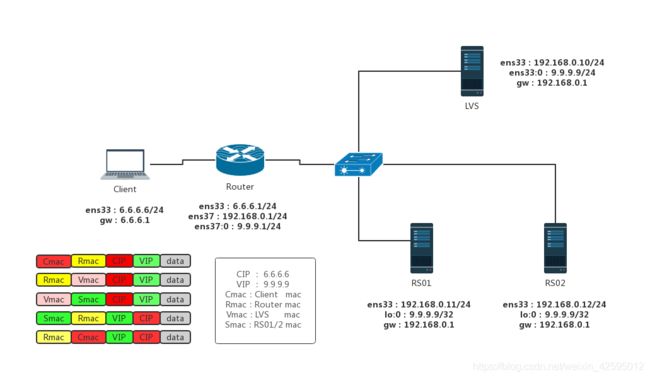

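LVS Cross-Subnet DR Mode Configuration

This experiment uses five VMware virtual machines. The ens33 NICs on Client and Router are set to bridged mode, and all other NICs use host-only mode. The IP address plan is as follows:

I. Network configuration files of each host

1. Client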

[root@Client ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens33

TYPE=Ethernet

BOOTPROTO=none

IPADDR=6.6.6.6

NETMASK=255.255.255.0

GATEWAY=6.6.6.1

NAME=ens33

DEVICE=ens33

ONBOOT=yes

[root@Client ~]# ifconfig

ens33: flags=4163 mtu 1500

inet 6.6.6.6 netmask 255.255.255.0 broadcast 6.6.6.255

inet6 fe80::20c:29ff:feec:ed4f prefixlen 64 scopeid 0x20

ether 00:0c:29:ec:ed:4f txqueuelen 1000 (Ethernet)

RX packets 4148 bytes 282249 (275.6 KiB)

RX errors 0 dropped 1 overruns 0 frame 0

TX packets 441 bytes 77912 (76.0 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73 mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10

loop txqueuelen 1000 (Local Loopback)

RX packets 968 bytes 77720 (75.8 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 968 bytes 77720 (75.8 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

2. Router

[root@Router ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens33

TYPE=Ethernet

BOOTPROTO=none

IPADDR=6.6.6.1

NETMASK=255.255.255.0

NAME=ens33

DEVICE=ens33

ONBOOT=yes

[root@Router ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens37

TYPE=Ethernet

BOOTPROTO=none

IPADDR=192.168.0.1

NETMASK=255.255.255.0

NAME=ens37

DEVICE=ens37

ONBOOT=yes

[root@Router ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens37:0

TYPE=Ethernet

BOOTPROTO=none

IPADDR=9.9.9.1

NETMASK=255.255.255.0

NAME=ens37:0

DEVICE=ens37:0

ONBOOT=yes

[root@Router ~]# ifconfig

ens33: flags=4163 mtu 1500

inet 6.6.6.1 netmask 255.255.255.0 broadcast 6.6.6.255

inet6 fe80::20c:29ff:fe49:d145 prefixlen 64 scopeid 0x20

ether 00:0c:29:49:d1:45 txqueuelen 1000 (Ethernet)

RX packets 2742 bytes 185080 (180.7 KiB)

RX errors 0 dropped 1 overruns 0 frame 0

TX packets 215 bytes 21219 (20.7 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

ens37: flags=4163 mtu 1500

inet 192.168.0.1 netmask 255.255.255.0 broadcast 192.168.0.255

inet6 fe80::20c:29ff:fe49:d14f prefixlen 64 scopeid 0x20

ether 00:0c:29:49:d1:4f txqueuelen 1000 (Ethernet)

RX packets 2 bytes 303 (303.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 31 bytes 2358 (2.3 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

ens37:0: flags=4163 mtu 1500

inet 9.9.9.1 netmask 255.255.255.0 broadcast 9.9.9.255

ether 00:0c:29:49:d1:4f txqueuelen 1000 (Ethernet)

lo: flags=73 mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10

loop txqueuelen 1000 (Local Loopback)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0
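The listings above do not show it, but the Router can only pass traffic between 6.6.6.0/24, 192.168.0.0/24 and 9.9.9.0/24 if the kernel's IPv4 forwarding switch is on. Below is a minimal sketch for checking that switch. The helper name `check_forwarding` is hypothetical and not part of the original setup.

```shell
#!/bin/sh
# Hypothetical helper: report whether IPv4 forwarding is enabled.
# $1 (optional) is the file to read; it defaults to the kernel's switch.
check_forwarding() {
    f="${1:-/proc/sys/net/ipv4/ip_forward}"
    if [ -r "$f" ] && [ "$(cat "$f")" = "1" ]; then
        echo "enabled"
    else
        echo "disabled"
    fi
}

check_forwarding

# If this prints "disabled" on the Router, forwarding can be switched on
# at runtime (as root) with:
#   sysctl -w net.ipv4.ip_forward=1
```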

3. LVS

[root@LVS ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens33

TYPE=Ethernet

BOOTPROTO=none

IPADDR=192.168.0.10

NETMASK=255.255.255.0

GATEWAY=192.168.0.1

NAME=ens33

DEVICE=ens33

ONBOOT=yes

[root@LVS ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens33:0

TYPE=Ethernet

BOOTPROTO=none

IPADDR=9.9.9.9

NETMASK=255.255.255.0

NAME=ens33:0

DEVICE=ens33:0

ONBOOT=yes

[root@LVS ~]# ifconfig

ens33: flags=4163 mtu 1500

inet 192.168.0.10 netmask 255.255.255.0 broadcast 192.168.0.255

inet6 fe80::20c:29ff:feb8:7164 prefixlen 64 scopeid 0x20

ether 00:0c:29:b8:71:64 txqueuelen 1000 (Ethernet)

RX packets 182727 bytes 11437766 (10.9 MiB)

RX errors 0 dropped 64 overruns 0 frame 0

TX packets 10919 bytes 940289 (918.2 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

ens33:0: flags=4163 mtu 1500

inet 9.9.9.9 netmask 255.255.255.0 broadcast 9.9.9.255

ether 00:0c:29:b8:71:64 txqueuelen 1000 (Ethernet)

lo: flags=73 mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10

loop txqueuelen 1000 (Local Loopback)

RX packets 2683 bytes 217652 (212.5 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 2683 bytes 217652 (212.5 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

4. RS01

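Before configuring the VIP on the loopback interface of an RS, first change the kernel ARP parameters so that the RS neither announces the VIP in ARP broadcasts nor answers ARP requests for it.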
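The commands used to change these parameters are not shown. One possible way is a sysctl drop-in file. The sketch below only prints the four settings. The function name `rs_arp_sysctl` and the file name `90-lvs-rs.conf` are illustrative assumptions.

```shell
#!/bin/sh
# Hypothetical helper: print the ARP settings an RS needs in DR mode.
# arp_ignore=1   : answer ARP only if the target IP is on the receiving interface
# arp_announce=2 : always use the best local source address in ARP announcements
rs_arp_sysctl() {
    for iface in all lo; do
        printf 'net.ipv4.conf.%s.arp_ignore = 1\n' "$iface"
        printf 'net.ipv4.conf.%s.arp_announce = 2\n' "$iface"
    done
}

rs_arp_sysctl

# To apply persistently (as root; the file name is an assumption):
#   rs_arp_sysctl > /etc/sysctl.d/90-lvs-rs.conf && sysctl --system
```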

The resulting values are:

[root@RS01 ~]# cat /proc/sys/net/ipv4/conf/all/arp_ignore

1

[root@RS01 ~]# cat /proc/sys/net/ipv4/conf/lo/arp_ignore

1

[root@RS01 ~]# cat /proc/sys/net/ipv4/conf/all/arp_announce

2

[root@RS01 ~]# cat /proc/sys/net/ipv4/conf/lo/arp_announce

2

[root@RS01 ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens33

TYPE=Ethernet

BOOTPROTO=none

IPADDR=192.168.0.11

NETMASK=255.255.255.0

GATEWAY=192.168.0.1

NAME=ens33

DEVICE=ens33

ONBOOT=yes

[root@RS01 ~]# cat /etc/sysconfig/network-scripts/ifcfg-lo:0

DEVICE=lo:0

NAME=lo:0

IPADDR=9.9.9.9

NETMASK=255.255.255.255

ONBOOT=yes

[root@RS01 ~]# ifconfig

ens33: flags=4163 mtu 1500

inet 192.168.0.11 netmask 255.255.255.0 broadcast 192.168.0.255

inet6 fe80::20c:29ff:fe3a:5119 prefixlen 64 scopeid 0x20

ether 00:0c:29:3a:51:19 txqueuelen 1000 (Ethernet)

RX packets 4304 bytes 354474 (346.1 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 2859 bytes 407104 (397.5 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73 mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10

loop txqueuelen 1000 (Local Loopback)

RX packets 596 bytes 47240 (46.1 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 596 bytes 47240 (46.1 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo:0: flags=73 mtu 65536

inet 9.9.9.9 netmask 255.255.255.255

loop txqueuelen 1000 (Local Loopback)

5. RS02

[root@RS02 ~]# cat /etc/sysconfig/network-scripts/ifcfg-ens33

TYPE=Ethernet

BOOTPROTO=none

IPADDR=192.168.0.12

NETMASK=255.255.255.0

GATEWAY=192.168.0.1

NAME=ens33

DEVICE=ens33

ONBOOT=yes

[root@RS02 ~]# cat /etc/sysconfig/network-scripts/ifcfg-lo:0

DEVICE=lo:0

NAME=lo:0

IPADDR=9.9.9.9

NETMASK=255.255.255.255

ONBOOT=yes

[root@RS02 ~]# ifconfig

ens33: flags=4163 mtu 1500

inet 192.168.0.12 netmask 255.255.255.0 broadcast 192.168.0.255

inet6 fe80::20c:29ff:fedb:a51a prefixlen 64 scopeid 0x20

ether 00:0c:29:db:a5:1a txqueuelen 1000 (Ethernet)

RX packets 6266 bytes 514451 (502.3 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 3910 bytes 535357 (522.8 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73 mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10

loop txqueuelen 1000 (Local Loopback)

RX packets 1454 bytes 118913 (116.1 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 1454 bytes 118913 (116.1 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo:0: flags=73 mtu 65536

inet 9.9.9.9 netmask 255.255.255.255

loop txqueuelen 1000 (Local Loopback)
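The ifcfg-lo:0 files above make the VIP persistent. For a quick test, the same address could also be added at runtime with iproute2. This is a sketch under that assumption: the helper names `run` and `add_vip_to_lo` and the `DRY_RUN` switch are hypothetical, and by default the script only prints the command instead of executing it.

```shell
#!/bin/sh
# Print the command when DRY_RUN is 1 (the default), otherwise execute it.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Bind the VIP to the loopback interface with a /32 mask, labelled lo:0,
# mirroring IPADDR/NETMASK in ifcfg-lo:0.
add_vip_to_lo() {
    run ip addr add "$1/32" dev lo label lo:0
}

add_vip_to_lo 9.9.9.9
```

Running it as root with `DRY_RUN=0` would execute the command. The ARP parameters should already be in place before that.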

6. Network connectivity test

Ping the VIP (9.9.9.9) from the client:

[root@Client ~]# ping -c 3 9.9.9.9

PING 9.9.9.9 (9.9.9.9) 56(84) bytes of data.

64 bytes from 9.9.9.9: icmp_seq=1 ttl=64 time=3.57 ms

64 bytes from 9.9.9.9: icmp_seq=2 ttl=64 time=0.834 ms

64 bytes from 9.9.9.9: icmp_seq=3 ttl=64 time=0.839 ms

--- 9.9.9.9 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2012ms

rtt min/avg/max/mdev = 0.834/1.748/3.572/1.289 ms

Ping the client from an RS:

[root@RS01 ~]# ping -c 3 6.6.6.6

PING 6.6.6.6 (6.6.6.6) 56(84) bytes of data.

64 bytes from 6.6.6.6: icmp_seq=1 ttl=63 time=1.98 ms

64 bytes from 6.6.6.6: icmp_seq=2 ttl=63 time=1.25 ms

64 bytes from 6.6.6.6: icmp_seq=3 ttl=63 time=1.42 ms

--- 6.6.6.6 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2028ms

rtt min/avg/max/mdev = 1.258/1.555/1.980/0.311 ms

II. Install httpd on the RSs

[root@LVS ~]# curl 192.168.0.11

RS01

[root@LVS ~]# curl 192.168.0.12

RS02

III. Configure the LVS scheduling rules

1. Install ipvsadm

[root@LVS ~]# yum install ipvsadm -y

2. Configure the LVS rules

[root@LVS ~]# ipvsadm -A -t 9.9.9.9:80 -s rr

[root@LVS ~]# ipvsadm -a -t 9.9.9.9:80 -r 192.168.0.11:80 -g

[root@LVS ~]# ipvsadm -a -t 9.9.9.9:80 -r 192.168.0.12:80 -g

[root@LVS ~]# ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 9.9.9.9:80 rr

-> 192.168.0.11:80 Route 1 0 0

-> 192.168.0.12:80 Route 1 0 0

IV. LVS scheduling test

1. Access test from the LVS host

[root@LVS ~]# curl 9.9.9.9

^C

Result: no response for a long time.

2. Access test from the client

[root@Client ~]# curl 9.9.9.9

RS01

[root@Client ~]# curl 9.9.9.9

RS02

[root@Client ~]# curl 9.9.9.9

RS01

[root@Client ~]# curl 9.9.9.9

RS02

LVS scheduling succeeded and works correctly!
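With more than a handful of requests it is easier to count the responses per real server than to read them one by one. The sketch below tallies response bodies read from standard input. The function name `tally_backends` is hypothetical, and the sample input simply mirrors the four responses seen above.

```shell
#!/bin/sh
# Hypothetical helper: count how often each response body appears on stdin.
tally_backends() {
    awk '{ n[$0]++ } END { for (k in n) printf "%s %d\n", k, n[k] }' | sort
}

# Sample input mirroring the four curl responses above.
printf 'RS01\nRS02\nRS01\nRS02\n' | tally_backends

# Against the real VIP, run on the client:
#   for i in 1 2 3 4 5 6 7 8 9 10; do curl -s 9.9.9.9; done | tally_backends
```

With the rr scheduler and equal weights, the counts for the two real servers should differ by at most one.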

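V. How LVS works

In the scheduling test above, why did the request issued on the LVS host hang for so long?

LVS implements IP load balancing through the ipvs module, which is the core component of an LVS cluster. ipvs works in kernel space on the INPUT chain.

When a request for a cluster service arrives, it passes through the PREROUTING chain, is checked against the local routing table, and is sent to the INPUT chain. As the packet enters the netfilter INPUT chain, ipvs forcibly redirects it to the FORWARD chain according to the cluster service rules defined with ipvsadm, and forwards it to a back-end host that actually provides the service.

A request issued on the LVS host itself flows directly from the OUTPUT chain to the POSTROUTING chain and never passes through the INPUT chain. It is therefore never matched against the ipvs scheduling rules, which is why there is no response for a long time.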

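VI. Load the LVS rules automatically at boot

Write the ipvs rules to the /etc/sysconfig/ipvsadm file. After the server goes down and reboots, the ipvsadm service loads them automatically.

The default start and stop behavior of the ipvsadm service is as follows: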

[root@LVS ~]# cat /usr/lib/systemd/system/ipvsadm.service

[Unit]

Description=Initialise the Linux Virtual Server

After=syslog.target network.target

[Service]

Type=oneshot

ExecStart=/bin/bash -c "exec /sbin/ipvsadm-restore < /etc/sysconfig/ipvsadm"

ExecStop=/bin/bash -c "exec /sbin/ipvsadm-save -n > /etc/sysconfig/ipvsadm"

ExecStop=/sbin/ipvsadm -C

RemainAfterExit=yes

[Install]

WantedBy=multi-user.target

Save the current rules to the file:

[root@LVS ~]# ipvsadm-save -n > /etc/sysconfig/ipvsadm

Load the rules from the file:

[root@LVS ~]# ipvsadm-restore < /etc/sysconfig/ipvsadm
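The rules file can also be generated without first entering the rules by hand. The sketch below prints rules in the option-style format that `ipvsadm-save -n` is expected to write for this setup (DR mode, rr scheduler, weight 1). The function name `ipvs_rules` is hypothetical, and the exact format should be compared against real `ipvsadm-save -n` output before relying on it.

```shell
#!/bin/sh
# Hypothetical helper: print ipvsadm-restore input for one TCP virtual service.
# $1 = VIP:port, remaining arguments = real servers as IP:port.
ipvs_rules() {
    vip_port="$1"
    shift
    # Virtual service with round-robin scheduling.
    printf '%s -t %s -s rr\n' '-A' "$vip_port"
    # One real server per line, direct routing (-g), weight 1.
    for rs in "$@"; do
        printf '%s -t %s -r %s -g -w 1\n' '-a' "$vip_port" "$rs"
    done
}

ipvs_rules 9.9.9.9:80 192.168.0.11:80 192.168.0.12:80

# To install the file and have it loaded at boot (as root):
#   ipvs_rules 9.9.9.9:80 192.168.0.11:80 192.168.0.12:80 > /etc/sysconfig/ipvsadm
#   systemctl enable ipvsadm
```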