Introduction

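Our project recently needed to record system logs, and integrating ELK (Elasticsearch + Logstash + Kibana) with Spring Cloud turned out to be a good fit. So I set up the environment and wrote down the process to share here.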

Preparation

First, install Elasticsearch, Kibana and Logstash. The following articles of mine cover the installation:

1. Installing Elasticsearch + Kibana

2. Helm3 - Installing ElasticSearch with the IK analyzer

3. Installing Logstash

Logstash configuration

1. Add the dependency

<dependency>
    <groupId>net.logstash.logback</groupId>
    <artifactId>logstash-logback-encoder</artifactId>
    <version>7.4</version>
</dependency>

2. Add a logback-spring.xml file

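A configuration along the following lines goes in src/main/resources. It uses the TCP appender and the composite JSON encoder from logstash-logback-encoder; the appender names, the springProperty sources and the root level are reasonable defaults rather than requirements, and xxx.xxx.xxx.xxx:xxxx is the host and port of the Logstash TCP input:

<?xml version="1.0" encoding="UTF-8"?>
<configuration>
    <!-- Make the application name and active profile available to the JSON pattern (sources assumed) -->
    <springProperty scope="context" name="springAppName" source="spring.application.name"/>
    <springProperty scope="context" name="active" source="spring.profiles.active"/>

    <!-- Console output -->
    <appender name="CONSOLE" class="ch.qos.logback.core.ConsoleAppender">
        <encoder>
            <pattern>%d{HH:mm:ss.SSS} [%thread] %-5level %logger{36} - %msg%n</pattern>
        </encoder>
    </appender>

    <!-- Send each event to Logstash over TCP as one JSON document -->
    <appender name="LOGSTASH" class="net.logstash.logback.appender.LogstashTcpSocketAppender">
        <destination>xxx.xxx.xxx.xxx:xxxx</destination>
        <encoder class="net.logstash.logback.encoder.LoggingEventCompositeJsonEncoder">
            <providers>
                <!-- Writes @timestamp in UTC -->
                <timestamp>
                    <timeZone>UTC</timeZone>
                </timestamp>
                <!-- Custom fields for each event -->
                <pattern>
                    <pattern>
                        {
                        "active": "${active}",
                        "service": "${springAppName:-}",
                        "timestamp": "%date{ISO8601}",
                        "level": "%level",
                        "thread": "%thread",
                        "logger": "%logger",
                        "message": "%message",
                        "context": "%X"
                        }
                    </pattern>
                </pattern>
            </providers>
        </encoder>
    </appender>

    <!-- Appender names and the root level are adjustable -->
    <root level="INFO">
        <appender-ref ref="CONSOLE"/>
        <appender-ref ref="LOGSTASH"/>
    </root>
</configuration>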
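To make the JSON pattern more concrete: for every log event the encoder fills in the pattern and writes one JSON object, escaping string values so that messages which themselves contain quotes (such as the Nacos "new ips(1)" messages in the Logstash output later on) remain valid JSON. Below is a minimal sketch of that idea using only the JDK. LogEventJson, escape and toJsonLine are hypothetical names for illustration, not part of logstash-logback-encoder, which handles more cases (non-string values, Unicode output settings and so on).

```java
import java.util.LinkedHashMap;
import java.util.Map;

/**
 * Hypothetical illustration (not the logstash-logback-encoder API): renders a
 * flat map of string fields as a single-line JSON object, the shape the JSON
 * pattern in logback-spring.xml produces for each log event.
 */
final class LogEventJson {

    private LogEventJson() {
    }

    /** Escapes the characters that must not appear raw inside a JSON string. */
    static String escape(String value) {
        StringBuilder sb = new StringBuilder(value.length() + 8);
        for (int i = 0; i < value.length(); i++) {
            char c = value.charAt(i);
            switch (c) {
                case '"' -> sb.append("\\\"");
                case '\\' -> sb.append("\\\\");
                case '\n' -> sb.append("\\n");
                case '\r' -> sb.append("\\r");
                case '\t' -> sb.append("\\t");
                default -> {
                    if (c < 0x20) {
                        // Remaining control characters become a six-character unicode escape.
                        sb.append(String.format("\\u%04x", (int) c));
                    } else {
                        sb.append(c);
                    }
                }
            }
        }
        return sb.toString();
    }

    /** Builds one JSON object on one line; field order follows the map's iteration order. */
    static String toJsonLine(Map<String, String> fields) {
        StringBuilder sb = new StringBuilder("{");
        boolean first = true;
        for (Map.Entry<String, String> e : fields.entrySet()) {
            if (!first) {
                sb.append(',');
            }
            first = false;
            sb.append('"').append(escape(e.getKey())).append("\":\"")
              .append(escape(e.getValue())).append('"');
        }
        return sb.append('}').toString();
    }

    public static void main(String[] args) {
        Map<String, String> event = new LinkedHashMap<>();
        event.put("active", "dev");
        event.put("service", "gatewayserver");
        event.put("level", "INFO");
        event.put("message", "new ips(1) -> [{\"ip\":\"172.16.12.11\"}]");
        System.out.println(toJsonLine(event));
        // prints {"active":"dev","service":"gatewayserver","level":"INFO","message":"new ips(1) -> [{\"ip\":\"172.16.12.11\"}]"}
    }
}
```

A LinkedHashMap is used so that the fields come out in the same order as they are declared, mirroring the order in the pattern.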

3. Run the project and check the Logstash and Elasticsearch log output
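Before starting the services, it can help to confirm that the Logstash TCP input is reachable at all, independent of Spring. The TCP appender sends each event as one JSON document followed by a line separator, so a hand-written line sent over a plain socket should show up in the Logstash output in the same way. This is a minimal sketch that assumes the Logstash pipeline uses a tcp input with the json_lines codec (the Logstash pipeline configuration is not shown in this article); LogstashSmokeTest and sendLine are hypothetical names, and the host and port are whatever you put in destination.

```java
import java.io.IOException;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.nio.charset.StandardCharsets;

/**
 * Hypothetical smoke test for a Logstash TCP input (assumed to use the
 * json_lines codec): sends one JSON document terminated by a newline.
 */
final class LogstashSmokeTest {

    private LogstashSmokeTest() {
    }

    /** Connects, writes the JSON document plus a newline, flushes, and closes the socket. */
    static void sendLine(String host, int port, String json) {
        try (Socket socket = new Socket()) {
            // Fail fast if the address is unreachable (5-second connect timeout).
            socket.connect(new InetSocketAddress(host, port), 5_000);
            OutputStream out = socket.getOutputStream();
            out.write((json + "\n").getBytes(StandardCharsets.UTF_8));
            out.flush();
        } catch (IOException e) {
            throw new UncheckedIOException("Could not send event to " + host + ":" + port, e);
        }
    }

    public static void main(String[] args) {
        if (args.length != 2) {
            System.err.println("usage: LogstashSmokeTest <logstash-host> <tcp-port>");
            return;
        }
        sendLine(args[0], Integer.parseInt(args[1]),
                "{\"service\":\"smoke-test\",\"level\":\"INFO\",\"message\":\"hello logstash\"}");
    }
}
```

If the event appears in the Logstash logs, the network path and input are fine, and any remaining problem lies in the application's logback configuration.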

Use the following command to view the Logstash logs:

kubectl logs pod/logstash-0 -n logstash

Output:

{
    "level" => "INFO",
    "context" => "",
    "message" => "[9958260f-3313-4e5a-9506-24c140c7d6c1] Receive server push request, request = NotifySubscriberRequest, requestId = 35420",
    "thread" => "nacos-grpc-client-executor-47.97.208.153-30",
    "service" => "gatewayserver",
    "logger" => "com.alibaba.nacos.common.remote.client",
    "active" => "dev",
    "@timestamp" => 2023-09-27T06:50:25.552Z,
    "timestamp" => "2023-09-27 14:50:25,552",
    "@version" => "1",
    "type" => "syslog"
}
{
    "level" => "INFO",
    "context" => "",
    "message" => "[9958260f-3313-4e5a-9506-24c140c7d6c1] Ack server push request, request = NotifySubscriberRequest, requestId = 35420",
    "thread" => "nacos-grpc-client-executor-47.97.208.153-30",
    "service" => "gatewayserver",
    "logger" => "com.alibaba.nacos.common.remote.client",
    "active" => "dev",
    "@timestamp" => 2023-09-27T06:50:25.555Z,
    "timestamp" => "2023-09-27 14:50:25,555",
    "@version" => "1",
    "type" => "syslog"
}
{
    "level" => "INFO",
    "context" => "",
    "message" => "new ips(1) service: DEFAULT_GROUP@@gatewayserver -> [{\"ip\":\"172.16.12.11\",\"port\":7777,\"weight\":1.0,\"healthy\":true,\"enabled\":true,\"ephemeral\":true,\"clusterName\":\"DEFAULT\",\"serviceName\":\"DEFAULT_GROUP@@gatewayserver\",\"metadata\":{\"preserved.register.source\":\"SPRING_CLOUD\"},\"instanceHeartBeatInterval\":5000,\"instanceHeartBeatTimeOut\":15000,\"ipDeleteTimeout\":30000}]",
    "thread" => "nacos-grpc-client-executor-47.97.208.153-30",
    "service" => "gatewayserver",
    "logger" => "com.alibaba.nacos.client.naming",
    "active" => "dev",
    "@timestamp" => 2023-09-27T06:50:25.554Z,
    "timestamp" => "2023-09-27 14:50:25,554",
    "@version" => "1",
    "type" => "syslog"
}
{
    "level" => "INFO",
    "context" => "",
    "message" => "current ips:(1) service: DEFAULT_GROUP@@gatewayserver -> [{\"ip\":\"172.16.12.11\",\"port\":7777,\"weight\":1.0,\"healthy\":true,\"enabled\":true,\"ephemeral\":true,\"clusterName\":\"DEFAULT\",\"serviceName\":\"DEFAULT_GROUP@@gatewayserver\",\"metadata\":{\"preserved.register.source\":\"SPRING_CLOUD\"},\"instanceHeartBeatInterval\":5000,\"instanceHeartBeatTimeOut\":15000,\"ipDeleteTimeout\":30000}]",
    "thread" => "nacos-grpc-client-executor-47.97.208.153-30",
    "service" => "gatewayserver",
    "logger" => "com.alibaba.nacos.client.naming",
    "active" => "dev",
    "@timestamp" => 2023-09-27T06:50:25.554Z,
    "timestamp" => "2023-09-27 14:50:25,554",
    "@version" => "1",
    "type" => "syslog"
}

Elasticsearch logs:

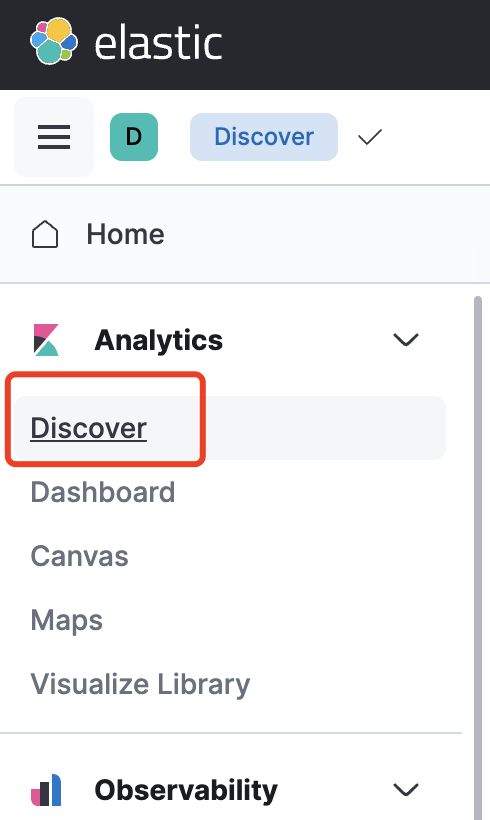

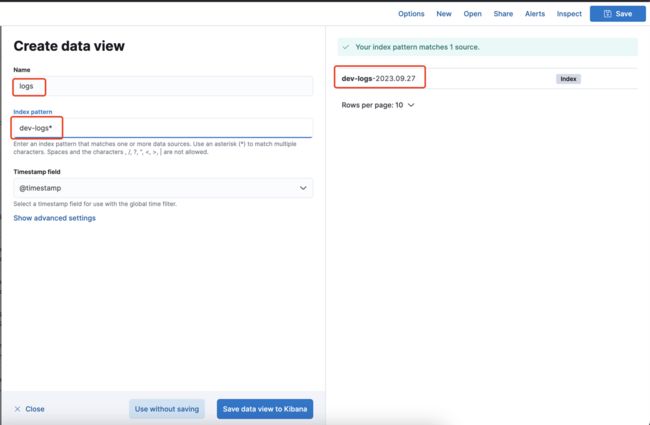
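One detail worth noting in the Logstash output above: @timestamp and timestamp describe the same moment but differ by eight hours (2023-09-27T06:50:25.552Z versus 2023-09-27 14:50:25,552). @timestamp comes from the timestamp provider and is shown in UTC, while timestamp is rendered by %date{ISO8601}, which uses logback's "yyyy-MM-dd HH:mm:ss,SSS" layout in the JVM's default time zone. The sketch below reproduces that conversion with java.time; the Asia/Shanghai zone is an assumption inferred from the +8 hour offset, and LogTimestamps is a hypothetical helper.

```java
import java.time.Instant;
import java.time.ZoneId;
import java.time.format.DateTimeFormatter;

/** Shows how the UTC @timestamp and the local %date{ISO8601} string describe the same instant. */
final class LogTimestamps {

    /** Same layout as logback's %date{ISO8601}: "yyyy-MM-dd HH:mm:ss,SSS". */
    static final DateTimeFormatter LOGBACK_ISO8601 =
            DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss,SSS");

    private LogTimestamps() {
    }

    /** Renders a UTC @timestamp value as the local-time string logback would write in the given zone. */
    static String toLocalIso8601(String utcTimestamp, ZoneId zone) {
        return Instant.parse(utcTimestamp).atZone(zone).format(LOGBACK_ISO8601);
    }

    public static void main(String[] args) {
        // Assumption: the services run with Asia/Shanghai (UTC+8) as the JVM default zone.
        System.out.println(toLocalIso8601("2023-09-27T06:50:25.552Z", ZoneId.of("Asia/Shanghai")));
        // prints 2023-09-27 14:50:25,552
    }
}
```

So the two fields are consistent; the gap is only the time zone used to render them.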

Next, we need to add an index pattern for these logs in Kibana.

Once it has been added, click through to view the logs.

This completes the setup of the ELK logging system.

Summary

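1. I ran into a problem while setting up Logstash. If you are interested, see my article "Helm3 installed Logstash and Logback is configured, but the logs are never recorded correctly: how to fix it?"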

2. For installing Elasticsearch and Kibana and integrating them with Spring Cloud, see the articles and steps listed in the Preparation section.