第P6周—好莱坞明星识别(2)

五、模型训练

# 训练循环

def train(dataloader, model, loss_fn, optimizer):

size = len(dataloader.dataset) # 训练集的大小

num_batches = len(dataloader) # 批次数目

train_loss, train_acc = 0, 0 # 初始化训练损失和正确率

for X, y in dataloader: # 获取图片及其标签

X, y = X.to(device), y.to(device)

# 计算预测误差

pred = model(X) # 网络输出

loss = loss_fn(pred, y) # 计算网络输出和真实值之间的差距,targets为真实值,计算二者差值即为损失

# 反向传播

optimizer.zero_grad() # grad属性归零

loss.backward() # 反向传播

optimizer.step() # 每一步自动更新

# 记录acc与loss

train_acc += (pred.argmax(1) == y).type(torch.float).sum().item()

train_loss += loss.item()

train_acc /= size

train_loss /= num_batches

return train_acc, train_loss

# 测试函数

def test(dataloader, model, loss_fn):

size = len(dataloader.dataset) # 测试集的大小

num_batches = len(dataloader) # 批次数目,(size/batch_size,向上取整)

test_loss, test_acc = 0, 0

# 当不进行训练时,停止梯度更新,节省计算内存消耗

with torch.no_grad():

for imgs, target in dataloader:

imgs, target = imgs.to(device), target.to(device)

# 计算loss

target_pred = model(imgs)

loss = loss_fn(target_pred, target)

test_loss += loss.item()

test_acc += (target_pred.argmax(1) == target).type(torch.float).sum().item()

test_acc /= size

test_loss /= num_batches

return test_acc, test_loss

''' 自定义设置动态学习率 '''

def adjust_learning_rate(optimizer, epoch, start_lr):

# 每 2 个epoch衰减到原来的 0.92

lr = start_lr * (0.92 ** (epoch // 2))

for param_group in optimizer.param_groups:

param_group['lr'] = lr

# 设置初始学习率

learn_rate = 1e-4

optimizer = torch.optim.SGD(model.parameters(), lr=learn_rate)

# 定义学习率调整函数

lambda1 = lambda epoch: 0.92 ** (epoch // 4)

scheduler = torch.optim.lr_scheduler.LambdaLR(optimizer, lr_lambda=lambda1) # 选定调整方法

# 定义损失函数

loss_fn = nn.CrossEntropyLoss()

# 定义训练参数

epochs = 40

train_loss = []

train_acc = []

test_loss = []

test_acc = []

best_acc = 0 # 用于保存最佳模型的准确率

for epoch in range(epochs):

model.train()

epoch_train_acc, epoch_train_loss = train(train_dl, model, loss_fn, optimizer)

scheduler.step() # 更新学习率(调用官方动态学习率接口时使用)

model.eval()

epoch_test_acc, epoch_test_loss = test(test_dl, model, loss_fn)

# 保存最佳模型到best model

if epoch_test_acc > best_acc:

best_acc = epoch_test_acc

best_model = copy.deepcopy(model)

train_acc.append(epoch_train_acc)

train_loss.append(epoch_train_loss)

test_acc.append(epoch_test_acc)

test_loss.append(epoch_test_loss)

# 获取当前的学习率

lr = optimizer.state_dict()['param_groups'][0]['lr']

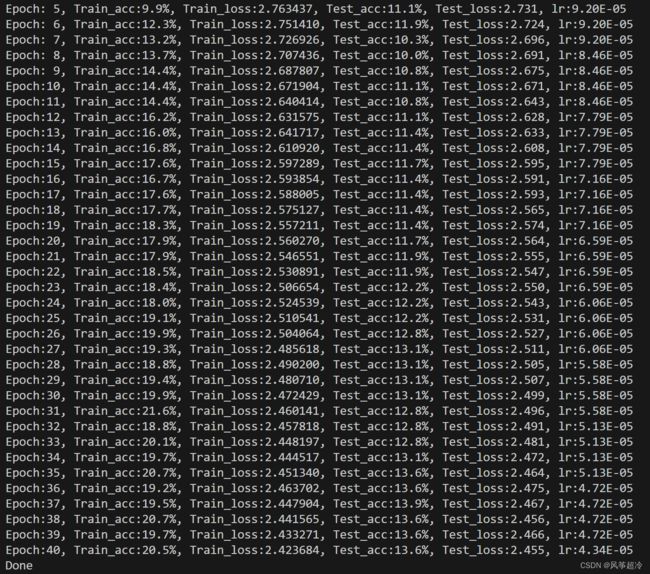

template = ('Epoch:{:2d}, Train_acc:{:.1f}%, Train_loss:{:3f}, Test_acc:{:.1f}%, Test_loss:{:.3f}, lr:{:.2E}')

print(template.format(epoch+1, epoch_train_acc*100, epoch_train_loss, epoch_test_acc*100, epoch_test_loss, lr))

# 保存最佳模型到文件中

PATH = './best_model.pth' # 保存的参数文件名

torch.save(best_model.state_dict(), PATH)

print('Done')在非实时编译器中运行出现Python 脚本中使用多进程相关问题报错,问题通常发生在没有正确使用 if __name__ == '__main__': 块的情况下。为了解决这个问题,我将完整代码修改如下:

import torch

import torch.nn as nn

import torchvision.transforms as transforms

import torchvision

from torchvision import transforms, datasets

import os, PIL, random, pathlib, warnings

import copy

warnings.filterwarnings("ignore")

def main():

# 您的现有代码放在这里

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

print(device)

import os, PIL, random, pathlib

data_dir = 'D:/P6/48-data/'

data_dir = pathlib.Path(data_dir)

data_path = list(data_dir.glob('*'))

print(data_path)

classname = [str(path).split("\\")[3] for path in data_path]

print(classname)

train_transforms = transforms.Compose([

transforms.Resize([224, 224]),

transforms.ToTensor(),

transforms.Normalize(

mean=[0.39354826, 0.41713402, 0.48036146],

std=[0.25076334, 0.25809455, 0.28359835]

)

])

total_data = datasets.ImageFolder("D:/P6/48-data/", transform=train_transforms)

print(total_data)

print(total_data.class_to_idx)

train_size = int(0.8 * len(total_data))

test_size = len(total_data) - train_size

train_dataset, test_dataset = torch.utils.data.random_split(total_data, [train_size, test_size])

print(train_dataset, test_dataset)

batch_size = 32

train_dl = torch.utils.data.DataLoader(train_dataset,

batch_size=batch_size,

shuffle=True,

num_workers=1)

test_dl = torch.utils.data.DataLoader(test_dataset,

batch_size=batch_size,

shuffle=True,

num_workers=1)

from torchvision.models import vgg16

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

print("Using {} device\n".format(device))

''' 调用官方的VGG-16模型 '''

# 加载预训练模型,并且对模型进行微调

model = vgg16(pretrained=True).to(device) # 加载预训练的vgg16模型

for param in model.parameters():

param.requires_grad = False # 冻结模型的参数,这样子在训练的时候只训练最后一层的参数

# 修改classifier模块的第6层(即:(6): Linear(in_features=4096, out_features=2, bias=True))

# 注意查看我们下方打印出来的模型

model.classifier._modules['6'] = nn.Linear(4096, 17) # 修改vgg16模型中最后一层全连接层,输出目标类别个数

model.to(device)

print(model)

# 训练循环

def train(dataloader, model, loss_fn, optimizer):

size = len(dataloader.dataset) # 训练集的大小

num_batches = len(dataloader) # 批次数目

train_loss, train_acc = 0, 0 # 初始化训练损失和正确率

for X, y in dataloader: # 获取图片及其标签

X, y = X.to(device), y.to(device)

# 计算预测误差

pred = model(X) # 网络输出

loss = loss_fn(pred, y) # 计算网络输出和真实值之间的差距,targets为真实值,计算二者差值即为损失

# 反向传播

optimizer.zero_grad() # grad属性归零

loss.backward() # 反向传播

optimizer.step() # 每一步自动更新

# 记录acc与loss

train_acc += (pred.argmax(1) == y).type(torch.float).sum().item()

train_loss += loss.item()

train_acc /= size

train_loss /= num_batches

return train_acc, train_loss

# 测试函数

def test(dataloader, model, loss_fn):

size = len(dataloader.dataset) # 测试集的大小

num_batches = len(dataloader) # 批次数目,(size/batch_size,向上取整)

test_loss, test_acc = 0, 0

# 当不进行训练时,停止梯度更新,节省计算内存消耗

with torch.no_grad():

for imgs, target in dataloader:

imgs, target = imgs.to(device), target.to(device)

# 计算loss

target_pred = model(imgs)

loss = loss_fn(target_pred, target)

test_loss += loss.item()

test_acc += (target_pred.argmax(1) == target).type(torch.float).sum().item()

test_acc /= size

test_loss /= num_batches

return test_acc, test_loss

''' 自定义设置动态学习率 '''

def adjust_learning_rate(optimizer, epoch, start_lr):

# 每 2 个epoch衰减到原来的 0.92

lr = start_lr * (0.92 ** (epoch // 2))

for param_group in optimizer.param_groups:

param_group['lr'] = lr

# 设置初始学习率

learn_rate = 1e-4

optimizer = torch.optim.SGD(model.parameters(), lr=learn_rate)

# 定义学习率调整函数

lambda1 = lambda epoch: 0.92 ** (epoch // 4)

scheduler = torch.optim.lr_scheduler.LambdaLR(optimizer, lr_lambda=lambda1) # 选定调整方法

# 定义损失函数

loss_fn = nn.CrossEntropyLoss()

# 定义训练参数

epochs = 40

train_loss = []

train_acc = []

test_loss = []

test_acc = []

best_acc = 0 # 用于保存最佳模型的准确率

for epoch in range(epochs):

model.train()

epoch_train_acc, epoch_train_loss = train(train_dl, model, loss_fn, optimizer)

scheduler.step() # 更新学习率(调用官方动态学习率接口时使用)

model.eval()

epoch_test_acc, epoch_test_loss = test(test_dl, model, loss_fn)

# 保存最佳模型到best model

if epoch_test_acc > best_acc:

best_acc = epoch_test_acc

best_model = copy.deepcopy(model)

train_acc.append(epoch_train_acc)

train_loss.append(epoch_train_loss)

test_acc.append(epoch_test_acc)

test_loss.append(epoch_test_loss)

# 获取当前的学习率

lr = optimizer.state_dict()['param_groups'][0]['lr']

template = ('Epoch:{:2d}, Train_acc:{:.1f}%, Train_loss:{:3f}, Test_acc:{:.1f}%, Test_loss:{:.3f}, lr:{:.2E}')

print(template.format(epoch+1, epoch_train_acc*100, epoch_train_loss, epoch_test_acc*100, epoch_test_loss, lr))

# 保存最佳模型到文件中

PATH = './best_model.pth' # 保存的参数文件名

torch.save(best_model.state_dict(), PATH)

print('Done')

if __name__ == '__main__':

main()