Elasticsearch: Analyzers, Searching Documents, and Native Java Operations

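Contents

- I. ES Analyzers
  - 1. Default Analyzer
  - 2. IK Analyzer
    - 2.1 Installing and Testing the IK Analyzer
    - 2.2 IK Analyzer Dictionaries
  - 3. Pinyin Analyzer
  - 4. Custom Analyzer
- II. Searching Documents
  - 1. Adding Document Data
  - 2. Search Methods
  - 3. Filtering Search Results in ES
    - 3.1 Sorting Results
    - 3.2 Paginated Queries
    - 3.3 Highlighted Queries
    - 3.4 SQL Queries
- III. Native Java Operations on ES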

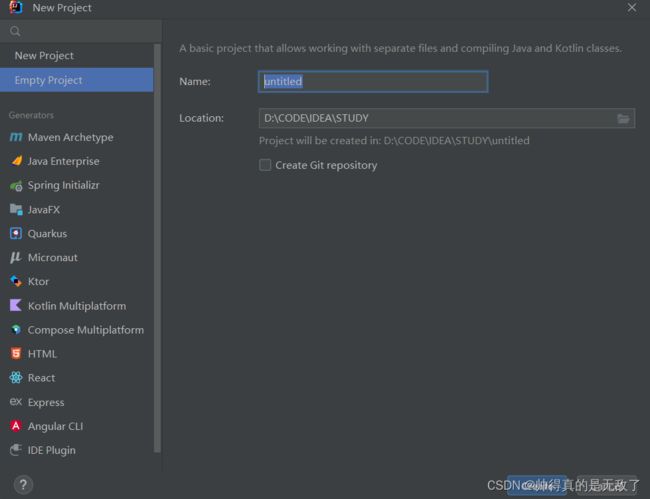

  - 1. Setting Up the Project
  - 2. Index Operations
  - 3. Document Operations
  - 4. Searching Documents
- Summary

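I. ES Analyzers

1. Default Analyzer

ES splits document data into individual keywords, each with a complete meaning, and records which documents each keyword belongs to, so that documents can be found by keyword. Splitting text correctly requires choosing a suitable analyzer.

standard analyzer: the default analyzer in Elasticsearch. It splits English text on whitespace and punctuation and lowercases the resulting terms.

The default analyzer is designed for English: Chinese text is split into one token per character.

Test the default analyzer:

```
GET /_analyze
{
  "text": "z z x",
  "analyzer": "standard"
}
```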
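The splitting rule described above can be approximated in a few lines of plain Java. This is a simplified sketch, not Lucene's implementation: it assumes runs of letters and digits form one token, every Han character is a token of its own, anything else is a separator, and tokens are lowercased. The real standard analyzer follows the Unicode word-boundary rules (UAX #29), so edge cases will differ. The class name StandardAnalyzerSketch is hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

// Hypothetical, simplified imitation of the "standard" analyzer (not Lucene's implementation).
class StandardAnalyzerSketch {

    static List<String> analyze(String text) {
        List<String> tokens = new ArrayList<>();
        StringBuilder word = new StringBuilder();
        int i = 0;
        while (i < text.length()) {
            int cp = text.codePointAt(i);
            i += Character.charCount(cp);
            if (Character.UnicodeScript.of(cp) == Character.UnicodeScript.HAN) {
                // Each Chinese character becomes a token on its own
                flush(word, tokens);
                tokens.add(new String(Character.toChars(cp)));
            } else if (Character.isLetterOrDigit(cp)) {
                // Letters and digits accumulate into the current word
                word.appendCodePoint(cp);
            } else {
                // Whitespace and punctuation end the current word
                flush(word, tokens);
            }
        }
        flush(word, tokens);
        return tokens;
    }

    // Emit the pending word, lowercased, if there is one
    private static void flush(StringBuilder word, List<String> tokens) {
        if (word.length() > 0) {
            tokens.add(word.toString().toLowerCase(Locale.ROOT));
            word.setLength(0);
        }
    }
}
```

For example, `StandardAnalyzerSketch.analyze("Hello, World! 海贼王")` returns `[hello, world, 海, 贼, 王]`, mirroring the one-character-per-token behaviour for Chinese.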

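2. IK Analyzer

2.1 Installing and Testing the IK Analyzer

The IK analyzer provides two algorithms:

1) ik_smart: coarsest split (fewest tokens)

2) ik_max_word: finest-grained split

Stop the ES and Kibana services running in the Docker containers:

`docker stop elasticsearch` and `docker stop kibana`

Download IKAnalyzer:

https://github.com/medcl/elasticsearch-analysis-ik/releases/download/v8.6.1/elasticsearch-analysis-ik-8.6.1.zip

Upload it to the root directory / of the virtual machine with MobaXterm, then unzip it:

`unzip elasticsearch-analysis-ik-8.6.1.zip -d /usr/local/elasticsearch/plugins/analysis-ik`

Switch to the es user:

`su es`

Go to the bin directory:

`cd /usr/local/elasticsearch/bin`

Start the ES service in the background:

`./elasticsearch -d`

Switch to Kibana's bin directory:

`cd ../../kibana-8.6.1/bin/`

Start Kibana:

`./kibana`

Test the IK analyzer with ik_smart (coarsest split):

```
GET /_analyze
{
  "text": "我是要成为海贼王的男人",
  "analyzer": "ik_smart"
}
```

Each character of the sentence is assigned to exactly one token.

Test the IK analyzer with ik_max_word (finest-grained split):

```
GET /_analyze
{
  "text": "我是要成为海贼王的男人",
  "analyzer": "ik_max_word"
}
```

A character of the sentence may appear in several tokens.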
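The difference between the two modes can be illustrated with a toy segmenter. The sketch below assumes a tiny, invented dictionary (成为, 海贼, 海贼王, 贼王, 男人) and uses forward maximum matching for the ik_smart-like mode and "every dictionary word at every position, plus uncovered characters" for the ik_max_word-like mode. IK's real dictionaries and disambiguation rules differ, so its actual output for this sentence may not match; the class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;

// Toy contrast between a "fewest tokens" and an "all words" segmentation.
// The dictionary passed in is hypothetical; real IK uses its own dictionaries and rules.
class IkModesSketch {

    // ik_smart-like: forward maximum matching, so every character lands in exactly one token
    static List<String> smart(String text, Set<String> dict, int maxLen) {
        List<String> out = new ArrayList<>();
        int i = 0;
        while (i < text.length()) {
            int best = 1; // fall back to a single character
            for (int len = Math.min(maxLen, text.length() - i); len > 1; len--) {
                if (dict.contains(text.substring(i, i + len))) {
                    best = len;
                    break;
                }
            }
            out.add(text.substring(i, i + best));
            i += best;
        }
        return out;
    }

    // ik_max_word-like: every dictionary word at every position, so a character may repeat
    static List<String> maxWord(String text, Set<String> dict, int maxLen) {
        int n = text.length();
        boolean[] covered = new boolean[n];
        for (int i = 0; i < n; i++) {
            for (int len = 2; len <= maxLen && i + len <= n; len++) {
                if (dict.contains(text.substring(i, i + len))) {
                    for (int k = i; k < i + len; k++) covered[k] = true;
                }
            }
        }
        List<String> out = new ArrayList<>();
        for (int i = 0; i < n; i++) {
            if (!covered[i]) out.add(text.substring(i, i + 1)); // characters no word covers
            for (int len = 2; len <= maxLen && i + len <= n; len++) {
                String candidate = text.substring(i, i + len);
                if (dict.contains(candidate)) out.add(candidate);
            }
        }
        return out;
    }
}
```

With that dictionary and maxLen 3, `smart` gives `[我, 是, 要, 成为, 海贼王, 的, 男人]`, while `maxWord` gives `[我, 是, 要, 成为, 海贼, 海贼王, 贼王, 的, 男人]`: in the second list 贼 appears in three tokens.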

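2.2 IK Analyzer Dictionaries

The IK analyzer segments text based on dictionaries; the dictionary files are in the IK analyzer's config directory.

main.dic: IK's built-in dictionary, containing all the Chinese words compiled by IK.

IKAnalyzer.cfg.xml: used to configure custom dictionaries.

Go to the IK analyzer's config directory:

`cd /usr/local/elasticsearch/plugins/analysis-ik/config`

Switch to root to edit the files:

`su root`

Edit the configuration file with `vim IKAnalyzer.cfg.xml` and set the following entries:

```xml
<entry key="ext_dict">ext_dict.dic</entry>
<entry key="ext_stopwords">ext_stopwords.dic</entry>
```

Create and edit the extension dictionary with `vim ext_dict.dic` and add the line 率土之滨. After the restart, 率土之滨 is kept as a single token.

Create and edit the stopword dictionary with `vim ext_stopwords.dic` and add the line 率土. After the restart, 率土 is treated as a stopword and no longer appears as a token.

Switch to the es user:

`su es`

Restart the ES service:

1) Find the process ID: `ps -ef | grep elastic`

2) Kill the process by its ID: `kill -9 <PID>`

3) Go to Elasticsearch's bin directory: `cd /usr/local/elasticsearch/bin`

4) Start the ES service: `./elasticsearch -d`

Restart the Kibana service:

1) Find the process ID: `netstat -anp | grep 5601`

2) Kill the process by its ID: `kill -9 <PID>`

3) Go to Kibana's bin directory: `cd ../../kibana-8.6.1/bin/`

4) Restart Kibana: `./kibana`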
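The effect of the two custom dictionaries can be sketched with the same kind of toy segmenter. This hypothetical example assumes a forward-maximum-matching segmenter whose dictionary contains the extension words, followed by a stopword filter; it is not IK's code. Adding 率土之滨 to the dictionary turns four single-character tokens into one, and listing 率土 as a stopword removes that token whenever it is produced on its own.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;

// Hypothetical illustration of extension words and stopwords on top of a toy segmenter.
class ExtDictSketch {

    // Forward maximum matching against the dictionary, then drop stopwords
    static List<String> analyze(String text, Set<String> dict, Set<String> stopwords) {
        int maxLen = 1;
        for (String w : dict) maxLen = Math.max(maxLen, w.length());
        List<String> out = new ArrayList<>();
        int i = 0;
        while (i < text.length()) {
            int best = 1; // single character when no dictionary word starts here
            for (int len = Math.min(maxLen, text.length() - i); len > 1; len--) {
                if (dict.contains(text.substring(i, i + len))) {
                    best = len;
                    break;
                }
            }
            String token = text.substring(i, i + best);
            if (!stopwords.contains(token)) out.add(token); // stopwords never reach the index
            i += best;
        }
        return out;
    }
}
```

In this model a stopword only disappears when the segmenter emits it as a separate token: with both words configured, 率土之滨 still comes out as one token, while a standalone 率土 yields nothing.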

3. Pinyin Analyzer

Download the pinyin analyzer:

https://github.com/medcl/elasticsearch-analysis-pinyin/releases/download/v8.6.1/elasticsearch-analysis-pinyin-8.6.1.zip

Upload it to the root directory / of the virtual machine with MobaXterm and unzip it into Elasticsearch's plugins/analysis-pinyin directory:

`unzip elasticsearch-analysis-pinyin-8.6.1.zip -d /usr/local/elasticsearch/plugins/analysis-pinyin`

Switch to the es user:

`su es`

Restart the ES service:

1) Find the process ID: `ps -ef | grep elastic`

2) Kill the process by its ID: `kill -9 <PID>`

3) Go to Elasticsearch's bin directory: `cd /usr/local/elasticsearch/bin`

4) Start the ES service: `./elasticsearch -d`

Restart the Kibana service:

1) Find the process ID: `netstat -anp | grep 5601`

2) Kill the process by its ID: `kill -9 <PID>`

3) Go to Kibana's bin directory: `cd ../../kibana-8.6.1/bin/`

4) Restart Kibana: `./kibana`

Test the pinyin analyzer:

```
GET /_analyze
{
  "text": "我是要成为海贼王的男人",
  "analyzer": "pinyin"
}
```

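4. Custom Analyzer

When a piece of text needs both word segmentation and pinyin conversion, define a custom ik + pinyin analyzer.

Configure the custom analyzer when creating the index:

```
PUT student3
{
  "settings": {
    "analysis": {
      "analyzer": {
        "ik_pinyin": {                          // custom analyzer name
          "tokenizer": "ik_max_word",           // base tokenizer
          "filter": ["pinyin_filter"]           // token filter applied to the tokenizer's output
        }
      },
      "filter": {
        "pinyin_filter": {
          "type": "pinyin",                     // filter provided by the pinyin plugin
          "keep_separate_first_letter": false,  // emit each character's first letter as a separate token?
          "keep_full_pinyin": true,             // emit the full pinyin of each character?
          "keep_original": true,                // keep the original input token?
          "remove_duplicated_term": true        // remove duplicate terms?
        }
      }
    }
  },
  "mappings": {
    "properties": {
      "age": {
        "type": "integer"
      },
      "name": {
        "type": "text",
        "store": true,
        "index": true,
        "analyzer": "ik_pinyin"
      }
    }
  }
}
```

The IK tokenizer segments the text first, and the pinyin filter then processes each token it produces.

Test the custom ik+pinyin analyzer:

```
GET /student3/_analyze
{
  "text": "我是要成为海贼王的男人",
  "analyzer": "ik_pinyin"
}
```

`"keep_separate_first_letter": false`, so the first letter of each character is not emitted as a separate token. The output also has no first-letter string for the whole sentence (such as wsycwhzwdnr): the pinyin filter works on each IK token rather than on the full sentence, and joined first letters are controlled by a different option, keep_first_letter.

`"keep_full_pinyin": true`, so the full pinyin of every character becomes a token.

`"keep_original": true`, so the tokens produced by the IK tokenizer are kept as well.

`"remove_duplicated_term": true`, so duplicate terms are removed from the output.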
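To show what pinyin filter options of this kind do to each IK token, here is a hypothetical sketch with a five-character pinyin table. The flags are named after the plugin options; keepFirstLetter is an assumption standing in for the plugin's keep_first_letter option, which the example above leaves unset. The plugin's exact token order and edge-case handling are not reproduced.

```java
import java.util.ArrayList;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;

// Hypothetical sketch of what a pinyin token filter does to each token from the tokenizer.
// The pinyin table holds only the few characters used in the example.
class PinyinFilterSketch {

    static final Map<Character, String> PINYIN = Map.of(
            '海', "hai", '贼', "zei", '王', "wang", '男', "nan", '人', "ren");

    // Flags named after the plugin options; values mirror the student3 example,
    // and keepFirstLetter = true is an assumption about keep_first_letter.
    static final class Options {
        boolean keepFirstLetter = true;          // joined first letters, e.g. 海贼王 -> hzw
        boolean keepSeparateFirstLetter = false; // each first letter as its own token
        boolean keepFullPinyin = true;           // full pinyin of every character
        boolean keepOriginal = true;             // keep the token produced by the tokenizer
        boolean removeDuplicatedTerm = true;     // drop repeated terms
    }

    static List<String> filter(List<String> tokens, Options o) {
        List<String> out = new ArrayList<>();
        for (String token : tokens) {
            if (o.keepOriginal) out.add(token);
            StringBuilder joined = new StringBuilder();
            for (char c : token.toCharArray()) {
                String py = PINYIN.get(c);
                if (py == null) continue; // characters missing from the toy table are skipped
                if (o.keepFullPinyin) out.add(py);
                if (o.keepSeparateFirstLetter) out.add(py.substring(0, 1));
                joined.append(py.charAt(0));
            }
            if (o.keepFirstLetter && joined.length() > 0) out.add(joined.toString());
        }
        // LinkedHashSet removes duplicates while keeping first-seen order
        return o.removeDuplicatedTerm ? new ArrayList<>(new LinkedHashSet<>(out)) : out;
    }
}
```

Given the tokens `[海贼王, 男人]` and the default Options, `filter` returns `[海贼王, hai, zei, wang, hzw, 男人, nan, ren, nr]`.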

II. Searching Documents

1. Adding Document Data

Create the students index:

```
PUT /students
{
  "mappings": {
    "properties": {
      "id": {
        "type": "integer",
        "index": true
      },
      "name": {
        "type": "text",
        "store": true,
        "index": true,
        "analyzer": "ik_smart"
      },
      "info": {
        "type": "text",
        "store": true,
        "index": true,
        "analyzer": "ik_smart"
      }
    }
  }
}
```

Create documents in the students index:

```
POST /students/_doc
{ "id": 1, "name": "羚羊王子", "info": "阿里嘎多美羊羊桑" }

POST /students/_doc
{ "id": 2, "name": "沸羊羊", "info": "吃阿里嘎多美羊羊桑" }

POST /students/_doc
{ "id": 3, "name": "美羊羊", "info": "沸羊羊,你八嘎呀lua" }

POST /students/_doc
{ "id": 4, "name": "李青", "info": "一库一库" }

POST /students/_doc
{ "id": 5, "name": "亚索", "info": "面对疾风吧" }
```

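2. Search Methods

Query all documents:

```
GET /students/_search
{
  "query": {
    "match_all": {}
  }
}
```

Analyze the query text, then search:

```
GET /students/_search
{
  "query": {
    "match": {
      "info": "阿里嘎多"
    }
  }
}
```

The query text is analyzed first and each resulting term is searched for; by default a document matches if it contains any of those terms.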
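The match behaviour can be modelled over a toy inverted index. In this sketch the query text is assumed to be already analyzed into terms, and each document is represented by the terms indexed for one field (the data in the usage is invented). `requireAll = false` corresponds to the default OR behaviour; `true` corresponds to setting `"operator": "and"` on the match query.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Toy model of the match query: the query text has already been analyzed into terms,
// and each document is represented by the terms indexed for one field (hypothetical data).
class MatchQuerySketch {

    // requireAll = false behaves like the default "or" operator, true like "operator": "and"
    static List<Integer> match(Map<Integer, List<String>> index, List<String> queryTerms, boolean requireAll) {
        List<Integer> hits = new ArrayList<>();
        for (Map.Entry<Integer, List<String>> doc : index.entrySet()) {
            long found = queryTerms.stream().filter(doc.getValue()::contains).count();
            boolean matches = requireAll ? found == queryTerms.size() : found > 0;
            if (matches) hits.add(doc.getKey());
        }
        return hits;
    }
}
```

With documents 1 = [quick, brown, fox], 2 = [lazy, dog], 3 = [quick, dog] and query terms [quick, dog], the OR mode returns all three documents, while the AND mode returns only document 3.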

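ES error tolerance (fuzzy query):

```
GET /students/_search
{
  "query": {
    "match": {
      "info": {
        "query": "lue",
        "fuzziness": 1    // maximum number of edits; cannot exceed 2
      }
    }
  }
}
```

The match query supports fuzzy matching, but fuzzy matching works only moderately well for Chinese.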
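fuzziness is an edit distance: the number of single-character insertions, deletions, or substitutions (and, by default, transpositions of two adjacent characters) allowed between the query term and an indexed term. The sketch below computes the optimal-string-alignment distance as an approximation of that rule; it is not Lucene's automaton-based implementation, and the class name is hypothetical.

```java
// Edit-distance sketch of fuzzy matching (optimal string alignment variant of Damerau-Levenshtein).
class FuzzySketch {

    static int distance(String a, String b) {
        int[][] d = new int[a.length() + 1][b.length() + 1];
        for (int i = 0; i <= a.length(); i++) d[i][0] = i; // delete all of a's prefix
        for (int j = 0; j <= b.length(); j++) d[0][j] = j; // insert all of b's prefix
        for (int i = 1; i <= a.length(); i++) {
            for (int j = 1; j <= b.length(); j++) {
                int cost = a.charAt(i - 1) == b.charAt(j - 1) ? 0 : 1;
                d[i][j] = Math.min(Math.min(d[i - 1][j] + 1, d[i][j - 1] + 1), d[i - 1][j - 1] + cost);
                // Adjacent transposition counts as a single edit
                if (i > 1 && j > 1 && a.charAt(i - 1) == b.charAt(j - 2) && a.charAt(i - 2) == b.charAt(j - 1)) {
                    d[i][j] = Math.min(d[i][j], d[i - 2][j - 2] + 1);
                }
            }
        }
        return d[a.length()][b.length()];
    }

    // Mirrors the rule that fuzziness may not exceed 2
    static boolean fuzzyMatches(String queryTerm, String indexedTerm, int fuzziness) {
        if (fuzziness < 0 || fuzziness > 2) {
            throw new IllegalArgumentException("fuzziness must be between 0 and 2");
        }
        return distance(queryTerm, indexedTerm) <= fuzziness;
    }
}
```

For example, lue and lua differ by one substitution, so they match with fuzziness 1, while lue and lau are two edits apart and do not.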

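Range search on a numeric field:

```
GET /students/_search
{
  "query": {
    "range": {
      "id": {
        "gte": 1,
        "lte": 3
      }
    }
  }
}
```

Here gt means "greater than" and lt means "less than"; the trailing e adds "or equal to", so gte is >= and lte is <=.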
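The four bound keywords translate directly into comparisons. This small sketch treats a null bound as "not specified in the query"; the class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

// Sketch of range-query bounds; a null bound means the key was left out of the query.
class RangeSketch {

    static boolean inRange(int value, Integer gt, Integer gte, Integer lt, Integer lte) {
        if (gt != null && value <= gt) return false;   // gt: strictly greater than
        if (gte != null && value < gte) return false;  // gte: greater than or equal to
        if (lt != null && value >= lt) return false;   // lt: strictly less than
        if (lte != null && value > lte) return false;  // lte: less than or equal to
        return true;
    }

    static List<Integer> filter(List<Integer> values, Integer gt, Integer gte, Integer lt, Integer lte) {
        List<Integer> out = new ArrayList<>();
        for (int v : values) {
            if (inRange(v, gt, gte, lt, lte)) out.add(v);
        }
        return out;
    }
}
```

Over ids 1 to 5, gte 1 / lte 3 keeps 1, 2, 3, whereas gt 1 / lt 3 keeps only 2.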

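Phrase search: the query text is analyzed with the field's analyzer, and a document matches only if all of the resulting terms appear in the field's inverted index in the same order at consecutive positions.

```
GET /students/_search
{
  "query": {
    "match_phrase": {
      "info": "八嘎"
    }
  }
}
```

Whether a phrase matches therefore depends on how the analyzer splits both the document text and the query text. In this test 美羊羊 found nothing while 沸羊羊 did; a likely explanation is that ik_smart split 美羊羊 differently inside "阿里嘎多美羊羊桑" than on its own, which can be checked with _analyze. Words can also be added to IK's extension dictionary so that they are kept as single tokens.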
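Phrase matching is a positional check on the analyzed tokens. In this sketch both token lists are assumed to be analyzer output already; the real query also accepts a slop parameter (default 0) that allows gaps, which the sketch ignores. If, hypothetically, the analyzer indexed a sentence as ... 多美 / 羊羊 ... while the query 美羊羊 became 美 / 羊羊, this check would fail; the class name is hypothetical.

```java
import java.util.List;

// Sketch of phrase matching over token positions (token lists are hypothetical analyzer output).
class PhraseSketch {

    // True if the phrase terms occur in the document in the same order at consecutive positions
    static boolean matchPhrase(List<String> docTokens, List<String> phraseTokens) {
        if (phraseTokens.isEmpty()) return false;
        for (int start = 0; start + phraseTokens.size() <= docTokens.size(); start++) {
            if (docTokens.subList(start, start + phraseTokens.size()).equals(phraseTokens)) {
                return true;
            }
        }
        return false;
    }
}
```

For a document indexed as [new, york, times], the phrase [york, times] matches, but [times, york] (wrong order) and [new, times] (gap) do not.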

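Term/terms search: the query value is not analyzed at all; it must exactly match a term stored in the field's inverted index.

1) Term search:

```
GET /students/_search
{
  "query": {
    "term": {
      "info": "疾风"
    }
  }
}
```

A term search looks similar to a phrase search, but here 沸羊羊 returns nothing. This is not a length limit on the term: a term query matches only if 沸羊羊 was stored as one single token, and ik_smart most likely indexed it as several tokens. A phrase search still finds it because it analyzes the query text the same way.

2) Terms search:

```
GET /students/_search
{
  "query": {
    "terms": {
      "info": ["疾风", "沸"]
    }
  }
}
```

Several terms can be searched at once; a document matches if it contains any of them.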
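Because term and terms skip analysis, they amount to exact lookups among the indexed terms. The sketch below uses an invented index; note that the value "Quick" does not match the indexed term "quick", since nothing lowercases or splits the query value. The class name is hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Sketch of term / terms lookups: the query value is compared as-is with indexed terms.
class TermQuerySketch {

    static List<Integer> term(Map<Integer, List<String>> index, String value) {
        return terms(index, List.of(value));
    }

    // A document matches if it contains at least one of the values exactly
    static List<Integer> terms(Map<Integer, List<String>> index, List<String> values) {
        List<Integer> hits = new ArrayList<>();
        for (Map.Entry<Integer, List<String>> doc : index.entrySet()) {
            for (String v : values) {
                if (doc.getValue().contains(v)) {
                    hits.add(doc.getKey());
                    break;
                }
            }
        }
        return hits;
    }
}
```

A value such as "quick brown" finds nothing either, because it is compared as one whole term.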

Compound (bool) search

1) must: all clauses must match

```
GET /students/_search
{
  "query": {
    "bool": {
      "must": [
        { "term": { "info": "嘎" } },
        { "range": { "id": { "gte": 2, "lte": 4 } } }
      ]
    }
  }
}
```

2) should: at least one clause must match (when the bool query has no must or filter clause)

```
GET /students/_search
{
  "query": {
    "bool": {
      "should": [
        { "term": { "info": "嘎" } },
        { "range": { "id": { "gte": 2, "lte": 4 } } }
      ]
    }
  }
}
```

3) must_not: none of the clauses may match; it is usually combined with other clauses, as here:

```
GET /students/_search
{
  "query": {
    "bool": {
      "must": [
        { "term": { "info": "嘎" } }
      ],
      "must_not": [
        { "range": { "id": { "gte": 1, "lte": 2 } } }
      ]
    }
  }
}
```
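The clause rules can be written as predicates. The sketch ignores scoring and models only must, should, and must_not, assuming ES's default minimum_should_match behaviour: when a bool query has a must clause, should clauses are optional and only affect the score; without one, at least one should clause has to match. The predicates in the usage below (for example "docs 1 to 3 contain the term") are invented, and the class name is hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Predicate;

// Sketch of how bool clauses combine to decide whether a document matches (scoring is ignored).
class BoolQuerySketch {

    static List<Integer> search(List<Integer> docIds,
                                List<Predicate<Integer>> must,
                                List<Predicate<Integer>> should,
                                List<Predicate<Integer>> mustNot) {
        List<Integer> hits = new ArrayList<>();
        for (int id : docIds) {
            boolean allMust = must.stream().allMatch(p -> p.test(id));
            boolean noMustNot = mustNot.stream().noneMatch(p -> p.test(id));
            // With no must clause, at least one should clause has to match;
            // alongside must clauses, should clauses would only raise the score.
            boolean shouldOk = !must.isEmpty() || should.isEmpty()
                    || should.stream().anyMatch(p -> p.test(id));
            if (allMust && noMustNot && shouldOk) hits.add(id);
        }
        return hits;
    }
}
```

Pretending documents 1 to 3 contain the term, must [term, id 2..4] returns 2 and 3, should [term, id 2..4] returns 1 to 4, and must [term] with must_not [id 1..2] returns only 3.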

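3. Filtering Search Results in ES

3.1 Sorting Results

By default, results are sorted by relevance score, i.e. by how well each document matches the query, highest first:

```
GET /students/_search
{
  "query": {
    "match": {
      "info": "阿里噶多美羊羊桑"
    }
  }
}
```

Sort by id in ascending order:

```
GET /students/_search
{
  "query": {
    "match": {
      "info": "阿里噶多美羊羊桑"
    }
  },
  "sort": [
    {
      "id": {
        "order": "asc"
      }
    }
  ]
}
```

For descending order, change asc to desc.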
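The two orderings amount to two comparators. In this sketch the hits and their scores are invented; the default ordering corresponds to sorting by score in descending order, and a sort clause replaces it with a field order. The class name is hypothetical.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

// Sketch of the two orderings: default by relevance score, or by a field such as id.
class SortSketch {

    static final class Hit {
        final int id;
        final double score;

        Hit(int id, double score) {
            this.id = id;
            this.score = score;
        }
    }

    static final Comparator<Hit> BY_SCORE_DESC = Comparator.comparingDouble((Hit h) -> h.score).reversed();
    static final Comparator<Hit> BY_ID_ASC = Comparator.comparingInt((Hit h) -> h.id);
    static final Comparator<Hit> BY_ID_DESC = BY_ID_ASC.reversed();

    // Return the ids of the hits in the requested order
    static List<Integer> ids(List<Hit> hits, Comparator<Hit> order) {
        List<Hit> sorted = new ArrayList<>(hits);
        sorted.sort(order);
        List<Integer> out = new ArrayList<>();
        for (Hit h : sorted) out.add(h.id);
        return out;
    }
}
```

With invented scores 0.5, 1.2, and 0.9 for ids 1, 2, and 3, the default order is 2, 3, 1, while sorting by id gives 1, 2, 3 (asc) or 3, 2, 1 (desc).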

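3.2 Paginated Queries

from is the number of hits to skip and size is the number of hits to return, so the query returns hits from+1 through from+size (counting from 1). The example below returns the first two hits:

```
GET /students/_search
{
  "query": {
    "match_all": {}
  },
  "from": 0,
  "size": 2
}
```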
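from and size simply select a slice of the sorted hits. The sketch assumes the default index.max_result_window of 10,000, beyond which ES rejects from + size; the class name is hypothetical.

```java
import java.util.List;

// Sketch of from/size paging over an already sorted hit list.
class PagingSketch {

    // Assumed to mirror the default index.max_result_window of 10,000
    static final int MAX_RESULT_WINDOW = 10_000;

    static <T> List<T> page(List<T> sortedHits, int from, int size) {
        if (from < 0 || size < 0) throw new IllegalArgumentException("from and size must be >= 0");
        if (from + size > MAX_RESULT_WINDOW) {
            throw new IllegalArgumentException("from + size exceeds the result window");
        }
        // Skip `from` hits, then take at most `size` of the remaining ones
        int start = Math.min(from, sortedHits.size());
        int end = Math.min(from + size, sortedHits.size());
        return sortedHits.subList(start, end);
    }
}
```

With five hits and size 2, from 0 returns hits 1 and 2, from 2 returns hits 3 and 4, and from 4 returns only hit 5.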

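3.3 Highlighted Queries

Highlighting wraps each matched keyword in a prefix tag and a suffix tag (pre_tags and post_tags), which the front end then styles with CSS. If pre_tags and post_tags are omitted, ES uses `<em>` and `</em>`.

```
GET /students/_search
{
  "query": {
    "match": {
      "info": "面对一库阿里噶多美羊羊桑"
    }
  },
  "highlight": {
    "fields": {
      "info": {
        "fragment_size": 100,       // maximum length (in characters) of each highlighted fragment
        "number_of_fragments": 5    // maximum number of non-contiguous fragments returned
      }
    },
    "pre_tags": [""],
    "post_tags": [""]
  }
}
```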
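Highlighting amounts to inserting pre_tags and post_tags around each match. This sketch does plain substring matching on the original text, whereas ES highlights the tokens produced by the field's analyzer; the class name and the sample tags in the usage are assumptions.

```java
import java.util.List;

// Sketch of wrapping matched terms with pre/post tags, using plain substring matching.
class HighlightSketch {

    static String highlight(String text, List<String> terms, String preTag, String postTag) {
        StringBuilder out = new StringBuilder();
        int i = 0;
        while (i < text.length()) {
            String hit = null;
            for (String t : terms) { // prefer the longest term that starts at position i
                if (!t.isEmpty() && text.startsWith(t, i) && (hit == null || t.length() > hit.length())) {
                    hit = t;
                }
            }
            if (hit != null) {
                out.append(preTag).append(hit).append(postTag);
                i += hit.length();
            } else {
                out.append(text.charAt(i));
                i++;
            }
        }
        return out.toString();
    }
}
```

For example, highlighting 疾风 in 面对疾风吧 with `<em>`/`</em>` yields `面对<em>疾风</em>吧`.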

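3.4 SQL Queries

Elasticsearch (version 6.3 and later, including the 7.x and 8.x versions used here) can query documents with SQL statements, but only a subset of SQL is supported. The free distribution also does not support running SQL queries from Java, because the JDBC driver is a paid-subscription feature.

- Query documents with a simple SQL statement:

```
GET /_sql?format=txt
{
  "query": "SELECT * FROM students"
}
```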

III. Native Java Operations on ES

1. Setting Up the Project

Add the dependencies to the pom.xml of the Maven module:

```xml
<dependencies>
    <!-- es -->
    <dependency>
        <groupId>org.elasticsearch</groupId>
        <artifactId>elasticsearch</artifactId>
        <version>7.17.9</version>
    </dependency>
    <!-- es client -->
    <dependency>
        <groupId>org.elasticsearch.client</groupId>
        <artifactId>elasticsearch-rest-high-level-client</artifactId>
        <version>7.17.9</version>
    </dependency>
    <dependency>
        <groupId>junit</groupId>
        <artifactId>junit</artifactId>
        <version>4.13</version>
    </dependency>
</dependencies>
```

The High Level REST Client is deprecated (its successor is the Elasticsearch Java API Client), and a newer client version did not appear to work in this setup, so version 7.17.9 is used here.

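2. Index Operations

Create an empty index:

```java
// Imports used by this test method (place them at the top of the test class)
import java.io.IOException;
import org.apache.http.HttpHost;
import org.elasticsearch.client.RequestOptions;
import org.elasticsearch.client.RestClient;
import org.elasticsearch.client.RestHighLevelClient;
import org.elasticsearch.client.indices.CreateIndexRequest;
import org.elasticsearch.client.indices.CreateIndexResponse;
import org.junit.Test;

// Create an empty index
@Test
public void createIndex() throws IOException {
    // 1. Create the client and connect to ES; try-with-resources closes it even if a call fails
    try (RestHighLevelClient client = new RestHighLevelClient(
            RestClient.builder(new HttpHost("192.168.126.24", 9200, "http")))) {
        // 2. Create the request object
        CreateIndexRequest studentx = new CreateIndexRequest("studentx");
        // 3. Send the request
        CreateIndexResponse response = client.indices().create(studentx, RequestOptions.DEFAULT);
        // 4. Handle the response
        System.out.println(response.index());
    } // 5. The client is closed here
}
```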

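Add a mapping (structure) to the empty index:

```java
// Imports used by this test method (place them at the top of the test class)
import java.io.IOException;
import org.apache.http.HttpHost;
import org.elasticsearch.action.support.master.AcknowledgedResponse;
import org.elasticsearch.client.RequestOptions;
import org.elasticsearch.client.RestClient;
import org.elasticsearch.client.RestHighLevelClient;
import org.elasticsearch.client.indices.PutMappingRequest;
import org.elasticsearch.xcontent.XContentType; // 7.16+; older 7.x clients: org.elasticsearch.common.xcontent
import org.junit.Test;

// Add a mapping to the index
@Test
public void mappingIndex() throws IOException {
    // 1. Create the client and connect to ES
    try (RestHighLevelClient client = new RestHighLevelClient(
            RestClient.builder(new HttpHost("192.168.126.24", 9200, "http")))) {
        // 2. Create the request object; the mapping is given as a JSON string
        PutMappingRequest request = new PutMappingRequest("studentx");
        request.source("{\n" +
                "  \"properties\": {\n" +
                "    \"id\":   { \"type\": \"integer\" },\n" +
                "    \"name\": { \"type\": \"text\" },\n" +
                "    \"age\":  { \"type\": \"integer\" }\n" +
                "  }\n" +
                "}", XContentType.JSON);
        // 3. Send the request
        AcknowledgedResponse response = client.indices().putMapping(request, RequestOptions.DEFAULT);
        // 4. Handle the response
        System.out.println(response.isAcknowledged());
    } // 5. The client is closed here
}
```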

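Delete the index:

```java
// Imports used by this test method (place them at the top of the test class)
import java.io.IOException;
import org.apache.http.HttpHost;
import org.elasticsearch.action.admin.indices.delete.DeleteIndexRequest;
import org.elasticsearch.action.support.master.AcknowledgedResponse;
import org.elasticsearch.client.RequestOptions;
import org.elasticsearch.client.RestClient;
import org.elasticsearch.client.RestHighLevelClient;
import org.junit.Test;

// Delete the index
@Test
public void deleteIndex() throws IOException {
    // 1. Create the client and connect to ES
    try (RestHighLevelClient client = new RestHighLevelClient(
            RestClient.builder(new HttpHost("192.168.126.24", 9200, "http")))) {
        // 2. Create the request object
        DeleteIndexRequest request = new DeleteIndexRequest("studentx");
        // 3. Send the request
        AcknowledgedResponse response = client.indices().delete(request, RequestOptions.DEFAULT);
        // 4. Handle the response
        System.out.println(response.isAcknowledged());
    } // 5. The client is closed here
}
```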

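3. Document Operations

Add or update a document:

```java
// Imports used by this test method (place them at the top of the test class)
import java.io.IOException;
import org.apache.http.HttpHost;
import org.elasticsearch.action.index.IndexRequest;
import org.elasticsearch.action.index.IndexResponse;
import org.elasticsearch.client.RequestOptions;
import org.elasticsearch.client.RestClient;
import org.elasticsearch.client.RestHighLevelClient;
import org.elasticsearch.xcontent.XContentFactory; // 7.16+; older 7.x clients: org.elasticsearch.common.xcontent
import org.junit.Test;

// Add or update a document (indexing the same id again overwrites it)
@Test
public void addDocument() throws IOException {
    // 1. Create the client and connect to ES
    try (RestHighLevelClient client = new RestHighLevelClient(
            RestClient.builder(new HttpHost("192.168.126.24", 9200, "http")))) {
        // 2. Create the request object for document id 1
        IndexRequest request = new IndexRequest("studentx").id("1");
        request.source(XContentFactory.jsonBuilder()
                .startObject()
                .field("id", 1)
                .field("name", "z z x")
                .field("age", 18)
                .endObject());
        // 3. Send the request
        IndexResponse response = client.index(request, RequestOptions.DEFAULT);
        // 4. Handle the response
        System.out.println(response.status());
    } // 5. The client is closed here
}
```

The document was created, but the call also threw `Unable to parse response body for Response`, meaning the client could not parse the response body. This is most likely caused by the version mismatch: the ES server is 8.6.1 while the Java client is 7.17.9. The 7.17 client offers an API compatibility mode for 8.x servers (`setApiCompatibilityMode(true)` on `RestHighLevelClientBuilder`), which may avoid this error.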

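Find a document by id:

```java
// Imports used by this test method (place them at the top of the test class)
import java.io.IOException;
import org.apache.http.HttpHost;
import org.elasticsearch.action.get.GetRequest;
import org.elasticsearch.action.get.GetResponse;
import org.elasticsearch.client.RequestOptions;
import org.elasticsearch.client.RestClient;
import org.elasticsearch.client.RestHighLevelClient;
import org.junit.Test;

// Find a document by id
@Test
public void findByIdDocument() throws IOException {
    // 1. Create the client and connect to ES
    try (RestHighLevelClient client = new RestHighLevelClient(
            RestClient.builder(new HttpHost("192.168.126.24", 9200, "http")))) {
        // 2. Create the request object: index "studentx", document id "1"
        GetRequest request = new GetRequest("studentx", "1");
        // 3. Send the request
        GetResponse response = client.get(request, RequestOptions.DEFAULT);
        // 4. Handle the response
        System.out.println(response.getSourceAsString());
    } // 5. The client is closed here
}
```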

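Delete a document:

```java
// Imports used by this test method (place them at the top of the test class)
import java.io.IOException;
import org.apache.http.HttpHost;
import org.elasticsearch.action.delete.DeleteRequest;
import org.elasticsearch.action.delete.DeleteResponse;
import org.elasticsearch.client.RequestOptions;
import org.elasticsearch.client.RestClient;
import org.elasticsearch.client.RestHighLevelClient;
import org.junit.Test;

// Delete a document
@Test
public void deleteDocument() throws IOException {
    // 1. Create the client and connect to ES
    try (RestHighLevelClient client = new RestHighLevelClient(
            RestClient.builder(new HttpHost("192.168.126.24", 9200, "http")))) {
        // 2. Create the request object: index "studentx", document id "1"
        DeleteRequest request = new DeleteRequest("studentx", "1");
        // 3. Send the request
        DeleteResponse response = client.delete(request, RequestOptions.DEFAULT);
        // 4. Handle the response
        System.out.println(response.status());
    } // 5. The client is closed here
}
```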

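4. Searching Documents

Search all documents:

```java
// Imports used by this test method (place them at the top of the test class)
import java.io.IOException;
import org.apache.http.HttpHost;
import org.elasticsearch.action.search.SearchRequest;
import org.elasticsearch.action.search.SearchResponse;
import org.elasticsearch.client.RequestOptions;
import org.elasticsearch.client.RestClient;
import org.elasticsearch.client.RestHighLevelClient;
import org.elasticsearch.index.query.QueryBuilders;
import org.elasticsearch.search.SearchHit;
import org.elasticsearch.search.SearchHits;
import org.elasticsearch.search.builder.SearchSourceBuilder;
import org.junit.Test;

// Search all documents
@Test
public void queryAllDocument() throws IOException {
    // 1. Create the client and connect to ES
    try (RestHighLevelClient client = new RestHighLevelClient(
            RestClient.builder(new HttpHost("192.168.126.24", 9200, "http")))) {
        // 2. Build the search condition: match_all
        SearchSourceBuilder searchSourceBuilder = new SearchSourceBuilder();
        searchSourceBuilder.query(QueryBuilders.matchAllQuery());
        // 3. Create the request object for the studentx index
        SearchRequest request = new SearchRequest("studentx").source(searchSourceBuilder);
        // 4. Send the request
        SearchResponse response = client.search(request, RequestOptions.DEFAULT);
        // 5. Print the source of every hit
        SearchHits hits = response.getHits();
        for (SearchHit hit : hits) {
            System.out.println(hit.getSourceAsString());
        }
    } // 6. The client is closed here
}
```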

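Search documents by keyword:

```java
// Imports used by this test method (place them at the top of the test class)
import java.io.IOException;
import org.apache.http.HttpHost;
import org.elasticsearch.action.search.SearchRequest;
import org.elasticsearch.action.search.SearchResponse;
import org.elasticsearch.client.RequestOptions;
import org.elasticsearch.client.RestClient;
import org.elasticsearch.client.RestHighLevelClient;
import org.elasticsearch.index.query.QueryBuilders;
import org.elasticsearch.search.SearchHit;
import org.elasticsearch.search.SearchHits;
import org.elasticsearch.search.builder.SearchSourceBuilder;
import org.junit.Test;

// Search documents by keyword
@Test
public void queryTermDocument() throws IOException {
    // 1. Create the client and connect to ES
    try (RestHighLevelClient client = new RestHighLevelClient(
            RestClient.builder(new HttpHost("192.168.126.24", 9200, "http")))) {
        // 2. Build the search condition: term query on "name"; the standard analyzer
        //    indexed "z z x" as z, z, x, so the exact term "x" matches
        SearchSourceBuilder searchSourceBuilder = new SearchSourceBuilder();
        searchSourceBuilder.query(QueryBuilders.termQuery("name", "x"));
        // 3. Create the request object for the studentx index
        SearchRequest request = new SearchRequest("studentx").source(searchSourceBuilder);
        // 4. Send the request
        SearchResponse response = client.search(request, RequestOptions.DEFAULT);
        // 5. Print the source of every hit
        SearchHits hits = response.getHits();
        for (SearchHit hit : hits) {
            System.out.println(hit.getSourceAsString());
        }
    } // 6. The client is closed here
}
```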

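Summary:

- 1) The default analyzer is the standard analyzer, an English analyzer: it splits English text on whitespace and punctuation and lowercases the terms.

2) The IK analyzer is a Chinese analyzer with two algorithms: ik_smart (coarsest split), in which each character of a sentence belongs to exactly one token, and ik_max_word (finest-grained split), in which a character may appear in several tokens. Custom dictionaries are configured in IKAnalyzer.cfg.xml: ext_stopwords lists stopwords, which are dropped from the tokens, and ext_dict lists extension words, which are added to the dictionary.

3) The pinyin analyzer converts Chinese into pinyin and tokenizes it, producing tokens from the pinyin of individual characters as well as of the text as a whole.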

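4) A custom analyzer can combine IK and pinyin: the pinyin filter further processes each token produced by the IK tokenizer.

- `GET /students/_search`, i.e. GET + index + _search, is the fixed format for searching documents.

1) match_all searches all documents in the index.

match analyzes the query text and then searches for each resulting term. match also supports fuzzy matching, configured with fuzziness; the maximum number of edits is 2.

range performs range searches on numeric fields: gt means greater than, lt means less than, and the trailing e adds "or equal to".

match_phrase is phrase search: the query text is analyzed, and a document matches only if the resulting terms appear in the field in the same order at consecutive positions.

term is single-term search: the query value is not analyzed and must exactly equal one indexed term. There is no two-character limit; a longer value fails only when the analyzer did not index it as a single token.

terms searches several terms at once; a document matches if it contains any of them.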

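Compound search: with must, all clauses must match; with should, at least one clause must match; with must_not, no clause may match.

- Because ES analyzes text fields, sorting on any one of a text field's tokens would be arbitrary, so ES does not allow sorting on text fields by default. Use a keyword field for sorting instead, since keyword fields are not analyzed.

By default ES sorts by relevance score; sort can be used to order results by a field in ascending or descending order.

Paginate with from and size: the first from hits are skipped and the next size hits are returned.

highlight marks the matched keywords by wrapping them in a prefix tag and a suffix tag, which the front end then styles with CSS.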

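Recent ES versions can query documents with SQL, but only a subset of SQL statements is supported, and the free distribution does not support running SQL queries from Java.

- In native Java, indexes are managed with the CreateIndexRequest, PutMappingRequest, and DeleteIndexRequest classes.

Documents are managed with the IndexRequest, GetRequest, and DeleteRequest classes.

Searches use the SearchRequest class.

Every access to ES follows the same steps: create a client connected to ES, create a request object, send the request, process the response that ES returns, and finally close the client.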