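Using Azure Databricks for ETL

This is a demo for learning and discussion. If you have questions, send me a private message or leave a comment.

This article uses Azure Databricks to perform an ETL (extract, transform, and load) operation. You extract data from Azure Data Lake Storage Gen2 into Azure Databricks, run transformations on the data in Azure Databricks, and then load the transformed data into Azure Synapse Analytics.

The steps in this article use the Azure Synapse connector for Azure Databricks to transfer data from Azure Databricks to Azure Synapse. The connector, in turn, uses Azure Blob storage as temporary storage for the data being transferred between the Azure Databricks cluster and Azure Synapse.

The following diagram shows the application flow: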

创建 Azure Databricks 服务

在本部分中,你将使用 Azure 门户创建 Azure Databricks 服务。

-

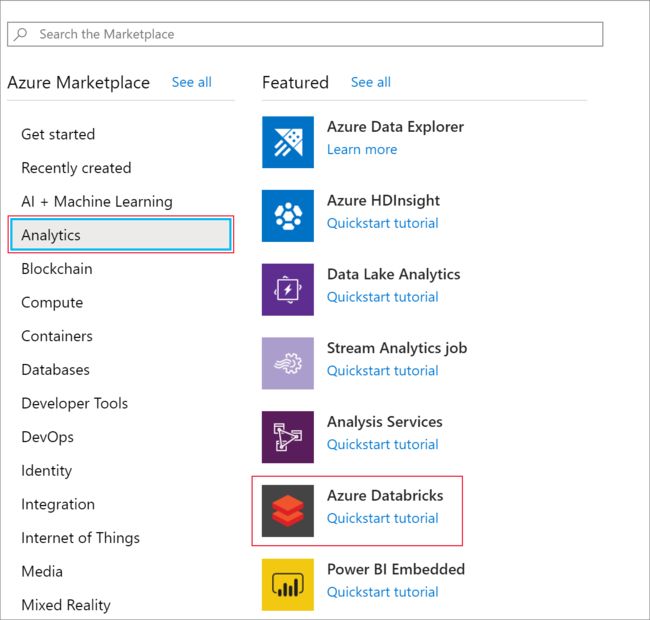

在 Azure 菜单中,选择“创建资源” 。

然后,选择“分析” > “Azure Databricks” 。

-

在“Azure Databricks 服务” 下,提供以下值来创建 Databricks 服务:

表 1 properties 说明 工作区名称 为 Databricks 工作区提供一个名称。 订阅 从下拉列表中选择自己的 Azure 订阅。 资源组 指定是要创建新的资源组还是使用现有的资源组。 资源组是用于保存 Azure 解决方案相关资源的容器。 有关详细信息,请参阅 Azure 资源组概述。 位置 选择“China East 2 ”。 有关其他可用区域,请参阅各区域推出的 Azure 服务。 定价层 选择“标准” 。 -

创建帐户需要几分钟时间。 若要监视操作状态,请查看顶部的进度栏。

-

选择“固定到仪表板” ,然后选择“创建” 。

在 Azure Databricks 中创建 Spark 群集

-

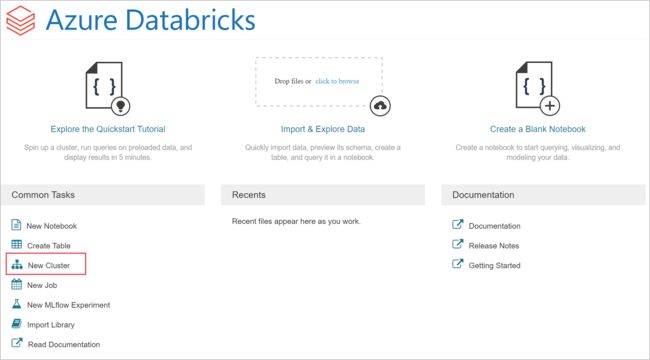

在 Azure 门户中,转到所创建的 Databricks 服务,然后选择“启动工作区”。

-

系统随后会将你重定向到 Azure Databricks 门户。 在门户中选择“群集”。

-

在“新建群集”页中,提供用于创建群集的值。

-

填写以下字段的值,对于其他字段接受默认值:

-

输入群集的名称。

-

请务必选中“在不活动超过 __ 分钟后终止” 复选框。 如果未使用群集,则请提供一个持续时间(以分钟为单位),超过该时间后群集会被终止。

-

选择“创建群集”。 群集运行后,可将笔记本附加到该群集,并运行 Spark 作业。

-

在 Azure Data Lake Storage Gen2 帐户中创建文件系统

在本部分中,你将在 Azure Databricks 工作区中创建一个 Notebook,然后运行代码片段来配置存储帐户

-

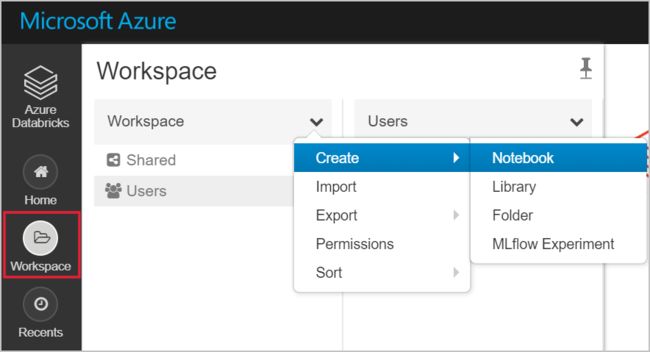

在 Azure 门户中,转到你创建的 Azure Databricks 服务,然后选择“启动工作区”。

-

在左侧选择“工作区” 。 在 工作区 下拉列表中,选择 创建 > 笔记本 。

-

在“创建 Notebook”对话框中,输入 Notebook 的名称。 选择“Scala”作为语言,然后选择前面创建的 Spark 群集。

-

选择“创建” 。

-

以下代码块设置 Spark 会话中访问的任何 ADLS Gen 2 帐户的默认服务主体凭据。 第二个代码块会将帐户名称追加到该设置,从而指定特定的 ADLS Gen 2 帐户的凭据。 将任一代码块复制并粘贴到 Azure Databricks 笔记本的第一个单元格中。

Session configuration

    // Service principal credentials (replace the placeholders with your values)
    val appID = "<appID>"
    val secret = "<secret>"
    val tenantID = "<tenant-id>"

    // Default OAuth settings for every ADLS Gen 2 account used in this session
    spark.conf.set("fs.azure.account.auth.type", "OAuth")
    spark.conf.set("fs.azure.account.oauth.provider.type", "org.apache.hadoop.fs.azurebfs.oauth2.ClientCredsTokenProvider")
    spark.conf.set("fs.azure.account.oauth2.client.id", appID)
    spark.conf.set("fs.azure.account.oauth2.client.secret", secret)
    spark.conf.set("fs.azure.account.oauth2.client.endpoint", "https://login.microsoftonline.com/" + tenantID + "/oauth2/token")
    spark.conf.set("fs.azure.createRemoteFileSystemDuringInitialization", "true")

Account configuration

    // Storage account and service principal values (replace the placeholders)
    val storageAccountName = "<storage-account-name>"
    val appID = "<appID>"
    val secret = "<secret>"
    val fileSystemName = "<file-system-name>"
    val tenantID = "<tenant-id>"

    // OAuth settings scoped to this one storage account
    spark.conf.set("fs.azure.account.auth.type." + storageAccountName + ".dfs.core.chinacloudapi.cn", "OAuth")
    spark.conf.set("fs.azure.account.oauth.provider.type." + storageAccountName + ".dfs.core.chinacloudapi.cn", "org.apache.hadoop.fs.azurebfs.oauth2.ClientCredsTokenProvider")
    spark.conf.set("fs.azure.account.oauth2.client.id." + storageAccountName + ".dfs.core.chinacloudapi.cn", appID)
    spark.conf.set("fs.azure.account.oauth2.client.secret." + storageAccountName + ".dfs.core.chinacloudapi.cn", secret)
    spark.conf.set("fs.azure.account.oauth2.client.endpoint." + storageAccountName + ".dfs.core.chinacloudapi.cn", "https://login.microsoftonline.com/" + tenantID + "/oauth2/token")

    // Listing the root creates the file system if it does not exist yet
    spark.conf.set("fs.azure.createRemoteFileSystemDuringInitialization", "true")
    dbutils.fs.ls("abfss://" + fileSystemName + "@" + storageAccountName + ".dfs.core.chinacloudapi.cn/")
    spark.conf.set("fs.azure.createRemoteFileSystemDuringInitialization", "false")

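In this code block, replace the placeholder values (storageAccountName, appID, secret, fileSystemName, and tenantID) with your own values.

Press SHIFT + ENTER to run the code in this block.

Note: These values depend on whether you use global Azure or Azure China. This article uses Azure China. On global Azure, replace dfs.core.chinacloudapi.cn with dfs.core.windows.net.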
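Since the storage endpoint suffix differs between clouds, it can help to keep it in one place instead of repeating it in every setting. The following is a minimal sketch, not part of the original notebook: the `AdlsEndpoints` object and its method names are hypothetical, and it assumes the usual ADLS Gen2 suffixes `dfs.core.chinacloudapi.cn` (Azure China) and `dfs.core.windows.net` (global Azure).

```scala
// Hypothetical helper (not from the original article): builds ADLS Gen2
// host names, per-account setting keys, and abfss:// URIs for either cloud.
object AdlsEndpoints {
  // DNS suffixes of the ADLS Gen2 (dfs) endpoint in each cloud.
  val ChinaSuffix: String = "dfs.core.chinacloudapi.cn"
  val GlobalSuffix: String = "dfs.core.windows.net"

  // Fully qualified host name of the storage account.
  def accountHost(storageAccountName: String, china: Boolean): String = {
    val suffix = if (china) ChinaSuffix else GlobalSuffix
    s"$storageAccountName.$suffix"
  }

  // Per-account Spark setting key, for example for "fs.azure.account.auth.type".
  def accountKey(baseKey: String, storageAccountName: String, china: Boolean): String =
    s"$baseKey.${accountHost(storageAccountName, china)}"

  // abfss:// URI for a path inside a file system; an empty path means the root.
  def abfssUri(fileSystemName: String, storageAccountName: String,
               china: Boolean, path: String = ""): String =
    s"abfss://$fileSystemName@${accountHost(storageAccountName, china)}/$path"
}
```

In the notebook, `AdlsEndpoints.abfssUri(fileSystemName, storageAccountName, china = true)` would produce the same string that the account configuration block passes to `dbutils.fs.ls`.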

Ingest sample data into the Azure Data Lake Storage Gen2 account

Enter the following code into a notebook cell:

    %sh wget -P /tmp https://raw.githubusercontent.com/Azure/usql/master/Examples/Samples/Data/json/radiowebsite/small_radio_json.json
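The `wget` command saves the file to `/tmp` on the cluster's driver node, so it still has to be copied into the storage account before it can be read over `abfss://`. A minimal sketch of that copy follows, assuming the `fileSystemName` and `storageAccountName` values from the account configuration step. The `SampleDataPaths` object is a hypothetical helper, and the `dbutils.fs.cp` call is shown as a comment because it only runs inside a Databricks notebook.

```scala
// Hypothetical helper (not from the original article): computes the source
// and destination of the copy from the driver's local disk to ADLS Gen2.
object SampleDataPaths {
  val fileName: String = "small_radio_json.json"

  // The file downloaded by wget, addressed through the local file scheme.
  val localSource: String = s"file:///tmp/$fileName"

  // Root of the ADLS Gen2 file system on Azure China, as used in this article.
  def adlsDestination(fileSystemName: String, storageAccountName: String): String =
    s"abfss://$fileSystemName@$storageAccountName.dfs.core.chinacloudapi.cn/"
}

// In a Databricks notebook cell, the copy itself would then be:
// dbutils.fs.cp(
//   SampleDataPaths.localSource,
//   SampleDataPaths.adlsDestination(fileSystemName, storageAccountName))
```

Copying to the root of the file system matches the path that the later `spark.read.json` call expects.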

The content of small_radio_json.json is shown below. If the wget download fails, you can copy this content, paste it into a file, and upload the file to Azure Data Lake Storage Gen2.

{"ts":1409318650332,"userId":"309","sessionId":1879,"page":"NextSong","auth":"Logged In","method":"PUT","status":200,"level":"free","itemInSession":2,"location":"Killeen-Temple, TX","lastName":"Montgomery","firstName":"Annalyse","registration":1384448062332,"gender":"F","artist":"El Arrebato","song":"Quiero Quererte Querer","length":234.57914}

{"ts":1409318653332,"userId":"11","sessionId":10,"page":"NextSong","auth":"Logged In","method":"PUT","status":200,"level":"paid","itemInSession":9,"location":"Anchorage, AK","lastName":"Thomas","firstName":"Dylann","registration":1400723739332,"gender":"M","artist":"Creedence Clearwater Revival","song":"Born To Move","length":340.87138}

{"ts":1409318685332,"userId":"201","sessionId":2047,"page":"NextSong","auth":"Logged In","method":"PUT","status":200,"level":"paid","itemInSession":11,"location":"New York-Newark-Jersey City, NY-NJ-PA","lastName":"Watts","firstName":"Liam","registration":1406279422332,"gender":"M","artist":"Gorillaz","song":"DARE","length":246.17751}

{"ts":1409318686332,"userId":"779","sessionId":2136,"page":"Home","auth":"Logged In","method":"GET","status":200,"level":"free","itemInSession":0,"location":"Nashville-Davidson--Murfreesboro--Franklin, TN","lastName":"Townsend","firstName":"Tess","registration":1406970190332,"gender":"F"}

{"ts":1409318697332,"userId":"401","sessionId":400,"page":"NextSong","auth":"Logged In","method":"PUT","status":200,"level":"free","itemInSession":2,"location":"Atlanta-Sandy Springs-Roswell, GA","lastName":"Smith","firstName":"Margaux","registration":1406191211332,"gender":"F","artist":"Otis Redding","song":"Send Me Some Lovin'","length":135.57506}

{"ts":1409318714332,"userId":"521","sessionId":520,"page":"NextSong","auth":"Logged In","method":"PUT","status":200,"level":"paid","itemInSession":39,"location":"Chicago-Naperville-Elgin, IL-IN-WI","lastName":"Morse","firstName":"Alan","registration":1401760632332,"gender":"M","artist":"Slightly Stoopid","song":"Mellow Mood","length":198.53016}

{"ts":1409318743332,"userId":"244","sessionId":2261,"page":"NextSong","auth":"Logged In","method":"PUT","status":200,"level":"free","itemInSession":1,"location":"San Jose-Sunnyvale-Santa Clara, CA","lastName":"Shelton","firstName":"Gabriella","registration":1389460542332,"gender":"F","artist":"NOFX","song":"Linoleum","length":130.2722}

{"ts":1409318804332,"userId":"969","sessionId":968,"page":"NextSong","auth":"Logged In","method":"PUT","status":200,"level":"paid","itemInSession":0,"location":"Detroit-Warren-Dearborn, MI","lastName":"Williams","firstName":"Elijah","registration":1388691347332,"gender":"M","artist":"Nirvana","song":"The Man Who Sold The World","length":260.98893}

{"ts":1409318832332,"userId":"401","sessionId":400,"page":"NextSong","auth":"Logged In","method":"PUT","status":200,"level":"free","itemInSession":3,"location":"Atlanta-Sandy Springs-Roswell, GA","lastName":"Smith","firstName":"Margaux","registration":1406191211332,"gender":"F","artist":"Aventura","song":"La Nina","length":293.56363}

{"ts":1409318891332,"userId":"779","sessionId":2136,"page":"NextSong","auth":"Logged In","method":"PUT","status":200,"level":"free","itemInSession":1,"location":"Nashville-Davidson--Murfreesboro--Franklin, TN","lastName":"Townsend","firstName":"Tess","registration":1406970190332,"gender":"F","artist":"Harmonia","song":"Sehr kosmisch","length":655.77751}

{"ts":1409318912332,"userId":"521","sessionId":520,"page":"NextSong","auth":"Logged In","method":"PUT","status":200,"level":"paid","itemInSession":40,"location":"Chicago-Naperville-Elgin, IL-IN-WI","lastName":"Morse","firstName":"Alan","registration":1401760632332,"gender":"M","artist":"Spragga Benz","song":"Backshot","length":122.53995}

{"ts":1409318931332,"userId":"201","sessionId":2047,"page":"NextSong","auth":"Logged In","method":"PUT","status":200,"level":"paid","itemInSession":12,"location":"New York-Newark-Jersey City, NY-NJ-PA","lastName":"Watts","firstName":"Liam","registration":1406279422332,"gender":"M","artist":"Bananarama","song":"Love In The First Degree","length":208.92689}

{"ts":1409318931332,"userId":"201","sessionId":2047,"page":"Home","auth":"Logged In","method":"GET","status":200,"level":"paid","itemInSession":13,"location":"New York-Newark-Jersey City, NY-NJ-PA","lastName":"Watts","firstName":"Liam","registration":1406279422332,"gender":"M"}

{"ts":1409318993332,"userId":"11","sessionId":10,"page":"NextSong","auth":"Logged In","method":"PUT","status":200,"level":"paid","itemInSession":10,"location":"Anchorage, AK","lastName":"Thomas","firstName":"Dylann","registration":1400723739332,"gender":"M","artist":"Alliance Ethnik","song":"Représente","length":252.21179}

{"ts":1409319034332,"userId":"521","sessionId":520,"page":"NextSong","auth":"Logged In","method":"PUT","status":200,"level":"paid","itemInSession":41,"location":"Chicago-Naperville-Elgin, IL-IN-WI","lastName":"Morse","firstName":"Alan","registration":1401760632332,"gender":"M","artist":"Sense Field","song":"Am I A Fool","length":181.86404}

{"ts":1409319064332,"userId":"969","sessionId":968,"page":"NextSong","auth":"Logged In","method":"PUT","status":200,"level":"paid","itemInSession":1,"location":"Detroit-Warren-Dearborn, MI","lastName":"Williams","firstName":"Elijah","registration":1388691347332,"gender":"M","artist":"Binary Star","song":"Solar Powered","length":268.93016}

{"ts":1409319125332,"userId":"401","sessionId":400,"page":"NextSong","auth":"Logged In","method":"PUT","status":200,"level":"free","itemInSession":4,"location":"Atlanta-Sandy Springs-Roswell, GA","lastName":"Smith","firstName":"Margaux","registration":1406191211332,"gender":"F","artist":"Sarah Borges and the Broken Singles","song":"Do It For Free","length":158.95465}

{"ts":1409319215332,"userId":"521","sessionId":520,"page":"NextSong","auth":"Logged In","method":"PUT","status":200,"level":"paid","itemInSession":42,"location":"Chicago-Naperville-Elgin, IL-IN-WI","lastName":"Morse","firstName":"Alan","registration":1401760632332,"gender":"M","artist":"Incubus","song":"Drive","length":232.46322}

{"ts":1409319245332,"userId":"11","sessionId":10,"page":"NextSong","auth":"Logged In","method":"PUT","status":200,"level":"paid","itemInSession":11,"location":"Anchorage, AK","lastName":"Thomas","firstName":"Dylann","registration":1400723739332,"gender":"M","artist":"Ella Fitzgerald","song":"On Green Dolphin Street (Medley) (1999 Digital Remaster)","length":427.15383}

{"ts":1409319283332,"userId":"401","sessionId":400,"page":"NextSong","auth":"Logged In","method":"PUT","status":200,"level":"free","itemInSession":5,"location":"Atlanta-Sandy Springs-Roswell, GA","lastName":"Smith","firstName":"Margaux","registration":1406191211332,"gender":"F","artist":"10cc","song":"Silly Love","length":241.34485}

{"ts":1409319293332,"userId":"906","sessionId":1909,"page":"NextSong","auth":"Logged In","method":"PUT","status":200,"level":"free","itemInSession":0,"location":"Toledo, OH","lastName":"Oconnell","firstName":"Aurora","registration":1406406461332,"gender":"F","artist":"Eric Johnson","song":"Trail Of Tears (Album Version)","length":361.37751}

{"ts":1409319332332,"userId":"969","sessionId":968,"page":"NextSong","auth":"Logged In","method":"PUT","status":200,"level":"paid","itemInSession":2,"location":"Detroit-Warren-Dearborn, MI","lastName":"Williams","firstName":"Elijah","registration":1388691347332,"gender":"M","artist":"Phoenix","song":"Holdin' On Together","length":207.15057}

{"ts":1409319365332,"userId":"750","sessionId":749,"page":"NextSong","auth":"Logged In","method":"PUT","status":200,"level":"free","itemInSession":0,"location":"Grants Pass, OR","lastName":"Coleman","firstName":"Alex","registration":1404326435332,"gender":"M","artist":"Ween","song":"The Stallion","length":276.13995}

{"ts":1409319447332,"userId":"521","sessionId":520,"page":"NextSong","auth":"Logged In","method":"PUT","status":200,"level":"paid","itemInSession":43,"location":"Chicago-Naperville-Elgin, IL-IN-WI","lastName":"Morse","firstName":"Alan","registration":1401760632332,"gender":"M","artist":"dEUS","song":"Secret Hell","length":299.83302}

{"ts":1409319539332,"userId":"969","sessionId":968,"page":"NextSong","auth":"Logged In","method":"PUT","status":200,"level":"paid","itemInSession":3,"location":"Detroit-Warren-Dearborn, MI","lastName":"Williams","firstName":"Elijah","registration":1388691347332,"gender":"M","artist":"Holly Cole","song":"Cry (If You Want To)","length":158.98077}

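Extract data from the Azure Data Lake Storage Gen2 account

You can now load the sample JSON file as a data frame in Azure Databricks. Paste the following code into a new cell. It uses the fileSystemName and storageAccountName values that you set earlier.

    val df = spark.read.json("abfss://" + fileSystemName + "@" + storageAccountName + ".dfs.core.chinacloudapi.cn/small_radio_json.json")

Press SHIFT + ENTER to run the code in this block.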

Run the following code to see the contents of the data frame:

    df.show()

You see output similar to the following snippet:

    +---------------------+---------+---------+------+-------------+----------+---------+-------+--------------------+------+--------+-------------+---------+--------------------+------+-------------+------+
    |               artist|     auth|firstName|gender|itemInSession|  lastName|   length|  level|            location|method|    page| registration|sessionId|                song|status|           ts|userId|
    +---------------------+---------+---------+------+-------------+----------+---------+-------+--------------------+------+--------+-------------+---------+--------------------+------+-------------+------+
    | El Arrebato         |Logged In| Annalyse|     F|            2|Montgomery|234.57914| free  |  Killeen-Temple, TX|   PUT|NextSong|1384448062332|     1879|Quiero Quererte Q...|   200|1409318650332|   309|
    | Creedence Clearwa...|Logged In|   Dylann|     M|            9|    Thomas|340.87138| paid  |       Anchorage, AK|   PUT|NextSong|1400723739332|       10|        Born To Move|   200|1409318653332|    11|
    | Gorillaz            |Logged In|     Liam|     M|           11|     Watts|246.17751| paid  |New York-Newark-J...|   PUT|NextSong|1406279422332|     2047|                DARE|   200|1409318685332|   201|
    ...
    ...

You have now extracted the data from Azure Data Lake Storage Gen2 into Azure Databricks.

Transform data in Azure Databricks

The raw sample data file, small_radio_json.json, captures the audience of a radio station and has a variety of columns. In this section, you transform the data to retrieve only specific columns from the dataset.

First, retrieve only the firstName, lastName, gender, location, and level columns from the dataframe that you created.

    val specificColumnsDf = df.select("firstname", "lastname", "gender", "location", "level")
    specificColumnsDf.show()

You receive output as shown in the following snippet:

    +---------+----------+------+--------------------+-----+
    |firstname|  lastname|gender|            location|level|
    +---------+----------+------+--------------------+-----+
    | Annalyse|Montgomery|     F|  Killeen-Temple, TX| free|
    |   Dylann|    Thomas|     M|       Anchorage, AK| paid|
    |     Liam|     Watts|     M|New York-Newark-J...| paid|
    |     Tess|  Townsend|     F|Nashville-Davidso...| free|
    |  Margaux|     Smith|     F|Atlanta-Sandy Spr...| free|
    |     Alan|     Morse|     M|Chicago-Napervill...| paid|
    |Gabriella|   Shelton|     F|San Jose-Sunnyval...| free|
    |   Elijah|  Williams|     M|Detroit-Warren-De...| paid|
    |  Margaux|     Smith|     F|Atlanta-Sandy Spr...| free|
    |     Tess|  Townsend|     F|Nashville-Davidso...| free|
    |     Alan|     Morse|     M|Chicago-Napervill...| paid|
    |     Liam|     Watts|     M|New York-Newark-J...| paid|
    |     Liam|     Watts|     M|New York-Newark-J...| paid|
    |   Dylann|    Thomas|     M|       Anchorage, AK| paid|
    |     Alan|     Morse|     M|Chicago-Napervill...| paid|
    |   Elijah|  Williams|     M|Detroit-Warren-De...| paid|
    |  Margaux|     Smith|     F|Atlanta-Sandy Spr...| free|
    |     Alan|     Morse|     M|Chicago-Napervill...| paid|
    |   Dylann|    Thomas|     M|       Anchorage, AK| paid|
    |  Margaux|     Smith|     F|Atlanta-Sandy Spr...| free|
    +---------+----------+------+--------------------+-----+

You can further transform this data by renaming the level column to subscription_type.

    val renamedColumnsDF = specificColumnsDf.withColumnRenamed("level", "subscription_type")
    renamedColumnsDF.show()

You receive output as shown in the following snippet:

    +---------+----------+------+--------------------+-----------------+
    |firstname|  lastname|gender|            location|subscription_type|
    +---------+----------+------+--------------------+-----------------+
    | Annalyse|Montgomery|     F|  Killeen-Temple, TX|             free|
    |   Dylann|    Thomas|     M|       Anchorage, AK|             paid|
    |     Liam|     Watts|     M|New York-Newark-J...|             paid|
    |     Tess|  Townsend|     F|Nashville-Davidso...|             free|
    |  Margaux|     Smith|     F|Atlanta-Sandy Spr...|             free|
    |     Alan|     Morse|     M|Chicago-Napervill...|             paid|
    |Gabriella|   Shelton|     F|San Jose-Sunnyval...|             free|
    |   Elijah|  Williams|     M|Detroit-Warren-De...|             paid|
    |  Margaux|     Smith|     F|Atlanta-Sandy Spr...|             free|
    |     Tess|  Townsend|     F|Nashville-Davidso...|             free|
    |     Alan|     Morse|     M|Chicago-Napervill...|             paid|
    |     Liam|     Watts|     M|New York-Newark-J...|             paid|
    |     Liam|     Watts|     M|New York-Newark-J...|             paid|
    |   Dylann|    Thomas|     M|       Anchorage, AK|             paid|
    |     Alan|     Morse|     M|Chicago-Napervill...|             paid|
    |   Elijah|  Williams|     M|Detroit-Warren-De...|             paid|
    |  Margaux|     Smith|     F|Atlanta-Sandy Spr...|             free|
    |     Alan|     Morse|     M|Chicago-Napervill...|             paid|
    |   Dylann|    Thomas|     M|       Anchorage, AK|             paid|
    |  Margaux|     Smith|     F|Atlanta-Sandy Spr...|             free|
    +---------+----------+------+--------------------+-----------------+

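Load data into Azure Synapse

In this section, you upload the transformed data into Azure Synapse. You use the Azure Synapse connector for Azure Databricks to upload a dataframe directly and store it as a table in an Azure Synapse pool.

As mentioned earlier, the Azure Synapse connector uses Azure Blob storage as temporary storage to upload data from Azure Databricks to Azure Synapse. So you start by providing the configuration needed to connect to the storage account. You must already have created the account as part of the prerequisites for this article.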

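Provide the configuration needed to access the Azure Storage account from Azure Databricks.

    val blobStorage = "<blob-storage-account-name>.blob.core.chinacloudapi.cn"
    val blobContainer = "<blob-container-name>"
    val blobAccessKey = "<access-key>"

Specify a temporary folder to use while moving data between Azure Databricks and Azure Synapse.

    val tempDir = "wasbs://" + blobContainer + "@" + blobStorage + "/tempDirs"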

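Run the following snippet to store the Azure Blob storage access key in the session configuration, so that later steps read the key from the configuration instead of repeating it in the notebook.

    val acntInfo = "fs.azure.account.key." + blobStorage
    sc.hadoopConfiguration.set(acntInfo, blobAccessKey)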
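The staging settings above are plain string concatenation, which the sketch below collects in one place. It also shows one way to avoid a literal key in the notebook, by reading it from an environment variable. This is an illustration under assumptions: the `BlobStaging` object and the `BLOB_ACCESS_KEY` variable name are hypothetical and do not appear in the original article.

```scala
// Hypothetical helper (not from the original article).
object BlobStaging {
  // Hadoop configuration key under which the account access key is stored.
  def accountKeySetting(blobStorage: String): String =
    s"fs.azure.account.key.$blobStorage"

  // Temporary folder used when moving data between Databricks and Synapse.
  def tempDir(blobContainer: String, blobStorage: String): String =
    s"wasbs://$blobContainer@$blobStorage/tempDirs"

  // Reads the access key from an environment variable instead of a literal.
  // Returns None when the variable is missing or empty.
  def accessKeyFromEnv(varName: String,
                       env: Map[String, String] = sys.env): Option[String] =
    env.get(varName).filter(_.nonEmpty)
}
```

In the notebook, the result of `BlobStaging.accessKeyFromEnv("BLOB_ACCESS_KEY")` could be passed to `sc.hadoopConfiguration.set` together with `BlobStaging.accountKeySetting(blobStorage)`. On Databricks, a secret scope read through `dbutils.secrets.get` is another option for keeping the key out of the notebook.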

Provide the values needed to connect to the Azure Synapse instance. As a prerequisite, you must already have created an Azure Synapse Analytics service. Use the fully qualified server name for dwServer, for example <server-name>.database.chinacloudapi.cn.

    // Azure Synapse related settings
    val dwDatabase = "<database-name>"
    val dwServer = "<database-server-name>"
    val dwUser = "<user-name>"
    val dwPass = "<password>"
    val dwJdbcPort = "1433"
    val dwJdbcExtraOptions = "encrypt=true;trustServerCertificate=true;hostNameInCertificate=*.database.chinacloudapi.cn;loginTimeout=30;"
    // Full URL, including the extra JDBC options
    val sqlDwUrl = "jdbc:sqlserver://" + dwServer + ":" + dwJdbcPort + ";database=" + dwDatabase + ";user=" + dwUser + ";password=" + dwPass + ";" + dwJdbcExtraOptions
    // Short URL, without the extra JDBC options
    val sqlDwUrlSmall = "jdbc:sqlserver://" + dwServer + ":" + dwJdbcPort + ";database=" + dwDatabase + ";user=" + dwUser + ";password=" + dwPass
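Because the connection string is assembled from several parts, it is easy to get a separator or an interpolation wrong. In Scala, `$name` is only substituted inside a string that carries the `s` prefix. The sketch below builds both URL variants from one function. The `SynapseJdbc` object and its parameter names are hypothetical and are not part of the original notebook.

```scala
// Hypothetical helper (not from the original article): assembles the JDBC
// URL for Azure Synapse from its parts.
object SynapseJdbc {
  def url(server: String, database: String, user: String, password: String,
          port: String = "1433", extraOptions: String = ""): String = {
    val base =
      s"jdbc:sqlserver://$server:$port;database=$database;user=$user;password=$password"
    // Append the extra options only when there are any, separated by ';'.
    if (extraOptions.isEmpty) base else s"$base;$extraOptions"
  }
}
```

With the values from the cell above, `SynapseJdbc.url(dwServer, dwDatabase, dwUser, dwPass)` gives the short form of the URL, and adding `extraOptions = dwJdbcExtraOptions` gives the full form.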

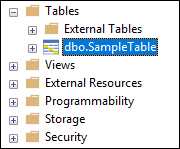

Run the following snippet to load the transformed dataframe, renamedColumnsDF, and store it as a table in Azure Synapse. This snippet creates a table called SampleTable in the SQL database.

    spark.conf.set("spark.sql.parquet.writeLegacyFormat", "true")

    renamedColumnsDF.write
      .format("com.databricks.spark.sqldw")
      .option("url", sqlDwUrlSmall)
      .option("dbtable", "SampleTable")
      .option("forward_spark_azure_storage_credentials", "True")
      .option("tempdir", tempDir)
      .mode("overwrite")
      .save()

Connect to the SQL database and verify that you see a table named SampleTable.

Run a select query to verify the contents of the table. The table should have the same data as the renamedColumnsDF dataframe.