nginx入门和使用实践

文章目录

- nginx入门和使用实践

-

- 一、前言

- 二、nginx的初步认识

-

- 1.单体应用到集群

- 2.nginx的安装以及配置分析

-

- nginx简介

- 安装nginx

- 配置分析

- 3.nginx虚拟主机配置

- 4.详解Location的配置规则

- 5.nginx模块

- 三、nginx的实践应用

-

- 1.反向代理功能配置

-

- 1.12服务器

- 1.11服务器

- 2.负载均衡实战

-

- 1.13服务器

- 1.11服务器

- 3.动静分离

-

- 1.11 服务器

- 1.12服务器和1.13服务器

- 4.配置文件分析

- 5.多进程模型原理

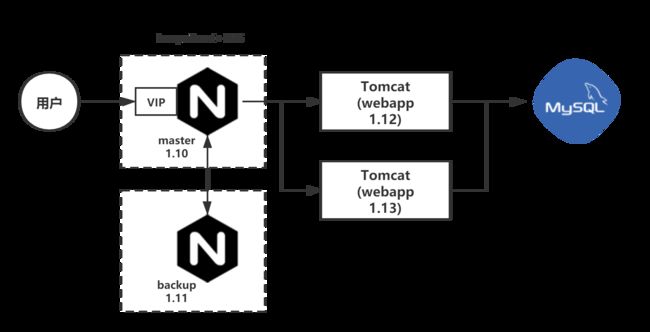

- 6.高可用集群实战

-

- 1)安装 keepalived

- 2)配置 keepalived

- 3)运行keepalived、nginx和tomcat

- 7.nginx停止服务 触发sh脚本

nginx入门和使用实践

I. Preface

- Environment:

Linux distribution: CentOS-7-x86_64-DVD-1804.iso

SSH tool: FinalShell

- References:

nginx:

http://nginx.org/en/

http://nginx.org/en/download.html

LVS: http://zh.linuxvirtualserver.org/

Keepalived: https://www.keepalived.org/

Tomcat: http://tomcat.apache.org/

HAProxy: http://www.haproxy.org/

CentOS command reference: https://blog.csdn.net/u011424614/article/details/94555916

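II. A First Look at nginx

1. From a monolithic application to a cluster

Clustering raises the number of concurrent user requests the system can handle and makes the service highly available.

- TPS: Transactions Per Second, the number of transactions (create, update, delete) the system can process per second

- QPS: Queries Per Second, the number of queries (reads) the system can serve per second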

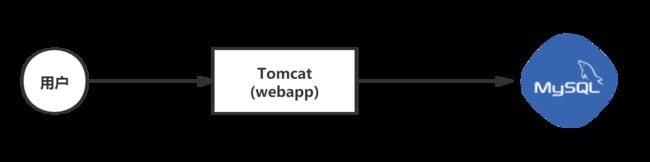

- Monolithic architecture

The server hosts both the Tomcat webapp and the database, and users send their requests directly to Tomcat.

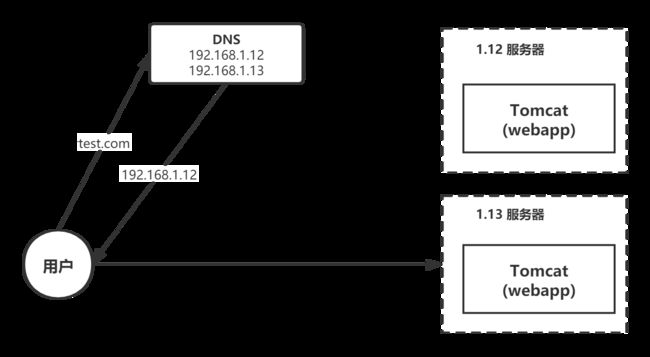

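- DNS load balancing

Several IP addresses are registered in DNS for the same name.

DNS round-robin cannot tell whether a given Tomcat instance is actually running, so requests may still be sent to a server that is down.

- F5 load balancing

Hardware load balancing: an F5 load balancer is placed in front of Tomcat and distributes user requests across the servers.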

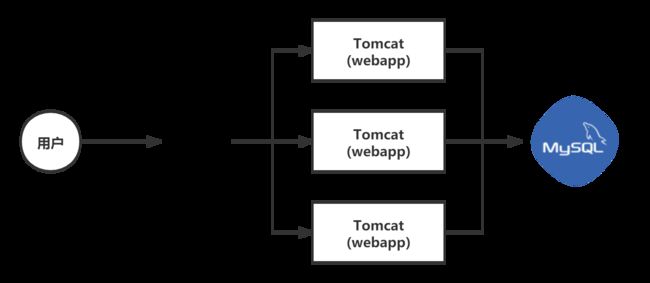

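- nginx load balancing

Software load balancing: middleware such as Apache, nginx or HAProxy distributes the user requests.

- nginx dual-machine hot standby

By failover mode, hot standby falls into two types: active-standby and active-active.

1. Active-standby: one server is active and handles requests, while the other stays on standby until it is activated.

2. Active-active: the two servers each handle different workloads and act as each other's backup; if one of them fails, the other takes over both workloads.

LVS + Keepalived provides heartbeat detection and IP failover (a virtual IP, or VIP, is exposed to clients). This gives the nginx servers an active-standby setup and keeps the nginx service highly available.

Software and hardware load balancing can be combined, for example by putting an F5 in front of nginx.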

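2. Installing nginx and analyzing its configuration

About nginx

nginx [engine x] is an HTTP and reverse proxy server, a mail proxy server, and a generic TCP/UDP proxy server.

nginx is a high-performance reverse proxy server.

Forward proxy: acts on behalf of the client (e.g. a VPN)

Reverse proxy: acts on behalf of the server

It can be used for authentication, authorization, rate limiting, separating static and dynamic content, content distribution, and more.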

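Installing nginx

Download page: http://nginx.org/en/download.html

Stable version

- Download the source (as root), unpack, compile and install

# mkdir /root/download
#-- wget [IPv4 only] [-P download directory] [download URL]
# wget -4 -P /root/download http://nginx.org/download/nginx-1.18.0.tar.gz
# cd /root/download
# tar -zxvf nginx-1.18.0.tar.gz
# cd nginx-1.18.0/
# mkdir /opt/nginx
# yum install pcre-devel
# yum install zlib-devel
#-- set the installation path
# ./configure --prefix=/opt/nginx
# make && make install

A devel package contains the header files and link libraries.

Take zlib and zlib-devel as an example: zlib is enough to run programs that use the library, whereas zlib-devel is required to compile software against it, which is the case when building nginx from source. A C compiler (gcc) and make must also be present for the build.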

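- Run

# cd /opt/nginx
# ./sbin/nginx

Open the server's IP in a browser. If the server is remote, make sure CentOS has port 80 open; for the commands that open a port, see the CentOS command reference in the preface.

- Other commands

| Command | Description |
|---|---|
| ./sbin/nginx -s stop | Stop the service |
| ./sbin/nginx -s reload | Reload the configuration without stopping the service |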
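Because a reload with a broken configuration file is rejected, it can help to test the file first. The sketch below wraps nginx's `-t` (test configuration) and `-s reload` options in a small helper. The variable name `NGINX_BIN` and the function name `safe_reload` are illustrative assumptions, not part of nginx.

```shell
#!/bin/sh
# Reload nginx only if the configuration passes the syntax check.
# NGINX_BIN is an assumed variable; its default matches the install prefix used in this article.
NGINX_BIN="${NGINX_BIN:-/opt/nginx/sbin/nginx}"

safe_reload() {
    # -t tests the configuration and exits non-zero when it contains errors
    if "$NGINX_BIN" -t; then
        "$NGINX_BIN" -s reload
    else
        echo "configuration test failed, reload skipped" >&2
        return 1
    fi
}
```

Calling `safe_reload` after editing conf/nginx.conf keeps the running workers on the old configuration whenever the new file does not parse.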

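Configuration analysis

- Configuration file

conf/nginx.conf

# vim ./conf/nginx.conf

- The file is organized into blocks, for example the events block and the http block, which in turn contains server blocks

http://nginx.org/en/docs/http/ngx_http_core_module.html#http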

#-- user and group that run nginx
#user  nobody;

#-- number of worker processes
worker_processes  1;

#error_log  logs/error.log;
#error_log  logs/error.log  notice;
#error_log  logs/error.log  info;

#pid        logs/nginx.pid;

#-- I/O model and the number of connections allowed per worker
events {
    worker_connections  1024;
}

http {
    #-- include the MIME type mappings
    include       mime.types;
    #-- default type: binary stream
    default_type  application/octet-stream;

    #-- log format
    #log_format  main  '$remote_addr - $remote_user [$time_local] "$request" '
    #                  '$status $body_bytes_sent "$http_referer" '
    #                  '"$http_user_agent" "$http_x_forwarded_for"';

    #-- access log output, here using the main log format defined above
    #access_log  logs/access.log  main;

    #-- enable zero-copy file transfer (sendfile)
    sendfile        on;
    #tcp_nopush     on;

    #keepalive_timeout  0;
    keepalive_timeout  65;

    #-- compression
    #gzip  on;

    server {
        #-- port to listen on
        listen       80;
        #-- host name this server block answers to
        server_name  localhost;

        #charset koi8-r;

        #access_log  logs/host.access.log  main;

        #-- matching rule
        location / {
            #-- directory the files are served from
            root   html;
            #-- default index files
            index  index.html index.htm;
        }

        #error_page  404              /404.html;

        # redirect server error pages to the static page /50x.html
        #
        error_page   500 502 503 504  /50x.html;
        location = /50x.html {
            root   html;
        }

        # proxy the PHP scripts to Apache listening on 127.0.0.1:80
        #
        #location ~ \.php$ {
        #    proxy_pass   http://127.0.0.1;
        #}

        # pass the PHP scripts to FastCGI server listening on 127.0.0.1:9000
        #
        #location ~ \.php$ {
        #    root           html;
        #    fastcgi_pass   127.0.0.1:9000;
        #    fastcgi_index  index.php;
        #    fastcgi_param  SCRIPT_FILENAME  /scripts$fastcgi_script_name;
        #    include        fastcgi_params;
        #}

        # deny access to .htaccess files, if Apache's document root
        # concurs with nginx's one
        #
        #location ~ /\.ht {
        #    deny  all;
        #}
    }

    # another virtual host using mix of IP-, name-, and port-based configuration
    #
    #server {
    #    listen       8000;
    #    listen       somename:8080;
    #    server_name  somename  alias  another.alias;

    #    location / {
    #        root   html;
    #        index  index.html index.htm;
    #    }
    #}

    # HTTPS server
    #
    #server {
    #    listen       443 ssl;
    #    server_name  localhost;

    #    ssl_certificate      cert.pem;
    #    ssl_certificate_key  cert.key;

    #    ssl_session_cache    shared:SSL:1m;
    #    ssl_session_timeout  5m;

    #    ssl_ciphers  HIGH:!aNULL:!MD5;
    #    ssl_prefer_server_ciphers  on;

    #    location / {
    #        root   html;
    #        index  index.html index.htm;
    #    }
    #}
}

3. nginx virtual host configuration

server: http://nginx.org/en/docs/http/ngx_http_core_module.html#server

listen: http://nginx.org/en/docs/http/ngx_http_core_module.html#listen

server_name: http://nginx.org/en/docs/http/ngx_http_core_module.html#server_name

- A virtual host is the content of a server block in the nginx configuration file

server {
    listen       80;
    server_name  localhost;

    #charset koi8-r;

    #access_log  logs/host.access.log  main;

    location / {
        root   html;
        index  index.html index.htm;
    }
}

- By changing listen and server_name you can set up port-based virtual hosts, IP-based virtual hosts (virtual IPs), and name-based virtual hosts (e.g. subdomains; separate multiple names with spaces)
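As a rough illustration of the three kinds of virtual host, the script below writes a sample configuration file containing one server block of each kind. The output directory, the ports, the IP 192.168.1.101, the example.com names and the html/site-* directories are all assumptions made for this sketch; they do not come from the setup described in this article.

```shell
#!/bin/sh
# Write an illustrative virtual-host file. CONF_DIR, the ports, the IP and
# the domain names are assumptions for this sketch.
CONF_DIR="${CONF_DIR:-$(mktemp -d)}"

cat > "$CONF_DIR/vhosts.conf" <<'EOF'
# Port-based: same host, different port
server {
    listen       8081;
    server_name  localhost;
    location / { root html/site-8081; index index.html; }
}

# IP-based: bound to one specific (virtual) IP of the machine
server {
    listen       192.168.1.101:80;
    server_name  localhost;
    location / { root html/site-ip; index index.html; }
}

# Name-based: selected by the Host header; names are separated by spaces
server {
    listen       80;
    server_name  a.example.com b.example.com;
    location / { root html/site-names; index index.html; }
}
EOF

echo "wrote $CONF_DIR/vhosts.conf"
```

For nginx to use such a file, it would have to be included from inside the http block of nginx.conf.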

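4. Location matching rules in detail

location: http://nginx.org/en/docs/http/ngx_http_core_module.html#location

- Syntax

location [ = | ~ | ~* | ^~ ] uri { ... }

- Matching rules

| Directive | Description | Example |
|---|---|---|
| location = uri | Exact match | location = /index |
| location ^~ uri | Prefix match (regular expressions are skipped when it wins) | location ^~ /article/ |
| location ~ regex | Regular-expression match, e.g. for static/dynamic separation (~* is case-insensitive) | location ~ \.(gif\|png\|js\|css)$ |
| location / | Catch-all match | location / |

- Matching priority: exact match > ^~ prefix match > regular-expression match > plain prefix match

(When several prefix locations match, the longest one is used; regular expressions are tried in the order they appear in the file, and the first one that matches wins.)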
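The priority order can be tried out with a toy shell function that hard-codes the four example locations from the table and checks them in that order. This is only a sketch of the ordering for these specific rules, not nginx's general matching algorithm, and the function name `match_location` is made up for the illustration.

```shell
#!/bin/sh
# Toy matcher for the four example locations, checked in nginx's priority order.
match_location() {
    uri=$1
    # 1. exact match: location = /index
    if [ "$uri" = "/index" ]; then
        echo "exact"
        return 0
    fi
    # 2. ^~ prefix: location ^~ /article/ (regular expressions are skipped)
    case "$uri" in
        /article/*) echo "prefix (^~)"; return 0 ;;
    esac
    # 3. regular expression: location ~ \.(gif|png|js|css)$
    case "$uri" in
        *.gif|*.png|*.js|*.css) echo "regex"; return 0 ;;
    esac
    # 4. catch-all: location /
    echo "catch-all"
}

for uri in /index /article/logo.png /img/logo.png /about; do
    printf '%s -> %s\n' "$uri" "$(match_location "$uri")"
done
```

Note that /article/logo.png ends in .png but is still handled by the ^~ prefix location, while /img/logo.png falls through to the regular expression.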

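5. nginx modules

- Core module: ngx_http_core_module

http://nginx.org/en/docs/http/ngx_http_core_module.html

- Standard modules: the http modules, e.g. ngx_http_access_module

http://nginx.org/en/docs/http/ngx_http_access_module.html

- Third-party modules:

https://www.nginx.com/resources/wiki/modules/

After adding a module, nginx has to be recompiled.

The original configure options are overwritten unless you pass them again, so repeat them and append the new ones.

#-- directory where nginx was unpacked
# cd /root/download/nginx-1.18.0/
#-- show the options used for the existing build
# /opt/nginx/sbin/nginx -V
#-- keep the original install path and add the modules
#-- http_stub_status_module: status monitoring
#-- http_random_index_module: random index page
# ./configure --prefix=/opt/nginx --with-http_stub_status_module --with-http_random_index_module
# make
#-- note: stop nginx before copying the new binary
# cp objs/nginx /opt/nginx/sbin/

- http_stub_status_module: status monitoring

Edit the configuration with vim ./conf/nginx.conf and add the following to the server block:

location /status {
    stub_status;
}

Visit http://192.168.1.11/status (the IP of the nginx machine)

Page content:

Active connections: 1
server accepts handled requests
 1 1 1
Reading: 0 Writing: 1 Waiting: 0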
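For monitoring scripts, the counters on the status page can be pulled out with awk. The function below is a minimal sketch that reads the page text on standard input; the name `parse_stub_status` and the key=value output format are assumptions chosen for this example.

```shell
#!/bin/sh
# Extract the counters from stub_status output read on stdin.
parse_stub_status() {
    awk '
        /^Active connections:/            { active = $3 }
        /^ *[0-9]+ +[0-9]+ +[0-9]+ *$/    { accepts = $1; handled = $2; requests = $3 }
        /^Reading:/                       { reading = $2; writing = $4; waiting = $6 }
        END {
            printf "active=%s accepts=%s handled=%s requests=%s reading=%s writing=%s waiting=%s\n",
                   active, accepts, handled, requests, reading, writing, waiting
        }'
}

# Sample text with the same counters as the status page in this article
sample='Active connections: 1
server accepts handled requests
 1 1 1
Reading: 0 Writing: 1 Waiting: 0'

printf '%s\n' "$sample" | parse_stub_status
```

Against a live server the input would come from the status URL instead, for example `curl -s http://192.168.1.11/status | parse_stub_status`.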

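- http_random_index_module: random index page

Serves a randomly chosen file from the directory as the index page, e.g. to rotate between different versions of the home page or between different pages

location / {
    root   html;
    #-- only handles requests that end in a slash, so it is used here in the catch-all location /
    random_index on;
    index  index.html index.htm;
}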

III. nginx in Practice

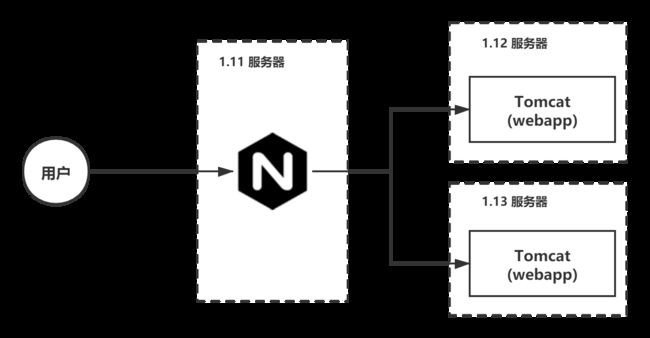

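1. Reverse proxy configuration

Scenario:

Some steps in this example build on the earlier sections.

nginx acts as a reverse proxy for tomcat: server 1.11 runs nginx and server 1.12 runs tomcat.

Server 1.12

- Download and set up Tomcat

# cd /root/download/
# wget https://mirrors.tuna.tsinghua.edu.cn/apache/tomcat/tomcat-8/v8.5.54/bin/apache-tomcat-8.5.54.tar.gz
# tar -zxvf apache-tomcat-8.5.54.tar.gz
# mkdir /opt/tomcat
# cp -r apache-tomcat-8.5.54 /opt/tomcat
# cd /opt/tomcat/apache-tomcat-8.5.54/
# ./bin/startup.sh

Open the server's IP in a browser. If the server is remote, make sure CentOS has the corresponding port open; for the commands that open a port, see the CentOS command reference in the preface.

- Edit tomcat's index.jsp

# vim /opt/tomcat/apache-tomcat-8.5.54/webapps/ROOT/index.jsp

- In index.jsp, insert the following lines (any position in the page works)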

......index 1 page

<%= request.getRemoteAddr() %>

<%= request.getHeader("X-Real-IP") %>

If you're seeing this, you've successfully installed Tomcat. Congratulations!

......

Server 1.11

- Split the nginx configuration

# cd /opt/nginx
# mkdir ./conf/extra
# cp ./conf/nginx.conf ./conf/extra/proxy.conf
#-- see the main configuration below
# vim ./conf/nginx.conf
#-- see the proxy configuration below
# vim ./conf/extra/proxy.conf
# ./sbin/nginx -s reload

- Main configuration (external files are pulled in with include extra/*.conf;)

#user  nobody;
#-- set the user: http://nginx.org/en/docs/ngx_core_module.html#user
user  root;
worker_processes  1;

#error_log  logs/error.log;
#error_log  logs/error.log  notice;
#error_log  logs/error.log  info;

#pid        logs/nginx.pid;

events {
    worker_connections  1024;
}

http {
    include       mime.types;
    default_type  application/octet-stream;

    #log_format  main  '$remote_addr - $remote_user [$time_local] "$request" '
    #                  '$status $body_bytes_sent "$http_referer" '
    #                  '"$http_user_agent" "$http_x_forwarded_for"';

    #access_log  logs/access.log  main;

    sendfile        on;
    #tcp_nopush     on;

    #keepalive_timeout  0;
    keepalive_timeout  65;

    #-- enable compression
    gzip  on;

    #-- include external configuration
    include extra/*.conf;
}

- Proxy configuration

http://nginx.org/en/docs/http/ngx_http_upstream_module.html

server {
    listen       80;
    server_name  localhost;

    #charset koi8-r;

    #access_log  logs/host.access.log  main;

    location / {
        #-- backend address
        proxy_pass http://192.168.1.12:8080;
        #-- pass the client's header information on; otherwise the backend only sees the proxy server's details
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    }

    #error_page  404              /404.html;

    # redirect server error pages to the static page /50x.html
    #
    error_page   500 502 503 504  /50x.html;
    location = /50x.html {
        root   html;
    }
}

- Restart nginx
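To make the forwarding headers in the proxy configuration concrete, the sketch below mimics how the two values are derived. X-Real-IP carries the address of the peer connected to nginx. `$proxy_add_x_forwarded_for` is the client's incoming X-Forwarded-For value with that address appended, or just the address when the client sent no such header. The function name and the sample addresses are hypothetical.

```shell
#!/bin/sh
# Mimic how the two forwarding headers are derived.
# $1: X-Forwarded-For value received from the client (may be empty)
# $2: address of the peer connected to nginx ($remote_addr)
forwarded_headers() {
    incoming_xff=$1
    remote_addr=$2
    if [ -n "$incoming_xff" ]; then
        # append the peer address to the existing chain
        xff="$incoming_xff, $remote_addr"
    else
        xff=$remote_addr
    fi
    printf 'X-Real-IP: %s\n' "$remote_addr"
    printf 'X-Forwarded-For: %s\n' "$xff"
}

# Direct client, no header sent
forwarded_headers "" 192.168.1.50
# Request that already passed through another proxy
forwarded_headers "10.0.0.7" 192.168.1.50
```

On the Tomcat side, request.getHeader("X-Real-IP") then returns the client address, whereas request.getRemoteAddr() returns the address of the nginx server.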

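2. Load balancing in practice

Scenario:

- Some steps in this example build on the earlier sections.

- nginx acts as a reverse proxy for tomcat: server 1.11 runs nginx, and servers 1.12 and 1.13 each run tomcat.

Server 1.13

- Download and set up Tomcat

# cd /root/download/
# wget https://mirrors.tuna.tsinghua.edu.cn/apache/tomcat/tomcat-8/v8.5.54/bin/apache-tomcat-8.5.54.tar.gz
# tar -zxvf apache-tomcat-8.5.54.tar.gz
# mkdir /opt/tomcat
# cp -r apache-tomcat-8.5.54 /opt/tomcat
# cd /opt/tomcat/apache-tomcat-8.5.54/
# ./bin/startup.sh

Open the server's IP in a browser. If the server is remote, make sure CentOS has the corresponding port open; for the commands that open a port, see the CentOS command reference in the preface.

- Edit tomcat's index.jsp

# vim /opt/tomcat/apache-tomcat-8.5.54/webapps/ROOT/index.jsp

- In index.jsp, insert the following lines (any position in the page works)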

......index 2 page

<%= request.getRemoteAddr() %>

<%= request.getHeader("X-Real-IP") %>

If you're seeing this, you've successfully installed Tomcat. Congratulations!

......

Server 1.11

- Proxy configuration

http://nginx.org/en/docs/http/ngx_http_upstream_module.html

upstream backend {
    server 192.168.1.12:8080;
    server 192.168.1.13:8080;
}

server {
    listen       80;
    server_name  localhost;

    #charset koi8-r;

    #access_log  logs/host.access.log  main;

    location / {
        proxy_pass http://backend;
        #-- pass the client's header information on; otherwise the backend only sees the proxy server's details
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    }

    #error_page  404              /404.html;

    # redirect server error pages to the static page /50x.html
    #
    error_page   500 502 503 504  /50x.html;
    location = /50x.html {
        root   html;
    }
}

- Restart nginx

- Other settings

upstream backend {
    #-- weights; after 2 failed attempts within 60 seconds the server is skipped for 60 seconds
    server 192.168.1.12:8080 weight=1 max_fails=2 fail_timeout=60s;
    server 192.168.1.13:8080 weight=2 max_fails=2 fail_timeout=60s;
}

server {
    listen       80;
    server_name  localhost;

    location / {
        proxy_pass http://backend;
        #-- pass the client's header information on; otherwise the backend only sees the proxy server's details
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        #-- on an error, a timeout or an HTTP 500, pass the request to the next backend
        proxy_next_upstream error timeout http_500;
        #-- timeout for nginx connecting to the backend
        proxy_connect_timeout 60s;
        #-- timeout for nginx sending data to the backend
        proxy_send_timeout 60s;
        #-- timeout for nginx reading data from the backend
        proxy_read_timeout 60s;
    }
}

- Load balancing algorithms

http://nginx.org/en/docs/http/ngx_http_upstream_module.html#ip_hash

- Round robin: the default. A backend that goes down is automatically taken out of rotation.

- ip_hash: a hash of the client IP address decides which server handles the request, so the same client keeps reaching the same server.

- Weighted round robin: the larger the weight, the larger the share of requests a server receives.
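The effect of weights can be explored offline with a small simulation. The sketch below implements smooth weighted round robin, the interleaving scheme nginx's default balancer is generally described as using. It is an illustration written for this article, not nginx's code, and the function name `swrr` and its `address=weight` argument format are assumptions.

```shell
#!/bin/sh
# Smooth weighted round robin.
# usage: swrr ROUNDS "address=weight" ["address=weight" ...]
swrr() {
    rounds=$1
    shift
    printf '%s\n' "$@" | awk -F= -v rounds="$rounds" '
        # read one "address=weight" pair per line
        { name[NR] = $1; weight[NR] = $2; current[NR] = 0; total += $2 }
        END {
            for (r = 1; r <= rounds; r++) {
                best = 0
                for (i = 1; i <= NR; i++) {
                    # every server gains its weight each round
                    current[i] += weight[i]
                    if (best == 0 || current[i] > current[best]) best = i
                }
                # the chosen server pays back the sum of all weights
                current[best] -= total
                printf "%s%s", name[best], (r < rounds ? " " : "\n")
            }
        }'
}

# Weights from the configuration above: 1.12 has weight 1, 1.13 has weight 2
swrr 6 "192.168.1.12:8080=1" "192.168.1.13:8080=2"
```

Over six rounds, 1.13 is picked four times and 1.12 twice, and the picks are interleaved instead of sending several consecutive requests to the heavier server.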

3. Separating static and dynamic content

Scenario:

Static resources (html, js, css, images...) are served separately from dynamic resources (jsp, php...).

Server 1.11

- Check the MIME types nginx knows about

# cd /opt/nginx
# cat ./conf/mime.types
#-- create the static resource directory
# mkdir static_resource

- nginx proxy configuration

Caching: expires 1d; nginx adds ETag and Last-Modified by default

upstream backend {
    server 192.168.1.12:8080;
    server 192.168.1.13:8080;
}

server {
    listen       80;
    server_name  localhost;

    #charset koi8-r;

    #access_log  logs/host.access.log  main;

    location / {
        proxy_pass http://backend;
        #-- pass the client's header information on; otherwise the backend only sees the proxy server's details
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    }

    #-- static resources
    location ~ \.(gif|png|icon|svg|jpg|txt|css)$ {
        root static_resource;
        #-- expire after 1 day; nginx adds ETag and Last-Modified by default
        expires 1d;
    }

    #error_page  404              /404.html;

    # redirect server error pages to the static page /50x.html
    #
    error_page   500 502 503 504  /50x.html;
    location = /50x.html {
        root   html;
    }
}

Servers 1.12 and 1.13

- Move the static resources into a backup directory

# cd /opt/tomcat/apache-tomcat-8.5.54/webapps/ROOT
# mkdir bak
#-- the move itself is omitted here; a graphical file manager makes it easier
#-- copy everything under bak to server 1.11
# scp -r ./bak/* 192.168.1.11:/opt/nginx/static_resource

4. Configuration file analysis

- Main configuration

Compression: gzip

#user  nobody;
#-- set the user: http://nginx.org/en/docs/ngx_core_module.html#user
user  root;
worker_processes  1;

#error_log  logs/error.log;
#error_log  logs/error.log  notice;
#error_log  logs/error.log  info;

#pid        logs/nginx.pid;

events {
    worker_connections  1024;
}

http {
    include       mime.types;
    default_type  application/octet-stream;

    #log_format  main  '$remote_addr - $remote_user [$time_local] "$request" '
    #                  '$status $body_bytes_sent "$http_referer" '
    #                  '"$http_user_agent" "$http_x_forwarded_for"';

    #access_log  logs/access.log  main;

    sendfile        on;
    #tcp_nopush     on;

    #keepalive_timeout  0;
    keepalive_timeout  65;

    #-- enable compression
    gzip  on;
    #-- only compress responses larger than 20k
    gzip_min_length 20k;
    #-- compression level; higher values compress better but use more CPU
    gzip_comp_level 3;
    #-- MIME types to compress
    gzip_types application/javascript image/jpeg text/css image/png image/gif;
    #-- compression buffers: 4 buffers of 32k each
    gzip_buffers 4 32k;
    #-- add the Vary: Accept-Encoding response header
    gzip_vary on;

    #-- include external configuration
    include extra/*.conf;
}

- Proxy configuration

Expiry: expires 1d

Hotlink protection: valid_referers, $invalid_referer

Cross-origin requests (CORS): add_header

upstream backend {
    server 192.168.1.12:8080;
    server 192.168.1.13:8080;
}

server {
    listen       80;
    server_name  localhost;

    #charset koi8-r;

    #access_log  logs/host.access.log  main;

    location / {
        proxy_pass http://backend;
        #-- pass the client's header information on; otherwise the backend only sees the proxy server's details
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        add_header 'Access-Control-Allow-Origin' '*';
        add_header 'Access-Control-Allow-Methods' 'GET,POST,DELETE';
        add_header 'Access-Control-Allow-Headers' 'Content-Type,*';
    }

    #-- static resources
    location ~ \.(gif|png|icon|svg|jpg|txt|css)$ {
        #-- IPs or domain names accepted as referers
        valid_referers none blocked 192.168.1.11 www.test.com;
        #-- on an invalid referer, return 404 (or another page, an image, etc.)
        if ($invalid_referer) {
            return 404;
        }
        # static resource directory
        root static_resource;
        #-- expire after 1 day; nginx adds ETag and Last-Modified by default
        expires 1d;
    }

    #error_page  404              /404.html;

    # redirect server error pages to the static page /50x.html
    #
    error_page   500 502 503 504  /50x.html;
    location = /50x.html {
        root   html;
    }
}

5. How the multi-process model works

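- Started by default

nginx starts a master process and worker processes. The master process can fork multiple worker processes, and each worker handles requests using I/O multiplexing.

> ps -ef|grep nginx

- Configuration file

#user  nobody;
#-- user and group that run nginx
#-- set the user: http://nginx.org/en/docs/ngx_core_module.html#user
user  root;

#-- number of nginx worker processes
#-- recommended: the total number of CPU cores
worker_processes  1;

events {
    #-- optional I/O model, e.g. epoll, select ...
    # use epoll;

    #-- number of connections each worker process can handle
    worker_connections  1024;
}

6. High-availability cluster in practice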

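- keepalived: a lightweight high-availability solution

- LVS: layer-4 load balancing software (IP + port), built into the Linux kernel since version 2.4

- Nginx and HAProxy: layer-7 load balancing (based on the request content)

Scenario:

- Some steps in this example build on the earlier sections.

- Servers 1.10 and 1.11 run nginx + keepalived + LVS; servers 1.12 and 1.13 are the tomcat application servers.

- Start nginx on 1.10 and 1.11

# cd /opt/nginx
# ./sbin/nginx

1) Install keepalived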

- On 1.10 and 1.11, download keepalived, then configure and install it

# cd /root/download
# wget -4 -P /root/download https://www.keepalived.org/software/keepalived-2.0.20.tar.gz
# tar -zxvf keepalived-2.0.20.tar.gz
# mkdir /opt/keepalived/
# cd keepalived-2.0.20/
# yum install openssl-devel
# ./configure --prefix=/opt/keepalived/ --sysconf=/etc
# make && make install
# cd /opt/keepalived/
#-- create a symlink
# ln -s /opt/keepalived/sbin/keepalived /sbin
#-- copy the service start-up scripts into the system service directories
# cp /root/download/keepalived-2.0.20/keepalived/etc/init.d/keepalived /etc/init.d/
# cp /root/download/keepalived-2.0.20/keepalived/etc/sysconfig/keepalived /etc/sysconfig/
#-- register as a system service
# chkconfig --add keepalived
# chkconfig keepalived on
# service keepalived start
# service keepalived status

- The service failed to start along the way, with the following messages:

On start:

[root@MiWiFi-R3-srv keepalived]# service keepalived start
Starting keepalived (via systemctl):  Job for keepalived.service failed because a timeout was exceeded. See "systemctl status keepalived.service" and "journalctl -xe" for details.
[失败]

After running journalctl -xe as suggested:

[root@MiWiFi-R3-srv keepalived]# journalctl -xe
6月 08 08:21:20 MiWiFi-R3-srv Keepalived[90772]: Opening file '/etc/keepalived/keepalived.conf'.
6月 08 08:21:20 MiWiFi-R3-srv Keepalived[90772]: (Line 15) number '0' outside range [1e-06, 4294]
6月 08 08:21:20 MiWiFi-R3-srv Keepalived[90772]: (Line 15) vrrp_garp_interval '0' is invalid
6月 08 08:21:20 MiWiFi-R3-srv Keepalived[90772]: (Line 16) number '0' outside range [1e-06, 4294]
6月 08 08:21:20 MiWiFi-R3-srv Keepalived[90772]: (Line 16) vrrp_gna_interval '0' is invalid
6月 08 08:21:20 MiWiFi-R3-srv Keepalived[90772]: daemon is already running
6月 08 08:21:20 MiWiFi-R3-srv systemd[1]: PID file /run/keepalived.pid not readable (yet?) after start.
6月 08 08:22:50 MiWiFi-R3-srv systemd[1]: keepalived.service start operation timed out. Terminating.
6月 08 08:22:50 MiWiFi-R3-srv systemd[1]: Failed to start LVS and VRRP High Availability Monitor.
-- Subject: Unit keepalived.service has failed
-- Defined-By: systemd
-- Support: http://lists.freedesktop.org/mailman/listinfo/systemd-devel
--
-- Unit keepalived.service has failed.
--
-- The result is failed.
6月 08 08:22:50 MiWiFi-R3-srv systemd[1]: Unit keepalived.service entered failed state.
6月 08 08:22:50 MiWiFi-R3-srv systemd[1]: keepalived.service failed.
6月 08 08:22:50 MiWiFi-R3-srv polkitd[631]: Unregistered Authentication Agent for unix-process:90766:32864825 (s
lines 3738-3756/3756 (END)

Checking the status with service keepalived status:

[root@MiWiFi-R3-srv keepalived]# service keepalived status
● keepalived.service - LVS and VRRP High Availability Monitor
   Loaded: loaded (/usr/lib/systemd/system/keepalived.service; enabled; vendor preset: disabled)
   Active: failed (Result: timeout) since 一 2020-06-08 08:22:50 PDT; 3min 50s ago
  Process: 90772 ExecStart=/opt/keepalived/sbin/keepalived $KEEPALIVED_OPTIONS (code=exited, status=0/SUCCESS)
    Tasks: 1
   CGroup: /system.slice/keepalived.service
           └─2485 /opt/keepalived/sbin/keepalived -D

6月 08 08:21:20 MiWiFi-R3-srv Keepalived[90772]: (Line 15) number '0' outside range [1e-06, 4294]
6月 08 08:21:20 MiWiFi-R3-srv Keepalived[90772]: (Line 15) vrrp_garp_interval '0' is invalid
6月 08 08:21:20 MiWiFi-R3-srv Keepalived[90772]: (Line 16) number '0' outside range [1e-06, 4294]
6月 08 08:21:20 MiWiFi-R3-srv Keepalived[90772]: (Line 16) vrrp_gna_interval '0' is invalid
6月 08 08:21:20 MiWiFi-R3-srv Keepalived[90772]: daemon is already running
6月 08 08:21:20 MiWiFi-R3-srv systemd[1]: PID file /run/keepalived.pid not readable (yet?) after start.
6月 08 08:22:50 MiWiFi-R3-srv systemd[1]: keepalived.service start operation timed out. Terminating.
6月 08 08:22:50 MiWiFi-R3-srv systemd[1]: Failed to start LVS and VRRP High Availability Monitor.
6月 08 08:22:50 MiWiFi-R3-srv systemd[1]: Unit keepalived.service entered failed state.
6月 08 08:22:50 MiWiFi-R3-srv systemd[1]: keepalived.service failed.

- Fix:

- Inspect the service unit file

# vi /lib/systemd/system/keepalived.service

- Its content:

[Unit]
Description=LVS and VRRP High Availability Monitor
After=network-online.target syslog.target
Wants=network-online.target

[Service]
Type=forking
PIDFile=/run/keepalived.pid
KillMode=process
EnvironmentFile=-/etc/sysconfig/keepalived
ExecStart=/opt/keepalived/sbin/keepalived $KEEPALIVED_OPTIONS
ExecReload=/bin/kill -HUP $MAINPID

[Install]
WantedBy=multi-user.target

- The file /run/keepalived.pid turned out not to exist, so change PIDFile to the default path:

PIDFile=/var/run/keepalived.pid

- Reload systemd

# systemctl daemon-reload

- Start again and check the status

# service keepalived start
# service keepalived status

2) Configure keepalived

Official configuration reference: https://www.keepalived.org/manpage.html

- Open the configuration file

# vim /etc/keepalived/keepalived.conf

- The file initially contains:

global_defs {
   notification_email {
     [email protected]
     [email protected]
     [email protected]
   }
   notification_email_from [email protected]
   smtp_server 192.168.200.1
   smtp_connect_timeout 30
   router_id LVS_DEVEL
   vrrp_skip_check_adv_addr
   vrrp_strict
   vrrp_garp_interval 0
   vrrp_gna_interval 0
}

vrrp_instance VI_1 {
    state MASTER
    interface eth0
    virtual_router_id 51
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.200.16
        192.168.200.17
        192.168.200.18
    }
}

virtual_server 192.168.200.100 443 {
    delay_loop 6
    lb_algo rr
    lb_kind NAT
    persistence_timeout 50
    protocol TCP

    real_server 192.168.201.100 443 {
        weight 1
        SSL_GET {
            url {
              path /
              digest ff20ad2481f97b1754ef3e12ecd3a9cc
            }
            url {
              path /mrtg/
              digest 9b3a0c85a887a256d6939da88aabd8cd
            }
            connect_timeout 3
            retry 3
            delay_before_retry 3
        }
    }
}

virtual_server 10.10.10.2 1358 {
    delay_loop 6
    lb_algo rr
    lb_kind NAT
    persistence_timeout 50
    protocol TCP

    sorry_server 192.168.200.200 1358

    real_server 192.168.200.2 1358 {
        weight 1
        HTTP_GET {
            url {
              path /testurl/test.jsp
              digest 640205b7b0fc66c1ea91c463fac6334d
            }
            url {
              path /testurl2/test.jsp
              digest 640205b7b0fc66c1ea91c463fac6334d
            }
            url {
              path /testurl3/test.jsp
              digest 640205b7b0fc66c1ea91c463fac6334d
            }
            connect_timeout 3
            retry 3
            delay_before_retry 3
        }
    }

    real_server 192.168.200.3 1358 {
        weight 1
        HTTP_GET {
            url {
              path /testurl/test.jsp
              digest 640205b7b0fc66c1ea91c463fac6334c
            }
            url {
              path /testurl2/test.jsp
              digest 640205b7b0fc66c1ea91c463fac6334c
            }
            connect_timeout 3
            retry 3
            delay_before_retry 3
        }
    }
}

virtual_server 10.10.10.3 1358 {
    delay_loop 3
    lb_algo rr
    lb_kind NAT
    persistence_timeout 50
    protocol TCP

    real_server 192.168.200.4 1358 {
        weight 1
        HTTP_GET {
            url {
              path /testurl/test.jsp
              digest 640205b7b0fc66c1ea91c463fac6334d
            }
            url {
              path /testurl2/test.jsp
              digest 640205b7b0fc66c1ea91c463fac6334d
            }
            url {
              path /testurl3/test.jsp
              digest 640205b7b0fc66c1ea91c463fac6334d
            }
            connect_timeout 3
            retry 3
            delay_before_retry 3
        }
    }

    real_server 192.168.200.5 1358 {
        weight 1
        HTTP_GET {
            url {
              path /testurl/test.jsp
              digest 640205b7b0fc66c1ea91c463fac6334d
            }
            url {
              path /testurl2/test.jsp
              digest 640205b7b0fc66c1ea91c463fac6334d
            }
            url {
              path /testurl3/test.jsp
              digest 640205b7b0fc66c1ea91c463fac6334d
            }
            connect_timeout 3
            retry 3
            delay_before_retry 3
        }
    }
}

- After editing [configuration for 1.10 (MASTER)]

# ==== global defaults
global_defs {
    # identifier of this keepalived server
    router_id LVS_DEVEL
}

# ==== VRRP (redundancy protocol) instance
vrrp_instance VI_1 {
    # master node
    state MASTER
    # network interface
    interface ens33
    virtual_router_id 51
    # priority in the master election
    priority 100
    advert_int 1
    # authentication between master and backup
    authentication {
        auth_type PASS
        auth_pass 1234
    }
    # virtual IP
    virtual_ipaddress {
        192.168.1.100
    }
}

# ==== LVS configuration
# public IP and port, same as the virtual IP
virtual_server 192.168.1.100 80 {
    delay_loop 6
    # load balancing algorithm
    lb_algo rr
    # forwarding method
    lb_kind NAT
    persistence_timeout 50
    protocol TCP

    # IP and port of the nginx server
    real_server 192.168.1.10 80 {
        weight 1
        TCP_CHECK {
            connect_timeout 3
            retry 3
            delay_before_retry 3
        }
    }
}

- Copy the configuration to 1.11 (BACKUP) and adjust it

# ==== global defaults
global_defs {
    # identifier of this keepalived server
    router_id LVS_DEVEL
}

# ==== VRRP (redundancy protocol) instance
vrrp_instance VI_1 {
    # backup node
    state BACKUP
    # network interface
    interface ens33
    virtual_router_id 51
    # priority in the master election
    priority 50
    advert_int 1
    # authentication between master and backup
    authentication {
        auth_type PASS
        auth_pass 1234
    }
    # virtual IP
    virtual_ipaddress {
        192.168.1.100
    }
}

# ==== LVS configuration
# public IP and port, same as the virtual IP
virtual_server 192.168.1.100 80 {
    delay_loop 6
    # load balancing algorithm
    lb_algo rr
    # forwarding method
    lb_kind NAT
    persistence_timeout 50
    protocol TCP

    # IP and port of the nginx server
    real_server 192.168.1.11 80 {
        weight 1
        TCP_CHECK {
            connect_timeout 3
            retry 3
            delay_before_retry 3
        }
    }
}

3) Run keepalived, nginx and tomcat

- On 1.10 and 1.11

For the nginx configuration on 1.10, see III-2 Load balancing in practice and III-3 Separating static and dynamic content

# cd /opt/nginx
# ./sbin/nginx
# service keepalived restart
# service keepalived status

- On 1.12 and 1.13

# cd /opt/tomcat/apache-tomcat-8.5.54/
# ./bin/startup.sh

- Finally, access the service through the virtual IP (VIP): http://192.168.1.100

- Test whether 1.11 automatically takes over request handling after nginx on 1.10 stops

# cd /opt/nginx
# ./sbin/nginx -s stop
# ps -ef|grep nginx
# service keepalived status

7. Triggering a shell script when nginx stops

- Using 1.10 as the example

Configure the vrrp_script block

Configure enable_script_security

Configure the track_script block

- Open the configuration file

# vim /etc/keepalived/keepalived.conf

- Configuration:

# ==== global defaults
global_defs {
    # identifier of this keepalived server
    router_id LVS_DEVEL
    # script security policy
    enable_script_security
}

# ==== shell script
vrrp_script nginx_status_process {
    # path of the script
    script "/opt/nginx/sbin/nginx_status_check.sh"
    # user that runs the script
    # user root
    # check interval in seconds
    interval 3
}

# ==== VRRP (redundancy protocol) instance
vrrp_instance VI_1 {
    # master node
    state MASTER
    # network interface
    interface ens33
    virtual_router_id 51
    # priority in the master election
    priority 100
    advert_int 1
    # authentication between master and backup
    authentication {
        auth_type PASS
        auth_pass 1234
    }
    # virtual IP
    virtual_ipaddress {
        192.168.1.100
    }
    # script to track
    track_script {
        nginx_status_process
    }
}

# ==== LVS configuration
# public IP and port, same as the virtual IP
virtual_server 192.168.1.100 80 {
    delay_loop 6
    # load balancing algorithm
    lb_algo rr
    # forwarding method
    lb_kind NAT
    persistence_timeout 50
    protocol TCP

    # IP and port of the nginx server
    real_server 192.168.1.10 80 {
        weight 1
        TCP_CHECK {
            connect_timeout 3
            retry 3
            delay_before_retry 3
        }
    }
}

- When nginx stops, the script is triggered and stops keepalived

# vim /opt/nginx/sbin/nginx_status_check.sh

- Script content

#!/bin/sh
# -- count the running nginx processes
A=$(ps -C nginx --no-header | wc -l)
# -- check whether the count is 0
if [ "$A" -eq 0 ]
then
    # -- stop keepalived
    service keepalived stop
fi

- Test (if nginx is not running, keepalived is stopped as soon as it starts, which shows that the script works)

#-- make the script executable
# chmod +x /opt/nginx/sbin/nginx_status_check.sh
#-- run the script once to check its effect
# sh /opt/nginx/sbin/nginx_status_check.sh
#-- restart keepalived
# service keepalived restart
#-- stop nginx
# cd /opt/nginx/sbin/
# ./nginx -s stop
#-- check whether nginx is running
# ps -ef|grep nginx
#-- check the keepalived status
# service keepalived status
#-- follow the keepalived log
# tail -f /var/log/messages
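One more way to confirm a failover is to check which node currently holds the virtual IP. The helper below is a minimal sketch: it reads `ip addr` output on standard input and succeeds if the given address is listed. The function name `has_vip` and the sample output are assumptions made for this illustration; the interface ens33 and the VIP 192.168.1.100 are the values used in this article.

```shell
#!/bin/sh
# Succeeds if the `ip addr` output on stdin lists the given IPv4 address.
has_vip() {
    # -F matches the text literally, so the dots in the address are not wildcards
    grep -Fq "inet $1/"
}

# Illustrative output of a node that currently owns the VIP
sample_master='2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 state UP
    inet 192.168.1.10/24 brd 192.168.1.255 scope global ens33
    inet 192.168.1.100/32 scope global ens33'

if printf '%s\n' "$sample_master" | has_vip 192.168.1.100; then
    echo "this node holds the VIP"
else
    echo "this node does not hold the VIP"
fi
```

On a real node the input would come from the interface itself, for example `ip -4 addr show dev ens33 | has_vip 192.168.1.100`. After nginx is stopped on 1.10, the check would be expected to fail there and succeed on 1.11.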