A Brief Analysis of the dirpro v1.2 Source Code

Table of Contents

- Preface
- Source Code Analysis
  - dirpro.py
  - start.py
  - backup.py
  - rely.py
  - results.py
  - end.py

Preface

Tool Overview

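dirpro is a professional-grade directory scanner written in Python. It is simple to use, feature-rich, and highly automated.

It automatically re-sorts and re-evaluates the scan results based on the returned status codes and response lengths, so that pages returning the same generic response are filtered out and the reported results stay accurate.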

Repository

The project is open source on GitHub; a star would be much appreciated :)

https://github.com/coleak2021/dirpro

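Implemented Features

- Configurable number of scan threads
- Batch scanning of URLs imported from a file, with the results for each URL saved separately
- Detection of status code 429: the program exits automatically and suggests setting a smaller thread count
- Scan progress shown automatically at every 10%
- Configurable wordlist file
- Configurable proxy for the scan traffic
- A random User-Agent used automatically for each request
- Automatic normalization of the target URL format, with sensitive paths generated dynamically from the input URL
- A powerful default top-10000 wordlist
- Automatic re-sorting and evaluation of the scan results based on status code and response length
- Scan results saved automatically to the scan_result directory under a "domain + time" file name that avoids name clashes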

Scan Options

```text
options:
  -h, --help  show this help message and exit
  -u U        url
  -t T        thread:default=30
  -w W        dirfile path
  -a A        proxy,such as 127.0.0.1:7890
  -f F        urlfile,urls in the file
  -b          fastly to find backup files and sensitive files
```
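The help text above has the layout Python's argparse prints (the default metavar is the upper-cased option name, hence `-u U`). A minimal sketch of a parser that would produce these options is shown below; it is reconstructed from the help output only, so `build_parser` is a hypothetical name and the real dirpro.py may define the options differently. The `-t` default of 30 is taken from the help string.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Hypothetical reconstruction of dirpro's CLI from its --help output."""
    parser = argparse.ArgumentParser(prog="dirpro")
    parser.add_argument("-u", help="url")                      # single target URL
    parser.add_argument("-t", type=int, default=30,
                        help="thread:default=30")              # concurrency limit
    parser.add_argument("-w", help="dirfile path")             # custom wordlist
    parser.add_argument("-a", help="proxy,such as 127.0.0.1:7890")
    parser.add_argument("-f", help="urlfile,urls in the file")  # batch mode
    parser.add_argument("-b", action="store_true",             # flag, no value
                        help="fastly to find backup files and sensitive files")
    return parser


if __name__ == "__main__":
    args = build_parser().parse_args(["-u", "http://example.com/", "-b"])
    print(args.u, args.t, args.b)
```

Because `-b` uses `store_true`, it takes no value and defaults to `False`, which matches how start.py later tests `if args.b:`.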

Source Code Analysis

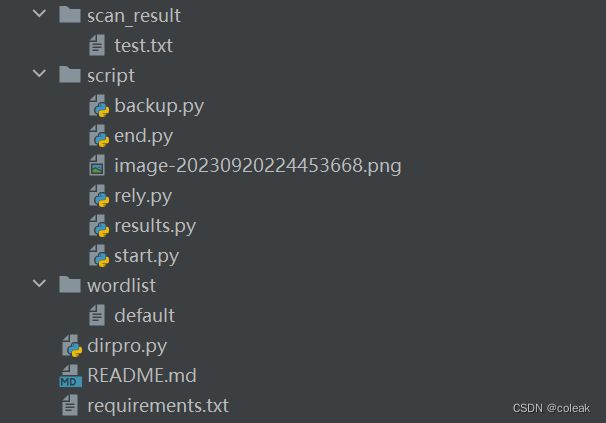

Directory structure

dirpro.py

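The project entry point: it receives the command-line arguments and calls the functions that carry out the rest of the work.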

```python
if not args.f:
    rooturl = args.u.strip('/')
    (time1, ret) = __start(args, rooturl)
    __end(rooturl, time1, ret)
else:
    urlfile = open(args.f, 'r')
    urls = urlfile.read().splitlines()
    for rooturl in urls:
        rooturl = rooturl.strip('/')
        (time1, ret) = __start(args, rooturl)
        __end(rooturl, time1, ret)
```

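It checks whether a URL file was supplied with -f. Each target URL first has its trailing / stripped; then __start(args, rooturl) runs the scan and returns (time1, ret), and __end(rooturl, time1, ret) processes the scan results.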
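The batch branch opens the URL file without closing it and passes blank lines through as empty targets. A minimal sketch of the same driver loop that avoids both is shown below, assuming the file is UTF-8. `normalize_targets` and `run_all` are hypothetical helpers, and `start`/`end` are stand-in callables for `__start(args, ·)` and `__end`, not dirpro's actual signatures.

```python
from typing import Callable, Iterable, List, Tuple


def normalize_targets(lines: Iterable[str]) -> List[str]:
    """Strip whitespace and surrounding slashes; drop empty lines."""
    targets = []
    for line in lines:
        url = line.strip().strip('/')
        if url:                      # a blank line would otherwise become a target
            targets.append(url)
    return targets


def run_all(url_file: str,
            start: Callable[[str], Tuple[float, list]],
            end: Callable[[str, float, list], None]) -> None:
    """Scan every URL in url_file, one after another."""
    # 'with' closes the file even if reading fails
    with open(url_file, 'r', encoding='utf-8') as fh:
        targets = normalize_targets(fh.read().splitlines())
    for rooturl in targets:
        time1, ret = start(rooturl)
        end(rooturl, time1, ret)
```

Reading all targets before scanning also means the file handle is released before the (long) scans begin.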

start.py

```python
sem = threading.Semaphore(args.t)
urlList = []
urlList.extend(searchFiles(rooturl))
```

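Limits the number of concurrent requests to the -t value with a semaphore, resets urlList (so that, when scanning URLs from a file, the list generated for an earlier URL does not affect later scans), and calls searchFiles(rooturl) to generate sensitive paths and add them to urlList.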

```python
if args.a:
    proxies['http'] = f"http://{args.a}"
    proxies['https'] = f"http://{args.a}"
```

If a proxy is given with -a, both HTTP and HTTPS traffic is sent through it.
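In requests, the `proxies` argument maps a URL scheme to a proxy URL. An ordinary HTTP proxy such as 127.0.0.1:7890 tunnels HTTPS traffic with CONNECT, which is why the `https` entry also uses an `http://` proxy URL. A minimal sketch of the same mapping as a pure function (`build_proxies` is a hypothetical name, not part of dirpro):

```python
from typing import Dict, Optional


def build_proxies(addr: Optional[str]) -> Dict[str, str]:
    """Route both schemes through one HTTP proxy, as start.py does with args.a."""
    if not addr:
        return {}                 # no -a given: no explicit proxy
    proxy = f'http://{addr}'
    return {'http': proxy, 'https': proxy}
```

The result can be passed as `requests.get(url, proxies=build_proxies(args.a))`. Note that with an empty dict, requests may still pick up proxies from environment variables such as `HTTP_PROXY`.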

```python
if args.b:
    sem = threading.Semaphore(5)
    searchdir(urlList, sem, rooturl)
else:
    if not args.w:
        defaultword = './wordlist/default'
    else:
        defaultword = args.w
    f = open(defaultword, 'r')
    files = f.read().splitlines()
    for file in files:
        urlList.append(f'{rooturl}/{file}')
    f.close()
    searchdir(urlList, sem, rooturl)
return (time_1, ret)
```

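This decides between fast scan and normal scan. Fast scan (-b) only probes the sensitive paths generated by searchFiles and uses a small concurrency limit of 5, since its built-in wordlist is small. Normal scan uses the wordlist given with -w (or ./wordlist/default if none is given), appends each entry to the root URL, and calls searchdir(urlList, sem, rooturl). Finally the function returns (time_1, ret).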
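The branching above can be isolated into a pure planning step that returns the concurrency limit and the URL list, leaving the semaphore and threads to the caller. The sketch below is a hypothetical refactoring, not dirpro's code: `plan_scan` is an invented name, the wordlist is assumed to be UTF-8 (the original `open` uses the platform's default encoding), and blank lines are skipped so they do not turn into a probe of `rooturl/`.

```python
from typing import List, Optional, Tuple

DEFAULT_WORDLIST = './wordlist/default'   # default path used by start.py


def plan_scan(rooturl: str, sensitive_urls: List[str], fast: bool,
              wordlist: Optional[str] = None,
              threads: int = 30) -> Tuple[int, List[str]]:
    """Return (concurrency limit, URLs to scan) following start.py's branches."""
    urls = list(sensitive_urls)            # fresh list for every target
    if fast:
        # -b: only the generated sensitive/backup paths, limit fixed at 5
        return 5, urls
    path = wordlist or DEFAULT_WORDLIST
    with open(path, 'r', encoding='utf-8') as fh:   # closed even on error
        for word in fh.read().splitlines():
            word = word.strip().lstrip('/')
            if word:                       # skip blank lines
                urls.append(f'{rooturl}/{word}')
    return threads, urls
```

The caller would then build `threading.Semaphore(limit)` and hand the URL list to `searchdir`, which keeps the file handling testable without starting any threads.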

backup.py

searchFiles(rooturl) generates the sensitive paths and adds them to urlList.

```python
for file in FILE_LIST:
    urlList.append(f'{rootUrl}/{file}')
    urlList.append(f'{rootUrl}/{file}.bak')
    urlList.append(f'{rootUrl}/{file}~')
    urlList.append(f'{rootUrl}/{file}.swp')
    urlList.append(f'{rootUrl}/.{file}.swp')
    urlList.append(f'{rootUrl}/.{file}.un~')
```

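Adds backup-file paths: for each entry in FILE_LIST, the file itself plus common leftovers such as .bak copies, ~ backups, and Vim swap (.swp) and undo (.un~) files.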
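The six `append` calls follow a fixed set of name patterns, so they can also be written as a small table. A minimal sketch (hypothetical `backup_urls` helper; the FILE_LIST contents are not shown in this excerpt, so `index.php` in the test is only an illustrative entry):

```python
from typing import List

# The six variants appended for every FILE_LIST entry in backup.py
BACKUP_PATTERNS = ['{f}', '{f}.bak', '{f}~', '{f}.swp', '.{f}.swp', '.{f}.un~']


def backup_urls(root_url: str, file_list: List[str]) -> List[str]:
    """Expand each file name into its backup/editor-leftover variants."""
    urls = []
    for name in file_list:
        for pattern in BACKUP_PATTERNS:
            urls.append(f'{root_url}/' + pattern.format(f=name))
    return urls
```

Keeping the patterns in one list makes it a one-line change to probe another leftover format.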

```python
SOURCE_LIST = [
    '.svn', '.svn/wc.db', '.svn/entries',  # svn
    '.git/', '.git/HEAD', '.git/index', '.git/config', '.git/description', '.gitignore',  # git
    '.hg/',  # hg
    'CVS/', 'CVS/Root', 'CVS/Entries',  # cvs
    '.bzr',  # bzr
    'WEB-INF/web.xml', 'WEB-INF/src/', 'WEB-INF/classes', 'WEB-INF/lib', 'WEB-INF/database.properties',  # java
    '.DS_Store',  # macos
    'README', 'README.md', 'README.MD',  # readme
    '_viminfo', '.viminfo',  # vim
    '.bash_history',
    '.htaccess'
]
```

```python
for source in SOURCE_LIST:
    urlList.append(f'{rootUrl}/{source}')
```

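Adds paths that can leak source code or metadata: version-control directories (SVN, Git, Mercurial, CVS, Bazaar), Java WEB-INF files, .DS_Store, README files, Vim and shell history files, and .htaccess.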
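When maintaining a list like SOURCE_LIST, one missing comma at the end of a line is easy to overlook: Python concatenates adjacent string literals, even across lines and comments inside brackets, so two probes silently become one bogus path. The sketch below shows the effect; `source_urls` is a hypothetical helper that joins the paths onto the root URL the same way as the loop above.

```python
from typing import List


def source_urls(root_url: str, paths: List[str]) -> List[str]:
    """Join each source/VCS path onto the root URL."""
    return [f'{root_url}/{p}' for p in paths]


# Missing comma: the two literals are merged into ONE entry, '.gitignore.hg/'
missing_comma = ['.gitignore'  # git
                 '.hg/']       # hg

# With the comma, both paths are probed
with_comma = ['.gitignore',  # git
              '.hg/']        # hg

if __name__ == '__main__':
    print(source_urls('http://example.com', missing_comma))
    print(source_urls('http://example.com', with_comma))
```

A cheap safeguard is to end every line of such a list with a trailing comma, including the last one.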

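```python
suffixList = ['.rar', '.zip', '.tar', '.tar.gz', '.7z']
# '新建文件夹' = "New Folder", '备份' = "backup",
# 'ceshi' = pinyin for "test", 'wangzhan' = pinyin for "website"
keyList = ['www', 'wwwroot', 'site', 'web', 'website', 'backup', 'data', 'mdb', 'WWW', '新建文件夹', 'ceshi', 'databak',
           'db', 'database', 'sql', 'bf', '备份', '1', '2', '11', '111', 'a', '123', 'test', 'admin', 'app', 'bbs', 'htdocs', 'wangzhan']
# text between the first and second '.' of the URL, e.g. www.example.com -> example
num1 = rootUrl.find('.')
num2 = rootUrl.find('.', num1 + 1)
keyList.append(rootUrl[num1 + 1:num2])
for key in keyList:
    for suff in suffixList:
        urlList.append(f'{rootUrl}/{key}{suff}')
```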

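Adds archive paths: every keyword in keyList, extended with a label taken from the target's domain name, combined with every archive suffix in suffixList.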
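The slice `rootUrl[num1 + 1:num2]` assumes the host contains at least two dots. For `http://www.example.com` it yields `example`, but for `http://example.com` the second `find` returns -1, so the slice becomes `rootUrl[15:-1]`, which is `co`; for an IP target it yields an octet, and for `http://localhost` it yields most of the URL. A minimal alternative sketch using the standard library's `urllib.parse.urlsplit` and `ipaddress` is shown below. It is a swapped-in technique, not dirpro's code, and it still naively takes the label left of the last one, which is wrong for multi-part suffixes such as `.co.uk`.

```python
import ipaddress
from typing import List, Optional
from urllib.parse import urlsplit

SUFFIXES = ['.rar', '.zip', '.tar', '.tar.gz', '.7z']


def domain_keyword(rooturl: str) -> Optional[str]:
    """Return the domain label left of the TLD, or None if there is none."""
    host = urlsplit(rooturl).hostname      # drops scheme, port and path
    if not host:
        return None
    try:
        ipaddress.ip_address(host)
        return None                        # IP targets have no name label
    except ValueError:
        pass
    labels = host.split('.')
    if len(labels) < 2:
        return None                        # e.g. "localhost"
    return labels[-2]                      # naive: wrong for .co.uk and similar


def archive_urls(rooturl: str, keys: List[str],
                 suffixes: List[str] = SUFFIXES) -> List[str]:
    """Combine every keyword (plus the domain label) with every suffix."""
    keys = list(keys)
    keyword = domain_keyword(rooturl)
    if keyword and keyword not in keys:
        keys.append(keyword)
    return [f'{rooturl}/{k}{s}' for k in keys for s in suffixes]
```

The input is assumed to include a scheme (dirpro normalizes the target URL), since `urlsplit` only recognizes the host after `//`.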

rely.py

All of the scanning logic lives in this file.

```python
def __random_agent():
    user_agent_list = [{'User-Agent': 'Mozilla/4.0 (Mozilla/4.0; MSIE 7.0; Windows NT 5.1; FDM; SV1; .NET CLR 3.0.04506.30)'},
                       {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/116.0.0.0 Safari/537.36'},
                       ......]
    return random.choice(user_agent_list)
```

Returns a randomly chosen User-Agent header; it is called for every request.

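```python
def searchdir(urlList, sem, rooturl):
    global d
    global _sem
    global _list
    d = 0
    _sem = sem
    thread_array = []
    n = len(urlList)
    k = int(n / 10)
    # progress checkpoints at 10%, 20%, ..., 90% of the URLs
    for i in range(1, 10):
        _list.append(k * i)
    print(f"[*]开始扫描{rooturl}")  # "[*] Start scanning {rooturl}"
    # one thread per URL; the semaphore inside __get limits concurrency
    for i in urlList:
        t = Thread(target=__get, args=(i,))
        thread_array.append(t)
        t.start()
    # block the main thread until every worker has finished
    for t in thread_array:
        t.join()
```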

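_list stores the progress checkpoints: with k = int(n/10), it holds k, 2k, ..., 9k, so progress is reported from 10% to 90%. The second for loop creates one thread per URL with __get as its target, appends it to thread_array, and starts it; the final loop calls t.join() on every thread, so the main thread stays blocked until all worker threads have finished.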
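The checkpoint approach has a few rough edges. `d += 1` runs in up to -t threads at once without a lock, so increments can in principle be lost and a checkpoint skipped; with fewer than 10 URLs, k is 0 and no progress is ever printed; failed requests return before `d` is incremented; and the excerpt does not show `_list` being reset between targets in URL-file mode. A minimal alternative sketch, using a hypothetical `Progress` class that is not part of dirpro, guards the counter with a lock and derives the percentage from the count directly:

```python
import threading
from typing import Optional


class Progress:
    """Thread-safe progress counter that reports each 10% step once."""

    def __init__(self, total: int) -> None:
        self.total = max(total, 1)    # avoid division by zero for an empty list
        self.done = 0
        self.last_decile = 0          # highest decile already reported
        self.lock = threading.Lock()

    def tick(self) -> Optional[int]:
        """Record one finished URL; return the percentage reported, if any."""
        with self.lock:
            self.done += 1
            decile = min(self.done * 10 // self.total, 10)
            if decile <= self.last_decile:
                return None
            self.last_decile = decile
        percent = decile * 10
        print(f'[*] scanned {percent}%')
        return percent
```

Calling `tick()` once per URL, for example in a `finally` clause of the worker, counts failed requests too. With very short lists several 10% steps may collapse into one report, but no step is reported twice.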

```python
def __get(url):
    count = 0
    global d
    with _sem:
        # retry each URL up to 3 times on connection errors
        while count < 3:
            try:
                r = requests.get(url, headers=__random_agent(), proxies=proxies)
            except:
                count += 1
                continue
            break
        # check whether the request succeeded
        if count >= 3:
            print(f'visit failed:{url}')
            return
        l = len(r.text)
        if r.status_code != 404 and r.status_code != 429:
            log = f'{r.status_code:<6}{l:<7}{url}'
            print(log)
        elif r.status_code == 429:
            print('Too Many Requests 429 so that Request terminated,please Set up smaller threads')
            os._exit(0)
        d += 1
        if d in _list:
            print(f"[*]已经扫描{(_list.index(d)+1)*10}%")  # "[*] Scanned N%"
        # append to ret
        ret.append({
            'status_code': r.status_code,
            'length': l,
            'url': url
        })
```

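`with _sem:` is equivalent to calling `_sem.acquire()` on entry and `_sem.release()` on exit; the release also happens if the block raises an exception.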

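A thread that cannot acquire the semaphore blocks inside its own thread until an earlier thread releases it. In other words, dirpro really starts one thread per URL to scan; the semaphore only limits how many of them send requests at the same time, while the rest wait, blocked, inside their threads.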
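If the goal is to cap the number of threads themselves rather than only the concurrent requests, the standard library's `concurrent.futures.ThreadPoolExecutor` is a common alternative. The sketch below is a swapped-in technique, not what dirpro does; `run_bounded` is a hypothetical helper and `fetch` stands for any per-URL function, such as a wrapper around `requests.get`.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable, List, TypeVar

T = TypeVar('T')


def run_bounded(urls: Iterable[str], fetch: Callable[[str], T],
                max_workers: int = 30) -> List[T]:
    """Run fetch(url) for every URL using at most max_workers threads.

    Unlike one-thread-per-URL plus a semaphore, the pool never creates more
    than max_workers threads; results come back in the order of urls.
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(fetch, urls))
```

With a top-10000 wordlist this means at most `max_workers` threads instead of about 10000 mostly idle ones. If `fetch` raises, `list()` re-raises that exception when it reaches the corresponding result.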

```python
os._exit(0)
```

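If a 429 status code comes back, this call terminates the whole program. Calling exit() (or sys.exit()) instead would only raise SystemExit in the current worker thread, ending that thread while the rest of the scan carried on.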
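`os._exit(0)` ends the process immediately: `finally` blocks, atexit handlers and stdio buffer flushing are skipped, so the results collected so far in `ret` never reach results.py, and the exit status is 0 even though the scan was aborted. A gentler option is a shared `threading.Event` that every worker checks before sending its request. The sketch below keeps dirpro's one-thread-per-URL plus semaphore shape; `scan_with_stop` is hypothetical and `fetch_status` stands for any function returning the HTTP status code of a URL.

```python
import threading
from typing import Callable, List, Tuple


def scan_with_stop(urls: List[str], fetch_status: Callable[[str], int],
                   limit: int = 30) -> Tuple[List[Tuple[str, int]], bool]:
    """Scan urls, stopping early once any request gets HTTP 429."""
    sem = threading.Semaphore(limit)   # caps concurrent requests
    stop = threading.Event()           # set once a 429 is seen
    lock = threading.Lock()            # protects results
    results: List[Tuple[str, int]] = []

    def worker(url: str) -> None:
        with sem:
            if stop.is_set():          # another thread already hit 429
                return
            code = fetch_status(url)
            if code == 429:
                stop.set()
                return
            with lock:
                results.append((url, code))

    threads = [threading.Thread(target=worker, args=(u,)) for u in urls]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results, stop.is_set()
```

After it returns, the main thread can still save the partial results and then call `sys.exit(1)` to report the failure with a non-zero status.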

results.py

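Post-processes the scan results: it counts the status codes and response lengths, then logs only the results that deviate from the common case.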

```python
t = f"./scan_result/{rooturl.split('//')[1].replace(':', '')}{int(time.time())}"
try:
    f = open(t, 'w', encoding="utf-8")
except:
    f = open(f"{int(time.time())}", 'w', encoding="utf-8")
```

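Sets the name of the file the scan results are saved to: the part of the URL after // with colons removed (presumably because colons are not allowed in Windows file names), followed by the current Unix timestamp, under ./scan_result. If that file cannot be opened, for example because the URL contains a path whose directories do not exist, it falls back to a file named after the timestamp alone in the current directory.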
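Instead of relying on the bare `except` fallback, the name can be made safe up front. The sketch below is a hypothetical variant (`result_path` is an invented name): it replaces every character other than letters, digits, `.` and `-` with `_`, so a path such as `/app/` no longer creates missing subdirectories, and it creates the output directory if needed. Like the original, it uses a timestamp in seconds, so two scans of the same target within one second would still share a name.

```python
import os
import re
import time
from typing import Optional


def result_path(rooturl: str, out_dir: str = './scan_result',
                ts: Optional[int] = None) -> str:
    """Build a filesystem-safe result file path for rooturl."""
    if ts is None:
        ts = int(time.time())
    target = rooturl.split('//', 1)[-1]                  # drop the scheme
    safe = re.sub(r'[^A-Za-z0-9.-]+', '_', target).strip('_')
    os.makedirs(out_dir, exist_ok=True)                  # no-op if it exists
    return os.path.join(out_dir, f'{safe}_{ts}')
```

For `http://a.com:8080/app/` this yields a file named `a.com_8080_app_` followed by the timestamp.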

```python
for result in ret:
    statusCode = result['status_code']
    length = result['length']
    statusCodeMap[statusCode] = statusCodeMap.get(statusCode, 0) + 1
    lenMap[length] = lenMap.get(length, 0) + 1
```

Counts how many results share each status code and each response length.

```python
for result in ret:
    if result['length'] != maxLength:
        __log(f'{result["status_code"]:<6}{result["length"]:<7}{result["url"]}')
f.close()
return t
```

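Logs the status code, length and URL of every result whose response length differs from maxLength, i.e. the results that stand out from the bulk. maxLength is defined outside this excerpt; given lenMap, it is presumably the length that occurs most often, typically the site's generic error or catch-all page.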
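The counting and filtering steps map directly onto `collections.Counter`. A minimal sketch, assuming (as above) that maxLength means the most frequent response length; `summarize` is a hypothetical helper, not part of results.py:

```python
from collections import Counter
from typing import Dict, List, Tuple

Result = Dict[str, object]   # {'status_code': int, 'length': int, 'url': str}


def summarize(ret: List[Result]) -> Tuple[Counter, Counter, List[Result]]:
    """Count status codes and lengths; return results with an unusual length."""
    status_counts = Counter(r['status_code'] for r in ret)
    length_counts = Counter(r['length'] for r in ret)
    if not length_counts:
        return status_counts, length_counts, []
    # the dominant length is assumed to be the generic "not here" page
    common_length, _ = length_counts.most_common(1)[0]
    outliers = [r for r in ret if r['length'] != common_length]
    return status_counts, length_counts, outliers
```

Filtering on length alone would also hide a real hit that happens to return the same length as the catch-all page; combining it with the status counts (for example, keeping every status code that occurs only a few times) is one possible refinement.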

end.py

```python
result = __Results(rooturl, ret)
time2 = time.time()
# prints "Total time: <seconds> seconds, results saved in <file>"
print("总共花费: ", time2 - time1, "秒,", f"结果保存在{result}")
ret.clear()
```

ret is cleared so that its contents do not affect the next scan.