[OpenCV] Mango's OpenCV Study Notes (Part 4)

This article is based mainly on the official OpenCV documentation.

I. Canny Edge Detection

Canny边缘检测 算法步骤:

-

彩色图转化为灰度图

-

应用高斯滤波来平滑图像 --> 去除噪声

由于边缘检测容易受到图像中噪声的影响

-

找寻图像的强度梯度

Canny的基本思想是找寻一幅图像中强度变化最强的位置。所谓的变化最强,即指梯度方向。平滑后的图像中每个像素点的梯度可以由

Sobel算子来获得:1)首先,利用

Sobel算子得到沿x轴和y轴方向的梯度G_x和G_y。2)由

G_X和G_Y便可计算每一个像素点的梯度幅值G。3)接着,每一个像素点用

G代替。对于变化剧烈的边界处,G值越大,对应的颜色为白色。4)然后,这些边界通常非常粗,难以标定边界的真正位置,还必须存储梯度的方向

θ。

E d g e _ G r a d i e n t ( G ) = G x 2 + G y 2 A n g l e ( θ ) = tan − 1 ( G y G x ) Edge\_Gradient \; (G) = \sqrt{G_x^2 + G_y^2} \\ Angle \; (\theta) = \tan^{-1} \bigg(\frac{G_y}{G_x}\bigg) Edge_Gradient(G)=Gx2+Gy2Angle(θ)=tan−1(GxGy) -

沿着梯度

θ方向上比较该像素点,若该像素点与两侧相比形成局部最大值则保留,否则抑制(置为 0 )。这一步的目的是将模糊的边界变得清晰,剔除一大部分不是边缘的点。

-

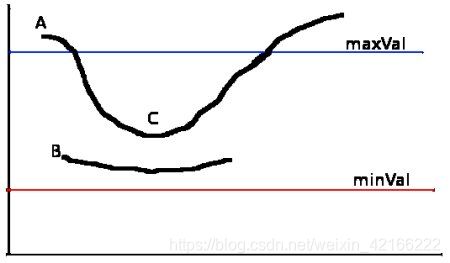

双阈值边缘连接处理

规则:设定两个阈值,

minVal和maxVal。大于

maxVal的边缘肯定是边缘(保留),低于minVal的边缘是非边缘(舍去)。 -

二值化图像输出结果

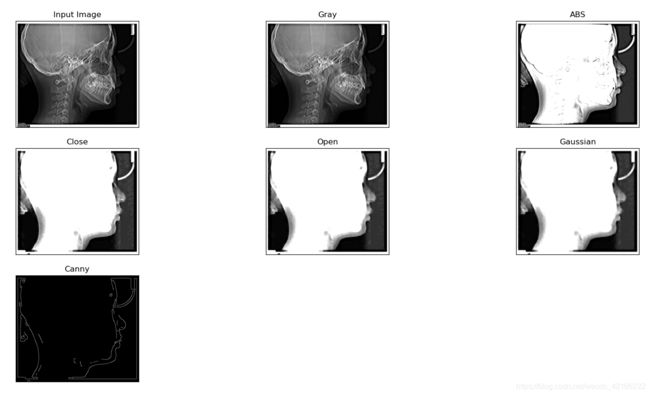
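To make the gradient formulas and the double-threshold rule above concrete, here is a minimal pure-Python sketch. It is only an illustration under simplifying assumptions, not how cv2.Canny is implemented: the helper names `edge_gradient` and `hysteresis` are hypothetical, the input is a tiny hand-written grid of gradient magnitudes, and weak pixels are linked to sure edges through 8-connectivity.

```python
import math
from collections import deque


def edge_gradient(gx, gy):
    """Return (magnitude, angle in degrees) from one pixel's x/y gradients."""
    magnitude = math.hypot(gx, gy)            # sqrt(gx^2 + gy^2)
    angle = math.degrees(math.atan2(gy, gx))  # tan^-1(gy / gx)
    return magnitude, angle


def hysteresis(mag, min_val, max_val):
    """Mark edges (255) and non-edges (0) in a 2D grid of gradient magnitudes."""
    rows, cols = len(mag), len(mag[0])
    out = [[0] * cols for _ in range(rows)]
    queue = deque()
    # Pixels above max_val are sure edges; the search starts from them.
    for r in range(rows):
        for c in range(cols):
            if mag[r][c] > max_val:
                out[r][c] = 255
                queue.append((r, c))
    # Grow each sure edge into neighbouring pixels that are at least min_val.
    while queue:
        r, c = queue.popleft()
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols:
                    if out[nr][nc] == 0 and mag[nr][nc] >= min_val:
                        out[nr][nc] = 255
                        queue.append((nr, nc))
    return out


magnitude, angle = edge_gradient(3.0, 4.0)
grid = [
    [200, 120, 40, 120],
    [10, 20, 30, 20],
]
edges = hysteresis(grid, min_val=100, max_val=150)
print(magnitude, round(angle, 2))
print(edges)
```

In this grid the first 120 is kept because it touches the sure edge 200, while the second 120 is discarded because nothing connects it to a sure edge.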

```python
import cv2
import matplotlib.pyplot as plt

# 1. Convert the image to grayscale
img = cv2.imread("../../Resources/CT.jpg")
img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)    # RGB order for matplotlib
gray = cv2.cvtColor(img, cv2.COLOR_RGB2GRAY)  # img is already RGB at this point

# For dark, low-contrast images, brighten the image first
abs_img = cv2.convertScaleAbs(gray, alpha=6, beta=0)

# Morphological operations (remove the black noise specks in the middle)
kernel = cv2.getStructuringElement(cv2.MORPH_RECT, (7, 7))
close = cv2.morphologyEx(abs_img, cv2.MORPH_CLOSE, kernel)
open_ = cv2.morphologyEx(close, cv2.MORPH_OPEN, kernel)

# 2. Gaussian smoothing
gaussian = cv2.GaussianBlur(open_, (5, 5), 0)

# 3. Canny algorithm
canny = cv2.Canny(gaussian, 100, 150)  # hysteresis thresholds: minVal, maxVal

# Show several images together with matplotlib
images = [img, gray, abs_img, close, open_, gaussian, canny]
titles = ["Input Image", "Gray", "ABS", "Close", "Open", "Gaussian", "Canny"]
plt.figure(figsize=(10, 10))
for i in range(7):
    plt.subplot(3, 3, i + 1)
    plt.imshow(images[i], cmap="gray")
    plt.title(titles[i])
    plt.xticks([])
    plt.yticks([])
plt.show()
```

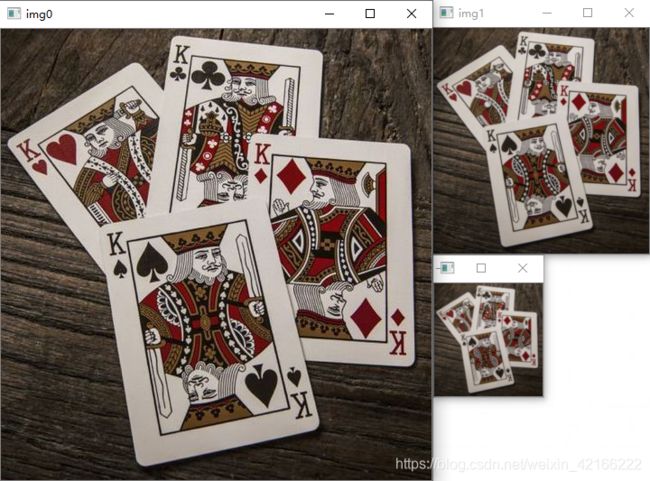

II. Image Pyramids

- Gaussian pyramid

A Gaussian pyramid is simply the result of shrinking or enlarging an image, done with cv2.pyrDown() (downsampling) and cv2.pyrUp() (upsampling) respectively.

Applying cv2.pyrDown() three times in a loop:

```python
import cv2

img = cv2.imread(r"../../Resources/cards.jpg")
for i in range(3):
    cv2.imshow(f"img{i}", img)
    img = cv2.pyrDown(img)
    # img = cv2.pyrUp(img)
cv2.waitKey(0)
cv2.destroyAllWindows()
```

The result:

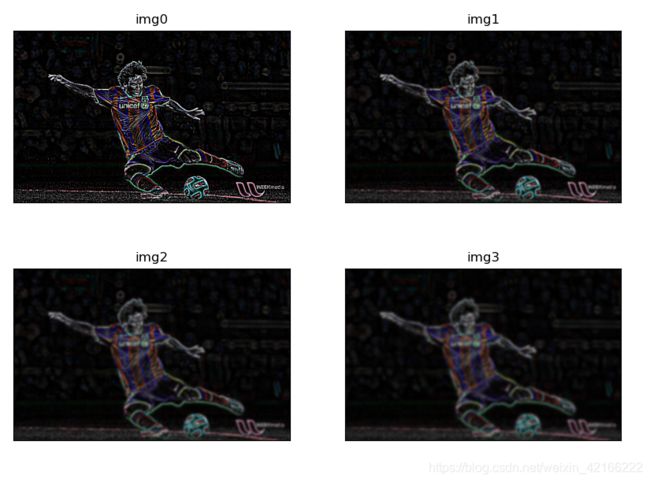

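As we can see, the image has been shrunk and has become blurrier. What happens if we shrink it first and then enlarge it again?

```python
import cv2
import matplotlib.pyplot as plt

# Image pyramids
# 1. Gaussian pyramid
img = cv2.imread(r"../../Resources/cards.jpg")
img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
for i in range(4):
    plt.subplot(2, 2, i + 1)
    plt.title(f"img{i}")
    plt.imshow(img)
    plt.xticks([])
    plt.yticks([])
    # Shrink, then enlarge again before the next subplot
    img = cv2.pyrDown(img)
    img = cv2.pyrUp(img)
plt.show()
```

The image is still blurry, because pyrUp and pyrDown are not inverses of each other; that is, pyrUp does not undo the downsampling.

pyrUp(): upsamples and blurs an image, i.e. enlarges it.

pyrDown(): downsamples and blurs an image, i.e. shrinks it.

In this case, the image is first shrunk to 0.5 times its size along each dimension, which loses information.

It is then enlarged to 2 times its size along each dimension; the newly inserted (even) rows and columns are filled with 0 and the result is smoothed again.

That is why the final image looks very blurry.

- Laplacian pyramid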

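A Laplacian pyramid is formed from a Gaussian pyramid. Each level is the difference between a Gaussian pyramid level and the upsampled (pyrUp) version of the next, smaller level. The images in a Laplacian pyramid are edge images, and most of their elements are 0.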
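The relationship between the two pyramids can be sketched on a one-dimensional signal. This is a deliberately simplified illustration: the hypothetical helpers `pyr_down` and `pyr_up` average and repeat samples instead of using the Gaussian kernel that cv2.pyrDown() and cv2.pyrUp() apply, but the idea is the same. Shrinking and enlarging loses detail, and the Laplacian level stores exactly the detail that was lost.

```python
def pyr_down(signal):
    """Halve the length by averaging neighbouring pairs (stand-in for blur + subsample)."""
    return [(signal[i] + signal[i + 1]) / 2 for i in range(0, len(signal) - 1, 2)]


def pyr_up(signal):
    """Double the length by repeating each sample (stand-in for upsample + blur)."""
    return [value for value in signal for _ in range(2)]


g0 = [10, 10, 10, 10, 10, 50, 50, 50, 50, 50, 50, 50]  # one sharp edge
g1 = pyr_down(g0)                                   # next Gaussian level
approx = pyr_up(g1)                                 # shrink, then enlarge
laplacian = [a - b for a, b in zip(g0, approx)]     # detail lost by the round trip
restored = [a + b for a, b in zip(approx, laplacian)]

print(approx)     # differs from g0 around the edge
print(laplacian)  # nonzero only near the edge
print(restored == g0)
```

Adding the Laplacian level back to the enlarged image restores the original signal, which is the same reconstruction step used when blending images with pyramids.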

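```python
import cv2
import matplotlib.pyplot as plt

img = cv2.imread(r"../../Resources/messi15.jpg")
img_down = cv2.pyrDown(img)
img_up = cv2.pyrUp(img_down)
# Note: subtract needs equal sizes, so this assumes even image dimensions
img_new = cv2.subtract(img, img_up)  # subtract to extract the outlines
# Boost the contrast so that the result is easier to see
img_new = cv2.convertScaleAbs(img_new, alpha=9, beta=0)
img_new = cv2.cvtColor(img_new, cv2.COLOR_BGR2RGB)  # RGB order for matplotlib

# Show the images
for i in range(4):
    plt.subplot(2, 2, i + 1)
    plt.title(f"img{i}")
    plt.imshow(img_new)
    plt.xticks([])
    plt.yticks([])
    img_new = cv2.pyrDown(img_new)
    img_new = cv2.pyrUp(img_new)
plt.show()
```

- Image blending with pyramids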

```python
import cv2
import numpy as np
import matplotlib.pyplot as plt

# A and B must have the same size; each dimension should be divisible by 32
# so that pyrUp output matches the level above it.
A = cv2.imread('../../Resources/apple.jpg')
B = cv2.imread('../../Resources/orange.jpg')

# Build the Gaussian pyramid of A
G = A.copy()
gpA = [G]
for i in range(6):
    G = cv2.pyrDown(G)
    gpA.append(G)

# Build the Gaussian pyramid of B
G = B.copy()
gpB = [G]
for i in range(6):
    G = cv2.pyrDown(G)
    gpB.append(G)

# Build the Laplacian pyramid of A (smallest level first)
lpA = [gpA[5]]
for i in range(5, 0, -1):
    GE = cv2.pyrUp(gpA[i])
    L = cv2.subtract(gpA[i - 1], GE)
    lpA.append(L)

# Build the Laplacian pyramid of B (smallest level first)
lpB = [gpB[5]]
for i in range(5, 0, -1):
    GE = cv2.pyrUp(gpB[i])
    L = cv2.subtract(gpB[i - 1], GE)
    lpB.append(L)

# At every level, join the left half of A with the right half of B
LS = []
for la, lb in zip(lpA, lpB):
    rows, cols, dpt = la.shape
    ls = np.hstack((la[:, 0:cols // 2], lb[:, cols // 2:]))
    LS.append(ls)

# Reconstruct the image from the blended pyramid
ls_ = LS[0]
for i in range(1, 6):
    ls_ = cv2.pyrUp(ls_)
    ls_ = cv2.add(ls_, LS[i])

# Image obtained by joining the two halves directly
rows, cols, channels = A.shape
real = np.hstack((A[:, :cols // 2], B[:, cols // 2:]))

cv2.imwrite('../../Resources/Pyramid_blending2.jpg', ls_)
cv2.imwrite('../../Resources/Direct_blending.jpg', real)

# Convert to RGB for display in matplotlib
A = cv2.cvtColor(A, cv2.COLOR_BGR2RGB)
B = cv2.cvtColor(B, cv2.COLOR_BGR2RGB)
real = cv2.cvtColor(real, cv2.COLOR_BGR2RGB)
ls_ = cv2.cvtColor(ls_, cv2.COLOR_BGR2RGB)

titles = ['Apple', 'Orange', 'Direct Connection', 'Pyramid Blending']
images = [A, B, real, ls_]
for i in range(len(titles)):
    plt.subplot(2, 2, i + 1)
    plt.imshow(images[i])
    plt.title(titles[i])
    plt.xticks([])
    plt.yticks([])
plt.show()
```

III. Contours

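- Finding and drawing contours

Finding contours:

findContours(image, mode, method, contours=None, hierarchy=None, offset=None)

image: input image (a binary image)

mode: contour retrieval mode

method: contour approximation method

Contour retrieval modes:

• cv2.RETR_EXTERNAL: detects only the outer contours

• cv2.RETR_LIST: detects all contours without building any hierarchy

• cv2.RETR_CCOMP: builds a two-level hierarchy; the top level holds the outer boundaries and the second level holds the boundaries of the holes

• cv2.RETR_TREE: builds a full hierarchy tree of nested contours

Contour approximation methods:

• cv2.CHAIN_APPROX_NONE: stores all boundary points

• cv2.CHAIN_APPROX_SIMPLE: compresses vertical, horizontal, and diagonal segments and keeps only their end points

Drawing contours: this operates directly on the input image, which is modified in place.

drawContours(image, contours, contourIdx, color, thickness=None, lineType=None, hierarchy=None, maxLevel=None, offset=None)

contourIdx: index of the contour to draw (when set to -1, all contours are drawn)

```python
import cv2

img = cv2.imread("../../Resources/lightning.jpg")
gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
ret, thresh = cv2.threshold(gray, 0, 255, cv2.THRESH_BINARY | cv2.THRESH_OTSU)
# OpenCV 4.x returns (contours, hierarchy); OpenCV 3.x returned three values
contours, hierarchy = cv2.findContours(thresh, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)
# print(len(contours[0]))  # number of points in the first contour
# print(len(contours))     # number of contours
# print(hierarchy)         # hierarchy tree
img_contour = cv2.drawContours(img, contours, -1, (0, 255, 0), 2)  # draws on img in place
cv2.imshow("img", img)
cv2.waitKey(0)
cv2.destroyAllWindows()
```

- Contour features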

```python
import cv2
import numpy as np

img = cv2.imread("../../Resources/lightning.jpg")
gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
ret, binary = cv2.threshold(gray, 127, 255, cv2.THRESH_BINARY)
contours, _ = cv2.findContours(binary, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)
```

① Moments and centroid

The centroid is given by the relations $C_x = \frac{M_{10}}{M_{00}}$ and $C_y = \frac{M_{01}}{M_{00}}$.
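As a worked example of these relations, the sketch below computes M00, M10, and M01 for a simple polygon in pure Python and derives the centroid from them. The helper `polygon_moments` is hypothetical and uses the standard shoelace-style formulas for a closed polygon; it is meant to show what the three moments represent, not to reproduce cv2.moments exactly.

```python
def polygon_moments(points):
    """Return (m00, m10, m01) of a closed polygon given as a list of (x, y) vertices."""
    m00 = m10 = m01 = 0.0
    n = len(points)
    for i in range(n):
        x0, y0 = points[i]
        x1, y1 = points[(i + 1) % n]   # wrap around to close the polygon
        cross = x0 * y1 - x1 * y0
        m00 += cross / 2               # area contribution
        m10 += (x0 + x1) * cross / 6   # first moment about the y axis
        m01 += (y0 + y1) * cross / 6   # first moment about the x axis
    return m00, m10, m01


# A 4 x 2 rectangle with one corner at the origin
rectangle = [(0, 0), (4, 0), (4, 2), (0, 2)]
m00, m10, m01 = polygon_moments(rectangle)
cx = m10 / m00
cy = m01 / m00
print("area:", m00)
print("centroid:", cx, cy)
```

For the rectangle, M00 is its area (8) and the centroid lands at its geometric center (2, 1), as expected.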

```python
M = cv2.moments(contours[0])  # moments
print(M)
# Note: m00 is 0 for a degenerate contour (e.g. a single point)
cx = int(M['m10'] / M['m00'])
cy = int(M['m01'] / M['m00'])
print("Centroid:", cx, cy)
```

② Contour area

```python
area = cv2.contourArea(contours[0])
print("Area:", area)
```

③ Perimeter

```python
perimeter = cv2.arcLength(contours[0], True)  # True: the contour is closed
print("Perimeter:", perimeter)
```

④ Contour approximation

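```python
# epsilon specifies the approximation accuracy: it is the maximum
# distance between the original curve and its approximation.
epsilon = 20
approx = cv2.approxPolyDP(contours[0], epsilon, True)
print(np.shape(approx))
print(approx)

# Draw the contour: this operates directly on the original image
cv2.drawContours(img, [approx], -1, (0, 0, 255), 3)
cv2.imshow("img", img)
cv2.waitKey(0)
cv2.destroyAllWindows()
```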
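The role of epsilon can be illustrated with the Douglas-Peucker algorithm, which the OpenCV documentation names as the basis of approxPolyDP. The pure-Python sketch below is a simplified version for an open polyline; the helper names are hypothetical, and handling of closed contours is left out.

```python
import math


def point_line_distance(p, a, b):
    """Perpendicular distance from point p to the line through a and b."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    length = math.hypot(bx - ax, by - ay)
    if length == 0:
        return math.hypot(px - ax, py - ay)
    return abs((bx - ax) * (ay - py) - (ax - px) * (by - ay)) / length


def douglas_peucker(points, epsilon):
    """Simplify an open polyline so that no dropped point is farther than epsilon."""
    if len(points) < 3:
        return list(points)
    # Find the interior point farthest from the chord joining the two end points.
    distances = [point_line_distance(p, points[0], points[-1]) for p in points[1:-1]]
    max_dist = max(distances)
    index = distances.index(max_dist) + 1
    if max_dist <= epsilon:
        return [points[0], points[-1]]  # every interior point is close enough
    # Otherwise keep the farthest point and simplify both halves recursively.
    left = douglas_peucker(points[:index + 1], epsilon)
    right = douglas_peucker(points[index:], epsilon)
    return left[:-1] + right


curve = [(0, 0), (2, 0.2), (4, 0), (4.2, 2), (4, 4)]
print(douglas_peucker(curve, 0.5))  # only the corner survives
print(douglas_peucker(curve, 0.1))  # a small epsilon keeps every point
```

A larger epsilon removes more points and gives a coarser polygon; a smaller epsilon follows the original curve more closely.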

⑤ Convex hull and convexity check

cv2.convexHull() checks a curve for convexity defects and corrects them; it returns the convex hull of the curve.

cv2.isContourConvex() checks whether a curve is convex. It only returns True or False.

```python
import cv2

# Convex hull and convexity check: convexHull(), isContourConvex()
img = cv2.imread("../../Resources/lightning.jpg")
gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
ret, binary = cv2.threshold(gray, 0, 255, cv2.THRESH_BINARY | cv2.THRESH_OTSU)

# Find contours
contours, _ = cv2.findContours(binary, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)
hull = cv2.convexHull(contours[0])
print(cv2.isContourConvex(contours[0]), cv2.isContourConvex(hull))  # False True
# The contour itself is not convex, while its convex hull is convex

# Draw the convex hull
cv2.drawContours(img, [hull], -1, (0, 255, 0), 2)
cv2.imshow("img", img)
cv2.waitKey(0)
cv2.destroyAllWindows()
```

⑥ Bounding rectangle, minimum-area rectangle, minimum enclosing circle, ellipse fitting, line fitting

```python
import cv2
import numpy as np

# Bounding shapes: bounding rectangle, minimum-area rectangle,
# minimum enclosing circle, ellipse fitting, line fitting
img = cv2.imread('../../Resources/lightning.jpg')
img_gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
ret, thresh = cv2.threshold(img_gray, 127, 255, cv2.THRESH_BINARY)
contours, _ = cv2.findContours(thresh, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)

# Bounding rectangle (axis-aligned)
x, y, w, h = cv2.boundingRect(contours[0])
cv2.rectangle(img, (x, y), (x + w, y + h), (0, 255, 0), 2)

# Minimum-area (rotated) rectangle
rect = cv2.minAreaRect(contours[0])
print(rect)
box = cv2.boxPoints(rect)
print(box)
box = np.int32(box)
cv2.drawContours(img, [box], 0, (0, 0, 255), 2)

# Minimum enclosing circle
(cx, cy), radius = cv2.minEnclosingCircle(contours[0])
center = (int(cx), int(cy))
radius = int(radius)
cv2.circle(img, center, radius, (255, 0, 0), 2)

# Ellipse fitting (the contour needs at least 5 points)
ellipse = cv2.fitEllipse(contours[0])
print(ellipse)
cv2.ellipse(img, ellipse, (0, 255, 255), 2)

# Line fitting
rows, cols = img.shape[:2]
# fitLine returns a 4x1 array; flatten it to get plain scalars
vx, vy, x0, y0 = cv2.fitLine(contours[0], cv2.DIST_L2, 0, 0.01, 0.01).flatten()
left_y = int((-x0 * vy / vx) + y0)
right_y = int(((cols - x0) * vy / vx) + y0)
cv2.line(img, (cols - 1, right_y), (0, left_y), (0, 255, 0), 2)

cv2.imshow("img_contour", img)
cv2.waitKey(0)
cv2.destroyAllWindows()
```

- Contour properties

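① Aspect ratio of the bounding rectangle, ratio of contour area to bounding rectangle area (extent), ratio of contour area to convex hull area (solidity), diameter of the circle whose area equals the contour area (equivalent diameter), and orientation of the object (the angle at which it is directed)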

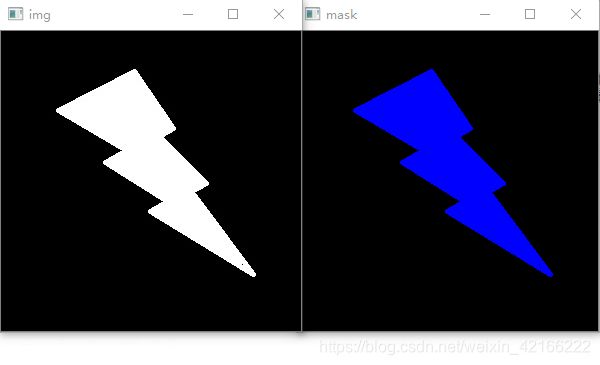

```python
import cv2
import numpy as np

# Contour properties
img = cv2.imread("../../Resources/lightning.jpg")
gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
ret, binary = cv2.threshold(gray, 0, 255, cv2.THRESH_BINARY | cv2.THRESH_OTSU)

# Find contours
contours, _ = cv2.findContours(binary, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)

# Bounding rectangle
x, y, w, h = cv2.boundingRect(contours[0])
cv2.rectangle(img, (x, y), (x + w, y + h), (0, 0, 255), 2)

# Minimum-area rectangle
rect = cv2.minAreaRect(contours[0])
box = cv2.boxPoints(rect)
box = np.int32(box)
cv2.drawContours(img, [box], -1, (0, 255, 0), 2)

# Minimum enclosing circle
(cx, cy), radius = cv2.minEnclosingCircle(contours[0])
cv2.circle(img, (int(cx), int(cy)), int(radius), (255, 0, 0), 2)

# Draw the contours
cv2.drawContours(img, contours, -1, (255, 255, 0), 2)

# 1. Aspect ratio of the bounding rectangle
aspect_ratio = float(w) / h
print("Aspect ratio of the bounding rectangle:", aspect_ratio)

# 2. Ratio of contour area to bounding rectangle area (extent)
area = cv2.contourArea(contours[0])
rect_area = w * h
extent = float(area) / rect_area
print("Contour area / bounding rectangle area:", extent)

# 3. Ratio of contour area to convex hull area (solidity)
hull = cv2.convexHull(contours[0])
hull_area = cv2.contourArea(hull)
solidity = float(area) / hull_area
print("Contour area / convex hull area:", solidity)

# 4. Diameter of the circle with the same area as the contour
equi_diameter = np.sqrt(4 * area / np.pi)
print("Equivalent diameter:", equi_diameter)

# 5. Orientation of the object
ellipse = cv2.fitEllipse(contours[0])
print(ellipse)
print("Orientation angle of the object:", ellipse[2])
cv2.ellipse(img, ellipse, (0, 255, 255), thickness=2)
# cv2.ellipse(img, (150, 124), (int(78 / 2), int(261 / 2)), 138, 0, 360, (0, 0, 255), thickness=2)

# cv2.imshow("img", img)
# cv2.waitKey(0)
# cv2.destroyAllWindows()
```

② Object mask

In some cases we may need all the points that make up the object.

```python
import cv2
import numpy as np

# Object mask
img = cv2.imread("../../Resources/lightning.jpg")
gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
ret, binary = cv2.threshold(gray, 0, 255, cv2.THRESH_BINARY | cv2.THRESH_OTSU)
contours, _ = cv2.findContours(binary, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)

# Single-channel mask, so each nonzero entry is one (row, col) pixel
mask = np.zeros(gray.shape, np.uint8)
cv2.drawContours(mask, contours, -1, 255, -1)  # thickness -1 fills the contours
pixel_points = np.transpose(np.nonzero(mask))

print(mask.shape)
print(len(np.nonzero(mask)[0]))
print(pixel_points.shape)

cv2.imshow("img", img)
cv2.imshow("mask", mask)
cv2.waitKey(0)
cv2.destroyAllWindows()
```

③ Shape matching

Compare two shapes and return a match value; the lower the value, the more similar the shapes.

```python
import cv2

# Shape matching: matchShapes()
img1 = cv2.imread("../../Resources/rectangle.jpg")
img2 = cv2.imread("../../Resources/polygon.jpg")

gray1 = cv2.cvtColor(img1, cv2.COLOR_BGR2GRAY)
ret1, binary1 = cv2.threshold(gray1, 0, 255, cv2.THRESH_BINARY | cv2.THRESH_OTSU)
contours1, _ = cv2.findContours(binary1, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)

gray2 = cv2.cvtColor(img2, cv2.COLOR_BGR2GRAY)
ret2, binary2 = cv2.threshold(gray2, 0, 255, cv2.THRESH_BINARY | cv2.THRESH_OTSU)
contours2, _ = cv2.findContours(binary2, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)

ret = cv2.matchShapes(contours1[0], contours2[0], cv2.CONTOURS_MATCH_I1, 0.0)
print(ret)

# cv2.imshow("img1", img1)
# cv2.imshow("img2", img2)
# cv2.waitKey(0)
# cv2.destroyAllWindows()
```

IV. Template Matching

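- Template matching steps

① Run template matching to get a grayscale match map

res = cv2.matchTemplate(image, templ, method, result=None, mask=None)

Parameters:

image: input image

templ: template image

method: template matching method, one of:

• cv2.TM_SQDIFF (squared difference): matches by the sum of squared differences; the best match value is 0, and the worse the match, the larger the value.

• cv2.TM_SQDIFF_NORMED (normalized squared difference)

• cv2.TM_CCORR (cross-correlation): matches by multiplication; the larger the value, the better the match.

• cv2.TM_CCORR_NORMED (normalized cross-correlation)

• cv2.TM_CCOEFF (correlation coefficient): the larger the value, the better the match.

• cv2.TM_CCOEFF_NORMED (normalized correlation coefficient): 1 is a perfect match; -1 is the worst match.

② Get the minimum and maximum values and their locations

min_val, max_val, min_loc, max_loc = cv2.minMaxLoc(res)

③ Finally, mark the matched region

cv2.rectangle(img, pt1, pt2, color, thickness=None, lineType=None, shift=None)

- Single-object matching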

The source image contains only one region that matches the template.

```python
import cv2

img = cv2.imread("../../Resources/messi15.jpg")
template = cv2.imread("../../Resources/messi_head.jpg")
h, w, c = template.shape
# print(img.shape)

# 1) Match the template to get a grayscale match map
# res = cv2.matchTemplate(img, template, cv2.TM_CCOEFF)         # maximum is the best match
# res = cv2.matchTemplate(img, template, cv2.TM_CCOEFF_NORMED)  # normalized to [-1, 1]; 1 is a 100% match
# res = cv2.matchTemplate(img, template, cv2.TM_CCORR)          # maximum is the best match
res = cv2.matchTemplate(img, template, cv2.TM_CCORR_NORMED)     # normalized to [0, 1]; 1 is a 100% match
# res = cv2.matchTemplate(img, template, cv2.TM_SQDIFF)         # minimum is the best match
# res = cv2.matchTemplate(img, template, cv2.TM_SQDIFF_NORMED)  # normalized to [0, 1]; 0 is a 100% match
# print(res.shape)

# 2) Get the minimum and maximum values and their locations
min_val, max_val, min_loc, max_loc = cv2.minMaxLoc(res)
# print(min_val)
# print(max_val)
# print(min_loc)
# print(max_loc)

# 3) Finally, mark the matched region
# For TM_CCOEFF, TM_CCOEFF_NORMED, TM_CCORR, TM_CCORR_NORMED the maximum is the best match
cv2.rectangle(img, (max_loc[0], max_loc[1]), (max_loc[0] + w, max_loc[1] + h),
              color=(0, 0, 255), thickness=2)
# For TM_SQDIFF and TM_SQDIFF_NORMED the minimum is the best match
# cv2.rectangle(img, (min_loc[0], min_loc[1]), (min_loc[0] + w, min_loc[1] + h),
#               color=(0, 0, 255), thickness=2)

cv2.imshow("img", img)
cv2.imshow("template", template)
cv2.waitKey(0)
cv2.destroyAllWindows()
```

- Multi-object matching

```python
import cv2
import numpy as np

img = cv2.imread("../../Resources/Mario.jpg")
template = cv2.imread("../../Resources/Mario_coin.jpg")
h, w, c = template.shape
# print(template.shape)

# 1) Match the template to get a grayscale match map
res = cv2.matchTemplate(img, template, cv2.TM_CCORR_NORMED)
# print(res.shape)  # h, w
# print(res)

# 2) Treat every position with a score >= 0.99 as a match
loc = np.where(res >= 0.99)  # np.where(condition) returns the indices that satisfy it
# print(loc)         # (rows, cols), i.e. h, w order
# print(*loc[::-1])  # reversed to w, h order --> x, y

for pt in zip(*loc[::-1]):
    # print(pt[0], pt[1])
    # 3) Finally, mark every matched region
    cv2.rectangle(img, (pt[0], pt[1]), (pt[0] + w, pt[1] + h),
                  color=(0, 0, 255), thickness=1)

cv2.imshow("img", img)
cv2.imshow("template", template)
cv2.waitKey(0)
cv2.destroyAllWindows()
```

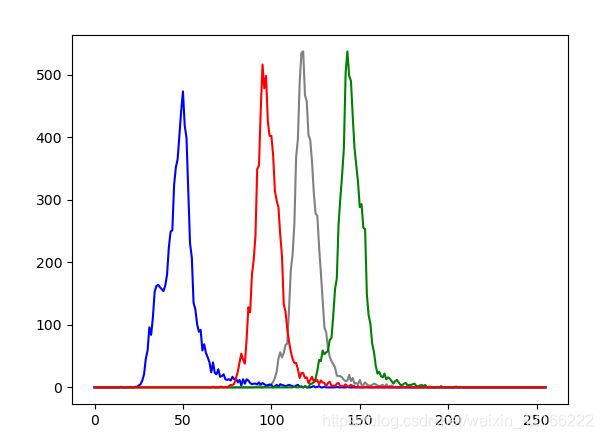
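To show what the match map in this section contains, here is a minimal pure-Python sketch of the squared-difference method (the idea behind TM_SQDIFF) on tiny hand-written grids. The helpers `match_template_sqdiff` and `min_loc` are hypothetical names; the real cv2.matchTemplate works on NumPy arrays and is far faster. Note that the result is smaller than the image: it has (H - h + 1) rows and (W - w + 1) columns, one score per possible top-left corner of the template.

```python
def match_template_sqdiff(image, templ):
    """Slide templ over image and return the grid of squared-difference scores."""
    ih, iw = len(image), len(image[0])
    th, tw = len(templ), len(templ[0])
    result = []
    for y in range(ih - th + 1):
        row = []
        for x in range(iw - tw + 1):
            score = sum(
                (image[y + j][x + i] - templ[j][i]) ** 2
                for j in range(th)
                for i in range(tw)
            )
            row.append(score)
        result.append(row)
    return result


def min_loc(result):
    """Return (min_val, (x, y)), like the minimum half of cv2.minMaxLoc."""
    return min((val, (x, y)) for y, row in enumerate(result) for x, val in enumerate(row))


image = [
    [1, 1, 1, 1],
    [1, 9, 8, 1],
    [1, 7, 9, 1],
]
templ = [
    [9, 8],
    [7, 9],
]
res = match_template_sqdiff(image, templ)
val, loc = min_loc(res)
print(res)
print("best match:", val, "at (x, y) =", loc)
```

The score is 0 where the template fits exactly, which is why the minimum location is used for the squared-difference methods and the maximum location for the correlation methods.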

V. Histograms

- Plotting histograms

```python
import cv2
import matplotlib.pyplot as plt

# Plotting histograms
# 1) 1D histograms
img = cv2.imread("../../Resources/messi_grass.jpg")
gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)  # convert to grayscale

# The upper bound of ranges is exclusive, so use 256 to include the value 255
# Histogram of the grayscale image
hist_gray = cv2.calcHist(images=[gray], channels=[0], mask=None, histSize=[256], ranges=[0, 256])
# Histogram of the B channel
hist_B = cv2.calcHist([img], [0], None, [256], [0, 256])
# Histogram of the G channel
hist_G = cv2.calcHist([img], [1], None, [256], [0, 256])
# Histogram of the R channel
hist_R = cv2.calcHist([img], [2], None, [256], [0, 256])

plt.plot(hist_gray, color="gray", label="Gray")
plt.plot(hist_B, color="b", label="B")
plt.plot(hist_G, color="g", label="G")
plt.plot(hist_R, color="r", label="R")
plt.legend()
plt.show()

# 2) 2D histogram
img = cv2.imread("../../Resources/messi_grass.jpg")
hsv = cv2.cvtColor(img, cv2.COLOR_BGR2HSV)  # convert to HSV
# 2D histogram over hue (0-179) and saturation (0-255)
hist_hsv = cv2.calcHist([hsv], [0, 1], None, [180, 256], [0, 180, 0, 256])
cv2.imshow("hist_hsv", hist_hsv)
cv2.waitKey(0)
cv2.destroyAllWindows()
```

The resulting plots:

1D histogram


- Histogram equalization

① Global histogram equalization

```python
import cv2
import matplotlib.pyplot as plt

# Histogram equalization: stretches the intensity distribution (roughly, increases its spread)
# 1) Global histogram equalization
src = cv2.imread("../../Resources/landscape.jpg", 0)  # 0: read as grayscale
dst = cv2.equalizeHist(src)

# Histogram of the source image (upper bound of ranges is exclusive)
hist_src = cv2.calcHist([src], [0], None, [256], [0, 256])
# Histogram after global equalization
hist_dst = cv2.calcHist([dst], [0], None, [256], [0, 256])

# Show several images together with matplotlib
plt.subplot(221), plt.imshow(src, cmap="gray"), plt.title("Src Image")
plt.xticks([]), plt.yticks([])
plt.subplot(222), plt.imshow(dst, cmap="gray"), plt.title("Dst Image")
plt.xticks([]), plt.yticks([])
plt.subplot(223), plt.plot(hist_src, color="r", label="hist_src"), plt.legend()
plt.subplot(224), plt.plot(hist_dst, color="b", label="hist_dst"), plt.legend()
plt.show()
```
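What global equalization does to the pixel values can be sketched in a few lines of pure Python. This is an illustration under assumptions: the hypothetical helper `equalize` uses the common textbook mapping through the cumulative distribution function (CDF), and it may differ in rounding details from cv2.equalizeHist.

```python
def equalize(pixels, levels=256):
    """Equalize a flat list of integer gray values in the range [0, levels)."""
    # Histogram: how many pixels have each gray level
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    # Cumulative distribution function
    cdf = []
    running = 0
    for count in hist:
        running += count
        cdf.append(running)
    cdf_min = next(c for c in cdf if c > 0)
    total = len(pixels)
    if total == cdf_min:
        return list(pixels)  # constant image: nothing to stretch
    # Map each gray level so that the CDF becomes roughly linear
    lut = [
        max(0, round((c - cdf_min) / (total - cdf_min) * (levels - 1)))
        for c in cdf
    ]
    return [lut[p] for p in pixels]


pixels = [100, 100, 101, 102, 102, 103]  # low contrast: values span only 100..103
equalized = equalize(pixels)
print(equalized)
```

The input values are squeezed into a range of width 3, while the output uses the full range from 0 to 255, which is the contrast stretch visible in the equalized image.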

② CLAHE (Contrast Limited Adaptive Histogram Equalization)

```python
import cv2
import matplotlib.pyplot as plt

src = cv2.imread("../../Resources/sculpture.jpg", cv2.IMREAD_GRAYSCALE)

# 1. Global histogram equalization
img_equalize = cv2.equalizeHist(src)

# 2. CLAHE adaptive equalization
# createCLAHE(clipLimit=None, tileGridSize=None)
clahe = cv2.createCLAHE(tileGridSize=(7, 7))
img_clahe = clahe.apply(src)

# Histogram of the source image (upper bound of ranges is exclusive)
hist_src = cv2.calcHist([src], [0], None, [256], [0, 256])
# Histogram after global equalization
hist_equalize = cv2.calcHist([img_equalize], [0], None, [256], [0, 256])
# Histogram after CLAHE
hist_clahe = cv2.calcHist([img_clahe], [0], None, [256], [0, 256])

# Show several images together with matplotlib
plt.subplot(231), plt.imshow(src, cmap="gray"), plt.title("Src Image")
plt.xticks([]), plt.yticks([])
plt.subplot(232), plt.imshow(img_equalize, cmap="gray"), plt.title("Image after Equalize")
plt.xticks([]), plt.yticks([])
plt.subplot(233), plt.imshow(img_clahe, cmap="gray"), plt.title("Image after CLAHE")
plt.xticks([]), plt.yticks([])
plt.subplot(234), plt.plot(hist_src, color="b", label="hist_src"), plt.legend()
plt.subplot(235), plt.plot(hist_equalize, color="g", label="hist_equalize"), plt.legend()
plt.subplot(236), plt.plot(hist_clahe, color="r", label="hist_clahe"), plt.legend()
plt.show()
```

- Histogram back projection

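Back projection is used for image segmentation or for finding objects of interest in an image. In short, it creates an image of the same size as the input image (but with a single channel), in which each pixel value corresponds to the probability that the pixel belongs to our object.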
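The idea can be sketched in pure Python on a single hypothetical hue channel with only 5 bins. The helpers `calc_hist`, `normalize_minmax`, and `back_project` are illustrative names, not OpenCV functions: the histogram of the object sample is normalized to 0..255, and every pixel of the target is then replaced by the histogram value of its own bin.

```python
def calc_hist(values, bins):
    """Count how many samples fall into each bin (values are bin indices)."""
    hist = [0] * bins
    for v in values:
        hist[v] += 1
    return hist


def normalize_minmax(hist, new_max=255):
    """Scale the histogram linearly so that its values span 0..new_max."""
    lo, hi = min(hist), max(hist)
    if hi == lo:
        return [0] * len(hist)
    return [round((h - lo) / (hi - lo) * new_max) for h in hist]


def back_project(values, hist):
    """Replace each pixel with the histogram value of the bin it falls into."""
    return [hist[v] for v in values]


roi_hues = [2, 2, 2, 3]         # sample of the object: mostly hue bin 2
target_hues = [0, 2, 3, 4, 2]   # pixels of the image to search
hist = normalize_minmax(calc_hist(roi_hues, bins=5))
projection = back_project(target_hues, hist)
print(hist)
print(projection)
```

Pixels whose hue is common in the sample get high values and all other pixels get 0, so thresholding the projection gives a rough mask of the object.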

```python
import cv2

# Histogram back projection
# Sample of the object of interest (ROI)
roi = cv2.imread("../../Resources/messi_grass.jpg")
hsv_roi = cv2.cvtColor(roi, cv2.COLOR_BGR2HSV)  # convert to HSV

# Target image
target = cv2.imread("../../Resources/messi15.jpg")
hsv_target = cv2.cvtColor(target, cv2.COLOR_BGR2HSV)  # convert to HSV

# 1) Compute the histogram of the ROI (upper bounds of ranges are exclusive)
hist_roi = cv2.calcHist([hsv_roi], [0, 1], None, [180, 256], [0, 180, 0, 256])

# 2) Normalize the histogram: hist_roi must be normalized before calling calcBackProject
cv2.normalize(hist_roi, hist_roi, 0, 255, cv2.NORM_MINMAX)

# 3) Back projection: calcBackProject
backProject = cv2.calcBackProject([hsv_target], [0, 1], hist_roi, [0, 180, 0, 256], 1)

# 4) Convolve with a disc-shaped kernel
kernel = cv2.getStructuringElement(shape=cv2.MORPH_ELLIPSE, ksize=(5, 5))
backProject = cv2.filter2D(backProject, -1, kernel)

# 5) Binarize the image
ret, thresh = cv2.threshold(backProject, 50, 255, cv2.THRESH_BINARY)

# 6) Cut the region of interest out of the target image
thresh = cv2.merge((thresh, thresh, thresh))  # merge into 3 channels
res = cv2.bitwise_and(target, thresh)

# Show the images
# cv2.imshow("roi", roi)
# cv2.imshow("target", target)
# cv2.imshow("backProject", backProject)
cv2.imshow("thresh", thresh)
cv2.imshow("res", res)
cv2.waitKey(0)
cv2.destroyAllWindows()
```

VI. Contour Detection Exercises

-

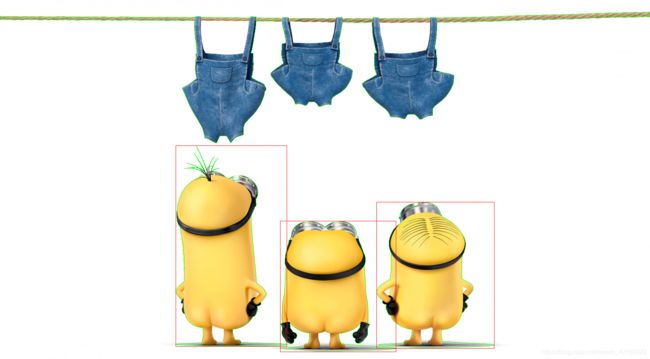

静态图片的人物检测

```python
import cv2

img = cv2.imread("millions.jpg")
gray_img = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
ret, thresh_img = cv2.threshold(gray_img, 227, 255, cv2.THRESH_BINARY_INV)
# ret, thresh_img = cv2.threshold(gray_img, 198, 255, cv2.THRESH_BINARY_INV)
contours, _ = cv2.findContours(thresh_img, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)
img_contour = cv2.drawContours(img, contours, -1, (0, 255, 0), 1)

for i in range(len(contours)):
    # Keep only contours whose number of points is in a plausible range
    if 10 < len(contours[i]) < 1000:
    # if len(contours[i]) > 160:
        x, y, w, h = cv2.boundingRect(contours[i])
        cv2.rectangle(img, (x, y), (x + w, y + h), (0, 0, 255), 1)

cv2.imshow("thresh", thresh_img)
cv2.imshow("img", img)
cv2.waitKey(0)
cv2.destroyAllWindows()
```

- Person detection in a video (a sequence of frames)

```python
import time

import cv2

path = r'./model.mp4'
cap = cv2.VideoCapture(path)
fps = cap.get(cv2.CAP_PROP_FPS)
total_frames = cap.get(cv2.CAP_PROP_FRAME_COUNT)
print(fps, total_frames)
# Delay between frames in ms; fall back to 1 ms if the FPS is reported as 0
delay = max(1, int(1000 / fps)) if fps > 0 else 1

start = time.time()
while True:
    ret, frame = cap.read()
    if not ret:  # end of the video or a read failure
        break

    gray_img = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
    _, thresh_img = cv2.threshold(gray_img, 107, 255, cv2.THRESH_BINARY_INV)
    contours, _ = cv2.findContours(thresh_img, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)
    # img_contour = cv2.drawContours(frame, contours, -1, (0, 255, 0), 1)

    for contour in contours:
        # print(len(contour))
        if len(contour) > 200:  # keep only large contours
            x, y, w, h = cv2.boundingRect(contour)
            cv2.rectangle(frame, (x, y), (x + w, y + h), (0, 0, 255), 1)

    cv2.imshow('th', thresh_img)
    cv2.imshow("img", frame)
    if cv2.waitKey(delay) & 0xFF == ord('q'):  # press q to quit
        break

cap.release()
cv2.destroyAllWindows()
end = time.time()
print(end - start)
```