(3) Fine-tuning ChatGLM-6B with DeepSpeed / P-Tuning v2

Contents

- Preparing the model files and related code

- ChatGLM6B deployment

- Fixing the `ninja` error

- Starting training

Preparing the model files and related code

Installation date: 2023-04-19

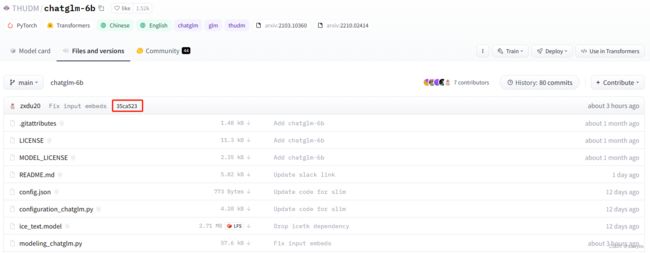

Model files: https://huggingface.co/THUDM/chatglm-6b/tree/main

Hash: 35ca523

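Since my previous post (04-09), the upstream project has updated its files and added DeepSpeed support, so I am following up right away to try it out (they work hard too: in the screenshot, the code had been updated just 3 hours earlier).

Following the appendix "Python code for downloading large files" in my earlier post "(2) ChatGLM-6B model deployment and P-Tuning fine-tuning: a detailed tutorial", re-download the model files (they are hosted overseas, so the slow speed cannot be helped).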
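The download script from the earlier post is not reproduced here. As a rough sketch of the idea, the snippet below only builds the direct-download URLs for the large files. It assumes the usual Hugging Face `resolve/<revision>/<filename>` URL layout and the `pytorch_model-0000N-of-00008.bin` shard naming (the training log further down reports 8 checkpoint shards); the function name `shard_urls` is made up for illustration.

```python
REPO = "THUDM/chatglm-6b"


def shard_urls(repo=REPO, revision="main", num_shards=8):
    """Build download URLs for the weight shards and the tokenizer model.

    The shard naming pattern and the shard count are assumptions taken
    from the file names and log output shown in this post.
    """
    base = f"https://huggingface.co/{repo}/resolve/{revision}"
    names = [
        f"pytorch_model-{i:05d}-of-{num_shards:05d}.bin"
        for i in range(1, num_shards + 1)
    ]
    names.append("ice_text.model")  # the other large file moved in below
    return [f"{base}/{name}" for name in names]
```

Each URL returned by `shard_urls()` can then be handed to any downloader that supports resuming, for example `wget -c`.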

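Once the model files have finished downloading, fetch the remaining configuration files and move the large model files into the cloned directory.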

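# Pick a directory to hold the model files, as suits your setup

mkdir /data/thudm2/

cd /data/thudm2/

# Fetch the remaining configuration files

GIT_LFS_SKIP_SMUDGE=1 git clone https://huggingface.co/THUDM/chatglm-6b

# Move the files in and overwrite whatever is there (the clone above only leaves placeholder files for the large weights, which are useless, so they get overwritten)

mv -f pytor* chatglm-6b/

mv -f ice_text.model chatglm-6b/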
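With `GIT_LFS_SKIP_SMUDGE=1`, the clone contains small Git LFS pointer files instead of the real weights, so it can be worth confirming that the `mv` really replaced all of them. A minimal sketch, assuming the standard pointer format whose first line starts with `version https://git-lfs.github.com/spec/v1`; the helper names are illustrative and not part of any library.

```python
from pathlib import Path

# First bytes of every Git LFS pointer file.
LFS_MAGIC = b"version https://git-lfs.github.com/spec/v1"


def is_lfs_pointer(path):
    """Return True if the file looks like a Git LFS pointer, not real data."""
    with open(path, "rb") as fh:
        return fh.read(len(LFS_MAGIC)) == LFS_MAGIC


def leftover_pointers(model_dir):
    """Names of files in model_dir that are still LFS pointers."""
    return sorted(
        p.name
        for p in Path(model_dir).iterdir()
        if p.is_file() and is_lfs_pointer(p)
    )
```

Calling `leftover_pointers("/data/thudm2/chatglm-6b")` should return an empty list once every placeholder has been overwritten by the real file.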

ChatGLM6B deployment

Get the code

https://github.com/THUDM/ChatGLM-6B

hash 01e6313

git clone https://github.com/THUDM/ChatGLM-6B.git

cd ChatGLM-6B

# Link the model in ahead of time; adjust the path to your own setup, yours may well differ from mine

ln -s /data/thudm2/ THUDM

Edit requirements.txt.

Pay special attention to pairing the torch and torchvision versions correctly; check the official site.

protobuf==3.20.0

transformers==4.28.0

cpm_kernels

gradio

mdtex2html

sentencepiece

rouge_chinese

nltk

jieba

datasets

deepspeed

accelerate

torchvision==0.14.0

torch==1.13.0
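To make the version-pairing point concrete, here is a small sketch that checks the pinned torch and torchvision versions in a requirements file against each other. The `KNOWN_PAIRS` table holds only a few pairs copied by hand and is an assumption of this example; the official compatibility table remains the reference for anything else.

```python
# A few torch -> torchvision pairs, copied by hand; extend from the
# official compatibility table as needed.
KNOWN_PAIRS = {
    "1.12.0": "0.13.0",
    "1.12.1": "0.13.1",
    "1.13.0": "0.14.0",
    "1.13.1": "0.14.1",
}


def parse_pins(text):
    """Collect `name==version` pins from requirements.txt content."""
    pins = {}
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments
        if "==" in line:
            name, version = line.split("==", 1)
            pins[name.strip().lower()] = version.strip()
    return pins


def check_torch_pair(requirements_text):
    """True/False if the pair can be judged, None if it cannot."""
    pins = parse_pins(requirements_text)
    torch_v = pins.get("torch")
    vision_v = pins.get("torchvision")
    if torch_v is None or vision_v is None:
        return None  # one of the two is not pinned
    expected = KNOWN_PAIRS.get(torch_v)
    if expected is None:
        return None  # torch version not in the hand-copied table
    return expected == vision_v
```

For the pins listed above (`torch==1.13.0`, `torchvision==0.14.0`) the check returns `True`.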

My GPU environment

+-----------------------------------------------------------------------------+
| NVIDIA-SMI 470.82.01    Driver Version: 470.82.01    CUDA Version: 11.4     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|                               |                      |               MIG M. |
|===============================+======================+======================|
|   0  Tesla P40           On   | 00000000:00:09.0 Off |                    0 |
| N/A   22C    P8     8W / 250W |      0MiB / 22919MiB |      0%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                                  |
|  GPU   GI   CI        PID   Type   Process name                  GPU Memory |
|        ID   ID                                                   Usage      |
|=============================================================================|
|  No running processes found                                                 |
+-----------------------------------------------------------------------------+

Setting up the venv environment

yum -y install python3.9-devel

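# Set both to version 3.9 (you need to select it manually)

update-alternatives --config python

update-alternatives --config python3

------------------------------------------------------------

* 0 /usr/bin/python3.9 3 auto mode

# From here on, python defaults to 3.9

python -m venv venv

# Activate the venv so the packages are installed into it (the later prompts show "(venv)")

source venv/bin/activate

pip3 install -r requirements.txt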

CUDA settings: which GPUs are visible (CUDA_VISIBLE_DEVICES) and the allocator split size (PYTORCH_CUDA_ALLOC_CONF)
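Both settings are plain environment variables that need to be in place before PyTorch initializes CUDA. A minimal sketch: `CUDA_VISIBLE_DEVICES=0` matches the single P40 used here, while the `max_split_size_mb` value of 128 is only a placeholder, because the value actually used is not recorded in this post. The function names are illustrative.

```python
import os


def cuda_env(visible_devices="0", max_split_size_mb=128):
    """Environment variables for GPU visibility and allocator split size.

    max_split_size_mb=128 is a placeholder value, not the author's setting.
    """
    return {
        "CUDA_VISIBLE_DEVICES": visible_devices,
        "PYTORCH_CUDA_ALLOC_CONF": f"max_split_size_mb:{max_split_size_mb}",
    }


def apply_cuda_env(env=None):
    """Write the settings into os.environ; call this before importing torch."""
    settings = cuda_env() if env is None else env
    os.environ.update(settings)
    return settings
```

In a launcher script such as ds_train_finetune.sh the equivalent is an `export` line for each variable. Note that `max_split_size_mb` only targets fragmentation; it cannot make up for a model that genuinely does not fit on the card.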

Edit ds_train_finetune.sh to run full-parameter fine-tuning with DeepSpeed.

Fixing the ninja error

If you see the following message:

/data/chatgml2/ChatGLM-6B/venv/lib/python3.9/site-packages/torch/include/torch/csrc/python_headers.h:10:10: fatal error: Python.h: No such file or directory

10 | #include <Python.h>

| ^~~~~~~~~~

compilation terminated.

ninja: build stopped: subcommand failed.

Traceback (most recent call last):

File "/data/chatgml2/ChatGLM-6B/venv/lib64/python3.9/site-packages/torch/utils/cpp_extension.py", line 1717, in _run_ninja_build

subprocess.run(

File "/usr/lib64/python3.9/subprocess.py", line 528, in run

raise CalledProcessError(retcode, process.args,

subprocess.CalledProcessError: Command '['ninja', '-v']' returned non-zero exit status

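At first this looked like a ninja version problem, with `-v` not being the right flag for querying the version. Upgrading and downgrading ninja made no difference, so I ended up patching the source by hand. Note, though, that the first line of the error is about a missing Python.h, which usually means the Python development headers for the interpreter in use cannot be found; `-v` is ninja's verbose-build flag, not a version query.

In the file venv/lib64/python3.9/site-packages/torch/utils/cpp_extension.py,

change ['ninja','-v'] to ['ninja','--version']

Running again still fails, but the error is different now. With `--version`, ninja only prints its version and builds nothing, so the expected utils.so is never produced.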

# Error message

File "<frozen importlib._bootstrap>", line 228, in _call_with_frames_removed

ImportError: /root/.cache/torch_extensions/py39_cu117/utils/utils.so: cannot open shared object file: No such file or directory

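You can build utils.so by hand.

# Approach: make sure python and python3 both point to the same version (python3.9 in my case)

# Then build manually; that turned out to work

# The py39_cu117 directory may be named differently; go by the path in the error message

cd /root/.cache/torch_extensions/py39_cu117/utils/

# Build manually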

(venv) [root@VM-245-24-centos utils]# ninja

[2/2] g++ flatten_unflatten.o -shared -L/data/chatgml2/Cha...h/lib -lc10 -ltorch_cpu -ltorch -ltorch_python -o utils.so

After all of the fiddling above, the ninja-related problems are resolved.
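Since the first error in this section is about `Python.h`, a quick check of where the running interpreter expects its C headers can save some guessing. This is a small sketch using only the standard library; the function names are illustrative, and it should be run with the same interpreter as the venv.

```python
import sys
import sysconfig
from pathlib import Path


def python_header_path():
    """Path where Python.h is expected for the running interpreter."""
    return Path(sysconfig.get_paths()["include"]) / "Python.h"


def describe_headers():
    """One-line report: interpreter version, header path, found or missing."""
    header = python_header_path()
    status = "found" if header.is_file() else "MISSING"
    version = f"{sys.version_info.major}.{sys.version_info.minor}"
    return f"python {version}: {header} [{status}]"
```

If `describe_headers()` reports the header as missing, installing the development package that matches that exact interpreter (the `yum -y install python3.9-devel` step earlier) is the usual remedy, which fits the observation that the manual build passed once `python` and `python3` both pointed at 3.9.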

Starting training

bash ds_train_finetune.sh

GPU memory ran out: CUDA out of memory. Tried to allocate 11.50 GiB (GPU 0; 22.38 GiB total capacity; 11.50 GiB already allocated; 10.31 GiB free; 11.50 GiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF. Yet I had already set a value for PYTORCH_CUDA_ALLOC_CONF.

(venv) [root@VM-245-24-centos ptuning]# bash ds_train_finetune.sh

[2023-04-20 13:04:57,843] [WARNING] [runner.py:190:fetch_hostfile] Unable to find hostfile, will proceed with training with local resources only.

[2023-04-20 13:04:57,851] [INFO] [runner.py:540:main] cmd = /data/chatgml2/ChatGLM-6B/venv/bin/python -u -m deepspeed.launcher.launch --world_info=eyJsb2NhbGhvc3QiOiBbMF19 --master_addr=127.0.0.1 --master_port=35859 --enable_each_rank_log=None main.py --deepspeed deepspeed.json --do_train --train_file AdvertiseGen/train.json --test_file AdvertiseGen/dev.json --prompt_column content --response_column summary --overwrite_cache --model_name_or_path ../THUDM/chatglm-6b --output_dir ./output/adgen-chatglm-6b-ft-1e-4 --overwrite_output_dir --max_source_length 64 --max_target_length 64 --per_device_train_batch_size 4 --per_device_eval_batch_size 1 --gradient_accumulation_steps 1 --predict_with_generate --max_steps 5000 --logging_steps 10 --save_steps 1000 --learning_rate 1e-4 --fp16

[2023-04-20 13:05:00,282] [INFO] [launch.py:229:main] WORLD INFO DICT: {'localhost': [0]}

[2023-04-20 13:05:00,282] [INFO] [launch.py:235:main] nnodes=1, num_local_procs=1, node_rank=0

[2023-04-20 13:05:00,282] [INFO] [launch.py:246:main] global_rank_mapping=defaultdict(<class 'list'>, {'localhost': [0]})

[2023-04-20 13:05:00,282] [INFO] [launch.py:247:main] dist_world_size=1

[2023-04-20 13:05:00,282] [INFO] [launch.py:249:main] Setting CUDA_VISIBLE_DEVICES=0

[2023-04-20 13:05:05,024] [INFO] [comm.py:586:init_distributed] Initializing TorchBackend in DeepSpeed with backend nccl

04/20/2023 13:05:05 - WARNING - __main__ - Process rank: 0, device: cuda:0, n_gpu: 1distributed training: True, 16-bits training: True

04/20/2023 13:05:05 - INFO - __main__ - Training/evaluation parameters Seq2SeqTrainingArguments(

_n_gpu=1,

adafactor=False,

adam_beta1=0.9,

adam_beta2=0.999,

adam_epsilon=1e-08,

auto_find_batch_size=False,

bf16=False,

bf16_full_eval=False,

data_seed=None,

dataloader_drop_last=False,

dataloader_num_workers=0,

dataloader_pin_memory=True,

ddp_bucket_cap_mb=None,

ddp_find_unused_parameters=None,

ddp_timeout=1800,

debug=[],

deepspeed=deepspeed.json,

disable_tqdm=False,

do_eval=False,

do_predict=False,

do_train=True,

eval_accumulation_steps=None,

eval_delay=0,

eval_steps=None,

evaluation_strategy=no,

fp16=True,

fp16_backend=auto,

fp16_full_eval=False,

fp16_opt_level=O1,

fsdp=[],

fsdp_config={'fsdp_min_num_params': 0, 'xla': False, 'xla_fsdp_grad_ckpt': False},

fsdp_min_num_params=0,

fsdp_transformer_layer_cls_to_wrap=None,

full_determinism=False,

generation_config=None,

generation_max_length=None,

generation_num_beams=None,

gradient_accumulation_steps=1,

gradient_checkpointing=False,

greater_is_better=None,

group_by_length=False,

half_precision_backend=auto,

hub_model_id=None,

hub_private_repo=False,

hub_strategy=every_save,

hub_token=<HUB_TOKEN>,

ignore_data_skip=False,

include_inputs_for_metrics=False,

jit_mode_eval=False,

label_names=None,

label_smoothing_factor=0.0,

learning_rate=0.0001,

length_column_name=length,

load_best_model_at_end=False,

local_rank=0,

log_level=passive,

log_level_replica=warning,

log_on_each_node=True,

logging_dir=./output/adgen-chatglm-6b-ft-1e-4/runs/Apr20_13-05-05_VM-245-24-centos,

logging_first_step=False,

logging_nan_inf_filter=True,

logging_steps=10,

logging_strategy=steps,

lr_scheduler_type=linear,

max_grad_norm=1.0,

max_steps=5000,

metric_for_best_model=None,

mp_parameters=,

no_cuda=False,

num_train_epochs=3.0,

optim=adamw_hf,

optim_args=None,

output_dir=./output/adgen-chatglm-6b-ft-1e-4,

overwrite_output_dir=True,

past_index=-1,

per_device_eval_batch_size=1,

per_device_train_batch_size=4,

predict_with_generate=True,

prediction_loss_only=False,

push_to_hub=False,

push_to_hub_model_id=None,

push_to_hub_organization=None,

push_to_hub_token=<PUSH_TO_HUB_TOKEN>,

ray_scope=last,

remove_unused_columns=True,

report_to=[],

resume_from_checkpoint=None,

run_name=./output/adgen-chatglm-6b-ft-1e-4,

save_on_each_node=False,

save_safetensors=False,

save_steps=1000,

save_strategy=steps,

save_total_limit=None,

seed=42,

sharded_ddp=[],

skip_memory_metrics=True,

sortish_sampler=False,

tf32=None,

torch_compile=False,

torch_compile_backend=None,

torch_compile_mode=None,

torchdynamo=None,

tpu_metrics_debug=False,

tpu_num_cores=None,

use_ipex=False,

use_legacy_prediction_loop=False,

use_mps_device=False,

warmup_ratio=0.0,

warmup_steps=0,

weight_decay=0.0,

xpu_backend=None,

)

04/20/2023 13:05:06 - WARNING - datasets.builder - Found cached dataset json (/root/.cache/huggingface/datasets/json/default-dbfa988cf4fa1ea5/0.0.0/fe5dd6ea2639a6df622901539cb550cf8797e5a6b2dd7af1cf934bed8e233e6e)

100%|████████████████████████████████████████████████████████████████████████████████████| 2/2 [00:00<00:00, 535.09it/s]

[INFO|configuration_utils.py:666] 2023-04-20 13:05:06,079 >> loading configuration file ../THUDM/chatglm-6b/config.json

[WARNING|configuration_auto.py:925] 2023-04-20 13:05:06,079 >> Explicitly passing a `revision` is encouraged when loading a configuration with custom code to ensure no malicious code has been contributed in a newer revision.

[INFO|configuration_utils.py:666] 2023-04-20 13:05:06,082 >> loading configuration file ../THUDM/chatglm-6b/config.json

[INFO|configuration_utils.py:720] 2023-04-20 13:05:06,083 >> Model config ChatGLMConfig {

"_name_or_path": "../THUDM/chatglm-6b",

"architectures": [

"ChatGLMModel"

],

"auto_map": {

"AutoConfig": "configuration_chatglm.ChatGLMConfig",

"AutoModel": "modeling_chatglm.ChatGLMForConditionalGeneration",

"AutoModelForSeq2SeqLM": "modeling_chatglm.ChatGLMForConditionalGeneration"

},

"bos_token_id": 130004,

"eos_token_id": 130005,

"gmask_token_id": 130001,

"hidden_size": 4096,

"inner_hidden_size": 16384,

"layernorm_epsilon": 1e-05,

"mask_token_id": 130000,

"max_sequence_length": 2048,

"model_type": "chatglm",

"num_attention_heads": 32,

"num_layers": 28,

"pad_token_id": 3,

"position_encoding_2d": true,

"pre_seq_len": null,

"prefix_projection": false,

"quantization_bit": 0,

"torch_dtype": "float16",

"transformers_version": "4.28.0",

"use_cache": true,

"vocab_size": 130528

}

[WARNING|tokenization_auto.py:675] 2023-04-20 13:05:06,083 >> Explicitly passing a `revision` is encouraged when loading a model with custom code to ensure no malicious code has been contributed in a newer revision.

[INFO|tokenization_utils_base.py:1807] 2023-04-20 13:05:06,085 >> loading file ice_text.model

[INFO|tokenization_utils_base.py:1807] 2023-04-20 13:05:06,086 >> loading file added_tokens.json

[INFO|tokenization_utils_base.py:1807] 2023-04-20 13:05:06,086 >> loading file special_tokens_map.json

[INFO|tokenization_utils_base.py:1807] 2023-04-20 13:05:06,086 >> loading file tokenizer_config.json

[WARNING|auto_factory.py:456] 2023-04-20 13:05:06,345 >> Explicitly passing a `revision` is encouraged when loading a model with custom code to ensure no malicious code has been contributed in a newer revision.

[INFO|modeling_utils.py:2531] 2023-04-20 13:05:06,365 >> loading weights file ../THUDM/chatglm-6b/pytorch_model.bin.index.json

[INFO|configuration_utils.py:575] 2023-04-20 13:05:06,366 >> Generate config GenerationConfig {

"_from_model_config": true,

"bos_token_id": 130004,

"eos_token_id": 130005,

"pad_token_id": 3,

"transformers_version": "4.28.0"

}

Loading checkpoint shards: 100%|██████████████████████████████████████████████████████████| 8/8 [00:06<00:00, 1.17it/s]

[INFO|modeling_utils.py:3190] 2023-04-20 13:05:13,287 >> All model checkpoint weights were used when initializing ChatGLMForConditionalGeneration.

[INFO|modeling_utils.py:3198] 2023-04-20 13:05:13,288 >> All the weights of ChatGLMForConditionalGeneration were initialized from the model checkpoint at ../THUDM/chatglm-6b.

If your task is similar to the task the model of the checkpoint was trained on, you can already use ChatGLMForConditionalGeneration for predictions without further training.

[INFO|modeling_utils.py:2839] 2023-04-20 13:05:13,292 >> Generation config file not found, using a generation config created from the model config.

input_ids [5, 65421, 61, 67329, 32, 98339, 61, 72043, 32, 65347, 61, 70872, 32, 69768, 61, 68944, 32, 67329, 64103, 61, 96914, 130001, 130004, 5, 87052, 96914, 81471, 64562, 65759, 64493, 64988, 6, 65840, 65388, 74531, 63825, 75786, 64009, 63823, 65626, 63882, 64619, 65388, 6, 64480, 65604, 85646, 110945, 10, 64089, 65966, 87052, 67329, 65544, 6, 71964, 70533, 64417, 63862, 89978, 63991, 63823, 77284, 88473, 64219, 63848, 112012, 6, 71231, 65099, 71252, 66800, 85768, 64566, 64338, 100323, 75469, 63823, 117317, 64218, 64257, 64051, 74197, 6, 63893, 130005, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3]

inputs 类型#裤*版型#宽松*风格#性感*图案#线条*裤型#阔腿裤 宽松的阔腿裤这两年真的吸粉不少,明星时尚达人的心头爱。毕竟好穿时尚,谁都能穿出腿长2米的效果宽松的裤腿,当然是遮肉小能手啊。上身随性自然不拘束,面料亲肤舒适贴身体验感棒棒哒。系带部分增加设计看点,还

label_ids [-100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, 130004, 5, 87052, 96914, 81471, 64562, 65759, 64493, 64988, 6, 65840, 65388, 74531, 63825, 75786, 64009, 63823, 65626, 63882, 64619, 65388, 6, 64480, 65604, 85646, 110945, 10, 64089, 65966, 87052, 67329, 65544, 6, 71964, 70533, 64417, 63862, 89978, 63991, 63823, 77284, 88473, 64219, 63848, 112012, 6, 71231, 65099, 71252, 66800, 85768, 64566, 64338, 100323, 75469, 63823, 117317, 64218, 64257, 64051, 74197, 6, 63893, 130005, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100]

labels 宽松的阔腿裤这两年真的吸粉不少,明星时尚达人的心头爱。毕竟好穿时尚,谁都能穿出腿长2米的效果宽松的裤腿,当然是遮肉小能手啊。上身随性自然不拘束,面料亲肤舒适贴身体验感棒棒哒。系带部分增加设计看点,还

/data/chatgml2/ChatGLM-6B/venv/lib64/python3.9/site-packages/transformers/optimization.py:391: FutureWarning: This implementation of AdamW is deprecated and will be removed in a future version. Use the PyTorch implementation torch.optim.AdamW instead, or set `no_deprecation_warning=True` to disable this warning

warnings.warn(

[2023-04-20 13:07:02,968] [INFO] [logging.py:96:log_dist] [Rank 0] DeepSpeed info: version=0.9.0, git-hash=unknown, git-branch=unknown

[2023-04-20 13:07:07,408] [INFO] [logging.py:96:log_dist] [Rank 0] DeepSpeed Flops Profiler Enabled: False

[2023-04-20 13:07:07,409] [INFO] [logging.py:96:log_dist] [Rank 0] Removing param_group that has no 'params' in the client Optimizer

[2023-04-20 13:07:07,409] [INFO] [logging.py:96:log_dist] [Rank 0] Using client Optimizer as basic optimizer

[2023-04-20 13:07:07,426] [INFO] [logging.py:96:log_dist] [Rank 0] DeepSpeed Basic Optimizer = AdamW

[2023-04-20 13:07:07,426] [INFO] [utils.py:51:is_zero_supported_optimizer] Checking ZeRO support for optimizer=AdamW type=<class 'transformers.optimization.AdamW'>

[2023-04-20 13:07:07,426] [WARNING] [engine.py:1118:_do_optimizer_sanity_check] **** You are using ZeRO with an untested optimizer, proceed with caution *****

[2023-04-20 13:07:07,426] [INFO] [logging.py:96:log_dist] [Rank 0] Creating torch.float16 ZeRO stage 2 optimizer

[2023-04-20 13:07:07,426] [INFO] [stage_1_and_2.py:133:__init__] Reduce bucket size 500000000

[2023-04-20 13:07:07,426] [INFO] [stage_1_and_2.py:134:__init__] Allgather bucket size 500000000

[2023-04-20 13:07:07,426] [INFO] [stage_1_and_2.py:135:__init__] CPU Offload: False

[2023-04-20 13:07:07,426] [INFO] [stage_1_and_2.py:136:__init__] Round robin gradient partitioning: False

Using /root/.cache/torch_extensions/py39_cu117 as PyTorch extensions root...

Emitting ninja build file /root/.cache/torch_extensions/py39_cu117/utils/build.ninja...

Building extension module utils...

Allowing ninja to set a default number of workers... (overridable by setting the environment variable MAX_JOBS=N)

1.11.1.git.kitware.jobserver-1

Loading extension module utils...

Time to load utils op: 0.2386620044708252 seconds

Traceback (most recent call last):

File "/data/chatgml2/ChatGLM-6B/ptuning/main.py", line 434, in <module>

main()

File "/data/chatgml2/ChatGLM-6B/ptuning/main.py", line 373, in main

train_result = trainer.train(resume_from_checkpoint=checkpoint)

File "/data/chatgml2/ChatGLM-6B/ptuning/trainer.py", line 1636, in train

return inner_training_loop(

File "/data/chatgml2/ChatGLM-6B/ptuning/trainer.py", line 1705, in _inner_training_loop

deepspeed_engine, optimizer, lr_scheduler = deepspeed_init(

File "/data/chatgml2/ChatGLM-6B/venv/lib64/python3.9/site-packages/transformers/deepspeed.py", line 378, in deepspeed_init

deepspeed_engine, optimizer, _, lr_scheduler = deepspeed.initialize(**kwargs)

File "/data/chatgml2/ChatGLM-6B/venv/lib64/python3.9/site-packages/deepspeed/__init__.py", line 156, in initialize

engine = DeepSpeedEngine(args=args,

File "/data/chatgml2/ChatGLM-6B/venv/lib64/python3.9/site-packages/deepspeed/runtime/engine.py", line 328, in __init__

self._configure_optimizer(optimizer, model_parameters)

File "/data/chatgml2/ChatGLM-6B/venv/lib64/python3.9/site-packages/deepspeed/runtime/engine.py", line 1187, in _configure_optimizer

self.optimizer = self._configure_zero_optimizer(basic_optimizer)

File "/data/chatgml2/ChatGLM-6B/venv/lib64/python3.9/site-packages/deepspeed/runtime/engine.py", line 1418, in _configure_zero_optimizer

optimizer = DeepSpeedZeroOptimizer(

File "/data/chatgml2/ChatGLM-6B/venv/lib64/python3.9/site-packages/deepspeed/runtime/zero/stage_1_and_2.py", line 346, in __init__

self.single_partition_of_fp32_groups.append(self.parallel_partitioned_bit16_groups[i][partition_id].to(

torch.cuda.OutOfMemoryError: CUDA out of memory. Tried to allocate 11.50 GiB (GPU 0; 22.38 GiB total capacity; 11.50 GiB already allocated; 10.31 GiB free; 11.50 GiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF

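I suspect the DeepSpeed parameter settings are the problem. To regain some confidence first, let's check whether the earlier approach, fine-tuning ChatGLM-6B with P-Tuning v2, still works.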
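A back-of-the-envelope estimate suggests the failure may be less about a wrong setting and more about capacity. The sketch below takes the 11.50 GiB that the log reports as already allocated to be the fp16 weights (2 bytes per parameter) and applies the commonly cited 16 bytes per parameter for mixed-precision Adam training (fp16 weights and gradients, plus fp32 master weights, momentum and variance). Both are assumptions of this example, and activations and temporary buffers are ignored.

```python
GIB = 1024 ** 3

# Bytes per parameter for mixed-precision training with Adam (assumed).
BYTES_PER_PARAM = {
    "fp16 weights": 2,
    "fp16 gradients": 2,
    "fp32 master weights": 4,
    "fp32 Adam momentum": 4,
    "fp32 Adam variance": 4,
}


def params_from_fp16_gib(gib):
    """Parameter count implied by an fp16 weight footprint in GiB."""
    return int(gib * GIB / 2)


def training_footprint_gib(num_params):
    """Per-component memory in GiB for the given parameter count."""
    return {
        name: num_params * nbytes / GIB
        for name, nbytes in BYTES_PER_PARAM.items()
    }


num_params = params_from_fp16_gib(11.50)  # figure taken from the OOM message
footprint = training_footprint_gib(num_params)
total_gib = sum(footprint.values())

for name, gib in footprint.items():
    print(f"{name:22s} {gib:6.2f} GiB")
print(f"{'total':22s} {total_gib:6.2f} GiB vs 22.38 GiB on the P40")
```

Under these assumptions the total comes to about 92 GiB for roughly 6.2 billion parameters. ZeRO stage 2 partitions gradients and optimizer state across data-parallel ranks, but with `dist_world_size=1` there is nothing to split across, and the log also shows `CPU Offload: False`, so everything has to fit on the single 22.38 GiB card.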

(venv) [root@VM-245-24-centos ptuning]# bash train.sh

04/20/2023 13:09:39 - WARNING - __main__ - Process rank: -1, device: cuda:0, n_gpu: 1distributed training: False, 16-bits training: False

04/20/2023 13:09:39 - INFO - __main__ - Training/evaluation parameters Seq2SeqTrainingArguments(

_n_gpu=1,

adafactor=False,

adam_beta1=0.9,

adam_beta2=0.999,

adam_epsilon=1e-08,

auto_find_batch_size=False,

bf16=False,

bf16_full_eval=False,

data_seed=None,

dataloader_drop_last=False,

dataloader_num_workers=0,

dataloader_pin_memory=True,

ddp_bucket_cap_mb=None,

ddp_find_unused_parameters=None,

ddp_timeout=1800,

debug=[],

deepspeed=None,

disable_tqdm=False,

do_eval=False,

do_predict=False,

do_train=True,

eval_accumulation_steps=None,

eval_delay=0,

eval_steps=None,

evaluation_strategy=no,

fp16=False,

fp16_backend=auto,

fp16_full_eval=False,

fp16_opt_level=O1,

fsdp=[],

fsdp_config={'fsdp_min_num_params': 0, 'xla': False, 'xla_fsdp_grad_ckpt': False},

fsdp_min_num_params=0,

fsdp_transformer_layer_cls_to_wrap=None,

full_determinism=False,

generation_config=None,

generation_max_length=None,

generation_num_beams=None,

gradient_accumulation_steps=16,

gradient_checkpointing=False,

greater_is_better=None,

group_by_length=False,

half_precision_backend=auto,

hub_model_id=None,

hub_private_repo=False,

hub_strategy=every_save,

hub_token=<HUB_TOKEN>,

ignore_data_skip=False,

include_inputs_for_metrics=False,

jit_mode_eval=False,

label_names=None,

label_smoothing_factor=0.0,

learning_rate=0.02,

length_column_name=length,

load_best_model_at_end=False,

local_rank=-1,

log_level=passive,

log_level_replica=warning,

log_on_each_node=True,

logging_dir=output/adgen-chatglm-6b-pt-128-2e-2/runs/Apr20_13-09-39_VM-245-24-centos,

logging_first_step=False,

logging_nan_inf_filter=True,

logging_steps=10,

logging_strategy=steps,

lr_scheduler_type=linear,

max_grad_norm=1.0,

max_steps=3000,

metric_for_best_model=None,

mp_parameters=,

no_cuda=False,

num_train_epochs=3.0,

optim=adamw_hf,

optim_args=None,

output_dir=output/adgen-chatglm-6b-pt-128-2e-2,

overwrite_output_dir=True,

past_index=-1,

per_device_eval_batch_size=1,

per_device_train_batch_size=4,

predict_with_generate=True,

prediction_loss_only=False,

push_to_hub=False,

push_to_hub_model_id=None,

push_to_hub_organization=None,

push_to_hub_token=<PUSH_TO_HUB_TOKEN>,

ray_scope=last,

remove_unused_columns=True,

report_to=[],

resume_from_checkpoint=None,

run_name=output/adgen-chatglm-6b-pt-128-2e-2,

save_on_each_node=False,

save_safetensors=False,

save_steps=1000,

save_strategy=steps,

save_total_limit=None,

seed=42,

sharded_ddp=[],

skip_memory_metrics=True,

sortish_sampler=False,

tf32=None,

torch_compile=False,

torch_compile_backend=None,

torch_compile_mode=None,

torchdynamo=None,

tpu_metrics_debug=False,

tpu_num_cores=None,

use_ipex=False,

use_legacy_prediction_loop=False,

use_mps_device=False,

warmup_ratio=0.0,

warmup_steps=0,

weight_decay=0.0,

xpu_backend=None,

)

Downloading and preparing dataset json/default to /root/.cache/huggingface/datasets/json/default-d4e829b82a89c7e1/0.0.0/fe5dd6ea2639a6df622901539cb550cf8797e5a6b2dd7af1cf934bed8e233e6e...

Downloading data files: 100%|███████████████████████████████████████████████████████████| 2/2 [00:00<00:00, 9187.96it/s]

Extracting data files: 100%|████████████████████████████████████████████████████████████| 2/2 [00:00<00:00, 1529.93it/s]

Dataset json downloaded and prepared to /root/.cache/huggingface/datasets/json/default-d4e829b82a89c7e1/0.0.0/fe5dd6ea2639a6df622901539cb550cf8797e5a6b2dd7af1cf934bed8e233e6e. Subsequent calls will reuse this data.

100%|████████████████████████████████████████████████████████████████████████████████████| 2/2 [00:00<00:00, 537.63it/s]

[INFO|configuration_utils.py:666] 2023-04-20 13:09:41,765 >> loading configuration file ../THUDM/chatglm-6b/config.json

[WARNING|configuration_auto.py:925] 2023-04-20 13:09:41,766 >> Explicitly passing a `revision` is encouraged when loading a configuration with custom code to ensure no malicious code has been contributed in a newer revision.

[INFO|configuration_utils.py:666] 2023-04-20 13:09:41,768 >> loading configuration file ../THUDM/chatglm-6b/config.json

[INFO|configuration_utils.py:720] 2023-04-20 13:09:41,769 >> Model config ChatGLMConfig {

"_name_or_path": "../THUDM/chatglm-6b",

"architectures": [

"ChatGLMModel"

],

"auto_map": {

"AutoConfig": "configuration_chatglm.ChatGLMConfig",

"AutoModel": "modeling_chatglm.ChatGLMForConditionalGeneration",

"AutoModelForSeq2SeqLM": "modeling_chatglm.ChatGLMForConditionalGeneration"

},

"bos_token_id": 130004,

"eos_token_id": 130005,

"gmask_token_id": 130001,

"hidden_size": 4096,

"inner_hidden_size": 16384,

"layernorm_epsilon": 1e-05,

"mask_token_id": 130000,

"max_sequence_length": 2048,

"model_type": "chatglm",

"num_attention_heads": 32,

"num_layers": 28,

"pad_token_id": 3,

"position_encoding_2d": true,

"pre_seq_len": null,

"prefix_projection": false,

"quantization_bit": 0,

"torch_dtype": "float16",

"transformers_version": "4.28.0",

"use_cache": true,

"vocab_size": 130528

}

[WARNING|tokenization_auto.py:675] 2023-04-20 13:09:41,769 >> Explicitly passing a `revision` is encouraged when loading a model with custom code to ensure no malicious code has been contributed in a newer revision.

[INFO|tokenization_utils_base.py:1807] 2023-04-20 13:09:41,772 >> loading file ice_text.model

[INFO|tokenization_utils_base.py:1807] 2023-04-20 13:09:41,772 >> loading file added_tokens.json

[INFO|tokenization_utils_base.py:1807] 2023-04-20 13:09:41,772 >> loading file special_tokens_map.json

[INFO|tokenization_utils_base.py:1807] 2023-04-20 13:09:41,772 >> loading file tokenizer_config.json

[WARNING|auto_factory.py:456] 2023-04-20 13:09:42,044 >> Explicitly passing a `revision` is encouraged when loading a model with custom code to ensure no malicious code has been contributed in a newer revision.

[INFO|modeling_utils.py:2531] 2023-04-20 13:09:42,065 >> loading weights file ../THUDM/chatglm-6b/pytorch_model.bin.index.json

[INFO|configuration_utils.py:575] 2023-04-20 13:09:42,066 >> Generate config GenerationConfig {

"_from_model_config": true,

"bos_token_id": 130004,

"eos_token_id": 130005,

"pad_token_id": 3,

"transformers_version": "4.28.0"

}

Loading checkpoint shards: 100%|██████████████████████████████████████████████████████████| 8/8 [00:06<00:00, 1.17it/s]

[INFO|modeling_utils.py:3190] 2023-04-20 13:09:49,180 >> All model checkpoint weights were used when initializing ChatGLMForConditionalGeneration.

[WARNING|modeling_utils.py:3192] 2023-04-20 13:09:49,180 >> Some weights of ChatGLMForConditionalGeneration were not initialized from the model checkpoint at ../THUDM/chatglm-6b and are newly initialized: ['transformer.prefix_encoder.embedding.weight']

You should probably TRAIN this model on a down-stream task to be able to use it for predictions and inference.

[INFO|modeling_utils.py:2839] 2023-04-20 13:09:49,185 >> Generation config file not found, using a generation config created from the model config.

Quantized to 4 bit

input_ids [5, 65421, 61, 67329, 32, 98339, 61, 72043, 32, 65347, 61, 70872, 32, 69768, 61, 68944, 32, 67329, 64103, 61, 96914, 130001, 130004, 5, 87052, 96914, 81471, 64562, 65759, 64493, 64988, 6, 65840, 65388, 74531, 63825, 75786, 64009, 63823, 65626, 63882, 64619, 65388, 6, 64480, 65604, 85646, 110945, 10, 64089, 65966, 87052, 67329, 65544, 6, 71964, 70533, 64417, 63862, 89978, 63991, 63823, 77284, 88473, 64219, 63848, 112012, 6, 71231, 65099, 71252, 66800, 85768, 64566, 64338, 100323, 75469, 63823, 117317, 64218, 64257, 64051, 74197, 6, 63893, 130005, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3, 3]

inputs 类型#裤*版型#宽松*风格#性感*图案#线条*裤型#阔腿裤 宽松的阔腿裤这两年真的吸粉不少,明星时尚达人的心头爱。毕竟好穿时尚,谁都能穿出腿长2米的效果宽松的裤腿,当然是遮肉小能手啊。上身随性自然不拘束,面料亲肤舒适贴身体验感棒棒哒。系带部分增加设计看点,还

label_ids [-100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, 130004, 5, 87052, 96914, 81471, 64562, 65759, 64493, 64988, 6, 65840, 65388, 74531, 63825, 75786, 64009, 63823, 65626, 63882, 64619, 65388, 6, 64480, 65604, 85646, 110945, 10, 64089, 65966, 87052, 67329, 65544, 6, 71964, 70533, 64417, 63862, 89978, 63991, 63823, 77284, 88473, 64219, 63848, 112012, 6, 71231, 65099, 71252, 66800, 85768, 64566, 64338, 100323, 75469, 63823, 117317, 64218, 64257, 64051, 74197, 6, 63893, 130005, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100, -100]

labels 宽松的阔腿裤这两年真的吸粉不少,明星时尚达人的心头爱。毕竟好穿时尚,谁都能穿出腿长2米的效果宽松的裤腿,当然是遮肉小能手啊。上身随性自然不拘束,面料亲肤舒适贴身体验感棒棒哒。系带部分增加设计看点,还

/data/chatgml2/ChatGLM-6B/venv/lib64/python3.9/site-packages/transformers/optimization.py:391: FutureWarning: This implementation of AdamW is deprecated and will be removed in a future version. Use the PyTorch implementation torch.optim.AdamW instead, or set `no_deprecation_warning=True` to disable this warning

warnings.warn(

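0%| | 0/3000 [00:00

OK, at this point it is clear that the earlier method still works. For now the conclusion is that this environment cannot keep up and a single machine with one P40 cannot run the DeepSpeed full fine-tuning, or else its parameters need a closer look. Later I will find a bigger machine and see how DeepSpeed parallel fine-tuning performs.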

If you have read this far and know how to make it work, or if I got something wrong, please leave a comment so we can discuss it.