黑马程序员--分布式搜索ElasticSearch学习笔记

写在最前

- 黑马视频地址:https://www.bilibili.com/video/BV1LQ4y127n4/

- 想获得最佳的阅读体验,请移步至我的个人博客

- SpringCloud学习笔记

- 消息队列MQ学习笔记

- Docker学习笔记

- 分布式搜索ElasticSearch学习笔记

初识ElasticSearch

了解ES

ElasticSearch的作用

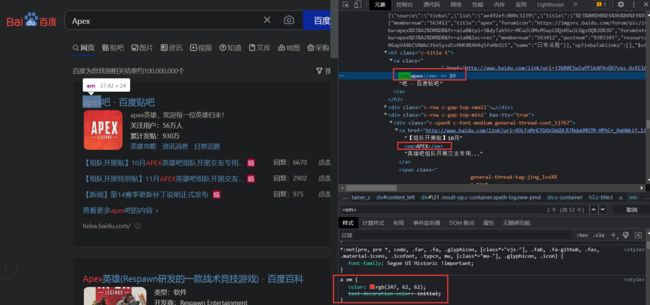

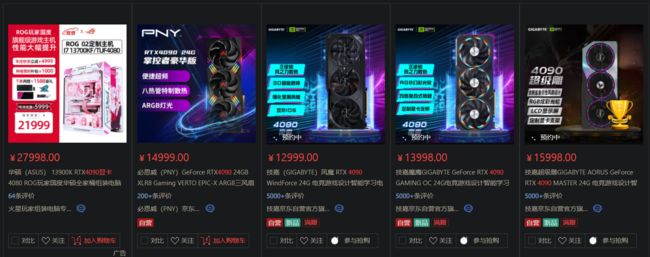

ElasticSearch是一款非常强大的开源搜素引擎,具备非常强大的功能,可以帮助我们从海量数据中快速找到需要的内容- 例如在电商平台搜索商品,搜索

4090显卡会以红色标识

- 在搜索引擎搜索答案,搜索到的内容同样会以红色标识,也可以实现搜索时的自动补全功能

ELK技术栈

ElasticSearch结合kibana、Logstash、Beats,也就是elastic stack(ELK)。被广泛应用在日志数据分析、实时监控等领域

- 而

ElasticSearch是elastic stack的核心,负责存储、搜索、分析数据

ElasticSearch和Lucene

-

ElasticSearch底层是基于Lucene来实现的

-

Lucene是一个Java语言的搜索引擎类库,是Apache公司的顶级项目,由DougCutting于1999年研发,官网地址:https://lucene.apache.org/

-

Lucene的优势

- 易扩展

- 高性能(基于倒排索引)

-

Lucene的缺点

- 只限于Java语言开发

- 学习曲线陡峭

- 不支持水平扩展

-

ElasticSearch的发展史

- 2004年,Shay Banon基于Lucene开发了Compass

- 2010年,Shay Banon重写了Compass,取名为ElasticSearch,官网地址:https://www.elastic.co/cnl/

-

相比于Lucene,ElasticSearch具备以下优势

- 支持分布式,可水平扩展

- 提供Restful接口,可以被任意语言调用

总结

- 什么是ElasticSearch?

- 一个开源的分布式搜索引擎,可以用来实现搜索、日志统计、分析、系统监控等功能

- 什么是Elastic Stack(ELK)?

- 它是以ElasticSearch为核心的技术栈,包括beats、Logstash、kibana、elasticsearch

- 什么是Lucene?

- 是Apache的开源搜索引擎类库,提供了搜索引擎的核心API

倒排索引

- 倒排索引的概念是基于MySQL这样的正向索引而言的

正向索引

- 为了搞明白什么是倒排索引,我们先来看看什么是正向索引,例如给下表中的id创建索引

| id | title | price |

|---|---|---|

| 1 | 小米手机 | 3499 |

| 2 | 华为手机 | 4999 |

| 3 | 华为小米充电器 | 49 |

| 4 | 小米手环 | 49 |

- 如果是基于id查询,那么直接走索引,查询速度非常快。

- 但是实际应用里,用户并不知道每一个商品的id,他们只知道title(商品名称),所以对于用户的查询方式,是基于title(商品名称)做模糊查询,只能是逐行扫描数据

select id, title, price from tb_goods where title like %手机%

- 具体流程如下

- 用户搜索数据,搜索框输入手机,那么条件就是title符合

%手机% - 逐行获取数据

- 判断数据中的title是否符合用户搜索条件

- 如果符合,则放入结果集,不符合则丢弃

- 用户搜索数据,搜索框输入手机,那么条件就是title符合

- 逐行扫描,也就是全表扫描,随着数据量的增加,其查询效率也会越来越低。当数据量达到百万时,这将是一场灾难

倒排索引

- 倒排索引中有两个非常重要的概念

- 文档(Document):用来搜索的数据,其中的每一条数据就是一个文档。例如一个网页、一个商品信息

- 词条(Term):对文档数据或用户搜索数据,利用某种算法分词,得到的具备含义的词语就是词条。例如:我最喜欢的FPS游戏是Apex,就可以分为我、我最喜欢、FPS游戏、最喜欢的FPS、Apex这样的几个词条

- 创建倒排索引是对正向索引的一种特殊处理,流程如下

- 将每一个文档的数据利用算法分词,得到一个个词条

- 创建表,每行数据包括词条、词条所在文档id、位置等信息

- 因为词条唯一性,可以给词条创建索引,例如hash表结构索引

| 词条(term) | 文档id |

|---|---|

| 小米 | 1,3,4 |

| 手机 | 1,2 |

| 华为 | 2,3 |

| 充电器 | 3 |

| 手环 | 4 |

- 以搜索

华为手机为例- 用户输入条件

华为手机,进行搜索。 - 对用户输入的内容分词,得到词条:华为、手机。

- 拿着词条在倒排索引中查找,可以得到包含词条的文档id为:1、2、3。

- 拿着文档id到正向索引中查找具体文档

- 用户输入条件

- 虽然要先查询倒排索引,再查询正向索引,但是无论是词条还是文档id,都建立了索引,所以查询速度非常快,无需全表扫描

正向和倒排

- 那么为什么一个叫做正向索引,一个叫做倒排索引呢?

正向索引是最传统的,根据id索引的方式。但是根据词条查询是,必须先逐条获取每个文档,然后判断文档中是否包含所需要的词条,是根据文档查找词条的过程- 而

倒排索引则相反,是先找到用户要搜索的词条,然后根据词条得到包含词条的文档id,然后根据文档id获取文档,是根据词条查找文档的过程

- 那么二者的优缺点各是什么呢?

正向索引- 优点:可以给多个字段创建索引,根据索引字段搜索、排序速度非常快

- 缺点:根据非索引字段,或者索引字段中的部分词条查找时,只能全表扫描

倒排索引- 优点:根据词条搜索、模糊搜索时,速度非常快

- 缺点:只能给词条创建索引,而不是字段,无法根据字段做排序

ES的一些概念

ElasticSearch中有很多独有的概念,与MySQL中略有差别,但也有相似之处

文档和字段

- ElasticSearch是面向文档(Document)存储的,可以是数据库中的一条商品数据,一个订单信息。文档数据会被序列化为json格式后存储在ElasticSearch中

{

"id": 1,

"title": "小米手机",

"price": 3499

}

{

"id": 2,

"title": "华为手机",

"price": 4999

}

{

"id": 3,

"title": "华为小米充电器",

"price": 49

}

{

"id": 4,

"title": "小米手环",

"price ": 299

}

- 而Json文档中往往包含很多的字段(Field),类似于数据库中的列

索引和映射

-

索引(Index),就是相同类型的文档的集合

-

例如

- 所有用户文档,可以组织在一起,成为用户的索引

{ "id": 101, "name": "张三", "age": 39 } { "id": 102, "name": "李四", "age": 49 } { "id": 103, "name": "王五", "age": 69 }- 所有商品的文档,可以组织在一起,称为商品的索引

{ "id": 1, "title": "小米手机", "price": 3499 } { "id": 2, "title": "华为手机", "price": 4999 } { "id": 3, "title": "苹果手机", "price": 6999 }- 所有订单的文档,可以组织在一起,称为订单的索引

{ "id": 11, "userId": 101, "goodsId": 1, "totalFee": 3999 } { "id": 12, "userId": 102, "goodsId": 2, "totalFee": 4999 } { "id": 13, "userId": 103, "goodsId": 3, "totalFee": 6999 } -

因此,我们可以把索引当做是数据库中的表

-

数据库的表会有约束信息,用来定义表的结构、字段的名称、类型等信息。因此,索引库就有

映射(mapping),是索引中文档的字段约束信息,类似于表的结构约束

MySQL与ElasticSearch

- 我们统一的把MySQL和ElasticSearch的概念做一下对比

| MySQL | Elasticsearch | 说明 |

|---|---|---|

| Table | Index | 索引(index),就是文档的集合,类似数据库的表(Table) |

| Row | Document | 文档(Document),就是一条条的数据,类似数据库中的行(Row),文档都是JSON格式 |

| Column | Field | 字段(Field),就是JSON文档中的字段,类似数据库中的列(Column) |

| Schema | Mapping | Mapping(映射)是索引中文档的约束,例如字段类型约束。类似数据库的表结构(Schema) |

| SQL | DSL | DSL是elasticsearch提供的JSON风格的请求语句,用来操作elasticsearch,实现CRUD |

-

二者各有自己擅长之处

MySQL:产长事务类型操作,可以保证数据的安全和一致性ElasticSearch:擅长海量数据的搜索、分析、计算

-

因此在企业中,往往是这二者结合使用

安装ES、Kibana

部署单点ES

- 因为我们还需要部署Kibana容器,因此需要让es和kibana容器互联,这里先创建一个网络(使用compose部署可以一键互联,不需要这个步骤,但是将来有可能不需要kbiana,只需要es,所以先这里手动部署单点es)

docker network create es-net

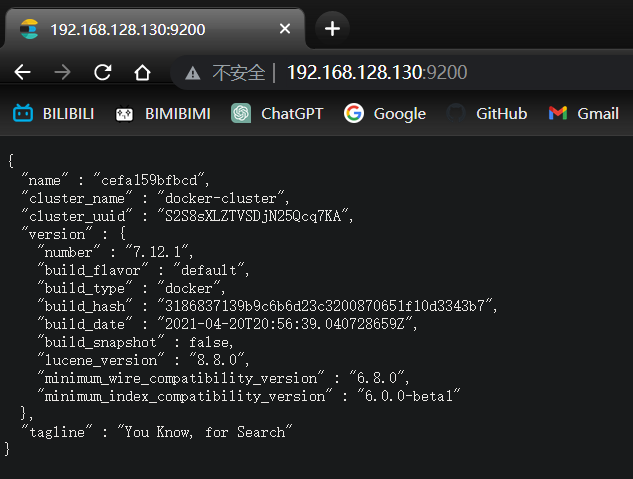

- 拉取镜像,这里采用的是ElasticSearch的7.12.1版本镜像

docker pull elasticsearch:7.12.1

- 运行docker命令,部署单点ES

docker run -d \

--name es \

-e "ES_JAVA_OPTS=-Xms512m -Xmx512m" \

-e "discovery.type=single-node" \

-v es-data:/usr/share/elasticsearch/data \

-v es-plugins:/usr/share/elasticsearch/plugins \

--privileged \

--network es-net \

-p 9200:9200 \

elasticsearch:7.12.1

-

命令解释:

-e "ES_JAVA_OPTS=-Xms512m -Xmx512m":配置JVM的堆内存大小,默认是1G,但是最好不要低于512M-e "discovery.type=single-node":单点部署-v es-data:/usr/share/elasticsearch/data:数据卷挂载,绑定es的数据目录-v es-plugins:/usr/share/elasticsearch/plugins:数据卷挂载,绑定es的插件目录-privileged:授予逻辑卷访问权--network es-net:让ES加入到这个网络当中-p 9200:暴露的HTTP协议端口,供我们用户访问的

-

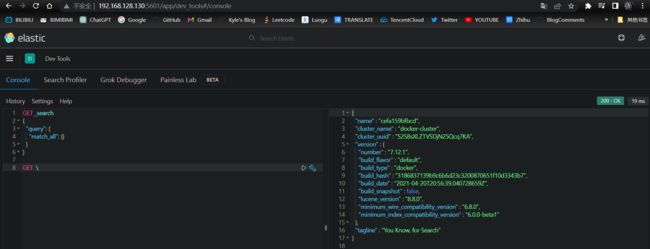

成功启动之后,打开浏览器访问:http://192.168.128.130:9200/, 即可看到elasticsearch的响应结果

部署kibana

- 同样是先拉取镜像,注意版本需要与ES保持一致

docker pull kibana:7.12.1

- 运行docker命令,部署kibana

docker run -d \

--name kibana \

-e ELASTICSEARCH_HOSTS=http://es:9200 \

--network=es-net \

-p 5601:5601 \

kibana:7.12.1

- 命令解释

--network=es-net:让kibana加入es-net这个网络,与ES在同一个网络中-e ELASTICSEARCH_HOSTS=http://es:9200:设置ES的地址,因为kibana和ES在同一个网络,因此可以直接用容器名访问ES-p 5601:5601:端口映射配置

- 成功启动后,打开浏览器访问:http://192.168.128.130:5601/ ,即可以看到结果

DevTools

安装IK分词器

- 默认的分词对中文的支持不是很好,所以这里我们需要安装IK插件

- 在线安装IK插件

# 进入容器内部

docker exec -it elasticsearch /bin/bash

# 在线下载并安装

./bin/elasticsearch-plugin install https://github.com/medcl/elasticsearch-analysis-ik/releases/download/v7.12.1/elasticsearch-analysis-ik-7.12.1.zip

#退出

exit

#重启容器

docker restart elasticsearch

- IK分词器包含两种模式

ik_smart:最少切分ik_max_word:最细切分

- 下面我们分别测试这两种模式

{% tabs 测试两种分词模式 %}

GET /_analyze

{

"analyzer": "ik_smart",

"text": "青春猪头G7人马文不会梦到JK黑丝兔女郎铁驭艾许"

}

- 结果

{

"tokens" : [

{

"token" : "青春",

"start_offset" : 0,

"end_offset" : 2,

"type" : "CN_WORD",

"position" : 0

},

{

"token" : "猪头",

"start_offset" : 2,

"end_offset" : 4,

"type" : "CN_WORD",

"position" : 1

},

{

"token" : "G7",

"start_offset" : 4,

"end_offset" : 6,

"type" : "LETTER",

"position" : 2

},

{

"token" : "人",

"start_offset" : 6,

"end_offset" : 7,

"type" : "COUNT",

"position" : 3

},

{

"token" : "不会",

"start_offset" : 7,

"end_offset" : 9,

"type" : "CN_WORD",

"position" : 4

},

{

"token" : "梦到",

"start_offset" : 9,

"end_offset" : 11,

"type" : "CN_WORD",

"position" : 5

},

{

"token" : "jk",

"start_offset" : 11,

"end_offset" : 13,

"type" : "ENGLISH",

"position" : 6

},

{

"token" : "黑",

"start_offset" : 13,

"end_offset" : 14,

"type" : "CN_CHAR",

"position" : 7

},

{

"token" : "丝",

"start_offset" : 14,

"end_offset" : 15,

"type" : "CN_CHAR",

"position" : 8

},

{

"token" : "兔女郎",

"start_offset" : 15,

"end_offset" : 18,

"type" : "CN_WORD",

"position" : 9

},

{

"token" : "铁",

"start_offset" : 18,

"end_offset" : 19,

"type" : "CN_CHAR",

"position" : 10

},

{

"token" : "驭",

"start_offset" : 19,

"end_offset" : 20,

"type" : "CN_CHAR",

"position" : 11

},

{

"token" : "艾",

"start_offset" : 20,

"end_offset" : 21,

"type" : "CN_CHAR",

"position" : 12

},

{

"token" : "许",

"start_offset" : 21,

"end_offset" : 22,

"type" : "CN_CHAR",

"position" : 13

}

]

}

GET /_analyze

{

"analyzer": "ik_max_word",

"text": "青春猪头G7人马文不会梦到JK黑丝兔女郎铁驭艾许"

}

- 结果

{

"tokens" : [

{

"token" : "青春",

"start_offset" : 0,

"end_offset" : 2,

"type" : "CN_WORD",

"position" : 0

},

{

"token" : "猪头",

"start_offset" : 2,

"end_offset" : 4,

"type" : "CN_WORD",

"position" : 1

},

{

"token" : "G7",

"start_offset" : 4,

"end_offset" : 6,

"type" : "LETTER",

"position" : 2

},

{

"token" : "G",

"start_offset" : 4,

"end_offset" : 5,

"type" : "ENGLISH",

"position" : 3

},

{

"token" : "7",

"start_offset" : 5,

"end_offset" : 6,

"type" : "ARABIC",

"position" : 4

},

{

"token" : "人马",

"start_offset" : 6,

"end_offset" : 8,

"type" : "CN_WORD",

"position" : 5

},

{

"token" : "人",

"start_offset" : 6,

"end_offset" : 7,

"type" : "COUNT",

"position" : 6

},

{

"token" : "马文",

"start_offset" : 7,

"end_offset" : 9,

"type" : "CN_WORD",

"position" : 7

},

{

"token" : "不会",

"start_offset" : 9,

"end_offset" : 11,

"type" : "CN_WORD",

"position" : 8

},

{

"token" : "梦到",

"start_offset" : 11,

"end_offset" : 13,

"type" : "CN_WORD",

"position" : 9

},

{

"token" : "jk",

"start_offset" : 13,

"end_offset" : 15,

"type" : "ENGLISH",

"position" : 10

},

{

"token" : "黑",

"start_offset" : 15,

"end_offset" : 16,

"type" : "CN_CHAR",

"position" : 11

},

{

"token" : "丝",

"start_offset" : 16,

"end_offset" : 17,

"type" : "CN_CHAR",

"position" : 12

},

{

"token" : "兔女郎",

"start_offset" : 17,

"end_offset" : 20,

"type" : "CN_WORD",

"position" : 13

},

{

"token" : "女郎",

"start_offset" : 18,

"end_offset" : 20,

"type" : "CN_WORD",

"position" : 14

},

{

"token" : "铁",

"start_offset" : 20,

"end_offset" : 21,

"type" : "CN_CHAR",

"position" : 15

},

{

"token" : "驭",

"start_offset" : 21,

"end_offset" : 22,

"type" : "CN_CHAR",

"position" : 16

},

{

"token" : "艾",

"start_offset" : 22,

"end_offset" : 23,

"type" : "CN_CHAR",

"position" : 17

},

{

"token" : "许",

"start_offset" : 23,

"end_offset" : 24,

"type" : "CN_CHAR",

"position" : 18

}

]

}

{% endtabs %}

- 可以看到G7人马文在最少切分时,没有被分为

人马,而在最细切分时,被分为了人马,而且目前现在识别不了黑丝、铁驭、艾许等词汇,所以我们需要自己扩展词典

{% note info no-icon %}

随着互联网的发展,造词运动也愈发频繁。出现了许多新词汇,但是在原有的词汇表中并不存在,例如白给、白嫖等

所以我们的词汇也需要不断的更新,IK分词器提供了扩展词汇的功能

{% endnote %}

- 打开IK分词器的config目录

- 找到IKAnalyzer.cfg.xml文件,并添加如下内容

IK Analyzer 扩展配置

ext.dic

stopword.dic

- 在IKAnalyzer.cfg.xml同级目录下新建ext.dic和stopword.dic,并编辑内容

{% tabs 停止词和添加词典 %}

艾许

铁驭

黑丝

兔女郎

{% endtabs %}

4. 重启es

docker restart es

- 测试

{

"tokens" : [

{

"token" : "青春",

"start_offset" : 0,

"end_offset" : 2,

"type" : "CN_WORD",

"position" : 0

},

{

"token" : "猪头",

"start_offset" : 2,

"end_offset" : 4,

"type" : "CN_WORD",

"position" : 1

},

{

"token" : "g7",

"start_offset" : 4,

"end_offset" : 6,

"type" : "LETTER",

"position" : 2

},

{

"token" : "人",

"start_offset" : 6,

"end_offset" : 7,

"type" : "COUNT",

"position" : 3

},

{

"token" : "不会",

"start_offset" : 7,

"end_offset" : 9,

"type" : "CN_WORD",

"position" : 4

},

{

"token" : "梦到",

"start_offset" : 9,

"end_offset" : 11,

"type" : "CN_WORD",

"position" : 5

},

{

"token" : "jk",

"start_offset" : 11,

"end_offset" : 13,

"type" : "ENGLISH",

"position" : 6

},

{

"token" : "铁驭",

"start_offset" : 18,

"end_offset" : 20,

"type" : "CN_WORD",

"position" : 7

},

{

"token" : "艾许",

"start_offset" : 20,

"end_offset" : 22,

"type" : "CN_WORD",

"position" : 8

}

]

}

{% note info no-icon %}

- 分词器的作用是什么?

- 创建倒排索引时对文档分词

- 用户搜索时,对输入的内容分词

- IK分词有几种模式?

- ik_smart:智能切分,粗粒度

- ik_max_word:最细切分,细粒度

- IK分词器如何拓展词条?如何停用词条?

- 利用config目录的IKAnalyzer.cfg.xml文件添加拓展词典和停用词典

- 在词典中添加拓展词条或者停用词条

{% endnote %}

索引库操作

- 索引库就类似于数据库表,mapping映射就类似表的结构

- 我们要向es中存储数据,必须先创建

库和表

mapping映射属性

- mapping是对索引库中文档的约束,常见的mapping属性包括

type:字段数据类型,常见的简单类型有- 字符串:text(可分词文本)、keyword(精确值,例如:品牌、国家、ip地址;因为这些词,分词之后毫无意义)

- 数值:long、integer、short、byte、double、float

- 布尔:boolean

- 日期:date

- 对象:object

index:是否创建索引,默认为true,默认情况下会对所有字段创建倒排索引,即每个字段都可以被搜索。但是某些字段是不存在搜索的意义的,例如邮箱,图片(存储的只是图片url),搜索邮箱或图片url的片段,没有任何意义。因此我们在创建字段映射时,一定要判断一下这个字段是否参与搜索,如果不参与搜索,则将其设置为falseanalyzer:使用哪种分词器properties:该字段的子字段

- 例如下面的json文档

{

"age": 32,

"weight": 48,

"isMarried": false,

"info": "次元游击兵--恶灵",

"email": "[email protected]",

"score": [99.1, 99.5, 98.9],

"name": {

"firstName": "雷尼",

"lastName": "布莱希"

}

}

- 对应的每个字段映射(mapping):

| 字段 | 类型 | index | analyzer |

|---|---|---|---|

| age | integer | true | null |

| weight | float | true | null |

| isMarried | boolean | true | null |

| info | text | true | ik_smart |

| keyword | false | null | |

| score | float | true | null |

| name | object | ||

| name.firstName | keyword | true | null |

| name.lastName | keyword | true | null |

- 其中

score:虽然是数组,但是我们只看其中元素的类型,类型为float;email不参与搜索,所以index为false;info参与搜索,且需要分词,所以需要设置一下分词器

{% note info no-icon %}

小结

- mapping常见属性有哪些?

- type:数据类型

- index:是否创建索引

- analyzer:选择分词器

- properties:子字段

- type常见的有哪些

- 字符串:text、keyword

- 数值:long、integer、short、byte、double、float

- 布尔:boolean

- 日期:date

- 对象:object

{% endnote %}

索引库的CRUD

- 这里是使用的Kibana提供的DevTools编写DSL语句

创建索引库和映射

- 基本语法

- 请求方式:

PUT - 请求路径:

/{索引库名},可以自定义 - 请求参数:

mapping映射

- 请求方式:

- 格式

PUT /{索引库名}

{

"mappings": {

"properties": {

"字段名1": {

"type": "text ",

"analyzer": "standard"

},

"字段名2": {

"type": "text",

"index": true

},

"字段名3": {

"type": "text",

"properties": {

"子字段1": {

"type": "keyword"

},

"子字段2": {

"type": "keyword"

}

}

}

}

}

}

- 示例

PUT /test001

{

"mappings": {

"properties": {

"info": {

"type": "text",

"analyzer": "ik_smart"

},

"email": {

"type": "keyword",

"index": false

},

"name": {

"type": "object",

"properties": {

"firstName": {

"type": "keyword"

},

"lastName": {

"type": "keyword"

}

}

}

}

}

}

查询索引库

- 基本语法

- 请求方式:

GET - 请求路径:

/{索引库名} - 请求参数:

无

- 请求方式:

- 格式:

GET /{索引库名}

- 举例:

GET /test001

修改索引库

- 基本语法

- 请求方式:

PUT - 请求路径:

/{索引库名}/_mapping - 请求参数:

mapping映射

- 请求方式:

- 格式:

PUT /{索引库名}/_mapping

{

"properties": {

"新字段名":{

"type": "integer"

}

}

}

- 倒排索引结构虽然不复杂,但是一旦数据结构改变(比如改变了分词器),就需要重新创建倒排索引,这简直是灾难。因此索引库

一旦创建,就无法修改mapping - 虽然无法修改mapping中已有的字段,但是却允许添加新字段到mapping中,因为不会对倒排索引产生影响

- 示例:

PUT /test001/_mapping

{

"properties": {

"age": {

"type": "integer"

}

}

}

删除索引库

- 基本语法:

- 请求方式:

DELETE - 请求路径:

/{索引库名} - 请求参数:无

- 请求方式:

- 格式

DELETE /{索引库名}

总结

- 索引库操作有哪些?

- 创建索引名:PUT /{索引库名}

- 查询索引库:GET /{索引库名}

- 删除索引库:DELETE /{索引库名}

- 添加字段:PUT /{索引库名}/_mapping

文档操作

新增文档

- 语法

POST /{索引库名}/_doc/{文档id}

{

"字段1": "值1",

"字段2": "值2",

"字段3": {

"子属性1": "值3",

"子属性2": "值4"

},

// ...

}

- 示例

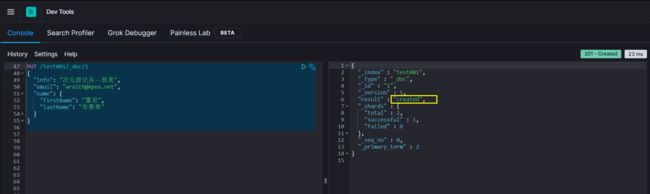

POST /test001/_doc/1

{

"info": "次元游记兵--恶灵",

"email": "[email protected]",

"name": {

"firstName": "雷尼",

"lastName": "布莱希"

}

}

查询文档

- 根据rest风格,新增是post,查询应该是get,而且一般查询都需要条件,这里我们把文档id带上

- 语法

GET /{索引库名}/_doc/{id}

- 示例

GET /test001/_doc/1

删除文档

- 删除使用DELETE请求,同样,需要根据id进行删除

- 语法

DELETE /{索引库名}/_doc/{id}

- 示例:根据id删除数据, 若删除的文档不存在, 则result为not found

DELETE /test001/_doc/1

修改文档

- 修改有两种方式

- 全量修改:直接覆盖原来的文档

- 增量修改:修改文档中的部分字段

全量修改

-

全量修改是覆盖原来的文档,其本质是

- 根据指定的id删除文档

- 新增一个相同id的文档

{% note warning no-icon %}

注意:如果根据id删除时,id不存在,第二步的新增也会执行,也就从修改变成了新增操作了

{% endnote %}

-

语法

PUT /{索引库名}/_doc/{文档id}

{

"字段1": "值1",

"字段2": "值2",

// ... 略

}

- 示例

PUT /test001/_doc/1

{

"info": "爆破专家--暴雷",

"email": "@Apex.net",

"name": {

"firstName": "沃尔特",

"lastName": "菲茨罗伊"

}

}

增量修改

- 增量修改只修改指定id匹配文档中的部分字段

- 语法

POST /{索引库名}/_update/{文档id}

{

"doc": {

"字段名": "新的值",

...

}

}

- 示例

POST /test001/_update/1

{

"doc":{

"email":"[email protected]",

"info":"恐怖G7人--马文"

}

}

总结

- 文档的操作有哪些?

- 创建文档:POST /{索引库名}/_doc/{id}

- 查询文档:GET /{索引库名}/_doc/{id}

- 删除文档:DELETE /{索引库名}/_doc/{id}

- 修改文档

- 全量修改:PUT /{索引库名}/_doc/{id}

- 增量修改:POST /{索引库名}/_update/{id}

RestAPI

- ES官方提供了各种不同语言的客户端用来操作ES。这些客户端的本质就是组装DSL语句,通过HTTP请求发送给ES。

- 官方文档地址:https://www.elastic.co/guide/en/elasticsearch/client/index.html

- 其中JavaRestClient又包括两种

- Java Low Level Rest Client

- Java High Level Rest Client

- 这里学习的是Java High Level Rest Client

导入Demo工程

- 导入黑马提供的数据库数据和hotel-demo,其中表结构如下

| 字段 | 类型 | 长度 | 注释 |

|---|---|---|---|

| id | bigint | 20 | 酒店id |

| name | varchar | 255 | 酒店名称 |

| address | varchar | 255 | 酒店地址 |

| price | int | 10 | 酒店价格 |

| score | int | 2 | 酒店评分 |

| brand | varchar | 32 | 酒店品牌 |

| city | varchar | 32 | 所在城市 |

| star_name | varchar | 16 | 酒店星级,1星到5星,1钻到5钻 |

| business | varchar | 255 | 商圈 |

| latitude | varchar | 32 | 纬度 |

| longitude | varchar | 32 | 经度 |

| pic | varchar | 255 | 酒店图片 |

mapping映射分析

- 创建索引库,最关键的是mapping映射,而mapping映射要考虑的信息包括

- 字段名?

- 字段数据类型?

- 是否参与搜索?

- 是否需要分词?

- 如果分词,分词器是什么?

- 其中

- 字段名、字段数据类型,可以参考数据表结构的名称和类型

- 是否参与搜索要分析业务来判断,例如图片地址,就无需参与搜索

- 是否分词要看内容,如果内容是一个整体就无需分词,反之则需要分词

- 分词器,为了提高被搜索到的概率,统一使用最细切分

ik_max_word

- 下面我们来分析一下酒店数据的索引库结构

id:id的类型比较特殊,不是long,而是keyword,而且id后期肯定需要涉及到我们的增删改查,所以需要参与搜索name:需要参与搜索,而且是text,需要参与分词,分词器选择ik_max_wordaddress:是字符串,但是个人感觉不需要分词(所以这里把它设为keyword),当然你也可以选择分词,个人感觉不需要参与搜索,所以index为falseprice:类型:integer,需要参与搜索(做范围排序)score:类型:integer,需要参与搜索(做范围排序)brand:类型:keyword,但是不需要分词(品牌名称分词后毫无意义),所以为keyword,需要参与搜索city:类型:keyword,分词无意义,需要参与搜索star_name:类型:keyword,需要参与搜索business:类型:keyword,需要参与搜索latitude和longitude:地理坐标在ES中比较特殊,ES中支持两种地理坐标数据类型:geo_point:由纬度(latitude)和经度( longitude)确定的一个点。例如:“32.8752345,120.2981576”geo_shape:有多个geo_point组成的复杂几何图形。例如一条直线,“LINESTRING (-77.03653 38.897676,-77.009051 38.889939)”

- 所以这里应该是geo_point类型

pic:类型:keyword,不需要参与搜索,index为false

PUT /hotel

{

"mappings": {

"properties": {

"id": {

"type": "keyword"

},

"name": {

"type": "text",

"analyzer": "ik_max_word"

},

"address": {

"type": "keyword",

"index": false

},

"price": {

"type": "integer"

},

"score": {

"type": "integer"

},

"brand": {

"type": "keyword"

},

"city": {

"type": "keyword"

},

"starName": {

"type": "keyword"

},

"business": {

"type": "keyword"

},

"location": {

"type": "geo_point"

},

"pic": {

"type": "keyword",

"index": false

}

}

}

}

- 但是现在还有一个小小的问题,现在我们的name、brand、city字段都需要参与搜索,也就意味着用户在搜索的时候,会根据多个字段搜,例如:

上海虹桥希尔顿五星酒店 - 那么ES是根据多个字段搜效率高,还是根据一个字段搜效率高

- 显然是搜索一个字段效率高

- 那现在既想根据多个字段搜又想要效率高,怎么解决这个问题呢?

- ES给我们提供了一种简单的解决方案

{% note info no-icon %}

字段拷贝可以使用copy_to属性,将当前字段拷贝到指定字段,示例

- ES给我们提供了一种简单的解决方案

"all": {

"type": "text",

"analyzer": "ik_max_word"

},

"brand": {

"type": "keyword",

"copy_to": "all"

}

{% endnote %}

- 那现在修改我们的DSL语句

PUT /hotel

{

"mappings": {

"properties": {

"id": {

"type": "keyword"

},

"name": {

"type": "text",

"analyzer": "ik_max_word",

"copy_to": "all"

},

"address": {

"type": "keyword",

"index": false

},

"price": {

"type": "integer"

},

"score": {

"type": "integer"

},

"brand": {

"type": "keyword",

"copy_to": "all"

},

"city": {

"type": "keyword"

},

"starName": {

"type": "keyword"

},

"business": {

"type": "keyword"

, "copy_to": "all"

},

"location": {

"type": "geo_point"

},

"pic": {

"type": "keyword",

"index": false

},

"all":{

"type": "text",

"analyzer": "ik_max_word"

}

}

}

}

初始化RestCliet

- 在

ElasticSearch提供的API中,与ElasticSearch一切交互都封装在一个名为RestHighLevelClient的类中,必须先完成这个对象的初始化,建立与ES的连接

- 引入ES的RestHighLevelClient的依赖

org.elasticsearch.client

elasticsearch-rest-high-level-client

- 因为SpringBoot管理的ES默认版本为7.6.2,所以我们需要覆盖默认的ES版本

1.8

7.12.1

- 初始化RestHighLevelClient

RestHighLevelClient client = new RestHighLevelClient(RestClient.builder(

HttpHost.create("http://192.168.150.101:9200")

));

- 但是为了单元测试方便,我们创建一个测试类HotelIndexTest,在成员变量声明一个RestHighLevelClient,然后将初始化的代码编写在

@BeforeEach中

import org.apache.http.HttpHost;

import org.elasticsearch.client.RestClient;

import org.elasticsearch.client.RestHighLevelClient;

import org.junit.jupiter.api.AfterEach;

import org.junit.jupiter.api.BeforeEach;

import org.junit.jupiter.api.Test;

import org.springframework.boot.test.context.SpringBootTest;

import java.io.IOException;

@SpringBootTest

class HotelDemoApplicationTests {

private RestHighLevelClient client;

@Test

void contextLoads() {

}

@BeforeEach

public void setup() {

this.client = new RestHighLevelClient(RestClient.builder(

new HttpHost("http://192.168.128.130:9200")

));

}

@AfterEach

void tearDown() throws IOException {

this.client.close();

}

}

创建索引库

代码解读

- 创建索引库的代码如下

@Test

void testCreateHotelIndex() throws IOException {

CreateIndexRequest request = new CreateIndexRequest("hotel");

request.source(MAPPING_TEMPLATE, XContentType.JSON);

client.indices().create(request, RequestOptions.DEFAULT);

}

- 代码分为三部分

- 创建Request对象,因为是创建索引库的操作,因此Request是CreateIndexRequest,这一步对标DSL语句中的

PUT /hotel - 添加请求参数,其实就是DSL的JSON参数部分,因为JSON字符很长,所以这里是定义了静态常量

MAPPING_TEMPLATE,让代码看起来更优雅

public static final String MAPPING_TEMPLATE = "{\n" + " \"mappings\": {\n" + " \"properties\": {\n" + " \"id\": {\n" + " \"type\": \"keyword\"\n" + " },\n" + " \"name\": {\n" + " \"type\": \"text\",\n" + " \"analyzer\": \"ik_max_word\",\n" + " \"copy_to\": \"all\"\n" + " },\n" + " \"address\": {\n" + " \"type\": \"keyword\",\n" + " \"index\": false\n" + " },\n" + " \"price\": {\n" + " \"type\": \"integer\"\n" + " },\n" + " \"score\": {\n" + " \"type\": \"integer\"\n" + " },\n" + " \"brand\": {\n" + " \"type\": \"keyword\",\n" + " \"copy_to\": \"all\"\n" + " },\n" + " \"city\": {\n" + " \"type\": \"keyword\"\n" + " },\n" + " \"starName\": {\n" + " \"type\": \"keyword\"\n" + " },\n" + " \"business\": {\n" + " \"type\": \"keyword\"\n" + " , \"copy_to\": \"all\"\n" + " },\n" + " \"location\": {\n" + " \"type\": \"geo_point\"\n" + " },\n" + " \"pic\": {\n" + " \"type\": \"keyword\",\n" + " \"index\": false\n" + " },\n" + " \"all\":{\n" + " \"type\": \"text\",\n" + " \"analyzer\": \"ik_max_word\"\n" + " }\n" + " }\n" + " }\n" + "}";- 发送请求,client.indics()方法的返回值是IndicesClient类型,封装了所有与索引库有关的方法

- 创建Request对象,因为是创建索引库的操作,因此Request是CreateIndexRequest,这一步对标DSL语句中的

删除索引库

- 删除索引库的DSL语句非常简单

DELETE /hotel

- 与创建索引库相比

- 请求方式由PUT变为DELETE

- 请求路径不变

- 无请求参数

- 所以代码的差异,主要体现在Request对象上,整体步骤没有太大变化

- 创建Request对象,这次是DeleteIndexRequest对象

- 准备请求参数,这次是无参

- 发送请求,改用delete方法

@Test

void testDeleteHotelIndex() throws IOException {

DeleteIndexRequest request = new DeleteIndexRequest("hotel");

client.indices().delete(request, RequestOptions.DEFAULT);

}

判断索引库是否存在

- 判断索引库是否存在,本质就是查询,对应的DSL是

GET /hotel

- 因此与删除的Java代码流程是类似的

- 创建Request对象,这次是GetIndexRequest对象

- 准备请求参数,这里是无参

- 发送请求,改用exists方法

@Test

void testGetHotelIndex() throws IOException {

GetIndexRequest request = new GetIndexRequest("hotel");

boolean exists = client.indices().exists(request, RequestOptions.DEFAULT);

System.out.println(exists ? "索引库已存在" : "索引库不存在");

}

总结

- JavaRestClient对索引库操作的流程计本类似,核心就是client.indices()方法来获取索引库的操作对象

- 索引库操作基本步骤

- 初始化RestHighLevelClient

- 创建XxxIndexRequest。Xxx是Create、Get、Delete

- 准备DSL(Create时需要,其它是无参)

- 发送请求,调用ReseHighLevelClient.indices().xxx()方法,xxx是create、exists、delete

RestClient操作文档

- 为了与索引库操作分离,我们再添加一个测试类,做两件事

- 初始化RestHighLevelClient

- 我们的酒店数据在数据库,需要利用IHotelService去查询,所以要注入这个接口

import cn.blog.hotel.service.IHotelService;

import org.apache.http.HttpHost;

import org.elasticsearch.client.RestClient;

import org.elasticsearch.client.RestHighLevelClient;

import org.junit.jupiter.api.AfterEach;

import org.junit.jupiter.api.BeforeEach;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.boot.test.context.SpringBootTest;

import java.io.IOException;

@SpringBootTest

public class HotelDocumentTest {

@Autowired

private IHotelService hotelService;

private RestHighLevelClient client;

@BeforeEach

void setUp() {

client = new RestHighLevelClient(RestClient.builder(

new HttpHost("http://192.168.128.130:9200")

));

}

@AfterEach

void tearDown() throws IOException {

client.close();

}

}

新增文档

- 我们要把数据库中的酒店数据查询出来,写入ES中

索引库实体类

- 数据库查询后的结果是一个Hotel类型的对象,结构如下

@Data

@TableName("tb_hotel")

public class Hotel {

@TableId(type = IdType.INPUT)

private Long id;

private String name;

private String address;

private Integer price;

private Integer score;

private String brand;

private String city;

private String starName;

private String business;

private String longitude;

private String latitude;

private String pic;

}

- 但是与我们的索引库结构存在差异

- longitude和latitude需要合并为location

- 因此我们需要定义一个新类型,与索引库结构吻合

import lombok.Data;

import lombok.NoArgsConstructor;

@Data

@NoArgsConstructor

public class HotelDoc {

private Long id;

private String name;

private String address;

private Integer price;

private Integer score;

private String brand;

private String city;

private String starName;

private String business;

private String location;

private String pic;

public HotelDoc(Hotel hotel) {

this.id = hotel.getId();

this.name = hotel.getName();

this.address = hotel.getAddress();

this.price = hotel.getPrice();

this.score = hotel.getScore();

this.brand = hotel.getBrand();

this.city = hotel.getCity();

this.starName = hotel.getStarName();

this.business = hotel.getBusiness();

this.location = hotel.getLatitude() + ", " + hotel.getLongitude();

this.pic = hotel.getPic();

}

}

语法说明

- 新增文档的DSL语法如下

POST /{索引库名}/_doc/{id}

{

"name": "Jack",

"age": 21

}

- 对应的Java代码如下

@Test

void testIndexDocument() throws IOException {

IndexRequest request = new IndexRequest("indexName").id("1");

request.source("{\"name\":\"Jack\",\"age\":21}");

client.index(request, RequestOptions.DEFAULT);

}

- 可以看到与创建索引库类似,同样是三步走:

- 创建Request对象

- 准备请求参数,也就是DSL中的JSON文档

- 发送请求

- 变化的地方在于,这里直接使用client.xxx()的API,不再需要client.indices()了

完整代码

- 我们导入酒店数据,基本流程一致,但是需要考虑几点变化

- 酒店数据来自于数据库,我们需要先从数据库中查询,得到

Hotel对象 Hotel对象需要转换为HotelDoc对象HotelDoc需要序列化为json格式

- 酒店数据来自于数据库,我们需要先从数据库中查询,得到

- 因此,代码整体步骤如下

- 根据id查询酒店数据Hotel

- 将Hotel封装为HotelDoc

- 将HotelDoc序列化为Json

- 创建IndexRequest,指定索引库名和id

- 准备请求参数,也就是Json文档

- 发送请求

- 在hotel-demo的HotelDocumentTest测试类中,编写单元测试

@Test

void testAddDocument() throws IOException {

// 1. 根据id查询酒店数据

Hotel hotel = hotelService.getById(61083L);

// 2. 转换为文档类型

HotelDoc hotelDoc = new HotelDoc(hotel);

// 3. 转换为Json字符串

String jsonString = JSON.toJSONString(hotelDoc);

// 4. 准备request对象

IndexRequest request = new IndexRequest();

// 5. 准备json文档

request.source(jsonString, XContentType.JSON);

// 6. 发送请求

client.index(request, RequestOptions.DEFAULT);

}

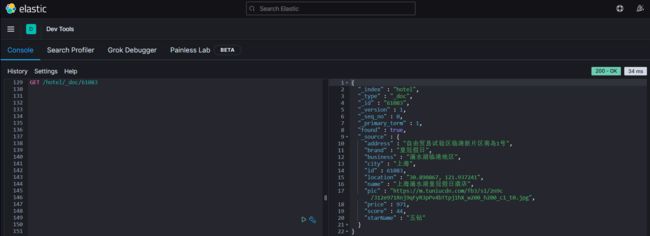

{

"_index" : "hotel",

"_type" : "_doc",

"_id" : "61083",

"_version" : 1,

"_seq_no" : 0,

"_primary_term" : 1,

"found" : true,

"_source" : {

"address" : "自由贸易试验区临港新片区南岛1号",

"brand" : "皇冠假日",

"business" : "滴水湖临港地区",

"city" : "上海",

"id" : 61083,

"location" : "30.890867, 121.937241",

"name" : "上海滴水湖皇冠假日酒店",

"pic" : "https://m.tuniucdn.com/fb3/s1/2n9c/312e971Rnj9qFyR3pPv4bTtpj1hX_w200_h200_c1_t0.jpg",

"price" : 971,

"score" : 44,

"starName" : "五钻"

}

}

查询文档

- 查询的DSL语句如下

GET /hotel/_doc/{id}

- 由于没有请求参数,所以非常简单,代码分为以下两步

- 准备Request对象

- 发送请求

- 解析结果

- 不过查询的目的是为了得到HotelDoc,因此难点是结果的解析,在刚刚查询的结果中,我们发现HotelDoc对象的主要内容在

_source属性中,所以我们要获取这部分内容,然后将其转化为HotelDoc

@Test

void testGetDocumentById() throws IOException {

// 1. 准备request对象

GetRequest request = new GetRequest("hotel").id("61083");

// 2. 发送请求,得到结果

GetResponse response = client.get(request, RequestOptions.DEFAULT);

// 3. 解析结果

String jsonStr = response.getSourceAsString();

HotelDoc hotelDoc = JSON.parseObject(jsonStr, HotelDoc.class);

System.out.println(hotelDoc);

}

修改文档

- 修改依旧是两种方式

- 全量修改:本质是先根据id删除,再新增

- 增量修改:修改文档中的指定字段值

- 在RestClient的API中,全量修改与新增的API完全一致,判断的依据是ID

- 若新增时,ID已经存在,则修改(删除再新增)

- 若新增时,ID不存在,则新增

- 这里就主要讲增量修改,对应的DSL语句如下

POST /test001/_update/1

{

"doc":{

"email":"[email protected]",

"info":"恐怖G7人--马文"

}

}

- 与之前类似,也是分为三步

- 准备Request对象,这次是修改,对应的就是UpdateRequest

- 准备参数,也就是对应的JSON文档,里面包含要修改的字段

- 发送请求,更新文档

@Test

void testUpdateDocumentById() throws IOException {

// 1. 准备request对象

UpdateRequest request = new UpdateRequest("hotel","61083");

// 2. 准备参数

request.doc(

"city","北京",

"price",1888);

// 3. 发送请求

client.update(request,RequestOptions.DEFAULT);

}

删除文档

- 删除的DSL语句如下

DELETE /hotel/_doc/{id}

- 与查询相比,仅仅是请求方式由DELETE变为GET,不难猜想对应的Java依旧是三步走

- 准备Request对象,因为是删除,所以是DeleteRequest对象,要指明索引库名和id

- 准备参数,无参

- 发送请求,因为是删除,所以是client.delete()方法

@Test

void testDeleteDocumentById() throws IOException {

// 1. 准备request对象

DeleteRequest request = new DeleteRequest("hotel","61083");

// 2. 发送请求

client.delete(request,RequestOptions.DEFAULT);

}

- 成功删除之后,再调用查询的测试方法,返回值为null,删除成功

批量导入文档

- 之前我们都是一条一条的新增文档,但实际应用中,还是需要批量的将数据库数据导入索引库中

{% note info no-icon %}

需求:批量查询酒店数据,然后批量导入索引库中

思路:

- 利用mybatis-plus查询酒店数据

- 将查询到的酒店数据(Hotel)转化为文档类型数据(HotelDoc)

- 利用JavaRestClient中的Bulk批处理,实现批量新增文档,示例代码如下

@Test

void testBulkAddDoc() throws IOException {

BulkRequest request = new BulkRequest();

request.add(new IndexRequest("hotel").id("101").source("json source1", XContentType.JSON));

request.add(new IndexRequest("hotel").id("102").source("json source2", XContentType.JSON));

client.bulk(request, RequestOptions.DEFAULT);

}

{% endnote %}

- 实现代码如下

@Test

void testBulkAddDoc() throws IOException {

BulkRequest request = new BulkRequest();

List hotels = hotelService.list();

for (Hotel hotel : hotels) {

HotelDoc hotelDoc = new HotelDoc(hotel);

request.add(new IndexRequest("hotel").

id(hotelDoc.getId().toString()).

source(JSON.toJSONString(hotelDoc), XContentType.JSON));

}

client.bulk(request, RequestOptions.DEFAULT);

}

- 使用stream流操作可以简化代码

@Test

void testBulkAddDoc() throws IOException {

BulkRequest request = new BulkRequest();

hotelService.list().stream().forEach(hotel ->

request.add(new IndexRequest("hotel")

.id(hotel.getId().toString())

.source(JSON.toJSONString(new HotelDoc(hotel)), XContentType.JSON)));

client.bulk(request, RequestOptions.DEFAULT);

}

小结

- 文档初始化的基本步骤

- 初始化RestHighLevelClient

- 创建XxxRequest对象,Xxx是Index、Get、Update、Delete

- 准备参数(Index和Update时需要)

- 发送请求,调用RestHighLevelClient.xxx方法,xxx是index、get、update、delete

- 解析结果(Get时需要)

DSL查询文档

- ElasticSearch的查询依然是基于JSON风格的DSL来实现的

DSL查询分类

- ElasticSearch提供了基于DSL来定义查询。常见的查询类型包括

查询所有:查询出所有数据,一般测试用。例如- match_all

全文检索(full text):利用分词器对用户输入的内容分词,然后去倒排索引库中匹配。例如- match_query

- multi_match_query

精确查询:根据精确词条值查找数据,一般是查找keyword、数值、日期、boolean等类型字段。例如- ids

- range

- term

地理查询(geo):根据经纬度查询。例如- geo_distance

- geo_bounding_box

复合查询(compound):复合查询可以将上述各种查询条件组合起来,合并查询条件。例如- bool

- function_score

- 查询的语法基本一致

GET /indexname/_search

{

"query": {

"查询类型": {

"查询条件": "条件值"

}

}

}

- 这里以查询所有为例

- 查询类型为

match_all - 没有查询条件

GET /indexName/_search { "query": { "match_all": { } } } - 查询类型为

- 其他的无非就是

查询类型和查询条件的变化

全文检索的查询

使用场景

- 全文检索的查询流程基本如下

- 根据用户搜索的内容做分词,得到词条

- 根据词条去倒排索引库中匹配,得到文档id

- 根据文档id找到的文档,返回给用户

- 比较常用的场景包括

- 商城的输入框搜索

- 百度输入框搜索

- 因为是拿着词条去匹配,因此参与搜索的字段也必须是可分词的text类型的字段

基本语法

- 常见的全文检索包括

- match查询:单字段查询

- multi_match查询:多字段查询,任意一个字段符合条件就算符合查询条件

- match查询语法如下

GET /indexName/_search

{

"query": {

"match": {

"FIELD": "TEXT"

}

}

}

- multi_match语法如下

GET /indexName/_search

{

"query": {

"multi_match": {

"fields": ["FIELD1", "FIELD2"]

}

}

}

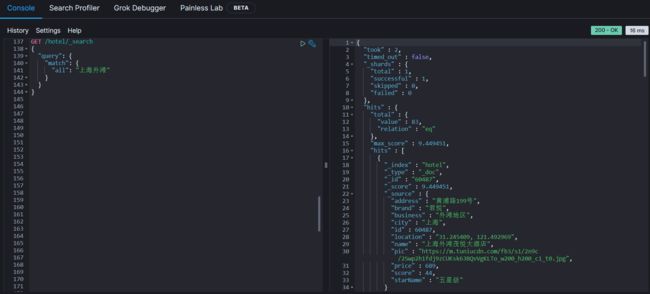

示例

- 查询上海外滩的酒店数据

- 以match查询示例,这里的

all字段是之前由name、city、business这三个字段拷贝得来的

GET /hotel/_search { "query": { "match": { "all": "上海外滩" } } }

- 以multi_match查询示例

GET /hotel/_search { "query": { "multi_match": { "query": "上海外滩", "fields": ["brand", "city", "business"] } } }

- 以match查询示例,这里的

- 可以看到,这两种查询的结果是一样的,为什么?

- 因为我们将

name、city、business的值都利用copy_to复制到了all字段中,因此根据这三个字段搜索和根据all字段搜索的结果当然一样了 - 但是搜索的字段越多,对查询性能影响就越大,因此建议采用

copy_to,然后使用单字段查询的方式

- 因为我们将

小结

- match和multi_match的区别是什么?

- match:根据一个字段查询

- multi_match:根据多个字段查询,参与查询的字段越多,查询性能就越差

精确查询

- 精确查询一般是查找keyword、数值、日期、boolean等类型字段。所以不会对搜索条件分词。常见的有

term:根据词条精确值查询range:根据值的范围查询

term查询

GET /indexName/_search

{

"query": {

"term": {

"FIELD": {

"value": "VALUE"

}

}

}

}

- 示例:查询北京的酒店数据

GET /hotel/_search

{

"query": {

"term": {

"city": {

"value": "北京"

}

}

}

}

range查询

- 范围查询,一般应用在对数值类型做范围过滤的时候。例如做价格范围的过滤

- 基本语法

GET /hotel/_search

{

"query": {

"range": {

"FIELD": {

"gte": 10, //这里的gte表示大于等于,gt表示大于

"lte": 20 //这里的let表示小于等于,lt表示小于

}

}

}

}

- 示例:查询酒店价格在1000~3000的酒店

GET /hotel/_search

{

"query": {

"range": {

"price": {

"gte": 1000,

"lte": 3000

}

}

}

}

小结

- 精确查询常见的有哪些?

- term查询:根据词条精确匹配,一般搜索keyword类型、数值类型、布尔类型、日期类型字段

- range查询:根据数值范围查询,可以使数值、日期的范围

地理坐标查询

- 所谓地理坐标查询,其实就是根据经纬度查询,官方文档:https://www.elastic.co/guide/en/elasticsearch/reference/current/geo-queries.html

- 常见的使用场景包括

- 携程:搜索附近的酒店

- 滴滴:搜索附近的出租车

- 微信:搜索附近的人

矩形范围查询

- 矩形范围查询,也就是geo_bounding_box查询,查询坐标落在某个矩形范围内的所有文档

- 查询时。需指定矩形的左上、游戏啊两个点的坐标,然后画出一个矩形,落在该矩形范围内的坐标,都是符合条件的文档

- 基本语法

GET /indexName/_search

{

"query": {

"geo_bounding_box": {

"FIELD": {

"top_left": { // 左上点

"lat": 31.1, // lat: latitude 纬度

"lon": 121.5 // lon: longitude 经度

},

"bottom_right": { // 右下点

"lat": 30.9, // lat: latitude 纬度

"lon": 121.7 // lon: longitude 经度

}

}

}

}

}

- 示例

GET /hotel/_search

{

"query": {

"geo_bounding_box": {

"location": {

"top_left": {

"lat": 31.1,

"lon": 121.5

},

"bottom_right": {

"lat": 30.9,

"lon": 121.7

}

}

}

}

}

附近查询

- 附近查询,也叫做举例查询(geo_distance):查询到指定中心点的距离小于等于某个值的所有文档

- 换句话说,也就是以指定中心点为圆心,指定距离为半径,画一个圆,落在圆内的坐标都算符合条件

- 语法说明

GET /indexName/_search

{

"query": {

"geo_distance": {

"distance": "3km", // 半径

"location": "39.9, 116.4" // 圆心

}

}

}

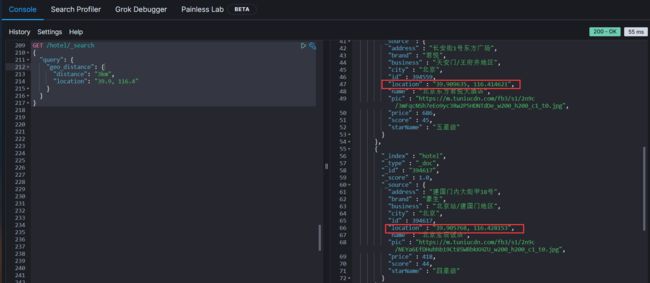

- 示例:查询我附近3km内的酒店文档

GET /hotel/_search

{

"query": {

"geo_distance": {

"distance": "3km",

"location": "39.9, 116.4"

}

}

}

复合查询

- 复合(compound)查询:复合查询可以将其他简单查询组合起来,实现更复杂的搜索逻辑,常见的有两种

- function score:算分函数查询,可以控制文档相关性算分,控制文档排名(例如搜索引擎的排名,第一大部分都是广告)

- bool query:布尔查询,利用逻辑关系组合多个其他的查询,实现复杂搜索

相关性算分

- 当我们利用match查询时,文档结果会根据搜索词条的关联度打分(_score),返回结果时按照分值降序排列

- 例如我们搜索虹桥如家,结果如下

[

{

"_score" : 17.850193,

"_source" : {

"name" : "虹桥如家酒店真不错",

}

},

{

"_score" : 12.259849,

"_source" : {

"name" : "外滩如家酒店真不错",

}

},

{

"_score" : 11.91091,

"_source" : {

"name" : "迪士尼如家酒店真不错",

}

}

]

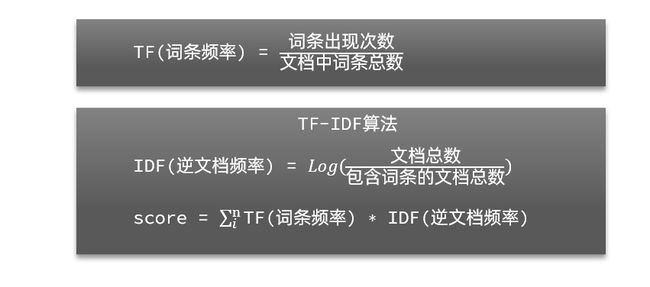

-

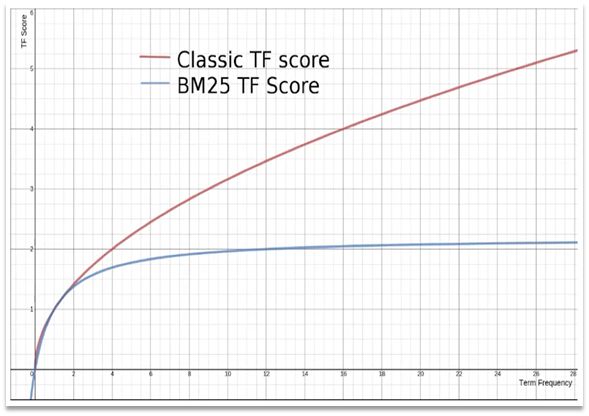

TF-IDF算法有一种缺陷,就是词条频率越高,文档得分也会越高,单个词条对文档影响较大。而BM25则会让单个词条的算分有一个上限,曲线更平滑

-

小结:ES会根据词条和文档的相关度做打分,算法有两种

- TF-IDF算法

- BM25算法, ES 5.1版本后采用的算法

算分函数查询

- 根据相关度打分是比较合理的需求,但是合理的并不一定是产品经理需要的

- 以某搜索引擎为例,你在搜索的结果中,并不是相关度越高就越靠前,而是谁掏的钱多就让谁的排名越靠前

- 要想控制相关性算分,就需要利用ES中的

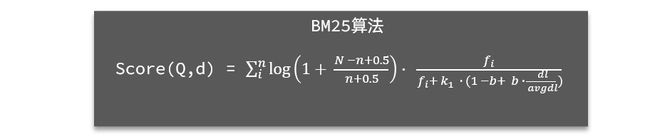

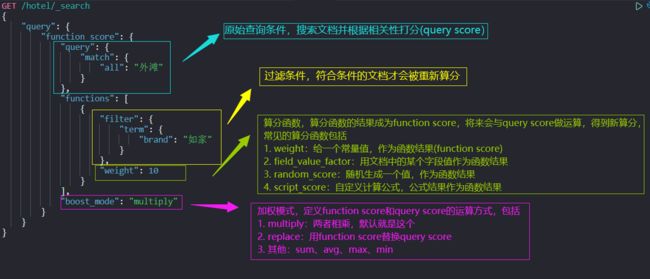

function score查询了 - 语法说明

GET /indexName/_search

{

"query": {

"function_score": {

"query": {

"match": {

"all": "外滩"

}

},

"functions": [

{

"filter": {

"term": {

"id": "1"

}

},

"weight": 10

}

],

"boost_mode": "multiply"

}

}

}

- function score查询中包含四部分内容

- 原始查询条件:query部分,基于这个条件搜索文档,并且基于BM25算法给文档打分,原始算分(query score)

- 过滤条件:filter部分,符合该条件的文档才会被重新算分

- 算分函数:符合filter条件的文档要根据这个函数做运算,得到函数算分(function score),有四种函数

- weight:函数结果是常量

- field_value_factor:以文档中的某个字段值作为函数结果

- random_score:以随机数作为函数结果

- script_score:自定义算分函数算法

- 运算模式:算分函数的结果、原始查询的相关性算分,二者之间的运算方式,包括

- multiply:相乘

- replace:用function score替换query score

- 其他,例如:sum、avg、max、min

- function score的运行流程如下

- 根据

原始条件查询搜索文档,并且计算相关性算分,称为原始算法(query score) - 根据

过滤条件,过滤文档 - 符合

过滤条件的文档,基于算分函数运算,得到函数算分(function score) - 将

原始算分(query score)和函数算分(function score)基于运算模式做运算,得到最终给结果,作为相关性算分

- 根据

- 因此,其中的关键点是:

- 过滤条件:决定哪些文档的算分被修改

- 算分函数:决定函数算分的算法

- 运算模式:决定最终算分结果

{% note info no-icon %}

需求:给如家这个品牌的酒店排名靠前一点

思路:过滤条件为"brand": "如家",算分函数和运算模式我们可以暴力一点,固定算分结果相乘

{% endnote %}

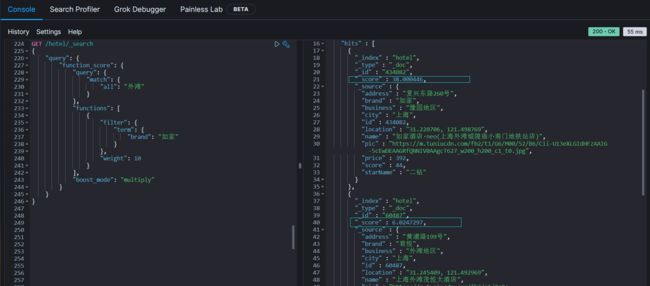

- 对应的DSL语句如下,我们搜索外滩的酒店,对如家品牌过滤,最终的运算结果是10倍的原始算分

GET /hotel/_search

{

"query": {

"function_score": {

"query": {

"match": {

"all": "外滩"

}

},

"functions": [

{

"filter": {

"term": {

"brand": "如家"

}

},

"weight": 10

}

],

"boost_mode": "multiply"

}

}

}

布尔查询

- 布尔查询是一个或多个子查询的组合,每一个子句就是一个子查询。子查询的组合方式有

must:必须匹配每个子查询,类似与should:选择性匹配子查询,类似或must_not:必须不匹配,不参与算分,类似非filter:必须匹配,不参与算分

- 例如在搜索酒店时,除了关键字搜索外,我们还可能根据酒店品牌、价格、城市等字段做过滤

- 每一个不同的字段,其查询条件、方式都不一样,必须是多个不同的查询,而要组合这些查询,就需要用到布尔查询了

{% note warning no-icon %}

需要注意的是,搜索时,参与打分的字段越多,查询的性能就越差,所以在多条件查询时 - 搜索框的关键字搜索,是全文检索查询,使用must查询,参与算分

- 其他过滤条件,采用filter和must_not查询,不参与算分

{% endnote %}

{% note info no-icon %}

需求:搜索名字中包含如家,价格不高于400,在坐标39.9, 116.4周围10km范围内的酒店

分析: - 名称搜索,属于全文检索查询,应该参与算分,放到

must中 - 价格不高于400,用range查询,属于过滤条件,不参与算分,放到

must_not中 - 周围10km范围内,用geo_distance查询,属于过滤条件,放到

filter中

{% endnote %}

GET /hotel/_search

{

"query": {

"bool": {

"must": [

{

"term": {

"name": {

"value": "如家"

}

}

}

],

"must_not": [

{

"range": {

"price": {

"gt": 400

}

}

}

],

"filter": [

{

"geo_distance": {

"distance": "10km",

"location": {

"lat": 39.9,

"lon": 116.4

}

}

}

]

}

}

}

{% note info no-icon %}

需求:搜索城市在上海,品牌为皇冠假日或华美达,价格不低于500,且用户评分在45分以上的酒店

{% endnote %}

GET /hotel/_search

{

"query": {

"bool": {

"must": [

{"term": {

"city": {

"value": "上海"

}

}}

],

"should": [

{"term": {

"brand": {

"value": "皇冠假日"

}

}},

{"term": {

"brand": {

"value": "华美达"

}

}}

],

"must_not": [

{"range": {

"price": {

"lte": 500

}

}}

],

"filter": [

{"range": {

"score": {

"gte": 45

}

}}

]

}

}

}

{% note warning no-icon %}

-

如果细心一点,就会发现这里的should有问题,must和should一起用的时候,should会不生效,结果中会查询到除了

皇冠假日和华美达之外的品牌。 -

对于DSL语句的解决方案比较麻烦,需要在must里再套一个bool,里面再套should,但是对于Java代码来说比较容易修改

{% endnote %} -

小结:布尔查询有几种逻辑关系?

- must:必须匹配的条件,可以理解为

与 - should:选择性匹配的条件,可以理解为

或 - must_not:必须不匹配的条件,不参与打分,可以理解为

非 - filter:必须匹配的条件,不参与打分

- must:必须匹配的条件,可以理解为

搜索结果处理

- 搜索的结果可以按照用户指定的方式去处理或展示

排序

- ES默认是根据相关度算分(_score)来排序,但是也支持自定义方式对搜索结果排序。可以排序的字段有:keyword类型、数值类型、地理坐标类型、日期类型等

普通字段排序

- keyword、数值、日期类型排序的语法基本一致

GET /hotel/_search

{

"query": {

"match_all": {

}

},

"sort": [

{

"FIELD": {

"order": "desc"

},

"FIELD": {

"order": "asc"

}

}

]

}

- 排序条件是一个数组,也就是可以写读个排序条件。按照声明顺序,当第一个条件相等时,再按照第二个条件排序,以此类推

{% note info no-icon %}

需求:酒店数据按照用户评价(score)降序排序,评价相同再按照价格(price)升序排序

{% endnote %}

GET /hotel/_search

{

"query": {

"match_all": {}

},

"sort": [

{

"score": {

"order": "desc"

},

"price": {

"order": "asc"

}

}

]

}

地理坐标排序

- 语法说明

GET /indexName/_search

{

"query": {

"match_all": {}

},

"sort": [

{

"_geo_distance": {

"FIELD": {

"lat": 40,

"lon": -70

},

"order": "asc",

"unit": "km"

}

}

]

}

- 这个查询的含义是

- 指定一个坐标,作为目标点

- 计算每一个文档中,指定字段(必须是geo_point类型)的坐标,到目标点的距离是多少

- 根据距离排序

{% note info no-icon %}

需求:实现酒店数据按照到你位置坐标的距离升序排序

{% endnote %}

GET /hotel/_search

{

"query": {

"match_all": {}

},

"sort": [

{

"_geo_distance": {

"location": {

"lat": 39.9,

"lon": 116.4

},

"order": "asc",

"unit": "km"

}

}

]

}

分页

- ES默认情况下只返回

top10的数据。而如果要查询更多数据就需要修改分页参数了。 - ES中通过修改from、size参数来控制要返回的分页结果

from:从第几个文档开始size:总共查询几个文档

- 类似于mysql中的

limit ?, ?

基本的分页

- 分页的基本语法如下

GET /indexName/_search

{

"query": {

"match_all": {}

},

"from": 0,

"size": 20

}

深度分页问题

- 现在,我要查询990~1000条数据

GET /hotel/_search

{

"query": {

"match_all": {}

},

"from": 990,

"size": 10,

"sort": [

{

"price": {

"order": "asc"

}

}

]

}

- 这里是查询990开始的数据,也就是第991~第1000条数据

- 不过,ES内部分页时,必须先查询

0~1000条,然后截取其中990~1000的这10条

- 查询

TOP1000,如果ES是单点模式,那么并无太大影响 - 但是ES将来一定是集群部署模式,例如我集群里有5个节点,我要查询

TOP1000的数据,并不是每个节点查询TOP200就可以了。 - 因为节点A的TOP200,可能在节点B排在10000名开外了

- 因此想获取整个集群的

TOP1000,就必须先查询出每个节点的TOP1000,汇总结果后,重新排名,重新截取TOP1000

- 那么如果要查询

9900~10000的数据呢?是不是要先查询TOP10000,然后汇总每个节点的TOP10000,重新排名呢? - 当查询分页深度较大时,汇总数据过多时,会对内存和CPU产生非常大的压力,因此ES会禁止

form + size > 10000的请求

- 针对深度分页,ES提供而两种解决方案,官方文档:https://www.elastic.co/guide/en/elasticsearch/reference/current/paginate-search-results.html

- search after:分页时需要排序,原理是从上一次的排序值开始,查询下一页数据。官方推荐使用的方式

- scrool:原理是将排序后的文档id形成快照,保存在内存。官方已经不推荐使用

小结

- 分页查询的常见实现方案以及优缺点

- from + size:

- 优点:支持随机翻页

- 缺点:深度分页问题,默认查询上限是10000(from + size)

- 场景:百度、京东、谷歌、淘宝这样的随机翻页搜索(百度现在支持翻页到75页,然后显示

提示:限于网页篇幅,部分结果未予显示。)

- after search:

- 优点:没有查询上限(单词查询的size不超过10000)

- 缺点:只能向后逐页查询,不支持随机翻页

- 场景:没有随机翻页需求的搜索,例如手机的向下滚动翻页

- scroll:

- 优点:没有查询上限(单词查询的size不超过10000)

- 缺点:会有额外内存消耗,并且搜索结果是非实时的(快照保存在内存中,不可能每搜索一次都更新一次快照)

- 场景:海量数据的获取和迁移。从ES7.1开始不推荐,建议使用after search方案

- from + size:

高亮

高亮原理

实现高亮

- 高亮的语法

GET /indexName/_search

{

"query": {

"match": {

"FIELD": "TEXT"

}

},

"highlight": {

"fields": {

"FIELD": {

"pre_tags": "",

"post_tags": ""

}

}

}

}

{% note warning no-icon %}

注意:

- 高亮是对关键词高亮,因此搜索条件必须带有关键字,而不能是范围这样的查询

- 默认情况下,高亮的字段,必须与搜索指定的字段一致,否则无法高亮

- 如果要对非搜索字段高亮,则需要添加一个属性:

required_field_match=false

{% endnote %} - 示例

GET /hotel/_search

{

"query": {

"match": {

"all": "上海如家"

}

},

"highlight": {

"fields": {

"name": {

"pre_tags": "",

"post_tags": "",

"require_field_match": "false"

}

}

}

}

- 但默认情况下就是加的

标签,所以我们也可以省略

GET /hotel/_search

{

"query": {

"match": {

"all": "上海如家"

}

},

"highlight": {

"fields": {

"name": {

"require_field_match": "false"

}

}

}

}

小结

- 查询的DSL是一个大的JSON对象,包含以下属性

- query:查询条件

- from和size:分页条件

- sort:排序条件

- highlight:高亮条件

- 示例

GET /hotel/_search

{

"query": {

"match": {

"all": "上海如家"

}

},

"from": 0,

"size": 20,

"sort": [

{

"_geo_distance": {

"location": {

"lat": 39.9,

"lon": 116.4

},

"order": "asc",

"unit": "km"

},

"price": "asc"

}

],

"highlight": {

"fields": {

"name": {

"require_field_match": "false"

}

}

}

}

RestClient查询文档

- 文档的查询同样适用于RestHighLevelClient对象,基本步骤包括

- 准备Request对象

- 准备请求参数

- 发起请求

- 解析响应