Managing Flink Metadata with a Custom Catalog

This post walks through the Flink Catalog source code and implements a simple MysqlCatalog that stores metadata in MySQL.

1. Flink metadata

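Metadata is data that describes data. Put simply, once we create a table, its name, its fields, its primary key, and how it relates to other tables are all metadata. That is why metadata is called data about data.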

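In stream processing, Kafka imposes no constraint on the data format, so we have to manage the metadata ourselves. In addition, to avoid recreating the same tables every time we use Flink SQL, it is well worth managing these table definitions centrally.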

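As an open-source stream processing engine, Flink provides its own interface for metadata management, Catalog. By implementing the Catalog interface, users can manage metadata in a store of their choice.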

2. Building the metadata model

2.1 Finding the required fields, from a beginner's perspective

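First we need to know what information Flink needs and which fields it expects for a table. This is easy to find out: write a simple SQL statement, run it with the default Catalog implementation (GenericInMemoryCatalog), set a breakpoint in its getTable() method, and inspect the return value in IntelliJ IDEA.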

Below are the SQL statements used for the test, followed by the getTable() method of GenericInMemoryCatalog.

```sql
create table sourceTable (
  `region` varchar,
  deviceName varchar,
  collectValue double,
  eventTime bigint,
  ts as to_timestamp(from_unixtime(eventTime, 'yyyy-MM-dd HH:mm:ss')),
  watermark for ts as ts - interval '5' second
) WITH (
  'connector' = 'kafka',
  'topic' = 'firsttopic',
  'properties.group.id' = 'start_log_group',
  'properties.bootstrap.servers' = '10.50.22.229:9092',
  'format' = 'csv',
  'csv.ignore-parse-errors' = 'true',
  'csv.allow-comments' = 'true'
);

create table destinationTable (
  `region` varchar,
  deviceName varchar,
  collectValue double
) WITH (
  'connector' = 'kafka',
  'topic' = 'secondtopic',
  'properties.bootstrap.servers' = '10.50.22.229:9092',
  'format' = 'json'
);

-- a simple INSERT operation
-- list the three sink columns explicitly: select * would also return eventTime and ts,
-- which destinationTable does not have
insert into destinationTable
select `region`, deviceName, collectValue from sourceTable where deviceName <> '' and `region` <> '';
```
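The computed column `ts` and the watermark in `sourceTable` can be hard to picture. The plain-Java sketch below mimics what they compute, without any Flink dependency. `EventTimeSketch` and `WatermarkTracker` are hypothetical names. The sketch assumes `eventTime` holds epoch seconds, as `FROM_UNIXTIME` expects, and that the session time zone is UTC. It approximates the semantics and is not Flink's generated code.

```java
import java.time.Instant;
import java.time.LocalDateTime;
import java.time.ZoneId;

// Hypothetical sketch of the semantics of:
//   ts AS TO_TIMESTAMP(FROM_UNIXTIME(eventTime, 'yyyy-MM-dd HH:mm:ss'))
//   WATERMARK FOR ts AS ts - INTERVAL '5' SECOND
class EventTimeSketch {

    // eventTime is epoch SECONDS; FROM_UNIXTIME renders it in the session time zone
    // (passed in explicitly here) and TO_TIMESTAMP parses it back at second precision.
    static LocalDateTime computeTs(long eventTimeSeconds, ZoneId sessionZone) {
        return LocalDateTime.ofInstant(Instant.ofEpochSecond(eventTimeSeconds), sessionZone);
    }

    // The watermark follows the largest (ts - 5 s) seen so far and never moves backwards,
    // so an event up to 5 seconds behind the largest ts seen is not yet considered late.
    static final class WatermarkTracker {
        private LocalDateTime current; // null until the first event arrives

        void onEvent(LocalDateTime ts) {
            LocalDateTime candidate = ts.minusSeconds(5);
            if (current == null || candidate.isAfter(current)) {
                current = candidate;
            }
        }

        LocalDateTime currentWatermark() {
            return current;
        }
    }

    public static void main(String[] args) {
        ZoneId utc = ZoneId.of("UTC");
        WatermarkTracker tracker = new WatermarkTracker();
        for (long eventTime : new long[] {100, 120, 110}) { // 110 arrives out of order
            tracker.onEvent(computeTs(eventTime, utc));
        }
        System.out.println(tracker.currentWatermark()); // 1970-01-01T00:01:55
    }
}
```

The out-of-order event at 110 s does not pull the watermark back. It stays at 120 s minus 5 s.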

```java
// GenericInMemoryCatalog.getTable() from the Flink source
public CatalogBaseTable getTable(ObjectPath tablePath) throws TableNotExistException {
    checkNotNull(tablePath);
    if (!tableExists(tablePath)) {
        throw new TableNotExistException(getName(), tablePath);
    } else {
        return tables.get(tablePath).copy();
    }
}
```
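The lookup in `getTable()` above has three steps. It validates the path, throws `TableNotExistException` if the table is unknown, and returns a copy of the stored table. The JDK-only sketch below imitates that pattern so it runs without Flink. `TablePath`, `Table`, and `InMemoryCatalogSketch` are hypothetical stand-ins for `ObjectPath`, `CatalogBaseTable`, and `GenericInMemoryCatalog`. `Objects.requireNonNull` replaces Flink's `checkNotNull`.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Objects;

// Hypothetical, JDK-only imitation of GenericInMemoryCatalog's table lookup.
class InMemoryCatalogSketch {

    static final class TablePath {
        final String database;
        final String table;

        TablePath(String database, String table) {
            this.database = database;
            this.table = table;
        }

        @Override
        public boolean equals(Object o) {
            if (!(o instanceof TablePath)) return false;
            TablePath p = (TablePath) o;
            return database.equals(p.database) && table.equals(p.table);
        }

        @Override
        public int hashCode() {
            return Objects.hash(database, table);
        }

        @Override
        public String toString() {
            return database + "." + table;
        }
    }

    static final class Table {
        final Map<String, String> options;

        Table(Map<String, String> options) {
            this.options = new HashMap<>(options);
        }

        Table copy() { // defensive copy, playing the role of CatalogBaseTable.copy()
            return new Table(options);
        }
    }

    static final class TableNotExistException extends Exception {
        TableNotExistException(TablePath path) {
            super("Table " + path + " does not exist");
        }
    }

    private final Map<TablePath, Table> tables = new HashMap<>();

    void createTable(TablePath path, Table table) {
        tables.put(path, table.copy());
    }

    boolean tableExists(TablePath path) {
        return tables.containsKey(path);
    }

    Table getTable(TablePath path) throws TableNotExistException {
        Objects.requireNonNull(path);
        if (!tableExists(path)) {
            throw new TableNotExistException(path);
        }
        // Callers receive a copy, so mutating it cannot change what the catalog stores.
        return tables.get(path).copy();
    }
}
```

Returning a copy protects the stored definition from callers, which is presumably why `GenericInMemoryCatalog` calls `copy()`.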

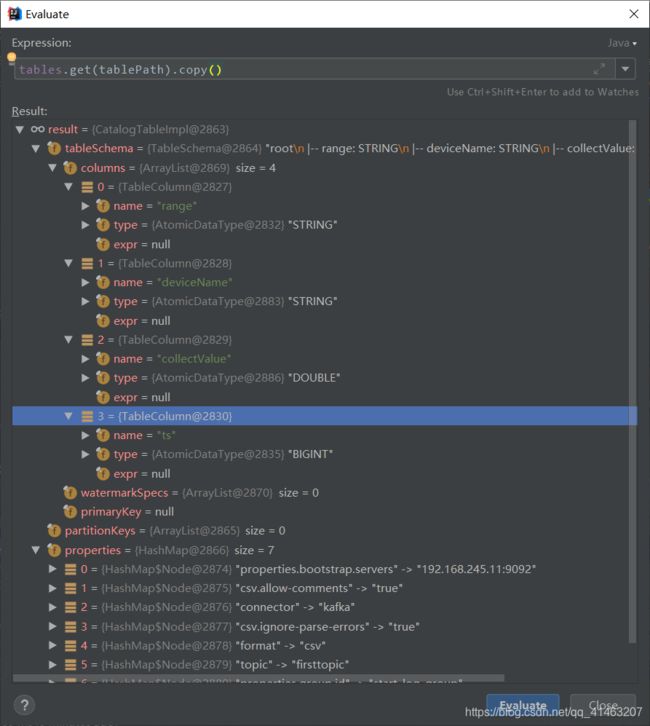

运行程序之后,查看返回的值,结果如下图所示:

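This makes it clear that the returned object is a CatalogTableImpl, and that the fields it needs are a TableSchema, WatermarkSpec, properties, and partitionKeys (the WatermarkSpec list is held inside the TableSchema).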
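These pieces are enough to describe a table completely. The hypothetical plain-Java model below holds the same four kinds of information: columns, the watermark spec, the `WITH` options, and the partition keys. It then renders them back into a `CREATE TABLE` statement. `TableMetadataSketch` is not a Flink class; Flink's real types are `TableSchema`, `WatermarkSpec`, and `CatalogTableImpl`, and the rendering is only an illustration.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.StringJoiner;

// Hypothetical model of the information a CatalogTableImpl carries; not Flink's API.
class TableMetadataSketch {

    static final class Column {
        final String name;
        final String type;
        final String expr; // non-null for a computed column such as ts

        Column(String name, String type, String expr) {
            this.name = name;
            this.type = type;
            this.expr = expr;
        }
    }

    final String name;
    final List<Column> columns = new ArrayList<>();           // TableSchema columns
    String rowtimeAttribute;                                   // WatermarkSpec: rowtime column
    String watermarkExpression;                                // WatermarkSpec: expression
    final Map<String, String> options = new LinkedHashMap<>(); // WITH (...) properties
    final List<String> partitionKeys = new ArrayList<>();      // PARTITIONED BY keys

    TableMetadataSketch(String name) {
        this.name = name;
    }

    // Rebuilds a Flink-style CREATE TABLE statement from the four pieces above.
    String toDdl() {
        StringJoiner body = new StringJoiner(",\n  ", "(\n  ", "\n)");
        for (Column c : columns) {
            body.add(c.expr == null ? "`" + c.name + "` " + c.type : "`" + c.name + "` AS " + c.expr);
        }
        if (rowtimeAttribute != null) {
            body.add("WATERMARK FOR `" + rowtimeAttribute + "` AS " + watermarkExpression);
        }
        StringBuilder ddl = new StringBuilder("CREATE TABLE `" + name + "` " + body);
        if (!partitionKeys.isEmpty()) {
            ddl.append("\nPARTITIONED BY (").append(String.join(", ", partitionKeys)).append(")");
        }
        StringJoiner with = new StringJoiner(",\n  ", "\nWITH (\n  ", "\n)");
        options.forEach((k, v) -> with.add("'" + k + "' = '" + v + "'"));
        return ddl.append(with).toString();
    }

    public static void main(String[] args) {
        TableMetadataSketch t = new TableMetadataSketch("sourceTable");
        t.columns.add(new Column("eventTime", "BIGINT", null));
        t.columns.add(new Column("ts", null, "TO_TIMESTAMP(FROM_UNIXTIME(eventTime, 'yyyy-MM-dd HH:mm:ss'))"));
        t.rowtimeAttribute = "ts";
        t.watermarkExpression = "ts - INTERVAL '5' SECOND";
        t.options.put("connector", "kafka");
        t.options.put("topic", "firsttopic");
        System.out.println(t.toDdl());
    }
}
```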

2.2 Designing the tables

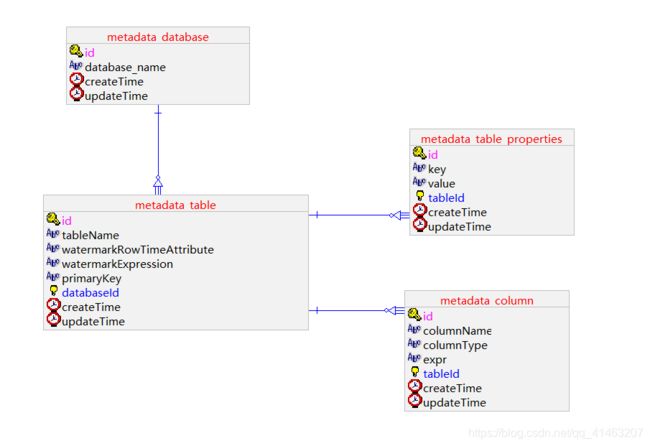

知道我们要的属性之后,我们可以对模型进行简单的设计,生成一个模型图,如下图所示:

Four tables are designed here: databases, tables, table columns, and table properties (the connector options).

With these four tables, the table definitions can be stored in MySQL.
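The relationships between the tables can be sketched in plain Java. The row classes below are hypothetical. Their field names come from the columns referenced by this post's queries (`database_name`, `tableName`, `databaseId`, `tableId`, `columnName`, `columnType`, `expr`). The post does not show the MySQL column types or the timestamp columns, so they are left out. `columnsOf` walks the same database → table → column links that the SQL join in `getTable()` follows. It sorts by column id so the column order is deterministic; the SQL equivalent is `ORDER BY cl.id`.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical in-memory stand-ins for metadata_database, metadata_table and metadata_column.
class MetadataModelSketch {

    static final class DatabaseRow {
        final int id;
        final String databaseName;

        DatabaseRow(int id, String databaseName) {
            this.id = id;
            this.databaseName = databaseName;
        }
    }

    static final class TableRow {
        final int id;
        final String tableName;
        final int databaseId; // references DatabaseRow.id

        TableRow(int id, String tableName, int databaseId) {
            this.id = id;
            this.tableName = tableName;
            this.databaseId = databaseId;
        }
    }

    static final class ColumnRow {
        final int id;
        final String columnName;
        final String columnType;
        final String expr;   // computed-column expression, or null
        final int tableId;   // references TableRow.id

        ColumnRow(int id, String columnName, String columnType, String expr, int tableId) {
            this.id = id;
            this.columnName = columnName;
            this.columnType = columnType;
            this.expr = expr;
            this.tableId = tableId;
        }
    }

    // In-memory equivalent of: db JOIN tb ON db.id = tb.databaseId JOIN cl ON tb.id = cl.tableId
    //                          WHERE database_name = ? AND tableName = ? ORDER BY cl.id
    static List<ColumnRow> columnsOf(List<DatabaseRow> dbs, List<TableRow> tables, List<ColumnRow> columns,
                                     String databaseName, String tableName) {
        List<ColumnRow> result = new ArrayList<>();
        for (DatabaseRow db : dbs) {
            if (!db.databaseName.equals(databaseName)) continue;
            for (TableRow tb : tables) {
                if (tb.databaseId != db.id || !tb.tableName.equals(tableName)) continue;
                for (ColumnRow cl : columns) {
                    if (cl.tableId == tb.id) result.add(cl);
                }
            }
        }
        result.sort((a, b) -> Integer.compare(a.id, b.id));
        return result;
    }
}
```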

2.3 Inserting data into the tables

```sql
INSERT INTO `metadata_database`
VALUES (1, 'default_database', '2021-4-12 15:35:23', '2021-4-12 15:35:26');

INSERT INTO `metadata_table`
VALUES (1, 'sourceTable', '', '', NULL, 1, '2021-4-12 15:31:14', '2021-4-12 15:31:17');
INSERT INTO `metadata_table`
VALUES (2, 'destinationTable', NULL, NULL, NULL, 1, '2021-4-12 17:42:30', '2021-4-12 17:42:33');

INSERT INTO `metadata_table_properties` VALUES (1, 'properties.bootstrap.servers', '192.168.245.11:9092', 1, '2021-4-12 15:58:36', '2021-4-12 15:58:36');
INSERT INTO `metadata_table_properties` VALUES (2, 'csv.allow-comments', 'true', 1, '2021-4-12 15:58:36', '2021-4-12 15:58:36');
INSERT INTO `metadata_table_properties` VALUES (3, 'connector', 'kafka', 1, '2021-4-12 15:58:36', '2021-4-12 15:58:36');
INSERT INTO `metadata_table_properties` VALUES (4, 'csv.ignore-parse-errors', 'true', 1, '2021-4-12 15:58:36', '2021-4-12 15:58:36');
INSERT INTO `metadata_table_properties` VALUES (5, 'format', 'csv', 1, '2021-4-12 15:58:36', '2021-4-12 15:58:36');
INSERT INTO `metadata_table_properties` VALUES (6, 'topic', 'firsttopic', 1, '2021-4-12 15:58:36', '2021-4-12 15:58:36');
INSERT INTO `metadata_table_properties` VALUES (7, 'properties.group.id', 'start_log_group', 1, '2021-4-12 15:58:36', '2021-4-12 15:58:36');
INSERT INTO `metadata_table_properties` VALUES (8, 'connector', 'kafka', 2, '2021-4-12 17:45:29', '2021-4-12 17:45:31');
INSERT INTO `metadata_table_properties` VALUES (9, 'topic', 'secondtopic', 2, '2021-4-12 17:45:33', '2021-4-12 17:45:35');
INSERT INTO `metadata_table_properties` VALUES (10, 'properties.bootstrap.servers', '192.168.245.11:9092', 2, '2021-4-12 17:45:37', '2021-4-12 17:45:39');
INSERT INTO `metadata_table_properties` VALUES (11, 'format', 'json', 2, '2021-4-12 17:45:41', '2021-4-12 17:45:43');

INSERT INTO `metadata_column` VALUES (1, 'region', 'string', NULL, 1, '2021-4-12 15:41:43', '2021-4-12 15:41:43');
INSERT INTO `metadata_column` VALUES (2, 'deviceName', 'string', NULL, 1, '2021-4-12 15:41:43', '2021-4-12 15:41:43');
INSERT INTO `metadata_column` VALUES (3, 'collectValue', 'double', NULL, 1, '2021-4-12 15:42:32', '2021-4-12 15:42:32');
INSERT INTO `metadata_column` VALUES (4, 'ts', 'bigint', NULL, 1, '2021-4-12 15:42:32', '2021-4-12 15:42:32');
INSERT INTO `metadata_column` VALUES (5, 'region', 'string', NULL, 2, '2021-4-12 17:43:11', '2021-4-12 17:43:13');
INSERT INTO `metadata_column` VALUES (6, 'deviceName', 'string', NULL, 2, '2021-4-12 17:43:51', '2021-4-12 17:43:53');
INSERT INTO `metadata_column` VALUES (7, 'collectValue', 'double', NULL, 2, '2021-4-12 17:43:55', '2021-4-12 17:43:57');
INSERT INTO `metadata_column` VALUES (8, 'ts', 'bigint', NULL, 2, '2021-4-12 17:44:00', '2021-4-12 17:44:02');
```
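Each `metadata_table_properties` row is one `'key' = 'value'` entry of a table's `WITH` clause, linked to its table through `tableId`. The JDK-only sketch below groups the rows inserted above by `tableId`. For a single table, this is essentially what the catalog's `getPropertiesFromMysql()` does. `PropertiesSketch` and `PropertyRow` are hypothetical names.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical, JDK-only grouping of metadata_table_properties rows into per-table option maps.
class PropertiesSketch {

    static final class PropertyRow {
        final String key;
        final String value;
        final int tableId;

        PropertyRow(String key, String value, int tableId) {
            this.key = key;
            this.value = value;
            this.tableId = tableId;
        }
    }

    // The (key, value, tableId) columns of the metadata_table_properties INSERTs above.
    static List<PropertyRow> insertedRows() {
        return Arrays.asList(
                new PropertyRow("properties.bootstrap.servers", "192.168.245.11:9092", 1),
                new PropertyRow("csv.allow-comments", "true", 1),
                new PropertyRow("connector", "kafka", 1),
                new PropertyRow("csv.ignore-parse-errors", "true", 1),
                new PropertyRow("format", "csv", 1),
                new PropertyRow("topic", "firsttopic", 1),
                new PropertyRow("properties.group.id", "start_log_group", 1),
                new PropertyRow("connector", "kafka", 2),
                new PropertyRow("topic", "secondtopic", 2),
                new PropertyRow("properties.bootstrap.servers", "192.168.245.11:9092", 2),
                new PropertyRow("format", "json", 2));
    }

    // tableId -> options map; a repeated key overwrites the earlier value, as Map.put does.
    static Map<Integer, Map<String, String>> groupByTable(List<PropertyRow> rows) {
        Map<Integer, Map<String, String>> byTable = new TreeMap<>();
        for (PropertyRow row : rows) {
            byTable.computeIfAbsent(row.tableId, id -> new LinkedHashMap<>()).put(row.key, row.value);
        }
        return byTable;
    }

    public static void main(String[] args) {
        groupByTable(insertedRows()).forEach((tableId, options) -> System.out.println(tableId + " -> " + options));
    }
}
```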

3. Implementing a custom catalog

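When writing a custom Catalog for the first time, it may not be obvious where to start or which methods to override. The existing implementations help here: Flink currently provides HiveCatalog, JdbcCatalog, and PostgresCatalog, and their source code shows which operations we need to write.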

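After reading them, we find that the methods we need to override ourselves are getTable(), databaseExists(), and getFunction() (the complete code in 3.4 also overrides getFactory()). The idea is simply to query MySQL inside getTable() and assemble a CatalogTableImpl from the result. Let's get started.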

3.1 Type mapping

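The type names stored in the database do not map one-to-one onto Flink's type system, so we need a conversion method. Here is a simple implementation that covers a few types; more can be added the same way: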

```java
/**
 * Maps the column type stored in MySQL to a Flink data type.
 *
 * @param mysqlType the type name stored in metadata_column.columnType
 * @return the corresponding Flink DataType
 */
private DataType mappingType(String mysqlType) {
    switch (mysqlType) {
        case MYSQL_TYPE_BIGINT:
            return DataTypes.BIGINT();
        case MYSQL_TYPE_DOUBLE:
            return DataTypes.DOUBLE();
        case MYSQL_TYPE_STRING:
            return DataTypes.STRING();
        case MYSQL_TYPE_TIMESTAMP:
            return DataTypes.TIMESTAMP(3);
        default:
            throw new UnsupportedOperationException("Unsupported column type: " + mysqlType);
    }
}
```
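The method above only knows four type names. One possible way to extend it is sketched below in plain Java. The lookup ignores case and surrounding whitespace, and a parameterized `decimal(p, s)` is parsed with a regular expression. To stay runnable without Flink on the classpath, the sketch returns the Flink SQL type name as a string. In a real `mappingType()`, each branch would return the matching `DataTypes` call, as noted in the comments. The extra names (`int`, `boolean`, `varchar`, `decimal(p, s)`) and `TypeMappingSketch` itself are assumptions about what a metastore might store.

```java
import java.util.Locale;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical extension of mappingType(); returns Flink SQL type names instead of DataType
// objects so that it runs without Flink on the classpath.
class TypeMappingSketch {

    private static final Pattern DECIMAL = Pattern.compile("decimal\\((\\d+)\\s*,\\s*(\\d+)\\)");

    static String toFlinkType(String storedType) {
        if (storedType == null) {
            throw new IllegalArgumentException("column type must not be null");
        }
        String type = storedType.trim().toLowerCase(Locale.ROOT);
        switch (type) {
            case "string":
            case "varchar":
                return "STRING";       // DataTypes.STRING()
            case "int":
                return "INT";          // DataTypes.INT()
            case "bigint":
                return "BIGINT";       // DataTypes.BIGINT()
            case "double":
                return "DOUBLE";       // DataTypes.DOUBLE()
            case "boolean":
                return "BOOLEAN";      // DataTypes.BOOLEAN()
            case "timestamp":
                return "TIMESTAMP(3)"; // DataTypes.TIMESTAMP(3)
            default: {
                Matcher m = DECIMAL.matcher(type);
                if (m.matches()) {
                    return "DECIMAL(" + m.group(1) + ", " + m.group(2) + ")"; // DataTypes.DECIMAL(p, s)
                }
                throw new UnsupportedOperationException("Unsupported column type: " + storedType);
            }
        }
    }
}
```

Failing fast on unknown names keeps a typo in the metastore from turning silently into a wrong column type.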

3.2 Writing getTable()

```java
@Override
public CatalogBaseTable getTable(ObjectPath tablePath) throws TableNotExistException, CatalogException {
    TableSchema.Builder builder = TableSchema.builder();
    String databaseName = tablePath.getDatabaseName();
    String tableName = tablePath.getObjectName();
    String pk = "";
    String watermarkRowTimeAttribute = "";
    String watermarkExpression = "";
    int columnCount = 0;
    // ORDER BY cl.id keeps the columns in their stored order; without it the row order is not guaranteed
    String sql = "select tb.id, db.database_name, tb.tableName, tb.primaryKey, tb.watermarkRowTimeAttribute, "
            + "tb.watermarkExpression, cl.columnName, cl.columnType, cl.expr "
            + "FROM metadata_database db JOIN metadata_table tb ON db.id = tb.databaseId "
            + "JOIN metadata_column cl ON tb.id = cl.tableId "
            + "WHERE database_name = ? AND tableName = ? ORDER BY cl.id";
    try (PreparedStatement ps = connection.prepareStatement(sql)) {
        ps.setString(1, databaseName);
        ps.setString(2, tableName);
        try (ResultSet rs = ps.executeQuery()) {
            while (rs.next()) {
                // table-level fields repeat on every joined row, so read them from the first one
                // (a counter instead of rs.isFirst(), which is optional for forward-only result sets)
                if (columnCount == 0) {
                    pk = rs.getString("primaryKey");
                    watermarkRowTimeAttribute = rs.getString("watermarkRowTimeAttribute");
                    watermarkExpression = rs.getString("watermarkExpression");
                }
                columnCount++;
                String columnName = rs.getString("columnName");
                String columnType = rs.getString("columnType");
                String expr = rs.getString("expr");
                if (expr == null || "".equals(expr)) {
                    builder.field(columnName, mappingType(columnType));
                } else {
                    // computed column, e.g. ts AS TO_TIMESTAMP(...)
                    builder.field(columnName, mappingType(columnType), expr);
                }
            }
        }
    } catch (SQLException e) {
        throw new CatalogException("Failed to get table " + tablePath.getFullName(), e);
    }
    // no rows: the table is not in the metastore, so report it instead of returning an empty schema
    if (columnCount == 0) {
        throw new TableNotExistException(getName(), tablePath);
    }
    // primary key columns are stored as a ';'-separated list
    if (pk != null && !"".equals(pk)) {
        builder.primaryKey(pk.split(";"));
    }
    // watermark
    if (watermarkExpression != null && !"".equals(watermarkExpression)) {
        builder.watermark(watermarkRowTimeAttribute, watermarkExpression, DataTypes.TIMESTAMP(3));
    }
    TableSchema schema = builder.build();
    return new CatalogTableImpl(schema, getPropertiesFromMysql(databaseName, tableName), "");
}
```
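The loop in `getTable()` relies on a property of the joined query. Each row describes one column, while the table-level fields (`primaryKey`, `watermarkRowTimeAttribute`, `watermarkExpression`) repeat on every row, so they only need to be read once. The plain-Java sketch below replays that folding over an in-memory list of rows, with no Flink or JDBC involved. It covers the `;`-separated primary key and the rule that no rows means no table. `SchemaAssemblySketch`, `JoinedRow`, and `AssembledSchema` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

// Hypothetical, JDK-only replay of getTable()'s row loop; JoinedRow stands for one row
// of the database/table/column join.
class SchemaAssemblySketch {

    static final class JoinedRow {
        final String primaryKey;    // table-level, repeated on every row
        final String rowtime;       // table-level: watermarkRowTimeAttribute
        final String watermarkExpr; // table-level: watermarkExpression
        final String columnName;
        final String columnType;
        final String expr;          // non-empty for a computed column

        JoinedRow(String primaryKey, String rowtime, String watermarkExpr,
                  String columnName, String columnType, String expr) {
            this.primaryKey = primaryKey;
            this.rowtime = rowtime;
            this.watermarkExpr = watermarkExpr;
            this.columnName = columnName;
            this.columnType = columnType;
            this.expr = expr;
        }
    }

    static final class AssembledSchema {
        final List<String> columns = new ArrayList<>();
        List<String> primaryKey = Collections.emptyList();
        String watermark; // null when no watermark is stored
    }

    static AssembledSchema assemble(List<JoinedRow> rows) {
        if (rows.isEmpty()) {
            // a catalog would report this case as TableNotExistException
            throw new IllegalStateException("no columns found: the table does not exist");
        }
        AssembledSchema schema = new AssembledSchema();
        for (JoinedRow row : rows) {
            boolean computed = row.expr != null && !row.expr.isEmpty();
            schema.columns.add(computed ? row.columnName + " AS " + row.expr : row.columnName + " " + row.columnType);
        }
        JoinedRow first = rows.get(0); // table-level fields are identical on every row
        if (first.primaryKey != null && !first.primaryKey.isEmpty()) {
            schema.primaryKey = Arrays.asList(first.primaryKey.split(";"));
        }
        if (first.watermarkExpr != null && !first.watermarkExpr.isEmpty()) {
            schema.watermark = first.rowtime + " AS " + first.watermarkExpr;
        }
        return schema;
    }
}
```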

3.3 Writing databaseExists()

```java
@Override
public boolean databaseExists(String databaseName) throws CatalogException {
    String sql = "select count(*) from metadata_database where database_name=?";
    try (PreparedStatement ps = connection.prepareStatement(sql)) {
        ps.setString(1, databaseName);
        try (ResultSet rs = ps.executeQuery()) {
            // COUNT(*) always returns exactly one row
            return rs.next() && rs.getInt(1) > 0;
        }
    } catch (SQLException e) {
        // surface the failure instead of silently reporting "does not exist"
        throw new CatalogException("Failed to check whether database " + databaseName + " exists", e);
    }
}
```

3.4 Complete code

```java
import org.apache.flink.table.api.DataTypes;
import org.apache.flink.table.api.TableSchema;
import org.apache.flink.table.catalog.AbstractCatalog;
import org.apache.flink.table.catalog.CatalogBaseTable;
import org.apache.flink.table.catalog.CatalogDatabase;
import org.apache.flink.table.catalog.CatalogFunction;
import org.apache.flink.table.catalog.CatalogPartition;
import org.apache.flink.table.catalog.CatalogPartitionSpec;
import org.apache.flink.table.catalog.CatalogTableImpl;
import org.apache.flink.table.catalog.ObjectPath;
import org.apache.flink.table.catalog.exceptions.*;
import org.apache.flink.table.catalog.stats.CatalogColumnStatistics;
import org.apache.flink.table.catalog.stats.CatalogTableStatistics;
import org.apache.flink.table.expressions.Expression;
import org.apache.flink.table.factories.Factory;
import org.apache.flink.table.types.DataType;

import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.PreparedStatement;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Optional;

public class MysqlCatalog extends AbstractCatalog {

    // Connector/J 5.x driver class; Connector/J 8.x uses com.mysql.cj.jdbc.Driver
    public static final String MYSQL_CLASS = "com.mysql.jdbc.Driver";
    public static final String MYSQL_TYPE_STRING = "string";
    public static final String MYSQL_TYPE_DOUBLE = "double";
    public static final String MYSQL_TYPE_TIMESTAMP = "timestamp";
    public static final String MYSQL_TYPE_BIGINT = "bigint";

    private Connection connection;

    public MysqlCatalog(String catalogName, String defaultDatabase, String username, String pwd, String connectUrl) {
        super(catalogName, defaultDatabase);
        try {
            Class.forName(MYSQL_CLASS);
            this.connection = DriverManager.getConnection(connectUrl, username, pwd);
        } catch (Exception e) {
            throw new RuntimeException(e);
        }
    }

    @Override
    public void open() throws CatalogException {}

    @Override
    public void close() throws CatalogException {
        // release the JDBC connection opened in the constructor
        try {
            if (connection != null) {
                connection.close();
            }
        } catch (SQLException e) {
            throw new CatalogException("Failed to close the MySQL connection", e);
        }
    }

    // Listing methods are not implemented in this example; empty lists are safer than null.
    @Override
    public List<String> listDatabases() throws CatalogException {
        return Collections.emptyList();
    }

    @Override
    public CatalogDatabase getDatabase(String databaseName) throws DatabaseNotExistException, CatalogException {
        return null;
    }

    @Override
    public List<String> listTables(String databaseName) throws DatabaseNotExistException, CatalogException {
        return Collections.emptyList();
    }

    @Override
    public List<String> listViews(String databaseName) throws DatabaseNotExistException, CatalogException {
        return Collections.emptyList();
    }

    @Override
    public CatalogBaseTable getTable(ObjectPath tablePath) throws TableNotExistException, CatalogException {
        TableSchema.Builder builder = TableSchema.builder();
        String databaseName = tablePath.getDatabaseName();
        String tableName = tablePath.getObjectName();
        String pk = "";
        String watermarkRowTimeAttribute = "";
        String watermarkExpression = "";
        int columnCount = 0;
        // ORDER BY cl.id keeps the columns in their stored order
        String sql = "select tb.id, db.database_name, tb.tableName, tb.primaryKey, tb.watermarkRowTimeAttribute, "
                + "tb.watermarkExpression, cl.columnName, cl.columnType, cl.expr "
                + "FROM metadata_database db JOIN metadata_table tb ON db.id = tb.databaseId "
                + "JOIN metadata_column cl ON tb.id = cl.tableId "
                + "WHERE database_name = ? AND tableName = ? ORDER BY cl.id";
        try (PreparedStatement ps = connection.prepareStatement(sql)) {
            ps.setString(1, databaseName);
            ps.setString(2, tableName);
            try (ResultSet rs = ps.executeQuery()) {
                while (rs.next()) {
                    // table-level fields repeat on every joined row; read them from the first one
                    if (columnCount == 0) {
                        pk = rs.getString("primaryKey");
                        watermarkRowTimeAttribute = rs.getString("watermarkRowTimeAttribute");
                        watermarkExpression = rs.getString("watermarkExpression");
                    }
                    columnCount++;
                    String columnName = rs.getString("columnName");
                    String columnType = rs.getString("columnType");
                    String expr = rs.getString("expr");
                    if (expr == null || "".equals(expr)) {
                        builder.field(columnName, mappingType(columnType));
                    } else {
                        builder.field(columnName, mappingType(columnType), expr); // computed column
                    }
                }
            }
        } catch (SQLException e) {
            throw new CatalogException("Failed to get table " + tablePath.getFullName(), e);
        }
        if (columnCount == 0) {
            throw new TableNotExistException(getName(), tablePath);
        }
        // primary key columns are stored as a ';'-separated list
        if (pk != null && !"".equals(pk)) {
            builder.primaryKey(pk.split(";"));
        }
        // watermark
        if (watermarkExpression != null && !"".equals(watermarkExpression)) {
            builder.watermark(watermarkRowTimeAttribute, watermarkExpression, DataTypes.TIMESTAMP(3));
        }
        TableSchema schema = builder.build();
        return new CatalogTableImpl(schema, getPropertiesFromMysql(databaseName, tableName), "");
    }

    @Override
    public boolean tableExists(ObjectPath tablePath) throws CatalogException {
        return false;
    }

    @Override
    public void dropTable(ObjectPath tablePath, boolean ignoreIfNotExists) throws TableNotExistException, CatalogException {
    }

    @Override
    public void renameTable(ObjectPath tablePath, String newTableName, boolean ignoreIfNotExists) throws TableNotExistException, TableAlreadyExistException, CatalogException {
    }

    @Override
    public void createTable(ObjectPath tablePath, CatalogBaseTable table, boolean ignoreIfExists) throws TableAlreadyExistException, DatabaseNotExistException, CatalogException {
    }

    @Override
    public void alterTable(ObjectPath tablePath, CatalogBaseTable newTable, boolean ignoreIfNotExists) throws TableNotExistException, CatalogException {
    }

    @Override
    public List<CatalogPartitionSpec> listPartitions(ObjectPath tablePath) throws TableNotExistException, TableNotPartitionedException, CatalogException {
        return Collections.emptyList();
    }

    @Override
    public List<CatalogPartitionSpec> listPartitions(ObjectPath tablePath, CatalogPartitionSpec partitionSpec) throws TableNotExistException, TableNotPartitionedException, CatalogException {
        return Collections.emptyList();
    }

    @Override
    public List<CatalogPartitionSpec> listPartitionsByFilter(ObjectPath tablePath, List<Expression> filters) throws TableNotExistException, TableNotPartitionedException, CatalogException {
        return Collections.emptyList();
    }

    @Override
    public CatalogPartition getPartition(ObjectPath tablePath, CatalogPartitionSpec partitionSpec) throws PartitionNotExistException, CatalogException {
        return null;
    }

    @Override
    public boolean partitionExists(ObjectPath tablePath, CatalogPartitionSpec partitionSpec) throws CatalogException {
        return false;
    }

    @Override
    public void createPartition(ObjectPath tablePath, CatalogPartitionSpec partitionSpec, CatalogPartition partition, boolean ignoreIfExists) throws TableNotExistException, TableNotPartitionedException, PartitionSpecInvalidException, PartitionAlreadyExistsException, CatalogException {
    }

    @Override
    public void dropPartition(ObjectPath tablePath, CatalogPartitionSpec partitionSpec, boolean ignoreIfNotExists) throws PartitionNotExistException, CatalogException {
    }

    @Override
    public void alterPartition(ObjectPath tablePath, CatalogPartitionSpec partitionSpec, CatalogPartition newPartition, boolean ignoreIfNotExists) throws PartitionNotExistException, CatalogException {
    }

    @Override
    public List<String> listFunctions(String dbName) throws DatabaseNotExistException, CatalogException {
        return Collections.emptyList();
    }

    @Override
    public CatalogFunction getFunction(ObjectPath functionPath) throws FunctionNotExistException, CatalogException {
        throw new FunctionNotExistException(getName(), functionPath);
    }

    @Override
    public boolean functionExists(ObjectPath functionPath) throws CatalogException {
        return false;
    }

    @Override
    public void createFunction(ObjectPath functionPath, CatalogFunction function, boolean ignoreIfExists) throws FunctionAlreadyExistException, DatabaseNotExistException, CatalogException {
    }

    @Override
    public void alterFunction(ObjectPath functionPath, CatalogFunction newFunction, boolean ignoreIfNotExists) throws FunctionNotExistException, CatalogException {
    }

    @Override
    public void dropFunction(ObjectPath functionPath, boolean ignoreIfNotExists) throws FunctionNotExistException, CatalogException {
    }

    // No statistics are stored; UNKNOWN is a safer answer than null for the planner.
    @Override
    public CatalogTableStatistics getTableStatistics(ObjectPath tablePath) throws TableNotExistException, CatalogException {
        return CatalogTableStatistics.UNKNOWN;
    }

    @Override
    public CatalogColumnStatistics getTableColumnStatistics(ObjectPath tablePath) throws TableNotExistException, CatalogException {
        return CatalogColumnStatistics.UNKNOWN;
    }

    @Override
    public CatalogTableStatistics getPartitionStatistics(ObjectPath tablePath, CatalogPartitionSpec partitionSpec) throws PartitionNotExistException, CatalogException {
        return CatalogTableStatistics.UNKNOWN;
    }

    @Override
    public CatalogColumnStatistics getPartitionColumnStatistics(ObjectPath tablePath, CatalogPartitionSpec partitionSpec) throws PartitionNotExistException, CatalogException {
        return CatalogColumnStatistics.UNKNOWN;
    }

    @Override
    public void alterTableStatistics(ObjectPath tablePath, CatalogTableStatistics tableStatistics, boolean ignoreIfNotExists) throws TableNotExistException, CatalogException {
    }

    @Override
    public void alterTableColumnStatistics(ObjectPath tablePath, CatalogColumnStatistics columnStatistics, boolean ignoreIfNotExists) throws TableNotExistException, CatalogException, TablePartitionedException {
    }

    @Override
    public void alterPartitionStatistics(ObjectPath tablePath, CatalogPartitionSpec partitionSpec, CatalogTableStatistics partitionStatistics, boolean ignoreIfNotExists) throws PartitionNotExistException, CatalogException {
    }

    @Override
    public void alterPartitionColumnStatistics(ObjectPath tablePath, CatalogPartitionSpec partitionSpec, CatalogColumnStatistics columnStatistics, boolean ignoreIfNotExists) throws PartitionNotExistException, CatalogException {
    }

    @Override
    public boolean databaseExists(String databaseName) throws CatalogException {
        String sql = "select count(*) from metadata_database where database_name=?";
        try (PreparedStatement ps = connection.prepareStatement(sql)) {
            ps.setString(1, databaseName);
            try (ResultSet rs = ps.executeQuery()) {
                return rs.next() && rs.getInt(1) > 0; // COUNT(*) returns exactly one row
            }
        } catch (SQLException e) {
            throw new CatalogException("Failed to check whether database " + databaseName + " exists", e);
        }
    }

    @Override
    public void createDatabase(String name, CatalogDatabase database, boolean ignoreIfExists) throws DatabaseAlreadyExistException, CatalogException {
    }

    @Override
    public void dropDatabase(String name, boolean ignoreIfNotExists, boolean cascade) throws DatabaseNotExistException, DatabaseNotEmptyException, CatalogException {
    }

    @Override
    public void alterDatabase(String name, CatalogDatabase newDatabase, boolean ignoreIfNotExists) throws DatabaseNotExistException, CatalogException {
    }

    /** Reads the table's WITH (...) options from metadata_table_properties. */
    private Map<String, String> getPropertiesFromMysql(String databaseName, String tableName) {
        Map<String, String> map = new HashMap<>();
        String sql = "select tp.`key`, tp.`value` from metadata_database db "
                + "join metadata_table tb on db.id = tb.databaseId "
                + "join metadata_table_properties tp on tp.tableId = tb.id "
                + "where database_name = ? and tableName = ?";
        try (PreparedStatement ps = connection.prepareStatement(sql)) {
            ps.setString(1, databaseName);
            ps.setString(2, tableName);
            try (ResultSet rs = ps.executeQuery()) {
                while (rs.next()) {
                    map.put(rs.getString("key"), rs.getString("value"));
                }
            }
        } catch (SQLException e) {
            throw new CatalogException("Failed to get the properties of table " + databaseName + "." + tableName, e);
        }
        return map;
    }

    /**
     * Maps the column type stored in MySQL to a Flink data type.
     *
     * @param mysqlType the type name stored in metadata_column.columnType
     * @return the corresponding Flink DataType
     */
    private DataType mappingType(String mysqlType) {
        switch (mysqlType) {
            case MYSQL_TYPE_BIGINT:
                return DataTypes.BIGINT();
            case MYSQL_TYPE_DOUBLE:
                return DataTypes.DOUBLE();
            case MYSQL_TYPE_STRING:
                return DataTypes.STRING();
            case MYSQL_TYPE_TIMESTAMP:
                return DataTypes.TIMESTAMP(3);
            default:
                throw new UnsupportedOperationException("Unsupported column type: " + mysqlType);
        }
    }

    /**
     * Called by FactoryUtil. If it returns Optional.empty(), the table factory is discovered
     * through Java SPI from the 'connector' option. AbstractJdbcCatalog returns the JDBC
     * dynamic table factory by default, which would be wrong for these Kafka tables.
     *
     * @return Optional.empty(), so that the factory is chosen by the 'connector' option
     */
    @Override
    public Optional<Factory> getFactory() {
        return Optional.empty();
    }
}
```

4. Using the custom Catalog

That completes the Catalog definition. To use it in code, first register it with the table environment's registerCatalog() method, then make it the current catalog with useCatalog().

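```scala
import org.apache.flink.streaming.api.scala.StreamExecutionEnvironment
import org.apache.flink.table.api.EnvironmentSettings
import org.apache.flink.table.api.bridge.scala.StreamTableEnvironment

object JdbcCatalogTest {

  def main(args: Array[String]): Unit = {
    val env: StreamExecutionEnvironment = StreamExecutionEnvironment.getExecutionEnvironment
    // Blink planner in streaming mode (useBlinkPlanner() is deprecated in newer Flink versions)
    val settings: EnvironmentSettings = EnvironmentSettings.newInstance().inStreamingMode().useBlinkPlanner().build()
    val tableEnv: StreamTableEnvironment = StreamTableEnvironment.create(env, settings)

    val catalogName = "mysql-catalog"
    val defaultDatabase = "default_database"
    val username = "root"
    val password = "root"
    val jdbcUrl = "jdbc:mysql://localhost:3306/flink_metastore?useUnicode=true&characterEncoding=utf8&serverTimezone=UTC"

    // register the custom MysqlCatalog and make it the current catalog
    val catalog: MysqlCatalog = new MysqlCatalog(catalogName, defaultDatabase, username, password, jdbcUrl)
    tableEnv.registerCatalog(catalogName, catalog)
    tableEnv.useCatalog(catalogName)

    // sourceTable and destinationTable are resolved through MysqlCatalog.getTable()
    val insertSql: String =
      """
        |insert into destinationTable
        |select * from sourceTable where deviceName <> '' and `region` <> ''
      """.stripMargin
    // executeSql submits the INSERT job and returns without waiting for it to finish
    tableEnv.executeSql(insertSql)
  }
}
```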

The code is much cleaner this way: the CREATE TABLE statements no longer have to live in the application code.

5. Troubleshooting

When something goes wrong, step through the code in the debugger and inspect the method call stack to track down the cause.