2021-07-22 DGL graph neural network open-source project: release notes (translation)

This is a new major release with various system optimizations, new features and enhancements, new models, and bug fixes.

Important: installation from PyPI now differs from previous releases.

Contents

- Important: Change on PyPI Installation

- New Tutorials for Multi-GPU and Distributed Training

- Improved CPU Message Passing Kernel

- PyTorch Lightning Compatibility

- New Models

- New Datasets

- New Functionalities

- Performance Optimizations

- Other Enhancements

- Bug Fixes

Important: Change on PyPI Installation

DGL pip wheels are no longer shipped on PyPI. Use the following commands to install DGL with pip:

- CPU: pip install dgl -f https://data.dgl.ai/wheels/repo.html

- CUDA: pip install dgl-cuXX -f https://data.dgl.ai/wheels/repo.html

- CPU nightly builds: pip install --pre dgl -f https://data.dgl.ai/wheels-test/repo.html

- CUDA nightly builds: pip install --pre dgl-cuXX -f https://data.dgl.ai/wheels-test/repo.html

This does not impact conda installation.
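For scripted environments, the four pip commands can be assembled from two choices: CPU or CUDA, and stable or nightly. The helper below is a hypothetical sketch, not part of DGL. It assumes that the XX in dgl-cuXX is the CUDA version with the dot removed (for example 11.1 becomes cu111); check the wheel index for the builds that actually exist.

```python
from typing import Optional


def dgl_install_command(cuda: Optional[str] = None, nightly: bool = False) -> str:
    """Build a pip command for the DGL wheel index (hypothetical helper).

    cuda: CUDA version such as "11.1", or None for the CPU build.
    Assumption: the "XX" in dgl-cuXX is the CUDA version without the dot.
    """
    package = "dgl" if cuda is None else "dgl-cu" + cuda.replace(".", "")
    index = "wheels-test" if nightly else "wheels"
    parts = ["pip", "install"]
    if nightly:
        # Nightly builds are pre-releases, so pip needs --pre to pick them up.
        parts.append("--pre")
    parts += [package, "-f", f"https://data.dgl.ai/{index}/repo.html"]
    return " ".join(parts)


print(dgl_install_command())
print(dgl_install_command(cuda="11.1", nightly=True))
```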

GPU-based Neighbor Sampling

DGL now supports uniform neighbor sampling and message flow graph (MFG) conversion on GPU, contributed by @nv-dlasalle from NVIDIA. A GraphSAGE experiment on the ogbn-products graph gets a >10x speedup (from 113s to 11s per epoch) on a g3.16x instance. The following docs have been updated accordingly:

- A new user guide chapter, Using GPU for Neighborhood Sampling, about when and how to use this new feature.

- The API doc of NodeDataLoader.
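To make the terminology concrete, here is a minimal pure-Python sketch of uniform neighbor sampling over several layers. It is not DGL's GPU implementation; the function names and the dictionary-based graph are illustrative assumptions. Each layer keeps at most a fixed number (the fanout) of in-neighbors per node, chosen uniformly without replacement, and the per-layer edge lists play the role of the message flow graphs.

```python
import random


def sample_neighbors(in_neighbors, seeds, fanout, rng):
    """For each seed, keep at most `fanout` in-neighbors, chosen uniformly without replacement."""
    sampled_edges = []
    for dst in seeds:
        candidates = in_neighbors.get(dst, [])
        if len(candidates) > fanout:
            candidates = rng.sample(candidates, fanout)
        sampled_edges.extend((src, dst) for src in candidates)
    return sampled_edges


def sample_blocks(in_neighbors, seeds, fanouts, rng):
    """Sample one edge list per layer, working from the output layer back to the input layer."""
    blocks = []
    frontier = list(seeds)
    for fanout in reversed(fanouts):
        edges = sample_neighbors(in_neighbors, frontier, fanout, rng)
        blocks.insert(0, edges)
        # The next (earlier) layer must produce features for the current
        # frontier and for every sampled source node.
        frontier = sorted(set(frontier) | {src for src, _ in edges})
    return blocks


# Toy graph: node -> list of in-neighbors (hypothetical data).
graph = {0: [1, 2, 3], 1: [2, 3], 2: [0], 3: []}
for layer, edges in enumerate(sample_blocks(graph, [0], [2, 2], random.Random(0))):
    print("layer", layer, "edges", edges)
```

Running this work on the GPU avoids a CPU-side sampling step before each minibatch, which is where the reported speedup comes from.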

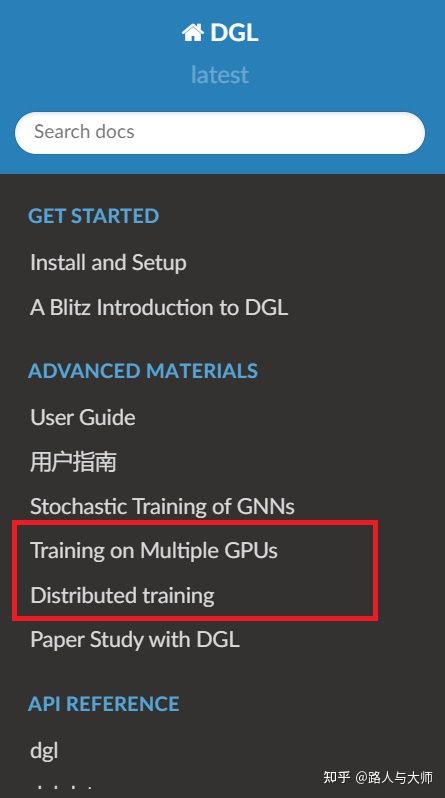

New Tutorials for Multi-GPU and Distributed Training

The release brings two new tutorials about multi-GPU training, one for node classification and one for graph classification. There is also a new tutorial about distributed training across multiple machines. All of them are available at https://docs.dgl.ai/.

Improved CPU Message Passing Kernel

The update includes a new CPU implementation of the core GSpMM kernel for GNN message passing, thanks to @sanchit-misra from Intel. The new kernel performs tiling on the sparse CSR matrix and leverages Intel's LibXSMM for kernel generation, which gives up to a 4.4x speedup over the old kernel. Please read their paper, https://arxiv.org/abs/2104.06700, for details.
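The idea of tiling a CSR matrix can be illustrated with a small pure-Python sketch. This is not the LibXSMM-based kernel and the function names are hypothetical; it only shows that processing one block of columns at a time yields the same product while restricting each pass to a contiguous slice of the dense feature matrix.

```python
def spmm_csr(indptr, indices, data, dense):
    """Reference SpMM: out[i] = sum_j A[i, j] * dense[j] for a CSR matrix A."""
    n_feat = len(dense[0])
    out = [[0.0] * n_feat for _ in range(len(indptr) - 1)]
    for row in range(len(indptr) - 1):
        for k in range(indptr[row], indptr[row + 1]):
            col, val = indices[k], data[k]
            for f in range(n_feat):
                out[row][f] += val * dense[col][f]
    return out


def spmm_csr_tiled(indptr, indices, data, dense, tile_cols):
    """Same product, computed one block of `tile_cols` columns at a time.

    Each pass only reads rows [start, stop) of `dense`, which is the
    cache-locality benefit that tiling aims for.
    """
    n_rows, n_cols, n_feat = len(indptr) - 1, len(dense), len(dense[0])
    out = [[0.0] * n_feat for _ in range(n_rows)]
    for start in range(0, n_cols, tile_cols):
        stop = start + tile_cols
        for row in range(n_rows):
            for k in range(indptr[row], indptr[row + 1]):
                col = indices[k]
                if start <= col < stop:
                    for f in range(n_feat):
                        out[row][f] += data[k] * dense[col][f]
    return out
```

An optimized kernel would partition the CSR structure per tile up front rather than filtering the nonzeros on every pass; the filter here just keeps the sketch short.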

More Efficient NodeEmbedding for Multi-GPU and Distributed Training

DGL now utilizes NCCL to synchronize the gradients of sparse node embeddings (dgl.nn.NodeEmbedding) during training (credits to @nv-dlasalle from NVIDIA). The NCCL feature is available in both dgl.optim.SparseAdam and dgl.optim.SparseAdagrad. Experiments show a 20% speedup (from 47.2s to 39.5s per epoch) on a g4dn.12xlarge (4 T4 GPU) instance for training RGCN on the ogbn-mag graph. The optimization is turned on automatically when NCCL backend support is detected.

The sparse optimizers for dgl.distributed.DistEmbedding now use a synchronized gradient update strategy. We add a new optimizer, dgl.distributed.optim.SparseAdam. dgl.distributed.SparseAdagrad has been moved to dgl.distributed.optim.SparseAdagrad.
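What makes these optimizers "sparse" is that a minibatch touches only a few embedding rows, so only those rows and their optimizer state are updated (and, across GPUs, only those gradients need to be exchanged). The sketch below is a generic textbook sparse Adagrad step in pure Python, with hypothetical names; it is not DGL's implementation, and details such as how duplicate rows are merged are assumptions.

```python
import math


def sparse_adagrad_step(weight, state, row_ids, row_grads, lr=0.01, eps=1e-10):
    """Update only the embedding rows that received a gradient in this step."""
    # Merge duplicates: the same node can appear several times in a minibatch.
    # Assumption: duplicate gradients are summed.
    merged = {}
    for rid, grad in zip(row_ids, row_grads):
        acc = merged.setdefault(rid, [0.0] * len(grad))
        for d, g in enumerate(grad):
            acc[d] += g
    for rid, grad in merged.items():
        for d, g in enumerate(grad):
            state[rid][d] += g * g  # accumulated squared gradient
            weight[rid][d] -= lr * g / (math.sqrt(state[rid][d]) + eps)


weight = [[1.0, 1.0] for _ in range(3)]
state = [[0.0, 0.0] for _ in range(3)]
sparse_adagrad_step(weight, state, row_ids=[0, 0], row_grads=[[1.0, 0.0], [1.0, 0.0]])
print(weight)  # rows 1 and 2 are untouched
```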

Sparse-sparse Matrix Multiplication and Addition Support

We add two new APIs, dgl.adj_product_graph and dgl.adj_sum_graph, that perform sparse-sparse matrix multiplication and addition, respectively, as graph operations. They run on both CPU and GPU with autograd support. An example usage of these functions is Graph Transformer Networks.
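Treating a weighted graph as a sparse adjacency matrix, the two operations have a simple meaning: the product connects u to w whenever some v links them, and the sum takes the union of the edges while adding the weights of shared ones. The following pure-Python sketch illustrates that semantics only; the function names and the edge-dictionary representation are assumptions and do not mirror the DGL API.

```python
from collections import defaultdict


def adj_product(edges_a, edges_b):
    """Edges of A @ B: weight(u, w) = sum over v of A[u, v] * B[v, w]."""
    b_by_src = defaultdict(list)
    for (v, w), weight in edges_b.items():
        b_by_src[v].append((w, weight))
    out = defaultdict(float)
    for (u, v), weight_a in edges_a.items():
        for w, weight_b in b_by_src[v]:
            out[(u, w)] += weight_a * weight_b
    return dict(out)


def adj_sum(edges_a, edges_b):
    """Edges of A + B: union of both edge sets, with shared edges' weights added."""
    out = defaultdict(float)
    for edges in (edges_a, edges_b):
        for edge, weight in edges.items():
            out[edge] += weight
    return dict(out)


a = {(0, 1): 2.0, (0, 2): 1.0}
b = {(1, 3): 3.0, (2, 3): 4.0}
print(adj_product(a, b))  # two 2-hop paths from 0 to 3 collapse into one edge
print(adj_sum(a, {(0, 1): 1.0, (1, 0): 5.0}))
```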

PyTorch Lightning Compatibility

DGL is now compatible with PyTorch Lightning for single-GPU training or training with DistributedDataParallel. See these examples of training GraphSAGE with PyTorch Lightning:

- Node classification: https://github.com/dmlc/dgl/blob/master/examples/pytorch/graphsage/train_lightning.py

- Unsupervised learning: https://github.com/dmlc/dgl/blob/master/examples/pytorch/graphsage/train_lightning_unsupervised.py

We thank @justusschock for making DGL DataLoaders compatible with PyTorch Lightning (#2886).

New Models

A batch of 19 new model examples has been added to DGL in 0.7, bringing the total number to 90+. Users can now use the search bar on https://www.dgl.ai/ to quickly locate examples by tagged keywords. Below is the list of new models added.

- Interaction Networks for Learning about Objects, Relations, and Physics (https://arxiv.org/abs/1612.00222.pdf) (#2794, @Ericcsr)

- Multi-GPU RGAT for OGB-LSC Node Classification (#2835, @maqy1995)

- Network Embedding with Completely-imbalanced Labels (https://ieeexplore.ieee.org/document/8979355) (#2813, @Fizyhsp)

- Temporal Graph Networks improved (#2860, @Ericcsr)

- Diffusion Convolutional Recurrent Neural Network (https://arxiv.org/abs/1707.01926) (#2858, @Ericcsr)

- Gated Attention Networks for Learning on Large and Spatiotemporal Graphs (https://arxiv.org/abs/1803.07294) (#2858, @Ericcsr)

- DeeperGCN (https://arxiv.org/abs/2006.07739) (#2831, @xnuohz)

- Deep Graph Contrastive Representation Learning (https://arxiv.org/abs/2006.04131) (#2828, #3009, @hengruizhang98)

- Graph Neural Networks Inspired by Classical Iterative Algorithms (https://arxiv.org/abs/2103.06064) (#2770, @ffttyy)

- GraphSAINT (#2792, @lt610)

- Label Propagation (#2852, @xnuohz)

- Combining Label Propagation and Simple Models Out-performs Graph Neural Networks (https://arxiv.org/abs/2010.13993) (#2852, @xnuohz)

- GCNII (#2874, @kyawlin)

- Latent Dirichlet Allocation on GPU (#2883, @yifeim)

- A Heterogeneous Information Network based Cross Domain Insurance Recommendation System for Cold Start Users (#2864, @KounianhuaDu)

- Five heterogeneous graph models: HetGNN/GTN/HAN/NSHE/MAGNN (#2993, @Theheavens)

- New OGB-arxiv and OGB-proteins results (#3018, @espylapiza)

- Heterogeneous Graph Attention Networks with minibatch sampling (#3005, @maqy1995)

- Learning Hierarchical Graph Neural Networks for Image Clustering (https://arxiv.org/abs/2107.01319) (#3087, #3105)

New Datasets

- Two fake news datasets, Gossipcop and Politifact. (#2876, #2939, @kayzliu)

- Two fraud datasets extracted from Yelp and Amazon. See https://arxiv.org/pdf/2008.08692.pdf and https://ponderly.github.io/pub/PCGNN_WWW2021.pdf for details. (#2876, #2908, @kayzliu)

New Functionalities

- KD-Tree, brute-force family, and NN-descent implementations of KNN (#2767, #2892, #2941, @lygztq)

- BLAS-based KNN implementation on GPU (#2868, @milesial)

- A new API dgl.sample_neighbors_biased for biased neighbor sampling, where each node has a tag and each tag has its own (unnormalized) probability (#1665, #2987, @soodoshll). We also provide two helper functions, sort_csr_by_tag and sort_csc_by_tag, that sort the internal storage of a graph by tag to allow this kind of neighbor sampling (#1664, @soodoshll).

- Distributed sparse Adam node embedding optimizer (#2733)

- Heterogeneous graph's multi_update_all now supports user-defined cross-type reducers (#2891, @Secbone)

- Add in_degrees and out_degrees support to dgl.DistGraph (#2918)

- A new API dgl.sampling.node2vec_random_walk for Node2vec random walks (#2992, @Smilexuhc)

- dgl.node_subgraph, dgl.edge_subgraph, dgl.in_subgraph and dgl.out_subgraph all have a relabel_nodes argument to allow graph compaction (i.e. removing the nodes with no edges) (#2929)

- Allow direct slicing of a batched graph without constructing a new data structure (#2349, #2851, #2965)

- Allow setting the distributed node embeddings with NodeEmbedding.all_set_embedding() (#3047)

- Graphs can be created directly from CSR or CSC representations on either CPU or GPU (#3045). See the API doc of dgl.graph for more details.

- A new dgl.reorder API to permute a graph according to RCMK, METIS or a custom strategy (#3063)

- dgl.nn.GraphConv now has a left normalization, which divides the outgoing messages by out-degrees and is equivalent to random-walk normalization (#3114)

- Add a new exclude='self' option to EdgeDataLoader to exclude only the edges sampled in the current minibatch during neighbor sampling when reverse edges are not available (#3122)
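As an illustration of the tag-biased neighbor sampling item in the list above, here is a minimal pure-Python sketch. It is not the dgl.sample_neighbors_biased implementation, and the function name and arguments are hypothetical. Each neighbor is drawn with probability proportional to the bias of its tag; this sketch samples with replacement for brevity.

```python
import random


def sample_neighbors_by_tag(neighbors, tags, tag_bias, fanout, rng):
    """Draw `fanout` neighbors with replacement; P(neighbor) is proportional to tag_bias[tag]."""
    weights = [tag_bias[tags[n]] for n in neighbors]
    if not neighbors or sum(weights) == 0:
        return []
    return rng.choices(neighbors, weights=weights, k=fanout)


# Hypothetical data: neighbor 1 has tag 0, neighbors 2 and 3 have tag 1.
tags = {1: 0, 2: 1, 3: 1}
picked = sample_neighbors_by_tag([1, 2, 3], tags, tag_bias=[0.0, 1.0], fanout=5,
                                 rng=random.Random(0))
print(picked)  # only neighbors with tag 1 can appear
```

Because the bias depends only on the tag, storing each node's neighbors sorted by tag lets a sampler first pick a tag and then pick uniformly within that tag's contiguous range, which is presumably why the sorting helpers are provided.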

Performance Optimizations

- Check if a COO is sorted to avoid sync during forward/backward, and parallelize sorted COO/CSR conversion (#2645, @nv-dlasalle)

- Faster uniform sampling with replacement (#2953)

- Eliminate ctor, dtor and IsNullArray overheads in random walks (#2990, @AjayBrahmakshatriya)

- GatedGCNConv shortcut with one edge type (#2994)

- Hierarchical partitioning in distributed training with 25% speedup (#3000, @soodoshll)

- Save memory usage in node_split and edge_split during partitioning (#3132, @JingchengYu94)
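The benefit of knowing that a COO edge list is already sorted can be shown in a few lines. The sketch below is pure Python with hypothetical function names, not DGL's internal code: a single pass checks whether rows (and columns within each row) are in order, and a row-sorted COO converts to CSR with a count and a prefix sum, with no sorting step.

```python
def is_coo_sorted(rows, cols):
    """Return (row_sorted, col_sorted).

    row_sorted: row indices are non-decreasing.
    col_sorted: additionally, column indices are non-decreasing within each row.
    """
    pairs = range(len(rows) - 1)
    row_sorted = all(rows[i] <= rows[i + 1] for i in pairs)
    col_sorted = row_sorted and all(
        rows[i] != rows[i + 1] or cols[i] <= cols[i + 1] for i in pairs
    )
    return row_sorted, col_sorted


def sorted_coo_to_csr(rows, cols, num_rows):
    """Convert a row-sorted COO to CSR: count entries per row, then prefix-sum."""
    indptr = [0] * (num_rows + 1)
    for r in rows:
        indptr[r + 1] += 1
    for i in range(num_rows):
        indptr[i + 1] += indptr[i]
    # The column array can be reused as-is because the rows are already grouped.
    return indptr, list(cols)


rows, cols = [0, 0, 2], [1, 2, 0]
print(is_coo_sorted(rows, cols))
print(sorted_coo_to_csr(rows, cols, num_rows=3))
```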

Other Enhancements

- Graph partitioning now returns ID mapping from old nodes/edges to new ones (#2857)

- Better error message when idx_list is out of bounds (#2848)

- Kill training jobs on remote machines in distributed training when receiving KeyboardInterrupt (#2881)

- Provide a dgl.multiprocessing namespace for multiprocess training with fork and OpenMP (#2905)

- GAT supports multidimensional input features (#2912)

- Users can now specify graph format for distributed training (#2948)

- CI now runs on Kubernetes (#2957)

- to_heterogeneous(to_homogeneous(hg)) now returns the same hg (#2958)

- remove_nodes and remove_edges now preserve batch information (#3119)

Bug Fixes

- Multiprocessing sampling in distributed training hangs in Python 3.8 (#2315, #2826)

- Use correct NIC for distributed training (#2798, @Tonny-Gu)

- Fix potential TypeError in HGT example (#2830, @zhangtianle)

- Distributed training initialization fails with graphs without node/edge data (#2366, #2838)

- DGL Sparse Optimizer will crash when some DGL NodeEmbedding is not involved in the forward pass (#2856, #2859)

- Fix GATConv shape issues with Residual Connections (#2867, #2921, #2922, #2947, #2962, @xieweiyi, @jxgu1016)

- Moving a graph to GPU will change the default CUDA device (#2895, #2897)

- Remove __len__ method to stop polluting PyCharm outputs (#2902)

- Inconsistency in the typing of node types and edge types returned by load_partition (#2742, @chwan-rice)

- NodeDataLoader and EdgeDataLoader now support DistributedDataParallel with proper shuffling and batching (#2539, #2911)

- Nonuniform sampling with replacement may dereference null pointer (#2942, #2943, @nv-dlasalle)

- Strange behavior of bipartite_from_networkx() (#2808, #2917)

- Make GCMC example compatible with torchtext 0.9+ (#2985, @alexpod1000)

- dgl.to_homogeneous doesn't work correctly on graphs with 0 nodes of a given type (#2870, #3011)

- TU regression datasets throw errors (#2952, #3010)

- RGCN generates nan in PyTorch 1.8 but not in PyTorch 1.7.x (#2760, #3013, @nv-dlasalle)

- Deal with situation where num_layers equals 1 for GraphSAGE (#3066, @Wang-Yu-Qing)

- Lengthen the timeout for distributed node embedding (#2966, #2967, @sojiadeshina)

- Misc fixes in code and documentation (#2844, #2869, #2840, #2879, #2863, #2822, #2907, #2928, #2935, #2960, #2938, #2968, #2961, #2983, #2981, #3017, #3051, #3040, #3064, #3065, #3133, #3139) (@Theheavens, @ab-10, @yunshiuan, @moritzblum, @kayzliu, @universvm, @europeanplaice, etc.)