Exact Feature Distribution Matching for Arbitrary Style Transfer and Domain Generalization

Table of Contents

- abstract
- introduction
- related work
  - Arbitrary style transfer (AST)
  - Domain generalization (DG)
  - Exact histogram matching (EHM)
- Methodology
  - Adaptive instance normalization (AdaIN)
  - Histogram matching (HM)
  - Exact Histogram Matching (EHM)
- EFDM for AST and DG
  - EFDM for AST
  - EFDM for DG
- Experiments
  - Experiments on DG
    - Generalization on category classification.
    - Generalization on instance retrieval.

title: Exact Feature Distribution Matching for Arbitrary Style Transfer and Domain Generalization

An exact feature distribution matching algorithm for arbitrary style transfer (AST) and domain generalization (DG)

abstract

Arbitrary style transfer (AST) and domain generalization (DG) are important yet challenging visual learning tasks, which can be cast as a feature distribution matching problem.

AST and DG can both be cast as feature distribution matching problems.

With the assumption of Gaussian feature distribution, conventional feature distribution matching methods usually match the mean and standard deviation of features.

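Under the Gaussian assumption, conventional feature distribution matching methods usually match only the mean and standard deviation of features.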

In this work, we, for the first time to our best knowledge, propose to perform Exact Feature Distribution Matching (EFDM) by exactly matching the empirical Cumulative Distribution Functions (eCDFs) of image features, which could be implemented by applying the Exact Histogram Matching (EHM) in the image feature space.

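This paper is the first to propose exact feature distribution matching by exactly matching the eCDFs of image features. This can be implemented by applying exact histogram matching in the image feature space.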

Particularly, a fast EHM algorithm, named Sort-Matching, is employed to perform EFDM in a plug-and-play manner with minimal cost.

A fast EHM algorithm, Sort-Matching, performs EFDM in a plug-and-play manner at minimal cost.

Code: https://github.com/YBZh/EFDM;

https://github.com/KaiyangZhou/Dassl.pytorch;

introduction

In arbitrary style transfer (AST) [12, 21], image styles can be interpreted as feature distributions and style transfer can be achieved by cross-distribution feature matching [25, 34].

In AST, image styles can be interpreted as feature distributions, and style transfer can be achieved by cross-distribution feature matching.

By using style transfer techniques to augment training data, one can address domain generalization (DG) tasks [13, 72].

Using style transfer to augment training data is one way to tackle DG tasks.

The most popular method of feature distribution matching is to match feature mean and standard deviation by assuming that features follow Gaussian distribution [21,32,37,41,72].

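The most common approach assumes Gaussian features and matches their mean and standard deviation.

Feature distribution matching that uses only the mean and standard deviation is less accurate.

Real-world feature distributions are complex, so the Gaussian assumption is not enough.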

Motivated by the Glivenko–Cantelli theorem [54], which states that the empirical Cumulative Distribution Function (eCDF) asymptotically converges to the Cumulative Distribution Function when the number of samples approaches infinity, Risser et al. [46] introduce the classical Histogram Matching (HM) [16, 58] method as an auxiliary measurement to minimize the feature distribution divergence.

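As the number of samples tends to infinity, the eCDF converges to the CDF (Glivenko–Cantelli theorem).

Building on this, Risser et al. use classical histogram matching (HM) as an auxiliary measure to minimize the feature distribution divergence.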
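To make the Glivenko–Cantelli statement concrete, here is a small sketch. It draws standard-normal samples (an arbitrary choice of distribution) and measures the largest gap between the eCDF and the true CDF. The gap shrinks as n grows. The function names are ours and do not come from the paper or its code.

```python
import math
import numpy as np

def normal_cdf(t: np.ndarray) -> np.ndarray:
    """CDF of the standard normal distribution, evaluated element-wise."""
    return np.array([0.5 * (1.0 + math.erf(v / math.sqrt(2.0))) for v in t])

def sup_distance(n: int, rng: np.random.Generator) -> float:
    """Kolmogorov distance sup_t |F_n(t) - F(t)| for n standard-normal samples."""
    x = np.sort(rng.standard_normal(n))
    cdf = normal_cdf(x)
    i = np.arange(1, n + 1)
    above = i / n - cdf          # eCDF has just jumped to i/n at the i-th smallest sample
    below = cdf - (i - 1) / n    # eCDF equals (i-1)/n just before that jump
    return float(max(above.max(), below.max()))

rng = np.random.default_rng(0)
dists = {n: sup_distance(n, rng) for n in (10, 100, 1000, 10_000)}
for n, d in dists.items():
    print(f"n={n:>6}  sup|F_n - F| = {d:.4f}")
```

The distance cannot fall below 1/(2n), so small samples always leave a visible gap.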

For features generated by deep models, equivalent feature values are also ineluctable due to their dependency on discrete image pixels and the use of activation functions, e.g., ReLU [42] and ReLU6 [26] (please refer to Fig. 3 for more details). All these facts impede the effectiveness of EFDM via HM.

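Features from deep models inevitably contain equal values, because they depend on discrete image pixels and on activation functions. These ties hamper EFDM via HM.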
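The sketch below shows how activation functions alone create ties. Random normal values stand in for pre-activation features (an assumption; real features are not Gaussian). Applying ReLU or ReLU6 collapses many of them onto exactly the same value. The input scale used for ReLU6 is arbitrary.

```python
import numpy as np

rng = np.random.default_rng(0)
pre_act = rng.standard_normal(10_000)       # stand-in for pre-activation features
relu = np.maximum(pre_act, 0.0)             # ReLU: every negative input becomes exactly 0
relu6 = np.clip(pre_act * 4.0, 0.0, 6.0)    # ReLU6 on scaled inputs: ties at 0 and at 6

def tie_fraction(v: np.ndarray) -> float:
    """Fraction of elements whose value is shared with at least one other element."""
    _, counts = np.unique(v, return_counts=True)
    return counts[counts > 1].sum() / v.size

print(tie_fraction(pre_act), tie_fraction(relu), tie_fraction(relu6))
```

About half of the ReLU outputs share the single value 0. ReLU6 adds a second block of ties at its upper bound.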

Figure 1. Histograms of feature values in a randomly selected channel, where features are computed from the first residual block of a ResNet-18 [20] trained on the dataset of four domains [28]. We first normalize the mean and standard deviation of each channel to be 0 and 1, respectively, and then collect feature values among all test samples in each domain for visualization. One can clearly see that the feature distributions of real-world data are usually too complicated to be modeled by Gaussian.

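Histogram of feature values in a randomly selected channel, computed from the first residual block of a ResNet-18 trained on a four-domain dataset. Each channel is first normalized to zero mean and unit standard deviation. Feature values are then collected over all test samples in each domain. Real-world feature distributions clearly cannot be modeled well by a Gaussian.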

Following [72], we extend EFDM to generate feature augmentations with mixed styles, leading to the Exact Feature Distribution Mixing (EFDMix) (cf. Eq. (10)), which can provide more diverse feature augmentations for DG applications.

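EFDM is extended to generate feature augmentations with mixed styles, giving EFDMix. EFDMix provides more diverse feature augmentations for DG applications.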

related work

Arbitrary style transfer (AST)

There are two lines of work: iterative optimization-based methods and feed-forward methods.

Our method belongs to the latter one, which is generally faster and suitable for real-time applications.

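Feed-forward methods are faster and better suited to real-time applications.

Based on the Gaussian prior assumption, feature distribution matching is conducted by matching mean and standard deviation in AdaIN.

AdaIN (adaptive instance normalization) matches feature distributions under a Gaussian prior by matching the mean and standard deviation.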

Compared to AdaIN, WCT [33] additionally considers the covariance of feature channels via a pair of feature transforms, whitening and coloring.

Unlike AdaIN, WCT also accounts for the covariance between feature channels through a pair of feature transforms, whitening and coloring.
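Here is a minimal NumPy sketch of the whitening-coloring idea, to contrast it with AdaIN's per-channel mean/std matching. It is written from the general description above, not from the WCT authors' code. It assumes features are laid out as (channels, positions) and adds a small `eps` regularizer, which is our choice.

```python
import numpy as np

def wct(fc: np.ndarray, fs: np.ndarray, eps: float = 1e-5) -> np.ndarray:
    """Whitening-coloring transform on (C, N) feature matrices (channels x positions)."""
    mc = fc.mean(axis=1, keepdims=True)
    ms = fs.mean(axis=1, keepdims=True)
    fc0, fs0 = fc - mc, fs - ms
    # Whitening: remove the channel covariance of the content features.
    cov_c = fc0 @ fc0.T / (fc0.shape[1] - 1) + eps * np.eye(fc.shape[0])
    wc, vc = np.linalg.eigh(cov_c)
    whitened = vc @ np.diag(wc ** -0.5) @ vc.T @ fc0
    # Coloring: impose the channel covariance of the style features, then add the style mean.
    cov_s = fs0 @ fs0.T / (fs0.shape[1] - 1) + eps * np.eye(fs.shape[0])
    ws, vs = np.linalg.eigh(cov_s)
    colored = vs @ np.diag(ws ** 0.5) @ vs.T @ whitened
    return colored + ms

rng = np.random.default_rng(0)
fc = rng.standard_normal((4, 500))                 # toy content features: 4 channels
A = rng.standard_normal((4, 4))
fs = A @ rng.standard_normal((4, 500)) + 3.0       # toy style features with correlated channels
out = wct(fc, fs)
```

The output matches the style features' mean and full channel covariance. It still ignores anything beyond second-order statistics.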

To this end, we, for the first time to our best knowledge, propose an accurate and efficient way for EFDM by exactly matching the eCDFs of image features, leading to more faithful AST results (please refer to Fig. 5 for visual examples).

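Matching low-order moments is inaccurate, while matching high-order moments is computationally expensive. The paper therefore proposes an accurate and efficient EFDM that exactly matches the eCDFs of image features, which gives more faithful AST results.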

Domain generalization (DG)

Typical DG methods include learning domain-invariant feature representations [5, 15, 31, 40, 65–67], meta-learning-based learning strategies [4, 9, 29], data augmentation [13, 43, 56, 61, 71, 72] and so on [57, 69].

Typical DG methods:

- learning domain-invariant feature representations
- meta-learning-based learning strategies
- data augmentation
- others

Among all above methods, the recent state-of-the-art [72] augments cross-distribution features based on the feature distribution matching technique [21], which is introduced in the above AST part.

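The recent state of the art [72] performs cross-distribution feature augmentation based on feature distribution matching.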

By utilizing high-order statistics implicitly via the proposed EFDM method, more diverse feature augmentations can be achieved and significant performance improvements have been observed (please refer to Tabs. 1 and 2 for details).

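By implicitly exploiting high-order statistics through the proposed EFDM, the method obtains more diverse feature augmentations and larger performance gains.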

Exact histogram matching (EHM)

Compared to classical HM, EHM algorithms distinguish equivalent pixel values either randomly [47, 48] or according to their local mean [7, 18], leading to more accurate matching of histograms.

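Unlike classical HM, EHM algorithms distinguish equivalent pixel values, either randomly or according to their local mean, which gives more accurate histogram matching.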

The difference between outputs of EHM and HM in image pixel space is typically small, which is hardly perceptible to human eyes.

The outputs of EHM and HM differ only slightly in image pixel space, and the difference is hardly noticeable to the human eye.

However, this small difference can be amplified in the feature space of deep models, leading to clear divergence in feature distribution matching. We hence propose to perform EFDM by exactly matching the eCDFs of image features via EHM. While EHM can be conducted with different strategies, we empirically find that they yield similar results in our applications, and thus we promote the fast Sort-Matching [47] algorithm for EHM.

Different EHM strategies perform similarly, so the fastest one, Sort-Matching, is adopted.

Methodology

Adaptive instance normalization (AdaIN)

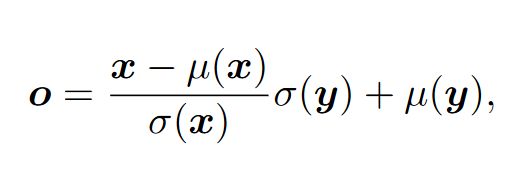

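An n-dimensional input vector x is sampled from a random variable X. AdaIN outputs an n-dimensional vector o whose mean and standard deviation match those of the target vector y, an m-dimensional vector sampled from Y.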

By assuming that X and Y follow Gaussian distributions and n and m approach infinity, AdaIN can achieve EFDM by matching feature mean and standard deviation [32, 37, 41].

Assuming X and Y are Gaussian and n and m tend to infinity, AdaIN achieves EFDM by matching the feature mean and standard deviation.
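As a reference point, here is the AdaIN mapping on plain vectors, following the description above: shift and scale x so its mean and standard deviation equal those of y. The function name and the `eps` guard are our own. x and y may have different lengths, and the output has the length of x.

```python
import numpy as np

def adain(x: np.ndarray, y: np.ndarray, eps: float = 1e-5) -> np.ndarray:
    """Shift/scale x so that its mean and std equal those of y (len(x) may differ from len(y))."""
    return y.std() * (x - x.mean()) / (x.std() + eps) + y.mean()

rng = np.random.default_rng(0)
x = rng.standard_normal(1000)        # n = 1000 input values
y = rng.exponential(2.0, 500)        # m = 500 target values from a skewed distribution
o = adain(x, y)
print(o.mean(), y.mean(), o.std(), y.std())
```

Because the map is affine, o keeps the shape of x's distribution. Only its location and spread change.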

However, feature distributions of real-world data usually deviate much from Gaussian, as can be seen from Fig. 1. Therefore, matching feature distributions by AdaIN is less accurate.

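Because real-world feature distributions are far from Gaussian, AdaIN matches them less accurately than this idealized setting suggests.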

Histogram matching (HM)

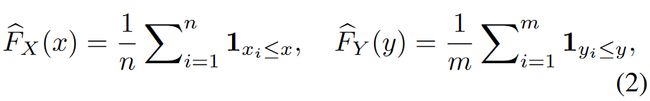

HM maps the input vector x to an output vector o whose eCDF matches the eCDF of the target vector y.
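The equation image above is not recoverable here. The textbook form of classical HM can be written as below. The symbols follow the AdaIN section (x of length n, y of length m). The generalized-inverse convention is our assumption and may differ in detail from the paper's formula.

```latex
F_{\mathbf{x}}(t) = \frac{1}{n}\sum_{i=1}^{n}\mathbb{1}[x_i \le t], \qquad
F_{\mathbf{y}}(t) = \frac{1}{m}\sum_{j=1}^{m}\mathbb{1}[y_j \le t],
\\
o_i = F_{\mathbf{y}}^{-1}\big(F_{\mathbf{x}}(x_i)\big), \qquad
F_{\mathbf{y}}^{-1}(u) = \inf\{\, t : F_{\mathbf{y}}(t) \ge u \,\}
```

If x_i = x_k, then F_x(x_i) = F_x(x_k), so o_i = o_k. This point-wise structure is the source of the tie problem discussed next.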

It is worth mentioning that matching eCDFs is equivalent to matching histograms with bins of infinitesimal width, which is however hard to achieve due to the finite number of bits to represent features.

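Matching eCDFs is equivalent to matching histograms with infinitesimally narrow bins. This is hard to achieve because features are represented with a finite number of bits.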

Ideally, HM could exactly match eCDFs of image features in the continuous case.

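Ideally, HM can exactly match the eCDFs of image features in the continuous case.

Unfortunately, HM can only approximately match eCDFs when there exist equivalent feature values in inputs, since HM merges equivalent values as a single point and applies a point-wise transformation.

When the input contains equal feature values, HM only approximately matches the eCDFs, because it merges equal values into a single point and applies a point-wise transformation.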
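The failure mode can be reproduced with a discrete, textbook version of HM. The integer-arithmetic discretization below is our own choice, not the paper's code. With four tied zeros in x (as ReLU produces), all four map to the same target value, so the output histogram cannot equal the histogram of y.

```python
import numpy as np

def classical_hm(x: np.ndarray, y: np.ndarray) -> np.ndarray:
    """Point-wise HM: o_i = F_y^{-1}(F_x(x_i)); equal inputs always receive equal outputs."""
    xs, ys = np.sort(x), np.sort(y)
    n, m = x.size, y.size
    counts = np.searchsorted(xs, x, side="right")   # n * F_x(x_i), computed as an integer
    idx = (counts * m + n - 1) // n - 1              # ceil(m * F_x(x_i)) - 1, exact integer math
    return ys[np.clip(idx, 0, m - 1)]

x = np.array([0.0, 0.0, 0.0, 0.0, 1.0, 2.0, 3.0, 4.0])   # ReLU-like input with four ties
y = np.arange(1, 9) / 10.0                                 # distinct targets 0.1 ... 0.8
o = classical_hm(x, y)
print(o)   # the four zeros collapse onto a single target value
```

With tie-free input and m = n, the same function reproduces y's values exactly. The mismatch comes only from the ties.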

Exact Histogram Matching (EHM)

Different from HM, EHM algorithms distinguish equivalent pixel values and apply an element-wise transformation so that a more accurate histogram matching can be achieved.

Unlike HM, EHM distinguishes equal pixel values and applies an element-wise transformation, which achieves more accurate histogram matching.

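While EHM can be conducted with different strategies, we adopt the Sort-Matching algorithm [47] for its speed. Sort-Matching is based on the quicksort strategy [49], which is widely regarded as one of the fastest general-purpose sorting algorithms, with an average complexity of O(n log n). As its name suggests, Sort-Matching matches two sorted vectors. Their indexes are written in one-line notation [2] as τ = (τ_1 τ_2 ... τ_n) and κ = (κ_1 κ_2 ... κ_n), where x_{τ_1} ≤ x_{τ_2} ≤ ... ≤ x_{τ_n} and y_{κ_1} ≤ y_{κ_2} ≤ ... ≤ y_{κ_n}. The output is then o_{τ_i} = y_{κ_i}, i.e., the i-th smallest element of x is replaced by the i-th smallest element of y.

Quicksort has an average time complexity of O(n log n).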
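The index rule o_{τ_i} = y_{κ_i} reduces to two sorts and a scatter in NumPy. This is a minimal sketch, not the authors' implementation. `kind="stable"` is our choice, so tied x values are ordered by position. The random tie-breaking variants of EHM [47, 48] would instead shuffle tied elements.

```python
import numpy as np

def sort_matching(x: np.ndarray, y: np.ndarray) -> np.ndarray:
    """EHM via Sort-Matching: the i-th smallest element of x receives the i-th smallest y."""
    assert x.shape == y.shape, "Sort-Matching assumes m == n"
    tau = np.argsort(x, kind="stable")   # x[tau[0]] <= x[tau[1]] <= ...; ties kept in input order
    o = np.empty_like(y)
    o[tau] = np.sort(y)                  # o_{tau_i} = y_{kappa_i}
    return o

x = np.array([2.0, 0.0, 4.0, 0.0, 1.0, 0.0, 3.0, 0.0])   # four tied zeros
y = np.arange(1, 9) / 10.0
o = sort_matching(x, y)
print(o)
```

Unlike classical HM, the four tied zeros receive four different target values, so o contains exactly the values of y.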

Compared to AdaIN, HM and other EHM algorithms [7, 18], Sort-Matching additionally assumes that the two vectors to be matched are of the same size, i.e. m = n, which is satisfied in our focused applications of AST and DG. In other applications where the two vectors are of different sizes, interpolation or dropping elements can be conducted to make y and x the same size.

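In AST and DG the two vectors have the same size, so Sort-Matching applies directly. In other applications, where the vectors differ in size, interpolate or drop elements until the sizes match.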
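One way to handle m ≠ n is to resample the sorted target values to length n by linear interpolation over their quantile positions. The text only says "interpolation or dropping elements". This particular interpolation scheme and the function name are our assumptions.

```python
import numpy as np

def sort_matching_any_size(x: np.ndarray, y: np.ndarray) -> np.ndarray:
    """Sort-Matching after resampling sorted y to len(x) by linear interpolation."""
    ys = np.sort(y)
    # Quantile positions of the m sorted targets and of the n outputs, both spanning [0, 1].
    y_n = np.interp(np.linspace(0.0, 1.0, x.size), np.linspace(0.0, 1.0, ys.size), ys)
    o = np.empty(x.size, dtype=float)
    o[np.argsort(x, kind="stable")] = y_n   # i-th smallest x gets the i-th resampled target
    return o

x = np.array([3.0, 1.0, 4.0, 1.5, 9.0])   # n = 5
y = np.arange(9, dtype=float)              # m = 9
print(sort_matching_any_size(x, y))
```

Dropping elements (e.g., keeping every k-th sorted target) is the other option mentioned above. Interpolation keeps the full range of y.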

EFDM for AST and DG

EFDM for AST

A simple encoder-decoder architecture is adopted.

An encoder-decoder architecture is used.

We fix the encoder f as the first few layers (up to relu4_1) of a pre-trained VGG-19.

The encoder stays fixed.

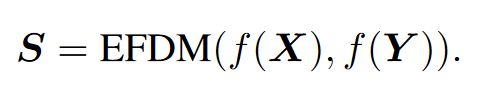

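Given the content images X and style images Y, we first encode them to the feature space and apply EFDM to get the style-transferred features as S = EFDM(f(X), f(Y)).

Given content images X and style images Y, both are first encoded into feature space, and EFDM is then applied to obtain the style-transferred features.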
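A sketch of the feature-level step, assuming EFDM is applied per sample and per channel over spatial positions (as AdaIN is in AST) on (B, C, H, W) arrays. Random arrays stand in for the VGG-19 features f(X) and f(Y), and the encoder itself is not included. A trainable version in an autograd framework would also need gradients to flow back to the content features; that part is omitted here.

```python
import numpy as np

def efdm(content: np.ndarray, style: np.ndarray) -> np.ndarray:
    """Sort-Matching per (sample, channel) over spatial positions; inputs are (B, C, H, W)."""
    assert content.shape == style.shape
    b, c, h, w = content.shape
    x = content.reshape(b, c, h * w)
    y = style.reshape(b, c, h * w)
    order = np.argsort(x, axis=-1, kind="stable")    # tau for every (sample, channel)
    y_sorted = np.sort(y, axis=-1)                    # y_kappa for every (sample, channel)
    out = np.empty_like(y_sorted)
    np.put_along_axis(out, order, y_sorted, axis=-1)  # out[..., tau_i] = y_kappa_i
    return out.reshape(b, c, h, w)

rng = np.random.default_rng(0)
content = rng.standard_normal((2, 3, 4, 4))   # stand-in for f(X)
style = rng.exponential(1.0, (2, 3, 4, 4))    # stand-in for f(Y)
S = efdm(content, style)
```

Each channel of S holds exactly the style channel's values, arranged in the spatial rank order of the content channel.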

Then, we train a randomly initialized decoder g to map S to the image space, resulting in the stylized images g(S).

A randomly initialized decoder g is then trained to map S back to image space, producing the stylized images g(S).

Following [10, 21], we train the decoder with the weighted combination of a content loss Lc and a style loss Ls, leading to the following objective:

The decoder is trained with a weighted sum of a content loss and a style loss.
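The objective itself is missing from these notes. A generic form of a weighted content-plus-style objective is shown below. It assumes a content loss in the AdaIN [21] style, measured between the re-encoded stylized image and the target features S. The weight symbol w is our placeholder, and the style loss L_s is left abstract because the source does not define it.

```latex
\mathcal{L} = \mathcal{L}_c + w\,\mathcal{L}_s, \qquad
\mathcal{L}_c = \big\lVert f\big(g(S)\big) - S \big\rVert_2, \qquad
S = \mathrm{EFDM}\big(f(X), f(Y)\big)
```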

EFDM for DG

Inspired by the studies that style information can be represented by the mean and standard deviation of image features [21, 33, 37], Zhou et al. [72] proposed to generate style-transferred and content-preserved feature augmentations for DG problems.

Zhou et al. generate style-transferred, content-preserving feature augmentations for DG problems.

As we discussed before, distributions beyond Gaussian have high-order statistics other than mean and standard deviation, and hence the style information can be more accurately represented by using high-order feature statistics.

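Non-Gaussian distributions carry high-order statistics beyond the mean and standard deviation, so using high-order feature statistics represents style information more accurately.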
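A quick check of this claim uses skewness (a third-order statistic) on toy 1-D data. A roughly symmetric "content" vector is matched to a right-skewed "style" vector. AdaIN leaves the content skewness unchanged. Sort-Matching reproduces the target skewness, because its output is a permutation of the target. The data and helper names are illustrative only.

```python
import numpy as np

def skewness(v: np.ndarray) -> float:
    """Third standardized moment."""
    z = (v - v.mean()) / v.std()
    return float((z ** 3).mean())

def adain(x: np.ndarray, y: np.ndarray) -> np.ndarray:
    return y.std() * (x - x.mean()) / x.std() + y.mean()

def sort_matching(x: np.ndarray, y: np.ndarray) -> np.ndarray:
    o = np.empty_like(y)
    o[np.argsort(x, kind="stable")] = np.sort(y)
    return o

rng = np.random.default_rng(0)
x = rng.standard_normal(10_000)       # roughly symmetric "content" feature
y = rng.exponential(1.0, 10_000)      # right-skewed "style" feature

for name, o in (("AdaIN", adain(x, y)), ("EFDM", sort_matching(x, y))):
    print(name, round(skewness(o), 3), "target", round(skewness(y), 3))
```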

EFDMix (Eq. (10)) degenerates to EFDM when λ = 0.
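Eq. (10) is not reproduced in these notes. The sketch below assumes EFDMix interpolates, value by value, between each sorted content feature and the Sort-Matched style value: o_{τ_i} = λ x_{τ_i} + (1 - λ) y_{κ_i}. This matches the note that λ = 0 gives EFDM. Pairing samples by a random batch permutation and drawing λ ~ Beta(0.1, 0.1) are hypothetical defaults borrowed from the MixStyle-style setup [72]. They are not confirmed by this text.

```python
import numpy as np

def efdmix(feats, rng, alpha=0.1, lam=None):
    """feats: (B, C, H, W). Mix each sample's sorted channel values with a random partner's."""
    b, c, h, w = feats.shape
    x = feats.reshape(b, c, h * w)
    perm = rng.permutation(b)                         # hypothetical pairing: shuffled batch
    y = x[perm]
    if lam is None:
        lam = rng.beta(alpha, alpha, size=(b, 1, 1))  # hypothetical: one weight per sample
    order = np.argsort(x, axis=-1, kind="stable")     # tau per (sample, channel)
    x_sorted = np.take_along_axis(x, order, axis=-1)
    y_sorted = np.sort(y, axis=-1)                    # y_kappa per (sample, channel)
    mixed = lam * x_sorted + (1.0 - lam) * y_sorted   # interpolate the i-th smallest values
    out = np.empty_like(mixed)
    np.put_along_axis(out, order, mixed, axis=-1)     # write back in content rank order
    return out.reshape(b, c, h, w), perm

rng = np.random.default_rng(0)
feats = rng.standard_normal((4, 3, 5, 5))
augmented, partner = efdmix(feats, rng)
```

A convex combination of two ascending sequences is still ascending, so for any λ in [0, 1] the output keeps the content's spatial rank order. λ = 1 returns the input, and λ = 0 returns the partner's values (plain EFDM).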

The advantage of utilizing high-order feature statistics could be intuitively clarified by the augmentation diversity.

The benefit of using high-order feature statistics can be seen in the increased augmentation diversity.

Experiments

Experiments on DG

Generalization on category classification.

We adopt PACS, a popular DG benchmark dataset:

Da Li, Yongxin Yang, Yi-Zhe Song, and Timothy M. Hospedales. Deeper, broader and artier domain generalization. In Proceedings of the IEEE International Conference on Computer Vision, pages 5542–5550, 2017.

Generalization on instance retrieval.

We adopt person re-identification (re-ID) datasets.