M2DGR数据集在一些SLAM框架上的配置与运行:ORB-SLAM系列、VINS-Mono、LOAM系列、FAST-LIO系列、hdl_graph_slam

文章目录

- 一、M2DGR数据集

- 二、ORB-SLAM2

-

- 2.1 配置参数

- 2.2 单目

- 三、ORB-SLAM3

-

- 3.1 配置参数

- 3.2 运行单目+IMU

- 四、VINS-Mono

-

- 4.1 配置参数

- 4.2 运行单目+IMU

- 五、DM-VIO

-

- 5.1 安装

- 5.2 配置运行

- 六、A-LOAM

- 七、LeGO-LOAM

- 八、LIO-SAM

-

- 8.1 配置参数

- 8.2 运行

- 九、LVI-SAM

-

- 9.1 配置参数

- 9.2 运行

- 十、LINS

-

- 10.1 安装

- 10.2 配置参数

- 10.3 运行

- 十一、FAST-LIO2

-

- 11.1 安装

- 11.2 配置参数

- 11.3 运行

- 十二、FAST-LIVO

-

- 12.1 安装

- 12.2 运行

- 12.3 R3LIVE

- 十三、Faster-LIO

-

- 13.1 Faster-LIO的安装

- 13.2 配置参数

- 13.3 运行

- 十四、hdl_graph_slam

-

- 14.1 安装

- 14.2 纯激光

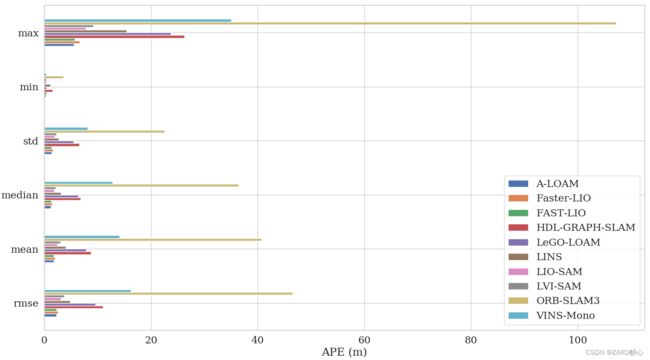

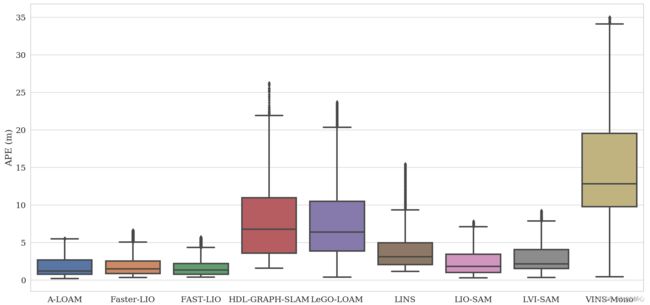

- 十五、定位结果质量评估与比较

为了说明以上这些SLAM框架的测试与数据集适配,以M2DGR数据集为例进行各自的配置与运行,方便我以后适配自己的数据集,运行的SLAM框架包括:ORB-SLAM2、ORB-SLAM3、VINS-Mono、A-LOAM、LeGO-LOAM、LIO-SAM、LVI-SAM、FAST-LIO2、FAST-LIVO、Faster-LIO、hdl_graph_slam。实验基于Ubuntu20.04,其中ORB、VINS-Mono、和LOAM系列的安装参考我以前的博客,有详细的安装方法,其他的框架在后面会简单介绍一下安装方法

由于学的还不够深入,主要目的就是记录参数配置方法,部分参数配置可能有错误而导致数据跑的有问题,如有发现错误或问题,欢迎讨论,我来修改

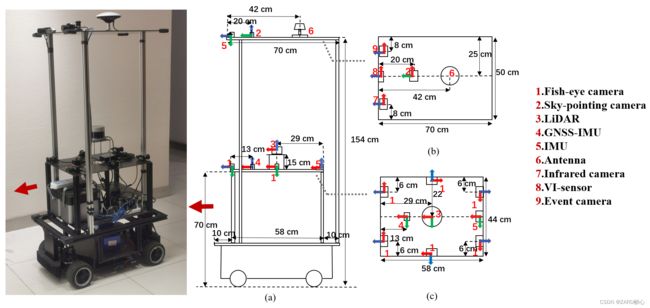

一、M2DGR数据集

M2DGR是由具有完整传感器套件的地面机器人收集的新型大规模数据集,传感器套件包括六个鱼眼和一个指向天空的RGB相机、红外相机、事件相机、视觉惯性传感器(VI-传感器)、惯性测量单元(IMU)、LiDAR、消费级全球导航卫星系统(GNSS)接收器和具有实时动态(RTK)信号的GNSS-IMU导航系统。 所有这些传感器都经过了良好的标定和同步,它们的数据被同时记录下来。通过运动捕捉设备、激光3D跟踪器和RTK获得轨迹真值。 该数据集包括36个序列(约1 TB),这些序列是在包括室内和室外环境在内的不同场景中捕获的。

传感器参数如下:

- LIDAR Velodyne VLP-32C, 360 Horizontal Field of View (FOV),-30 to +10 vertical FOV,10Hz,Max Range 200 m,Range Resolution 3 cm, Horizontal Angular Resolution 0.2°.

- RGB Camera FLIR Pointgrey CM3-U3-13Y3C-CS,fish-eye lens,1280*1024,190 HFOV,190 V-FOV, 15 Hz

- GNSS Ublox M8T, GPS/BeiDou, 1Hz

- Infrared Camera,PLUG 617,640*512,90.2 H-FOV,70.6 V-FOV,25Hz;

- V-I Sensor,Realsense d435i,RGB/Depth 640*480,69H-FOV,42.5V-FOV,15Hz;IMU 6-axix, 200Hz

- Event Camera Inivation DVXplorer, 640*480,15Hz;

- IMU,Handsfree A9,9-axis,150Hz;

- GNSS-IMU Xsens Mti 680G. GNSS-RTK,localization precision 2cm,100Hz;IMU 9-axis,100 Hz;

- Laser Scanner Leica MS60, localization 1mm+1.5ppm

- Motion-capture System Vicon Vero 2.2, localization accuracy 1mm, 50 Hz;

后面我们要配置参数,参考标定文件calibration_results.txt,rosbag序列的各数据话题如下:

- LIDAR:

/velodyne_points - RGB Camera:

/camera/left/image_raw/compressed,

/camera/right/image_raw/compressed,

/camera/third/image_raw/compressed,

/camera/fourth/image_raw/compressed,

/camera/fifth/image_raw/compressed,

/camera/sixth/image_raw/compressed,

/camera/head/image_raw/compressed - GNSS Ublox M8T:

/ublox/aidalm,

/ublox/aideph,

/ublox/fix,

/ublox/fix_velocity,

/ublox/monhw,

/ublox/navclock,

/ublox/navpvt,

/ublox/navsat,

/ublox/navstatus,

/ublox/rxmraw - Infrared Camera:

/thermal_image_raw - V-I Sensor:

/camera/color/image_raw/compressed,/camera/imu - Event Camera:

/dvs/events,/dvs_rendering/compressed - IMU:

/handsfree/imu

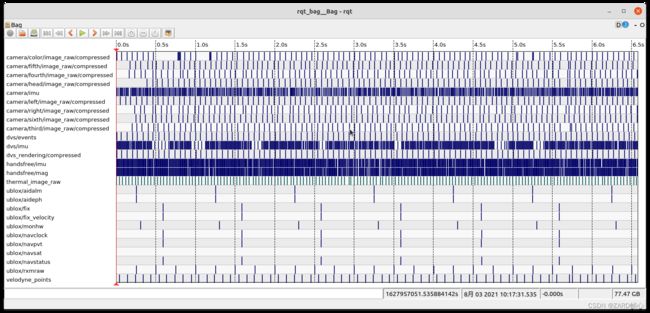

使用street_02.bag测试各个代码,我们首先通过rqt_bag命令将bag进行可视化,可以看到此数据的时间同步不太好

roscore

rqt_bag street_02.bag

二、ORB-SLAM2

2.1 配置参数

在Examples/Monocular/文件夹中添加参数文件M2DGR.yaml,仿照Examples/Monocular/TUM1.yaml文件修改参数,主要是修改相机内参,其余参数根据需要调整

# Camera calibration and distortion parameters (OpenCV)

Camera.fx: 617.971050917033

Camera.fy: 616.445131524790

Camera.cx: 327.710279392468

Camera.cy: 253.976983707814

Camera.k1: -0.28340811

Camera.k2: 0.07395907

Camera.p1: 0.00019359

Camera.p2: 1.76187114e-05

Camera.width: 640

Camera.height: 480

# Camera frames per second

Camera.fps: 15.0

2.2 单目

根据上述图像消息,单目ros_mono.cc文件的订阅:

//ros::Subscriber sub = nodeHandler.subscribe("/camera/image_raw", 1, &ImageGrabber::GrabImage,&igb);

// 替换为:

ros::Subscriber sub = nodeHandler.subscribe("/camera/color/image_raw", 1, &ImageGrabber::GrabImage,&igb);

重新编译ros节点

./build_ros.sh

打开四个终端,分别运行

roscore

#ORB_SLAM2目录下打开终端,运行ORB-SLAM2

rosrun ORB_SLAM2 Mono Vocabulary/ORBvoc.txt Examples/Monocular/M2DGR.yaml

# **注意**:bag中的图像为压缩格式,使用image_transport包将图像解压缩

rosrun image_transport republish compressed in:=/camera/color/image_raw raw out:=/camera/color/image_raw

# 在bag所在文件夹下打开终端,开始播放数据集:

rosbag play street_02.bag

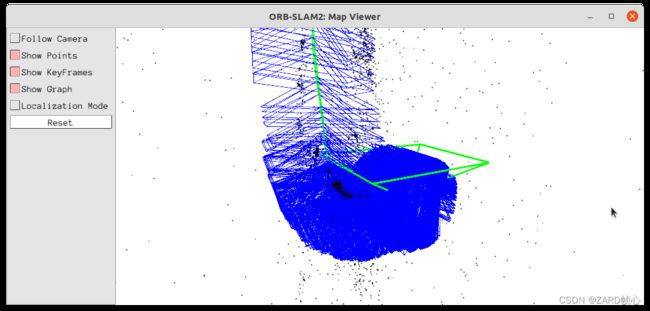

纯视觉的问题是初始化慢和特别容易跟踪丢失(运动过快、曝光以及动态物体干扰),另外单目的尺度漂移严重,完全失败

尺度变小挤一疙瘩啦!

三、ORB-SLAM3

3.1 配置参数

在Examples/Monocular-Inertial/文件夹中添加参数文件UrbanNav.yaml,仿照Examples/Monocular-Inertial/EuRoC.yaml文件,根据zed2_intrinsics.yaml、xsens_imu_param.yaml和extrinsic.yaml文件修改参数,主要是修改相机和IMU内参以及联合标定,其余参数根据需要调整

Camera.type: "PinHole"

# Camera calibration and distortion parameters (OpenCV)

Camera.fx: 617.971050917033

Camera.fy: 616.445131524790

Camera.cx: 327.710279392468

Camera.cy: 253.976983707814

Camera.k1: 0.148000794688248

Camera.k2: -0.217835187249065

Camera.p1: 0.0

Camera.p2: 0.0

Camera.width: 640

Camera.height: 480

# Camera frames per second

Camera.fps: 15.0

# Color order of the images (0: BGR, 1: RGB. It is ignored if images are grayscale)

Camera.RGB: 1

# Transformation from camera to body-frame (imu)

Tbc: !!opencv-matrix

rows: 4

cols: 4

dt: f

data: [0.0, 0.0, 1.0, 0.57711,

-1.0, 0.0, 0.0, -0.00012,

0.0, -1.0, 0.0, 0.83333,

0.0, 0.0, 0.0, 1.0]

# IMU noise

IMU.NoiseGyro: 2.1309311394972831e-02 #1.6968e-04

IMU.NoiseAcc: 1.2820343288774358e-01 #2.0e-3

IMU.GyroWalk: 3.6603917782528627e-04

IMU.AccWalk: 1.3677912958097768e-02

IMU.Frequency: 150

3.2 运行单目+IMU

将ros_mono_inertial.cc的IMU与图像消息订阅修改为:

// ros::Subscriber sub_imu = n.subscribe("/imu0", 1000, &ImuGrabber::GrabImu, &imugb);

// ros::Subscriber sub_img0 = n.subscribe("/cam0/image_raw", 100, &ImageGrabber::GrabImage,&igb);

ros::Subscriber sub_imu = n.subscribe("/handsfree/imu", 1000, &ImuGrabber::GrabImu, &imugb);

ros::Subscriber sub_img0 = n.subscribe("/camera/color/image_raw", 100, &ImageGrabber::GrabImage,&igb);

编译,然后运行

./build_ros.sh

# 配置环境:

source Examples/ROS

#ORB_SLAM3目录下打开终端,运行ORB-SLAM3

rosrun ORB_SLAM3 Mono_Inertial Vocabulary/ORBvoc.txt Examples/Monocular-Inertial/M2DGR.yaml

# **注意**:bag中的图像为压缩格式,使用image_transport包将图像解压缩

rosrun image_transport republish compressed in:=/camera/color/image_raw raw out:=/camera/color/image_raw

# 在bag所在文件夹下打开终端,开始播放数据集:

rosbag play street_02.bag

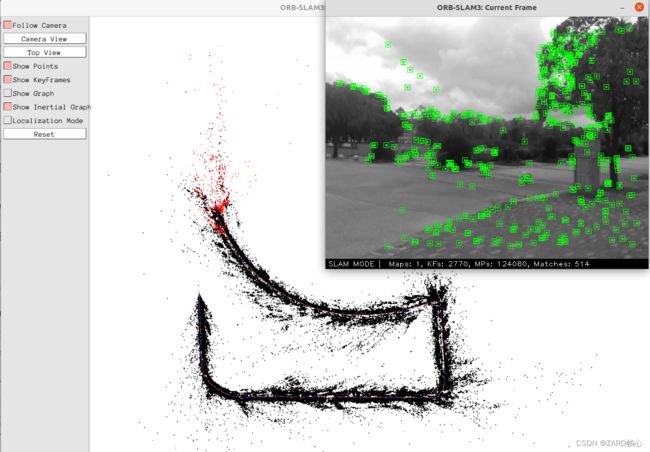

可以看到漂移十分严重,而且运行过程中也有尺度变化。没有分析出是何原因(猜测可能是/camera/color/image_raw时间戳跳变太严重),后面再作详细分析。总之运行失败

四、VINS-Mono

4.1 配置参数

在config文件夹中添加参数文件M2DGR.yaml,仿照config/euroc/euroc_config.yaml文件,根据my_params_camera.yaml文件修改参数,主要是修改图像以及IMU数据话题、结果输出路径、相机和IMU内参以及联合标定结果,其余参数根据需要调整

#common parameters

imu_topic: "/handsfree/imu"

image_topic: "/camera/color/image_raw"

output_path: "/home/zard/Downloads/VINS-Mono"

# camera model

model_type: PINHOLE

camera_name: camera

image_width: 640

image_height: 480

distortion_parameters:

k1: 0.148000794688248

k2: -0.217835187249065

p1: 0.0

p2: 0.0

projection_parameters:

fx: 617.971050917033

fy: 616.445131524790

cx: 327.710279392468

cy: 253.976983707814

#imu parameters The more accurate parameters you provide, the worse performance

acc_n: 1.2820343288774358e-01 # accelerometer measurement noise standard deviation. #0.2

gyr_n: 2.1309311394972831e-02 # gyroscope measurement noise standard deviation. #0.05

acc_w: 1.3677912958097768e-02 # accelerometer bias random work noise standard deviation. #0.02

gyr_w: 3.6603917782528627e-04 # gyroscope bias random work noise standard deviation. #4.0e-5

g_norm: 9.805 # gravity magnitude

# Extrinsic parameter between IMU and Camera.

estimate_extrinsic: 0 # 0 Have an accurate extrinsic parameters. We will trust the following imu^R_cam, imu^T_cam, don't change it.

# 1 Have an initial guess about extrinsic parameters. We will optimize around your initial guess.

# 2 Don't know anything about extrinsic parameters. You don't need to give R,T. We will try to calibrate it. Do some rotation movement at beginning.

#Rotation from camera frame to imu frame, imu^R_cam

extrinsicRotation: !!opencv-matrix

rows: 3

cols: 3

dt: d

data: [ 0.0, 0.0, 1.0,

-1.0, 0.0, 0.0,

0.0, -1.0, 0.0]

#Translation from camera frame to imu frame, imu^T_cam

extrinsicTranslation: !!opencv-matrix

rows: 3

cols: 1

dt: d

data: [0.57711, -0.00012, 0.83333]

仿照vins_estimator/launch/euroc.launch添加vins_estimator/launch/M2DGR.launch启动文件,修改参数文件路径,注意不要忘了将图像解压缩的命令

<arg name="config_path" default = "$(find feature_tracker)/../config/M2DGR.yaml" />

<node pkg="image_transport" type="republish" name="image_republish" args="compressed in:=/camera/color/image_raw raw out:=/camera/color/image_raw" output="screen" respawn="true"/>

4.2 运行单目+IMU

打开三个终端,source后再分别输入

# 运行feature_tracker节点和estimator节点, 订阅图像和IMU数据, 发布位姿, 3D特征点等消息给RVIZ显示

source devel/setup.bash

roslaunch vins_estimator M2DGR.launch

# rviz可视化节点

source devel/setup.bash

roslaunch vins_estimator vins_rviz.launch

# 在bag所在文件夹下打开终端,开始播放数据集:

rosbag play street_02.bag

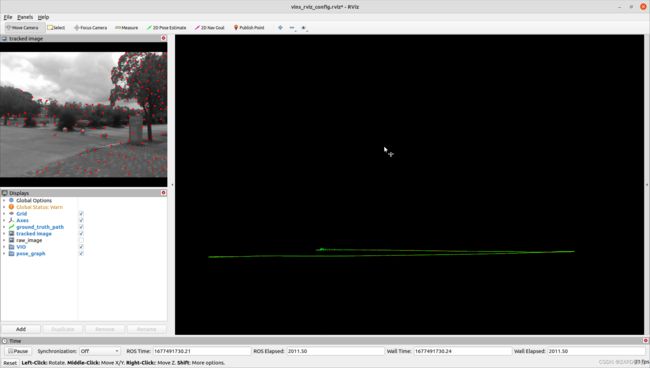

红色轨迹为前端里程计,绿色轨迹为局部优化和回环结果,此数据没有回环,有较大漂移(猜测也是图像时间戳的问题)

五、DM-VIO

5.1 安装

gtsam、OpenCV和pangolin的安装不赘述,直接编译DM-VIO:

git clone https://github.com/lukasvst/dm-vio.git

cd dm-vio

mkdir build && cd build

cmake ..

make -j8

编译完成后,在build/bin目录下,能看到可执行文件dmvio_dataset,接下来安装ros插件。随便进入一个目录,推荐是刚刚安装的dm-vio的一个子目录下:

git clone https://github.com/lukasvst/dm-vio-ros.git

为了能让这个插件找到刚刚编译的dm-vio.bashrc加上一个环境变量:

sudo gedit ~/.bashrc

# 在最后追加:

export DMVIO_BUILD=/YOURPATH/dm-vio/build

在CMakeLists.txt85行之后添加,不然编译的时候会因为找不到生成的msg格式而报错:

add_dependencies(${PROJECT_NAME}_node ${PROJECT_NAME}_generate_messages_cpp)

编译:

catkin_make

source devel/setup.bash

5.2 配置运行

首先要在config里加上M2DGR的相机模型:

echo -e "RadTan 617.971050917033 616.445131524790 327.710279392468 253.976983707814 0.148000794688248 -0.217835187249065 0.0 0.0\n640 480\nfull\n640 480\n" > M2DGR.txt

再仿照euroc.yaml配置参数文件M2DGR.yaml

accelerometer_noise_density: 1.2820343288774358e-01

gyroscope_noise_density: 2.1309311394972831e-02

accelerometer_random_walk: 1.3677912958097768e-02

gyroscope_random_walk: 3.6603917782528627e-04

integration_sigma: 0.316227

运行

roscore

source devel/setup.bash

rosrun dmvio_ros node calib=/PATH/M2DGR.txt settingsFile=/PATH/dm-vio/configs/M2DGR.yaml mode=1 nogui=0 preset=1 useimu=1 quiet=1 init_requestFullResetNormalizedErrorThreshold=0.8 init_pgba_skipFirstKFs=1

# **注意**:bag中的图像为压缩格式,使用image_transport包将图像解压缩

rosrun image_transport republish compressed in:=/camera/color/image_raw raw out:=/camera/color/image_raw

# 在bag所在文件夹下打开终端,开始播放数据集:

rosbag play street_02.bag /camera/color/image_raw:=cam0/image_raw /handsfree/imu:=imu0

但是运行不出来,没有反应,我不知为何,如果有人知道如何解决请在评论区告诉我,感谢!这里跑一下EuRoC数据吧

echo -e "458.654 457.296 367.215 248.375 -0.28340811 0.07395907 0.00019359 1.76187114e-05\n752 480\ncrop\n640 480\n" > camera.txt

rosrun dmvio_ros node calib=/PATH/camera.txt settingsFile=/PATH/dm-vio/configs/euroc.yaml mode=1 nogui=0 preset=1 useimu=1 quiet=1 init_requestFullResetNormalizedErrorThreshold=0.8 init_pgba_skipFirstKFs=1

rosbag play V1_01_easy.bag

六、A-LOAM

A-LOAM运行很简单,不需特别配置,LiDAR话题为/velodyne_points可直接运行:

source devel/setup.bash

roslaunch aloam_velodyne aloam_velodyne_VLP_32.launch

# 在bag所在文件夹下打开终端,开始播放数据集:

rosbag play street_02.bag

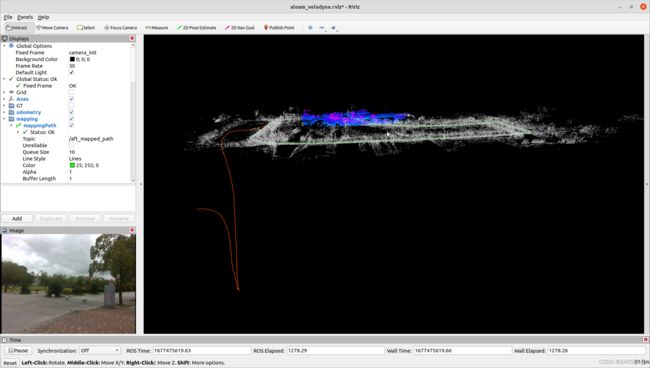

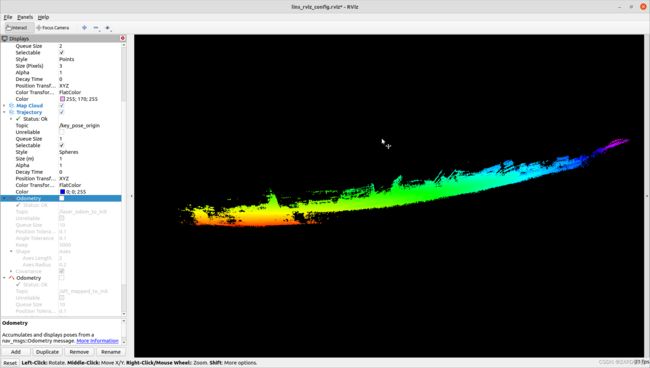

如下图所示,红色轨迹为前端里程计,绿色轨迹为局部优化结果,A-LOAM在此数据集上的效果还是十分好的

侧视图可以看到前端里程计漂移十分严重,说明后端局部优化的重要性

七、LeGO-LOAM

LeGO-LOAM运行也很简单,LiDAR话题为/velodyne_points,IMU话题/handsfree/imu更名为/imu/data:

source devel/setup.bash

roslaunch lego_loam run.launch

# 在bag所在文件夹下打开终端,开始播放数据集:

rosbag play street_02.bag --clock /handsfree/imu:=/imu/data

如下图所示,LeGO-LOAM有较大的漂移

八、LIO-SAM

8.1 配置参数

在config文件夹中添加参数文件config/params_street.yaml,仿照config/params.yaml文件,根xsens_imu_param.yaml和extrinsic.yaml文件修改参数,主要是修改LiDAR以及IMU数据话题、结果输出路径、IMU内参以及联合标定结果,其余参数根据需要调整

pointCloudTopic: "velodyne_points" # Point cloud data

imuTopic: "/handsfree/imu" # IMU data

# Export settings

savePCD: true # https://github.com/TixiaoShan/LIO-SAM/issues/3

savePCDDirectory: "~/Output/" # in your home folder, starts and ends with "/". Warning: the code deletes "LOAM" folder then recreates it. See "mapOptimization" for implementation

# Sensor Settings

sensor: velodyne # lidar sensor type, either 'velodyne' or 'ouster'

N_SCAN: 32 # number of lidar channel (i.e., 16, 32, 64, 128)

Horizon_SCAN: 1800 # lidar horizontal resolution (Velodyne:1800, Ouster:512,1024,2048)

downsampleRate: 1 # default: 1. Downsample your data if too many points. i.e., 16 = 64 / 4, 16 = 16 / 1

lidarMinRange: 1.0 # default: 1.0, minimum lidar range to be used

lidarMaxRange: 1000.0

# IMU Settings

imuAccNoise: 3.7686306102624571e-02

imuGyrNoise: 2.3417543020438883e-03

imuAccBiasN: 1.1416642385952368e-03

imuGyrBiasN: 1.4428407712885209e-05

# 上海位于北纬31°12′,重力加速度g的精确值为9.7940 m/s²。

imuGravity: 9.7940

imuRPYWeight: 0.01

# Extrinsics (lidar -> IMU)

# 给的是IMU -> lidar,但是旋转矩阵为单位阵,直接取负就行

extrinsicTrans: [0.27255, -0.00053, 0.17954]

extrinsicRot: [1, 0, 0,

0, 1, 0,

0, 0, 1]

extrinsicRPY: [1, 0, 0,

0, 1, 0,

0, 0, 1]

在launch/run.launch中修改参数文路径

<rosparam file="$(find lio_sam)/config/params_street.yaml" command="load" />

8.2 运行

source devel/setup.bash

roslaunch lio_sam run.launch

# 在bag所在文件夹下打开终端,开始播放数据集:

rosbag play street_02.bag

不知为何,LIO-SAM的结果看起来还不如A-LOAM,后面再作分析

九、LVI-SAM

9.1 配置参数

M2DGR的作者提供了LVI-SAM的参数配置文件module_sam.launch、my_params_camera.yaml、my_params_lidar.yaml,主要是要注意以下参数:

# my_params_camera.yaml

#common parameters

imu_topic: "/handsfree/imu"

image_topic: "/camera/color/image_raw"

# lidar to camera extrinsic

lidar_to_cam_tx: 0.27255

lidar_to_cam_ty: -0.00053

lidar_to_cam_tz: 0.17954

lidar_to_cam_rx: 0.0

lidar_to_cam_ry: 0.0

lidar_to_cam_rz: 0.0

# camera model

model_type: PINHOLE

camera_name: camera

# Mono camera config

image_width: 640

image_height: 480

distortion_parameters:

k1: 0.148000794688248

k2: -0.217835187249065

p1: 0

p2: 0

projection_parameters:

fx: 617.971050917033

fy: 616.445131524790

cx: 327.710279392468

cy: 253.976983707814

#imu parameters The more accurate parameters you provide, the worse performance

acc_n: 1.2820343288774358e-01 # accelerometer measurement noise standard deviation. #0.2

gyr_n: 2.1309311394972831e-02 # gyroscope measurement noise standard deviation. #0.05

acc_w: 1.3677912958097768e-02 # accelerometer bias random work noise standard deviation. #0.02

gyr_w: 3.6603917782528627e-04 # gyroscope bias random work noise standard deviation. #4.0e-5

g_norm: 9.805 # gravity magnitude

# Extrinsic parameter between IMU and Camera.

estimate_extrinsic: 0

extrinsicRotation: !!opencv-matrix

rows: 3

cols: 3

dt: d

data: [ 0.0, 0.0, 1.0,

-1.0, 0.0, 0.0,

0.0, -1.0, 0.0]

#Translation from camera frame to imu frame, imu^T_cam

extrinsicTranslation: !!opencv-matrix

rows: 3

cols: 1

dt: d

data: [0.57711, -0.00012, 0.83333]

#feature traker paprameters

freq: 20

# my_params_lidar.yaml

# Topics

pointCloudTopic: "velodyne_points" # Point cloud data

imuTopic: "handsfree/imu" # IMU data

# Heading

useImuHeadingInitialization: false # if using GPS data, set to "true"

# Export settings

savePCD: true # https://github.com/TixiaoShan/LIO-SAM/issues/3

savePCDDirectory: "~/Output/" # in your home folder, starts and ends with "/". Warning: the code deletes "LOAM" folder then recreates it. See "mapOptimization" for implementation

# Sensor Settings

N_SCAN: 32 # number of lidar channel (i.e., 16, 32, 64, 128)

Horizon_SCAN: 1800 # lidar horizontal resolution (Velodyne:1800, Ouster:512,1024,2048)

timeField: "time" # point timestamp field, Velodyne - "time", Ouster - "t"

downsampleRate: 1 # default: 1. Downsample your data if too many points. i.e., 16 = 64 / 4, 16 = 16 / 1

# IMU Settings

imuAccNoise: 3.7686306102624571e-02

imuGyrNoise: 2.3417543020438883e-03

imuAccBiasN: 1.1416642385952368e-03

imuGyrBiasN: 1.4428407712885209e-05

imuGravity: 9.805

# Extrinsics (lidar -> IMU)

extrinsicTrans: [0.27255, -0.00053,0.17954]

extrinsicRot: [1, 0, 0, 0, 1, 0, 0, 0, 1]

extrinsicRPY: [1, 0, 0, 0, 1, 0, 0, 0, 1]

<node pkg="image_transport" type="republish" name="image_republish" args="compressed in:=/camera/color/image_raw raw out:=/camera/color/image_raw" output="screen" respawn="true"/>

<rosparam file="$(find lvi_sam)/config/my_params_lidar.yaml" command="load" />

<param name="vins_config_file" type="string" value="$(find lvi_sam)/config/my_params_camera.yaml" />

9.2 运行

由于M2DGR使用的传感器坐标系与LVI-SAM的数据(实际上是LVI-SAM的IMU比较特殊)不同,M2DGR作者也提供了,需要在内部代码中稍作修改,修改如下:

- src/visual_odometry/visual_estimator/initial/initial_alignment.h

// n(n_in)

// {

// q_lidar_to_cam = tf::Quaternion(0, 1, 0, 0);

// q_lidar_to_cam_eigen = Eigen::Quaterniond(0, 0, 0, 1);

// }

n(n_in)

{

q_lidar_to_cam = tf::Quaternion(0, 0, 0, 1);

q_lidar_to_cam_eigen = Eigen::Quaterniond(1,0,0,0);

}

// tf::Quaternion q_odom_cam = tf::createQuaternionFromRPY(0, 0, M_PI) * (q_odom_lidar * q_lidar_to_cam);

tf::Quaternion q_odom_cam = tf::createQuaternionFromRPY(0, 0, M_PI / 2.0) * (q_odom_lidar * q_lidar_to_cam);

- src/visual_odometry/visual_estimator/utility/visualization.cpp

// tf::Quaternion q_cam_to_lidar(0, 1, 0, 0);

tf::Quaternion q_cam_to_lidar(0, 0, 0, 1);

// static tf::Transform t_odom_world = tf::Transform(tf::createQuaternionFromRPY(0, 0, M_PI), tf::Vector3(0, 0, 0));

static tf::Transform t_odom_world = tf::Transform(tf::createQuaternionFromRPY(0, 0, 0), tf::Vector3(0, 0, 0));

- src/visual_odometry/visual_feature/feature_tracker_node.cpp**

// 注释以下代码:

// pcl::PointCloud::Ptr laser_cloud_offset(new pcl::PointCloud());

// Eigen::Affine3f transOffset = pcl::getTransformation(L_C_TX, L_C_TY, L_C_TZ, L_C_RX, L_C_RY, L_C_RZ);

// pcl::transformPointCloud(*laser_cloud_in, *laser_cloud_offset, transOffset);

// *laser_cloud_in = *laser_cloud_offset;

修改完后重新编译:

cd ~/catkin_ws

catkin_make -j1

运行

source devel/setup.bash

roslaunch lvi_sam run.launch

# 在bag所在文件夹下打开终端,开始播放数据集:

rosbag play street_02.bag

与LIO-SAM一样,效果并不理想,看起来甚至不如LIO-SAM

十、LINS

10.1 安装

在已经安装ROS、gtsam和OpenCV的情况下:

cd ~/catkin_ws/src

git clone https://github.com/ChaoqinRobotics/LINS---LiDAR-inertial-SLAM.git

cd ..

catkin_make -j1

注意和LeGO-LOAM一样,第一次编译代码时,需要在“catkin_make”后面加上“-j1”来生成一些消息类型。以后的编译不需要“-j1”,并且如果和LeGO-LOAM放在一个功能包时消息名称会发生冲突(可以选择修改消息名称,也可以删除其中一个消息的编译功能包)

- 由于PCL版本1.10,将C++标准改为14:

#set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -std=c++11 -O3")

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -std=c++14 -O3")

- 遇到错误:

error: ‘string’ in namespace ‘std::__cxx11’ does not name a type,将lins/src/lib/parameters.cpp中134和141行的函数参数中的std::__cxx11::string改成std::string - 四个类似的错误:

/usr/bin/ld: cannot find -lBoost::serialization,找不到lBoost的四个库,CMakeLists.txt添加:

find_package(Boost REQUIRED COMPONENTS timer thread serialization chrono)

10.2 配置参数

acc_n: 3.7686306102624571e-02

gyr_n: 2.3417543020438883e-03

acc_w: 1.1416642385952368e-03

gyr_w: 1.4428407712885209e-05

# extrinsic parameters

init_tbl: !!opencv-matrix

rows: 3

cols: 1

dt: d

data: [0.27255, -0.00053,0.17954]

init_rbl: !!opencv-matrix

rows: 3

cols: 3

dt: d

data: [1, 0, 0,

0, 1, 0,

0, 0, 1]

<arg name="config_path" default = "$(find lins)/config/exp_config/M2DGR.yaml" />

10.3 运行

source devel/setup.bash

roslaunch lins run_M2DGR.launch

# 在bag所在文件夹下打开终端,开始播放数据集:

rosbag play street_02.bag --clock /handsfree/imu:=/imu/data

运行时报错:[ERROR] [1677898050.385013867, 1627957054.444385592]: Error transforming odometry 'Odometry' from frame '/camera_init' to frame 'map',在lidar_mapping_node.cpp、transform_fusion_node.cpp、Estimator.cpp代码里搜索所有的/camera_init改成camera_init

竖直方向上漂移较大

十一、FAST-LIO2

FAST-LIO是香港大学火星实验室推出的高效 LiDAR 惯性里程计框架,使用紧密耦合的迭代扩展卡尔曼滤波器将 LiDAR 特征点与 IMU 数据融合,以允许在发生退化的快速运动、嘈杂或杂乱环境中进行稳健导航。相较第一代版本,第二代使用ikd-Tree增量映射,实现支持超过 100Hz 的 LiDAR 速率,并且支持更多的LiDAR和设备

11.1 安装

不需要修改源码,首先安装依赖项:

- ROS (melodic or noetic),安装方法参考我之前的博客

- glog:

sudo apt-get install libgoogle-glog-dev - eigen:

sudo apt-get install libeigen3-dev - pcl:

sudo apt-get install libpcl-dev - yaml-cpp:

sudo apt-get install libyaml-cpp-dev

(1)Livox-sdk安装

git clone https://github.com/Livox-SDK/Livox-SDK.git

cd Livox-SDK

cd build && cmake ..

make

sudo make install

(2)Livox_ros_driver安装

# 打开终端下执行下面的内容

mkdir ws_livox/src -p

# 打开配置文件

gedit ~/.bashrc

# 将下面的内容添加到文件最后

source ~/ws_livox/devel/setup.bash

# 将livox_ros_driver clone 到 ws_livox/src 下

cd ~/ws_livox/src

git clone https://github.com/Livox-SDK/livox_ros_driver.git

cd ..

catkin_make

source devel/setup.sh

(3)FAST_LIO安装

cd ~/ws_livox/src

git clone https://github.com/hku-mars/FAST_LIO.git

cd ..

catkin_make

11.2 配置参数

同理:

common:

lid_topic: "/velodyne_points"

imu_topic: "/handsfree/imu"

preprocess:

lidar_type: 2 # 1 for Livox serials LiDAR, 2 for Velodyne LiDAR, 3 for ouster LiDAR,

scan_line: 32

scan_rate: 10 # only need to be set for velodyne, unit: Hz,

timestamp_unit: 2 # the unit of time/t field in the PointCloud2 rostopic: 0-second, 1-milisecond, 2-microsecond, 3-nanosecond.

blind: 2

mapping:

acc_cov: 3.7686306102624571e-02

gyr_cov: 2.3417543020438883e-03

b_acc_cov: 1.1416642385952368e-03

b_gyr_cov: 1.4428407712885209e-05

extrinsic_est_en: false # true: enable the online estimation of IMU-LiDAR extrinsic,

extrinsic_T: [ 0.27255, -0.00053,0.17954]

extrinsic_R: [ 1, 0, 0,

0, 1, 0,

0, 0, 1]

publish:

# 发布路径

path_en: true

pcd_save:

# 如无必要不用保存点云地图,太大了

pcd_save_en: false

<rosparam command="load" file="$(find fast_lio)/config/velodyne_M2DGR.yaml" />

11.3 运行

source devel/setup.bash

roslaunch fast_lio mapping_velodyne_M2DGR.launch

# 在bag所在文件夹下打开终端,开始播放数据集:

rosbag play street_02.bag

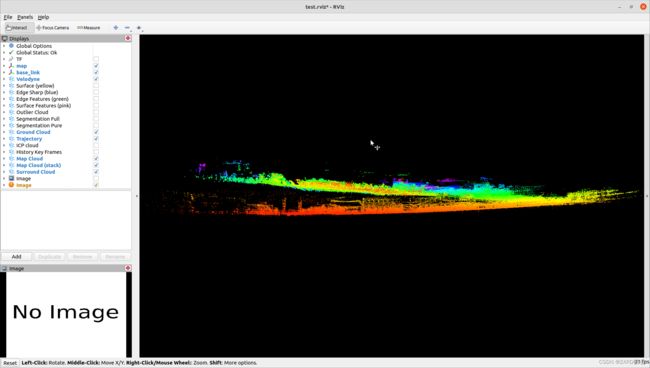

看起来还行

十二、FAST-LIVO

12.1 安装

(1)已安装:

- Ubuntu 16.04~20.04. ROS Installation.

- PCL>=1.6, Follow PCL Installation.

- Eigen>=3.3.4, Follow Eigen Installation.

- OpenCV>=3.2, Follow Opencv Installation.

- Livox-sdk

- Livox_ros_driver

(2)安装非模板类的Sophus:

git clone https://github.com/strasdat/Sophus.git

cd Sophus

# 版本回溯

git checkout a621ff

mkdir build && cd build

cmake ..

make

在make之前,先修改这个版本Sophus的bug的,打开Sophus/sophus/so2.cpp文件,将代码修改如下

// unit_complex_.real() = 1.;

// unit_complex_.imag() = 0.;

unit_complex_.real(1.);

unit_complex_.imag(0.);

make

# 如果已经安装过模板类的不用担心,安装时互不影响,*.h和*.hpp可以共存于一个文件夹

sudo make install

(3)安装Vikit

cd ~/catkin_ws/src

git clone https://github.com/uzh-rpg/rpg_vikit.git

(4)安装FAST-LIVO

cd ~/catkin_ws/src

git clone https://github.com/hku-mars/FAST-LIVO

cd ..

catkin_make

如果报OpenCV的错,将CMakeLists.txt中的

FIND_PACKAGE(OpenCV REQUIRED)

# 改为

FIND_PACKAGE(OpenCV 4 REQUIRED)

将报错的参数替换

// CV_RANSAC

cv::RANSAC

// CV_INTER_LINEAR //双线性插值

cv::INTER_LINEAR

// CV_WINDOW_AUTOSIZE

cv::WINDOW_AUTOSIZE

如果出现大量的类似错误:/usr/bin/ld: /home/zard/catkin_ws_LiDAR/devel/lib/libvikit_common.so: undefined reference to Sophus::SE3::operator*(Eigen::Matrix,执行FIND_PACKAGE(sophus REQUIRED)时,libSophus.so 应该被链接到 Sophus_LIBRARIES, 但cmake却没链接上(原因未知),因此出现这个错误。显式将Sophus_LIBRARIES 链接到libSophus.so:

FIND_PACKAGE(Sophus REQUIRED)

set(Sophus_LIBRARIES libSophus.so)

12.2 运行

写到这里发现由于FAST-LIVO需要严格的时间同步,M2DGR数据不太行,但是写了就不删了,这里运行示例数据:

roslaunch fast_livo mapping_avia.launch

rosbag play hk1.bag

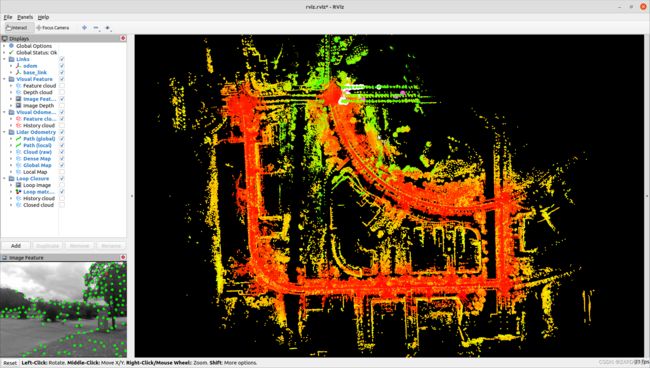

图建的十分漂亮,也很稳

12.3 R3LIVE

这里我补充一下类似的,R3LIVE的编译运行,已安装Livox-sdk、Livox_ros_driver

(1)CGAL

sudo apt-get install libcgal-dev

(2)编译

cd ~/catkin_ws/src

git clone https://github.com/hku-mars/r3live.git

catkin_make

source ~/catkin_ws/devel/setup.bash

(3)运行

roslaunch r3live r3live_bag.launch

rosbag play YOUR.bag

十三、Faster-LIO

Faster_LIO是高博在FAST_LIO上改进的开源SLAM,使得SLAM的效率更高。

13.1 Faster-LIO的安装

Faster-LIO的编译十分简单,高博已经在20.04测试过了,首先安装依赖项:

- ROS (melodic or noetic),安装方法参考我之前的博客

- glog:

sudo apt-get install libgoogle-glog-dev - eigen:

sudo apt-get install libeigen3-dev - pcl:

sudo apt-get install libpcl-dev - yaml-cpp:

sudo apt-get install libyaml-cpp-dev

之后可以编译:

# 注意不要和Livox_ros_driver在同一个工作空间中,否则Livox_ros_driver节点名称冲突

mkdir catkin_ws/src -p

cd catkin_ws

catkin_make

13.2 配置参数

添加配置文件以及launch文件,主要修改以下内容

common:

dataset: "M2DGR"

lid_topic: "velodyne_points"

imu_topic: "handsfree/imu"

time_sync_en: false # ONLY turn on when external time synchronization is really not possible

preprocess:

lidar_type: 2 # 1 for Livox serials LiDAR, 2 for Velodyne LiDAR, 3 for ouster LiDAR,

scan_line: 32

blind: 4

time_scale: 1e3 # 兼容不同数据集的时间单位

mapping:

acc_cov: 3.7686306102624571e-02

gyr_cov: 2.3417543020438883e-03

b_acc_cov: 1.1416642385952368e-03

b_gyr_cov: 1.4428407712885209e-05

fov_degree: 180

det_range: 100.0

extrinsic_est_en: true # true: enable the online estimation of IMU-LiDAR extrinsic

extrinsic_T: [ 0.27255, -0.00053,0.17954]

extrinsic_R: [ 1, 0, 0,

0, 1, 0,

0, 0, 1]

<rosparam command="load" file="$(find faster_lio)/config/velodyne_M2DGR.yaml" />

13.3 运行

source devel/setup.bash

roslaunch faster_lio mapping_velodyne_M2DGR.launch

# 在bag所在文件夹下打开终端,开始播放数据集:

rosbag play street_02.bag

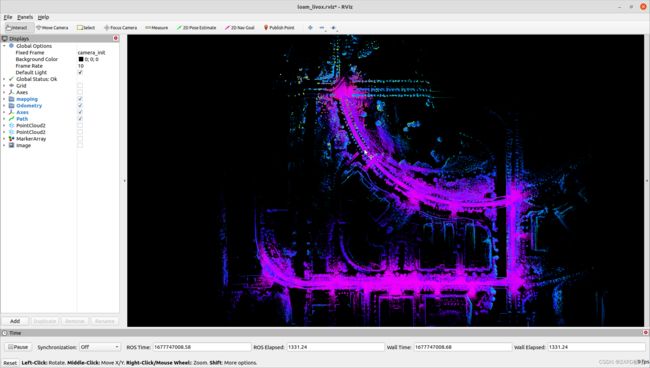

Faster-Lio效果在视觉上看着出奇的好,建的图细节上很漂亮。具体精度要看后面比较,不过运行效率亲测确实比FAST-LIO高很多

十四、hdl_graph_slam

hdl-graph-slam不仅融合了imu、gps、平面、激光等多种输入,同时也带闭环检测等后端处理,即完整的一个slam架构。

14.1 安装

首先我们安装了ROS,然后

sudo apt-get install ros-noetic-geodesy ros-noetic-pcl-ros ros-noetic-nmea-msgs ros-noetic-libg2o

cd catkin_ws/src

git clone https://github.com/koide3/ndt_omp.git

git clone https://github.com/SMRT-AIST/fast_gicp.git --recursive

git clone https://github.com/koide3/hdl_graph_slam

cd .. && catkin_make -DCMAKE_BUILD_TYPE=Release

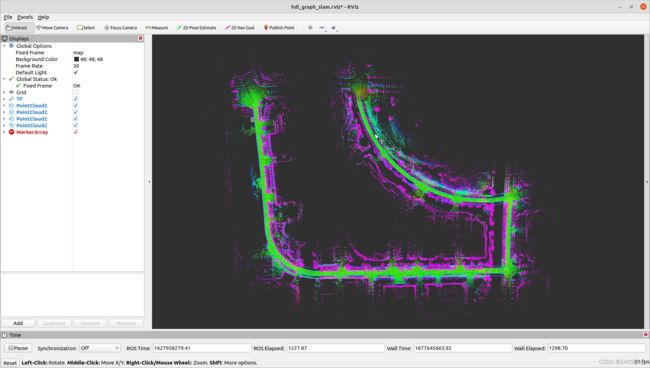

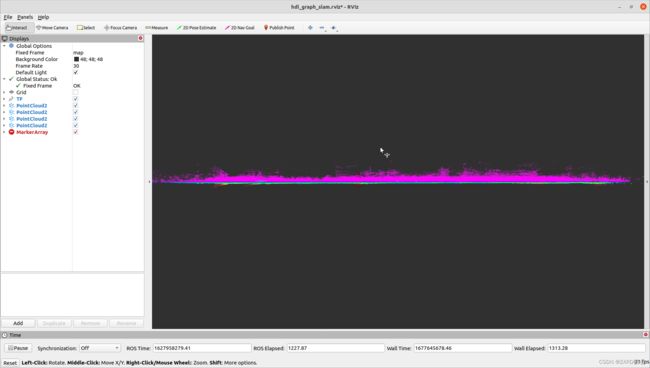

14.2 纯激光

打开四个终端,分别

# 启动master节点

roscore

# 设置use_sim_time参数并运行

rosparam set use_sim_time true

roslaunch hdl_graph_slam hdl_graph_slam_400.launch

# 启动rviz可视化

roscd hdl_graph_slam/rviz

rviz -d hdl_graph_slam.rviz

# 在bag所在文件夹下打开终端,开始播放数据集:

rosbag play street_02.bag --clock

十五、定位结果质量评估与比较

至于轨迹的保存,我都是在建图模块添加保存TUM格式位姿的代码,这里就不详细写了

首先求得每个轨迹的绝对误差(A-LOAM为例,street_02.txt为轨迹真值,A-LOAM为A-LOAM轨迹输出结果):

evo_ape tum street_02.txt A-LOAM -va -s --plot --plot_mode=xy --save_results A-LOAM.zip

同样的方法求得其他轨迹的绝对轨迹误差结果,放在同一个文件夹下,比较:

可以看到ORB-SLAM3的误差太大了,我们去掉它比较其他的

对于此数据,视觉效果不太好,猜测可能是图像时间戳跳变严重并且频率较低。而其他传感器的数据在各个算法的效果也都平平,把各个算法都拉到同一个水平线上,大多数多源融合算法甚至不如纯激光的A-LOAM,哈哈哈哈哈哈哈