Spark Streaming 整合 Kafka,实现交流

Spark Streaming 整合 Kafka

-

- 一、了解 Spark Streaming 整合 Kafka

-

- 1.1 KafkaUtis.createDstream方式

- 二、实战

-

- 2.1 导入依赖(与spark版本一致)

- 2.2 案列:KafkaUtis.createDstream方式实现词频统计

-

- 2.2.1 创建Topic,指定消息类别

- 1.2 KafkaUtis.createDirectStream方式

- 2.3 实例:

-

- 2.3.1 创建Topic,指定消息类别

1、MySQL安装教程

2、Spark Streaming 实现网站热词排序

3、Spark Streaming 实时计算框架

4、Spark Streaming 整合 Kafka,实现交流

一、了解 Spark Streaming 整合 Kafka

Kafka作为一个实时的分布式消息队列,实时地生产和消费消息。在大数据计算框架中,可利用Spark Streaming实时读取Kafka中的数据,再进行相关计算。

在Spark1.3版本后, KafkaUtils里面提供了两个创建DStream的方式,一种是KafkaUtis.createDstream 方式,另一种为 KafkaUtils.createDirectStream方式。

1.1 KafkaUtis.createDstream方式

KatkaUtils.createDstream 是通过 Zookeeper 连接Kafka , receivers接收器从Kafka中获取数据,并且所有receivers获取到的数据都会保存在Spark executors中,然后通过Spark Streaming启动job来外理这些数据。

二、实战

2.1 导入依赖(与spark版本一致)

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-streaming-kafka-0-8_2.11</artifactId>

<version>2.4.0</version>

</dependency>

2.2 案列:KafkaUtis.createDstream方式实现词频统计

import org.apache.spark.streaming.dstream.{DStream, ReceiverInputDStream}

import org.apache.spark.streaming.kafka.KafkaUtils

import org.apache.spark.streaming.{Seconds, StreamingContext}

import org.apache.spark.{SparkConf, SparkContext}

import scala.collection.immutable

object SparkStreaming_Kafka_createDstream {

def main(args: Array[String]): Unit = {

//1.创建sparkConf,并开启wal预写日志,保存数据源

val sparkConf: SparkConf = new SparkConf().setAppName("SparkStreaming_Kafka_createDstream").setMaster("local[4]")

.set("spark.streaming.receiver.writeAheadLog.enable", "true")

//2.创建sparkContext

val sc = new SparkContext(sparkConf)

//3.设置日志级别

sc.setLogLevel("WARN")

//3.创建StreamingContext

val ssc = new StreamingContext(sc, Seconds(5))

//4.设置checkpoint

ssc.checkpoint("./Kafka_Receiver")

//5.定义zk地址

val zkQuorum = "master:2181,slave1:2181,slave2:2181"

//6.定义消费者组

val groupId = "spark_receiver"

//7.定义topic相关信息 Map[String, Int],这里的value并不是topic分区数,它表示的topic中每一个分区被N个线程消费

val topics = Map("kafka_spark" -> 1)

//8.通过KafkaUtils.createDstream对接kafka这时相当于同时开启3个receiver接受数据

val receiverDstream:immutable.IndexedSeq[ReceiverInputDStream[(String,String)]] = (1 to 3)

.map(x => { val stream: ReceiverInputDStream[(String, String)] = KafkaUtils.createStream(ssc,zkQuorum,groupId,topics)

stream

})

//9.使用ssc中的union方法合并所有的receiver中的数据

val unionDStream: DStream[(String, String)] = ssc.union(receiverDstream)

//10.SparkStreaming获取topic中的数据

val topicData: DStream[String] = unionDStream.map(_._2)

//11.按空格进行切分每一行,并将切分的单词出现次数记录为1

val wordAndOne: DStream[(String, Int)] = topicData.flatMap(_.split(" ")).map((_, 1))

//12.统计单词在全局中出现的次数

val result: DStream[(String, Int)] = wordAndOne.reduceByKey(_ + _)

//13.打印输出结果

result.print()

//14.开启流式计算

ssc.start()

ssc.awaitTermination()

}

}

2.2.1 创建Topic,指定消息类别

在hadoop集群开启基础上,启动kafka(三个节点)

/usr/kafka/kafka_2.11-2.4.0/bin/kafka-server-start.sh config/server.propertie

// 创建kafak主题

kafka-topics.sh --create --topic kafka_spark --partitions 3 --replication-factor 1 --zookeeper master:2181,slave1:2181,slave2:2181

// 启动生产者

kafka-console-producer.sh --broker-list master:9092,slave1:9092,slave2:9092 --topic kafka_spark

// 发送信息(输入值)

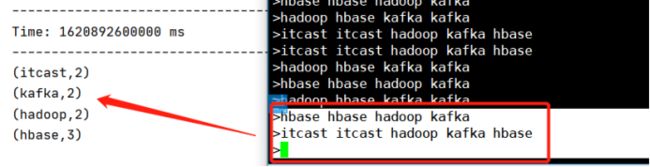

hbase hbase hadoop kafka

hadoop hbase kafka kafka

itcast itcast hadoop kafka hbase

词频统计结果完成。但是KafkaUtis.createDstream方式在系统出现异常时,重新启动程序运行,会发现程序会重复处理已经处理过的数据。因此官方不推荐这种方式,从而推荐KafkaUtis.createDirectStream 方式。

1.2 KafkaUtis.createDirectStream方式

当接收数据时,它会定期地从Kafka中Topic对应Partition中查询最新的偏移量,再根据偏移量范围在每个batch里面处理数据,然后Spark通过调用Kafka简单的消费者API (即低级API )来读取一定范围的数据。

2.3 实例:

import kafka.serializer.StringDecoder

import org.apache.spark.streaming.dstream.{DStream, InputDStream}

import org.apache.spark.streaming.kafka.KafkaUtils

import org.apache.spark.streaming.{Seconds, StreamingContext}

import org.apache.spark.{SparkConf, SparkContext}

object SparkStreaming_Kafka_createDirectStream {

def main(args: Array[String]): Unit = {

//1.创建sparkConf

val sparkConf: SparkConf = new SparkConf()

.setAppName("SparkStreaming_Kafka_createDirectStream").setMaster("local[2]")

//2.创建sparkContext

val sc = new SparkContext(sparkConf)

//3.设置日志级别

sc.setLogLevel("WARN")

//4.创建StreamingContext

val ssc = new StreamingContext(sc,Seconds(5))

//5.设置chectPoint

ssc.checkpoint("./Kafka_Direct")

//6.配置kafka相关参数 (metadata.broker.list为老版本的集群地址)

val kafkaParams=Map("metadata.broker.list"->"master:9092,slave1:9092,slave2:9092","group.id"->"spark_direct")

//7.定义topic

val topics=Set("kafka_direct0")

//8.通过低级api方式将kafka与sparkStreaming进行整合

val dstream: InputDStream[(String, String)] = KafkaUtils.createDirectStream[String,String,StringDecoder,StringDecoder](ssc,kafkaParams,topics)

//9.获取kafka中topic中的数据

val topicData: DStream[String] = dstream.map(_._2)

//10.按空格进行切分每一行,并将切分的单词出现次数记录为1

val wordAndOne: DStream[(String, Int)] = topicData.flatMap(_.split(" ")).map((_,1))

//11.统计单词在全局中出现的次数

val result: DStream[(String, Int)] = wordAndOne.reduceByKey(_+_)

//12.打印输出结果

result.print()

//13.开启流式计算

ssc.start()

ssc.awaitTermination()

}

}

执行程序。

2.3.1 创建Topic,指定消息类别

// 创建主题

kafka-topics.sh --create --topic kafka_direct0 --partitions 3 --replication-factor 2 --zookeeper master:2181,slave1:2181,slave2:2181

启动Kafka消息生产者

kafka-console-producer.sh --broker-list master:9092,slave1:9092,slave2:9092 --topic kafka_direct0

// 输入数据

hbase hbase hadoop kafka

itcast itcast hadoop kafka hbase