【Zookeeper】3. Zookeeper 集群安装

Zookeeper 集群安装

-

- 3.1 集群操作

-

- 3.1.1 ⭐集群安装

- 3.1.2 ⭐选举机制

- 3.1.3 ZooKeeper集群脚本

- 3.2 客户端命令操作集群

-

- 3.2.1 命令行语法

- 3.2.2 znode 节点数据信息

- 3.2.3 节点类型

- 3.2.4 监听器原理

- 3.2.5 节点的删除与查看

- 3.3 客户端 API 操作集群

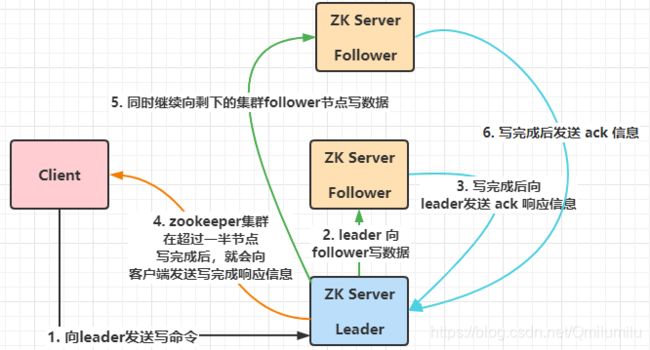

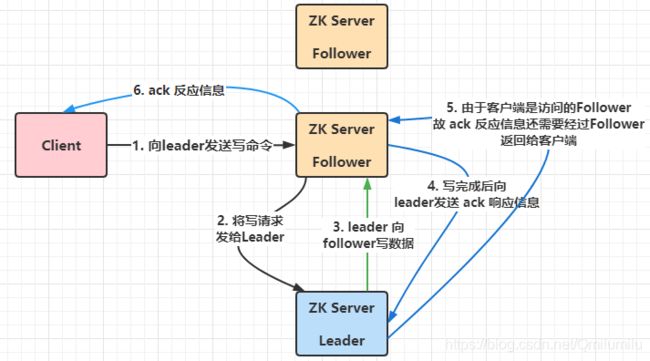

- 3.4 客户端向服务端写数据流程

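3.1 Cluster Operations

3.1.1 ⭐Cluster Installation

Cluster planning

Deploy Zookeeper on all three nodes: hadoop102, hadoop103, and hadoop104.

Question: with 10 servers, how many of them should run Zookeeper?

Rule of thumb from production: install an odd number of Zookeeper servers.

- 10 servers: 3 zk
- 20 servers: 5 zk
- 100 servers: 11 zk
- 200 servers: 11 zk

More Zookeeper servers improve reliability, but they also increase communication latency.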
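The preference for an odd number of servers follows from majority arithmetic: the cluster serves requests only while more than half of its servers are alive. A minimal sketch of that arithmetic follows; `QuorumMath` and its method names are hypothetical helpers written for this illustration, not part of ZooKeeper.

```java
// Majority arithmetic for a ZooKeeper cluster (illustrative helper, not a ZooKeeper API).
class QuorumMath {
    // Smallest number of servers that is strictly more than half of n.
    static int majority(int n) {
        return n / 2 + 1;
    }

    // Number of servers that may fail while a majority is still alive.
    static int toleratedFailures(int n) {
        return n - majority(n);
    }

    public static void main(String[] args) {
        for (int n = 3; n <= 6; n++) {
            System.out.printf("servers=%d majority=%d toleratedFailures=%d%n",
                    n, majority(n), toleratedFailures(n));
        }
    }
}
```

Under this model, 3 and 4 servers both tolerate one failure, and 5 and 6 both tolerate two, so an extra even-numbered server adds communication cost without adding fault tolerance.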

Copy the apache-zookeeper-3.5.7-bin.tar.gz package to the Linux system.

Extract it to the target directory:

    tar -zxvf apache-zookeeper-3.5.7-bin.tar.gz -C /opt/module/

Rename the directory:

    mv apache-zookeeper-3.5.7-bin/ zookeeper-3.5.7/

Configure the server ID

Create a zkData directory under /opt/module/zookeeper-3.5.7/:

    [cool@hadoop102 zookeeper-3.5.7]$ mkdir zkData
    [cool@hadoop102 zookeeper-3.5.7]$ cd zkData/
    [cool@hadoop102 zkData]$ pwd
    /opt/module/zookeeper-3.5.7/zkData

In /opt/module/zookeeper-3.5.7/zkData, create a file named myid and write into it the number that matches this server's entry in zoo.cfg:

    [cool@hadoop102 zkData]$ vim myid

File content on hadoop102:

    2

Edit the configuration file

In /opt/module/zookeeper-3.5.7/conf, rename zoo_sample.cfg to zoo.cfg:

    mv zoo_sample.cfg zoo.cfg

Open zoo.cfg and change the dataDir path:

    # The number of milliseconds of each tick
    tickTime=2000
    # The number of ticks that the initial
    # synchronization phase can take
    initLimit=10
    # The number of ticks that can pass between
    # sending a request and getting an acknowledgement
    syncLimit=5
    # the directory where the snapshot is stored.
    # do not use /tmp for storage, /tmp here is just
    # example sakes.
    #====== change the dataDir path ===========
    # files under /tmp are cleaned up periodically
    #dataDir=/tmp/zookeeper
    dataDir=/opt/module/zookeeper-3.5.7/zkData
    # the port at which the clients will connect
    clientPort=2181

Then add the following cluster configuration to zoo.cfg:

    #######################cluster##########################
    server.2=hadoop102:2888:3888
    server.3=hadoop103:2888:3888
    server.4=hadoop104:2888:3888

What the parameters mean, for an entry of the form server.A=B:C:D:

- A is a number that identifies the server. In cluster mode each server has a myid file in its dataDir directory containing the value of A. At startup Zookeeper reads this file and compares the value with the entries in zoo.cfg to determine which server it is.
- B is the address of the server.
- C is the port a Follower on this server uses to exchange information with the cluster's Leader.
- D is the election port. If the Leader fails, the servers communicate with each other over this port to elect a new Leader.
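To make the server.A=B:C:D layout concrete, here is a minimal sketch that splits one such line into its four parts. `ServerEntry` is a hypothetical class written for this illustration. It handles only the three-field form used in this article, not every variant that a real zoo.cfg may contain.

```java
// Parses a "server.A=B:C:D" line from zoo.cfg (illustrative only, not ZooKeeper's own parser).
class ServerEntry {
    final int id;            // A: server number, must equal the content of myid
    final String host;       // B: server address
    final int quorumPort;    // C: Follower <-> Leader data exchange
    final int electionPort;  // D: leader election traffic

    ServerEntry(int id, String host, int quorumPort, int electionPort) {
        this.id = id;
        this.host = host;
        this.quorumPort = quorumPort;
        this.electionPort = electionPort;
    }

    static ServerEntry parse(String line) {
        String[] keyValue = line.trim().split("=", 2);
        if (keyValue.length != 2 || !keyValue[0].startsWith("server.")) {
            throw new IllegalArgumentException("not a server entry: " + line);
        }
        int id = Integer.parseInt(keyValue[0].substring("server.".length()));
        String[] parts = keyValue[1].split(":");
        if (parts.length != 3) {
            throw new IllegalArgumentException("expected host:port:port in: " + line);
        }
        return new ServerEntry(id, parts[0],
                Integer.parseInt(parts[1]), Integer.parseInt(parts[2]));
    }

    public static void main(String[] args) {
        ServerEntry e = ServerEntry.parse("server.2=hadoop102:2888:3888");
        System.out.println(e.id + " " + e.host + " " + e.quorumPort + " " + e.electionPort);
    }
}
```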

Copy the configured zookeeper directory to the other machines:

    [cool@hadoop102 module]$ xsync zookeeper-3.5.7/

On hadoop103 and hadoop104, change the content of the myid file to 3 and 4 respectively:

    [cool@hadoop103 zkData]$ vim myid
    [cool@hadoop103 zkData]$ cat myid
    3

    [cool@hadoop104 zkData]$ vim myid
    [cool@hadoop104 zkData]$ cat myid
    4

Operate ZooKeeper

Start only the hadoop104 node, then check the Zookeeper status:

    [cool@hadoop104 zookeeper-3.5.7]$ bin/zkServer.sh start
    ZooKeeper JMX enabled by default
    Using config: /opt/module/zookeeper-3.5.7/bin/../conf/zoo.cfg
    Starting zookeeper ... STARTED
    [cool@hadoop104 zookeeper-3.5.7]$ bin/zkServer.sh status
    ZooKeeper JMX enabled by default
    Using config: /opt/module/zookeeper-3.5.7/bin/../conf/zoo.cfg
    Client port found: 2181. Client address: localhost.
    // Error: the cluster serves requests only while more than half of its nodes are alive
    Error contacting service. It is probably not running.

Start Zookeeper on hadoop102 and hadoop103:

    [cool@hadoop102 zookeeper-3.5.7]$ bin/zkServer.sh start
    [cool@hadoop103 zookeeper-3.5.7]$ bin/zkServer.sh start

Check the status on each node:

    [cool@hadoop102 zookeeper-3.5.7]$ bin/zkServer.sh status
    ZooKeeper JMX enabled by default
    Using config: /opt/module/zookeeper-3.5.7/bin/../conf/zoo.cfg
    Client port found: 2181. Client address: localhost.
    Mode: follower    // hadoop102 is a follower

    [cool@hadoop103 zookeeper-3.5.7]$ bin/zkServer.sh status
    ZooKeeper JMX enabled by default
    Using config: /opt/module/zookeeper-3.5.7/bin/../conf/zoo.cfg
    Client port found: 2181. Client address: localhost.
    Mode: follower    // hadoop103 is a follower

    [cool@hadoop104 zookeeper-3.5.7]$ bin/zkServer.sh status
    ZooKeeper JMX enabled by default
    Using config: /opt/module/zookeeper-3.5.7/bin/../conf/zoo.cfg
    Client port found: 2181. Client address: localhost.
    Mode: leader      // hadoop104 is the leader

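3.1.2 ⭐Election Mechanism

Each Zookeeper server carries several identifiers:

- SID (server ID): uniquely identifies a machine in the ZooKeeper cluster. No two machines may share a SID, and it has the same value as myid.
- ZXID (transaction ID): identifies one change of server state. At any given moment the ZXIDs of the machines in the cluster are not necessarily identical; this depends on how the ZooKeeper servers process client update requests.
- Epoch: the number of each Leader term. While there is no Leader, all votes in the same round carry the same logical clock value, and the value increases after each round of voting.

Zookeeper election mechanism: first startup (five servers with myid 1 to 5, started in order)

- Server 1 starts and initiates an election. It votes for itself. Server 1 now has 1 vote, which is short of the majority (3 votes), so the election cannot complete and server 1 stays in the LOOKING state.
- Server 2 starts and initiates another election. It votes for itself. Server 1 sees that server 2's myid is larger than that of its current candidate (server 1) and changes its vote to server 2. Server 1 now has 0 votes and server 2 has 2 votes. There is still no majority, so servers 1 and 2 stay in the LOOKING state.
- Server 3 starts and initiates an election. It votes for itself. Because myid = 3 is the largest, servers 1 and 2 both change their votes to server 3. The result is 0 votes for server 1, 0 votes for server 2, and 3 votes for server 3. ⭐Server 3 now has more than half of the votes and is elected Leader. Servers 1 and 2 change their state to FOLLOWING, and server 3 changes its state to LEADING.
- Server 4 starts and initiates an election. It votes for itself. Servers 1, 2, and 3 are no longer in the LOOKING state and do not change their votes, so the result is 3 votes for server 3 and 1 vote for server 4. Server 4 defers to the majority, changes its vote to server 3, and changes its state to FOLLOWING.
- Server 5 starts and becomes a follower in the same way as server 4.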
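The first-startup walkthrough can be condensed into a toy model. The sketch below assumes servers with myid 1 to n start one at a time, all with equal epoch and ZXID, so every LOOKING server ends up voting for the largest myid started so far. `FirstStartElection` is a hypothetical name, and the model deliberately ignores the message exchange that a real election performs.

```java
// Toy model of a first-startup election (a simplification, not ZooKeeper's real algorithm).
class FirstStartElection {
    // Returns the myid of the server that becomes Leader when servers 1..n start in order.
    static int electOnSequentialStart(int n) {
        int majority = n / 2 + 1;
        for (int started = 1; started <= n; started++) {
            // Epoch and ZXID are equal on a fresh cluster, so all LOOKING servers
            // converge on the largest myid started so far, which is `started`.
            int votesForCandidate = started;
            if (votesForCandidate >= majority) {
                return started; // later servers find a Leader and simply follow it
            }
        }
        throw new IllegalArgumentException("cluster size must be at least 1");
    }

    public static void main(String[] args) {
        System.out.println("leader of a 5-server cluster: " + electOnSequentialStart(5));
    }
}
```

For five servers the model returns 3, matching the walkthrough: the election ends as soon as the third server pushes the vote count to a majority.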

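Zookeeper election mechanism: not the first startup

A server in the ZooKeeper cluster enters Leader election in either of these two cases:

- The server is starting up for the first time.
- While running, the server cannot maintain its connection to the Leader. Any server that loses contact with the Leader first assumes that the Leader has failed and starts an election itself.

When a machine enters the Leader election process, the cluster may be in one of two states.

A Leader already exists in the cluster

- The Leader is healthy; the server that started the election is the one with the problem. When it tries to elect a Leader, it is told who the current Leader is.
- That machine only needs to connect to the Leader and synchronize its state.

No Leader exists in the cluster

In this case the Leader has failed. Suppose ZooKeeper runs on 5 servers with SIDs 1, 2, 3, 4, 5 and ZXIDs 8, 8, 8, 7, 7, and the server with SID 3 is the Leader. At some moment servers 3 and 5 fail, so a Leader election starts.

A new Leader is chosen among servers 1, 2, and 4.

⭐ The Leader election rules are:

- The larger EPOCH (term) wins outright.
- If EPOCH is equal, the larger transaction ID (more work done) wins.
- If the transaction ID is equal, the larger server ID wins.

At this point:

                 EPOCH  ZXID  SID
    Server 1:    1      8     1
    Server 2:    1      8     2
    Server 4:    1      7     4

Election result:

Server 2 becomes the Leader. All three servers have the same EPOCH, servers 1 and 2 beat server 4 on ZXID, and server 2 has the larger SID of the two.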
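The three comparison rules amount to an ordering on (EPOCH, ZXID, SID) triples. A minimal sketch of that ordering follows, using the values from the example above. `VoteComparison` and `Vote` are hypothetical names for this illustration and do not mirror ZooKeeper's internal classes.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.Comparator;
import java.util.List;

// Orders candidates by EPOCH, then ZXID, then SID (illustrative, not ZooKeeper's implementation).
class VoteComparison {
    static final class Vote {
        final long epoch;
        final long zxid;
        final long sid;

        Vote(long epoch, long zxid, long sid) {
            this.epoch = epoch;
            this.zxid = zxid;
            this.sid = sid;
        }
    }

    // Larger EPOCH wins; ties fall through to ZXID, then to SID.
    static final Comparator<Vote> ORDER = Comparator
            .comparingLong((Vote v) -> v.epoch)
            .thenComparingLong(v -> v.zxid)
            .thenComparingLong(v -> v.sid);

    static long winner(List<Vote> votes) {
        return Collections.max(votes, ORDER).sid;
    }

    public static void main(String[] args) {
        List<Vote> votes = Arrays.asList(
                new Vote(1, 8, 1),   // server 1
                new Vote(1, 8, 2),   // server 2
                new Vote(1, 7, 4));  // server 4
        System.out.println("leader SID = " + winner(votes)); // prints "leader SID = 2"
    }
}
```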

3.1.3 ZooKeeper Cluster Script

Create the script in /home/cool/bin on hadoop102:

    [cool@hadoop102 zookeeper-3.5.7]$ cd /home/cool/bin/
    [cool@hadoop102 bin]$ vim zk.sh

Write the following content into the script:

    #!/bin/bash
    # Run zkServer.sh start/stop/status on every node over ssh.
    case $1 in
    "start"){
        for i in hadoop102 hadoop103 hadoop104
        do
            echo ---------- zookeeper $i start ------------
            ssh $i "/opt/module/zookeeper-3.5.7/bin/zkServer.sh start"
        done
    };;
    "stop"){
        for i in hadoop102 hadoop103 hadoop104
        do
            echo ---------- zookeeper $i stop ------------
            ssh $i "/opt/module/zookeeper-3.5.7/bin/zkServer.sh stop"
        done
    };;
    "status"){
        for i in hadoop102 hadoop103 hadoop104
        do
            echo ---------- zookeeper $i status ------------
            ssh $i "/opt/module/zookeeper-3.5.7/bin/zkServer.sh status"
        done
    };;
    esac

Make the script executable:

    [cool@hadoop102 bin]$ chmod u+x zk.sh

Start the Zookeeper cluster with the script:

    [cool@hadoop102 zookeeper-3.5.7]$ zk.sh start
    ---------- zookeeper hadoop102 start ------------
    ZooKeeper JMX enabled by default
    Using config: /opt/module/zookeeper-3.5.7/bin/../conf/zoo.cfg
    Starting zookeeper ... STARTED
    ---------- zookeeper hadoop103 start ------------
    ZooKeeper JMX enabled by default
    Using config: /opt/module/zookeeper-3.5.7/bin/../conf/zoo.cfg
    Starting zookeeper ... STARTED
    ---------- zookeeper hadoop104 start ------------
    ZooKeeper JMX enabled by default
    Using config: /opt/module/zookeeper-3.5.7/bin/../conf/zoo.cfg
    Starting zookeeper ... STARTED

Stop the Zookeeper cluster with the script:

    [cool@hadoop102 bin]$ zk.sh stop
    ---------- zookeeper hadoop102 stop ------------
    ZooKeeper JMX enabled by default
    Using config: /opt/module/zookeeper-3.5.7/bin/../conf/zoo.cfg
    Stopping zookeeper ... STOPPED
    ---------- zookeeper hadoop103 stop ------------
    ZooKeeper JMX enabled by default
    Using config: /opt/module/zookeeper-3.5.7/bin/../conf/zoo.cfg
    Stopping zookeeper ... STOPPED
    ---------- zookeeper hadoop104 stop ------------
    ZooKeeper JMX enabled by default
    Using config: /opt/module/zookeeper-3.5.7/bin/../conf/zoo.cfg
    Stopping zookeeper ... STOPPED

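3.2 Operating the Cluster with Client Commands

3.2.1 Command-Line Syntax

- help: show all commands
- ls path: list the children of a znode [watchable]
  - -w: watch for changes to the children (path changes)
  - -s: include additional stat information
- create: create a regular node
  - -s: with a sequence number
  - -e: ephemeral (removed on restart or session timeout)
- get path: get the value of a node [watchable]
  - -w: watch for changes to the node's content
  - -s: include additional stat information
- set: set the value of a node
- stat: show the status of a node
- delete: delete a node
- deleteall: delete a node recursively

Start the client:

    [cool@hadoop102 zookeeper-3.5.7]$ bin/zkCli.sh

Show all commands:

    [zk: localhost:2181(CONNECTED) 0] help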

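3.2.2 znode Data

List what the current znode contains:

    [zk: localhost:2181(CONNECTED) 1] ls /
    [zookeeper]

Show the detailed data of the current node:

    [zk: localhost:2181(CONNECTED) 2] ls -s /
    [zookeeper]cZxid = 0x0
    ctime = Thu Jan 01 08:00:00 CST 1970
    mZxid = 0x0
    mtime = Thu Jan 01 08:00:00 CST 1970
    pZxid = 0x0
    cversion = -1
    dataVersion = 0
    aclVersion = 0
    ephemeralOwner = 0x0
    dataLength = 0
    numChildren = 1

Field descriptions:

- cZxid: the zxid of the transaction that created the node. Every change to ZooKeeper state produces a ZooKeeper transaction ID, and transaction IDs give a total order over all changes in ZooKeeper. Each change has a unique zxid; if zxid1 is smaller than zxid2, then zxid1 happened before zxid2.
- ctime: the time the znode was created, in milliseconds since 1970.
- mZxid: the zxid of the transaction that last updated the znode.
- mtime: the time the znode was last modified, in milliseconds since 1970.
- pZxid: the zxid of the last change to the znode's children.
- cversion: the child version of the znode, that is, the number of changes to its children.
- dataVersion: the data version of the znode.
- aclVersion: the version of the znode's access control list.
- ephemeralOwner: for an ephemeral node, the session id of the znode's owner; 0 for a node that is not ephemeral.
- dataLength: the length of the znode's data.
- numChildren: the number of children of the znode.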

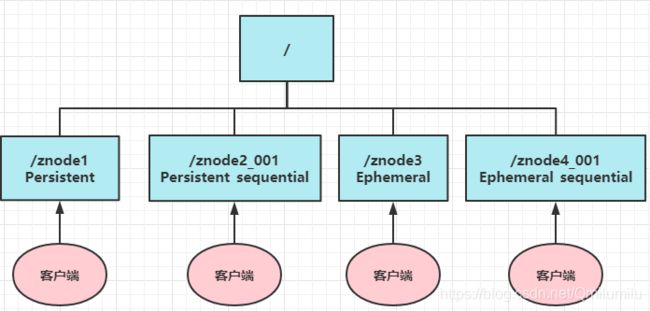
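A zxid such as 0x200000002 is a 64-bit value whose high 32 bits hold the Leader epoch and whose low 32 bits hold a counter within that epoch. The sketch below splits a zxid into those two parts; `ZxidParts` is a hypothetical helper written for this illustration.

```java
// Splits a 64-bit zxid into its epoch and counter halves (illustrative helper).
class ZxidParts {
    // High 32 bits: the Leader epoch during which the transaction was issued.
    static long epoch(long zxid) {
        return zxid >>> 32;
    }

    // Low 32 bits: the transaction counter within that epoch.
    static long counter(long zxid) {
        return zxid & 0xFFFFFFFFL;
    }

    public static void main(String[] args) {
        long zxid = 0x200000002L;
        System.out.println("epoch=" + epoch(zxid) + " counter=" + counter(zxid));
        // prints "epoch=2 counter=2"
    }
}
```

This is why comparing zxids as plain numbers also compares epochs first: a transaction from a later epoch always has the larger zxid.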

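3.2.3 Node Types

Classification 1:

- Persistent: the node is kept after the client disconnects from the server.
- Ephemeral: the node is deleted automatically after the client disconnects from the server.

Classification 2:

- Without a sequence number.
- With a sequence number: when the znode is created with the sequential flag, a value is appended to its name. The sequence number is a monotonically increasing counter maintained by the parent node.

Note: in a distributed system, sequence numbers can be used to order all events globally, so a client can infer the order of events from them.

Combining the two gives four node types:

- Persistent node: the node still exists after the client disconnects from Zookeeper.
- Persistent sequential node: the node still exists after the client disconnects from Zookeeper, and Zookeeper appends a sequence number to its name.
- Ephemeral node: the node is deleted after the client disconnects from Zookeeper.
- Ephemeral sequential node: the node is deleted after the client disconnects from Zookeeper, and Zookeeper appends a sequence number to its name.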

Hands-on examples:

Create two regular nodes (persistent, without a sequence number)

    // ⭐Note: supply a value when creating a node
    // Create a node
    [zk: localhost:2181(CONNECTED) 3] create /sanguo "diaochan"
    Created /sanguo
    [zk: localhost:2181(CONNECTED) 5] ls /
    [sanguo, zookeeper]
    // Create a child node of sanguo
    [zk: localhost:2181(CONNECTED) 6] create /sanguo/shuguo "liubei"
    Created /sanguo/shuguo
    [zk: localhost:2181(CONNECTED) 7] ls /
    [sanguo, zookeeper]

Get the value of a node

    // Get the value of the sanguo node
    [zk: localhost:2181(CONNECTED) 11] get /sanguo
    diaochan
    // Show the details of the sanguo node with -s
    [zk: localhost:2181(CONNECTED) 8] get -s /sanguo
    diaochan
    cZxid = 0x200000002
    ctime = Thu Aug 19 20:41:23 CST 2021
    mZxid = 0x200000002
    mtime = Thu Aug 19 20:41:23 CST 2021
    pZxid = 0x200000003
    cversion = 1
    dataVersion = 0
    aclVersion = 0
    ephemeralOwner = 0x0
    dataLength = 8
    numChildren = 1
    // Show the child node of sanguo
    [zk: localhost:2181(CONNECTED) 9] get -s /sanguo/shuguo
    liubei
    cZxid = 0x200000003
    ctime = Thu Aug 19 20:41:52 CST 2021
    mZxid = 0x200000003
    mtime = Thu Aug 19 20:41:52 CST 2021
    pZxid = 0x200000003
    cversion = 0
    dataVersion = 0
    aclVersion = 0
    ephemeralOwner = 0x0
    dataLength = 6
    numChildren = 0

Create nodes with a sequence number (persistent, sequential)

First create a regular parent node /sanguo/weiguo:

    [zk: localhost:2181(CONNECTED) 12] create /sanguo/weiguo "caocao"
    Created /sanguo/weiguo

Create a sequential node with -s:

    [zk: localhost:2181(CONNECTED) 13] create -s /sanguo/weiguo/zhangliao "zhangliao"
    Created /sanguo/weiguo/zhangliao0000000000

Check the result:

    [zk: localhost:2181(CONNECTED) 16] ls /sanguo/weiguo
    [zhangliao0000000000]    // this node carries a sequence number

Run the same create command again (sequential nodes can be created repeatedly):

    [zk: localhost:2181(CONNECTED) 17] create -s /sanguo/weiguo/zhangliao "zhangliao"
    Created /sanguo/weiguo/zhangliao0000000001
    // If the parent has no children yet, numbering starts at 0 and increases by one each time.
    // If the parent already has 1 child, numbering starts at 0001, and so on.

Check the result:

    [zk: localhost:2181(CONNECTED) 18] ls /sanguo/weiguo
    [zhangliao0000000000, zhangliao0000000001]
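The names produced by `create -s` are the requested path followed by a counter zero-padded to ten digits. A minimal sketch of that naming scheme follows; `SequentialName` is a hypothetical helper for illustration, and the counter values are passed in by hand rather than taken from a parent node.

```java
import java.util.Locale;

// Builds the name of a sequential znode from a path and a counter (illustrative helper).
class SequentialName {
    // Appends the counter as a zero-padded, 10-digit decimal suffix.
    static String withSequence(String path, int counter) {
        return String.format(Locale.ROOT, "%s%010d", path, counter);
    }

    public static void main(String[] args) {
        System.out.println(withSequence("/sanguo/weiguo/zhangliao", 0));
        System.out.println(withSequence("/sanguo/weiguo/zhangliao", 1));
    }
}
```

Because the suffix has a fixed width, sorting the child names as strings also sorts them by creation order.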

Create ephemeral nodes (ephemeral, with or without a sequence number)

Create an ephemeral node without a sequence number:

    [zk: localhost:2181(CONNECTED) 19] create -e /sanguo/wuguo "zhouyu"
    Created /sanguo/wuguo
    // Ephemeral nodes cannot have child nodes
    [zk: localhost:2181(CONNECTED) 20] create -e -s /sanguo/wuguo/xiaoqiao "xiaoqiao"
    Ephemerals cannot have children: /sanguo/wuguo/xiaoqiao

Create an ephemeral node with a sequence number:

    [zk: localhost:2181(CONNECTED) 21] create -e -s /sanguo/wuguo "zhouyu"
    Created /sanguo/wuguo0000000003

Both nodes are visible from the current client:

    [zk: localhost:2181(CONNECTED) 22] ls /sanguo
    [shuguo, weiguo, wuguo, wuguo0000000003]

Quit the current client and start it again:

    [zk: localhost:2181(CONNECTED) 23] quit
    [cool@hadoop102 zookeeper-3.5.7]$ bin/zkCli.sh

After reconnecting, the ephemeral nodes have been deleted. The capture below lists the root; the ephemeral nodes lived under /sanguo, so ls /sanguo is the listing that shows their removal directly.

    [zk: localhost:2181(CONNECTED) 0] ls /
    [sanguo, zookeeper]

Change the data value of a node

    [zk: localhost:2181(CONNECTED) 1] get /sanguo
    diaochan
    // Modify the value
    [zk: localhost:2181(CONNECTED) 3] set /sanguo "qhj"
    [zk: localhost:2181(CONNECTED) 4] get /sanguo
    qhj

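3.2.4 How Watchers Work

A client registers watches on the znodes it cares about. When a watched znode changes (its data changes, the node is deleted, or children are added or removed), ZooKeeper notifies the client. The watch mechanism ensures that a change to data stored in ZooKeeper is quickly propagated to the applications watching that node.

Common watches

- Watch for changes to a node's data: get -w path
- Watch for children being added or removed: ls -w path

How watching works, step by step

- First there is a main() thread.
- Creating the Zookeeper client in the main thread creates two more threads: one handles network communication (connect) and one handles listening (listener).
- The connect thread sends the registered watch events to Zookeeper.
- Zookeeper adds the registered watch events to its list of registered watchers.
- When Zookeeper detects a data or path change, it sends the message to the listener thread.
- The listener thread calls the process() method internally.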

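Hands-on examples:

Watching for changes to a node's value

On hadoop104, register a watch for data changes on the /sanguo node:

    [zk: localhost:2181(CONNECTED) 0] get -w /sanguo
    qhj

On hadoop103, modify the data of the /sanguo node:

    [zk: localhost:2181(CONNECTED) 0] set /sanguo "xisi"

On hadoop104, observe the data-change notification:

    [zk: localhost:2181(CONNECTED) 1]
    WATCHER::
    WatchedEvent state:SyncConnected type:NodeDataChanged path:/sanguo

Note:

- If the value of /sanguo is modified again on hadoop103, hadoop104 receives no further notifications.
- A watch fires only once per registration. To be notified again, register the watch again.

Watching for changes to a node's children (path changes)

On hadoop104, register a watch for changes to the children of /sanguo:

    [zk: localhost:2181(CONNECTED) 1] ls -w /sanguo
    [shuguo, weiguo]

On hadoop103, create a child node under /sanguo:

    [zk: localhost:2181(CONNECTED) 1] create /sanguo/jin "simayi"
    Created /sanguo/jin

On hadoop104, observe the child-change notification:

    [zk: localhost:2181(CONNECTED) 2]
    WATCHER::
    WatchedEvent state:SyncConnected type:NodeChildrenChanged path:/sanguo

Note:

A watch on path changes also fires once per registration. To be notified several times, register it several times.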
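The register-once, fire-once behavior seen in both examples can be mimicked with a small in-memory model: the server side keeps a list of watchers per path, and a change delivers the event and then drops the list. `OneShotWatchModel` is a hypothetical class written for this illustration; it runs in a single thread and does not use the ZooKeeper client library.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

// In-memory model of one-time data watches (illustrative, not the ZooKeeper API).
class OneShotWatchModel {
    private final Map<String, String> data = new HashMap<>();
    private final Map<String, List<Consumer<String>>> watches = new HashMap<>();

    // Like "get -w path": returns the value and registers a watch that fires once.
    String getAndWatch(String path, Consumer<String> watcher) {
        watches.computeIfAbsent(path, p -> new ArrayList<>()).add(watcher);
        return data.get(path);
    }

    // Like "set path value": stores the value, then notifies and removes all watches on the path.
    void set(String path, String value) {
        data.put(path, value);
        List<Consumer<String>> fired = watches.remove(path);
        if (fired != null) {
            for (Consumer<String> watcher : fired) {
                watcher.accept("NodeDataChanged " + path);
            }
        }
    }

    public static void main(String[] args) {
        OneShotWatchModel zk = new OneShotWatchModel();
        List<String> events = new ArrayList<>();
        zk.set("/sanguo", "qhj");
        zk.getAndWatch("/sanguo", events::add);
        zk.set("/sanguo", "xisi");   // fires the watch
        zk.set("/sanguo", "again");  // no watch left, nothing fires
        System.out.println(events);  // prints "[NodeDataChanged /sanguo]"
    }
}
```

To keep receiving events in this model, the watcher would have to call `getAndWatch` again from inside its callback, which is the same re-registration pattern the notes above describe.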

3.2.5 Deleting and Inspecting Nodes

Delete a node

    [zk: localhost:2181(CONNECTED) 2] delete /sanguo/jin
    [zk: localhost:2181(CONNECTED) 4] ls /sanguo
    [shuguo, weiguo]

Delete a node recursively

    [zk: localhost:2181(CONNECTED) 6] deleteall /sanguo/shuguo
    [zk: localhost:2181(CONNECTED) 7] ls /sanguo
    [weiguo]

Show the status of a node

    [zk: localhost:2181(CONNECTED) 8] stat /sanguo
    cZxid = 0x200000002
    ctime = Thu Aug 19 20:41:23 CST 2021
    mZxid = 0x20000000f
    mtime = Thu Aug 19 22:10:01 CST 2021
    pZxid = 0x200000014
    cversion = 9
    dataVersion = 2
    aclVersion = 0
    ephemeralOwner = 0x0
    dataLength = 4
    numChildren = 1

3.3 客户端 API 操作集群

-

创建maven工程

-

添加pom文件

<dependencies> <dependency> <groupId>junitgroupId> <artifactId>junitartifactId> <version>RELEASEversion> dependency> <dependency> <groupId>org.apache.logging.log4jgroupId> <artifactId>log4j-coreartifactId> <version>2.8.2version> dependency> <dependency> <groupId>org.apache.zookeepergroupId> <artifactId>zookeeperartifactId> <version>3.5.7version> dependency> dependencies> -

在src/main/resources下添加log4j.properties文件

log4j.rootLogger=INFO, stdout log4j.appender.stdout=org.apache.log4j.ConsoleAppender log4j.appender.stdout.layout=org.apache.log4j.PatternLayout log4j.appender.stdout.layout.ConversionPattern=%d %p [%c] - %m%n log4j.appender.logfile=org.apache.log4j.FileAppender log4j.appender.logfile.File=target/spring.log log4j.appender.logfile.layout=org.apache.log4j.PatternLayout log4j.appender.logfile.layout.ConversionPattern=%d %p [%c] - %m%n -

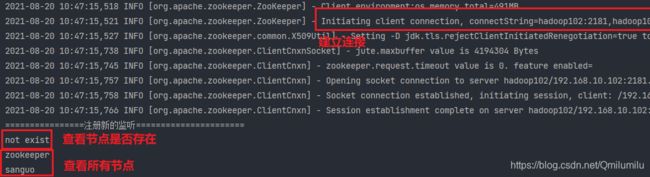

zkClient类

public class zkClient { //设置连接时限 private int sessionTimeout = 2000; //设置要连接的zookeeper地址(没有设置主机映射hosts时,需要写IP地址) private String connectString = "hadoop102:2181,hadoop103:2181,hadoop104:2181"; private ZooKeeper zooClient; //⭐创建客户端 @Before public void init() throws IOException { zooClient = new ZooKeeper(connectString,sessionTimeout, new Watcher() { @Override public void process(WatchedEvent watchedEvent) { //将注册放在此处的话,每完成一个监听事件后,就会接着注册一个新的监听 System.out.println("================注册新的监听======================"); List<String> children = null; try { children = zooClient.getChildren("/", true); for (String child : children) { System.out.println(child); } } catch (KeeperException e) { e.printStackTrace(); } catch (InterruptedException e) { e.printStackTrace(); } } }); } //⭐创建节点 @Test public void create() throws InterruptedException, KeeperException { //传入的参数依次为:(节点创建路径,数据内容-字节型,权限,节点类型) String nodeCreate = zooClient.create("/cool", "qhj.txt".getBytes(), ZooDefs.Ids.OPEN_ACL_UNSAFE, CreateMode.PERSISTENT); } //⭐注册监听 @Test public void getChildren() throws InterruptedException, KeeperException { //注册放在这里,会存在一个问题:一次注册只能监听一次,后续的操作并不会被监听到 //传入的参数依次为:(要监听哪个路径,监听器-如果为true则使用创建客户端时传入的那个Watcher,也可以自己再new) List<String> children = zooClient.getChildren("/", true); for (String child : children) { System.out.println(child); } //延时(不让监听停止,保证后续集群操作可以被监听到) Thread.sleep(Long.MAX_VALUE); } //⭐判断znode节点是否存在 @Test public void exit() throws InterruptedException, KeeperException { //此处没有开启监听 Stat stat = zooClient.exists("/coolcool", false); System.out.println(stat == null ? "not exist" : "exist"); } } -

测试结果: