Notes on the call flow of UVC driver functions

Contents

- 1、uvc_video.c
  - Call chain of the initialization functions
- 2、uvc_queue.c
- 3、uvc_v4l2.c
- 4、v4l2-core
- 5、Data transfer
  - 1、Allocating a gadget request
  - 2、Queuing a request

1、uvc_video.c

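// uvc_video.c

- uvc_video_encode_header // write the UVC payload header at the start of a request buffer
- uvc_video_encode_data // copy frame data from the current video buffer into the request
- uvc_video_encode_bulk // fill a request for a bulk video endpoint
- uvc_video_encode_isoc // fill a request for an isochronous video endpoint
- uvcg_video_ep_queue // submit a request on the video endpoint (calls usb_ep_queue)
- uvc_video_complete // completion callback of the video requests
- uvc_video_free_requests // free the video requests
- uvc_video_alloc_requests // allocate the video requests
- uvcg_video_pump // Pump video data into the USB requests
- uvcg_video_enable // Enable or disable the video stream
- uvcg_video_init // Initialize UVC video stream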
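
To make the encode step more concrete, here is a user-space C sketch of cutting one frame into UVC payloads, each starting with the 2-byte header that uvc_video_encode_header writes: bHeaderLength, then bmHeaderInfo with EOH set, the FID bit that toggles once per frame, and EOF on the last payload of the frame. The bit values follow <linux/usb/video.h>; `encode_frame` and `struct stream_state` are hypothetical names. Splitting a whole frame in one call is a simplification, since the driver fills one usb_request at a time from the current vb2 buffer, and one header per payload is closer to the isochronous path than to the bulk one.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* bmHeaderInfo bits, values as in <linux/usb/video.h> */
#define UVC_STREAM_EOH (1 << 7)
#define UVC_STREAM_EOF (1 << 1)
#define UVC_STREAM_FID (1 << 0)

#define DEMO_HEADER_LEN 2 /* bHeaderLength + bmHeaderInfo only */

/* Hypothetical per-stream state: FID toggles once per frame */
struct stream_state {
    uint8_t fid;
};

/*
 * Split one frame into payloads of at most max_payload bytes (header
 * included). Payload i is written at out + i * max_payload and its length
 * to lens[i]. Returns the payload count, or -1 on bad parameters.
 */
static int encode_frame(struct stream_state *s, const uint8_t *frame,
                        size_t frame_len, size_t max_payload,
                        uint8_t *out, size_t *lens, int max_count)
{
    size_t chunk, off = 0;
    int n = 0;

    if (max_payload <= DEMO_HEADER_LEN)
        return -1;                          /* no room for any data */
    chunk = max_payload - DEMO_HEADER_LEN;

    do {
        size_t left = frame_len - off;
        size_t len = left < chunk ? left : chunk;
        uint8_t *p;

        if (n == max_count)
            return -1;                      /* caller's arrays too small */
        p = out + (size_t)n * max_payload;
        p[0] = DEMO_HEADER_LEN;             /* bHeaderLength */
        p[1] = UVC_STREAM_EOH | s->fid;     /* bmHeaderInfo */
        memcpy(p + DEMO_HEADER_LEN, frame + off, len);
        off += len;
        if (off == frame_len)
            p[1] |= UVC_STREAM_EOF;         /* last payload of this frame */
        lens[n++] = DEMO_HEADER_LEN + len;
    } while (off < frame_len);

    s->fid ^= UVC_STREAM_FID;               /* next frame uses the other FID */
    return n;
}
```

With a 6-byte payload limit, a 10-byte frame becomes three payloads carrying 4, 4 and 2 data bytes, and only the third has EOF set. The FID flip is what lets the host detect a frame boundary even if an EOF payload is lost.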

Call chain of the initialization functions

- uvc_function_bind // f_uvc.c
  - uvcg_video_init(&uvc->video, uvc) // called in f_uvc.c: initialise video
    - uvcg_queue_init // called in uvc_video.c: initialize the video buffers queue
      - vb2_queue_init // videobuf2-v4l2.c
        - vb2_core_queue_init // videobuf2-core.c
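
The layering of this chain can be modeled in user space. The UVC layer fills in the queue (type, io_modes, ops, mem_ops) and hands it to vb2_queue_init, which sets V4L2-specific fields and then calls vb2_core_queue_init, where mandatory fields are checked. To my knowledge the real vb2_core_queue_init returns -EINVAL when ops, mem_ops, type, io_modes, queue_setup or buf_queue are missing, and that is the check modeled here. All `mock_*`/`demo_*` names are hypothetical, the one-line `is_output` derivation is a simplification, and the clamp to 32 buffers only assumes a limit in the spirit of UVC_MAX_VIDEO_BUFFERS.

```c
#include <errno.h>
#include <stddef.h>

#define MOCK_BUF_TYPE_VIDEO_OUTPUT 2u /* value of V4L2_BUF_TYPE_VIDEO_OUTPUT */
#define MOCK_VB2_MMAP    (1u << 0)    /* assumed to mirror VB2_MMAP */
#define MOCK_VB2_USERPTR (1u << 1)    /* assumed to mirror VB2_USERPTR */
#define DEMO_MAX_BUFFERS 32u          /* assumed buffer limit */

/* Simplified stand-ins for struct vb2_ops and struct vb2_queue */
struct mock_vb2_ops {
    int (*queue_setup)(unsigned int *nbuffers);
    void (*buf_queue)(int index);
    int (*start_streaming)(unsigned int count); /* optional */
};

struct mock_vb2_queue {
    unsigned int type;
    unsigned int io_modes;
    const struct mock_vb2_ops *ops;
    const void *mem_ops;
    int is_output;
};

/* Core layer, cf. vb2_core_queue_init: reject incomplete queues */
static int mock_vb2_core_queue_init(struct mock_vb2_queue *q)
{
    if (!q || !q->ops || !q->mem_ops || !q->type || !q->io_modes ||
        !q->ops->queue_setup || !q->ops->buf_queue)
        return -EINVAL;
    return 0;
}

/* V4L2 layer, cf. vb2_queue_init: derive V4L2 fields, then call the core */
static int mock_vb2_queue_init(struct mock_vb2_queue *q)
{
    if (!q)
        return -EINVAL;
    q->is_output = (q->type == MOCK_BUF_TYPE_VIDEO_OUTPUT); /* simplified */
    return mock_vb2_core_queue_init(q);
}

/* Hypothetical driver callbacks, playing the role of uvc_queue.c */
static int demo_queue_setup(unsigned int *nbuffers)
{
    if (*nbuffers > DEMO_MAX_BUFFERS)
        *nbuffers = DEMO_MAX_BUFFERS;
    return 0;
}

static void demo_buf_queue(int index) { (void)index; }

static const struct mock_vb2_ops demo_qops = {
    .queue_setup = demo_queue_setup,
    .buf_queue = demo_buf_queue,
    /* .start_streaming left NULL: it is optional */
};

static const int demo_mem_ops; /* placeholder for a vb2_mem_ops table */

/* UVC layer, cf. uvcg_queue_init: fill the queue, then hand it to vb2 */
static int demo_uvcg_queue_init(struct mock_vb2_queue *q, unsigned int type)
{
    q->type = type;
    q->io_modes = MOCK_VB2_MMAP | MOCK_VB2_USERPTR;
    q->ops = &demo_qops;
    q->mem_ops = &demo_mem_ops;
    return mock_vb2_queue_init(q);
}
```

Keeping the validation in the core layer means every driver that skips a mandatory callback fails at init time instead of crashing later at REQBUFS or STREAMON.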

2、uvc_queue.c

- uvc_queue_setup // vb2_ops .queue_setup
- uvc_buffer_prepare // vb2_ops .buf_prepare
- uvc_buffer_queue // vb2_ops .buf_queue
- uvcg_queue_init
- uvcg_free_buffers
- uvcg_alloc_buffers
- uvcg_query_buffer
- uvcg_dequeue_buffer
- uvcg_queue_poll
- uvcg_queue_mmap
- uvcg_queue_get_unmapped_area
- uvcg_queue_cancel
- uvcg_queue_enable
- uvcg_queue_next_buffer
- uvcg_queue_head
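
uvc_buffer_queue, uvcg_queue_head and uvcg_queue_next_buffer together manage the driver's irqqueue list. Buffers queued from user space are appended, the pump reads from the head, and a finished buffer is removed and handed back (vb2_buffer_done in the driver) while the next one becomes the head. The following is a minimal user-space model under simplifying assumptions: a hand-rolled singly linked list stands in for list_head, the irqlock spinlock the driver takes is omitted, and all `mock_*` names are hypothetical.

```c
#include <stddef.h>

/* Hypothetical buffer; the real struct uvc_buffer embeds a vb2 buffer */
struct mock_buf {
    int index;
    int done;              /* set when handed back to vb2 */
    struct mock_buf *next;
};

/* head/tail play the role of queue->irqqueue */
struct mock_queue {
    struct mock_buf *head, *tail;
};

/* cf. uvc_buffer_queue: append to the tail of irqqueue */
static void mock_buffer_queue(struct mock_queue *q, struct mock_buf *b)
{
    b->next = NULL;
    b->done = 0;
    if (q->tail)
        q->tail->next = b;
    else
        q->head = b;
    q->tail = b;
}

/* cf. uvcg_queue_head: first buffer waiting to be sent, or NULL */
static struct mock_buf *mock_queue_head(struct mock_queue *q)
{
    return q->head;
}

/* cf. uvcg_queue_next_buffer: retire buf (must be the head), return next */
static struct mock_buf *mock_queue_next_buffer(struct mock_queue *q,
                                               struct mock_buf *buf)
{
    if (q->head != buf)
        return NULL;       /* this sketch only retires the head */
    q->head = buf->next;
    if (!q->head)
        q->tail = NULL;
    buf->next = NULL;
    buf->done = 1;         /* vb2_buffer_done() in the driver */
    return q->head;
}
```

A pump loop in this style keeps working on `mock_queue_head()` until the buffer's data is used up, then calls `mock_queue_next_buffer()` to move on.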

3、uvc_v4l2.c

- uvc_send_response
- uvc_v4l2_querycap
- uvc_v4l2_get_format
- uvc_v4l2_set_format
- uvc_v4l2_reqbufs
- uvc_v4l2_querybuf
- uvc_v4l2_qbuf
- uvc_v4l2_dqbuf
- uvc_v4l2_streamon
- uvc_v4l2_streamoff
- uvc_v4l2_subscribe_event
- uvc_v4l2_unsubscribe_event
- uvc_v4l2_extend_configuration
- uvc_v4l2_ioctl_default
- uvc_v4l2_open
- uvc_v4l2_release
- uvc_v4l2_mmap
- uvc_v4l2_poll
- uvcg_v4l2_get_unmapped_area

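In videobuf2-core.c, queue callbacks such as start_streaming are invoked through the call_qop macro. This is the CONFIG_VIDEO_ADV_DEBUG variant, which also counts successful calls:

    #define call_qop(q, op, args...)                                \
    ({                                                              \
            int err;                                                \
                                                                    \
            log_qop(q, op);                                         \
            err = (q)->ops->op ? (q)->ops->op(args) : 0;            \
            if (!err)                                               \
                    (q)->cnt_ ## op++;                              \
            err;                                                    \
    })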
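
call_qop calls a queue operation only if the driver provided it. A NULL op counts as success (0), and in the debug variant each successful call bumps the matching `cnt_<op>` counter. The sketch below compiles the same macro body in user space against hypothetical `mock_ops`/`mock_q` types, with log_qop reduced to a no-op. It needs GCC or Clang, because it relies on statement expressions and named variadic macro arguments.

```c
#include <errno.h>
#include <stddef.h>

struct mock_q;

/* Hypothetical ops table; only the members call_qop touches */
struct mock_ops {
    int (*start_streaming)(struct mock_q *q, unsigned int count);
    int (*queue_setup)(struct mock_q *q);
};

struct mock_q {
    const struct mock_ops *ops;
    unsigned int cnt_start_streaming; /* bumped by call_qop on success */
    unsigned int cnt_queue_setup;
};

/* The kernel's log_qop prints a debug message; a no-op is enough here */
#define log_qop(q, op) do { } while (0)

/* Same body as the debug call_qop quoted above */
#define call_qop(q, op, args...)                                \
({                                                              \
    int err;                                                    \
                                                                \
    log_qop(q, op);                                             \
    err = (q)->ops->op ? (q)->ops->op(args) : 0;                \
    if (!err)                                                   \
        (q)->cnt_ ## op++;                                      \
    err;                                                        \
})

static int demo_failing_setup(struct mock_q *q)
{
    (void)q;
    return -EINVAL; /* simulate a driver callback that fails */
}

static const struct mock_ops demo_ops = {
    .start_streaming = NULL, /* op not provided by the driver */
    .queue_setup = demo_failing_setup,
};
```

This matters for the streamon chain: if a vb2_ops table leaves start_streaming unset (as the gadget's uvc_queue_qops appears to), `call_qop(q, start_streaming, ...)` simply evaluates to 0.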

Call chain from registration to VIDIOC_STREAMON:

- uvc_function_bind // f_uvc.c
  - uvc_register_video // f_uvc.c
    - struct uvc_v4l2_ioctl_ops // uvc_v4l2.c, installed as the ioctl ops of the video device
      - uvc_v4l2_streamon // uvc_v4l2.c
        - uvcg_video_enable // uvc_video.c
          - uvcg_queue_enable // uvc_queue.c
            - vb2_streamon // videobuf2-v4l2.c
              - vb2_core_streamon // videobuf2-core.c
                - vb2_start_streaming // videobuf2-core.c
                  - call_qop(q, start_streaming, q, atomic_read(&q->owned_by_drv_count)) // videobuf2-core.c
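
vb2_core_streamon does not necessarily start streaming immediately. As I understand videobuf2-core.c, vb2_start_streaming runs only once queued_count reaches min_buffers_needed. Otherwise the start is deferred to a later VIDIOC_QBUF. The user-space sketch below models only that rule. The `mock_*` names are hypothetical, and the real functions also hand buffers to the driver, check num_buffers, unwind on errors and take locks.

```c
/* Simplified stand-in for the struct vb2_queue fields involved */
struct mock_vb2q {
    unsigned int queued_count;       /* buffers queued by user space */
    unsigned int min_buffers_needed; /* 0 means "start at STREAMON" */
    int streaming;                   /* set by STREAMON */
    int start_streaming_called;      /* set once the driver was started */
    int (*start_streaming)(struct mock_vb2q *q, unsigned int count); /* optional */
};

/* cf. vb2_start_streaming: call the optional op, NULL counts as success */
static int mock_start_streaming(struct mock_vb2q *q)
{
    int ret = q->start_streaming ? q->start_streaming(q, q->queued_count) : 0;

    if (!ret)
        q->start_streaming_called = 1;
    return ret;
}

/* cf. vb2_core_streamon: start now only if enough buffers are queued */
static int mock_streamon(struct mock_vb2q *q)
{
    if (q->streaming)
        return 0; /* already streaming */
    if (q->queued_count >= q->min_buffers_needed) {
        int ret = mock_start_streaming(q);

        if (ret)
            return ret;
    }
    q->streaming = 1;
    return 0;
}

/* cf. vb2_core_qbuf: a later QBUF may trigger the deferred start */
static int mock_qbuf(struct mock_vb2q *q)
{
    q->queued_count++;
    if (q->streaming && !q->start_streaming_called &&
        q->queued_count >= q->min_buffers_needed)
        return mock_start_streaming(q);
    return 0;
}
```

If min_buffers_needed stays 0 (as it appears to for the UVC gadget queue), the start happens right at STREAMON.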

4、v4l2-core

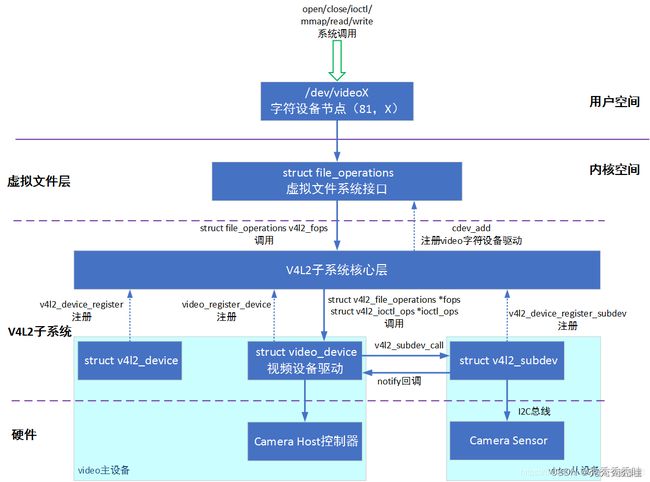

Reference: https://blog.csdn.net/u013836909/article/details/125360024

    // drivers/media/v4l2-core/v4l2-dev.c
    static const struct file_operations v4l2_fops = {
            .owner = THIS_MODULE,
            .read = v4l2_read,
            .write = v4l2_write,
            .open = v4l2_open,
            .get_unmapped_area = v4l2_get_unmapped_area,
            .mmap = v4l2_mmap,
            .unlocked_ioctl = v4l2_ioctl,
    #ifdef CONFIG_COMPAT
            .compat_ioctl = v4l2_compat_ioctl32,
    #endif
            .release = v4l2_release,
            .poll = v4l2_poll,
            .llseek = no_llseek,
    };

- video_register_device // include/media/v4l2-dev.h
  - __video_register_device // drivers/media/v4l2-core/v4l2-dev.c
    - device_register // called in v4l2-dev.c; this registration is what gets the device node (/dev/videoN) created automatically

    // include/media/v4l2-dev.h
    /**
     * struct v4l2_file_operations - fs operations used by a V4L2 device
     *
     * @owner: pointer to struct module
     * @read: operations needed to implement the read() syscall
     * @write: operations needed to implement the write() syscall
     * @poll: operations needed to implement the poll() syscall
     * @unlocked_ioctl: operations needed to implement the ioctl() syscall
     * @compat_ioctl32: operations needed to implement the ioctl() syscall for
     *      the special case where the Kernel uses 64 bits instructions, but
     *      the userspace uses 32 bits.
     * @get_unmapped_area: called by the mmap() syscall, used when %!CONFIG_MMU
     * @mmap: operations needed to implement the mmap() syscall
     * @open: operations needed to implement the open() syscall
     * @release: operations needed to implement the release() syscall
     *
     * .. note::
     *
     *      Those operations are used to implement the fs struct file_operations
     *      at the V4L2 drivers. The V4L2 core overrides the fs ops with some
     *      extra logic needed by the subsystem.
     */
    struct v4l2_file_operations {
            struct module *owner;
            ssize_t (*read) (struct file *, char __user *, size_t, loff_t *);
            ssize_t (*write) (struct file *, const char __user *, size_t, loff_t *);
            __poll_t (*poll) (struct file *, struct poll_table_struct *);
            long (*unlocked_ioctl) (struct file *, unsigned int, unsigned long);
    #ifdef CONFIG_COMPAT
            long (*compat_ioctl32) (struct file *, unsigned int, unsigned long);
    #endif
            unsigned long (*get_unmapped_area) (struct file *, unsigned long,
                                    unsigned long, unsigned long, unsigned long);
            int (*mmap) (struct file *, struct vm_area_struct *);
            int (*open) (struct file *);
            int (*release) (struct file *);
    };


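Through the v4l2_fops table provided by the V4L2 subsystem, file operations on the device node are forwarded to the struct v4l2_file_operations methods that the underlying driver implements for its video device: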

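- __video_register_device
  - vdev->cdev->ops = &v4l2_fops
- v4l2_open
  - vdev->fops->open(filp) // calls uvc_v4l2_open in uvc_v4l2.c
- v4l2_ioctl
  - vdev->fops->unlocked_ioctl(filp, cmd, arg) // uvc_v4l2.c sets .unlocked_ioctl = video_ioctl2 (defined in v4l2-ioctl.c)
    - video_usercopy(file, cmd, arg, __video_do_ioctl)
      - __video_do_ioctl is called back
        - ops->vidioc_default // for commands the V4L2 core does not know (e.g. UVCIOC_SEND_RESPONSE): calls uvc_v4l2_ioctl_default in uvc_v4l2.c, registered as .vidioc_default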
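
This forwarding is two levels of function-pointer tables. The core's v4l2_fops forwards to the driver's v4l2_file_operations. For ioctls, video_ioctl2/__video_do_ioctl looks the command up in the driver's v4l2_ioctl_ops and falls back to vidioc_default for commands it does not know. The user-space sketch below models that dispatch with hypothetical `mock_*`/`demo_*` types and made-up command numbers. Locking, priority handling and the usercopy step are left out, and the -ENODEV/-ENOTTY choices follow my reading of v4l2_open/v4l2_ioctl rather than a verified copy.

```c
#include <errno.h>
#include <stddef.h>

struct mock_file;

/* Driver-level file ops, cf. struct v4l2_file_operations */
struct mock_v4l2_fops {
    int (*open)(struct mock_file *f);
    long (*unlocked_ioctl)(struct mock_file *f, unsigned int cmd,
                           unsigned long arg);
};

/* Driver-level ioctl table, cf. struct v4l2_ioctl_ops */
struct mock_ioctl_ops {
    int (*vidioc_streamon)(struct mock_file *f);
    long (*vidioc_default)(struct mock_file *f, unsigned int cmd, void *arg);
};

struct mock_video_device {
    int registered;
    const struct mock_v4l2_fops *fops;
    const struct mock_ioctl_ops *ioctl_ops;
};

struct mock_file {
    struct mock_video_device *vdev;
};

#define MOCK_VIDIOC_STREAMON 1u /* hypothetical command number */

/* Core open, modeled on v4l2_open: forward only to a registered device */
static int mock_v4l2_open(struct mock_file *f)
{
    struct mock_video_device *vdev = f->vdev;

    if (!vdev->fops->open)
        return 0;
    return vdev->registered ? vdev->fops->open(f) : -ENODEV;
}

/* Core ioctl, modeled on v4l2_ioctl */
static long mock_v4l2_ioctl(struct mock_file *f, unsigned int cmd,
                            unsigned long arg)
{
    struct mock_video_device *vdev = f->vdev;

    if (!vdev->fops->unlocked_ioctl)
        return -ENOTTY;
    return vdev->registered ? vdev->fops->unlocked_ioctl(f, cmd, arg) : -ENODEV;
}

/* Stand-in for video_ioctl2 -> __video_do_ioctl: table lookup, then default */
static long mock_video_ioctl2(struct mock_file *f, unsigned int cmd,
                              unsigned long arg)
{
    const struct mock_ioctl_ops *ops = f->vdev->ioctl_ops;

    if (cmd == MOCK_VIDIOC_STREAMON)
        return ops->vidioc_streamon ? ops->vidioc_streamon(f) : -ENOTTY;
    /* Not a known V4L2 command: hand it to the driver's default handler */
    return ops->vidioc_default ? ops->vidioc_default(f, cmd, (void *)arg)
                               : -ENOTTY;
}

/* Hypothetical driver side, playing the role of uvc_v4l2.c */
static int demo_open_calls;

static int demo_open(struct mock_file *f) { (void)f; demo_open_calls++; return 0; }
static int demo_streamon(struct mock_file *f) { (void)f; return 0; }
static long demo_default(struct mock_file *f, unsigned int cmd, void *arg)
{
    (void)f;
    (void)arg;
    return cmd == 0x42u ? 7 : -ENOTTY; /* pretend 0x42 is a private ioctl */
}

static const struct mock_v4l2_fops demo_fops = {
    .open = demo_open,
    .unlocked_ioctl = mock_video_ioctl2, /* like .unlocked_ioctl = video_ioctl2 */
};
static const struct mock_ioctl_ops demo_ioctl_ops = {
    .vidioc_streamon = demo_streamon,
    .vidioc_default = demo_default,
};
```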

    // drivers/media/v4l2-core/v4l2-ioctl.c
    long video_ioctl2(struct file *file,
                   unsigned int cmd, unsigned long arg)
    {
            return video_usercopy(file, cmd, arg, __video_do_ioctl);
    }
    EXPORT_SYMBOL(video_ioctl2);
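
video_usercopy lets __video_do_ioctl work on a kernel copy of the ioctl argument. The argument is copied in from user space, the handler runs on the copy, and for commands that return data the result is copied back. Below is a user-space model of that shape only. Several details are assumptions made for the sketch: memcpy stands in for copy_from_user/copy_to_user, the hypothetical flags MOCK_IOC_WRITE/MOCK_IOC_READ replace `_IOC_DIR(cmd)`, and the fixed 128-byte buffer and "copy back only on success" rule are simplifications. The real code has extra handling, for example for array arguments.

```c
#include <errno.h>
#include <stddef.h>
#include <string.h>

#define MOCK_IOC_WRITE 1u /* argument flows user -> kernel (hypothetical flag) */
#define MOCK_IOC_READ  2u /* argument flows kernel -> user (hypothetical flag) */

typedef long (*mock_ioctl_fn)(unsigned int cmd, void *karg);

/*
 * Shape of video_usercopy(): copy in, run the handler on the kernel copy,
 * copy out. memcpy() stands in for copy_from_user()/copy_to_user().
 */
static long mock_usercopy(unsigned int cmd, unsigned int dir, size_t size,
                          void *user_arg, mock_ioctl_fn func)
{
    long long storage[16];                 /* 128 bytes, suitably aligned */
    unsigned char *kbuf = (unsigned char *)storage;
    long ret;

    if (size > sizeof(storage))
        return -EINVAL;                    /* sketch only: fixed-size buffer */
    memset(kbuf, 0, size);
    if (dir & MOCK_IOC_WRITE)
        memcpy(kbuf, user_arg, size);
    ret = func(cmd, kbuf);
    if (ret >= 0 && (dir & MOCK_IOC_READ))
        memcpy(user_arg, kbuf, size);      /* copy results back on success */
    return ret;
}

/* Hypothetical handler: doubles an int argument in place */
static long demo_double(unsigned int cmd, void *karg)
{
    int v;

    (void)cmd;
    memcpy(&v, karg, sizeof(v));
    v *= 2;
    memcpy(karg, &v, sizeof(v));
    return 0;
}
```

Because the handler only ever sees `kbuf`, it can dereference the argument directly. This is why handlers such as uvc_v4l2_ioctl_default receive a plain kernel pointer.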

5、Data transfer

Reference: https://blog.csdn.net/qq_32938605/article/details/119787380

1、Allocating a gadget request

    // \udc\ambarella_udc.c
    static const struct usb_ep_ops ambarella_ep_ops = {
            .enable = ambarella_udc_ep_enable,
            .disable = ambarella_udc_ep_disable,
            .alloc_request = ambarella_udc_alloc_request,
            .free_request = ambarella_udc_free_request,
            .queue = ambarella_udc_queue,
            .dequeue = ambarella_udc_dequeue,
            .set_halt = ambarella_udc_set_halt,
            /* fifo ops not implemented */
    };

usb_ep_alloc_request allocates a gadget request:

    // \udc\core.c
    /**
     * usb_ep_alloc_request - allocate a request object to use with this endpoint
     * @ep:the endpoint to be used with the request
     * @gfp_flags:GFP_* flags to use
     *
     * Request objects must be allocated with this call, since they normally
     * need controller-specific setup and may even need endpoint-specific
     * resources such as allocation of DMA descriptors.
     * Requests may be submitted with usb_ep_queue(), and receive a single
     * completion callback. Free requests with usb_ep_free_request(), when
     * they are no longer needed.
     *
     * Returns the request, or null if one could not be allocated.
     */
    struct usb_request *usb_ep_alloc_request(struct usb_ep *ep,
                                                   gfp_t gfp_flags)
    {
            struct usb_request *req = NULL;

            req = ep->ops->alloc_request(ep, gfp_flags);

            trace_usb_ep_alloc_request(ep, req, req ? 0 : -ENOMEM);

            return req;
    }
    EXPORT_SYMBOL_GPL(usb_ep_alloc_request);

In the bind() function of f_uvc.c (uvc_function_bind), the control endpoint request is preallocated:

    /* Preallocate control endpoint request. */
    uvc->control_req = usb_ep_alloc_request(cdev->gadget->ep0, GFP_KERNEL);
    uvc->control_buf = kmalloc(UVC_MAX_REQUEST_SIZE, GFP_KERNEL);
    if (uvc->control_req == NULL || uvc->control_buf == NULL) {
            ret = -ENOMEM;
            goto error;
    }

    uvc->control_req->buf = uvc->control_buf;
    uvc->control_req->complete = uvc_function_ep0_complete;
    uvc->control_req->context = uvc;

In ambarella_udc.c, the endpoint ops are installed at probe time:

- ambarella_udc_probe
  - ambarella_init_gadget
    - ep->ep.ops = &ambarella_ep_ops
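
The allocation path is a chain of function-pointer tables. The UDC driver installs its usb_ep_ops at probe time, usb_ep_alloc_request dispatches through `ep->ops`, and the function driver (f_uvc.c) then attaches its buffer, completion callback and context to the request. The user-space sketch below shows those three roles. Every `mock_*`/`demo_*` name is hypothetical, calloc/malloc replace the controller-specific allocation and kmalloc, and DEMO_MAX_REQUEST_SIZE is an assumed value.

```c
#include <errno.h>
#include <stdlib.h>

struct mock_ep;

/* Simplified struct usb_request */
struct mock_request {
    void *buf;
    void (*complete)(struct mock_ep *ep, struct mock_request *req);
    void *context;
};

/* cf. struct usb_ep_ops: filled in by the UDC driver */
struct mock_ep_ops {
    struct mock_request *(*alloc_request)(struct mock_ep *ep);
    void (*free_request)(struct mock_ep *ep, struct mock_request *req);
};

struct mock_ep {
    const struct mock_ep_ops *ops;
};

/* UDC side, cf. ambarella_udc_alloc_request / ambarella_udc_free_request */
static struct mock_request *demo_udc_alloc_request(struct mock_ep *ep)
{
    (void)ep;
    return calloc(1, sizeof(struct mock_request));
}

static void demo_udc_free_request(struct mock_ep *ep, struct mock_request *req)
{
    (void)ep;
    free(req);
}

static const struct mock_ep_ops demo_udc_ep_ops = {
    .alloc_request = demo_udc_alloc_request,
    .free_request = demo_udc_free_request,
};

/* Probe time, cf. ambarella_init_gadget: ep->ep.ops = &ambarella_ep_ops */
static void demo_udc_init_ep(struct mock_ep *ep)
{
    ep->ops = &demo_udc_ep_ops;
}

/* Gadget core, cf. usb_ep_alloc_request: a thin dispatcher */
static struct mock_request *mock_usb_ep_alloc_request(struct mock_ep *ep)
{
    return ep->ops->alloc_request(ep);
}

/* Function driver, cf. the control request setup in uvc_function_bind */
#define DEMO_MAX_REQUEST_SIZE 64 /* assumed size */

struct demo_uvc {
    struct mock_request *control_req;
    void *control_buf;
};

static void demo_ep0_complete(struct mock_ep *ep, struct mock_request *req)
{
    (void)ep;
    (void)req;
}

static int demo_bind(struct demo_uvc *uvc, struct mock_ep *ep0)
{
    uvc->control_req = mock_usb_ep_alloc_request(ep0);
    uvc->control_buf = malloc(DEMO_MAX_REQUEST_SIZE);
    if (uvc->control_req == NULL || uvc->control_buf == NULL) {
        /* error path: release whatever was obtained */
        if (uvc->control_req)
            ep0->ops->free_request(ep0, uvc->control_req);
        free(uvc->control_buf);
        uvc->control_req = NULL;
        uvc->control_buf = NULL;
        return -ENOMEM;
    }
    uvc->control_req->buf = uvc->control_buf;
    uvc->control_req->complete = demo_ep0_complete;
    uvc->control_req->context = uvc;
    return 0;
}
```

The function driver never needs to know which controller it runs on. Swapping the UDC only changes which ops table `demo_udc_init_ep` installs.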

2、Queuing a request

usb_ep_queue queues (submits) a request on an endpoint:

    /**
     * usb_ep_queue - queues (submits) an I/O request to an endpoint.
     * @ep:the endpoint associated with the request
     * @req:the request being submitted
     * @gfp_flags: GFP_* flags to use in case the lower level driver couldn't
     *      pre-allocate all necessary memory with the request.
     *
     * This tells the device controller to perform the specified request through
     * that endpoint (reading or writing a buffer). When the request completes,
     * including being canceled by usb_ep_dequeue(), the request's completion
     * routine is called to return the request to the driver. Any endpoint
     * (except control endpoints like ep0) may have more than one transfer
     * request queued; they complete in FIFO order. Once a gadget driver
     * submits a request, that request may not be examined or modified until it
     * is given back to that driver through the completion callback.
     *
     * Each request is turned into one or more packets. The controller driver
     * never merges adjacent requests into the same packet. OUT transfers
     * will sometimes use data that's already buffered in the hardware.
     * Drivers can rely on the fact that the first byte of the request's buffer
     * always corresponds to the first byte of some USB packet, for both
     * IN and OUT transfers.
     *
     * Bulk endpoints can queue any amount of data; the transfer is packetized
     * automatically. The last packet will be short if the request doesn't fill it
     * out completely. Zero length packets (ZLPs) should be avoided in portable
     * protocols since not all usb hardware can successfully handle zero length
     * packets. (ZLPs may be explicitly written, and may be implicitly written if
     * the request 'zero' flag is set.) Bulk endpoints may also be used
     * for interrupt transfers; but the reverse is not true, and some endpoints
     * won't support every interrupt transfer. (Such as 768 byte packets.)
     *
     * Interrupt-only endpoints are less functional than bulk endpoints, for
     * example by not supporting queueing or not handling buffers that are
     * larger than the endpoint's maxpacket size. They may also treat data
     * toggle differently.
     *
     * Control endpoints ... after getting a setup() callback, the driver queues
     * one response (even if it would be zero length). That enables the
     * status ack, after transferring data as specified in the response. Setup
     * functions may return negative error codes to generate protocol stalls.
     * (Note that some USB device controllers disallow protocol stall responses
     * in some cases.) When control responses are deferred (the response is
     * written after the setup callback returns), then usb_ep_set_halt() may be
     * used on ep0 to trigger protocol stalls. Depending on the controller,
     * it may not be possible to trigger a status-stage protocol stall when the
     * data stage is over, that is, from within the response's completion
     * routine.
     *
     * For periodic endpoints, like interrupt or isochronous ones, the usb host
     * arranges to poll once per interval, and the gadget driver usually will
     * have queued some data to transfer at that time.
     *
     * Note that @req's ->complete() callback must never be called from
     * within usb_ep_queue() as that can create deadlock situations.
     *
     * This routine may be called in interrupt context.
     *
     * Returns zero, or a negative error code. Endpoints that are not enabled
     * report errors; errors will also be
     * reported when the usb peripheral is disconnected.
     *
     * If and only if @req is successfully queued (the return value is zero),
     * @req->complete() will be called exactly once, when the Gadget core and
     * UDC are finished with the request. When the completion function is called,
     * control of the request is returned to the device driver which submitted it.
     * The completion handler may then immediately free or reuse @req.
     */
    int usb_ep_queue(struct usb_ep *ep,
                           struct usb_request *req, gfp_t gfp_flags)
    {
            int ret = 0;

            if (WARN_ON_ONCE(!ep->enabled && ep->address)) {
                    ret = -ESHUTDOWN;
                    goto out;
            }

            ret = ep->ops->queue(ep, req, gfp_flags);

    out:
            trace_usb_ep_queue(ep, req, ret);

            return ret;
    }
    EXPORT_SYMBOL_GPL(usb_ep_queue);
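
The kernel-doc above states two rules that are easier to see in action:

- A disabled endpoint other than ep0 (address 0) is rejected with -ESHUTDOWN.
- The completion callback runs exactly once, later and never from inside usb_ep_queue, with requests on one endpoint completing in FIFO order.

The user-space sketch below models both. `mock_udc_irq` is a hypothetical stand-in for the controller's transfer-complete interrupt, and the `mock_*` types replace struct usb_ep / struct usb_request.

```c
#include <errno.h>
#include <stddef.h>

struct mock_ep;

/* Simplified struct usb_request */
struct mock_req {
    int id;
    int status;
    void (*complete)(struct mock_ep *ep, struct mock_req *req);
    struct mock_req *next;          /* controller-private link while queued */
};

/* Simplified struct usb_ep plus the controller's per-endpoint FIFO */
struct mock_ep {
    int enabled;
    unsigned char address;          /* 0 for ep0 */
    struct mock_req *head, *tail;   /* requests currently owned by the UDC */
};

/* cf. usb_ep_queue + ep->ops->queue */
static int mock_usb_ep_queue(struct mock_ep *ep, struct mock_req *req)
{
    if (!ep->enabled && ep->address)
        return -ESHUTDOWN;          /* same guard as usb_ep_queue */
    /* The UDC only records the request; it must not call complete() here */
    req->next = NULL;
    if (ep->tail)
        ep->tail->next = req;
    else
        ep->head = req;
    ep->tail = req;
    return 0;
}

/* Hypothetical "transfer finished" interrupt: give back the oldest request */
static int mock_udc_irq(struct mock_ep *ep)
{
    struct mock_req *req = ep->head;

    if (!req)
        return 0;
    ep->head = req->next;
    if (!ep->head)
        ep->tail = NULL;
    req->status = 0;
    req->complete(ep, req);         /* exactly once; caller owns req again */
    return 1;
}

/* Hypothetical completion handler that records the completion order */
static int demo_order[8];
static int demo_count;

static void demo_complete(struct mock_ep *ep, struct mock_req *req)
{
    (void)ep;
    if (demo_count < 8)
        demo_order[demo_count++] = req->id;
}
```

Deferring completion to the interrupt path is what lets a completion handler such as uvc_video_complete safely requeue the same request without re-entering usb_ep_queue recursively.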

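Call flow that leads to usb_ep_queue

// uvc_v4l2.c

- ioctl(dev->fd, UVCIOC_SEND_RESPONSE, &resp) // application (user space)
  - uvc_v4l2_ioctl_default // reached via v4l2_ioctl → video_ioctl2 → ops->vidioc_default
    - uvc_send_response(uvc, arg)
      - usb_ep_queue(cdev->gadget->ep0, req, GFP_KERNEL)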
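
uvc_send_response is where the application's answer to a control request becomes an ep0 transfer. As far as I recall the driver code, the steps are:

- A negative length in the response stalls ep0.
- Otherwise, the payload is trimmed to the wLength of the pending SETUP (uvc->event_length).
- `req->zero` is set when the response is shorter than requested.
- The request is queued on ep0.

The user-space sketch below follows those assumptions. The `mock_*` types are hypothetical (mock_request_data only mirrors the assumed layout of struct uvc_request_data), the halt/queue calls are replaced by flags, and the -EINVAL length check is an extra guard added for the sketch.

```c
#include <errno.h>
#include <stdint.h>
#include <string.h>

/* Assumed layout of struct uvc_request_data: signed length + 60 data bytes */
struct mock_request_data {
    int32_t length;
    uint8_t data[60];
};

struct mock_ctrl_req {
    uint8_t buf[64];      /* the preallocated control_buf */
    unsigned int length;
    int zero;             /* end with a ZLP if length is a multiple of maxpacket */
};

struct mock_uvc {
    unsigned int event_length;     /* wLength of the pending SETUP */
    struct mock_ctrl_req control_req;
    int halted;                    /* would be usb_ep_set_halt(ep0) */
    int queued;                    /* would be usb_ep_queue(ep0, req, ...) */
};

static int mock_send_response(struct mock_uvc *uvc,
                              const struct mock_request_data *data)
{
    struct mock_ctrl_req *req = &uvc->control_req;
    unsigned int len;

    if (data->length < 0) {
        uvc->halted = 1;           /* application asked for a protocol stall */
        return 0;
    }
    len = (unsigned int)data->length;
    if (len > sizeof(data->data))
        return -EINVAL;            /* extra guard added for this sketch */

    req->length = len < uvc->event_length ? len : uvc->event_length;
    req->zero = len < uvc->event_length;
    memcpy(req->buf, data->data, req->length);
    uvc->queued = 1;
    return 0;
}
```

From the application side, this is why a response longer than the host's wLength is silently truncated, while a deliberately negative length is the way to reject an unsupported control.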