Fixing a MongoDB node stuck in the RECOVERING state

MongoDB version: 5.0.4

The mongod log on this node kept printing the following entries:

{"t":{"$date":"2023-06-19T15:24:50.156+08:00"},"s":"I", "c":"REPL", "id":5579708, "ctx":"ReplCoordExtern-0","msg":"We are too stale to use candidate as a sync source. Denylisting this sync source because our last fetched timestamp is before their earliest timestamp","attr":{"candidate":"192.168.xx.xx2:27017","lastOpTimeFetchedTimestamp":{"$timestamp":{"t":1679574780,"i":1}},"remoteEarliestOpTimeTimestamp":{"$timestamp":{"t":1685543933,"i":49}},"denylistDurationMinutes":1,"denylistUntil":{"$date":"2023-06-19T07:25:50.156Z"}}}

{"t":{"$date":"2023-06-19T15:24:50.157+08:00"},"s":"I", "c":"REPL", "id":21799, "ctx":"ReplCoordExtern-0","msg":"Sync source candidate chosen","attr":{"syncSource":"192.168.xx.xx1:27017"}}

{"t":{"$date":"2023-06-19T15:24:50.157+08:00"},"s":"I", "c":"REPL", "id":5579708, "ctx":"ReplCoordExtern-0","msg":"We are too stale to use candidate as a sync source. Denylisting this sync source because our last fetched timestamp is before their earliest timestamp","attr":{"candidate":"192.168.xx.xx1:27017","lastOpTimeFetchedTimestamp":{"$timestamp":{"t":1679574780,"i":1}},"remoteEarliestOpTimeTimestamp":{"$timestamp":{"t":1685543941,"i":9}},"denylistDurationMinutes":1,"denylistUntil":{"$date":"2023-06-19T07:25:50.157Z"}}}

{"t":{"$date":"2023-06-19T15:24:50.157+08:00"},"s":"I", "c":"REPL", "id":21798, "ctx":"ReplCoordExtern-0","msg":"Could not find member to sync from"}

{"t":{"$date":"2023-06-19T15:25:27.576+08:00"},"s":"I", "c":"STORAGE", "id":22430, "ctx":"Checkpointer","msg":"WiredTiger message","attr":{"message":"[1687159527:576881][80855:0x7f73a7094700], WT_SESSION.checkpoint: [WT_VERB_CHECKPOINT_PROGRESS] saving checkpoint snapshot min: 8008, snapshot max: 8008 snapshot count: 0, oldest timestamp: (1679574480, 1) , meta checkpoint timestamp: (1679574780, 1) base write gen: 83012912"}}

{"t":{"$date":"2023-06-19T15:25:50.165+08:00"},"s":"I", "c":"REPL", "id":21799, "ctx":"BackgroundSync","msg":"Sync source candidate chosen","attr":{"syncSource":"192.168.xx.xx2:27017"}}

{"t":{"$date":"2023-06-19T15:25:50.166+08:00"},"s":"I", "c":"REPL", "id":5579708, "ctx":"ReplCoordExtern-0","msg":"We are too stale to use candidate as a sync source. Denylisting this sync source because our last fetched timestamp is before their earliest timestamp","attr":{"candidate":"192.168.xx.xx2:27017","lastOpTimeFetchedTimestamp":{"$timestamp":{"t":1679574780,"i":1}},"remoteEarliestOpTimeTimestamp":{"$timestamp":{"t":1685543933,"i":49}},"denylistDurationMinutes":1,"denylistUntil":{"$date":"2023-06-19T07:26:50.166Z"}}}

{"t":{"$date":"2023-06-19T15:25:50.166+08:00"},"s":"I", "c":"REPL", "id":21799, "ctx":"ReplCoordExtern-0","msg":"Sync source candidate chosen","attr":{"syncSource":"192.168.xx.xx1:27017"}}

{"t":{"$date":"2023-06-19T15:25:50.167+08:00"},"s":"I", "c":"REPL", "id":5579708, "ctx":"ReplCoordExtern-0","msg":"We are too stale to use candidate as a sync source. Denylisting this sync source because our last fetched timestamp is before their earliest timestamp","attr":{"candidate":"192.168.xx.xx1:27017","lastOpTimeFetchedTimestamp":{"$timestamp":{"t":1679574780,"i":1}},"remoteEarliestOpTimeTimestamp":{"$timestamp":{"t":1685543941,"i":9}},"denylistDurationMinutes":1,"denylistUntil":{"$date":"2023-06-19T07:26:50.167Z"}}}

{"t":{"$date":"2023-06-19T15:25:50.167+08:00"},"s":"I", "c":"REPL", "id":21798, "ctx":"ReplCoordExtern-0","msg":"Could not find member to sync from"}

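The key message is this one:

We are too stale to use candidate as a sync source. Denylisting this sync source because our last fetched timestamp is before their earliest timestamp

This means the data on this node is too stale for replication to continue: the last oplog entry it fetched is older than the earliest entry still held by each candidate sync source.

In other words, the node has fallen too far behind.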
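To gauge how far behind the node is, the two timestamps in the log entry can be compared directly. The sketch below is only an illustration: the function name `stale_gap_days` is made up for this post, and the values are copied from the `lastOpTimeFetchedTimestamp` and `remoteEarliestOpTimeTimestamp` fields in the log above.

```shell
# Hypothetical helper: whole days between two Unix timestamps (in seconds).
# $1 = lastOpTimeFetchedTimestamp.t, $2 = remoteEarliestOpTimeTimestamp.t
stale_gap_days() {
    echo $(( ($2 - $1) / 86400 ))
}

stale_gap_days 1679574780 1685543933   # prints 69
```

The last entry this node fetched is roughly 69 days older than the oldest entry the candidate still holds, so catching up from the oplog is no longer possible.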

The fix is to back up the cluster data first and then rebuild this node. The steps are as follows:

- Back up the data

mongodump --host=192.168.x.x --port=20000 --authenticationDatabase admin --username=root --password="xxxxx" -d databaseName --out=./databaseName

- Stop the problem node

systemctl stop mongod-shard1.service

- Delete the problem node's data

For example, my node is shard1, so delete everything under the shard1 data directory:

rm -rf /data/mongodb/shard1/data/*

- Restart the node

systemctl start mongod-shard1.service

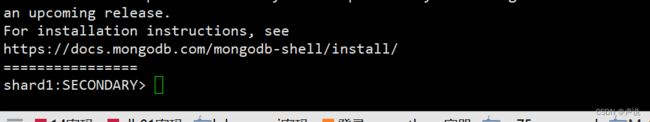

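When the node first starts, it is in the STARTUP2 state, which indicates that it is copying data from the primary. If you check the node's monitoring at this point, you will see high inbound bandwidth usage on it and correspondingly high outbound bandwidth usage on the primary.

Once the data has finished syncing, the node returns to normal.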
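Rather than checking by hand, the wait can be scripted. The following is a rough sketch, not something from the original procedure: `member_state` and `wait_for_secondary` are hypothetical helpers, `mongosh` is assumed to be installed (the legacy `mongo` shell accepts the same `--quiet` and `--eval` options), and authentication options are left out. It relies on `rs.status().myState`, which reports the numeric state of the member you are connected to.

```shell
# Assumption: prints the numeric replica set state of the member at host $1
# (rs.status().myState: 5 = STARTUP2, 3 = RECOVERING, 2 = SECONDARY).
# Authentication options are omitted; add the ones your deployment needs.
member_state() {
    mongosh --quiet --host "$1" --eval 'print(rs.status().myState)'
}

# Hypothetical helper: poll every $2 seconds, at most $3 times,
# and succeed as soon as the member reports SECONDARY.
wait_for_secondary() {
    host=$1 interval=$2 attempts=$3
    while [ "$attempts" -gt 0 ]; do
        state=$(member_state "$host")
        echo "myState=$state"
        [ "$state" = "2" ] && return 0
        attempts=$((attempts - 1))
        sleep "$interval"
    done
    return 1
}
```

Calling `wait_for_secondary <host>:<port> 30 120` would poll every 30 seconds for up to an hour. A large data set can take much longer to sync, so size the limits accordingly.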

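Follow-up improvements:

- Add replica set state monitoring that alerts whenever a member is in any state other than PRIMARY or SECONDARY; mongodb_exporter is recommended.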
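The alert rule itself is simple enough to sketch in shell. This is only an illustration, not a substitute for mongodb_exporter: the helper names are hypothetical, and the commented `mongosh` pipeline assumes `mongosh` is installed and omits authentication options.

```shell
# Hypothetical helper: succeed only for the two states treated as healthy here.
is_healthy_state() {
    case "$1" in
        PRIMARY|SECONDARY) return 0 ;;
        *) return 1 ;;
    esac
}

# Hypothetical helper: read "name stateStr" lines on stdin, print an alert
# for each unhealthy member, and return non-zero if any alert was printed.
unhealthy_members() {
    rc=0
    while read -r name state; do
        if ! is_healthy_state "$state"; then
            echo "ALERT: $name is $state"
            rc=1
        fi
    done
    return $rc
}

# Possible usage (assumption: <host> is any reachable replica set member):
# mongosh --quiet --host <host> \
#   --eval 'rs.status().members.forEach(m => print(m.name + " " + m.stateStr))' \
#   | unhealthy_members
```

Because `read` puts the rest of the line into `state`, a multi-word `stateStr` such as `(not reachable/healthy)` is also reported as an alert.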

Some commands that may come in handy:

rs.status();

mongorestore --host mongodb1.example.net --port 27017 --username user --password "pass" /opt/backup/mongodump-2011-10-24

Reference:

- https://stackoverflow.com/questions/14371239/why-a-member-of-mongodb-keep-recovering

- https://dba.stackexchange.com/questions/77881/mongo-db-replica-set-stuck-at-recovering-state