PySpark入口架构及Jupyter Notebook集成环境搭建

- 在Linux上安装Anaconda

- 集成PySpark-Installation

- 集成PySpark-Configuration

- 集成PySpark

- PySpark简介

- PySpark包介绍

- 使用PySpark处理数据

- PySpark中使用匿名函数

- SparkContext.addPyFile

- 在PySpark中使用SparkSQL

- Spark与Python第三方库混用

- Pandas DF与Spark DF

- 使用PySpark通过图形进行数据探索

在Linux上安装Anaconda

https://www.anaconda.com/distribution/

bash Anaconda3-5.1.0-Linux-x86_64.sh

集成PySpark-Installation

https://spark.apache.org/downloads.html

- 安装Spark

- 解压压缩包

- 在~/.bashrc中设置Spark环境变量

- SPARK_HOME

- SPARK_CONF_DIR

集成PySpark-Configuration

- 设置Jupyter Notebook允许从外部访问

Generate a password:

pyana

from notebook.auth import passwd

passwd()

cd ~

jupyter notebook --generate-config

vi ./.jupyter/jupyter_notebook_config.py

Update the following fields:

c.NotebookApp.allow_root = True

c.NotebookApp.ip = '*’

c.NotebookApp.open_browser = False

c.NotebookApp.password = 'sha1:*****************’

c.NotebookApp.port = 7070

集成PySpark

export PYSPARK_PYTHON=$anaconda_install_dir/bin/python3

export PYSPARK_DRIVER_PYTHON=$anaconda_install_dir/bin/jupyter

export PYSPARK_DRIVER_PYTHON_OPTS="notebook"

ipython_opts="notebook -pylab inline"

cd ~

source ./.bashrc

cd xxx/anaconda3/share

chmod +777 jupyter

pyspark

PySpark简介

- PySpark的使用场景

- 大数据处理或机器学习时的原型(prototype)开发

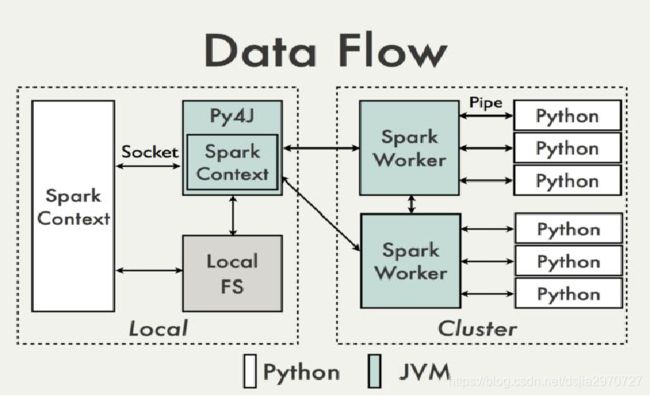

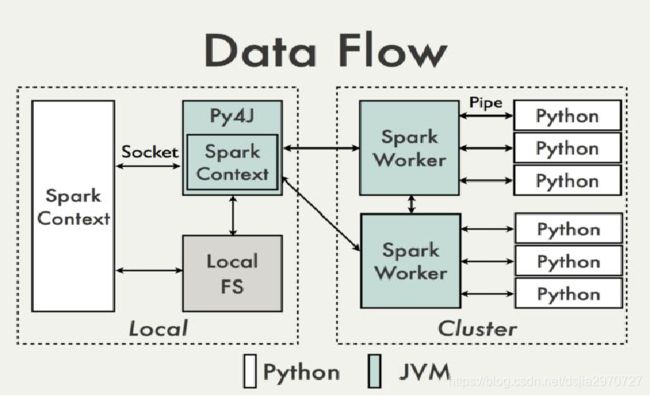

- PySpark结构体系

PySpark包介绍

- PySpark

- Core Classes:

- pyspark.SparkContext

- pyspark.RDD

- pyspark.sql.SQLContext

- pysparl.sql.DataFrame

- pyspark.streaming

- pyspark.streaming.StreamingContext

- pyspark.streaming.DStream

- pyspark.ml

- pyspark.mllib

使用PySpark处理数据

from pyspark import SparkContext

SparkContext.getOrCreate()

- 创建RDD

- 不支持

- 支持

- parallelize()

- textFile()

- wholeTextFiles()

PySpark中使用匿名函数

val a=sc.parallelize(List("dog","tiger","lion","cat","panther","eagle"))

val b=a.map(x=>(x,1))

b.collect

a=sc.parallelize(("dog","tiger","lion","cat","panther","eagle"))

b=a.map(lambda x:(x,1))

b.collect()

SparkContext.addPyFile

- addFile(path,recursive=False)

- 接收本地文件

- 通过SparkFiles.get()方法来获取文件的绝对路径

- addPyFile(path)

- 加载已存在的文件并调用其中的方法

#sci.py

def sqrt(num):

return num * num

def circle_area(r):

return 3.14 * sqrt(r)

sc.addPyFile("file:///root/sci.py")

from sci import circle_area

sc.parallelize([5, 9, 21]).map(lambda x : circle_area(x)).collect()

在PySpark中使用SparkSQL

from pyspark.sql import SparkSession

ss = SparkSession.builder.getOrCreate()

ss.read.format("csv").option("header", "true").load("file:///xxx.csv")

Spark与Python第三方库混用

- 使用Spark做大数据ETL

- 处理后的数据使用Python第三方库分析或展示

- Pandas做数分析

- Pandas DataFrame转Spark DataFrame

- spark.createDataFrame(pandas_df)

- Spark DataFrame转Pandas DataFrame

- Matplotlib实现数据可视化

- Scikit-learn完成机器学习

Pandas DF与Spark DF

# Pandas DataFrame to Spark DataFrame

import numpy as np

import pandas as pd

from pyspark.sql import SparkSession

spark = SparkSession.builder.getOrCreate()

pandas_df = pd.read_csv("./products.csv", header=None, usecols=[1, 3, 5])

print(pandas_df)

# convert to Spark DataFrame

spark_df = spark.createDataFrame(pandas_df)

spark_df.show()

df = spark_df.withColumnRenamed("1", "id").withColumnRenamed("3", "name").withColumnRenamed("5", "remark")

# convert back to Pandas DataFrame

df.toPandas()

使用PySpark通过图形进行数据探索

# from previous LifeExpentancy example

rdd = df.select("LifeExp").rdd.map(lambda x: x[0])

#把数据划为10个区间,并获得每个区间中的数据个数

(countries, bins) = rdd.histogram(10)

print(countries)

print(bins)

import matplotlib.pyplot as plt

import numpy as np

plt.hist(rdd.collect(), 10) # by default the # of bins is 10

plt.title("Life Expectancy Histogram")

plt.xlabel("Life Expectancy")

plt.ylabel("# of Countries")

![]()