NVCaffe P2PManager

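Multi-GPU training in Caffe mainly involves four classes: P2PManager, P2PSync, Solver, and Net.

Assuming training runs on four GPUs, the module dependency graph is shown in the figure below: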

Note that each Solver creates a thread that runs Solver::Reduce. Solver::Reduce in turn calls Net::Reduce and Solver::ApplyUpdate by way of Net::ReduceAndUpdate. This means Net::ForwardBackward and Net::ReduceAndUpdate execute asynchronously.
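To make the asynchronous relationship concrete, here is a minimal sketch in standard C++ of one thread producing gradients while a second thread reduces them. It is not NVCaffe code: `ReduceQueue`, `run_iteration`, and the end-of-iteration sentinel are hypothetical names invented for illustration, and the hand-off mechanism is an assumption.

```cpp
#include <cassert>
#include <condition_variable>
#include <mutex>
#include <queue>
#include <thread>
#include <vector>

// Hypothetical hand-off between the backward pass and the reduction thread.
// None of these names come from NVCaffe.
class ReduceQueue {
 public:
  void push(int param_id) {
    {
      std::lock_guard<std::mutex> lock(mu_);
      q_.push(param_id);
    }
    cv_.notify_one();
  }

  // Blocks until an id is available, then returns it.
  int pop() {
    std::unique_lock<std::mutex> lock(mu_);
    cv_.wait(lock, [this] { return !q_.empty(); });
    int id = q_.front();
    q_.pop();
    return id;
  }

 private:
  std::mutex mu_;
  std::condition_variable cv_;
  std::queue<int> q_;
};

// Simulates one iteration: the calling thread plays ForwardBackward and a
// second thread plays Reduce. Returns the order in which ids were reduced.
std::vector<int> run_iteration(int num_params) {
  assert(num_params >= 0);
  const int kEndOfIteration = -1;  // sentinel, an assumption of this sketch
  ReduceQueue queue;
  std::vector<int> reduced;

  std::thread reducer([&] {
    for (int id = queue.pop(); id != kEndOfIteration; id = queue.pop()) {
      reduced.push_back(id);  // real code would reduce and apply the update here
    }
  });

  // Backward walks the layers last to first, so gradients become ready in
  // reverse parameter order.
  for (int id = num_params - 1; id >= 0; --id) {
    queue.push(id);
  }
  queue.push(kEndOfIteration);
  reducer.join();
  return reduced;
}
```

Calling `run_iteration(3)` returns the ids in the order the reducer saw them. The point of the sketch is only that the reducer can start working before the backward pass has finished.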

The work done in each iteration can be simplified as:

P2PManager

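dl_bar is used by the data layer.

bar is used by P2PSync::soft_barrier() to avoid busy polling on the GPU.

rbar0 and rbar1 are used by P2PSync::reduce_barrier. There are two, seemingly because the Forward and Backward parameter types can differ: rbar_wait selects rbar0 when type_id is 0 and rbar1 otherwise.

The memory managed by the SharedScores struct can be shared between threads. In P2PManager::Run, every P2PSync is pointed at the same SharedScores instance. P2PSync::saveTestResults writes losses and scores into it, and P2PSync::aggregateTestResults reads them back.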

    class P2PManager {
     public:
      P2PManager(shared_ptr<Solver> root_solver, int nranks, const SolverParameter& param);

      void Run(const vector<int>& gpus);
      void EarlyCancel(P2PSync* killed);

      static void dl_bar_wait() {
        dl_bar->wait();
      }
      static void bar_wait() {
        bar->wait();
      }
      static void rbar_wait(int type_id) {
        if (type_id == 0) {
          rbar0->wait();
        } else {
          rbar1->wait();
        }
      }

     protected:
      const size_t nranks_;
      vector<unique_ptr<P2PSync>> syncs_;
      shared_ptr<SharedScores<float>> shared_;
      shared_ptr<Solver> root_solver_;
    #ifdef USE_NCCL
      ncclUniqueId nccl_id_;
    #endif
      static unique_ptr<boost::barrier> dl_bar;  // DataLayer sync helper
      static unique_ptr<boost::barrier> bar;
      static unique_ptr<boost::barrier> rbar0;
      static unique_ptr<boost::barrier> rbar1;
    };

P2PManager::P2PManager

root_solver_ points to the root solver.

The constructor mainly initializes the barriers, each sized for nranks_ threads.

    P2PManager::P2PManager(shared_ptr<Solver> root_solver,
        int nranks, const SolverParameter& solver_param) :
          nranks_(nranks),
          syncs_(nranks),
          root_solver_(root_solver) {
    #ifndef USE_NCCL
      LOG(FATAL) << "USE_NCCL must be specified for multi-GPU mode";
    #endif
      dl_bar.reset(new boost::barrier(nranks_));
      bar.reset(new boost::barrier(nranks_));
      rbar0.reset(new boost::barrier(nranks_));
      rbar1.reset(new boost::barrier(nranks_));
    }
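For readers unfamiliar with barriers, the following sketch shows what a barrier sized for nranks threads does: no thread gets past wait() until all of them have arrived, and the waiting threads sleep rather than spin. `SimpleBarrier` and `count_ranks_seeing_all` are hypothetical names written with the standard library only. This is not how boost::barrier is implemented, just a minimal equivalent for illustration.

```cpp
#include <cassert>
#include <condition_variable>
#include <cstddef>
#include <mutex>
#include <thread>
#include <vector>

// Minimal reusable barrier standing in for boost::barrier (hypothetical class).
class SimpleBarrier {
 public:
  explicit SimpleBarrier(std::size_t count)
      : threshold_(count), waiting_(0), generation_(0) {
    assert(count > 0);
  }

  void wait() {
    std::unique_lock<std::mutex> lock(mu_);
    const std::size_t gen = generation_;
    if (++waiting_ == threshold_) {
      // Last arrival: open the barrier and reset it for the next use.
      waiting_ = 0;
      ++generation_;
      cv_.notify_all();
    } else {
      // Sleep on the condition variable instead of busy polling.
      cv_.wait(lock, [&] { return gen != generation_; });
    }
  }

 private:
  std::mutex mu_;
  std::condition_variable cv_;
  const std::size_t threshold_;
  std::size_t waiting_;
  std::size_t generation_;
};

// Each of nranks threads marks phase 1 as done, waits at the barrier, then
// checks that every rank finished phase 1. Returns how many ranks saw that.
int count_ranks_seeing_all(std::size_t nranks) {
  SimpleBarrier bar(nranks);
  std::vector<int> phase1(nranks, 0);
  std::vector<int> saw_all(nranks, 0);
  std::vector<std::thread> threads;
  for (std::size_t r = 0; r < nranks; ++r) {
    threads.emplace_back([&, r] {
      phase1[r] = 1;
      bar.wait();  // nobody passes until all ranks have arrived
      int sum = 0;
      for (int v : phase1) sum += v;
      saw_all[r] = (sum == static_cast<int>(nranks)) ? 1 : 0;
    });
  }
  for (auto& t : threads) t.join();
  int total = 0;
  for (int v : saw_all) total += v;
  return total;
}
```

With four ranks, `count_ranks_seeing_all(4)` returns 4, because the barrier guarantees that every rank's phase 1 write is visible before any rank continues.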

P2PManager::Run

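Multi-GPU training requires NCCL. The code first checks that the number of ranks matches both the number of GPUs and Caffe::solver_count().

ncclGetUniqueId generates the Id that ncclCommInitRank will use.

ncclGetUniqueId should be called exactly once. The Id must then be distributed to every rank in the communicator before ncclCommInitRank is called.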

    #ifdef USE_NCCL
      CHECK_EQ(nranks_, gpus.size());
      CHECK_EQ(nranks_, Caffe::solver_count());
      NCCL_CHECK(ncclGetUniqueId(&nccl_id_));
    #else
      LOG(FATAL) << "Multi-GPU execution not available - rebuild with USE_NCCL";
    #endif  // USE_NCCL

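Next, the solver parameters are read. For each GPU, the device id in the parameters is changed and a P2PSync is constructed from them. The shared_ member of every P2PSync points to the shared memory created by P2PManager.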

    SolverParameter param = root_solver_->param();
    this->shared_ = make_shared<SharedScores<float>>(nranks_);
    for (int i = 0; i < gpus.size(); ++i) {
      param.set_device_id(gpus[i]);
      syncs_[i].reset(new P2PSync(this, root_solver_, i, gpus.size(), param));
    #ifdef USE_NCCL
      syncs_[i]->aux_ = &nccl_id_;
    #else
      LOG(FATAL) << "Multi-GPU execution not available - rebuild with USE_NCCL";
    #endif  // USE_NCCL
      syncs_[i]->shared_ = this->shared_;
    }

Each P2PSync then starts its own thread.

StartInternalThread triggers P2PSync::InternalThreadEntry.

    LOG(INFO) << "Starting Optimization";
    for (int i = 0; i < syncs_.size(); ++i) {
      syncs_[i]->StartInternalThread(true, static_cast<uint64_t>(param.random_seed()));
    }

Wait for all threads to finish.

    for (int i = 0; i < syncs_.size(); ++i) {
      syncs_[i]->WaitAll();
    }
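The overall shape of Run (create one Id, hand every worker a pointer to it, start all threads, then wait for all of them) can be sketched with plain std::thread. `FakeUniqueId`, `Worker`, and `run_workers` are hypothetical stand-ins, not NVCaffe or NCCL types. The sketch only shows that every worker observes the same Id because it is created once, before any thread starts.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <thread>
#include <vector>

// Hypothetical stand-in for ncclUniqueId.
struct FakeUniqueId {
  std::uint64_t value;
};

// Hypothetical stand-in for P2PSync: owns a thread and a pointer to the Id.
struct Worker {
  int rank = 0;
  const FakeUniqueId* aux = nullptr;  // set by the manager before start()
  std::uint64_t seen_id = 0;
  std::thread thread;

  void start() {
    thread = std::thread([this] { seen_id = aux->value; });
  }
  void wait() {
    if (thread.joinable()) thread.join();
  }
};

// Creates the Id once, distributes it, starts every worker, waits for all.
// Returns the Id value each worker observed.
std::vector<std::uint64_t> run_workers(int nranks, std::uint64_t id_value) {
  assert(nranks > 0);
  FakeUniqueId id{id_value};  // generated exactly once, by the manager
  std::vector<Worker> workers(static_cast<std::size_t>(nranks));
  for (int i = 0; i < nranks; ++i) {
    workers[i].rank = i;
    workers[i].aux = &id;  // distribute before any thread starts
  }
  for (auto& w : workers) w.start();
  for (auto& w : workers) w.wait();

  std::vector<std::uint64_t> seen;
  for (auto& w : workers) seen.push_back(w.seen_id);
  return seen;
}
```

For example, `run_workers(4, 1234)` returns four entries, all equal to 1234.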

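Finally, print the performance report: one line for the root solver, one per additional rank, and the overall throughput when more than one GPU is used.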

    std::ostringstream os;
    os.precision(4);
    float total_perf = this->root_solver_->perf_report(os, syncs_[0]->target_device_);
    LOG(INFO) << "Root " << os.str();
    for (int i = 1; i < syncs_.size(); ++i) {
      std::ostringstream os;
      os.precision(4);
      total_perf += syncs_[i]->solver_->perf_report(os, syncs_[i]->target_device_, 5 /* "Root " */);
      LOG(INFO) << os.str();
    }
    if (syncs_.size() > 1) {
      LOG(INFO) << "Overall multi-GPU performance: " << total_perf << " img/sec";
    }

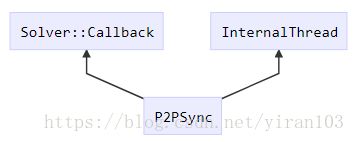

P2PSync

P2PSync implements synchronous data parallelism using map-reduce between local GPUs.

    // Synchronous data parallelism using map-reduce between local GPUs.
    class P2PSync : public Solver::Callback, public InternalThread {
      friend class P2PManager;

     public:
      P2PSync(P2PManager* mgr, shared_ptr<Solver> root_solver,
          int rank, int nranks, const SolverParameter& param);
      virtual ~P2PSync();

      // Divide the batch size by the number of solvers
      static unsigned int divide_batch_size(NetParameter* net);

      void allreduce(int type_id, int param_id) override;
      void allreduce_bucket(int type_id, size_t count, void* bucket, Type type) override;
      void soft_barrier() override;
      void reduce_barrier(int type_id) override;
      void saveTestResults(float loss, const vector<float>& scores) override;
      void aggregateTestResults(float* loss, vector<float>* scores) override;

      cudaStream_t comm_stream(int type_id) {
        return comm_stream_[type_id]->get();
      }

     protected:
      void on_start(const vector<shared_ptr<Blob>>& net) override;
    #ifdef USE_NCCL
      ncclComm_t nccl_comm_;
    #endif
      void InternalThreadEntry() override;

      P2PManager* mgr_;
      const int rank_;
      const size_t nranks_;
      const int initial_iter_;
      shared_ptr<Solver> solver_, root_solver_;
      SolverParameter solver_param_;
      shared_ptr<CudaStream> comm_stream_[2];
      // memory shared between threads
      shared_ptr<SharedScores<float>> shared_;
    };

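P2PManager and P2PSync each hold a pointer to the other, forming a bidirectional association: P2PManager owns the P2PSync objects through syncs_, and each P2PSync refers back through mgr_.

In addition, P2PSync holds two Solver pointers, solver_ and root_solver_.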

P2PSync::InternalThreadEntry()

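If rank_ is 0, the thread is marked as the root solver and reuses root_solver_. Otherwise a new solver is created from the parameters.

SolverRegistry::CreateSolver looks up the registered solver type through the Registry.

The root thread also adds itself to the solver's root_callbacks_ through root_add_callback.

Every thread, root or not, sets itself as its Solver's callback_ through set_callback.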

    if (rank_ == 0) {
      Caffe::set_root_solver(true);
      solver_ = root_solver_;
      solver_->root_add_callback(this);
    } else {
      Caffe::set_root_solver(false);
      solver_.reset(caffe::SolverRegistry::CreateSolver(solver_param_, root_solver_.get(), rank_));
    }
    solver_->set_callback(this);

Check the number of ranks and the current device.

    CHECK_EQ(nranks_, Caffe::solver_count());
    CHECK_EQ(target_device_, Caffe::current_device());

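soft_barrier acts as a CPU-side barrier so that threads do not have to poll the GPU repeatedly.

ncclCommInitRank creates a new communicator object for the current CUDA device and allows multi-process initialization. Because ncclCommInitRank implicitly synchronizes with the other ranks, it must either be called from different threads or processes, or be wrapped in ncclGroupStart and ncclGroupEnd. Here each P2PSync thread calls it once, with a soft_barrier before and after.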

    #ifdef USE_NCCL
      ncclUniqueId* nccl_id = reinterpret_cast<ncclUniqueId*>(this->aux_);
      soft_barrier();
      NCCL_CHECK(ncclCommInitRank(&nccl_comm_, nranks_, *nccl_id, rank_));
      soft_barrier();
    #endif

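Why are two streams needed? The code does not say, but they are accessed through comm_stream(type_id), which indexes comm_stream_ by type_id, so each of the two type ids gets its own stream.

CudaStream::create creates a CudaStream object. CudaStream is a shared CUDA stream wrapper that manages the stream's lifetime.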

    comm_stream_[0] = CudaStream::create(true);
    comm_stream_[1] = CudaStream::create(true);

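Next, check whether a seed is defined and, if so, reset the random state. The configured seed is offset per solver so that not every solver uses the same one. According to the code comment, giving every solver the same seed seems to cause some solver instability. The comment speaks of modulating by device ID, but the code actually adds rank_. If no seed is defined, a system-generated one is used.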

    LOG(INFO) << "[" << rank_ << " - " << target_device_ << "] P2pSync adding callback";
    // See if there is a defined seed and reset random state if so
    if (solver_->param().random_seed() >= 0) {
      // Fetch random seed and modulate by device ID to make sure
      // everyone doesn't have the same seed. We seem to have some
      // solver instability if we have everyone with the same seed
      Caffe::set_random_seed(solver_->param().random_seed() + static_cast<uint64_t>(rank_));
    } else {
      // Or system generated one
      Caffe::set_random_seed(Caffe::SEED_NOT_SET);
    }
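The seed rule is small enough to restate as a pure function. In this sketch `seed_for_rank` and `kSeedNotSet` are hypothetical names. The sentinel value chosen here is an assumption and is not necessarily the value of Caffe::SEED_NOT_SET.

```cpp
#include <cassert>
#include <cstdint>

// Sentinel meaning "let the system pick a seed". The value is an assumption
// of this sketch, not necessarily what Caffe::SEED_NOT_SET is.
constexpr std::uint64_t kSeedNotSet = static_cast<std::uint64_t>(-1);

// Returns the seed a given rank would use: the configured seed plus the rank
// when a seed is configured (non-negative), otherwise the sentinel.
std::uint64_t seed_for_rank(std::int64_t configured_seed, int rank) {
  assert(rank >= 0);
  if (configured_seed >= 0) {
    return static_cast<std::uint64_t>(configured_seed) +
           static_cast<std::uint64_t>(rank);
  }
  return kSeedNotSet;
}
```

With a configured seed of 42, ranks 0 through 3 get 42, 43, 44, and 45, so runs stay reproducible while each solver still draws a different random sequence.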

Finally, run Solver::Solve. If it returns true, the manager is notified through EarlyCancel.

    if (solver_->Solve()) {
      mgr_->EarlyCancel(this);
    }

P2PSync::on_start

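P2PSync::on_start broadcasts the network parameters before training starts.

ncclCommCount returns the number of ranks in the communicator, which is checked against nranks_.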

    #ifdef USE_NCCL
      int count = 0;
      NCCL_CHECK(ncclCommCount(nccl_comm_, &count));
      CHECK_EQ(count, nranks_);

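The loop then broadcasts every parameter blob in net, one at a time, from rank 0 to all other ranks. Each broadcast is wrapped in a pair of reduce_barrier calls and followed by a stream synchronization.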

      for (int i = 0; i < net.size(); ++i) {
        Blob* param = net[i].get();
        const Type param_type = param->data_type();
        const int type_id = solver_->net()->learnable_types()[0] == param_type ? 0 : 1;
        reduce_barrier(type_id);
        NCCL_CHECK(ncclBcast(param->current_mutable_data_memory(true),
            even(param->count()),
            nccl::nccl_type(param_type),
            0,
            nccl_comm_,
            comm_stream(type_id)));
        CUDA_CHECK(cudaStreamSynchronize(comm_stream(type_id)));
        reduce_barrier(type_id);
      }
    #endif  // USE_NCCL
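The effect of the broadcast can be illustrated on host memory without NCCL. In the sketch below, `replicas[r]` stands for rank r's copy of one parameter blob, and `broadcast_from_root` is a hypothetical helper. It models only the end result (every rank holds the root's values), not the streams, the barriers, or the even() padding.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// replicas[r] is rank r's copy of one parameter blob (host-side model only).
// After the call, every rank holds the root's values.
void broadcast_from_root(std::vector<std::vector<float>>& replicas,
                         std::size_t root) {
  assert(root < replicas.size());
  const std::vector<float> src = replicas[root];  // copy of the root's data
  for (std::size_t r = 0; r < replicas.size(); ++r) {
    if (r != root) {
      replicas[r] = src;
    }
  }
}
```

Starting from `{{1, 2}, {0, 0}, {9, 9}}` with root 0, all three replicas end up as `{1, 2}`. This is why the solvers on the other ranks begin training from the same weights as the root solver.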

P2PSync::allreduce

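P2PSync::allreduce calls ncclAllReduce to sum the gradients (diff) of the learnable_params entry selected by param_id across all ranks.

Afterwards, Solver::ApplyUpdate is run for each Net to update the network parameters.

cudaStreamSynchronize blocks until the work queued on the stream has finished.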

    void P2PSync::allreduce(int type_id, int param_id) {
    #ifdef USE_NCCL
      const shared_ptr<Blob>& param = solver_->net()->learnable_params()[param_id];
      NCCL_CHECK(ncclAllReduce(param->current_diff_memory(true),
          param->current_mutable_diff_memory(true),
          even(param->count()),
          nccl::nccl_type(param->diff_type()),
          ncclSum,
          nccl_comm_,
          comm_stream(type_id)));
      CUDA_CHECK(cudaStreamSynchronize(comm_stream(type_id)));
    #endif  // USE_NCCL
    }
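What an all-reduce with a sum operator produces can be shown on host vectors. `allreduce_sum` is a hypothetical helper that models only the result: `grads[r]` is rank r's gradient for one parameter, and afterwards every rank holds the element-wise sum. How NCCL actually moves the data between GPUs is not modeled.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// grads[r] is rank r's gradient for one parameter (host-side model only).
// After the call, every rank holds the element-wise sum over all ranks.
void allreduce_sum(std::vector<std::vector<float>>& grads) {
  assert(!grads.empty());
  const std::size_t n = grads[0].size();
  std::vector<float> total(n, 0.f);
  for (const auto& g : grads) {
    assert(g.size() == n);  // all ranks must contribute the same count
    for (std::size_t i = 0; i < n; ++i) {
      total[i] += g[i];
    }
  }
  for (auto& g : grads) {
    g = total;
  }
}
```

With two ranks holding `{1, 2}` and `{3, 4}`, both end up with `{4, 6}`. Because the operation is a sum rather than a mean, any scaling by the number of ranks has to be handled separately.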

P2PSync::allreduce_bucket

    void P2PSync::allreduce_bucket(int type_id, size_t count, void* bucket, Type type) {
    #ifdef USE_NCCL
      CHECK(bucket);
      NCCL_CHECK(ncclAllReduce(bucket,
          bucket,
          count,
          nccl::nccl_type(type),
          ncclSum,
          nccl_comm_,
          comm_stream(type_id)));
      CUDA_CHECK(cudaStreamSynchronize(comm_stream(type_id)));
    #endif  // USE_NCCL
    }

P2PSync::aggregateTestResults

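During multi-GPU testing, the master thread collects the aggregate of all results for output. Thanks to the SharedScores struct, the master thread can read the results written by the other threads.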

    // master thread gets aggregate of results for output
    void P2PSync::aggregateTestResults(float* loss, vector<float>* scores) {
      // only run on master thread
      if (this->rank_ == 0) {
        // initialize results
        *loss = 0.F;
        for (size_t i = 0; i < scores->size(); ++i) {
          (*scores)[i] = 0.F;
        }
        // all test threads
        for (size_t i = 0; i < nranks_; ++i) {
          // read rank i's slot, so that every rank contributes to the totals
          vector<float>& shared_scr = shared_->rank_scores(i);
          *loss += shared_scr[0];
          // all scores within each test thread
          for (size_t j = 0; j < scores->size(); ++j) {
            (*scores)[j] += shared_scr[j+1];
          }
        }
      }
    }

P2PSync::saveTestResults

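During multi-GPU testing, P2PSync::saveTestResults records this rank's loss and scores: the loss goes into slot 0 of the rank's shared vector and the scores into the slots after it.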

    void P2PSync::saveTestResults(float loss, const vector<float>& scores) {
      vector<float>& shared_scr = shared_->rank_scores(this->rank_);
      CHECK_GE(shared_scr.size(), scores.size() + 1);
      shared_scr[0] = loss;
      for (size_t i = 0; i < scores.size(); ++i) {
        shared_scr[i+1] = scores[i];
      }
    }
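The save and aggregate steps fit together as in the following self-contained sketch. `ScoreBoard`, `save`, and `aggregate` are hypothetical simplifications. The real SharedScores layout is assumed here to be one float vector per rank, with the loss in slot 0, based on how the two functions above index it.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical simplified SharedScores: one vector per rank, where slot 0
// holds the loss and slots 1..n hold the scores.
class ScoreBoard {
 public:
  ScoreBoard(std::size_t nranks, std::size_t max_scores)
      : memory_(nranks, std::vector<float>(max_scores + 1, 0.f)) {}

  std::vector<float>& rank_scores(std::size_t rank) {
    assert(rank < memory_.size());
    return memory_[rank];
  }
  std::size_t nranks() const { return memory_.size(); }

 private:
  std::vector<std::vector<float>> memory_;
};

// Each rank writes its own loss and scores into its own slot.
void save(ScoreBoard& board, std::size_t rank, float loss,
          const std::vector<float>& scores) {
  std::vector<float>& slot = board.rank_scores(rank);
  assert(slot.size() >= scores.size() + 1);
  slot[0] = loss;
  for (std::size_t i = 0; i < scores.size(); ++i) {
    slot[i + 1] = scores[i];
  }
}

// The master sums the loss and scores over every rank's slot.
// Returns the summed loss and overwrites *scores with the summed scores.
float aggregate(ScoreBoard& board, std::vector<float>* scores) {
  float loss = 0.f;
  for (float& s : *scores) s = 0.f;
  for (std::size_t r = 0; r < board.nranks(); ++r) {
    const std::vector<float>& slot = board.rank_scores(r);
    loss += slot[0];
    for (std::size_t j = 0; j < scores->size(); ++j) {
      (*scores)[j] += slot[j + 1];
    }
  }
  return loss;
}
```

If rank 0 saves a loss of 1.0 with score 0.5 and rank 1 saves a loss of 3.0 with score 0.25, `aggregate` returns 4.0 and leaves 0.75 in the score vector. The sketch ignores synchronization: in a threaded setting, all ranks must have finished saving before the master aggregates.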

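References:

- NVCaffe 0.16.2 多 GPU 训练过程代码分析 (code analysis of the NVCaffe 0.16.2 multi-GPU training process)

- (Caffe,LeNet)权值更新(七) ((Caffe, LeNet) weight update, part 7)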