Deploying YOLOv8 with C++

Note: use OpenCV 4.8.

Manually installing a specific OpenCV version

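- Download the source code for the required version from the official OpenCV website and extract it locally.

- In the extracted source directory, build and install:

mkdir build
cd build
cmake ..
make -j8
sudo make install

- Create a .conf file (any name) under /etc/ld.so.conf.d/ containing the path to the installed libraries, usually /usr/local/lib, then run sudo ldconfig. Otherwise the program fails at run time with: error while loading shared libraries: libopencv_core.so.4.8: cannot open shared object file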

Exporting the ONNX model

from ultralytics import YOLO

# Load a model
model = YOLO('yolov8n.pt')  # load an official model

# Export the model; imgsz is given as [height, width]
model.export(format='onnx', imgsz=[480, 640], opset=12)

Save the script above as export.py and run python export.py.

C++ inference code

main.cpp

#include

inference.h

#ifndef INFERENCE_H
#define INFERENCE_H

// Cpp native
#include

inference.cpp

#include "inference.h"

Inference::Inference(const std::string &onnxModelPath, const cv::Size &modelInputShape, const std::string &classesTxtFile, const bool &runWithCuda)
{
    modelPath = onnxModelPath;
    modelShape = modelInputShape;
    classesPath = classesTxtFile;
    cudaEnabled = runWithCuda;

    loadOnnxNetwork();
    // loadClassesFromFile(); The classes are hard-coded for this example
}

std::vector<Detection> Inference::runInference(const cv::Mat &input)
{
    cv::Mat modelInput = input;
    if (letterBoxForSquare && modelShape.width == modelShape.height)
        modelInput = formatToSquare(modelInput);

    cv::Mat blob;
    cv::dnn::blobFromImage(modelInput, blob, 1.0/255.0, modelShape, cv::Scalar(), true, false);
    net.setInput(blob);

    std::vector<cv::Mat> outputs;
    net.forward(outputs, net.getUnconnectedOutLayersNames());

    int rows = outputs[0].size[1];
    int dimensions = outputs[0].size[2];

    bool yolov8 = false;
    // yolov5 has an output of shape (batchSize, 25200, 85) (Num classes + box[x,y,w,h] + confidence[c])
    // yolov8 has an output of shape (batchSize, 84, 8400) (Num classes + box[x,y,w,h])
    if (dimensions > rows) // Check if the shape[2] is more than shape[1] (yolov8)
    {
        yolov8 = true;
        rows = outputs[0].size[2];
        dimensions = outputs[0].size[1];

        // Turn (dimensions, rows) into (rows, dimensions): one candidate per row
        outputs[0] = outputs[0].reshape(1, dimensions);
        cv::transpose(outputs[0], outputs[0]);
    }
    float *data = (float *)outputs[0].data;

    // Cast to float first: integer division would truncate the scale factors
    float x_factor = static_cast<float>(modelInput.cols) / modelShape.width;
    float y_factor = static_cast<float>(modelInput.rows) / modelShape.height;

    std::vector<int> class_ids;
    std::vector<float> confidences;
    std::vector<cv::Rect> boxes;

    std::cout << yolov8 << std::endl; // debug: prints 1 if the YOLOv8 layout was detected

    for (int i = 0; i < rows; ++i)
    {
        if (yolov8)
        {
            // Row layout: [x, y, w, h, class scores...]
            float *classes_scores = data+4;

            cv::Mat scores(1, classes.size(), CV_32FC1, classes_scores);
            cv::Point class_id;
            double maxClassScore;

            minMaxLoc(scores, 0, &maxClassScore, 0, &class_id);

            if (maxClassScore > modelScoreThreshold)
            {
                confidences.push_back(maxClassScore);
                class_ids.push_back(class_id.x);

                float x = data[0];
                float y = data[1];
                float w = data[2];
                float h = data[3];

                // Convert center-format box to top-left format in source-image pixels
                int left = int((x - 0.5 * w) * x_factor);
                int top = int((y - 0.5 * h) * y_factor);

                int width = int(w * x_factor);
                int height = int(h * y_factor);

                boxes.push_back(cv::Rect(left, top, width, height));
            }
        }
        else // yolov5
        {
            // Row layout: [x, y, w, h, confidence, class scores...]
            float confidence = data[4];

            if (confidence >= modelConfidenceThreshold)
            {
                float *classes_scores = data+5;

                cv::Mat scores(1, classes.size(), CV_32FC1, classes_scores);
                cv::Point class_id;
                double max_class_score;

                minMaxLoc(scores, 0, &max_class_score, 0, &class_id);

                if (max_class_score > modelScoreThreshold)
                {
                    confidences.push_back(confidence);
                    class_ids.push_back(class_id.x);

                    float x = data[0];
                    float y = data[1];
                    float w = data[2];
                    float h = data[3];

                    int left = int((x - 0.5 * w) * x_factor);
                    int top = int((y - 0.5 * h) * y_factor);

                    int width = int(w * x_factor);
                    int height = int(h * y_factor);

                    boxes.push_back(cv::Rect(left, top, width, height));
                }
            }
        }

        data += dimensions;
    }

    std::vector<int> nms_result;
    cv::dnn::NMSBoxes(boxes, confidences, modelScoreThreshold, modelNMSThreshold, nms_result);

    // One generator shared by all detections, used to pick a random display color
    std::random_device rd;
    std::mt19937 gen(rd());
    std::uniform_int_distribution<int> dis(100, 255);

    std::vector<Detection> detections{};
    for (unsigned long i = 0; i < nms_result.size(); ++i)
    {
        int idx = nms_result[i];

        Detection result;
        result.class_id = class_ids[idx];
        result.confidence = confidences[idx];

        result.color = cv::Scalar(dis(gen),
                                  dis(gen),
                                  dis(gen));

        result.className = classes[result.class_id];
        result.box = boxes[idx];

        detections.push_back(result);
    }

    return detections;
}
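To make the YOLOv8 branch of runInference easier to follow, here is a minimal OpenCV-free sketch of the same steps on a plain float buffer: transposing a (4 + numClasses, numAnchors) output so that each candidate occupies one row, picking the best class score, and converting the center-format box to a top-left rectangle. The names Box, Decoded, transposeOutput and decodeRow are hypothetical and exist only for this illustration; they are not part of the original code.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for cv::Rect so the sketch needs no OpenCV.
struct Box { int left, top, width, height; };

// Hypothetical result type: best class, its score, and the scaled box.
struct Decoded { int classId; float score; Box box; };

// Transpose a row-major (dims x anchors) buffer into (anchors x dims),
// mirroring the reshape + cv::transpose step used for the YOLOv8 layout.
std::vector<float> transposeOutput(const std::vector<float>& src,
                                   std::size_t dims, std::size_t anchors) {
    std::vector<float> dst(src.size());
    for (std::size_t d = 0; d < dims; ++d)
        for (std::size_t a = 0; a < anchors; ++a)
            dst[a * dims + d] = src[d * anchors + a];
    return dst;
}

// Decode one row laid out as [cx, cy, w, h, score_0, ..., score_{n-1}].
// xFactor / yFactor map model-input coordinates to source-image pixels.
Decoded decodeRow(const float* row, std::size_t numClasses,
                  float xFactor, float yFactor) {
    const float* scores = row + 4;
    const float* best = std::max_element(scores, scores + numClasses);
    float cx = row[0], cy = row[1], w = row[2], h = row[3];
    Box box{static_cast<int>((cx - 0.5f * w) * xFactor),
            static_cast<int>((cy - 0.5f * h) * yFactor),
            static_cast<int>(w * xFactor),
            static_cast<int>(h * yFactor)};
    return {static_cast<int>(best - scores), *best, box};
}
```

In this sketch, decodeRow would be called once per row of the transposed buffer, advancing the pointer by dims each time, which corresponds to the data += dimensions step in the loop above. Score thresholding and non-maximum suppression are left out.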

void Inference::loadClassesFromFile()
{
    std::ifstream inputFile(classesPath);
    if (inputFile.is_open())
    {
        std::string classLine;
        while (std::getline(inputFile, classLine))
            classes.push_back(classLine);
        inputFile.close();
    }
}
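The loader above expects a plain-text file with one class name per line, in class-id order. As a rough illustration, the sketch below reads the same format from any std::istream, so it can be tried without a file on disk. The function name readClassNames is hypothetical, and the handling of carriage returns and blank lines is an assumption added for this sketch; the original loader does neither.

```cpp
#include <istream>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical helper: read one class name per line; the position in the
// returned vector is the class id.
// Assumption (not in the original loader): a trailing '\r' from Windows
// line endings is stripped and empty lines are skipped.
std::vector<std::string> readClassNames(std::istream& in) {
    std::vector<std::string> names;
    std::string line;
    while (std::getline(in, line)) {
        if (!line.empty() && line.back() == '\r')
            line.pop_back();
        if (!line.empty())
            names.push_back(line);
    }
    return names;
}
```

For example, passing a std::istringstream holding "person\nbicycle\ncar\n" gives a vector of three names, with "person" at index 0.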

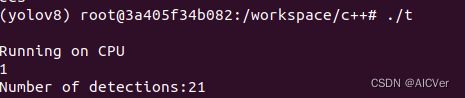

void Inference::loadOnnxNetwork()
{
    net = cv::dnn::readNetFromONNX(modelPath);
    if (cudaEnabled)
    {
        std::cout << "\nRunning on CUDA" << std::endl;
        net.setPreferableBackend(cv::dnn::DNN_BACKEND_CUDA);
        net.setPreferableTarget(cv::dnn::DNN_TARGET_CUDA);
    }
    else
    {
        std::cout << "\nRunning on CPU" << std::endl;
        net.setPreferableBackend(cv::dnn::DNN_BACKEND_OPENCV);
        net.setPreferableTarget(cv::dnn::DNN_TARGET_CPU);
    }
}

cv::Mat Inference::formatToSquare(const cv::Mat &source)
{
    int col = source.cols;
    int row = source.rows;
    int _max = MAX(col, row);
    // Black square canvas; the source is copied into its top-left corner
    cv::Mat result = cv::Mat::zeros(_max, _max, CV_8UC3);
    source.copyTo(result(cv::Rect(0, 0, col, row)));
    return result;
}
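Because formatToSquare pads only on the right and bottom, the image origin does not move, so boxes can be mapped back to source pixels with a scale factor alone and no offset. The sketch below works through that arithmetic without OpenCV. Size2i, Scale, squareSide and scaleFactors are hypothetical names used only here. The factors are computed in floating point, since integer division would truncate them.

```cpp
#include <algorithm>

// Hypothetical helper types; the original code uses cv::Size and cv::Mat.
struct Size2i { int width, height; };
struct Scale { float x, y; };

// Side length of the square canvas produced by padding right and bottom.
int squareSide(Size2i source) {
    return std::max(source.width, source.height);
}

// Factors that map model-input coordinates back to pixels of the image
// that was fed to the network (the padded square, or the raw image).
// The cast matters: int / int would truncate 1000 / 640 to 1.
Scale scaleFactors(Size2i inputToNet, Size2i modelShape) {
    return {static_cast<float>(inputToNet.width) / modelShape.width,
            static_cast<float>(inputToNet.height) / modelShape.height};
}
```

For a 1280x720 source and a 640x640 model, the padded canvas is 1280x1280 and both factors are 2.0, so a box at x = 100 in model coordinates lands at x = 200 in the source image.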

Compile and run the code:

g++ -g inference.cpp -o t main.cpp -I/usr/local/include/opencv4 -L/usr/local/lib -lopencv_core -lopencv_highgui -lopencv_imgproc -lopencv_dnn -lopencv_imgcodecs

This produces the executable t, which can be run as ./t.