Learning the Kernel, Part 8: How the Linux Kernel Implements smp_processor_id

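smp_processor_id is used all over the kernel. It returns the logical CPU on which the calling code is currently running.

This article looks at how that interface is implemented.

When I first came across this interface, I assumed it read a dedicated register inside the CPU or used a special instruction. To check, I skimmed the code and found that on ARM32 the value comes from the cpu field of the current thread's thread_info structure: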

./include/linux/smp.h:

# define smp_processor_id() raw_smp_processor_id()

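//smp_processor_id is an alias for raw_smp_processor_id (in this kernel, when CONFIG_DEBUG_PREEMPT is not enabled)

//raw_smp_processor_id, in turn, is implemented as follows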

./arch/arm/include/asm/smp.h:

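#define raw_smp_processor_id() (current_thread_info()->cpu)

This result puzzled me: how can a field in a structure tell the code which logical CPU it is running on? The answer lies in when that field is assigned, and tracking down the assignment turned out to be a hard journey.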
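To make the macro less mysterious, here is a minimal user-space sketch of the lookup. It assumes, as on ARM32 kernels of this generation as far as I know, that thread_info sits at the base of a THREAD_SIZE-aligned kernel stack and that current_thread_info() is obtained by masking the stack pointer. The 8 KiB stack size and the helpers alloc_stack, thread_info_from_sp and cpu_from_sp are illustrative names, not kernel APIs.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

#define THREAD_SIZE 8192u   /* assumption: 8 KiB kernel stacks */

/* Toy stand-in for the kernel's struct thread_info; only the field we need. */
struct thread_info {
    unsigned int cpu;
};

/* Allocate a THREAD_SIZE-aligned "kernel stack" with thread_info at its base. */
static struct thread_info *alloc_stack(unsigned int cpu)
{
    struct thread_info *ti = aligned_alloc(THREAD_SIZE, THREAD_SIZE);
    if (ti)
        ti->cpu = cpu;
    return ti;
}

/* Mask any address inside the stack down to the stack base. */
static struct thread_info *thread_info_from_sp(uintptr_t sp)
{
    /* The masking trick only works for power-of-two stack sizes. */
    assert((THREAD_SIZE & (THREAD_SIZE - 1)) == 0);
    return (struct thread_info *)(sp & ~((uintptr_t)THREAD_SIZE - 1));
}

/* Toy equivalent of current_thread_info()->cpu for a given stack pointer. */
static unsigned int cpu_from_sp(uintptr_t sp)
{
    return thread_info_from_sp(sp)->cpu;
}
```

Any stack pointer value inside the same stack resolves to the same thread_info, so the lookup costs a mask and a load. The open question is only who wrote the cpu field, which is what the rest of the article follows.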

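To trace that assignment, we first need to be clear about the execution contexts a CPU can be in.

On any CPU core, the code currently executing may belong to:

1 User code, belonging to some user process or thread; which of the two does not matter here.

2 A transition from user space into the kernel, such as a system call.

3 Kernel code, running either on behalf of a user process or in a standalone kernel thread.

4 An interrupt, taken from either kernel mode or user mode.

5 An exception, raised in either kernel mode or user mode.

6 Signal handling. When returning from the kernel to user space with signals pending, the kernel has the user code run the registered signal handler before it resumes.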

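As you can see, in every case the executing code has an owner, that is, a context: a user thread, a kernel thread, or an interrupt or exception that interrupted one of them. It always comes from somewhere. This is the key piece of information.

If a core knows which core it is when it starts running, then, beginning with its initial idle task, it can pass that number along.

First, the idle task's number is set explicitly by hand. This is the origin of everything.

After that, starting from idle, suppose the number is passed along at every switch into any of the six scenarios above, much as something is handed down from generation to generation in the real world.

Then, whenever code needs to know which CPU it is running on, it only has to find the context it is in and read that context's CPU number to determine which core it is on.

The whole flow is: the code calls the interface to get the CPU number -> the interface looks up the number of the current context -> the current context got its number from whoever switched to it -> that switcher got it from an earlier switcher -> the very first idle task was set by hand.

As long as every link in this chain is correct, the lookup result is correct.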
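The chain can be illustrated with a small toy model. This is only a sketch of the idea, not how the scheduler is written: in the real kernel the field is, as far as I understand, stamped when the scheduler assigns a task to a CPU's run queue rather than literally at switch time. The names struct task, current_on, install_idle, switch_to and toy_smp_processor_id are all made up for the illustration.

```c
#include <assert.h>
#include <stddef.h>

#define NR_CPUS 2

struct task {
    const char *name;
    int cpu;                /* plays the role of thread_info->cpu */
};

/* What each simulated core is running right now. */
static struct task *current_on[NR_CPUS];

/* The origin: a core's idle task is stamped with its number by hand. */
static void install_idle(struct task *idle, int cpu)
{
    idle->cpu = cpu;
    current_on[cpu] = idle;
}

/* A switch on core `cpu`: the outgoing context hands its number on. */
static void switch_to(int cpu, struct task *next)
{
    struct task *prev = current_on[cpu];

    assert(prev != NULL && prev->cpu == cpu);
    next->cpu = prev->cpu;
    current_on[cpu] = next;
}

/* The lookup as seen by code executing on hardware core `hw_cpu`. */
static int toy_smp_processor_id(int hw_cpu)
{
    return current_on[hw_cpu]->cpu;
}
```

However many switches happen on a core, the value read back is the one planted in that core's idle task, so the whole scheme is only as correct as that first assignment.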

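So the question becomes: where does the number originate? In other words, where is the number first written into the stack of the very first kernel-mode idle thread?

First, the core that starts at power-on is usually core 0. This is fixed by the CPU design, so essentially all kernel initialization is done by core 0.

Once core 0 has finished the necessary initialization, the other cores are woken up, start taking on tasks, and share the system load.

So before the other cores start, the core running the code is number 0, which is easy to establish. But once the other cores are running, how does each one know its number?

Let's first look at the kernel boot log: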

Booting Linux on physical CPU 0x0

Initializing cgroup subsys cpu

Linux version 3.18.20 (root@ubuntu) (gcc version 4.9.4 20150629 (prerelease) (Hisilicon_v600_20180525) ) #19 SMP Wed May 29 19:23:24 PDT 2019

CPU: ARMv7 Processor [410fc075] revision 5 (ARMv7), cr=10c5387d

CPU: PIPT / VIPT nonaliasing data cache, VIPT aliasing instruction cache

Machine model: Hisilicon HI3536C DEMO Board

Memory policy: Data cache writealloc

On node 0 totalpages: 65536

free_area_init_node: node 0, pgdat c074ee00, node_mem_map cfdf7000

Normal zone: 512 pages used for memmap

Normal zone: 0 pages reserved

Normal zone: 65536 pages, LIFO batch:15

PERCPU: Embedded 9 pages/cpu @cfddc000 s6144 r8192 d22528 u36864

pcpu-alloc: s6144 r8192 d22528 u36864 alloc=9*4096

pcpu-alloc: [0] 0 [0] 1

Built 1 zonelists in Zone order, mobility grouping on. Total pages: 65024

Kernel command line: mem=256M console=ttyAMA0,115200 root=/dev/mtdblock2 rootfstype=jffs2 rw mtdparts=hi_sfc:1M(boot),4M(kernel),27M(rootfs)

PID hash table entries: 1024 (order: 0, 4096 bytes)

Dentry cache hash table entries: 32768 (order: 5, 131072 bytes)

Inode-cache hash table entries: 16384 (order: 4, 65536 bytes)

Memory: 251976K/262144K available (5483K kernel code, 230K rwdata, 1496K rodata, 240K init, 297K bss, 10168K reserved, 0K highmem)

Virtual kernel memory layout:

vector : 0xffff0000 - 0xffff1000 ( 4 kB)

fixmap : 0xffc00000 - 0xffe00000 (2048 kB)

vmalloc : 0xd0800000 - 0xff000000 ( 744 MB)

lowmem : 0xc0000000 - 0xd0000000 ( 256 MB)

pkmap : 0xbfe00000 - 0xc0000000 ( 2 MB)

modules : 0xbf000000 - 0xbfe00000 ( 14 MB)

.text : 0xc0008000 - 0xc06d9070 (6981 kB)

.init : 0xc06da000 - 0xc0716000 ( 240 kB)

.data : 0xc0716000 - 0xc074fb40 ( 231 kB)

.bss : 0xc074fb40 - 0xc079a1fc ( 298 kB)

SLUB: HWalign=64, Order=0-3, MinObjects=0, CPUs=2, Nodes=1

Hierarchical RCU implementation.

RCU restricting CPUs from NR_CPUS=4 to nr_cpu_ids=2.

RCU: Adjusting geometry for rcu_fanout_leaf=16, nr_cpu_ids=2

NR_IRQS:16 nr_irqs:16 16

sched_clock: 32 bits at 3000kHz, resolution 333ns, wraps every 1431655765682ns

Console: colour dummy device 80x30

Calibrating delay loop... 2580.48 BogoMIPS (lpj=1290240)

pid_max: default: 32768 minimum: 301

Mount-cache hash table entries: 1024 (order: 0, 4096 bytes)

Mountpoint-cache hash table entries: 1024 (order: 0, 4096 bytes)

CPU: Testing write buffer coherency: ok

CPU0: thread -1, cpu 0, socket 0, mpidr 80000000

Setting up static identity map for 0x8053c180 - 0x8053c1d8

CPU1: Booted secondary processor

CPU1: thread -1, cpu 1, socket 0, mpidr 80000001

Brought up 2 CPUs

SMP: Total of 2 processors activated (5173.24 BogoMIPS).

CPU: All CPU(s) started in SVC mode.

devtmpfs: initialized

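VFP support v0.3: implementor 41 architecture 2 part 30 variant 7 rev 5

As the log shows, the other SMP cores are brought up before the drivers are initialized, after core 0 has completed the necessary boot-time initialization.

In smp_init the CPU numbers are generated by the code itself (it iterates over them with for_each_present_cpu); code that runs after this point obtains the number through smp_processor_id.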

/* Called by boot processor to activate the rest. */

void __init smp_init(void)

{

unsigned int cpu;

idle_threads_init();

/* FIXME: This should be done in userspace --RR */

for_each_present_cpu(cpu) {

if (num_online_cpus() >= setup_max_cpus)

break;

if (!cpu_online(cpu))

cpu_up(cpu);

}

/* Any cleanup work */

smp_announce();

smp_cpus_done(setup_max_cpus);

}

At this point the number can already be obtained:

/**

* idle_threads_init - Initialize idle threads for all cpus

*/

void __init idle_threads_init(void)

{

unsigned int cpu, boot_cpu;

boot_cpu = smp_processor_id();

for_each_possible_cpu(cpu) {

if (cpu != boot_cpu)

idle_init(cpu);

}

}

But this runs on the boot CPU, so the value is 0. For the other CPUs we need to look further, at idle_init:

/**

* idle_init - Initialize the idle thread for a cpu

* @cpu: The cpu for which the idle thread should be initialized

*

* Creates the thread if it does not exist.

*/

static inline void idle_init(unsigned int cpu)

{

struct task_struct *tsk = per_cpu(idle_threads, cpu);

if (!tsk) {

tsk = fork_idle(cpu);

if (IS_ERR(tsk))

pr_err("SMP: fork_idle() failed for CPU %u\n", cpu);

else

per_cpu(idle_threads, cpu) = tsk;

}

}

struct task_struct *fork_idle(int cpu)

{

struct task_struct *task;

task = copy_process(CLONE_VM, 0, 0, NULL, &init_struct_pid, 0);

if (!IS_ERR(task)) {

init_idle_pids(task->pids);

init_idle(task, cpu);

}

return task;

}

/**

* init_idle - set up an idle thread for a given CPU

* @idle: task in question

* @cpu: cpu the idle task belongs to

*

* NOTE: this function does not set the idle thread's NEED_RESCHED

* flag, to make booting more robust.

*/

void init_idle(struct task_struct *idle, int cpu)

{

struct rq *rq = cpu_rq(cpu);

unsigned long flags;

raw_spin_lock_irqsave(&rq->lock, flags);

__sched_fork(0, idle);

idle->state = TASK_RUNNING;

idle->se.exec_start = sched_clock();

do_set_cpus_allowed(idle, cpumask_of(cpu));

/*

* We're having a chicken and egg problem, even though we are

* holding rq->lock, the cpu isn't yet set to this cpu so the

* lockdep check in task_group() will fail.

*

* Similar case to sched_fork(). / Alternatively we could

* use task_rq_lock() here and obtain the other rq->lock.

*

* Silence PROVE_RCU

*/

rcu_read_lock();

__set_task_cpu(idle, cpu);

rcu_read_unlock();

rq->curr = rq->idle = idle;

idle->on_rq = TASK_ON_RQ_QUEUED;

#if defined(CONFIG_SMP)

idle->on_cpu = 1;

#endif

raw_spin_unlock_irqrestore(&rq->lock, flags);

/* Set the preempt count _outside_ the spinlocks! */

init_idle_preempt_count(idle, cpu);

/*

* The idle tasks have their own, simple scheduling class:

*/

idle->sched_class = &idle_sched_class;

ftrace_graph_init_idle_task(idle, cpu);

vtime_init_idle(idle, cpu);

#if defined(CONFIG_SMP)

sprintf(idle->comm, "%s/%d", INIT_TASK_COMM, cpu);

#endif

}

static inline void __set_task_cpu(struct task_struct *p, unsigned int cpu)

{

set_task_rq(p, cpu);

#ifdef CONFIG_SMP

/*

* After ->cpu is set up to a new value, task_rq_lock(p, ...) can be

* successfuly executed on another CPU. We must ensure that updates of

* per-task data have been completed by this moment.

*/

smp_wmb();

task_thread_info(p)->cpu = cpu;

p->wake_cpu = cpu;

#endif

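}

Note the line near the end: task_thread_info(p)->cpu = cpu;

Every function in this chain is passed the cpu argument. In other words, for each of the other logical CPUs in the SMP system, an idle task is created and its CPU field is set to the number passed in. Note that the other CPUs are not running yet: these numbers are preset, so the idle task stamped with 1 must later be executed by the core whose number is 1. How is that guaranteed?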
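Stripped of locking and scheduler details, the presetting step boils down to the following sketch. It is a simplified model rather than the kernel code: struct toy_task, toy_fork_idle and toy_idle_threads_init are hypothetical stand-ins for task_struct, fork_idle and idle_threads_init, and the per-CPU idle_threads variable is modelled as a plain array.

```c
#include <assert.h>
#include <stdlib.h>

#define NR_CPUS 4

/* Toy task: only the field that ends up in thread_info->cpu. */
struct toy_task {
    int cpu;
};

/* Stand-in for the per-CPU idle_threads variable. */
static struct toy_task *idle_threads[NR_CPUS];

/* Create an idle task and stamp it with the CPU it is meant for. */
static struct toy_task *toy_fork_idle(int cpu)
{
    struct toy_task *t = malloc(sizeof(*t));

    if (t)
        t->cpu = cpu;   /* corresponds to task_thread_info(p)->cpu = cpu */
    return t;
}

/* Run on the boot CPU: prepare an idle task for every other CPU. */
static void toy_idle_threads_init(int boot_cpu)
{
    assert(boot_cpu >= 0 && boot_cpu < NR_CPUS);
    for (int cpu = 0; cpu < NR_CPUS; cpu++) {
        if (cpu == boot_cpu || idle_threads[cpu])
            continue;   /* the boot CPU already has its idle task */
        idle_threads[cpu] = toy_fork_idle(cpu);
    }
}
```

The point to notice is that the loop variable, not any hardware query, is the source of each number, and that all of this runs on the boot CPU before the other cores execute a single kernel instruction.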

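First, let's fill in the earlier part of the flow. The idle tasks are created via fork (fork_idle above), and the path that leads there starts in rest_init: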

static noinline void __init_refok rest_init(void)

{

int pid;

rcu_scheduler_starting();

/*

* We need to spawn init first so that it obtains pid 1, however

* the init task will end up wanting to create kthreads, which, if

* we schedule it before we create kthreadd, will OOPS.

*/

kernel_thread(kernel_init, NULL, CLONE_FS);

numa_default_policy();

pid = kernel_thread(kthreadd, NULL, CLONE_FS | CLONE_FILES);

rcu_read_lock();

kthreadd_task = find_task_by_pid_ns(pid, &init_pid_ns);

rcu_read_unlock();

complete(&kthreadd_done);

/*

* The boot idle thread must execute schedule()

* at least once to get things moving:

*/

init_idle_bootup_task(current);

schedule_preempt_disabled();

/* Call into cpu_idle with preempt disabled */

cpu_startup_entry(CPUHP_ONLINE);

}

Note that kernel_init runs in a kernel thread. At this point the boot CPU is executing that thread, which was forked from the boot idle thread.

/*

* Create a kernel thread.

*/

pid_t kernel_thread(int (*fn)(void *), void *arg, unsigned long flags)

{

return do_fork(flags|CLONE_VM|CLONE_UNTRACED, (unsigned long)fn,

(unsigned long)arg, NULL, NULL);

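}

Putting it together, the whole call chain is as follows (kernel_init is started from rest_init through kernel_thread rather than called directly):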

rest_init

kernel_init

kernel_init_freeable

smp_init

idle_threads_init

idle_init

fork_idle

init_idle

__set_task_cpu

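We have now filled in the complete path along which the CPU number is set.

Now back to the question above: how do we make core number i execute idle thread number i?

1 After power-on, the boot CPU (CPU0) runs first while the other CPUs wait, typically parked in a low-power loop using wfi or wfe on ARM.

2 When CPU0 has finished its preparations, it tells the other CPUs to start working. Depending on the platform, this is done with an inter-processor interrupt, with the sev instruction (which wakes cores waiting in wfe), or, as in the backtraces below, with a PSCI CPU_ON firmware call.

3 On receiving the wake-up, the other CPUs start executing code from the configured address. This is the crux.

4 The code each of those cores runs ends up attached to that core's own idle task, whether by writing a memory address directly or by a jump.

5 The boot CPU can therefore match each secondary CPU's idle task, whose CPU number was recorded along the call chain above, with that secondary CPU's startup address; that is, the startup address for CPU i is kept at offset i.

In the end each secondary CPU goes on to execute its own idle task. Each secondary CPU is given different code to run, configured by the boot CPU; since the boot CPU necessarily knows which secondary CPU it is configuring, it also knows which number to assign.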

Thread 1 hit Breakpoint 3, psci_boot_secondary (cpu=1, idle=0xdb09c800) at arch/arm/kernel/psci_smp.c:54

54 if (psci_ops.cpu_on)

(gdb) bt

#0 psci_boot_secondary (cpu=1, idle=0xdb09c800) at arch/arm/kernel/psci_smp.c:54

#1 0xc030fde4 in __cpu_up (cpu=1, idle=0xdb09c800) at arch/arm/kernel/smp.c:163

#2 0xc0349478 in bringup_cpu (cpu=1) at kernel/cpu.c:530

#3 0xc0349ae8 in cpuhp_invoke_callback (cpu=1, state=CPUHP_BRINGUP_CPU, bringup=, node=, lastp=0x0) at kernel/cpu.c:170

#4 0xc034ae8c in cpuhp_up_callbacks (target=, st=, cpu=) at kernel/cpu.c:584

#5 _cpu_up (cpu=1, tasks_frozen=, target=) at kernel/cpu.c:1192

#6 0xc034afa4 in do_cpu_up (cpu=1, target=CPUHP_ONLINE) at kernel/cpu.c:1228

#7 0xc034afbc in cpu_up (cpu=) at kernel/cpu.c:1236

#8 0xc14252ac in smp_init () at kernel/smp.c:578

#9 0xc14011a0 in kernel_init_freeable () at init/main.c:1140

#10 0xc0e7be2c in kernel_init (unused=) at init/main.c:1064

#11 0xc03010e8 in ret_from_fork () at arch/arm/kernel/entry-common.S:158

Backtrace stopped: previous frame identical to this frame (corrupt stack?)

(gdb) bt

#0 psci_cpu_on (cpuid=2, entry_point=1076896960) at drivers/firmware/psci.c:194

#1 0xc0316430 in psci_boot_secondary (cpu=, idle=) at ./arch/arm/include/asm/memory.h:323

#2 0xc030fde4 in __cpu_up (cpu=2, idle=0xdb09ce00) at arch/arm/kernel/smp.c:163

#3 0xc0349478 in bringup_cpu (cpu=2) at kernel/cpu.c:530

#4 0xc0349ae8 in cpuhp_invoke_callback (cpu=2, state=CPUHP_BRINGUP_CPU, bringup=, node=, lastp=0x0) at kernel/cpu.c:170

#5 0xc034ae8c in cpuhp_up_callbacks (target=, st=, cpu=) at kernel/cpu.c:584

#6 _cpu_up (cpu=2, tasks_frozen=, target=) at kernel/cpu.c:1192

#7 0xc034afa4 in do_cpu_up (cpu=2, target=CPUHP_ONLINE) at kernel/cpu.c:1228

#8 0xc034afbc in cpu_up (cpu=) at kernel/cpu.c:1236

#9 0xc14252ac in smp_init () at kernel/smp.c:578

#10 0xc14011a0 in kernel_init_freeable () at init/main.c:1140

#11 0xc0e7be2c in kernel_init (unused=) at init/main.c:1064

#12 0xc03010e8 in ret_from_fork () at arch/arm/kernel/entry-common.S:158

Backtrace stopped: previous frame identical to this frame (corrupt stack?)

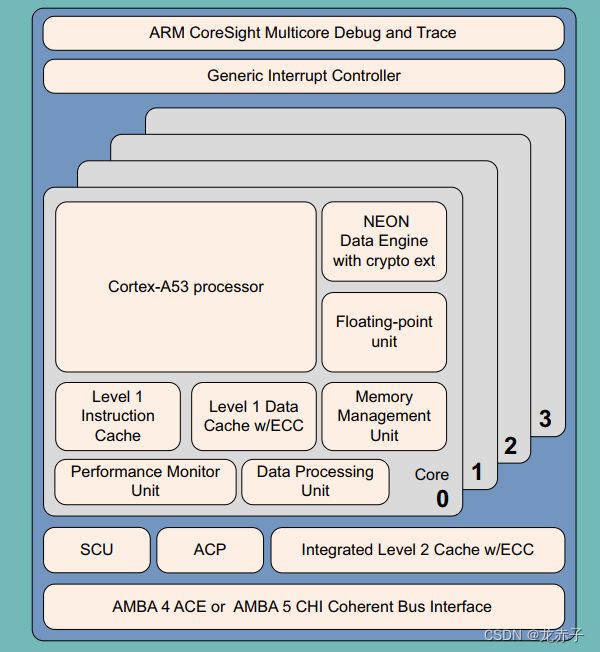

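The above are parts of the call stacks for bringing up the secondary CPUs, captured under QEMU emulation. Judging by the cpuhp_* frames, these traces appear to come from a newer kernel than the 3.18 sources quoted earlier, but the overall path from smp_init to the platform's boot_secondary hook is the same. (Image from ARM.)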
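In the traces above, __cpu_up receives both the cpu number and the matching idle task, which is where the two get tied together. As far as I can tell from arch/arm/kernel/smp.c, __cpu_up publishes the idle task's stack pointer in a small mailbox (secondary_data) before waking the secondary core, and the secondary core loads its stack pointer from that mailbox during early startup. The sketch below models only that handoff in user space; TOY_THREAD_SIZE, struct toy_thread_info, toy_secondary_data, toy_cpu_up and toy_secondary_start are hypothetical names, and the real code involves assembly, cache maintenance and synchronisation that are omitted here.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

#define TOY_THREAD_SIZE 8192u   /* assumption: 8 KiB aligned kernel stacks */

/* Toy thread_info living at the base of each idle stack. */
struct toy_thread_info {
    unsigned int cpu;
};

/* Simplified counterpart of the kernel's secondary_data mailbox. */
static struct {
    uintptr_t stack;
} toy_secondary_data;

/* Boot CPU side: build the idle stack for `cpu` and publish its top. */
static void *toy_cpu_up(unsigned int cpu)
{
    struct toy_thread_info *ti = aligned_alloc(TOY_THREAD_SIZE, TOY_THREAD_SIZE);

    assert(ti != NULL);
    ti->cpu = cpu;      /* preset number, as done by __set_task_cpu */
    toy_secondary_data.stack = (uintptr_t)ti + TOY_THREAD_SIZE - 8;
    return ti;
}

/* Secondary side: take sp from the mailbox, then derive the CPU from sp. */
static unsigned int toy_secondary_start(void)
{
    uintptr_t sp = toy_secondary_data.stack;
    struct toy_thread_info *ti =
        (struct toy_thread_info *)(sp & ~((uintptr_t)TOY_THREAD_SIZE - 1));

    return ti->cpu;
}
```

Because the boot CPU brings the cores up one at a time, whatever core is woken for number i starts on idle stack i, and from then on its smp_processor_id() reads i without ever asking the hardware.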