Using Java to pull an RTSP stream, generate m3u8 files, and play them live through an nginx proxy

Without further ado, straight to the code.

First, the Java code.

pom dependencies

<dependency>

<groupId>com.dahuatech.icc</groupId>

<artifactId>java-sdk-oauth</artifactId>

<version>1.0.9</version>

</dependency>

<dependency>

<groupId>org.bytedeco</groupId>

<artifactId>javacv-platform</artifactId>

<version>1.5.7</version>

</dependency>

<dependency>

<groupId>org.mitre.dsmiley.httpproxy</groupId>

<artifactId>smiley-http-proxy-servlet</artifactId>

<version>1.6</version>

</dependency>

<dependency>

<groupId>com.google.guava</groupId>

<artifactId>guava</artifactId>

<version>18.0</version>

</dependency>

/**

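* Convert an RTSP stream to m3u8

* input: the RTSP URL string, including account, password, and port

* output: path where the m3u8 file is saved; ts segment files are generated continuously alongside it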

*/

public void push(String input, String output)

throws org.bytedeco.javacv.FrameGrabber.Exception, org.bytedeco.javacv.FrameRecorder.Exception {

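FFmpegFrameGrabber grabber = null; // grabber: reads packets from the RTSP input

FFmpegFrameRecorder recorder = null; // recorder: writes the HLS output

int bitrate = 2500000; // bitrate

double framerate; // frame rate

int err_index = 0; // number of errors seen while pushing the stream

int timebase; // time base

long dts = 0, pts = 0; // dts and pts timestamps written to each packet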

try {

// Set the FFmpeg log level; QUIET silences everything, other options include INFO, DEBUG, ERROR

avutil.av_log_set_level(avutil.AV_LOG_INFO);

FFmpegLogCallback.set();

grabber = new FFmpegFrameGrabber(input);

grabber.setOption("rtsp_transport", "tcp");

grabber.start();

// If the reported frame rate is abnormal, force 25 fps

if (grabber.getFrameRate() > 0 && grabber.getFrameRate() < 100) {

framerate = grabber.getFrameRate();

} else {

framerate = 25.0;

}

bitrate = grabber.getVideoBitrate(); // bitrate reported by the grabber (0 was returned here)

recorder = new FFmpegFrameRecorder(output, grabber.getImageWidth(), grabber.getImageHeight(), 0);

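// Set the bitrate

recorder.setVideoBitrate(bitrate);

// H.264 video codec

recorder.setVideoCodec(avcodec.AV_CODEC_ID_H264);

// Set the audio codec

recorder.setAudioCodec(avcodec.AV_CODEC_ID_AAC);

// Video frame rate (at least 25 is recommended to preserve quality; below 25 the picture may flicker)

recorder.setFrameRate(framerate);

// Keyframe interval, usually equal to the frame rate or twice the frame rate

recorder.setGopSize((int) framerate);

// Output container format

recorder.setFormat("hls");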

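// Duration of each segment in seconds; the default is 2 s

recorder.setOption("hls_time", "5");

// Number of entries kept in the HLS playlist; 0 means no limit

recorder.setOption("hls_list_size", "20");

// Starting sequence number for the ts segment files; the default is 0 and this option changes it

recorder.setOption("start_number", "120");

// recorder.setOption("hls_segment_type","mpegts");

// Delete segments automatically: once there are more segments than hls_list_size, older ts segments are deleted so that only hls_list_size of them remain

recorder.setOption("hls_flags", "delete_segments");

// Deletion threshold for ts segments; the default is 1, meaning segments older than hls_list_size+1 are deleted

//recorder.setOption("hls_delete_threshold","1");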

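/*

* HLS segment types: 'mpegts' writes ts segment files as MPEG-2 Transport Stream, which is compatible with all HLS versions. 'fmp4' writes

* segments as Fragmented MP4 (fmp4), similar to MPEG-DASH; fmp4 files can be used with HLS version 7 and later.

*/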

// recorder.setOption("hls_segment_type","mpegts");

AVFormatContext fc = null;

fc = grabber.getFormatContext();

recorder.start(fc);

// Flush the cache left over from probing

// grabber.flush();

AVPacket pkt = null;

for (int no_pkt_index = 0; no_pkt_index < 5000000 && err_index < 5000000; ) {

pkt = grabber.grabPacket();

if (pkt == null || pkt.size() <= 0 || pkt.data() == null) {

Thread.sleep(1);

no_pkt_index++;

continue;

}

// Drop packets whose dts/pts are abnormal.

if ((pkt.dts() == avutil.AV_NOPTS_VALUE && pkt.pts() == avutil.AV_NOPTS_VALUE) || pkt.pts() < dts) {

err_index++;

av_packet_unref(pkt);

continue;

}

// Overwrite dts and pts with the corrected values

pkt.pts(pts);

pkt.dts(dts);

err_index += (recorder.recordPacket(pkt) ? 0 : 1);

// Advance pts and dts for the next packet

timebase = grabber.getFormatContext().streams(pkt.stream_index()).time_base().den();

pts += (timebase / (int) framerate);

dts += (timebase / (int) framerate);

}

} catch (Exception e) {

// Report the failure; cleanup happens once, in the finally block

e.printStackTrace();

} finally {

// grabber may still be null if its construction failed

if (grabber != null) {

grabber.stop();

grabber.close();

}

if (recorder != null) {

recorder.stop();

recorder.release();

}

}

}
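The timestamp correction in the loop above advances pts and dts by a fixed step per packet: the denominator of the stream time base divided by the integer frame rate. Below is a minimal sketch of that arithmetic in plain Java, with no JavaCV dependency. The class and method names are hypothetical, and 90000 is only an assumed example value for the time base denominator. The sketch also assumes, as the loop does, that the time base numerator is 1.

```java
final class TimestampStep {

    // Step added to pts/dts per packet: time base denominator / integer frame rate.
    static long step(int timebaseDen, double framerate) {
        return timebaseDen / (int) framerate;
    }

    // Timestamp given to the n-th packet (0-based) when counting starts at 0.
    static long timestampAt(int packetIndex, int timebaseDen, double framerate) {
        return (long) packetIndex * step(timebaseDen, framerate);
    }

    public static void main(String[] args) {
        System.out.println(step(90000, 25.0));           // prints 3600
        System.out.println(timestampAt(3, 90000, 25.0)); // prints 10800
    }
}
```

Because the frame rate is cast to int before dividing, a fractional rate such as 29.97 is treated as 29, so the step is only approximate for such streams.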

/**

* Create a BufferedImage object from a Frame

*/

public static BufferedImage FrameToBufferedImage(Frame frame) {

Java2DFrameConverter converter = new Java2DFrameConverter();

BufferedImage bufferedImage = converter.getBufferedImage(frame);

// Rotate by 90 degrees

bufferedImage=rotateClockwise90(bufferedImage);

return bufferedImage;

}

/**

* Process the image by rotating it 90 degrees

*/

public static BufferedImage rotateClockwise90(BufferedImage bi) {

int width = bi.getWidth();

int height = bi.getHeight();

BufferedImage bufferedImage = new BufferedImage(height, width, bi.getType());

for (int i = 0; i < width; i++)

for (int j = 0; j < height; j++)

bufferedImage.setRGB(height - 1 - j, i, bi.getRGB(i, j));

return bufferedImage;

}
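A clockwise quarter turn maps the source pixel (x, y) to (height - 1 - y, x) in the destination, which is not the same as a plain transpose to (y, x). The sketch below checks that mapping on a tiny two-pixel image. The class name `RotateDemo` is hypothetical, and the image type is fixed to TYPE_INT_RGB as a simplifying assumption.

```java
import java.awt.image.BufferedImage;

final class RotateDemo {

    // Rotate an image 90 degrees clockwise; the result is height x width.
    static BufferedImage rotateClockwise90(BufferedImage src) {
        int w = src.getWidth();
        int h = src.getHeight();
        BufferedImage dst = new BufferedImage(h, w, BufferedImage.TYPE_INT_RGB);
        for (int x = 0; x < w; x++) {
            for (int y = 0; y < h; y++) {
                // Source (x, y) lands at (h - 1 - y, x)
                dst.setRGB(h - 1 - y, x, src.getRGB(x, y));
            }
        }
        return dst;
    }

    public static void main(String[] args) {
        // A 1x2 column: red on top, blue at the bottom
        BufferedImage column = new BufferedImage(1, 2, BufferedImage.TYPE_INT_RGB);
        column.setRGB(0, 0, 0xFF0000);
        column.setRGB(0, 1, 0x0000FF);

        BufferedImage row = rotateClockwise90(column);
        // After a clockwise turn the top pixel ends up on the right
        System.out.println(row.getWidth() + "x" + row.getHeight()); // prints 2x1
        System.out.println(Integer.toHexString(row.getRGB(1, 0) & 0xFFFFFF)); // prints ff0000
    }
}
```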

/**

* Take a screenshot from the camera

*/

public static void screenshot(String rtspURL, String fileName) throws IOException {

FFmpegFrameGrabber grabber = FFmpegFrameGrabber.createDefault(rtspURL);

grabber.setOption("rtsp_transport", "tcp");

grabber.setImageWidth(960);

grabber.setImageHeight(540);

grabber.start();

File outPut = new File(fileName);

while (true) {

Frame frame = grabber.grabImage();

if (frame != null) {

ImageIO.write(FrameToBufferedImage(frame), "jpg", outPut);

grabber.stop();

grabber.release();

break;

}

}

}

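Then run this service from any process. Because it has to keep generating files, you can start it together with Spring Boot at application startup.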

@EnableAsync

@EnableScheduling

@SpringBootApplication

public class PlayApplication {

public static void main(String[] args) throws FrameGrabber.Exception, FrameRecorder.Exception {

SpringApplication.run(PlayApplication.class, args);

System.out.println("Camera 1");

String rtspURL = "rtsp://username:password@IP_ADDRESS:554/cam/realmonitor?channel=1&subtype=0";

File directory = new File("C:/Users/as/Desktop/nginx-1.24.0/html/m3u8one/");

if (!directory.exists()) {

directory.mkdirs();

}

CameraUtil cu = new CameraUtil();

cu.push(rtspURL, "C:/Users/as/Desktop/nginx-1.24.0/html/m3u8one/0.m3u8");

}

}
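The push call keeps running for as long as packets arrive, so calling it directly in main keeps the main thread busy. One possible alternative is to hand the call to a background thread. The sketch below shows the idea with a plain ExecutorService. `BackgroundPush` and `StreamTask` are hypothetical names, and `StreamTask` only stands in for a blocking call such as cu.push(rtspURL, output).

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

final class BackgroundPush {

    /** Hypothetical stand-in for a blocking call such as CameraUtil.push(input, output). */
    interface StreamTask {
        void run() throws Exception;
    }

    // Run the blocking task on one daemon thread and return a Future for monitoring it.
    static Future<?> startInBackground(StreamTask task) {
        ExecutorService executor = Executors.newSingleThreadExecutor(r -> {
            Thread t = new Thread(r, "rtsp-to-hls");
            t.setDaemon(true); // do not keep the JVM alive just for this thread
            return t;
        });
        Future<?> future = executor.submit(() -> {
            task.run();
            return null;
        });
        executor.shutdown(); // accept no further tasks; the submitted one keeps running
        return future;
    }

    public static void main(String[] args) throws Exception {
        Future<?> f = startInBackground(() -> System.out.println("pushing..."));
        f.get(); // wait for the task here only so that the demo prints before exiting
    }
}
```

With several cameras, each stream could get its own call to startInBackground, and the returned Future exposes any exception the task threw.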

Serve the output path through nginx. Here I generate the files directly inside the directory that nginx serves.

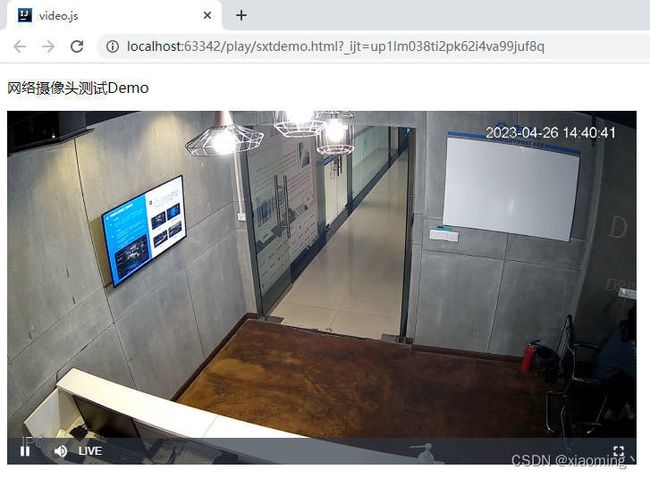
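To confirm that segments are actually being written, you can read the generated m3u8 file and list the segment entries it references. In an HLS playlist, lines that start with # are tags or comments and the remaining non-blank lines are segment URIs. The sketch below parses playlist text on that basis. The class name and the sample playlist content, including the segment file names, are made up for illustration and are not taken from real output.

```java
import java.util.ArrayList;
import java.util.List;

final class PlaylistSegments {

    // Collect the segment URIs: every non-blank line that does not start with '#'.
    static List<String> segmentUris(String playlistText) {
        List<String> uris = new ArrayList<>();
        for (String line : playlistText.split("\\r?\\n")) {
            String trimmed = line.trim();
            if (!trimmed.isEmpty() && !trimmed.startsWith("#")) {
                uris.add(trimmed);
            }
        }
        return uris;
    }

    public static void main(String[] args) {
        // Hypothetical playlist content for illustration only
        String sample = String.join("\n",
                "#EXTM3U",
                "#EXT-X-TARGETDURATION:5",
                "#EXTINF:5.000000,",
                "seg120.ts",
                "#EXTINF:5.000000,",
                "seg121.ts");
        System.out.println(segmentUris(sample)); // prints [seg120.ts, seg121.ts]
    }
}
```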

You can write a simple front-end page to test it.

<!DOCTYPE html>

<html lang="en" dir="ltr">

<head>

<meta charset="utf-8">

<title>video.js</title>

<link href="https://unpkg.com/[email protected]/dist/video-js.min.css" rel="stylesheet">

<script src="https://unpkg.com/[email protected]/dist/video.min.js"></script>

<script src="https://unpkg.com/videojs-flash/dist/videojs-flash.js"></script>

<script src="https://unpkg.com/videojs-contrib-hls/dist/videojs-contrib-hls.js"></script>

</head>

<body>

<div>

<p>IP camera test demo</p>

<video id="my-player1" autoplay class="video-js" controls>

<source src="http://127.0.0.1:81/m3u8one/0.m3u8" type="application/x-mpegURL">

<p class="vjs-no-js">

Your browser does not support this video.

</p>

</video>

<script type="text/javascript">

var player = videojs('my-player1', {

width: 704,

height: 576

});

</script>

</div>

</body>

</html>
