Kubernetes Federation (Cluster Federation)

What Is Cluster Federation

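Kubernetes Federation (K8s cluster federation) organizes multiple Kubernetes clusters under a single point of management. Users can use it to schedule and manage resources and workloads across clusters.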

What Cluster Federation Provides

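- Cross-cluster resource scheduling: workloads are scheduled and balanced across multiple clusters automatically, so resources are used efficiently.

- Cross-cluster service discovery and load balancing: services are discovered and load-balanced across multiple clusters automatically, providing high availability and scalability.

- Cross-cluster policy management: access control, quotas, limits, and similar policies are managed uniformly across clusters, keeping the clusters consistent.

- Cross-cluster monitoring and logging: monitoring data and logs from multiple clusters are managed and analyzed centrally, giving a global view for visualization and troubleshooting.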

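Unified deployment means specifying which k8s clusters a federated resource is deployed to. Each selected cluster then creates the corresponding k8s resource. For resources that have a replica count, each cluster runs the number of replicas written in the federated resource, unless that value is overridden for a particular cluster (an example follows later). For Deployments and ReplicaSets, a ReplicaSchedulingPreference can express more complex scheduling policies, such as a total replica count plus a per-cluster weight. The scheduler then adjusts each cluster's replica count so that the counts add up to the requested total.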

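Unified service exposure relies on DNS to expose Services or Ingresses from multiple clusters under a single name. It needs an external DNS server: either a cloud provider's DNS service or a self-hosted one such as PowerDNS. It also needs a LoadBalancer: either a cloud provider's LB or a self-hosted one such as MetalLB. Once a resource is exposed this way, the Services or Ingresses in all the clusters can be reached through one domain name. This gives some high availability. For example, if one cluster goes down, the name automatically resolves only to the resources in the surviving clusters.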

The original kubefed version, v1, was hard to extend and has been deprecated. The current version is v2, which is still in alpha.

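kubefed divides the k8s clusters it manages into a host cluster and member clusters. In other words, there is a control cluster and there are workload clusters. The host cluster manages the federation, and the member clusters run the business containers. The host cluster can also act as a member cluster.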

kubefed is implemented as CRDs on the host (control) cluster. The CRDs are written with kubebuilder.

Two of the most important resources are KubeFedCluster and FederatedTypeConfig:

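- KubeFedCluster: describes a k8s cluster that has joined the federation, including the cluster's API address and authentication information;

- FederatedTypeConfig: describes how a federated resource type maps to the corresponding k8s resource type.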
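The examples in this article show a consistent naming pattern that links the two sides of a FederatedTypeConfig: deployments.apps pairs with FederatedDeployment, namespaces with FederatedNamespace, and guestbooks.webapp.my.domain with FederatedGuestbook. The minimal Python sketch below summarizes that pattern. It is reverse-engineered from those examples and not taken from kubefedctl's source, so read it as an illustration only. TargetType, ftc_name, and federated_kind are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TargetType:
    """A Kubernetes type to be federated (hypothetical helper type)."""
    kind: str         # e.g. "Deployment"
    plural_name: str  # e.g. "deployments"
    group: str        # API group; "" for the core group (Namespace, Service, ...)


def ftc_name(target: TargetType) -> str:
    # Pattern seen in this article: "<plural>.<group>", or just "<plural>"
    # for core-group types such as namespaces.
    return f"{target.plural_name}.{target.group}" if target.group else target.plural_name


def federated_kind(target: TargetType) -> str:
    # The federated wrapper kind is the target kind prefixed with "Federated"
    # (served from the types.kubefed.io API group).
    return "Federated" + target.kind


if __name__ == "__main__":
    for t in (TargetType("Deployment", "deployments", "apps"),
              TargetType("Namespace", "namespaces", ""),
              TargetType("Guestbook", "guestbooks", "webapp.my.domain")):
        print(ftc_name(t), "->", federated_kind(t))
```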

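An example KubeFedCluster follows. This resource is generated automatically when a cluster joins the federation. apiEndpoint is the API server address of the joined cluster, and caBundle and secretRef hold the cluster's authentication information: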

    kubectl get KubeFedCluster -n kube-federation-system cluster-member1 -o yaml
    apiVersion: core.kubefed.io/v1beta1
    kind: KubeFedCluster
    metadata:
      creationTimestamp: "2021-03-09T08:18:04Z"
      generation: 1
      labels:
        cluster: app
      name: cluster-member1
      namespace: kube-federation-system
      resourceVersion: "2440133"
      selfLink: /apis/core.kubefed.io/v1beta1/namespaces/kube-federation-system/kubefedclusters/cluster-member1
      uid: 3bb4fcb1-3027-4cc8-9739-587317a64d17
    spec:
      apiEndpoint: https://192.168.48.204:6443
      caBundle: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUN5akNDQWJLZ0F3SUJBZ0lCQURBTkJna3Foa2lHOXcwQkFRc0ZBREFWTVJNd0VRWURWUVFERXdwcmRXSmwKY201bGRHVnpNQ0FYRFRJeE1ETXdPVEEzTkRNMU9Wb1lEekl4TWpFd01qRXpNRGMwTXpVNVdqQVZNUk13RVFZRApWUVFERXdwcmRXSmxjbTVsZEdWek1JSUJJakFOQmdrcWhraUc5dzBCQVFFRkFBT0NBUThBTUlJQkNnS0NBUUVBCnRPNkcyU1ZkVHU1Q0IwYURKd0FhU1VZMUo1MHpEbUU1RXJQaEk0RGdYM3JHMDZNYWhGRDJxK3VNblVFY1VsaVYKZDF2eWZMc2lCTGZiMVRSN3Q0bW80cEdiMnExQWhUQzBzZFNVTGwzQVRaY0VkVjF6QjFCQTQ4cU9RdE5lRURTZQprb2w5MWlCcnh2MWo2QzQ3S3pLa05rL2JDNG1vV1FNdzkvSTQyK3dMRlJSb29iY1NCbVRUN3R3NWVkZTFtbmRDCjF4M1hKUWVZejJRUjJtTFlPZDBBS1BwZlN1UDlpWGd2bnVxMHBTbTRQZ0JLLzYwRDZSblh6VlZHbmt4RE52VnkKVDRmMW93V0VJaDRJSHgvU2dnbW1VWEl3VGpwODN0RTBzSFBxam9Vak1Mb0ZUN3NNNit0SU5YbXFITzZDdStIagpTYmJUYUNEY21KWXhUalVnMVNiT2lRSURBUUFCb3lNd0lUQU9CZ05WSFE4QkFmOEVCQU1DQXFRd0R3WURWUjBUCkFRSC9CQVV3QXdFQi96QU5CZ2txaGtpRzl3MEJBUXNGQUFPQ0FRRUFWTzAzRy9RNHBjY0R5eWdOZnJwZEJrY04KT3IvQzhmMmoxZGo0YXl5U004SnZvV0Q3L2lpL1ZGaU9WUGIvWkJnZ3lBa3o3VXBwUlc3UjE4OVQvdmN0VDVoNwpJMWgzcDNPU3BXSklqN2ZLUFY3c3JWKzJhaDhLSjdGc2ZNSHNoVEorby9McjhpUG03eHBQTGVHSjFlVk1BcUpSCmhhR1VYODIxTXZhR0ZMWHlibUxaNjJ4RGdVS2J2SEkxYmFab1F5RmZzZUJVY2gyV2NHSjMycHkzWmdES0EzTkUKZk9aQzNhUnk2dVk2cEpOOG1aRnRjcDZrd1JLNlVwVThISUpybXRkeWxDeGNZRVR0NklsTm0zeDZEM1pXYmV5VAo2SnhsN3ZEYU5BVlhtU2t4WVBUYm02bFpGOTBXVy9QVzdVVDhXWmZxeDhqUTFRU1RqMkNGSGZQc3FvODBxUT09Ci0tLS0tRU5EIENFUlRJRklDQVRFLS0tLS0K
      secretRef:
        name: cluster-member1-tlhjn
    status:
      conditions:
      - lastProbeTime: "2021-03-12T08:29:56Z"
        lastTransitionTime: "2021-03-11T22:01:27Z"
        message: /healthz responded with ok
        reason: ClusterReady
        status: "True"
        type: Ready

The FederatedTypeConfig that maps FederatedDeployment (federatedType) to Deployment (targetType) looks like this:

    kubectl get FederatedTypeConfig -n kube-federation-system deployments.apps -o yaml
    apiVersion: core.kubefed.io/v1beta1
    kind: FederatedTypeConfig
    metadata:
      creationTimestamp: "2021-03-10T09:30:55Z"
      finalizers:
      - core.kubefed.io/federated-type-config
      generation: 1
      name: deployments.apps
      namespace: kube-federation-system
      resourceVersion: "1765315"
      selfLink: /apis/core.kubefed.io/v1beta1/namespaces/kube-federation-system/federatedtypeconfigs/deployments.apps
      uid: 164df2f9-1949-4e89-838a-c052a6ed5df4
    spec:
      federatedType:
        group: types.kubefed.io
        kind: FederatedDeployment
        pluralName: federateddeployments
        scope: Namespaced
        version: v1beta1
      propagation: Enabled
      targetType:
        group: apps
        kind: Deployment
        pluralName: deployments
        scope: Namespaced
        version: v1
    status:
      observedGeneration: 1
      propagationController: Running
      statusController: NotRunning

Installation and Usage

Version

kubefed 0.6.1 (Federation v2)

Requirements:

- Kubernetes 1.16+
- Helm 3.2+

Install Helm

Download from the Helm releases page on GitHub: https://github.com/helm/helm/releases

Install:

    tar zxvf helm-v3.5.2-linux-amd64.tar.gz
    cp linux-amd64/helm /usr/local/bin/

Install Cluster Federation

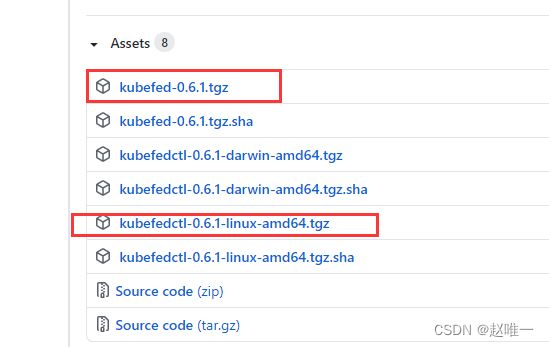

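Download the federation client (kubefedctl) and the Helm chart from the kubefed releases page on GitHub:

https://github.com/kubernetes-retired/kubefed/releases

Install the federation client:

    tar zxvf kubefedctl-0.6.1-linux-amd64.tgz
    cp kubefedctl /usr/local/bin/

Prepare the images. On a machine with Internet access, pull the images and package them: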

    docker pull quay.io/kubernetes-multicluster/kubefed:v0.6.1
    docker pull bitnami/kubectl:1.17.16
    docker save quay.io/kubernetes-multicluster/kubefed:v0.6.1 | gzip > kubefed_v0.6.1.tgz
    docker save bitnami/kubectl:1.17.16 | gzip > kubectl_1.17.16.tgz

Copy these image archives to the k8s machines, then load the images:

    docker load -i kubefed_v0.6.1.tgz
    docker load -i kubectl_1.17.16.tgz

Install the federation control plane

    helm --namespace kube-federation-system upgrade -i kubefed \
      --set controllermanager.featureGates.CrossClusterServiceDiscovery=Enabled \
      --set controllermanager.featureGates.FederatedIngress=Enabled \
      ./kubefed-0.6.1.tgz --version=0.6.1 --create-namespace

The CrossClusterServiceDiscovery and FederatedIngress feature gates turn on the DNS-related features used later in this article.

Check the release:

    helm --namespace kube-federation-system list

Uninstall:

    kubectl -n kube-federation-system delete FederatedTypeConfig --all
    helm --namespace kube-federation-system delete kubefed

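Joining Clusters to the Federation

Joining the host cluster itself:

Prepare the cluster context:

    cp /etc/kubernetes/admin.conf /etc/kubernetes/fed.conf

In /etc/kubernetes/fed.conf, rename the context kubernetes-admin@kubernetes to kubernetes-context-host, because the names used in later steps must not contain @.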
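You can script this rename instead of editing the file by hand. Below is a minimal Python sketch. It assumes the kubeconfig has already been parsed into a dict (for example with a YAML library, not shown) that uses the standard contexts and current-context keys. rename_context is a hypothetical helper and is not part of kubefedctl.

```python
def rename_context(kubeconfig: dict, old: str, new: str) -> dict:
    """Rename a context in a parsed kubeconfig dict in place and return it."""
    if "@" in new:
        # The article notes that names used in later kubefedctl steps must not contain "@".
        raise ValueError(f"context name {new!r} must not contain '@'")
    matches = [c for c in kubeconfig.get("contexts", []) if c.get("name") == old]
    if not matches:
        raise KeyError(f"context {old!r} not found")
    for ctx in matches:
        ctx["name"] = new
    # Keep current-context pointing at the renamed entry.
    if kubeconfig.get("current-context") == old:
        kubeconfig["current-context"] = new
    return kubeconfig


if __name__ == "__main__":
    cfg = {
        "contexts": [{"name": "kubernetes-admin@kubernetes",
                      "context": {"cluster": "kubernetes", "user": "kubernetes-admin"}}],
        "current-context": "kubernetes-admin@kubernetes",
    }
    rename_context(cfg, "kubernetes-admin@kubernetes", "kubernetes-context-host")
    print(cfg["current-context"])  # kubernetes-context-host
```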

Join the host cluster to the federation:

    kubefedctl --kubeconfig /etc/kubernetes/fed.conf join cluster-host --cluster-context kubernetes-context-host --host-cluster-context kubernetes-context-host --v=2

Check:

    kubectl -n kube-federation-system get kubefedclusters

To remove the host cluster from the federation:

    kubefedctl --kubeconfig /etc/kubernetes/fed.conf unjoin cluster-host --cluster-context kubernetes-context-host --host-cluster-context kubernetes-context-host --v=2

Joining other clusters:

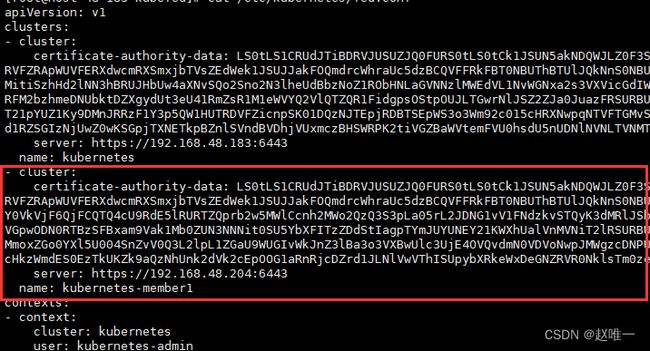

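Prepare the cluster context:

Copy the clusters, contexts, and users entries from /etc/kubernetes/admin.conf on the other cluster's master into the matching sections of /etc/kubernetes/fed.conf on the host cluster. Rename them to kubernetes-member1, kubernetes-context-member1, and kubernetes-admin-member1 respectively, and make the context reference the renamed cluster and user.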
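The merge can also be scripted. The Python sketch below assumes both kubeconfig files are already parsed into dicts and that the member's admin.conf contains exactly one cluster, one context, and one user, as a kubeadm-generated file normally does. merge_member is a hypothetical helper, and the server URLs in the demo are placeholders. The demo shows why renaming matters: both files name their cluster kubernetes.

```python
import copy


def merge_member(fed: dict, member: dict, cluster: str, context: str, user: str) -> dict:
    """Append a member cluster's kubeconfig entries to fed under new names."""
    new_cluster = copy.deepcopy(member["clusters"][0])
    new_user = copy.deepcopy(member["users"][0])
    new_context = copy.deepcopy(member["contexts"][0])

    new_cluster["name"] = cluster
    new_user["name"] = user
    new_context["name"] = context
    # A context refers to its cluster and user by name, so repoint it.
    new_context["context"]["cluster"] = cluster
    new_context["context"]["user"] = user

    for key, entry in (("clusters", new_cluster), ("users", new_user),
                       ("contexts", new_context)):
        existing = {e["name"] for e in fed.setdefault(key, [])}
        if entry["name"] in existing:
            raise ValueError(f"{key} entry {entry['name']!r} already exists in fed.conf")
        fed[key].append(entry)
    return fed


if __name__ == "__main__":
    # Trimmed-down stand-ins for the two files (real ones carry certificates).
    fed = {
        "clusters": [{"name": "kubernetes", "cluster": {"server": "https://HOST:6443"}}],
        "users": [{"name": "kubernetes-admin", "user": {}}],
        "contexts": [{"name": "kubernetes-context-host",
                      "context": {"cluster": "kubernetes", "user": "kubernetes-admin"}}],
    }
    member = {
        "clusters": [{"name": "kubernetes", "cluster": {"server": "https://MEMBER1:6443"}}],
        "users": [{"name": "kubernetes-admin", "user": {}}],
        "contexts": [{"name": "kubernetes-admin@kubernetes",
                      "context": {"cluster": "kubernetes", "user": "kubernetes-admin"}}],
    }
    merge_member(fed, member, "kubernetes-member1",
                 "kubernetes-context-member1", "kubernetes-admin-member1")
    print([c["name"] for c in fed["contexts"]])
    # ['kubernetes-context-host', 'kubernetes-context-member1']
```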

Join the federation:

    kubefedctl --kubeconfig /etc/kubernetes/fed.conf join cluster-member1 --cluster-context kubernetes-context-member1 --host-cluster-context kubernetes-context-host --v=2

Check:

    kubectl -n kube-federation-system get kubefedclusters

To remove the cluster from the federation:

    kubefedctl --kubeconfig /etc/kubernetes/fed.conf unjoin cluster-member1 --cluster-context kubernetes-context-member1 --host-cluster-context kubernetes-context-host --v=2

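Usage

Create the namespace for the federated CRs on the host cluster:

    kubectl create ns test-namespace

Deploying a Deployment and a Service

Let the federation manage the Namespace resource type:

    kubefedctl enable ns

A new FederatedTypeConfig now exists:

    # kubectl get ftc -A
    NAMESPACE                NAME         AGE
    kube-federation-system   namespaces   20h

Each time you enable another resource type later, this command shows the additional FederatedTypeConfig.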

Deploy a federated namespace:

    # cat fns.yaml
    apiVersion: types.kubefed.io/v1beta1
    kind: FederatedNamespace
    metadata:
      name: test-namespace
      namespace: test-namespace
    spec:
      placement:
        clusters:
        - name: cluster-host
        - name: cluster-member1

    kubectl apply -f fns.yaml

The placement field above specifies which clusters the resource is deployed to. If placement is omitted, the resource is not deployed to any cluster.

Let the federation manage Deployments:

    kubefedctl enable deploy

Deploy a federated Deployment:

    # cat fdeploy.yaml
    apiVersion: types.kubefed.io/v1beta1
    kind: FederatedDeployment
    metadata:
      name: test-deployment
      namespace: test-namespace
    spec:
      template:
        metadata:
          labels:
            app: nginx
        spec:
          replicas: 2
          selector:
            matchLabels:
              app: nginx
          template:
            metadata:
              labels:
                app: nginx
            spec:
              containers:
              - image: registry.hundsun.com/hcs/web_echo_centos:0.1
                name: nginx
      placement:
        clusters:
        - name: cluster-host
        - name: cluster-member1
      overrides:
      - clusterName: cluster-member1
        clusterOverrides:
        - path: "/spec/replicas"
          value: 3
        - path: "/spec/template/spec/containers/0/image"
          value: "registry.hundsun.com/hcs/web_echo_centos:0.1-7.5"
        - path: "/metadata/annotations"
          op: "add"
          value:
            foo: bar
        # - path: "/metadata/annotations/foo"
        #   op: "remove"

    kubectl apply -f fdeploy.yaml

The overrides section of fdeploy.yaml replaces default values with cluster-specific ones, so that clusters can be deployed differently. Here the replica count in cluster-member1 becomes 3 instead of the default 2: cluster-member1 runs 3 replicas, and the other clusters run 2. The same override also changes the image tag in cluster-member1 and adds a foo: bar annotation. The commented-out entry shows how an op: "remove" override would delete that annotation.
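The effect of one cluster's clusterOverrides can be modeled in a few lines of Python. This is a simplified sketch, not kubefed's code. It assumes each path is a JSON-Pointer-style path and that op defaults to "replace", which matches the entries above that have no op. It handles only add, replace, and remove, and it ignores JSON Pointer escaping and the "-" list index. apply_overrides is a hypothetical name.

```python
import copy


def _parent_and_key(doc, path: str):
    """Resolve a JSON-Pointer-style path to (container, last key)."""
    parts = path.lstrip("/").split("/")
    node = doc
    for part in parts[:-1]:
        node = node[int(part)] if isinstance(node, list) else node[part]
    last = parts[-1]
    return node, (int(last) if isinstance(node, list) else last)


def apply_overrides(template: dict, cluster_overrides: list) -> dict:
    """Return a copy of template with one cluster's clusterOverrides applied."""
    result = copy.deepcopy(template)
    for ov in cluster_overrides:
        parent, key = _parent_and_key(result, ov["path"])
        op = ov.get("op", "replace")  # entries without op replace the value
        if op == "remove":
            del parent[key]
        elif op == "add" and isinstance(parent, list):
            parent.insert(key, copy.deepcopy(ov["value"]))
        elif op in ("add", "replace"):
            parent[key] = copy.deepcopy(ov["value"])
        else:
            raise ValueError(f"unsupported op {op!r}")
    return result


if __name__ == "__main__":
    template = {  # trimmed spec.template from fdeploy.yaml
        "metadata": {"labels": {"app": "nginx"}},
        "spec": {"replicas": 2, "template": {"spec": {"containers": [
            {"image": "registry.hundsun.com/hcs/web_echo_centos:0.1", "name": "nginx"}]}}},
    }
    member1 = [
        {"path": "/spec/replicas", "value": 3},
        {"path": "/spec/template/spec/containers/0/image",
         "value": "registry.hundsun.com/hcs/web_echo_centos:0.1-7.5"},
        {"path": "/metadata/annotations", "op": "add", "value": {"foo": "bar"}},
    ]
    out = apply_overrides(template, member1)
    print(out["spec"]["replicas"], out["metadata"]["annotations"])  # 3 {'foo': 'bar'}
```

The template is copied first. The FederatedDeployment's default template stays untouched, and the other clusters still receive 2 replicas.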

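You can also replace clusters with clusterSelector under placement. The Deployment is then deployed to every cluster whose KubeFedCluster carries the matching labels, such as cluster: app on cluster-member1 shown earlier:

    placement:
      clusterSelector:
        matchLabels:
          cluster: app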

If both clusterSelector and clusters are set, clusters takes precedence.
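The placement rules described above can be summarized in a short Python sketch. The rules are: an explicit clusters list, a clusterSelector on KubeFedCluster labels, clusters winning when both are set, and no clusters at all when placement is absent. This is a simplified model and not kubefed's implementation. It only handles matchLabels, and it assumes a listed cluster is used only if it has actually joined. select_clusters is a hypothetical name, and the label on cluster-host in the demo is hypothetical too.

```python
from typing import Dict, List, Optional


def select_clusters(placement: Optional[dict],
                    cluster_labels: Dict[str, Dict[str, str]]) -> List[str]:
    """Return the clusters a federated resource is placed on.

    cluster_labels maps each joined KubeFedCluster name to its labels.
    """
    if not placement:
        return []  # no placement: the resource goes to no cluster at all
    if "clusters" in placement:
        # An explicit list takes precedence over clusterSelector.
        return [c["name"] for c in placement["clusters"] if c["name"] in cluster_labels]
    selector = placement.get("clusterSelector")
    if selector is None:
        return []
    wanted = selector.get("matchLabels", {})
    return sorted(name for name, labels in cluster_labels.items()
                  if all(labels.get(k) == v for k, v in wanted.items()))


if __name__ == "__main__":
    labels = {"cluster-host": {"cluster": "infra"},   # hypothetical label
              "cluster-member1": {"cluster": "app"}}
    by_selector = {"clusterSelector": {"matchLabels": {"cluster": "app"}}}
    both = {"clusters": [{"name": "cluster-host"}], **by_selector}
    print(select_clusters(by_selector, labels))  # ['cluster-member1']
    print(select_clusters(both, labels))         # ['cluster-host']
    print(select_clusters(None, labels))         # []
```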

Let the federation manage Services:

    kubefedctl enable Service

Deploy a federated Service:

    # cat fsvc.yaml
    apiVersion: types.kubefed.io/v1beta1
    kind: FederatedService
    metadata:
      name: test-service
      namespace: test-namespace
    spec:
      template:
        spec:
          selector:
            app: nginx
          type: NodePort
          ports:
          - name: http
            port: 8080
      placement:
        clusters:
        - name: cluster-host
        - name: cluster-member1

    kubectl apply -f fsvc.yaml

A ReplicaSchedulingPreference can express more complex scheduling policies (Deployments and ReplicaSets only), for example:

    # cat rsp.yaml
    apiVersion: scheduling.kubefed.io/v1alpha1
    kind: ReplicaSchedulingPreference
    metadata:
      name: test-deployment
      namespace: test-namespace
    spec:
      targetKind: FederatedDeployment
      totalReplicas: 9
      clusters:
        cluster-host:
          minReplicas: 2
          maxReplicas: 6
          weight: 1
        cluster-member1:
          minReplicas: 4
          maxReplicas: 8
          weight: 2

    kubectl apply -f rsp.yaml

totalReplicas is the total number of replicas, and weight is each cluster's share of them. In this example, cluster-host gets 3 replicas and cluster-member1 gets 6: the 9 replicas are split 1:2, and the result also falls within each cluster's minReplicas/maxReplicas bounds.

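A ReplicaSchedulingPreference overrides the replica settings of its targetKind resource (here, the original FederatedDeployment). The RSP is associated with that resource by name and namespace, which is why both are called test-deployment in test-namespace.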
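The 3/6 split can be reproduced with a small planner. The Python sketch below is a simplified stand-in written for this article, not kubefed's scheduler, and plan_replicas is a hypothetical name. It first gives each cluster its minReplicas. It then repeatedly distributes the remaining replicas in proportion to weight, rounding up, while never exceeding maxReplicas. Kubefed's real planner may break ties or round differently in edge cases.

```python
from typing import Dict


def plan_replicas(total: int, prefs: Dict[str, dict]) -> Dict[str, int]:
    """Split `total` replicas across clusters using RSP-style preferences.

    prefs maps a cluster name to optional minReplicas / maxReplicas / weight
    (defaults: min 0, no max, weight 0).
    """
    plan: Dict[str, int] = {}
    remaining = total

    # Step 1: give every cluster its minimum, as far as the total allows.
    for name in sorted(prefs):
        n = min(prefs[name].get("minReplicas", 0), remaining)
        plan[name] = n
        remaining -= n

    # Step 2: hand out the rest by weight (rounded up), capped by maxReplicas.
    while remaining > 0:
        growable = [n for n in sorted(prefs)
                    if prefs[n].get("weight", 0) > 0
                    and plan[n] < prefs[n].get("maxReplicas", float("inf"))]
        if not growable:
            break  # every weighted cluster is already at its maximum
        weight_sum = sum(prefs[n]["weight"] for n in growable)
        budget = remaining
        for n in growable:
            share = (budget * prefs[n]["weight"] + weight_sum - 1) // weight_sum
            cap = prefs[n].get("maxReplicas", float("inf")) - plan[n]
            extra = int(min(share, remaining, cap))
            plan[n] += extra
            remaining -= extra
    return plan


if __name__ == "__main__":
    rsp = {"cluster-host": {"minReplicas": 2, "maxReplicas": 6, "weight": 1},
           "cluster-member1": {"minReplicas": 4, "maxReplicas": 8, "weight": 2}}
    print(plan_replicas(9, rsp))  # {'cluster-host': 3, 'cluster-member1': 6}
```

With totalReplicas: 9, the minimums use 6 replicas. The remaining 3 are split 1:2, giving 3 and 6. If the total is larger than the sum of the maxReplicas values, the sketch stops at the maximums rather than exceeding them.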

Related example:

https://github.com/kubernetes-sigs/kubefed/tree/master/example/sample1

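DNS Features

IngressDNSRecord and ServiceDNSRecord must be used together with external-dns (https://github.com/kubernetes-sigs/external-dns) and a cloud provider's DNS service. external-dns synchronizes k8s Services and Ingresses to the cloud provider's DNS service. external-dns itself is not a DNS server.

In non-cloud environments, PowerDNS (PowerDNS Auth Server 4.1.x or later) can replace the cloud provider's DNS service. The DNS features are tested below with PowerDNS.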

Notes:

This feature requires the CrossClusterServiceDiscovery and FederatedIngress feature gates to be enabled when kubefed is deployed. If you followed the steps above, they are already enabled.

IngressDNSRecord and ServiceDNSRecord may be deprecated in the future. The following KEP mentions using service meshes to provide the ServiceDNSRecord functionality:

https://github.com/kubernetes-sigs/kubefed/blob/v0.6.1/docs/keps/20200619-kubefed-pull-reconciliation.md

These two issues also propose third-party tools, such as service meshes or a multi-cluster mesh, for cross-cluster service discovery: https://github.com/kubernetes-sigs/kubefed/issues/1283 , https://github.com/kubernetes-sigs/kubefed/issues/1284 . kubefed v0.7.0 has already removed both features; see the v0.7.0 changelog (#1351, #1360): https://github.com/kubernetes-sigs/kubefed/releases/tag/v0.7.0

Installing and Configuring PowerDNS

First install PowerDNS, using MySQL as the data store:

    yum -y install epel-release yum-plugin-priorities
    yum -y install pdns
    yum -y install pdns-backend-mysql

Check the version:

    pdns_server --version

Install MySQL (steps omitted), connect to it, and run:

    create database pdns;
    grant all privileges on pdns.* to 'admin'@'localhost' identified by 'Abcd1234';
    use pdns;
    source /usr/share/doc/pdns-backend-mysql-4.1.14/schema.mysql.sql;

    INSERT INTO domains (name, type) values ('example.com', 'NATIVE');
    INSERT INTO records (domain_id, name, content, type,ttl,prio)
    VALUES (1,'example.com','localhost admin.example.com 1 10380 3600 604800 3600','SOA',86400,NULL);
    INSERT INTO records (domain_id, name, content, type,ttl,prio)
    VALUES (1,'example.com','dns-us1.powerdns.net','NS',86400,NULL);
    INSERT INTO records (domain_id, name, content, type,ttl,prio)
    VALUES (1,'example.com','dns-eu1.powerdns.net','NS',86400,NULL);
    INSERT INTO records (domain_id, name, content, type,ttl,prio)
    VALUES (1,'www.example.com','192.0.2.10','A',120,NULL);
    INSERT INTO records (domain_id, name, content, type,ttl,prio)
    VALUES (1,'mail.example.com','192.0.2.12','A',120,NULL);
    INSERT INTO records (domain_id, name, content, type,ttl,prio)
    VALUES (1,'localhost.example.com','127.0.0.1','A',120,NULL);
    INSERT INTO records (domain_id, name, content, type,ttl,prio)
    VALUES (1,'example.com','mail.example.com','MX',120,25);

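The path of the schema file in the source statement may differ depending on the installed PowerDNS version. The INSERT statements after it add test data.

Edit /etc/pdns/pdns.conf and replace the content below the line

    # launch Which backends to launch and order to query them in

with: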

    launch=gmysql
    gmysql-host=127.0.0.1
    gmysql-user=admin
    gmysql-password=Abcd1234
    gmysql-dbname=pdns

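Then enable the webserver by editing the following settings. Uncomment them and set the values shown. Choose your own api-key, and replace 192.168.48.0/24 with the subnet of your k8s hosts, or use 0.0.0.0/0 to let any IP reach the webserver: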

    api=yes
    api-key=my-api-key-abc123
    webserver=yes
    webserver-address=
    webserver-allow-from=127.0.0.1,::1,192.168.48.0/24
    webserver-port=18081
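ExternalDNS will call this API from the k8s hosts, so their addresses must fall inside webserver-allow-from. The Python sketch below uses the standard ipaddress module to check an address against such a comma-separated list of IPs and CIDRs. It only illustrates the CIDR matching and is not PowerDNS's own implementation. webserver_allows is a hypothetical name, and the node IPs in the demo are taken from the host names used later in this article.

```python
import ipaddress


def webserver_allows(client_ip: str, allow_from: str) -> bool:
    """Check client_ip against a comma-separated list of IPs/CIDRs,
    in the style of PowerDNS's webserver-allow-from setting."""
    addr = ipaddress.ip_address(client_ip)
    for entry in allow_from.split(","):
        entry = entry.strip()
        # A bare IP becomes a /32 (or /128) network; IPv4 vs IPv6 never match.
        if entry and addr in ipaddress.ip_network(entry, strict=False):
            return True
    return False


if __name__ == "__main__":
    allow = "127.0.0.1,::1,192.168.48.0/24"  # value from pdns.conf above
    for ip in ("192.168.48.129", "192.168.48.183", "10.0.0.8", "::1"):
        print(ip, webserver_allows(ip, allow))
```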

Start PowerDNS:

    systemctl enable pdns
    systemctl start pdns

Test the DNS server:

On a CentOS machine (the PowerDNS machine itself also works), install the dig tool:

    yum install -y bind-utils

Point the test machine at the new DNS server: edit /etc/resolv.conf and set nameserver to the PowerDNS machine's IP:

    # cat /etc/resolv.conf
    # Generated by NetworkManager
    nameserver 192.168.48.204
    search localdomain

Test name resolution on the test machine:

    # dig +short mail.example.com
    192.0.2.12

Alternatively, leave /etc/resolv.conf unchanged and pass the PowerDNS machine's IP after @:

    # dig +short mail.example.com @192.168.48.204
    192.0.2.12

nslookup works as well:

    # nslookup mail.example.com
    Server: 192.168.48.204
    Address: 192.168.48.204#53

    Name: mail.example.com
    Address: 192.0.2.12

Every query returns 192.0.2.12, the record inserted into MySQL earlier, so PowerDNS is working.

Label all nodes with their region and zone

Label node host-48-129 in cluster-host with region r1 and zone z1:

    kubectl --kubeconfig /etc/kubernetes/fed.conf --context kubernetes-context-host label node host-48-129 failure-domain.beta.kubernetes.io/region=r1
    kubectl --kubeconfig /etc/kubernetes/fed.conf --context kubernetes-context-host label node host-48-129 failure-domain.beta.kubernetes.io/zone=z1

Check:

    kubectl --kubeconfig /etc/kubernetes/fed.conf --context kubernetes-context-host describe no host-48-129

Label node host-48-183 in cluster-member1 with region r2 and zone z2:

    kubectl --kubeconfig /etc/kubernetes/fed.conf --context kubernetes-context-member1 label node host-48-183 failure-domain.beta.kubernetes.io/region=r2
    kubectl --kubeconfig /etc/kubernetes/fed.conf --context kubernetes-context-member1 label node host-48-183 failure-domain.beta.kubernetes.io/zone=z2

Check:

    kubectl --kubeconfig /etc/kubernetes/fed.conf --context kubernetes-context-member1 describe no host-48-183

Deploying ExternalDNS

ExternalDNS v0.5 or later is required.

Create the file external-dns.yaml:

    # cat external-dns.yaml
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: external-dns
      namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: external-dns
    rules:
    - apiGroups: [""]
      resources: ["services","endpoints","pods"]
      verbs: ["get","watch","list"]
    - apiGroups: ["extensions","networking.k8s.io"]
      resources: ["ingresses"]
      verbs: ["get","watch","list"]
    - apiGroups: [""]
      resources: ["pods"]
      verbs: ["get","watch","list"]
    - apiGroups: [""]
      resources: ["nodes"]
      verbs: ["list"]
    - apiGroups: ["multiclusterdns.kubefed.io"]
      resources: ["*"]
      verbs: ["*"]
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: external-dns-viewer
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: external-dns
    subjects:
    - kind: ServiceAccount
      name: external-dns
      namespace: kube-system
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: external-dns
      namespace: kube-system
    spec:
      strategy:
        type: Recreate
      selector:
        matchLabels:
          app: external-dns
      template:
        metadata:
          labels:
            app: external-dns
        spec:
          # Only use if you're also using RBAC
          serviceAccountName: external-dns
          containers:
          - name: external-dns
            image: registry.hundsun.com/library/external-dns:v0.7.6
            args:
            - --source=crd # read the DNSEndpoint objects generated by kubefed
            - --crd-source-apiversion=multiclusterdns.kubefed.io/v1alpha1
            - --crd-source-kind=DNSEndpoint
            - --registry=txt
            - --txt-prefix=cname
            - --provider=pdns
            - --pdns-server=http://192.168.48.204:18081
            - --txt-owner-id=my-cluster-id-100
            - --pdns-api-key=my-api-key-abc123
            - --domain-filter=my-org.com # will make ExternalDNS see only the zones matching provided domain; omit to process all available zones in PowerDNS
            - --log-level=debug
            - --interval=30s

Set --pdns-server to your PowerDNS machine's address and webserver port. --txt-owner-id can be any value you choose. --pdns-api-key must match the api-key configured in PowerDNS above.
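According to the comment on --domain-filter, ExternalDNS only considers zones that match my-org.com, which is why that zone has to exist in PowerDNS. The Python sketch below models the filter as a label-boundary suffix match: a name passes if it equals a filter domain or is a subdomain of one, and an empty filter list passes everything. This is an assumption about the flag's semantics made for illustration, not ExternalDNS's code. passes_domain_filter is a hypothetical name.

```python
from typing import Iterable


def passes_domain_filter(dns_name: str, domain_filters: Iterable[str]) -> bool:
    """Simplified model of --domain-filter (see assumptions above)."""
    name = dns_name.rstrip(".").lower()
    filters = [d.rstrip(".").lower() for d in domain_filters]
    if not filters:
        return True  # flag omitted: every zone is processed
    # Compare on label boundaries so "notmy-org.com" does not match "my-org.com".
    return any(name == d or name.endswith("." + d) for d in filters)


if __name__ == "__main__":
    for n in ("webecho-service.test-namespace.webecho-domain.svc.webecho.my-org.com",
              "ingress.my-org.com",
              "mail.example.com"):
        print(n, passes_domain_filter(n, ["my-org.com"]))
```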

Deploy it:

    kubectl apply -f external-dns.yaml

This setup uses a new domain, my-org.com, so its base records must be inserted into the PowerDNS MySQL database:

    INSERT INTO domains (name, type) values ('my-org.com', 'NATIVE');

Look up the id of the inserted row:

    > select * from domains where name='my-org.com';
    +----+------------+--------+------------+--------+-----------------+---------+
    | id | name       | master | last_check | type   | notified_serial | account |
    +----+------------+--------+------------+--------+-----------------+---------+
    |  2 | my-org.com | NULL   | NULL       | NATIVE | NULL            | NULL    |
    +----+------------+--------+------------+--------+-----------------+---------+
    1 row in set (0.000 sec)

Then insert the SOA record, setting domain_id to the id returned above (2 here):

    INSERT INTO records (domain_id, name, content, type,ttl,prio)
    VALUES (2,'my-org.com','localhost admin.my-org.com 1 10380 3600 604800 3600','SOA',86400,NULL);
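In a script, you can skip the id lookup by reading the auto-generated id straight from the INSERT. The sketch below uses Python's built-in sqlite3 module and a heavily trimmed version of the PowerDNS tables. That substitution is an assumption made only so the sketch runs anywhere. Against the real MySQL database you would use a MySQL driver, whose cursor also exposes lastrowid, or MySQL's LAST_INSERT_ID() function. create_demo_schema and add_native_domain are hypothetical names.

```python
import sqlite3


def create_demo_schema(conn: sqlite3.Connection) -> None:
    """A heavily trimmed stand-in for the PowerDNS MySQL schema (assumption)."""
    conn.executescript("""
        CREATE TABLE domains (id INTEGER PRIMARY KEY AUTOINCREMENT,
                              name TEXT UNIQUE NOT NULL, type TEXT NOT NULL);
        CREATE TABLE records (id INTEGER PRIMARY KEY AUTOINCREMENT,
                              domain_id INTEGER, name TEXT, type TEXT,
                              content TEXT, ttl INTEGER, prio INTEGER);
    """)


def add_native_domain(conn: sqlite3.Connection, domain: str) -> int:
    """Insert a NATIVE zone plus its SOA record and return the new domain id."""
    cur = conn.execute("INSERT INTO domains (name, type) VALUES (?, 'NATIVE')", (domain,))
    domain_id = cur.lastrowid  # no separate SELECT needed to find the id
    # SOA content: primary NS, hostmaster, serial, refresh, retry, expire,
    # minimum TTL (same values as the article's INSERT statements).
    soa = f"localhost admin.{domain} 1 10380 3600 604800 3600"
    conn.execute(
        "INSERT INTO records (domain_id, name, content, type, ttl, prio) "
        "VALUES (?, ?, ?, 'SOA', 86400, NULL)",
        (domain_id, domain, soa))
    conn.commit()
    return domain_id


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    create_demo_schema(conn)
    add_native_domain(conn, "example.com")
    print(add_native_domain(conn, "my-org.com"))  # 2
```

Parameterized queries (the ? placeholders) also avoid building SQL by string concatenation.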

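Deploying MetalLB (omitted)

ServiceDNSRecord requires Services of type LoadBalancer (see https://github.com/kubernetes-sigs/kubefed/blob/v0.6.1/docs/servicedns-with-externaldns.md). That means a cloud provider's LoadBalancer is needed; in non-cloud environments, MetalLB (https://github.com/metallb/metallb) can be used instead. Here MetalLB 0.9.3 was deployed in layer2 mode. Layer2 mode requires reserving some IPs in the hosts' subnet as the virtual IP pool.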

Testing ServiceDNSRecord

Deploy a test FederatedDeployment. It runs a custom web_echo image that serves HTTP and replies with the Pod's own IP. The YAML file:

    # cat fdeploy_external-dns.yaml
    apiVersion: types.kubefed.io/v1beta1
    kind: FederatedDeployment
    metadata:
      name: webecho-deployment
      namespace: test-namespace
    spec:
      template:
        metadata:
          labels:
            name: webecho-metallb
        spec:
          replicas: 2
          selector:
            matchLabels:
              name: webecho-metallb
          template:
            metadata:
              labels:
                name: webecho-metallb
            spec:
              containers:
              - image: registry.hundsun.com/hcs/web_echo_centos:0.1
                name: webecho
                ports:
                - containerPort: 8080
      placement:
        clusters:
        - name: cluster-host
        - name: cluster-member1

Apply it:

    kubectl apply -f fdeploy_external-dns.yaml

Deploy a test FederatedService. The YAML file:

    # cat fsvc_external-dns.yaml
    apiVersion: types.kubefed.io/v1beta1
    kind: FederatedService
    metadata:
      name: webecho-service
      namespace: test-namespace
    spec:
      template:
        spec:
          selector:
            name: webecho-metallb
          type: LoadBalancer
          ports:
          - name: http
            port: 8080
      placement:
        clusters:
        - name: cluster-host
        - name: cluster-member1

Apply it:

    kubectl apply -f fsvc_external-dns.yaml

Deploy a test Domain and ServiceDNSRecord. The YAML file:

    # cat ServiceDNSRecord.yaml
    apiVersion: multiclusterdns.kubefed.io/v1alpha1
    kind: Domain
    metadata:
      # Corresponds to in the resource records.
      name: webecho-domain
      # The namespace running kubefed-controller-manager.
      namespace: kube-federation-system
      # namespace: test-namespace
    # The domain/subdomain that is setup in your external-dns provider.
    domain: webecho.my-org.com
    ---
    apiVersion: multiclusterdns.kubefed.io/v1alpha1
    kind: ServiceDNSRecord
    metadata:
      # The name of the sample service.
      name: webecho-service
      # The namespace of the sample deployment/service.
      namespace: test-namespace
    spec:
      # The name of the corresponding `Domain`.
      domainRef: webecho-domain
      recordTTL: 300

The Domain's namespace must be the namespace where kubefed-controller-manager runs. The ServiceDNSRecord's name and namespace must match those of the FederatedService (important). The ServiceDNSRecord's domainRef is the name of the Domain.

Apply it:

    kubectl apply -f ServiceDNSRecord.yaml

After it is applied, the DNS Endpoint controller inside the kubefed controller generates a DNSEndpoint resource from the status of the ServiceDNSRecord. View it (extra output omitted):

    # kubectl get DNSEndpoint -n test-namespace service-webecho-service -o yaml
    ...
    spec:
      endpoints:
      - dnsName: webecho-service.test-namespace.webecho-domain.svc.r1.webecho.my-org.com
        recordTTL: 300
        recordType: A
        targets:
        - 192.168.48.182
      - dnsName: webecho-service.test-namespace.webecho-domain.svc.r2.webecho.my-org.com
        recordTTL: 300
        recordType: A
        targets:
        - 192.168.48.188
      - dnsName: webecho-service.test-namespace.webecho-domain.svc.webecho.my-org.com
        recordTTL: 300
        recordType: A
        targets:
        - 192.168.48.182
        - 192.168.48.188
      - dnsName: webecho-service.test-namespace.webecho-domain.svc.z1.r1.webecho.my-org.com
        recordTTL: 300
        recordType: A
        targets:
        - 192.168.48.182
      - dnsName: webecho-service.test-namespace.webecho-domain.svc.z2.r2.webecho.my-org.com
        recordTTL: 300
        recordType: A
        targets:
        - 192.168.48.188
    ...

A separate name is generated for each region and zone. There is also a global name, webecho-service.test-namespace.webecho-domain.svc.webecho.my-org.com, which maps to two IPs. Those IPs are the EXTERNAL-IPs of the Services that the test FederatedService created in the two clusters, i.e., the LoadBalancer IPs assigned by MetalLB:

    # kubectl --kubeconfig /etc/kubernetes/fed.conf --context kubernetes-context-host get svc -n test-namespace
    NAME              TYPE           CLUSTER-IP      EXTERNAL-IP      PORT(S)          AGE
    webecho-service   LoadBalancer   10.110.240.46   192.168.48.182   8080:4683/TCP    120m

    # kubectl --kubeconfig /etc/kubernetes/fed.conf --context kubernetes-context-member1 get svc -n test-namespace
    NAME              TYPE           CLUSTER-IP      EXTERNAL-IP      PORT(S)          AGE
    webecho-service   LoadBalancer   10.103.81.221   192.168.48.188   8080:47617/TCP   120m

On the test machine where dig was installed earlier, look up the names:

    # dig +short webecho-service.test-namespace.webecho-domain.svc.webecho.my-org.com
    192.168.48.188
    192.168.48.182

    # dig +short webecho-service.test-namespace.webecho-domain.svc.r1.webecho.my-org.com
    192.168.48.182

    # dig +short webecho-service.test-namespace.webecho-domain.svc.z1.r1.webecho.my-org.com
    192.168.48.182

    # dig +short webecho-service.test-namespace.webecho-domain.svc.r2.webecho.my-org.com
    192.168.48.188

    # dig +short webecho-service.test-namespace.webecho-domain.svc.z2.r2.webecho.my-org.com
    192.168.48.188
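The naming scheme can be summarized with a small Python sketch that rebuilds the five names above from each cluster's region, zone, and LoadBalancer IP. The pattern is reverse-engineered from this single DNSEndpoint output, including the Domain object's name (webecho-domain) appearing in every name. It is not taken from kubefed's source. The sketch assumes one zone per cluster and that all clusters are healthy. ClusterLB and service_dns_records are hypothetical names.

```python
from collections import defaultdict
from typing import Dict, List, NamedTuple


class ClusterLB(NamedTuple):
    region: str  # failure-domain.beta.kubernetes.io/region of the cluster's nodes
    zone: str    # failure-domain.beta.kubernetes.io/zone of the cluster's nodes
    ip: str      # EXTERNAL-IP of the LoadBalancer Service in that cluster


def service_dns_records(service: str, namespace: str, domain_obj: str,
                        domain: str, clusters: List[ClusterLB]) -> Dict[str, List[str]]:
    """Map each DNS name to its A-record targets, following the pattern
    <service>.<namespace>.<Domain object>.svc[.<zone>.<region> | .<region>].<domain>."""
    prefix = f"{service}.{namespace}.{domain_obj}.svc"
    records: Dict[str, List[str]] = defaultdict(list)
    for c in clusters:
        records[f"{prefix}.{domain}"].append(c.ip)                      # global name
        records[f"{prefix}.{c.region}.{domain}"].append(c.ip)           # per region
        records[f"{prefix}.{c.zone}.{c.region}.{domain}"].append(c.ip)  # per zone
    # Sort targets so the output is deterministic.
    return {name: sorted(ips) for name, ips in records.items()}


if __name__ == "__main__":
    recs = service_dns_records(
        "webecho-service", "test-namespace", "webecho-domain", "webecho.my-org.com",
        [ClusterLB("r1", "z1", "192.168.48.182"), ClusterLB("r2", "z2", "192.168.48.188")])
    for name, targets in recs.items():
        print(name, targets)
```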

Every name resolves to the LoadBalancer IP of the corresponding Service. Test the global name with curl. The test Service listens on port 8080, so include the port:

    # curl webecho-service.test-namespace.webecho-domain.svc.webecho.my-org.com:8080
    This is:
    10.244.154.123
    hostname is:
    webecho-deployment-598f4ddd99-5dd65

    # curl webecho-service.test-namespace.webecho-domain.svc.webecho.my-org.com:8080
    This is:
    10.244.154.122
    hostname is:
    webecho-deployment-598f4ddd99-4kbmx

    # curl webecho-service.test-namespace.webecho-domain.svc.webecho.my-org.com:8080
    This is:
    10.244.189.135
    hostname is:
    webecho-deployment-598f4ddd99-rbzdf

    # curl webecho-service.test-namespace.webecho-domain.svc.webecho.my-org.com:8080
    This is:
    10.244.189.176
    hostname is:
    webecho-deployment-598f4ddd99-l8hb6

The requests succeed and return the Pod IP and container hostname, which is what the webecho image does. The responses are also load-balanced. 10.244.154.123 and 10.244.154.122 above are two Pod IPs in one cluster, and 10.244.189.135 and 10.244.189.176 are two Pod IPs in the other. This shows that PowerDNS resolves the name to the Service LoadBalancers of different clusters. In practice, the name kept resolving to one cluster for several minutes and then to the other cluster for the next few minutes.

The region- and zone-specific names work too:

    # curl webecho-service.test-namespace.webecho-domain.svc.z1.r1.webecho.my-org.com:8080
    This is:
    10.244.189.176
    hostname is:
    webecho-deployment-598f4ddd99-l8hb6

    # curl webecho-service.test-namespace.webecho-domain.svc.z2.r2.webecho.my-org.com:8080
    This is:
    10.244.154.122
    hostname is:
    webecho-deployment-598f4ddd99-4kbmx

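Next, test high availability. On cluster-member1, stop kubelet and docker to simulate a cluster failure:

    systemctl stop kubelet docker

After a few minutes, query the name that previously resolved to two IPs:

    # dig +short webecho-service.test-namespace.webecho-domain.svc.webecho.my-org.com
    192.168.48.182

Only one IP remains. kubefed and external-dns detected that cluster-member1 was down and removed the record pointing to that cluster's Service LoadBalancer IP, keeping only the IP of the reachable cluster. This provides a degree of high availability. Once cluster-member1 is started again, the name resolves to two IPs again.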
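This behavior can be modeled as filtering the record targets by each KubeFedCluster's Ready condition, the status.conditions block shown in the KubeFedCluster example at the start of this article. The Python sketch below is a simplified model under that assumption and is not kubefed's controller logic. It treats a cluster as healthy only while it reports Ready with status "True". is_ready and healthy_targets are hypothetical names.

```python
from typing import Dict, List


def is_ready(kubefed_cluster: dict) -> bool:
    """True if a KubeFedCluster object reports a Ready condition with status "True"."""
    for cond in kubefed_cluster.get("status", {}).get("conditions", []):
        if cond.get("type") == "Ready":
            return cond.get("status") == "True"
    return False  # no Ready condition: treat the cluster as not ready


def healthy_targets(lb_ips: Dict[str, str], clusters: List[dict]) -> List[str]:
    """Return the LoadBalancer IPs of the clusters that are currently Ready.

    lb_ips maps a KubeFedCluster name to the Service's EXTERNAL-IP there.
    """
    ready = {c["metadata"]["name"] for c in clusters if is_ready(c)}
    return sorted(ip for name, ip in lb_ips.items() if name in ready)


if __name__ == "__main__":
    def cluster(name: str, status: str) -> dict:
        return {"metadata": {"name": name},
                "status": {"conditions": [{"type": "Ready", "status": status}]}}

    ips = {"cluster-host": "192.168.48.182", "cluster-member1": "192.168.48.188"}
    print(healthy_targets(ips, [cluster("cluster-host", "True"),
                                cluster("cluster-member1", "False")]))  # ['192.168.48.182']
```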

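Testing IngressDNSRecord

Deploy an ingress controller (steps omitted).

Here nginx ingress controller 0.26.1 (https://github.com/kubernetes/ingress-nginx) was deployed and exposed through a Service of type LoadBalancer. NodePort does not work. With NodePort, the IngressDNSRecord name ends up resolving to the cluster IP of the ingress controller's Service, which is unreachable from outside the k8s cluster. hostNetwork alone does not work either. With hostNetwork and no Service, the ADDRESS column of the deployed Ingress stays empty. The corresponding DNSEndpoint then has no address, so external-dns cannot map the name to an IP and register it in PowerDNS.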

    # kubectl --kubeconfig /etc/kubernetes/fed.conf --context kubernetes-context-host get svc -n ingress-nginx
    NAME            TYPE           CLUSTER-IP       EXTERNAL-IP      PORT(S)                      AGE
    ingress-nginx   LoadBalancer   10.107.66.119    192.168.48.187   80:6633/TCP,443:51029/TCP    24h

    # kubectl --kubeconfig /etc/kubernetes/fed.conf --context kubernetes-context-member1 get svc -n ingress-nginx
    NAME            TYPE           CLUSTER-IP       EXTERNAL-IP      PORT(S)                      AGE
    ingress-nginx   LoadBalancer   10.110.102.146   192.168.48.189   80:39965/TCP,443:54909/TCP   24h

Deploy a test FederatedIngress that routes to the webecho Service created earlier. The YAML file:

    # cat fingress.yaml
    apiVersion: types.kubefed.io/v1beta1
    kind: FederatedIngress
    metadata:
      name: test-ingress
      namespace: test-namespace
    spec:
      template:
        spec:
          rules:
          - host: ingress.my-org.com
            http:
              paths:
              - backend:
                  serviceName: webecho-service
                  servicePort: 8080
      placement:
        clusters:
        - name: cluster-host
        - name: cluster-member1

Apply it:

    kubectl apply -f fingress.yaml

Check the Ingresses in both clusters:

    # kubectl --kubeconfig /etc/kubernetes/fed.conf --context kubernetes-context-host get ingress -A
    NAMESPACE        NAME           CLASS    HOSTS                ADDRESS          PORTS   AGE
    test-namespace   test-ingress            ingress.my-org.com   192.168.48.187   80      4h40m

    # kubectl --kubeconfig /etc/kubernetes/fed.conf --context kubernetes-context-member1 get ingress -A
    NAMESPACE        NAME           CLASS    HOSTS                ADDRESS          PORTS   AGE
    test-namespace   test-ingress            ingress.my-org.com   192.168.48.189   80      4h40m

Deploy the IngressDNSRecord. The YAML file:

    # cat IngressDNSRecord.yaml
    apiVersion: multiclusterdns.kubefed.io/v1alpha1
    kind: IngressDNSRecord
    metadata:
      name: test-ingress
      namespace: test-namespace
    spec:
      hosts:
      - ingress.my-org.com
      recordTTL: 300

Apply it:

    kubectl apply -f IngressDNSRecord.yaml

View the DNSEndpoint resource generated automatically by the DNS Endpoint controller inside the kubefed controller:

    # kubectl get DNSEndpoint -n test-namespace ingress-test-ingress -o yaml
    ...
    spec:
      endpoints:
      - dnsName: ingress.my-org.com
        recordTTL: 300
        recordType: A
        targets:
        - 192.168.48.187
        - 192.168.48.189
    ...

There are two targets: the ADDRESS values of the Ingresses in the two clusters.

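On the test machine where dig was installed earlier, look up the name:

    # dig +short ingress.my-org.com
    192.168.48.189
    192.168.48.187

The name resolves to the EXTERNAL-IPs of the ingress controller Services in both clusters, which are also the ADDRESS values of the two clusters' Ingresses.

Test with curl from the same machine:

    # curl ingress.my-org.com
    This is:
    10.244.154.73
    hostname is:
    webecho-deployment-598f4ddd99-5dd65

    # curl ingress.my-org.com
    This is:
    10.244.189.135
    hostname is:
    webecho-deployment-598f4ddd99-rbzdf

The requests are load-balanced here as well. When one cluster is stopped, the name that resolved to two IPs resolves to only one, which again gives a degree of high availability. After the cluster is restarted, the name resolves to two IPs again.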

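Deploying a Custom CRD

First deploy the CRD and its CRD controller in every cluster.

Then use kubefedctl enable to let the federation manage the new resource type. For example, for a custom CRD named Guestbook:

    kubefedctl enable Guestbook

Check:

    # kubectl get ftc -A
    NAMESPACE                NAME                          AGE
    kube-federation-system   guestbooks.webapp.my.domain   11s

The original YAML for the Guestbook CR looked like this: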

    apiVersion: webapp.my.domain/v1
    kind: Guestbook
    metadata:
      name: guestbook-sample
    spec:
      cpu: "120m"
      memory: "200Mi"
      replicas: 2

Create the corresponding federated CR:

    # cat fguestbook.yaml
    apiVersion: types.kubefed.io/v1beta1
    kind: FederatedGuestbook
    metadata:
      name: test-guestbook
      namespace: test-namespace
    spec:
      template:
        spec:
          cpu: "120m"
          memory: "200Mi"
          replicas: 3
      placement:
        clusterSelector:
          matchLabels:
            cluster: app

The replica count has been changed to 3.
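Turning a plain CR into its federated form is mechanical: the kind gets a Federated prefix, the apiVersion becomes types.kubefed.io/v1beta1, the original spec moves under spec.template.spec, and a placement is added. The Python sketch below illustrates this with a hypothetical helper, to_federated. It is not a kubefed API; the kubefedctl federate command covered in the next section automates a similar conversion for resources that already exist.

```python
import copy
import json
from typing import Dict, List, Optional


def to_federated(obj: dict, namespace: str,
                 clusters: Optional[List[str]] = None,
                 match_labels: Optional[Dict[str, str]] = None) -> dict:
    """Wrap a plain resource into a Federated<Kind> manifest (hypothetical helper).

    Placement uses the explicit cluster list if given, otherwise a
    clusterSelector on match_labels.
    """
    placement: dict = {}
    if clusters is not None:
        placement["clusters"] = [{"name": c} for c in clusters]
    elif match_labels is not None:
        placement["clusterSelector"] = {"matchLabels": dict(match_labels)}
    return {
        "apiVersion": "types.kubefed.io/v1beta1",
        "kind": "Federated" + obj["kind"],
        "metadata": {"name": obj["metadata"]["name"], "namespace": namespace},
        "spec": {
            "template": {"spec": copy.deepcopy(obj["spec"])},
            "placement": placement,
        },
    }


if __name__ == "__main__":
    guestbook = {
        "apiVersion": "webapp.my.domain/v1",
        "kind": "Guestbook",
        "metadata": {"name": "guestbook-sample"},
        "spec": {"cpu": "120m", "memory": "200Mi", "replicas": 2},
    }
    fed = to_federated(guestbook, "test-namespace", match_labels={"cluster": "app"})
    fed["spec"]["template"]["spec"]["replicas"] = 3  # as in fguestbook.yaml
    # JSON is valid YAML, so this output can be saved and passed to kubectl apply -f.
    print(json.dumps(fed, indent=2))
```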

Deploy the federated CR:

    kubectl apply -f fguestbook.yaml

Both clusters now run 3 guestbook Pods:

    # kubectl --kubeconfig /etc/kubernetes/fed.conf --context kubernetes-context-host get po -A | grep guestbook
    test-namespace   test-guestbook-85df649f9f-j6vxj   1/1   Running   0     8m35s
    test-namespace   test-guestbook-85df649f9f-jrgdx   1/1   Running   0     8m35s
    test-namespace   test-guestbook-85df649f9f-mtbx6   1/1   Running   0     8m35s

    # kubectl --kubeconfig /etc/kubernetes/fed.conf --context kubernetes-context-member1 get po -A | grep guestbook
    test-namespace   test-guestbook-85df649f9f-jwqxp   1/1   Running   0     9m3s
    test-namespace   test-guestbook-85df649f9f-mngzk   1/1   Running   0     9m3s
    test-namespace   test-guestbook-85df649f9f-txdpp   1/1   Running   0     9m3s

Converting Existing k8s Resources into Federated Resources

Suppose a Deployment named centos was previously deployed in the test namespace. The kubefedctl federate command converts it into a federated resource. After the conversion, the centos Deployment is also deployed automatically to the test namespace in the other clusters. The commands:

    kubefedctl federate Namespace test --enable-type
    kubefedctl federate Deployment centos -n test --enable-type

The namespace containing the centos Deployment had not been converted either, so the Namespace is converted first and the Deployment second. The conversion automatically creates FederatedNamespace and FederatedDeployment resources:

    # kubectl get fns -A
    NAMESPACE   NAME   AGE
    test        test   4m51s

    # kubectl get fdeploy centos -n test
    NAME     AGE
    centos   4m10s

Both clusters now have the centos Deployment:

    # kubectl --kubeconfig /etc/kubernetes/fed.conf --context kubernetes-context-host get deploy -n test
    NAME     READY   UP-TO-DATE   AVAILABLE   AGE
    centos   1/1     1            1           7m16s

    # kubectl --kubeconfig /etc/kubernetes/fed.conf --context kubernetes-context-member1 get deploy -n test
    NAME     READY   UP-TO-DATE   AVAILABLE   AGE
    centos   1/1     1            1           7m16s