Setting up Seata with Nacos and MySQL on Docker

Nacos version: 2.1.1

Seata version: 1.5.2

MySQL version: 8.0.28

Test code: https://github.com/seata/seata-samples/tree/1.5.0

文章目录

- docker启动nacos

- docker启动seata

- seata配置修改

-

- 在空文件夹下新建 application.yaml文件

- 配置文件复制到seata中

- nacos配置修改

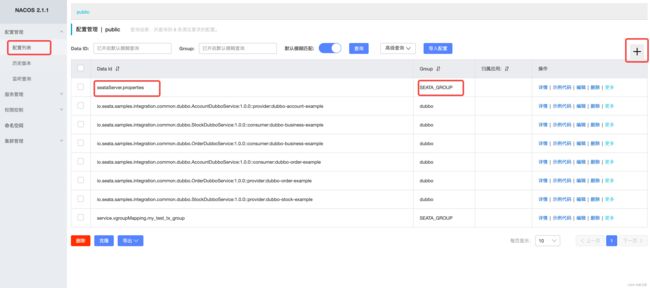

- 新建seataServer.properties配置

- 创建数据库表

- 重启seata

- 验证是否配置成功

- 测试

- 踩到的坑及解决

-

- 1. 运行测试的时候service.vgroupMapping.my_test_tx_group 找不到

- 2. 执行时程序172.17.0.2访问不到

- 效果展示

Start Nacos with Docker

docker pull nacos/nacos-server:v2.1.1-slim

docker run -itd --name nacos \
  -p 8848:8848 \
  -p 9848:9848 \
  -p 9849:9849 \
  -e PREFER_HOST_MODE=hostname \
  -e MODE=standalone \
  nacos/nacos-server:v2.1.1-slim

Start Seata with Docker

docker pull seataio/seata-server:1.5.2

docker run --name seata-server -p 8091:8091 -p 7091:7091 seataio/seata-server:1.5.2

Modify the Seata configuration

Create an application.yaml file in an empty folder

server:
  port: 7091
spring:
  application:
    name: seata-server
logging:
  config: classpath:logback-spring.xml
  file:
    path: ${user.home}/logs/seata
  extend:
    logstash-appender:
      destination: 127.0.0.1:4560
    kafka-appender:
      bootstrap-servers: 127.0.0.1:9092
      topic: logback_to_logstash
console:
  user:
    username: seata
    password: seata
seata:
  config:
    # support: nacos, consul, apollo, zk, etcd3
    type: nacos
    nacos:
      server-addr: host.docker.internal:8848
      namespace:
      group: SEATA_GROUP
      username:
      password:
      ##if use MSE Nacos with auth, mutex with username/password attribute
      #access-key: ""
      #secret-key: ""
      data-id: seataServer.properties
  registry:
    # support: nacos, eureka, redis, zk, consul, etcd3, sofa
    type: nacos
    nacos:
      application: seata-server
      server-addr: host.docker.internal:8848
      group: SEATA_GROUP
      namespace:
      cluster: default
      username:
      password:
      ##if use MSE Nacos with auth, mutex with username/password attribute
      #access-key: ""
      #secret-key: ""
  #  server:
  #    service-port: 8091 #If not configured, the default is '${server.port} + 1000'
  security:
    secretKey: SeataSecretKey0c382ef121d778043159209298fd40bf3850a017
    tokenValidityInMilliseconds: 1800000
    ignore:
      urls: /,/**/*.css,/**/*.js,/**/*.html,/**/*.map,/**/*.svg,/**/*.png,/**/*.ico,/console-fe/public/**,/api/v1/auth/login

Copy the configuration file into Seata

docker cp ./ seata-server:seata-server/resources/

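Modify the Nacos configuration

Create the seataServer.properties configuration

In Nacos, create a configuration with Data ID seataServer.properties in group SEATA_GROUP (the data-id and group set in application.yaml), with the following content: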

service.vgroupMapping.default-tx-group=default

service.enableDegrade=false

service.disableGlobalTransaction=false

#Transaction storage configuration, only for the server. The file, DB, and redis configuration values are optional.

store.mode=db

store.lock.mode=db

store.session.mode=db

#Used for password encryption

store.publicKey=

#These configurations are required if the `store mode` is `db`. If `store.mode,store.lock.mode,store.session.mode` are not equal to `db`, you can remove the configuration block.

store.db.datasource=druid

store.db.dbType=mysql

store.db.driverClassName=com.mysql.cj.jdbc.Driver

store.db.url=jdbc:mysql://host.docker.internal:3306/seata?serverTimezone=UTC&useSSL=false&useUnicode=true&characterEncoding=UTF-8

store.db.user=root

store.db.password=123456

store.db.minConn=5

store.db.maxConn=30

store.db.globalTable=global_table

store.db.branchTable=branch_table

store.db.distributedLockTable=distributed_lock

store.db.queryLimit=100

store.db.lockTable=lock_table

store.db.maxWait=5000

#Transaction rule configuration, only for the server

server.recovery.committingRetryPeriod=1000

server.recovery.asynCommittingRetryPeriod=1000

server.recovery.rollbackingRetryPeriod=1000

server.recovery.timeoutRetryPeriod=1000

server.maxCommitRetryTimeout=-1

server.maxRollbackRetryTimeout=-1

server.rollbackRetryTimeoutUnlockEnable=false

server.distributedLockExpireTime=10000

server.xaerNotaRetryTimeout=60000

server.session.branchAsyncQueueSize=5000

server.session.enableBranchAsyncRemove=false

#Transaction rule configuration, only for the client

client.rm.asyncCommitBufferLimit=10000

client.rm.lock.retryInterval=10

client.rm.lock.retryTimes=30

client.rm.lock.retryPolicyBranchRollbackOnConflict=true

client.rm.reportRetryCount=5

client.rm.tableMetaCheckEnable=true

client.rm.tableMetaCheckerInterval=60000

client.rm.sqlParserType=druid

client.rm.reportSuccessEnable=false

client.rm.sagaBranchRegisterEnable=false

client.rm.sagaJsonParser=fastjson

client.rm.tccActionInterceptorOrder=-2147482648

client.tm.commitRetryCount=5

client.tm.rollbackRetryCount=5

client.tm.defaultGlobalTransactionTimeout=60000

client.tm.degradeCheck=false

client.tm.degradeCheckAllowTimes=10

client.tm.degradeCheckPeriod=2000

client.tm.interceptorOrder=-2147482648

client.undo.dataValidation=true

client.undo.logSerialization=jackson

client.undo.onlyCareUpdateColumns=true

server.undo.logSaveDays=7

server.undo.logDeletePeriod=86400000

client.undo.logTable=undo_log

client.undo.compress.enable=true

client.undo.compress.type=zip

client.undo.compress.threshold=64k

#For TCC transaction mode

tcc.fence.logTableName=tcc_fence_log

tcc.fence.cleanPeriod=1h

#Log rule configuration, for client and server

log.exceptionRate=100

#Metrics configuration, only for the server

metrics.enabled=false

metrics.registryType=compact

metrics.exporterList=prometheus

metrics.exporterPrometheusPort=9898

#For details about configuration items, see https://seata.io/zh-cn/docs/user/configurations.html

#Transport configuration, for client and server

transport.type=TCP

transport.server=NIO

transport.heartbeat=true

transport.enableTmClientBatchSendRequest=false

transport.enableRmClientBatchSendRequest=true

transport.enableTcServerBatchSendResponse=false

transport.rpcRmRequestTimeout=30000

transport.rpcTmRequestTimeout=30000

transport.rpcTcRequestTimeout=30000

transport.threadFactory.bossThreadPrefix=NettyBoss

transport.threadFactory.workerThreadPrefix=NettyServerNIOWorker

transport.threadFactory.serverExecutorThreadPrefix=NettyServerBizHandler

transport.threadFactory.shareBossWorker=false

transport.threadFactory.clientSelectorThreadPrefix=NettyClientSelector

transport.threadFactory.clientSelectorThreadSize=1

transport.threadFactory.clientWorkerThreadPrefix=NettyClientWorkerThread

transport.threadFactory.bossThreadSize=1

transport.threadFactory.workerThreadSize=default

transport.shutdown.wait=3

transport.serialization=seata

transport.compressor=none
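The configuration above can be pasted into the Nacos console, or pushed through the Nacos HTTP Open API. Below is a minimal sketch of the second route. It assumes Nacos is reachable at 127.0.0.1:8848 from wherever the script runs and that authentication is disabled (the username and password in application.yaml are empty). The helper names build_publish_request and publish_config are made up for this example.

```python
from urllib import parse, request

# Assumption: the Nacos container publishes port 8848 on this machine.
NACOS_ADDR = "http://127.0.0.1:8848"


def build_publish_request(data_id, group, content, config_type="properties"):
    """Build a POST request for the Nacos v1 config endpoint."""
    body = parse.urlencode({
        "dataId": data_id,
        "group": group,
        "content": content,
        "type": config_type,
    }).encode("utf-8")
    return request.Request(
        f"{NACOS_ADDR}/nacos/v1/cs/configs", data=body, method="POST"
    )


def publish_config(data_id, group, content, config_type="properties"):
    """Send the request; Nacos answers with the text 'true' on success."""
    req = build_publish_request(data_id, group, content, config_type)
    with request.urlopen(req, timeout=5) as resp:
        return resp.read().decode("utf-8").strip() == "true"
```

With the properties saved to a local file, reading it and calling publish_config("seataServer.properties", "SEATA_GROUP", text) should create the same entry that the console would.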

Create the database tables

create database seata;

use seata;

-- -------------------------------- The script used when storeMode is 'db' --------------------------------

-- the table to store GlobalSession data

CREATE TABLE IF NOT EXISTS `global_table`

(

`xid` VARCHAR(128) NOT NULL,

`transaction_id` BIGINT,

`status` TINYINT NOT NULL,

`application_id` VARCHAR(32),

`transaction_service_group` VARCHAR(32),

`transaction_name` VARCHAR(128),

`timeout` INT,

`begin_time` BIGINT,

`application_data` VARCHAR(2000),

`gmt_create` DATETIME,

`gmt_modified` DATETIME,

PRIMARY KEY (`xid`),

KEY `idx_status_gmt_modified` (`status` , `gmt_modified`),

KEY `idx_transaction_id` (`transaction_id`)

) ENGINE = InnoDB

DEFAULT CHARSET = utf8mb4;

-- the table to store BranchSession data

CREATE TABLE IF NOT EXISTS `branch_table`

(

`branch_id` BIGINT NOT NULL,

`xid` VARCHAR(128) NOT NULL,

`transaction_id` BIGINT,

`resource_group_id` VARCHAR(32),

`resource_id` VARCHAR(256),

`branch_type` VARCHAR(8),

`status` TINYINT,

`client_id` VARCHAR(64),

`application_data` VARCHAR(2000),

`gmt_create` DATETIME(6),

`gmt_modified` DATETIME(6),

PRIMARY KEY (`branch_id`),

KEY `idx_xid` (`xid`)

) ENGINE = InnoDB

DEFAULT CHARSET = utf8mb4;

-- the table to store lock data

CREATE TABLE IF NOT EXISTS `lock_table`

(

`row_key` VARCHAR(128) NOT NULL,

`xid` VARCHAR(128),

`transaction_id` BIGINT,

`branch_id` BIGINT NOT NULL,

`resource_id` VARCHAR(256),

`table_name` VARCHAR(32),

`pk` VARCHAR(36),

`status` TINYINT NOT NULL DEFAULT '0' COMMENT '0:locked ,1:rollbacking',

`gmt_create` DATETIME,

`gmt_modified` DATETIME,

PRIMARY KEY (`row_key`),

KEY `idx_status` (`status`),

KEY `idx_branch_id` (`branch_id`),

KEY `idx_xid_and_branch_id` (`xid` , `branch_id`)

) ENGINE = InnoDB

DEFAULT CHARSET = utf8mb4;

CREATE TABLE IF NOT EXISTS `distributed_lock`

(

`lock_key` CHAR(20) NOT NULL,

`lock_value` VARCHAR(20) NOT NULL,

`expire` BIGINT,

primary key (`lock_key`)

) ENGINE = InnoDB

DEFAULT CHARSET = utf8mb4;

CREATE TABLE `undo_log` (

`id` bigint(20) NOT NULL AUTO_INCREMENT,

`branch_id` bigint(20) NOT NULL,

`xid` varchar(100) NOT NULL,

`context` varchar(128) NOT NULL,

`rollback_info` longblob NOT NULL,

`log_status` int(11) NOT NULL,

`log_created` datetime NOT NULL,

`log_modified` datetime NOT NULL,

`ext` varchar(100) DEFAULT NULL,

PRIMARY KEY (`id`),

UNIQUE KEY `ux_undo_log` (`xid`,`branch_id`)

) ENGINE=InnoDB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8;

INSERT INTO `distributed_lock` (lock_key, lock_value, expire) VALUES ('AsyncCommitting', ' ', 0);

INSERT INTO `distributed_lock` (lock_key, lock_value, expire) VALUES ('RetryCommitting', ' ', 0);

INSERT INTO `distributed_lock` (lock_key, lock_value, expire) VALUES ('RetryRollbacking', ' ', 0);

INSERT INTO `distributed_lock` (lock_key, lock_value, expire) VALUES ('TxTimeoutCheck', ' ', 0);

Restart Seata

docker restart seata-server

Verify the configuration
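One way to check the result without opening the console is to ask Nacos which instances are registered under the service name and group set in application.yaml (seata-server in SEATA_GROUP). The sketch below uses the Nacos v1 instance-list endpoint and assumes Nacos is reachable at 127.0.0.1:8848 with authentication disabled. The function names are illustrative only.

```python
import json
from urllib import parse, request

# Assumption: the Nacos container publishes port 8848 on this machine.
NACOS_ADDR = "http://127.0.0.1:8848"


def instance_list_url(service_name="seata-server", group_name="SEATA_GROUP"):
    """URL of the Nacos v1 instance-list query for one service."""
    query = parse.urlencode({"serviceName": service_name, "groupName": group_name})
    return f"{NACOS_ADDR}/nacos/v1/ns/instance/list?{query}"


def healthy_endpoints(payload):
    """Return 'ip:port' for every healthy instance in an instance-list response."""
    hosts = json.loads(payload).get("hosts", [])
    return [f'{h["ip"]}:{h["port"]}' for h in hosts if h.get("healthy")]


def registered_seata_servers():
    """Query Nacos and list the healthy seata-server endpoints."""
    with request.urlopen(instance_list_url(), timeout=5) as resp:
        return healthy_endpoints(resp.read().decode("utf-8"))
```

If registered_seata_servers() returns an empty list after the restart, Seata has most likely not registered, and the container log (docker logs seata-server) is the next place to look.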

Test

The test code is the official sample project provided by Seata:

https://github.com/seata/seata-samples/tree/1.5.0

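Pitfalls and fixes

1. service.vgroupMapping.my_test_tx_group cannot be found when running the test

In Nacos, create a new configuration whose Data ID is service.vgroupMapping.my_test_tx_group

and whose content is the single word default.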

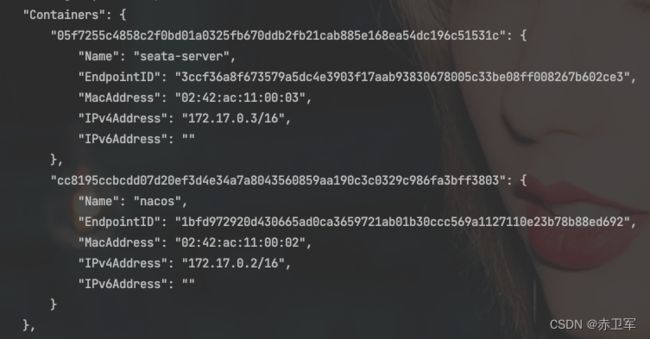
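The reason this key matters: the client looks up service.vgroupMapping.<its transaction service group> to learn which Seata cluster to use, and the samples use my_test_tx_group, while seataServer.properties only maps default-tx-group. The following is a simplified model of that lookup, not Seata's actual code. It treats the configuration as a flat set of key=value pairs, and parse_properties and resolve_cluster are hypothetical helpers.

```python
def parse_properties(text):
    """Parse simple key=value lines, skipping blank lines and '#' comments."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


def resolve_cluster(props, tx_service_group):
    """Map a transaction service group to a cluster name, or fail loudly."""
    key = f"service.vgroupMapping.{tx_service_group}"
    if key not in props:
        raise KeyError(f"{key} is not configured")
    return props[key]


props = parse_properties("service.vgroupMapping.default-tx-group=default")
# Only default-tx-group is mapped, so my_test_tx_group fails until it is added.
props["service.vgroupMapping.my_test_tx_group"] = "default"
cluster = resolve_cluster(props, "my_test_tx_group")
```

Here cluster ends up as default, which matches the cluster value under registry.nacos in application.yaml.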

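2. 172.17.0.2 is unreachable when the program runs

172.17.0.0/16 is Docker's default bridge network, which can be inspected with:

docker inspect bridge

The cause is that the host cannot reach the Docker container at that address.

For a fix, see this other post: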

https://blog.csdn.net/qq_23934475/article/details/127076421
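To tell quickly whether an address that Seata registered in Nacos is a container-internal one, it can be compared against the bridge subnet. This is a small sketch that assumes the bridge uses Docker's usual default of 172.17.0.0/16; the value reported by docker inspect bridge on your machine should be used instead if it differs. on_default_bridge is a hypothetical helper name.

```python
import ipaddress

# Assumption: Docker's usual default bridge subnet; confirm with `docker inspect bridge`.
DEFAULT_BRIDGE = ipaddress.ip_network("172.17.0.0/16")


def on_default_bridge(addr):
    """True if addr belongs to the bridge subnet, i.e. is container-internal."""
    return ipaddress.ip_address(addr) in DEFAULT_BRIDGE


internal = on_default_bridge("172.17.0.2")
```

An address for which this returns True is only routable where the bridge network itself is reachable, which is why a client process running on the host may fail to connect to it.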

I have recently been writing Spring Boot code: https://github.com/googalAmbition/hello-spring-boot