第七课:尚硅谷K8s学习- Prometheus、Grafana和EFK、证书年限

第七课:尚硅谷K8s学习- Prometheus、Grafana和EFK、证书年限

tags:

- golang

- 2019尚硅谷

categories:

- K8s

- Helm安装使用

- Prometheus

- Grafana

- HPA

- k8s资源限制

- 日志收集EFK

- 证书可用年限修改

文章目录

- 第七课:尚硅谷K8s学习- Prometheus、Grafana和EFK、证书年限

-

- 第一节 helm介绍

-

- 1.1 为什么需要helm

- 1.2 helm中几个概念

- 1.3 helm安装

- 第二节 自定义的chart

-

- 2.1 模板文件目录结构

- 2.2 自定义chart的示例

- 2.3 helm的基本操作

- 2.4 使用Helm部署dashboard

- 第三节 Prometheus资源限制

-

- 3.1 Prometheus相关介绍

- 3.2 Prometheus构建

- 3.3 安装后服务访问

- 3.4 k8s-资源限制补充

- 第四节 日志收集EFK和证书可用年限

-

- 4.1 helm安装EFK

- 4.2 证书可用年限修改

第一节 helm介绍

1.1 为什么需要helm

- 在没使用helm之前,向kubernetes部署应用,我们要依次部署deployment,service,configMap等,步骤较繁琐。况且随着很多项目微服务化,复杂的应用在容器中部署以及管理显得较为复杂。

- helm通过打包的方式,支持发布的版本管理和控制,很大程度上简化了Kubernetes应用的部署和管理

- Helm本质就是让K8s的应用管理(Deployment,Service 等)可配置,能动态生成。通过动态生成K8s资源清单文件(deployment.yaml, service.yaml) 。然后调用Kubectl自动执行K8s资源部署

1.2 helm中几个概念

- Helm是官方提供的类似于YUM的包管理器,是部署环境的流程封装。Helm 有两个重要的概念: Chart 和Release

- Chart: 一个Helm包,其中包含了运行一个应用所需要的镜像、依赖和资源定义等,还可能包含Kubernetes集群中的服务定义(可以理解为docker的image)

- Release: 在Kubernetes集群上运行的 Chart的一个实例。在同一个集群上,一个 Chart可以安装很多次。每次安装都会创建一个新的release(可以理解为docker的container实例)

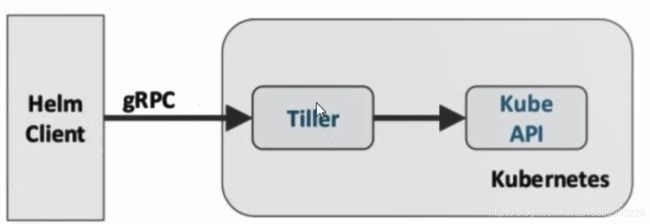

- Helm包含两个组件: Helm客户端和Tiller服务器,如下图所示

- Helm客户端负责chart和release的创建和管理以及和Tiller的交互。Tiller 服务器运行在Kubernetes集群中,它会处理Helm客户端的请求,与Kubernetes API Server交互

1.3 helm安装

- Helm 的安装十分简单。下载 helm命令行具到master节点.

- 安装github网站:https://github.com/helm/helm/releases/tag/v3.2.4

- 这里安装3.2.4版本,通过脚本的方式后查看版本。helm version

- 或者通过下载 Linux amd64 版本。

# 如无需更换版本,直接执行下载

wget https://get.helm.sh/helm-v3.2.4-linux-amd64.tar.gz

# 解压

tar -zxvf helm-v3.2.4-linux-amd64.tar.gz

# 进入到解压后的目录

cd linux-amd64/

cp helm /usr/local/bin/

# 赋予权限

chmod a+x /usr/local/bin/helm

# 查看版本

helm version

- Kubernetes 1.6 中开启了 RBAC ,权限控制变得简单了。Helm 也不必与 Kubernetes 做重复的事情,因此 Helm 3 彻底移除了 Tiller 。 移除 Tiller 之后,Helm 的安全模型也变得简单(使用 RBAC 来控制生产环境 Tiller 的权限非常不易于管理)。Helm 3 使用 kubeconfig 鉴权。集群管理员针对应用,可以设置任何所需级别的权限控制,而其他功能保持不变。

- 官网仓库: https://hub.helm.sh/ 这里是官方或别人的仓库。

第二节 自定义的chart

2.1 模板文件目录结构

- 上面仓库中的模板是别人做的。如何做自己的模板呢?

- 目录结构如下

.

├── Chart.yaml

├── templates

| ├── deployment.yaml

| └── service.yaml

├── values.yaml

- 一个基本的自定义chart的文件目录结构大概是如上:

- Chart.yaml: 定义当前chart的基本metadata, 比如name,tag啥的

- templates: 这个文件夹下放当前chart需要的一些yaml资源清单

- 资源清单支持变量模版语法

- values.yaml: 定义变量,可被template下的yaml资源清单使用

2.2 自定义chart的示例

# 1. 新建一个文件夹demo存放chart

mkdir demo && cd demo && mkdir templates

# 2. 新建Chart.yaml 必须包含name和version两个字段

cat << EOF > Chart.yaml

name: hello-world

version: 1.0.0

EOF

# 3. 新建./templates/deployment.yaml

# 模板目录必须是templates 注意image部分可以使用变量的模板语法,可以动态插入

cat << EOF > ./templates/deployment.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: hello-world

spec:

replicas: 1

selector:

matchLabels:

app: hello-world

template:

metadata:

labels:

app: hello-world

spec:

containers:

- name: hello-world

image: hub.qnhyn.com/library/myapp:v1

ports:

- containerPort: 80

protocol: TCP

EOF

# 4. 新建./templates/service.yaml

cat << EOF > ./templates/service.yaml

apiVersion: v1

kind: Service

metadata:

name: hello-world

spec:

type: NodePort

ports:

- port: 80

targetPort: 80

protocol: TCP

selector:

app: hello-world

EOF

# 5. 新建values.yaml 这个用来动态导入的image: {{ .Values.image.repository }}:{{ .Values.image.tag }}

# 这样做有个好处 只需要修改values.yaml文件 可以动态修改配置

#cat << EOF > values.yaml

#image:

# repository: lzw5399/tocgenerator

# tag: '951'

#EOF

# 将chart实例化成release

# 格式:helm install [RELEASE-NAME] [CHART-PATH]

helm install testname .

# 查看release

helm ls

# 查看历史

helm history <RELEASE_NAME>

# 安装成功!!

kubectl get pod

2.3 helm的基本操作

- 查看release

# 列出已经部署的Release

helm ls

# 查询一个特定的Release的状态

helm status <RELEASE_NAME>

# 查看被移除了,但保留了历史记录的release

helm ls --uninstalled

- 安装release

# 安装

helm install <RELEASE-NAME> <CHART-PATH>

# 命令行指定变量

helm install --set image.tag=233 <RELEASE-NAME> <CHART-PATH>

- 更新release

# 更新操作, flag是可选操作

helm upgrade [FLAG] <RELEASE> <CHART-PATH>

# 指定文件更新

helm upgrade -f myvalues.yaml -f override.yaml <RELEASE-NAME> <CHART-PATH>

# 命令行指定变量

helm upgrade --set foo=bar --set foo=newbar redis ./redis

- 卸载release

# 移除Release

helm uninstall <RELEASE_NAME>

# 移除Release,但保留历史记录

# 可以通过以下查看:helm ls --uninstalled

# 可以通过以下回滚:helm rollback [REVISION]

helm uninstall <RELEASE_NAME> --keep-history

- 回滚release

# 更新操作, flag是可选操作

helm rollback <RELEASE> [REVISION]

- 尝试运行但是不运行。测试下能否运行找BUG

helm install --dry-run <RELEASE-NAME> <CHART-PATH>

2.4 使用Helm部署dashboard

- helm拉取dashboard的chart

# 添加helmhub上的dashboard官方repo仓库

helm repo add k8s-dashboard https://kubernetes.github.io/dashboard

# 查看添加完成后的仓库

helm repo list

# 查询dashboard的chart

helm search repo kubernetes-dashboard

# 新建文件夹用于保存chart

mkdir dashboard-chart && cd dashboard-chart

# 拉取chart

helm pull k8s-dashboard/kubernetes-dashboard

# 此时会有一个压缩包,解压它

tar -zxvf kubernetes-dashboard-2.3.0.tgz

# 进入到解压后的文件夹

cd kubernetes-dashboard

- 配置dashboard的chart包配置

- 如果需要跟自定义的配置,可以通过values.yaml修改。安装好后,**看服务在哪个节点用那个节点的ip+nodeport端口访问。**节点ip通过ifconfig获取

# 源文件安装 这里不用修改yaml

helm install -f values.yaml --namespace kube-system kubernetes-dashboard .

kubectl get pod -n kube-system

kubectl describe pod kubernetes-dashboard-7c4f68b554-h5l62 -n kube-system

kubectl get svc -n kube-system

# 把svc类型改成NodePort 当然也可以通过ingress类型进行分享

kubectl edit svc kubernetes-dashboard -n kube-system

- 遗憾的是,由于证书问题,我们无法访问,需要在部署Dashboard时指定有效的证书,才可以访问。由于在正式环境中,并不推荐使用NodePort的方式来访问Dashboard。

- Ingress将开源的反向代理负载均衡器(如 Nginx、Apache、Haproxy等)与k8s进行集成,并可以动态的更新Nginx配置等,是比较灵活,更为推荐的暴露服务的方式。这里我们不使用。

- 配置一个拥有完整权限的token,输入到浏览器中。token如果配置错误,浏览器页面一直显示500

kubectl create serviceaccount dashboard-admin -n kube-system

kubectl create clusterrolebinding dashboard-cluster-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

# 执行完以上操作后,由于管理用户的名称为dashboard-admin,生成的对应的secret的值则为dashboard-admin-token-随机字符串

kubectl get secret -n=kube-system |grep dashboard-admin-token

kubectl describe -n=kube-system secret dashboard-admin-token-fk95t

第三节 Prometheus资源限制

3.1 Prometheus相关介绍

- Prometheus github 地址: https://github.com/prometheus-operator/kube-prometheus

- Prometheus的方案中已经用到了MetricServer组件去收集数据。

- Prometheus组件说明

- MetricServer:是kubernetes 集群资源使用情况的聚合器,收集数据给kubernetes 集群内使用,如kubect,hpa,scheduler等。

- PrometheusOperator:是一个系统监测和警报工具箱,用来存储监控数据。

- NodeExporter:用于各node的关键度量指标状态数据。

- KubeStateMetrics:收集kubernetes 集群内资源对象数据,制定告警规则。

- Prometheus:采用pull方式收集apiserver, scheduler, controller-manager, kubelet组件数据, 通过http协议传输。

- Grafana:是可视化数据统计和监控平台。

3.2 Prometheus构建

mkdir -p /root/plugin/Prometheus && cd /root/plugin/Prometheus

git clone https://github.com/prometheus-operator/kube-prometheus.git

cd kube-prometheus/manifests

# 修改grafana-service.yaml文件, 使用nodepode方式访问grafana

vim grafana-service.yaml

apiVersion: v1

kind: Service

metadata:

labels:

app: grafana

name: grafana

namespace: monitoring

spec:

type: NodePort # 添加内容

ports:

- name: http

port: 3000

targetPort: http

nodePort: 30100 # 添加内容

selector:

app: grafana

# 修改prometheus-service.yaml,改为nodepode

apiVersion: v1

kind: Service

metadata:

labels:

prometheus: k8s

name: prometheus-k8s

namespace: monitoring

spec:

type: NodePort #添加内容

ports:

- name: web

port: 9090

targetPort: web

nodePort: 30200 #添加内容

selector:

app: prometheus

prometheus: k8s

sessionAffinity: ClientIP

# 修改alertmanager-service.yaml,改为nodepode

apiVersion: v1

kind: Service

metadata:

labels:

alertmanager: main

name: alertmanager-main

namespace: monitoring

spec:

type: NodePort #添加内容

ports:

- name: web

port: 9093

targetPort: web

nodePort: 30300 #添加内容

selector:

alertmanager: main

app: alertmanager

sessionAffinity: ClientIP

# 直接部署 docker版本19.03-ce这里k8s版本选择1.15.1 之前的1.18.0会有一个就绪探针的报错和Grafana的磁盘挂载报错 这里先做出效果以后在研究

kubectl create -f manifests/setup

kubectl create -f manifests/

# 查看pod是否完成

kubectl get pod -n monitoring

NAME READY STATUS RESTARTS AGE

alertmanager-main-0 2/2 Running 0 103s

alertmanager-main-1 2/2 Running 0 83s

alertmanager-main-2 2/2 Running 0 76s

grafana-7dc5f8f9f6-xb5ld 1/1 Running 0 109s

kube-state-metrics-5cbd67455c-rtgtm 4/4 Running 0 83s

node-exporter-9mr8k 2/2 Running 0 109s

node-exporter-jdmcd 2/2 Running 0 109s

node-exporter-vk769 2/2 Running 0 109s

prometheus-adapter-668748ddbd-8lxqx 1/1 Running 0 109s

prometheus-k8s-0 3/3 Running 1 74s

prometheus-k8s-1 3/3 Running 1 74s

prometheus-operator-7447bf4dcb-g4rbf 1/1 Running 0 110s

# 安装完之后测试

kubectl top node

kubectl top pod

3.3 安装后服务访问

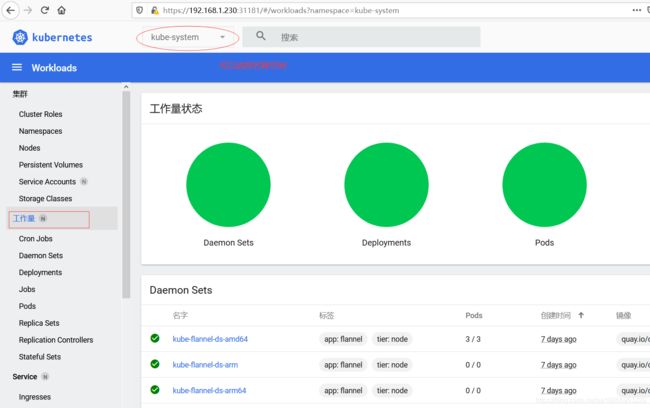

-

访问prometheus。根据上面配置 prometheus对应的nodeport端口为30200,访问http://MasterIP:30200

-

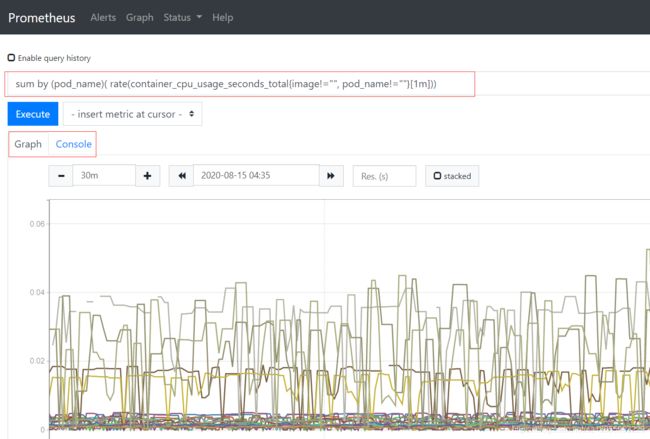

通过Prometheus语言。获取CPU访问的请求数目。也可以自动画出时间波线图。

sum by (pod_name)( rate(container_cpu_usage_seconds_total{image!="", pod_name!=""}[1m]))

-

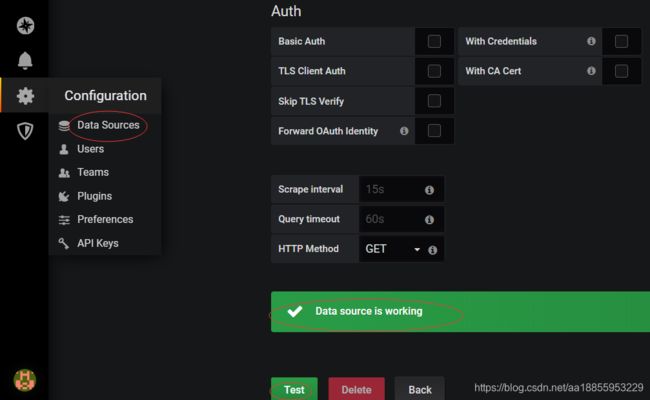

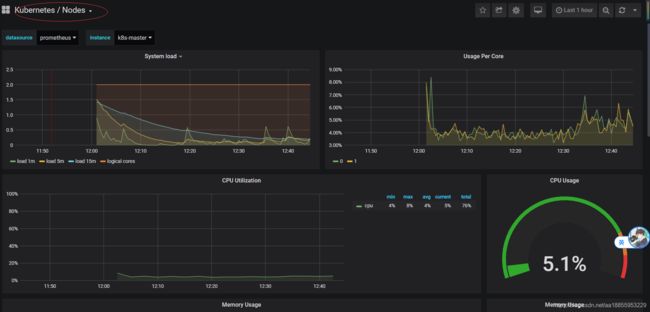

访问Grafana。访问http://MasterIP:30100 用户名和密码都是admin

-

Horizontal Pod Autoscaling可以根据CPU利用率自动伸缩一个Replication Controller、Deployment 或者Replica Set中的Pod数量

# 安装HPA拓展 复制到其他节点上

scp hpa-example.tar root@k8s-node2:~/

docker load -i hpa-example.tar

# 创建一个php-apache Pod

kubectl run php-apache --image=gcr.io/google_containers/hpa-example --requests=cpu=200m --expose --port=80

# 创建HPA控制器 cpu控制在50% 每个节点最少一个最多十个

kubectl autoscale deployment php-apache --cpu-percent=50 --min=1 --max=10

[root@k8s-master manifests]# kubectl get hpa

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

php-apache Deployment/php-apache 0%/50% 1 10 1 52s

# 增加负载,查看负载节点数目

kubectl run -i --tty load-generator --image=busybox /bin/sh

# 进入之后无限访问

while true; do wget -q -O- http://php-apache.default.SVC.cluster.local; done

# 新开一个窗口

kubectl get hpa

# 回收时候比创建时候慢 防止突然回收压死仅存的节点

3.4 k8s-资源限制补充

- Kubernetes对资源的限制实际上是通过cgroup来控制的,cgroup是容器的一组用来控制内核如何运行进程的相关属性集合,针对内存、CPU各种设备都有对应的cgroup。

- 默认情况下,Pod运行没有CPU和内存的限制,这就意味着系统中的任何pod将能够像执行该pod所在的节点一样,消耗足够多的CPU和内存,一般会针对某些应用的Pod资源进行资源限制,这个资源限制是通过resources的requests和limits来实现。

- requests要分配的资源,limits 为最高请求的资源值。可以简单理解为初始值和最大值

spec:

containers:

- image: xxx

name: xxxx

resources:

limits: #最大

cpu: "4"

memory: 2Gi

requests: #初始

cpu: 250m

memory: 250Mi

- 基于namespace-计算资源配额yaml

apiVersion: v1

kind: ResourceQuota

metadata:

name: computer-resources

namespace: spark-cluster

spec:

hard:

pods: "20"

requests.cpu: "20"

requests.memory: 100Gi

limits.cpu: "40"

limits.memory: 200Gi

- 基于namespace-配置对象数量配额限制

apiVersion: v1

kind: ResourceQuota

metadata:

name: object-counts

namespace: spark-cluster

spec:

hard:

configmaps: "10"

persistentvolumeclaims: "4"

replicationcontrollers: "20"

secrets: "10"

services: "10"

services.loadbalancers: "2"

- 基于namespace-配置CPU和内存LimitRange

- default 即limit的值

- defaultRequest即request的值

apiVersion: v1

kind: LimitRange

metadata:

name: mem-limit-range

spec:

limits:

- default:

memory: 50Gi

cpu: 5

defaultRequest:

memory: 1Gi

cpu: 1

type: Container

第四节 日志收集EFK和证书可用年限

4.1 helm安装EFK

- 当前节点的Pod的日志信息所在目录:cd /var/log/containers/

- 这里先导入镜像, 例:

docker load -i elasticsearch-oss.tar

docker load -i fluentd-elasticsearch.tar

docker load -i kibana.tar

# 添加源helm2.14.1 k8s1.15.1 docker 19.03

cp linux-amd64/helm /usr/local/bin/

vim helm-rbac.yaml

apiVersion: v1

kind: ServiceAccount

metadata:

name: tiller

namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: ClusterRoleBinding

metadata:

name: tiller

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: cluster-admin

subjects:

- kind: ServiceAccount

name: tiller

namespace: kube-system

helm init --service-account tiller --skip-refresh

helm init --upgrade -i registry.cn-hangzhou.aliyuncs.com/google_containers/tiller:v2.14.1 --service-account=tiller --stable-repo-url https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts

helm repo add stable http://mirror.azure.cn/kubernetes/charts/

helm repo add incubator http://storage.googleapis.com/kubernetes-charts-incubator

helm repo update

# helm es和kibana 的版本,必须一致

helm search incubator/elasticsearch -l

helm fetch incubator/elasticsearch --version 1.10.2

helm fetch stable/fluentd-elasticsearch --version 2.0.7

helm fetch stable/kibana --version 0.14.8

# 先下载镜像

docker pull docker.elastic.co/elasticsearch/elasticsearch-oss:6.4.2

docker pull gcr.io/google-containers/fluentd-elasticsearch:v2.3.2

docker pu1l docker.elastic.co/kibana/kibana-oss:6.4.2

# 部署Elasticsearch 修改value.yaml,因为机器16G带不动所以设置为一。降低开销

MINIMUM_MASTER_NODES: "1"

client replicas: 1

master replicas: 1 enabled: false

data replicas: 1 enabled: false

# 当前目录下运行

kubectl create namespace efk

helm install --name els1 --namespace=efk -f values.yaml .

kubectl get pod -n efk

kubectl run cirror-$RANDOM --rm -it --image=cirros -- /bin/sh

# 看一下地址

kubectl get svc -n efk

# r这里看到三个地址 没有问题

curl Elasticsearch:Port/_cat/nodes # curl 10.102.204.238:9200/_cat/nodes

# 部署Fluentd

tar -zvxf fluentd-elasticsearch-2.0.7.tgz

# 修改values.yaml

host: '10.102.204.238'

helm install --name flu1 --namespace=efk -f values.yaml .

# 部署kibana

tar -zvxf kibana-0.14.8.tgz

# 修改vlues.yaml

elasticsearch.url: http://10.102.204.238:9200

helm install --name kib1 --namespace=efk -f values.yaml .

kubectl get pod -n efk

kubectl get svc -n efk

# 修改ClusterIP为NodePort方式可以在web直接访问

kubectl edit svc kib1-kibana -n efk

type: NodePort

# 通过web访问 可以通过时间检索和切片查看

http://192.168.1.10:31430/

4.2 证书可用年限修改

- 查看证书的可用时间

# 进入证书目录

cd /etc/kubernetes/pki

# 查看apiserver证书的可用时间

openssl x509 -in apiserver.crt -text -noout # 一年

openssl x509 -in ca.crt -text -noout # 十年

- 修改kubeadm源码,去修改可用年限。

mkdir ~/data && cd ~/data

# 下载golang源码: https://studygolang.com/dl go1.12.9.linux-amd64.tar.gz

tar -zvxf go1.12.9.linux-amd64.tar.gz -C /usr/local

vim /etc/profile

export PATH=$PATH:/usr/local/go/bin

source /etc/profile

go version

# 复制k8s源代码到本地

git clone https://github.com/kubernetes/kubernetes

# 切换分支

git checkout -b remotes/origin/release-1.15.1 v1.15.1

# 修改Kubeadm源码包更新证书策略

vim cmd/kubeadm/app/util/pkiutil/pki_helpers.go # kubeadm 1.14至今

# 添加创建证书模板常量

const duration365d = time.Hour * 24 * 365

NotAfter:time.Now().Add(duration365d).UTC(),

# 保存退出后编译

make WHAT=cmd/kubeadm GOFLAGS=-v

cp output/bin/kubeadm /root/

# 备份下

cp /usr/bin/kubeadm /usr/bin/kubeadm.old

cp kubeadm /usr/bin/

chmod a+X /usr/bin/kubeadm

cd /etc/kubernetes/

cp -r pki/ pki.old

# 重新生成所有证书文件

kubeadm alpha certs renew all --config=/usr/local/install-k8s/core/kubeadm-config.yaml

# 重新查看证书时间

cd /etc/kubernetes/pki

openssl x509 -in apiserver.crt -text -noout # 十年