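Binary installation of Kubernetes (k8s) v1.24.0, IPv4

Credit: "Binary installation of Kubernetes (k8s) v1.24.0, IPv4/IPv6 dual stack" by 小陈运维

Kubernetes 1.24 brings substantial changes; for details see: Kubernetes 1.24 removals and deprecations | Kubernetes

1. Base system environment for k8s

Hosts and deployed software:

To save resources, the components meant for Lb01 are deployed on Node01 and those meant for Lb02 on Node02. Installing the k8s cluster and the etcd cluster on separate machines is recommended.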

| Host name | IP address | Role | Software |
|---|---|---|---|
| Master01 | 10.4.7.11 | master node | kube-apiserver, kube-controller-manager, kube-scheduler, etcd, kubelet, kube-proxy, nfs-client |
| Master02 | 10.4.7.12 | master node | kube-apiserver, kube-controller-manager, kube-scheduler, etcd, kubelet, kube-proxy, nfs-client |
| Master03 | 10.4.7.21 | master node | kube-apiserver, kube-controller-manager, kube-scheduler, etcd, kubelet, kube-proxy, nfs-client |
| Node01 | 10.4.7.22 | worker node | kubelet, kube-proxy, nfs-client, haproxy, keepalived |
| Node02 | 10.4.7.200 | worker node | kubelet, kube-proxy, nfs-client, haproxy, keepalived |
|  | 10.4.7.10 | VIP |  |

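Host requirements:

CentOS 8 or later is required, because k8s v1.24.0 has compatibility problems on CentOS 7 that leave some fields unrecognised; see the post "kubelet Error getting node" (help request) on Jerry00713's CSDN blog.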

| Software | Version |
|---|---|
| Kernel | 5.17.5-1.el8.elrepo or later |
| CentOS | v8 (v7 is affected by the compatibility problem noted above) |
| kube-apiserver, kube-controller-manager, kube-scheduler, kubelet, kube-proxy | v1.24.0 |
| etcd | v3.5.4 |
| containerd | v1.6.4 |
| cfssl | v1.6.1 |
| cni | v1.1.1 |
| crictl | v1.23.0 |
| haproxy | v1.8.27 |
| keepalived | v2.1.5 |

网段:

宿主机:10.4.7.0/24

k8s 集群IP(clusterIP):192.168.0.0/16

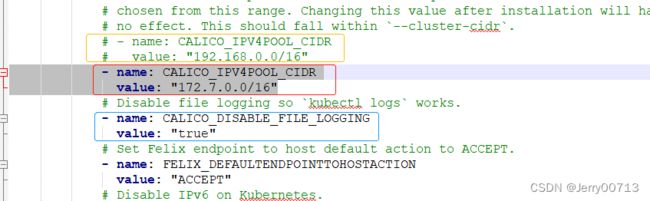

k8s pod集群(cluster-cidr):172.7.0.0/16
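The first address of the Service range matters later on: it is the 192.168.0.1 that appears in the apiserver certificate. A minimal sketch of that calculation in POSIX shell, assuming an IPv4 CIDR; the function name `first_service_ip` is hypothetical and not part of any tool used in this article:

```shell
#!/bin/sh
# Hypothetical helper: print the first host address of an IPv4 CIDR,
# i.e. the network address plus one.
first_service_ip() {
    cidr=$1
    ip=${cidr%/*}          # part before the slash
    prefix=${cidr#*/}      # part after the slash
    old_ifs=$IFS
    IFS=.
    set -- $ip             # split the dotted quad into $1..$4
    IFS=$old_ifs
    addr=$(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
    mask=$(( (4294967295 << (32 - prefix)) & 4294967295 ))
    first=$(( (addr & mask) + 1 ))
    printf '%d.%d.%d.%d\n' \
        $(( (first >> 24) & 255 )) $(( (first >> 16) & 255 )) \
        $(( (first >> 8) & 255 )) $(( first & 255 ))
}

first_service_ip 192.168.0.0/16
```

Running the same function on a different Service range gives the address to put into the certificate's host list if you change the range.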

Aliases:

(all) stands for k8s-master01, k8s-master02, k8s-master03, k8s-node01, k8s-node02

(k8s_all) stands for k8s-master01, k8s-master02, k8s-master03, k8s-node01, k8s-node02

(k8s_master) stands for k8s-master01, k8s-master02, k8s-master03

(k8s_node) stands for k8s-node01, k8s-node02

(Lb) Normally two additional machines would act as load balancers and hold the VIP; to save resources, this article deploys those roles on k8s-node01 and k8s-node02

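1.1. Configure IP addresses (all)

Omitted; configure the host IPs yourself.

1.2. Set the hostname (all)

Run the matching command on each host; the leading IP only identifies the host and is not part of the command.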

10.4.7.11 hostnamectl set-hostname k8s-master01

10.4.7.12 hostnamectl set-hostname k8s-master02

10.4.7.21 hostnamectl set-hostname k8s-master03

10.4.7.22 hostnamectl set-hostname k8s-node01

10.4.7.200 hostnamectl set-hostname k8s-node02
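If the same script is pushed to every machine, the IP-to-hostname mapping above can be kept in one place. A minimal sketch, assuming the addresses listed in this article; `hostname_for_ip` is a hypothetical helper name, and the loop only prints the commands so they can be reviewed before anything is changed:

```shell
#!/bin/sh
# Hypothetical helper: map a host IP from the table above to its hostname.
hostname_for_ip() {
    case "$1" in
        10.4.7.11)  echo k8s-master01 ;;
        10.4.7.12)  echo k8s-master02 ;;
        10.4.7.21)  echo k8s-master03 ;;
        10.4.7.22)  echo k8s-node01 ;;
        10.4.7.200) echo k8s-node02 ;;
        *)          echo "unknown IP: $1" >&2; return 1 ;;
    esac
}

# Print (rather than run) the command for each host.
for ip in 10.4.7.11 10.4.7.12 10.4.7.21 10.4.7.22 10.4.7.200; do
    echo "$ip: hostnamectl set-hostname $(hostname_for_ip "$ip")"
done
```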

1.3. Configure the yum repositories (all)

1. Edit /etc/yum.repos.d/CentOS-Linux-BaseOS.repo

Comment out the mirrorlist line and change baseurl to the Aliyun mirror addresses:

[BaseOS]

name=CentOS-$releasever - Base

#mirrorlist=http://mirrorlist.centos.org/?release=$releasever&arch=$basearch&repo=BaseOS&infra=$infra

baseurl=https://mirrors.aliyun.com/centos/$releasever-stream/BaseOS/$basearch/os/
        http://mirrors.aliyuncs.com/centos/$releasever-stream/BaseOS/$basearch/os/
        http://mirrors.cloud.aliyuncs.com/centos/$releasever-stream/BaseOS/$basearch/os/

gpgcheck=1

enabled=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-centosofficial

2. Edit /etc/yum.repos.d/CentOS-Linux-AppStream.repo

Comment out the mirrorlist line and change baseurl to the Aliyun mirror addresses:

[AppStream]

name=CentOS-$releasever - AppStream

#mirrorlist=http://mirrorlist.centos.org/?release=$releasever&arch=$basearch&repo=AppStream&infra=$infra

baseurl=https://mirrors.aliyun.com/centos/$releasever-stream/AppStream/$basearch/os/
        http://mirrors.aliyuncs.com/centos/$releasever-stream/AppStream/$basearch/os/
        http://mirrors.cloud.aliyuncs.com/centos/$releasever-stream/AppStream/$basearch/os/

gpgcheck=1

enabled=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-centosofficial

3. Edit /etc/yum.repos.d/CentOS-Linux-Extras.repo

Comment out the mirrorlist line and change baseurl to the Aliyun mirror addresses:

[extras]

name=CentOS-$releasever - Extras

#mirrorlist=http://mirrorlist.centos.org/?release=$releasever&arch=$basearch&repo=extras&infra=$infra

baseurl=https://mirrors.aliyun.com/centos/$releasever-stream/extras/$basearch/os/
        http://mirrors.aliyuncs.com/centos/$releasever-stream/extras/$basearch/os/
        http://mirrors.cloud.aliyuncs.com/centos/$releasever-stream/extras/$basearch/os/

gpgcheck=1

enabled=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-centosofficial

yum clean all && yum makecache

As a script:

sed -e 's|^mirrorlist=|#mirrorlist=|g' -e '/#baseurl=/d' -e '/#mirrorlist/a\baseurl=https://mirrors.aliyun.com/centos/$releasever-stream/BaseOS/$basearch/os/\n http://mirrors.aliyuncs.com/centos/$releasever-stream/BaseOS/$basearch/os/\n http://mirrors.cloud.aliyuncs.com/centos/$releasever-stream/BaseOS/$basearch/os/' -i /etc/yum.repos.d/CentOS-Linux-BaseOS.repo

sed -e 's|^mirrorlist=|#mirrorlist=|g' -e '/#baseurl=/d' -e '/#mirrorlist/a\baseurl=https://mirrors.aliyun.com/centos/$releasever-stream/AppStream/$basearch/os/\n http://mirrors.aliyuncs.com/centos/$releasever-stream/AppStream/$basearch/os/\n http://mirrors.cloud.aliyuncs.com/centos/$releasever-stream/AppStream/$basearch/os/' -i /etc/yum.repos.d/CentOS-Linux-AppStream.repo

sed -e 's|^mirrorlist=|#mirrorlist=|g' -e '/#baseurl=/d' -e '/#mirrorlist/a\baseurl=https://mirrors.aliyun.com/centos/$releasever-stream/extras/$basearch/os/\n http://mirrors.aliyuncs.com/centos/$releasever-stream/extras/$basearch/os/\n http://mirrors.cloud.aliyuncs.com/centos/$releasever-stream/extras/$basearch/os/' -i /etc/yum.repos.d/CentOS-Linux-Extras.repo

yum clean all && yum makecache

1.4. Install some essential tools (all)

yum -y install vim wget jq psmisc net-tools nfs-utils telnet yum-utils device-mapper-persistent-data lvm2 git network-scripts tar curl

1.5. Download the required tools

mkdir /opt/src/;cd /opt/src/

1. Download the kubernetes 1.24.+ binary package (on master01, which then sends it to every k8s node)

GitHub binary package download page: https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/CHANGELOG-1.24.md

wget https://dl.k8s.io/v1.24.0/kubernetes-server-linux-amd64.tar.gz

2. Download the etcd binary package (etcd nodes; in this article etcd runs on k8s-master01, k8s-master02 and k8s-master03)

GitHub binary package download page: https://github.com/etcd-io/etcd/releases

wget https://github.com/etcd-io/etcd/releases/download/v3.5.4/etcd-v3.5.4-linux-amd64.tar.gz

3. docker-ce binary package (k8s_all)

Binary package download page: https://download.docker.com/linux/static/stable/x86_64/

Version 20.10.+ is required here.

wget https://download.docker.com/linux/static/stable/x86_64/docker-20.10.14.tgz

4. containerd binary package (k8s_all)

GitHub download page: https://github.com/containerd/containerd/releases

Download the containerd package that bundles the CNI plugins.

wget https://github.com/containerd/containerd/releases/download/v1.6.4/cri-containerd-cni-1.6.4-linux-amd64.tar.gz

5. Download the cfssl binaries (master01, which creates the certificates and sends them to every k8s node)

GitHub binary package download page: https://github.com/cloudflare/cfssl/releases

wget https://github.com/cloudflare/cfssl/releases/download/v1.6.1/cfssl_1.6.1_linux_amd64

wget https://github.com/cloudflare/cfssl/releases/download/v1.6.1/cfssljson_1.6.1_linux_amd64

wget https://github.com/cloudflare/cfssl/releases/download/v1.6.1/cfssl-certinfo_1.6.1_linux_amd64

6. CNI plugin download (k8s_all)

GitHub download page: https://github.com/containernetworking/plugins/releases

wget https://github.com/containernetworking/plugins/releases/download/v1.1.1/cni-plugins-linux-amd64-v1.1.1.tgz

7. crictl client binary download (k8s_all)

GitHub download page: https://github.com/kubernetes-sigs/cri-tools/releases

wget https://github.com/kubernetes-sigs/cri-tools/releases/download/v1.23.0/crictl-v1.23.0-linux-amd64.tar.gz

1.6. Disable the firewall and SELinux (all)

systemctl disable --now firewalld

setenforce 0

sed -i 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/selinux/config

Disabling the firewall and SELinux as a one-liner:

systemctl stop firewalld.service;systemctl disable firewalld.service;sed -i -r 's#(^SELIN.*=)enforcing#\1disabled#g' /etc/selinux/config;setenforce 0

1.7. Disable swap (all)

sed -ri 's/.*swap.*/#&/' /etc/fstab

swapoff -a && sysctl -w vm.swappiness=0

cat /etc/fstab

# /dev/mapper/centos-swap swap swap defaults 0 0

1.8. Disable NetworkManager and enable network (k8s_all; optional on Lb)

systemctl disable --now NetworkManager

systemctl start network && systemctl enable network

1.9. Synchronise time (optional on Lb)

# Pick one machine as the time server (master01 works too); it synchronises against the Aliyun NTP pool

yum install chrony -y

cat > /etc/chrony.conf << EOF

pool ntp.aliyun.com iburst

driftfile /var/lib/chrony/drift

makestep 1.0 3

rtcsync

allow 10.4.7.0/24

local stratum 10

keyfile /etc/chrony.keys

leapsectz right/UTC

logdir /var/log/chrony

EOF

systemctl restart chronyd

systemctl enable chronyd

# Clients: the clocks of all k8s-master and k8s-node machines must agree

yum install chrony -y

vim /etc/chrony.conf

cat /etc/chrony.conf | grep -v "^#" | grep -v "^$"

pool 10.4.7.11 iburst

driftfile /var/lib/chrony/drift

makestep 1.0 3

rtcsync

keyfile /etc/chrony.keys

leapsectz right/UTC

logdir /var/log/chrony

systemctl restart chronyd ; systemctl enable chronyd

# Client installation as a one-liner

yum install chrony -y ; sed -i "s#2.centos.pool.ntp.org#10.4.7.11#g" /etc/chrony.conf ; systemctl restart chronyd ; systemctl enable chronyd
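The one-liner above only works while the stock `2.centos.pool.ntp.org` line is still present. A minimal sketch of a variant that rewrites whatever `pool` or `server` lines exist, assuming a plain chrony.conf; `point_chrony_at` is a hypothetical helper name, and the demonstration runs on a scratch file rather than /etc/chrony.conf:

```shell
#!/bin/sh
# Hypothetical helper: point every "pool"/"server" line of a chrony.conf
# at one internal time server. Writes to a temp file, then replaces the original.
point_chrony_at() {
    conf=$1
    server=$2
    tmp="${conf}.tmp.$$"
    sed -e "s/^pool .*/pool ${server} iburst/" \
        -e "s/^server .*/server ${server} iburst/" "$conf" > "$tmp" &&
        mv "$tmp" "$conf"
}

# Demonstration on a scratch copy.
demo=$(mktemp)
printf '%s\n' 'pool 2.centos.pool.ntp.org iburst' 'driftfile /var/lib/chrony/drift' > "$demo"
point_chrony_at "$demo" 10.4.7.11
cat "$demo"
rm -f "$demo"
```

On a real client the call would be `point_chrony_at /etc/chrony.conf 10.4.7.11`, followed by the chronyd restart shown above.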

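# Verify on a client

chronyc sources -v

1.10. Configure ulimit (k8s_all; optional on Lb)

ulimit -SHn 655350

cat >> /etc/security/limits.conf <

1.11. Configure passwordless SSH login (master01 node)

On master01, generate an SSH key pair and copy the public key to all nodes. SSHPASS is the password shared by all nodes, StrictHostKeyChecking=no suppresses the interactive yes/no host-key prompt, and sshpass logs in automatically to distribute the key.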

yum install -y sshpass

ssh-keygen -f /root/.ssh/id_rsa -P ''

export IP="10.4.7.12 10.4.7.21 10.4.7.22 10.4.7.200"

export SSHPASS=123456

for HOST in $IP;do sshpass -e ssh-copy-id -o StrictHostKeyChecking=no $HOST;done

1.12. Add and enable the ELRepo repository (optional on Lb)

# Configure the repository for RHEL 8 or CentOS 8

yum install -y https://www.elrepo.org/elrepo-release-8.el8.elrepo.noarch.rpm

# List the available packages

yum --disablerepo="*" --enablerepo="elrepo-kernel" list available

1.13. Upgrade the kernel to 4.18 or later (optional on Lb)

# Show the kernel currently set as default

[root@localhost ~]# grubby --default-kernel

/boot/vmlinuz-4.18.0-348.el8.x86_64

# List the installed kernels

[root@localhost ~]# rpm -qa | grep kernel

kernel-modules-4.18.0-348.el8.x86_64

kernel-tools-4.18.0-348.el8.x86_64

kernel-4.18.0-348.el8.x86_64

kernel-core-4.18.0-348.el8.x86_64

kernel-tools-libs-4.18.0-348.el8.x86_64

[root@localhost yum.repos.d]#

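# Install the latest kernel

# The mainline stable kernel (kernel-ml) is used here; use kernel-lt if you want the long-term support branch

[root@localhost ~]# yum --enablerepo=elrepo-kernel install kernel-ml -y

# List the installed kernels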

[root@localhost ~]# rpm -qa | grep kernel

kernel-modules-4.18.0-348.el8.x86_64

kernel-tools-4.18.0-348.el8.x86_64

kernel-4.18.0-348.el8.x86_64

kernel-ml-core-5.18.3-1.el8.elrepo.x86_64

kernel-ml-5.18.3-1.el8.elrepo.x86_64 (this is the kernel just installed with yum)

kernel-core-4.18.0-348.el8.x86_64

kernel-tools-libs-4.18.0-348.el8.x86_64

kernel-ml-modules-5.18.3-1.el8.elrepo.x86_64

# Show the default kernel

grubby --default-kernel

/boot/vmlinuz-5.16.7-1.el8.elrepo.x86_64

# If it is not the newest one, set it explicitly

grubby --set-default /boot/vmlinuz-<your kernel version>.x86_64

grubby --set-default "/boot/vmlinuz-5.18.3-1.el8.elrepo.x86_64"

# Reboot for the change to take effect

reboot
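After the reboot it is worth confirming that the running kernel really meets the 4.18 minimum named in the heading. A minimal sketch, assuming release strings of the usual `major.minor.patch-...` form; `kernel_at_least` is a hypothetical helper name:

```shell
#!/bin/sh
# Hypothetical helper: succeed if a kernel release string is at least major.minor.
kernel_at_least() {
    release=$1             # e.g. 5.18.3-1.el8.elrepo.x86_64
    want_major=$2
    want_minor=$3
    major=${release%%.*}   # text before the first dot
    rest=${release#*.}
    minor=${rest%%.*}      # text between the first and second dot
    minor=${minor%%-*}     # guard against forms like "4.18-foo"
    [ "$major" -gt "$want_major" ] ||
        { [ "$major" -eq "$want_major" ] && [ "$minor" -ge "$want_minor" ]; }
}

if kernel_at_least "$(uname -r)" 4 18; then
    echo "kernel $(uname -r) is new enough"
else
    echo "kernel $(uname -r) is older than 4.18"
fi
```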

1.14. Install ipvsadm (optional on Lb)

yum install ipvsadm ipset sysstat conntrack libseccomp -y

cat >> /etc/modules-load.d/ipvs.conf <

1.15. Adjust the kernel parameters (optional on Lb)

cat <<EOF > /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-iptables = 1

fs.may_detach_mounts = 1

vm.overcommit_memory=1

vm.panic_on_oom=0

fs.inotify.max_user_watches=89100

fs.file-max=52706963

fs.nr_open=52706963

net.netfilter.nf_conntrack_max=2310720

net.ipv4.tcp_keepalive_time = 600

net.ipv4.tcp_keepalive_probes = 3

net.ipv4.tcp_keepalive_intvl =15

net.ipv4.tcp_max_tw_buckets = 36000

net.ipv4.tcp_tw_reuse = 1

net.ipv4.tcp_max_orphans = 327680

net.ipv4.tcp_orphan_retries = 3

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_syn_backlog = 16384

net.ipv4.ip_conntrack_max = 65536


net.ipv4.tcp_timestamps = 0

net.core.somaxconn = 16384

net.ipv6.conf.all.disable_ipv6 = 0

net.ipv6.conf.default.disable_ipv6 = 0

net.ipv6.conf.lo.disable_ipv6 = 0

net.ipv6.conf.all.forwarding = 1

EOF
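Long hand-edited sysctl lists easily end up with the same key twice, in which case the later value silently wins. A minimal sketch of a check that can be run before applying the file; `duplicate_sysctl_keys` is a hypothetical helper name, and the demonstration uses a scratch file rather than /etc/sysctl.d/k8s.conf:

```shell
#!/bin/sh
# Hypothetical helper: print sysctl keys that are defined more than once in a file.
duplicate_sysctl_keys() {
    # Drop comments and blank lines, keep the key (text before "="),
    # strip spaces and tabs, then report keys that occur more than once.
    grep -v -e '^[[:space:]]*#' -e '^[[:space:]]*$' "$1" |
        cut -d= -f1 |
        tr -d ' \t' |
        sort | uniq -d
}

# Demonstration on a scratch file.
demo=$(mktemp)
printf '%s\n' 'net.ipv4.ip_forward = 1' 'net.core.somaxconn = 16384' 'net.ipv4.ip_forward = 1' > "$demo"
duplicate_sysctl_keys "$demo"
rm -f "$demo"
```

An empty result means every key is defined once.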

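sysctl --system

1.16. Set up DNS resolution for all nodes (all)

What the DNS service is for:

1. It lets the machines reach each other by name.

2. When an external client visits a site name, DNS resolution directs the traffic to the apiserver side, where the ingress controller forwards it to the pod, so that pods can be reached from outside by name.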

[root@k8s-master01 ~]# yum install bind bind9.16-utils -y

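[root@k8s-master01 ~]# vi /etc/named.conf    # edit the options block as follows; remove the inline comments

options {
        listen-on port 53 { 10.4.7.11; };    // port 53 is the default; change the IP to this host's own address so that every machine on the internal network can use this bind for DNS resolution (127.0.0.1 would only serve the local host). bind's syntax is strict, so keep the format exact
        listen-on-v6 port 53 { ::1; };       // delete this line; IPv6 is not needed
        directory          "/var/named";
        dump-file          "/var/named/data/cache_dump.db";
        statistics-file    "/var/named/data/named_stats.txt";
        memstatistics-file "/var/named/data/named_mem_stats.txt";
        recursing-file     "/var/named/data/named.recursing";
        secroots-file      "/var/named/data/named.secroots";
        allow-query        { any; };         // change to any so that every client may query
        forwarders         { 10.4.7.254; };  // add this line: names bind cannot resolve are forwarded to the upstream DNS, here the gateway
        recursion yes;                       // recursive mode
        dnssec-enable no;                    // whether DNSSEC support is enabled; the default is yes
        dnssec-validation no;                // whether DNSSEC validation is performed; the default is no

The dnssec-enable option controls whether DNSSEC support is enabled. DNS Security Extensions (DNSSEC) provide a system for verifying the validity of DNS data.

[root@k8s-master01 ~]# named-checkconf    # checks the configuration; no output means no problems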

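[root@k8s-master01 ~]# vi /etc/named.rfc1912.zones    # zone definitions: append the two zones below at the end of the file; remove the comments

# Host domain, used internally between the machines themselves (for example the physical host 10.4.7.11 running ping 10.4.7.11)
# Names such as k8s-master02.host.com can then be pinged; the "host" part can be changed
zone "host.com" IN {
        type  master;
        file  "host.com.zone";
        allow-update { 10.4.7.11; };
};

# Business domain, which brings traffic into k8s; for example, Jenkins is reached by entering jenkins.od.com
# The "od" part can be changed to suit company requirements
zone "od.com" IN {
        type  master;
        file  "od.com.zone";
        allow-update { 10.4.7.11; };
};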

Write the zone file for the host domain; every host that should be reachable by name needs a record in it:

[root@k8s-master01 ~]# vi /var/named/host.com.zone

$ORIGIN host.com.
$TTL 600    ; 10 minutes
@           IN SOA  dns.host.com. dnsadmin.host.com. (
                    2020010501 ; serial
                    10800      ; refresh (3 hours)
                    900        ; retry (15 minutes)
                    604800     ; expire (1 week)
                    86400      ; minimum (1 day)
                    )
                NS  dns.host.com.
$TTL 60     ; 1 minute
dns             A   10.4.7.11
k8s-master01    A   10.4.7.11
k8s-master02    A   10.4.7.12
k8s-master03    A   10.4.7.21
k8s-node01      A   10.4.7.22
k8s-node02      A   10.4.7.200
lb-vip          A   10.4.7.10
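The serial 2020010501 follows the common YYYYMMDDNN convention, and it is customary to increase it whenever a zone file is edited. A minimal sketch of computing the next value under that assumption; `next_serial` is a hypothetical helper name and not part of bind:

```shell
#!/bin/sh
# Hypothetical helper: compute the next zone serial in YYYYMMDDNN form.
# $1 = current serial, $2 = today's date as YYYYMMDD.
next_serial() {
    current=$1
    today=$2
    current_date=${current%??}      # strip the two-digit revision counter
    if [ "$current_date" = "$today" ]; then
        echo $(( current + 1 ))     # same day: bump the counter
    else
        echo "${today}01"           # new day: restart the counter at 01
    fi
}

next_serial 2020010501 "$(date +%Y%m%d)"
```

The sketch does not handle more than 99 edits on one day; in that case the counter would spill into the date part.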

Zone file for the business domain; bind resolves incoming names to IPs using the records in this file:

[root@k8s-master01 ~]# vi /var/named/od.com.zone

$ORIGIN od.com.
$TTL 600    ; 10 minutes
@           IN SOA  dns.od.com. dnsadmin.od.com. (
                    2020010501 ; serial
                    10800      ; refresh (3 hours)
                    900        ; retry (15 minutes)
                    604800     ; expire (1 week)
                    86400      ; minimum (1 day)
                    )
                NS  dns.od.com.
$TTL 60     ; 1 minute
dns             A   10.4.7.11

[root@k8s-master01 ~]# named-checkconf    # checks the configuration; no output means no problems

Start the named service:

[root@k8s-master01 ~]# systemctl start named

[root@k8s-master01 ~]# systemctl enable named

Test:

[root@k8s-master01 ~]# dig -t A k8s-master02.host.com @10.4.7.11 +short    # an answer means the host domain resolves correctly

10.4.7.12

Set the DNS server of the network interface on every machine to 10.4.7.11:

Machines involved: k8s-master01, k8s-master02, k8s-master03, k8s-node01, k8s-node02

vi /etc/sysconfig/network-scripts/ifcfg-ens33    # set DNS1 as follows

DNS1=10.4.7.11

Restart networking: systemctl restart network

Test: if ping k8s-master02.host.com succeeds, the setting has taken effect

[root@k8s-master01 ~]# ping k8s-master02.host.com

PING k8s-master02.host.com (10.4.7.12) 56(84) bytes of data.

64 bytes from 10.4.7.12 (10.4.7.12): icmp_seq=1 ttl=64 time=0.503 ms

64 bytes from 10.4.7.12 (10.4.7.12): icmp_seq=2 ttl=64 time=0.709 ms

Enable short names (without host.com)

[root@k8s-master01 ~]# ping k8s-master02

ping: k8s-master02: Name or service not known

Machines involved: k8s-master01, k8s-master02, k8s-master03, k8s-node01, k8s-node02

vi /etc/resolv.conf    # add "search host.com"; for example, resolv.conf on k8s-master01:

# Generated by NetworkManager

search host.com

nameserver 10.4.7.11

Test: ping k8s-master02, and so on

[root@k8s-master01 ~]# ping k8s-master02

PING k8s-master02.host.com (10.4.7.12) 56(84) bytes of data.

64 bytes from 10.4.7.12 (10.4.7.12): icmp_seq=1 ttl=64 time=0.666 ms

64 bytes from 10.4.7.12 (10.4.7.12): icmp_seq=2 ttl=64 time=0.801 ms

Problem: after a reboot resolv.conf is regenerated, so the search host.com line is lost

Machines involved: k8s-master01, k8s-master02, k8s-master03, k8s-node01, k8s-node02

[root@k8s-master01 ~]# vi /etc/rc.d/rc.local    # re-insert the search domain into resolv.conf at boot; the script must be made executable

# Please note that you must run 'chmod +x /etc/rc.d/rc.local' to ensure

sed -i "2isearch host.com" /etc/resolv.conf

[root@k8s-master01 ~]# chmod +x /etc/rc.d/rc.local

As a one-liner: sed -i '$a\sed -i "2isearch host.com" /etc/resolv.conf' /etc/rc.d/rc.local;chmod +x /etc/rc.d/rc.local
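The sed command in rc.local inserts the line unconditionally, so running it by hand on a file that already has the entry produces a duplicate. A minimal sketch of an idempotent variant; `ensure_search_domain` is a hypothetical helper name, and it places the line first rather than second, which should make no difference to the resolver:

```shell
#!/bin/sh
# Hypothetical helper: add "search <domain>" to a resolv.conf-style file
# only if no identical line is present yet.
ensure_search_domain() {
    file=$1
    domain=$2
    if grep -qxF "search ${domain}" "$file"; then
        return 0                      # already present, nothing to do
    fi
    tmp="${file}.tmp.$$"
    { echo "search ${domain}"; cat "$file"; } > "$tmp" &&
        cat "$tmp" > "$file" &&
        rm -f "$tmp"
}

# Demonstration on a scratch file rather than /etc/resolv.conf.
demo=$(mktemp)
echo 'nameserver 10.4.7.11' > "$demo"
ensure_search_domain "$demo" host.com
ensure_search_domain "$demo" host.com   # second call changes nothing
cat "$demo"
rm -f "$demo"
```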

2. Installing the basic k8s components

2.1. Install containerd as the runtime on all k8s nodes (k8s_all)

cd /opt/src

# Create the directories required by the CNI plugins

mkdir -p /etc/cni/net.d /opt/cni/bin

# Unpack the CNI plugin binaries

tar xf cni-plugins-linux-amd64-v1.1.1.tgz -C /opt/cni/bin/

# Unpack containerd

tar -C / -xzf cri-containerd-cni-1.6.4-linux-amd64.tar.gz

# Create the service unit file

cat > /etc/systemd/system/containerd.service <

2.1.1 Configure the kernel modules required by containerd

cat <

2.1.2 Load the modules

systemctl restart systemd-modules-load.service

2.1.3 Configure the kernel parameters required by containerd

cat <

2.1.4 Create the containerd configuration file

mkdir -p /etc/containerd

containerd config default | tee /etc/containerd/config.toml

Edit the containerd configuration file

sed -i "s#SystemdCgroup\ \=\ false#SystemdCgroup\ \=\ true#g" /etc/containerd/config.toml

# Find containerd.runtimes.runc.options; it must contain SystemdCgroup = true

[root@k8s-master01 src]# grep -B 13 -A 2 "SystemdCgroup" /etc/containerd/config.toml

runtime_type = "io.containerd.runc.v2"

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]

BinaryName = ""

CriuImagePath = ""

CriuPath = ""

CriuWorkPath = ""

IoGid = 0

IoUid = 0

NoNewKeyring = false

NoPivotRoot = false

Root = ""

ShimCgroup = ""

SystemdCgroup = true

[plugins."io.containerd.grpc.v1.cri".containerd.untrusted_workload_runtime]

[root@k8s-master01 src]#
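The visual check above can also be scripted, which is convenient when the same configuration has to be verified on every node. A minimal sketch; `systemd_cgroup_enabled` is a hypothetical helper name, and it only looks for the setting anywhere in the file rather than parsing the TOML sections:

```shell
#!/bin/sh
# Hypothetical helper: succeed only if the given config.toml enables SystemdCgroup.
systemd_cgroup_enabled() {
    grep -Eq '^[[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*true' "$1"
}

conf=${1:-/etc/containerd/config.toml}
if [ -r "$conf" ] && systemd_cgroup_enabled "$conf"; then
    echo "SystemdCgroup is enabled in $conf"
else
    echo "SystemdCgroup is not enabled (or $conf is unreadable)"
fi
```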

# Change the default sandbox_image address in /etc/containerd/config.toml to one that matches this version

[root@k8s-master01 src]# grep -C 2 "sandbox_image" /etc/containerd/config.toml

netns_mounts_under_state_dir = false

restrict_oom_score_adj = false

# sandbox_image = "k8s.gcr.io/pause:3.6"

sandbox_image = "registry.cn-hangzhou.aliyuncs.com/chenby/pause:3.6"

selinux_category_range = 1024

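stats_collect_period = 10

2.1.5 Start containerd and enable it at boot

systemctl daemon-reload

systemctl enable --now containerd

2.1.6 Configure the runtime endpoint used by the crictl client

# Unpack

tar xf crictl-v1.23.0-linux-amd64.tar.gz -C /usr/bin/

# Generate the configuration file

cat > /etc/crictl.yaml <

2.2. Download and install k8s and etcd (master01 only)

2.2.1 Unpack the k8s package

# Unpack the k8s binaries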

cd /opt/src

tar -xf kubernetes-server-linux-amd64.tar.gz --strip-components=3 -C /usr/local/bin kubernetes/server/bin/kube{let,ctl,-apiserver,-controller-manager,-scheduler,-proxy}

# Unpack the etcd binaries

tar -xf etcd-v3.5.4-linux-amd64.tar.gz --strip-components=1 -C /usr/local/bin etcd-v3.5.4-linux-amd64/etcd{,ctl}

# List the contents of /usr/local/bin

[root@k8s-master01 src]# ls /usr/local/bin/

containerd containerd-shim-runc-v1 containerd-stress critest ctr etcdctl kube-controller-manager kubelet kube-scheduler

containerd-shim containerd-shim-runc-v2 crictl ctd-decoder etcd kube-apiserver kubectl kube-proxy

2.2.2 Check the versions

[root@k8s-master01 src]# kubelet --version

Kubernetes v1.24.0

[root@k8s-master01 src]# etcdctl version

etcdctl version: 3.5.4

API version: 3.5

2.2.3 Send the components to the other k8s nodes

Master='k8s-master02 k8s-master03'

Work='k8s-node01 k8s-node02'

for NODE in $Master; do echo $NODE; scp /usr/local/bin/kube{let,ctl,-apiserver,-controller-manager,-scheduler,-proxy} $NODE:/usr/local/bin/; scp /usr/local/bin/etcd* $NODE:/usr/local/bin/; done

for NODE in $Work; do scp /usr/local/bin/kube{let,-proxy} $NODE:/usr/local/bin/ ; done
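The two loops above rely on bash brace expansion. A minimal sketch of the same distribution logic in POSIX shell, with the role-to-binary mapping kept in one function; `binaries_for_role` is a hypothetical helper name, and the loop only prints the scp commands so they can be reviewed before being run:

```shell
#!/bin/sh
# Hypothetical helper: list the binaries each node role needs, following the host table.
binaries_for_role() {
    case "$1" in
        master) echo "kubelet kubectl kube-apiserver kube-controller-manager kube-scheduler kube-proxy etcd etcdctl" ;;
        worker) echo "kubelet kube-proxy" ;;
        *)      echo "unknown role: $1" >&2; return 1 ;;
    esac
}

# Print the copy commands for the remaining masters instead of running them.
for node in k8s-master02 k8s-master03; do
    for bin in $(binaries_for_role master); do
        echo "scp /usr/local/bin/$bin $node:/usr/local/bin/"
    done
done
```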

2.3 Create the certificate-related files (master01 only)

mkdir /root/pki;cd /root/pki

cat > admin-csr.json << EOF

{

"CN": "admin",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "system:masters",

"OU": "Kubernetes-manual"

}

]

}

EOF

cat > ca-config.json << EOF

{

"signing": {

"default": {

"expiry": "876000h"

},

"profiles": {

"kubernetes": {

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

],

"expiry": "876000h"

}

}

}

}

EOF

cat > etcd-ca-csr.json << EOF

{

"CN": "etcd",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "etcd",

"OU": "Etcd Security"

}

],

"ca": {

"expiry": "876000h"

}

}

EOF

cat > front-proxy-ca-csr.json << EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"ca": {

"expiry": "876000h"

}

}

EOF

cat > kubelet-csr.json << EOF

{

"CN": "system:node:$NODE",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Beijing",

"ST": "Beijing",

"O": "system:nodes",

"OU": "Kubernetes-manual"

}

]

}

EOF

cat > manager-csr.json << EOF

{

"CN": "system:kube-controller-manager",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "system:kube-controller-manager",

"OU": "Kubernetes-manual"

}

]

}

EOF

cat > apiserver-csr.json << EOF

{

"CN": "kube-apiserver",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "Kubernetes",

"OU": "Kubernetes-manual"

}

]

}

EOF

cat > ca-csr.json << EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "Kubernetes",

"OU": "Kubernetes-manual"

}

],

"ca": {

"expiry": "876000h"

}

}

EOF

cat > etcd-csr.json << EOF

{

"CN": "etcd",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "etcd",

"OU": "Etcd Security"

}

]

}

EOF

cat > front-proxy-client-csr.json << EOF

{

"CN": "front-proxy-client",

"key": {

"algo": "rsa",

"size": 2048

}

}

EOF

cat > kube-proxy-csr.json << EOF

{

"CN": "system:kube-proxy",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "system:kube-proxy",

"OU": "Kubernetes-manual"

}

]

}

EOF

cat > scheduler-csr.json << EOF

{

"CN": "system:kube-scheduler",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "system:kube-scheduler",

"OU": "Kubernetes-manual"

}

]

}

EOF

mkdir /root/bootstrap;cd /root/bootstrap

cat > bootstrap.secret.yaml << EOF
apiVersion: v1
kind: Secret
metadata:
  name: bootstrap-token-c8ad9c
  namespace: kube-system
type: bootstrap.kubernetes.io/token
stringData:
  description: "The default bootstrap token generated by 'kubelet '."
  token-id: c8ad9c
  token-secret: 2e4d610cf3e7426e
  usage-bootstrap-authentication: "true"
  usage-bootstrap-signing: "true"
  auth-extra-groups: system:bootstrappers:default-node-token,system:bootstrappers:worker,system:bootstrappers:ingress
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubelet-bootstrap
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:node-bootstrapper
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: Group
  name: system:bootstrappers:default-node-token
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: node-autoapprove-bootstrap
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:certificates.k8s.io:certificatesigningrequests:nodeclient
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: Group
  name: system:bootstrappers:default-node-token
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: node-autoapprove-certificate-rotation
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:certificates.k8s.io:certificatesigningrequests:selfnodeclient
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: Group
  name: system:nodes
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:kube-apiserver-to-kubelet
rules:
  - apiGroups:
      - ""
    resources:
      - nodes/proxy
      - nodes/stats
      - nodes/log
      - nodes/spec
      - nodes/metrics
    verbs:
      - "*"
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: system:kube-apiserver
  namespace: ""
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:kube-apiserver-to-kubelet
subjects:
  - apiGroup: rbac.authorization.k8s.io
    kind: User
    name: kube-apiserver
EOF

mkdir /root/coredns;cd /root/coredns

cat > coredns.yaml << EOF

apiVersion: v1

kind: ServiceAccount

metadata:

name: coredns

namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

labels:

kubernetes.io/bootstrapping: rbac-defaults

name: system:coredns

rules:

- apiGroups:

- ""

resources:

- endpoints

- services

- pods

- namespaces

verbs:

- list

- watch

- apiGroups:

- discovery.k8s.io

resources:

- endpointslices

verbs:

- list

- watch

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

annotations:

rbac.authorization.kubernetes.io/autoupdate: "true"

labels:

kubernetes.io/bootstrapping: rbac-defaults

name: system:coredns

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: system:coredns

subjects:

- kind: ServiceAccount

name: coredns

namespace: kube-system

---

apiVersion: v1

kind: ConfigMap

metadata:

name: coredns

namespace: kube-system

data:

Corefile: |

.:53 {

errors

health {

lameduck 5s

}

ready

kubernetes cluster.local in-addr.arpa ip6.arpa {

fallthrough in-addr.arpa ip6.arpa

}

prometheus :9153

forward . /etc/resolv.conf {

max_concurrent 1000

}

cache 30

loop

reload

loadbalance

}

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: coredns

namespace: kube-system

labels:

k8s-app: kube-dns

kubernetes.io/name: "CoreDNS"

spec:

# replicas: not specified here:

# 1. Default is 1.

# 2. Will be tuned in real time if DNS horizontal auto-scaling is turned on.

strategy:

type: RollingUpdate

rollingUpdate:

maxUnavailable: 1

selector:

matchLabels:

k8s-app: kube-dns

template:

metadata:

labels:

k8s-app: kube-dns

spec:

priorityClassName: system-cluster-critical

serviceAccountName: coredns

tolerations:

- key: "CriticalAddonsOnly"

operator: "Exists"

nodeSelector:

kubernetes.io/os: linux

affinity:

podAntiAffinity:

preferredDuringSchedulingIgnoredDuringExecution:

- weight: 100

podAffinityTerm:

labelSelector:

matchExpressions:

- key: k8s-app

operator: In

values: ["kube-dns"]

topologyKey: kubernetes.io/hostname

containers:

- name: coredns

image: registry.cn-beijing.aliyuncs.com/dotbalo/coredns:1.8.6

imagePullPolicy: IfNotPresent

resources:

limits:

memory: 170Mi

requests:

cpu: 100m

memory: 70Mi

args: [ "-conf", "/etc/coredns/Corefile" ]

volumeMounts:

- name: config-volume

mountPath: /etc/coredns

readOnly: true

ports:

- containerPort: 53

name: dns

protocol: UDP

- containerPort: 53

name: dns-tcp

protocol: TCP

- containerPort: 9153

name: metrics

protocol: TCP

securityContext:

allowPrivilegeEscalation: false

capabilities:

add:

- NET_BIND_SERVICE

drop:

- all

readOnlyRootFilesystem: true

livenessProbe:

httpGet:

path: /health

port: 8080

scheme: HTTP

initialDelaySeconds: 60

timeoutSeconds: 5

successThreshold: 1

failureThreshold: 5

readinessProbe:

httpGet:

path: /ready

port: 8181

scheme: HTTP

dnsPolicy: Default

volumes:

- name: config-volume

configMap:

name: coredns

items:

- key: Corefile

path: Corefile

---

apiVersion: v1

kind: Service

metadata:

name: kube-dns

namespace: kube-system

annotations:

prometheus.io/port: "9153"

prometheus.io/scrape: "true"

labels:

k8s-app: kube-dns

kubernetes.io/cluster-service: "true"

kubernetes.io/name: "CoreDNS"

spec:

selector:

k8s-app: kube-dns

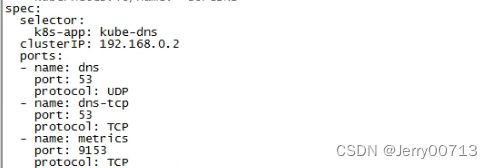

clusterIP: 192.168.0.2

ports:

- name: dns

port: 53

protocol: UDP

- name: dns-tcp

port: 53

protocol: TCP

- name: metrics

port: 9153

protocol: TCP

EOFmkdir /root/metrics-server;cd /root/metrics-server

cat > metrics-server.yaml << EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
    rbac.authorization.k8s.io/aggregate-to-admin: "true"
    rbac.authorization.k8s.io/aggregate-to-edit: "true"
    rbac.authorization.k8s.io/aggregate-to-view: "true"
  name: system:aggregated-metrics-reader
rules:
- apiGroups:
  - metrics.k8s.io
  resources:
  - pods
  - nodes
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
rules:
- apiGroups:
  - ""
  resources:
  - pods
  - nodes
  - nodes/stats
  - namespaces
  - configmaps
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server-auth-reader
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: extension-apiserver-authentication-reader
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server:system:auth-delegator
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-delegator
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:metrics-server
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  ports:
  - name: https
    port: 443
    protocol: TCP
    targetPort: https
  selector:
    k8s-app: metrics-server
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  selector:
    matchLabels:
      k8s-app: metrics-server
  strategy:
    rollingUpdate:
      maxUnavailable: 0
  template:
    metadata:
      labels:
        k8s-app: metrics-server
    spec:
      containers:
      - args:
        - --cert-dir=/tmp
        - --secure-port=4443
        - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        - --kubelet-use-node-status-port
        - --metric-resolution=15s
        - --kubelet-insecure-tls
        - --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem # change to front-proxy-ca.crt for kubeadm
        - --requestheader-username-headers=X-Remote-User
        - --requestheader-group-headers=X-Remote-Group
        - --requestheader-extra-headers-prefix=X-Remote-Extra-
        image: registry.cn-beijing.aliyuncs.com/dotbalo/metrics-server:0.5.0
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /livez
            port: https
            scheme: HTTPS
          periodSeconds: 10
        name: metrics-server
        ports:
        - containerPort: 4443
          name: https
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /readyz
            port: https
            scheme: HTTPS
          initialDelaySeconds: 20
          periodSeconds: 10
        resources:
          requests:
            cpu: 100m
            memory: 200Mi
        securityContext:
          readOnlyRootFilesystem: true
          runAsNonRoot: true
          runAsUser: 1000
        volumeMounts:
        - mountPath: /tmp
          name: tmp-dir
        - name: ca-ssl
          mountPath: /etc/kubernetes/pki
      nodeSelector:
        kubernetes.io/os: linux
      priorityClassName: system-cluster-critical
      serviceAccountName: metrics-server
      volumes:
      - emptyDir: {}
        name: tmp-dir
      - name: ca-ssl
        hostPath:
          path: /etc/kubernetes/pki
---
apiVersion: apiregistration.k8s.io/v1
kind: APIService
metadata:
  labels:
    k8s-app: metrics-server
  name: v1beta1.metrics.k8s.io
spec:
  group: metrics.k8s.io
  groupPriorityMinimum: 100
  insecureSkipTLSVerify: true
  service:
    name: metrics-server
    namespace: kube-system
  version: v1beta1
  versionPriority: 100

EOF

3. Generate the certificates (master01 only)

Install the certificate generation tools on master01

[root@k8s-master01 bootstrap]# cd /opt/src/

[root@k8s-master01 src]# mv cfssl_1.6.1_linux_amd64 cfssl

[root@k8s-master01 src]# mv cfssl-certinfo_1.6.1_linux_amd64 cfssl-certinfo

[root@k8s-master01 src]# mv cfssljson_1.6.1_linux_amd64 cfssljson

[root@k8s-master01 src]# cp cfssl* /usr/local/bin/

[root@k8s-master01 src]# chmod +x /usr/local/bin/cfssl*

[root@k8s-master01 src]# ll /usr/local/bin/cfssl*

-rwxr-xr-x. 1 root root 16659824 6月 13 02:00 /usr/local/bin/cfssl

-rwxr-xr-x. 1 root root 13502544 6月 13 02:00 /usr/local/bin/cfssl-certinfo

-rwxr-xr-x. 1 root root 11029744 6月 13 02:00 /usr/local/bin/cfssljson
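The three mv commands above all strip the same `_1.6.1_linux_amd64` suffix, which can be expressed with shell parameter expansion. A minimal sketch; `cfssl_short_name` is a hypothetical helper name, and the loop only prints the resulting install commands instead of running them:

```shell
#!/bin/sh
# Hypothetical helper: derive the short tool name from a cfssl release file name
# by dropping everything from the first underscore onwards.
cfssl_short_name() {
    echo "${1%%_*}"
}

for f in cfssl_1.6.1_linux_amd64 cfssljson_1.6.1_linux_amd64 cfssl-certinfo_1.6.1_linux_amd64; do
    echo "install -m 0755 $f /usr/local/bin/$(cfssl_short_name "$f")"
done
```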

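3.1. Generate the etcd certificates

Unless stated otherwise, run the following on all master nodes

3.1.1 Create the certificate directory on all master nodes

mkdir /etc/etcd/ssl -p

3.1.2 Generate the etcd certificates on master01

cd /root/pki/

# Create a CA to sign the etcd certificates

cfssl gencert -initca etcd-ca-csr.json | cfssljson -bare /etc/etcd/ssl/etcd-ca

# Generate the etcd certificate and its key (if you expect to scale out later, list a few spare IPs in -hostname)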

cfssl gencert \

-ca=/etc/etcd/ssl/etcd-ca.pem \

-ca-key=/etc/etcd/ssl/etcd-ca-key.pem \

-config=ca-config.json \

-hostname=127.0.0.1,k8s-master01,k8s-master02,k8s-master03,10.4.7.11,10.4.7.12,10.4.7.21 \

-profile=kubernetes \

etcd-csr.json | cfssljson -bare /etc/etcd/ssl/etcd3.1.3将证书复制到其他节点

Master='k8s-master02 k8s-master03'

for NODE in $Master; do ssh $NODE "mkdir -p /etc/etcd/ssl"; for FILE in etcd-ca-key.pem etcd-ca.pem etcd-key.pem etcd.pem; do scp /etc/etcd/ssl/${FILE} $NODE:/etc/etcd/ssl/${FILE}; done; done

3.2. Generate the Kubernetes certificates

Unless otherwise noted, run the following steps on all master nodes.

3.2.1 Create the certificate directory on all k8s nodes

mkdir -p /etc/kubernetes/pki

3.2.2 Generate the Kubernetes certificates on master01

# Generate the root CA certificate

cfssl gencert -initca ca-csr.json | cfssljson -bare /etc/kubernetes/pki/ca

# 192.168.0.1 is the first address of the Service CIDR (derive it from your own range); 10.4.7.10 is the high-availability VIP
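As a worked example of the computation mentioned in the comment above, the sketch below derives the first usable address of an IPv4 CIDR (network address plus one). The function name `first_service_ip` is a hypothetical helper introduced for illustration; it is not part of cfssl or Kubernetes.

```shell
#!/usr/bin/env bash
# Print the first usable host address of an IPv4 CIDR (network address + 1).
# first_service_ip is a hypothetical helper name used only for this sketch.
first_service_ip() {
  local cidr=$1 ip prefix a b c d n mask first
  ip=${cidr%/*}          # part before the slash
  prefix=${cidr#*/}      # prefix length after the slash
  IFS=. read -r a b c d <<< "$ip"
  n=$(( (a << 24) | (b << 16) | (c << 8) | d ))
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  first=$(( (n & mask) + 1 ))
  printf '%d.%d.%d.%d\n' \
    $(( (first >> 24) & 255 )) $(( (first >> 16) & 255 )) \
    $(( (first >> 8) & 255 ))  $(( first & 255 ))
}

first_service_ip 192.168.0.0/16   # prints 192.168.0.1
```

If you change --service-cluster-ip-range, the value printed for your range is the address that should appear first in the -hostname list below.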

cfssl gencert \

-ca=/etc/kubernetes/pki/ca.pem \

-ca-key=/etc/kubernetes/pki/ca-key.pem \

-config=ca-config.json \

-hostname=192.168.0.1,10.4.7.10,127.0.0.1,kubernetes,kubernetes.default,kubernetes.default.svc,kubernetes.default.svc.cluster,kubernetes.default.svc.cluster.local,10.4.7.11,10.4.7.12,10.4.7.21,10.4.7.22,10.4.7.200 \

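-profile=kubernetes apiserver-csr.json | cfssljson -bare /etc/kubernetes/pki/apiserver

3.2.3 Generate the apiserver aggregation (front-proxy) certificates

# Create a CA that signs the front-proxy client certificate below

cfssl gencert -initca front-proxy-ca-csr.json | cfssljson -bare /etc/kubernetes/pki/front-proxy-ca

# This command prints a warning, which can be ignored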

cfssl gencert \

-ca=/etc/kubernetes/pki/front-proxy-ca.pem \

-ca-key=/etc/kubernetes/pki/front-proxy-ca-key.pem \

-config=ca-config.json \

-profile=kubernetes front-proxy-client-csr.json | cfssljson -bare /etc/kubernetes/pki/front-proxy-client

3.2.4 Generate the controller-manager certificate

cfssl gencert \

-ca=/etc/kubernetes/pki/ca.pem \

-ca-key=/etc/kubernetes/pki/ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

manager-csr.json | cfssljson -bare /etc/kubernetes/pki/controller-manager

# Define a cluster entry named kubernetes: embed the root CA (ca.pem) used to verify the apiserver and record the apiserver address (the VIP)

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/pki/ca.pem \

--embed-certs=true \

--server=https://10.4.7.10:8443 \

--kubeconfig=/etc/kubernetes/controller-manager.kubeconfig

# Define a context that binds the user system:kube-controller-manager to the cluster entry kubernetes defined above,

# so that requests are sent to that cluster with that user's credentials. The context is named system:kube-controller-manager@kubernetes

kubectl config set-context system:kube-controller-manager@kubernetes \

--cluster=kubernetes \

--user=system:kube-controller-manager \

--kubeconfig=/etc/kubernetes/controller-manager.kubeconfig

# Define the client credentials used to authenticate to the cluster: embed the client certificate controller-manager.pem

# and its private key controller-manager-key.pem for the user system:kube-controller-manager

kubectl config set-credentials system:kube-controller-manager \

--client-certificate=/etc/kubernetes/pki/controller-manager.pem \

--client-key=/etc/kubernetes/pki/controller-manager-key.pem \

--embed-certs=true \

--kubeconfig=/etc/kubernetes/controller-manager.kubeconfig

# Make this context the current (default) context of the kubeconfig file

kubectl config use-context system:kube-controller-manager@kubernetes \

--kubeconfig=/etc/kubernetes/controller-manager.kubeconfig
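The same four kubectl config steps are repeated for each component in the following sections, so they can be wrapped in one function. This is only a sketch: `make_kubeconfig` and the `KUBECTL` override variable are hypothetical names introduced here, while the paths and the server address are the ones used in this article. Setting `KUBECTL=echo` prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# make_kubeconfig USER CERT_BASENAME OUTPUT_FILE [SERVER]
# Hypothetical helper: runs set-cluster, set-credentials, set-context and use-context in sequence.
KUBECTL=${KUBECTL:-kubectl}

make_kubeconfig() {
  local user=$1 cert_base=$2 out=$3
  local server=${4:-https://10.4.7.10:8443}   # VIP and port used in this article
  local pki=/etc/kubernetes/pki

  "$KUBECTL" config set-cluster kubernetes \
    --certificate-authority="$pki/ca.pem" \
    --embed-certs=true \
    --server="$server" \
    --kubeconfig="$out" &&
  "$KUBECTL" config set-credentials "$user" \
    --client-certificate="$pki/${cert_base}.pem" \
    --client-key="$pki/${cert_base}-key.pem" \
    --embed-certs=true \
    --kubeconfig="$out" &&
  "$KUBECTL" config set-context "$user@kubernetes" \
    --cluster=kubernetes \
    --user="$user" \
    --kubeconfig="$out" &&
  "$KUBECTL" config use-context "$user@kubernetes" \
    --kubeconfig="$out"
}

# Preview only: print the four commands for the controller-manager without executing them
KUBECTL=echo make_kubeconfig system:kube-controller-manager controller-manager \
  /etc/kubernetes/controller-manager.kubeconfig
```

Called without the KUBECTL=echo prefix, the function runs the real kubectl commands and should produce the same file as the manual steps above.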

3.2.5 Generate the scheduler certificate

cfssl gencert \

-ca=/etc/kubernetes/pki/ca.pem \

-ca-key=/etc/kubernetes/pki/ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

scheduler-csr.json | cfssljson -bare /etc/kubernetes/pki/scheduler

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/pki/ca.pem \

--embed-certs=true \

--server=https://10.4.7.10:8443 \

--kubeconfig=/etc/kubernetes/scheduler.kubeconfig

kubectl config set-credentials system:kube-scheduler \

--client-certificate=/etc/kubernetes/pki/scheduler.pem \

--client-key=/etc/kubernetes/pki/scheduler-key.pem \

--embed-certs=true \

--kubeconfig=/etc/kubernetes/scheduler.kubeconfig

kubectl config set-context system:kube-scheduler@kubernetes \

--cluster=kubernetes \

--user=system:kube-scheduler \

--kubeconfig=/etc/kubernetes/scheduler.kubeconfig

kubectl config use-context system:kube-scheduler@kubernetes \

--kubeconfig=/etc/kubernetes/scheduler.kubeconfig

3.2.6 Generate the admin certificate and kubeconfig

cfssl gencert \

-ca=/etc/kubernetes/pki/ca.pem \

-ca-key=/etc/kubernetes/pki/ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

admin-csr.json | cfssljson -bare /etc/kubernetes/pki/admin

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/pki/ca.pem \

--embed-certs=true \

--server=https://10.4.7.10:8443 \

--kubeconfig=/etc/kubernetes/admin.kubeconfig

kubectl config set-credentials kubernetes-admin \

--client-certificate=/etc/kubernetes/pki/admin.pem \

--client-key=/etc/kubernetes/pki/admin-key.pem \

--embed-certs=true \

--kubeconfig=/etc/kubernetes/admin.kubeconfig

kubectl config set-context kubernetes-admin@kubernetes \

--cluster=kubernetes \

--user=kubernetes-admin \

--kubeconfig=/etc/kubernetes/admin.kubeconfig

kubectl config use-context kubernetes-admin@kubernetes --kubeconfig=/etc/kubernetes/admin.kubeconfig

3.2.7 Create the ServiceAccount key pair (secret)

openssl genrsa -out /etc/kubernetes/pki/sa.key 2048

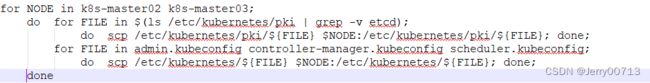

openssl rsa -in /etc/kubernetes/pki/sa.key -pubout -out /etc/kubernetes/pki/sa.pub

3.2.8 Copy the certificates to the other master nodes

for NODE in k8s-master02 k8s-master03; do ssh $NODE "mkdir -p /etc/kubernetes/pki"; for FILE in $(ls /etc/kubernetes/pki | grep -v etcd); do scp /etc/kubernetes/pki/${FILE} $NODE:/etc/kubernetes/pki/${FILE}; done; for FILE in admin.kubeconfig controller-manager.kubeconfig scheduler.kubeconfig; do scp /etc/kubernetes/${FILE} $NODE:/etc/kubernetes/${FILE}; done; done

3.2.9 Check the certificates (k8s_all)

ls /etc/kubernetes/pki/

admin.csr apiserver-key.pem ca.pem front-proxy-ca.csr front-proxy-client-key.pem scheduler.csr

admin-key.pem apiserver.pem controller-manager.csr front-proxy-ca-key.pem front-proxy-client.pem scheduler-key.pem

admin.pem ca.csr controller-manager-key.pem front-proxy-ca.pem sa.key scheduler.pem

apiserver.csr ca-key.pem controller-manager.pem front-proxy-client.csr sa.pub

# There should be 23 files in total

ls /etc/kubernetes/pki/ |wc -l

23

4. k8s system component configuration

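4.1 Install rsyslog

etcd is started through systemctl with its command and arguments, which raises the question of where its log output goes.

In testing, setting StandardOutput=/tmp/services/logs/xxxx.log in the unit file produced no output at all, so the output of programs started by systemctl is forwarded through rsyslog into a fixed log file instead.

yum install -y rsyslog

How to set up rsyslog:

1. In the [Service] section of your custom test.service file, add: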

StandardOutput=syslog

StandardError=syslog

SyslogIdentifier=your program identifier

2. Edit /etc/rsyslog.d/your_file.conf with the following content:

if $programname == 'your program identifier' then {

/data/logs/kubernetes/kube-kubelet/kubele.stdout.log

}

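3. Restart rsyslog

sudo systemctl restart rsyslog

The program's stdout/stderr remain available through journalctl (sudo journalctl -u test), and are now also written to the file you chose.

4. Restart your service

systemctl restart test

5. Check the contents of the log file configured in step 2

4.2. etcd configuration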

4.2.1 master01 configuration

# For IPv6, replace the IPv4 addresses with IPv6 addresses

cat > /etc/etcd/etcd.config.yml << EOF

name: 'k8s-master01'

data-dir: /var/lib/etcd

wal-dir: /var/lib/etcd/wal

snapshot-count: 5000

heartbeat-interval: 100

election-timeout: 1000

quota-backend-bytes: 0

listen-peer-urls: 'https://10.4.7.11:2380'

listen-client-urls: 'https://10.4.7.11:2379,http://127.0.0.1:2379'

max-snapshots: 3

max-wals: 5

cors:

initial-advertise-peer-urls: 'https://10.4.7.11:2380'

advertise-client-urls: 'https://10.4.7.11:2379'

discovery:

discovery-fallback: 'proxy'

discovery-proxy:

discovery-srv:

initial-cluster: 'k8s-master01=https://10.4.7.11:2380,k8s-master02=https://10.4.7.12:2380,k8s-master03=https://10.4.7.21:2380'

initial-cluster-token: 'etcd-k8s-cluster'

initial-cluster-state: 'new'

strict-reconfig-check: false

enable-v2: true

enable-pprof: true

proxy: 'off'

proxy-failure-wait: 5000

proxy-refresh-interval: 30000

proxy-dial-timeout: 1000

proxy-write-timeout: 5000

proxy-read-timeout: 0

client-transport-security:

  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'

  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'

  client-cert-auth: true

  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'

  auto-tls: true

peer-transport-security:

  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'

  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'

  peer-client-cert-auth: true

  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'

  auto-tls: true

debug: false

log-package-levels:

log-outputs: [default]

force-new-cluster: false

EOF

4.2.2 master02 configuration

# For IPv6, replace the IPv4 addresses with IPv6 addresses

cat > /etc/etcd/etcd.config.yml << EOF

name: 'k8s-master02'

data-dir: /var/lib/etcd

wal-dir: /var/lib/etcd/wal

snapshot-count: 5000

heartbeat-interval: 100

election-timeout: 1000

quota-backend-bytes: 0

listen-peer-urls: 'https://10.4.7.12:2380'

listen-client-urls: 'https://10.4.7.12:2379,http://127.0.0.1:2379'

max-snapshots: 3

max-wals: 5

cors:

initial-advertise-peer-urls: 'https://10.4.7.12:2380'

advertise-client-urls: 'https://10.4.7.12:2379'

discovery:

discovery-fallback: 'proxy'

discovery-proxy:

discovery-srv:

initial-cluster: 'k8s-master01=https://10.4.7.11:2380,k8s-master02=https://10.4.7.12:2380,k8s-master03=https://10.4.7.21:2380'

initial-cluster-token: 'etcd-k8s-cluster'

initial-cluster-state: 'new'

strict-reconfig-check: false

enable-v2: true

enable-pprof: true

proxy: 'off'

proxy-failure-wait: 5000

proxy-refresh-interval: 30000

proxy-dial-timeout: 1000

proxy-write-timeout: 5000

proxy-read-timeout: 0

client-transport-security:

  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'

  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'

  client-cert-auth: true

  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'

  auto-tls: true

peer-transport-security:

  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'

  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'

  peer-client-cert-auth: true

  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'

  auto-tls: true

debug: false

log-package-levels:

log-outputs: [default]

force-new-cluster: false

EOF

4.2.3 master03 configuration

# For IPv6, replace the IPv4 addresses with IPv6 addresses

cat > /etc/etcd/etcd.config.yml << EOF

name: 'k8s-master03'

data-dir: /var/lib/etcd

wal-dir: /var/lib/etcd/wal

snapshot-count: 5000

heartbeat-interval: 100

election-timeout: 1000

quota-backend-bytes: 0

listen-peer-urls: 'https://10.4.7.21:2380'

listen-client-urls: 'https://10.4.7.21:2379,http://127.0.0.1:2379'

max-snapshots: 3

max-wals: 5

cors:

initial-advertise-peer-urls: 'https://10.4.7.21:2380'

advertise-client-urls: 'https://10.4.7.21:2379'

discovery:

discovery-fallback: 'proxy'

discovery-proxy:

discovery-srv:

initial-cluster: 'k8s-master01=https://10.4.7.11:2380,k8s-master02=https://10.4.7.12:2380,k8s-master03=https://10.4.7.21:2380'

initial-cluster-token: 'etcd-k8s-cluster'

initial-cluster-state: 'new'

strict-reconfig-check: false

enable-v2: true

enable-pprof: true

proxy: 'off'

proxy-failure-wait: 5000

proxy-refresh-interval: 30000

proxy-dial-timeout: 1000

proxy-write-timeout: 5000

proxy-read-timeout: 0

client-transport-security:

  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'

  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'

  client-cert-auth: true

  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'

  auto-tls: true

peer-transport-security:

  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'

  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'

  peer-client-cert-auth: true

  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'

  auto-tls: true

debug: false

log-package-levels:

log-outputs: [default]

force-new-cluster: false

EOF

4.3. Create the service (on all master nodes)

4.3.1 Create etcd.service and start it

cat > /usr/lib/systemd/system/etcd.service << EOF

[Unit]

Description=Etcd Service

Documentation=https://coreos.com/etcd/docs/latest/

After=network.target

[Service]

Type=notify

ExecStart=/usr/local/bin/etcd --config-file=/etc/etcd/etcd.config.yml

Restart=on-failure

RestartSec=10

LimitNOFILE=65536

StandardOutput=syslog

StandardError=syslog

SyslogIdentifier=etcd

[Install]

WantedBy=multi-user.target

Alias=etcd3.service

EOF

vi /etc/rsyslog.d/etcd.conf

if $programname == 'etcd' then {

/data/logs/etcd/etcd.stdout.log

}

4.3.2 Create the etcd certificate and log directories

mkdir /etc/kubernetes/pki/etcd

ln -s /etc/etcd/ssl/* /etc/kubernetes/pki/etcd/

mkdir -p /data/logs/etcd/

touch /data/logs/etcd/etcd.stdout.log

sudo systemctl restart rsyslog

systemctl daemon-reload

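systemctl enable --now etcd

4.3.3 Check the etcd status

If the check reports errors, restart etcd on all three nodes and check again.

# For IPv6, replace the IPv4 addresses with IPv6 addresses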

export ETCDCTL_API=3

etcdctl --endpoints="10.4.7.11:2379,10.4.7.12:2379,10.4.7.21:2379" --cacert=/etc/kubernetes/pki/etcd/etcd-ca.pem --cert=/etc/kubernetes/pki/etcd/etcd.pem --key=/etc/kubernetes/pki/etcd/etcd-key.pem endpoint status --write-out=table

+----------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

| ENDPOINT | ID | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |

+----------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

| 10.0.0.83:2379 | c0c8142615b9523f | 3.5.4 | 20 kB | false | false | 2 | 9 | 9 | |

| 10.0.0.82:2379 | de8396604d2c160d | 3.5.4 | 20 kB | false | false | 2 | 9 | 9 | |

| 10.0.0.81:2379 | 33c9d6df0037ab97 | 3.5.4 | 20 kB | true | false | 2 | 9 | 9 | |

+----------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+
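A healthy cluster should report exactly one leader in this table. As a small sketch, the hypothetical helper `count_leaders` below reads the table output on stdin and counts the rows whose IS LEADER column is true; it assumes the column layout shown above.

```shell
#!/usr/bin/env bash
# count_leaders is a hypothetical helper: it reads the output of
# `etcdctl ... endpoint status --write-out=table` on stdin and prints
# how many rows report IS LEADER = true.
count_leaders() {
  awk -F'|' '
    NF > 6 && $2 !~ /ENDPOINT/ {   # skip border lines and the header row
      gsub(/ /, "", $6)            # field 6 is IS LEADER (field 1 is empty)
      if ($6 == "true") n++
    }
    END { print n + 0 }
  '
}

# Demo with the three data rows of the table shown above
count_leaders <<'EOF'
| 10.0.0.83:2379 | c0c8142615b9523f | 3.5.4 | 20 kB | false | false | 2 | 9 | 9 | |
| 10.0.0.82:2379 | de8396604d2c160d | 3.5.4 | 20 kB | false | false | 2 | 9 | 9 | |
| 10.0.0.81:2379 | 33c9d6df0037ab97 | 3.5.4 | 20 kB | true | false | 2 | 9 | 9 | |
EOF
```

In practice the etcdctl command above can be piped straight into count_leaders; any result other than 1 suggests the cluster has not finished electing a leader or is partitioned.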

[root@k8s-master01 pki]#

4.3.4 Scheduled etcd data backup

mkdir -p /data/backup/etcd

vi /etc/etcd/backup.sh

#!/bin/sh

cd /var/lib

name="etcd-bak$(date "+%Y%m%d")"

# -z compresses with gzip so the content matches the .tar.gz extension

tar -czvf "/data/backup/etcd/${name}.tar.gz" etcd

chmod 755 /etc/etcd/backup.sh

crontab -e

00 00 * * * /etc/etcd/backup.sh

or

SHELL=/bin/bash

PATH=/sbin:/bin:/usr/sbin:/usr/bin

00 00 * * * (/path/to/backup.sh)

crontab -l

00 00 * * * /etc/etcd/backup.sh

systemctl restart crond
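Archiving /var/lib/etcd while etcd is running may capture files in mid-write. An alternative sketch is to use etcdctl snapshot save, which asks a running member for a consistent snapshot, and to prune old snapshots afterwards. The function names, the retention count of 7, and the choice of the master01 endpoint are assumptions made for illustration; the certificate paths are the ones used earlier in this article.

```shell
#!/usr/bin/env bash
# Hypothetical helper names; adjust the endpoint to the node the script runs on.
BACKUP_DIR=${BACKUP_DIR:-/data/backup/etcd}
KEEP=${KEEP:-7}   # assumption: keep the 7 newest snapshots

snapshot_name() {   # e.g. etcd-snap-20220613.db
  printf 'etcd-snap-%s.db\n' "$(date +%Y%m%d)"
}

# prune_snapshots DIR KEEP: delete all but the KEEP newest snapshots.
# File names embed the date, so reverse name order is newest first.
prune_snapshots() {
  local dir=$1 keep=$2 f
  ls -1 "$dir"/etcd-snap-*.db 2>/dev/null | sort -r | tail -n +"$((keep + 1))" |
  while IFS= read -r f; do
    rm -f -- "$f"
  done
}

take_snapshot() {
  ETCDCTL_API=3 etcdctl \
    --endpoints=https://10.4.7.11:2379 \
    --cacert=/etc/kubernetes/pki/etcd/etcd-ca.pem \
    --cert=/etc/kubernetes/pki/etcd/etcd.pem \
    --key=/etc/kubernetes/pki/etcd/etcd-key.pem \
    snapshot save "$BACKUP_DIR/$(snapshot_name)" &&
  prune_snapshots "$BACKUP_DIR" "$KEEP"
}

# Uncomment when the file is used as the cron script:
# take_snapshot
```

The snapshot is only pruned after a successful save, so a failed run never removes the last good backup.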

5. High-availability configuration

5.1 Run on both lb01 and lb02 (Lb)

5.1.1 Install keepalived and haproxy

yum -y install keepalived haproxy

5.1.2 Edit the haproxy configuration file (identical on both hosts)

# cp /etc/haproxy/haproxy.cfg /etc/haproxy/haproxy.cfg.bak

cat >/etc/haproxy/haproxy.cfg<<"EOF"

global

maxconn 2000

ulimit-n 16384

log 127.0.0.1 local0 err

stats timeout 30s

defaults

log global

mode http

option httplog

timeout connect 5000

timeout client 50000

timeout server 50000

timeout http-request 15s

timeout http-keep-alive 15s

frontend monitor-in

bind *:33305

mode http

option httplog

monitor-uri /monitor

frontend k8s-master

bind 0.0.0.0:8443

bind 127.0.0.1:8443

mode tcp

option tcplog

tcp-request inspect-delay 5s

default_backend k8s-master

backend k8s-master

mode tcp

option tcplog

option tcp-check

balance roundrobin

default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100

server k8s-master01 10.4.7.11:6443 check

server k8s-master02 10.4.7.12:6443 check

server k8s-master03 10.4.7.21:6443 check

EOF

5.1.3 Configure keepalived on lb01 (MASTER node)

#cp /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf.bak

cat > /etc/keepalived/keepalived.conf << EOF

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 5

weight -5

fall 2

rise 1

}

vrrp_instance VI_1 {

state MASTER

interface eth1

mcast_src_ip 10.4.7.22

virtual_router_id 51

priority 100

nopreempt

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHA_KA_AUTH

}

virtual_ipaddress {

10.4.7.10

}

track_script {

chk_apiserver

} }

EOF

5.1.4 Configure keepalived on lb02 (BACKUP node)

# cp /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf.bak

cat > /etc/keepalived/keepalived.conf << EOF

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 5

weight -5

fall 2

rise 1

}

vrrp_instance VI_1 {

state BACKUP

interface eth1

mcast_src_ip 10.4.7.200

virtual_router_id 51

priority 50

nopreempt

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHA_KA_AUTH

}

virtual_ipaddress {

10.4.7.10

}

track_script {

chk_apiserver

} }

EOF

5.1.5 Health check script (on both lb hosts)

cat > /etc/keepalived/check_apiserver.sh << EOF

#!/bin/bash

err=0

for k in \$(seq 1 3)

do

check_code=\$(pgrep haproxy)

if [[ \$check_code == "" ]]; then

err=\$(expr \$err + 1)

sleep 1

continue

else

err=0

break

fi

done

if [[ \$err != "0" ]]; then

echo "systemctl stop keepalived"

/usr/bin/systemctl stop keepalived

exit 1

else

exit 0

fi

EOF

# Make the script executable

chmod +x /etc/keepalived/check_apiserver.sh

5.1.6 Start the services

systemctl daemon-reload

systemctl enable --now haproxy

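systemctl enable --now keepalived

5.1.7 Test high availability

# The VIP should answer ping

[root@k8s-node02 ~]# ping 10.4.7.10

# The VIP should accept a telnet connection on port 8443

[root@k8s-node02 ~]# telnet 10.4.7.10 8443

# Shut down the MASTER node and check whether the VIP moves to the BACKUP node

6. k8s component configuration (distinct from section 4)

Create the following directories on all k8s nodes

mkdir -p /etc/kubernetes/manifests/ /etc/systemd/system/kubelet.service.d /var/lib/kubelet /var/log/kubernetes

6.1. Deploy supervisord

Use supervisord in place of systemctl to manage the control-plane processes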

yum install -y https://mirrors.aliyun.com/epel/epel-release-latest-8.noarch.rpm

yum clean all && yum makecache

yum install -y supervisor

Start supervisor

sudo supervisord -c /etc/supervisord.conf

sudo supervisorctl -c /etc/supervisord.conf

Add the commands to the boot script

echo -e "supervisord -c /etc/supervisord.conf\nsupervisorctl -c /etc/supervisord.conf" >> /etc/rc.d/rc.local

6.2. Create the apiserver (all master nodes)

6.2.1 master01 configuration

mkdir -p /opt/kubernetes/

mkdir -p /data/logs/kubernetes/kube-apiserver/

touch /data/logs/kubernetes/kube-apiserver/apiserver.stdout.log

vim /opt/kubernetes/kube-apiserver-startup.sh # in vim, run :set paste before pasting

#!/bin/bash

WORK_DIR=$(dirname $(readlink -f $0))

[ $? -eq 0 ] && cd $WORK_DIR || exit

/usr/local/bin/kube-apiserver \

--v=2 \

--logtostderr=true \

--allow-privileged=true \

--bind-address=0.0.0.0 \

--secure-port=6443 \

--advertise-address=10.4.7.11 \

--service-cluster-ip-range=192.168.0.0/16 \

--feature-gates=IPv6DualStack=true \

--service-node-port-range=30000-32767 \

--etcd-servers=https://10.4.7.11:2379,https://10.4.7.12:2379,https://10.4.7.21:2379 \

--etcd-cafile=/etc/etcd/ssl/etcd-ca.pem \

--etcd-certfile=/etc/etcd/ssl/etcd.pem \

--etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \

--client-ca-file=/etc/kubernetes/pki/ca.pem \

--tls-cert-file=/etc/kubernetes/pki/apiserver.pem \

--tls-private-key-file=/etc/kubernetes/pki/apiserver-key.pem \

--kubelet-client-certificate=/etc/kubernetes/pki/apiserver.pem \

--kubelet-client-key=/etc/kubernetes/pki/apiserver-key.pem \

--service-account-key-file=/etc/kubernetes/pki/sa.pub \

--service-account-signing-key-file=/etc/kubernetes/pki/sa.key \

--service-account-issuer=https://kubernetes.default.svc.cluster.local \

--kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,NodeRestriction,ResourceQuota \

--authorization-mode=Node,RBAC \

--enable-bootstrap-token-auth=true \

--requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem \

--proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.pem \

--proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client-key.pem \

--requestheader-allowed-names=aggregator \

--requestheader-group-headers=X-Remote-Group \

--requestheader-extra-headers-prefix=X-Remote-Extra- \

--requestheader-username-headers=X-Remote-User \

--enable-aggregator-routing=true

# --token-auth-file=/etc/kubernetes/token.csv

chmod +x /opt/kubernetes/kube-apiserver-startup.sh

vim /etc/supervisord.d/kube-apiserver.ini # supervisord configuration file that starts the apiserver

[program:kube-apiserver-7-11]

command=/opt/kubernetes/kube-apiserver-startup.sh

numprocs=1

directory=/opt/kubernetes/

autostart=true

autorestart=true

startsecs=30

startretries=3

exitcodes=0,2

stopsignal=QUIT

stopwaitsecs=10

user=root

redirect_stderr=true

stdout_logfile=/data/logs/kubernetes/kube-apiserver/apiserver.stdout.log

stdout_logfile_maxbytes=64MB

stdout_logfile_backups=5

stdout_capture_maxbytes=1MB

stdout_events_enabled=false

6.2.2 master02 configuration

mkdir -p /opt/kubernetes/

mkdir -p /data/logs/kubernetes/kube-apiserver/

touch /data/logs/kubernetes/kube-apiserver/apiserver.stdout.log

vim /opt/kubernetes/kube-apiserver-startup.sh # in vim, run :set paste before pasting

#!/bin/bash

WORK_DIR=$(dirname $(readlink -f $0))

[ $? -eq 0 ] && cd $WORK_DIR || exit

/usr/local/bin/kube-apiserver \

--v=2 \

--logtostderr=true \

--allow-privileged=true \

--bind-address=0.0.0.0 \

--secure-port=6443 \

--advertise-address=10.4.7.12 \

--service-cluster-ip-range=192.168.0.0/16 \

--feature-gates=IPv6DualStack=true \

--service-node-port-range=30000-32767 \

--etcd-servers=https://10.4.7.11:2379,https://10.4.7.12:2379,https://10.4.7.21:2379 \

--etcd-cafile=/etc/etcd/ssl/etcd-ca.pem \

--etcd-certfile=/etc/etcd/ssl/etcd.pem \

--etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \

--client-ca-file=/etc/kubernetes/pki/ca.pem \

--tls-cert-file=/etc/kubernetes/pki/apiserver.pem \

--tls-private-key-file=/etc/kubernetes/pki/apiserver-key.pem \

--kubelet-client-certificate=/etc/kubernetes/pki/apiserver.pem \

--kubelet-client-key=/etc/kubernetes/pki/apiserver-key.pem \

--service-account-key-file=/etc/kubernetes/pki/sa.pub \

--service-account-signing-key-file=/etc/kubernetes/pki/sa.key \

--service-account-issuer=https://kubernetes.default.svc.cluster.local \

--kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,NodeRestriction,ResourceQuota \

--authorization-mode=Node,RBAC \

--enable-bootstrap-token-auth=true \

--requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem \

--proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.pem \

--proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client-key.pem \

--requestheader-allowed-names=aggregator \

--requestheader-group-headers=X-Remote-Group \

--requestheader-extra-headers-prefix=X-Remote-Extra- \

--requestheader-username-headers=X-Remote-User \

--enable-aggregator-routing=true

# --token-auth-file=/etc/kubernetes/token.csv

chmod +x /opt/kubernetes/kube-apiserver-startup.sh

vim /etc/supervisord.d/kube-apiserver.ini # supervisord configuration file that starts the apiserver

[program:kube-apiserver-7-12]

command=/opt/kubernetes/kube-apiserver-startup.sh

numprocs=1

directory=/opt/kubernetes/

autostart=true

autorestart=true

startsecs=30

startretries=3

exitcodes=0,2

stopsignal=QUIT

stopwaitsecs=10

user=root

redirect_stderr=true

stdout_logfile=/data/logs/kubernetes/kube-apiserver/apiserver.stdout.log

stdout_logfile_maxbytes=64MB

stdout_logfile_backups=5

stdout_capture_maxbytes=1MB

stdout_events_enabled=false

6.2.3 master03 configuration

mkdir -p /opt/kubernetes/

mkdir -p /data/logs/kubernetes/kube-apiserver/

touch /data/logs/kubernetes/kube-apiserver/apiserver.stdout.log

vim /opt/kubernetes/kube-apiserver-startup.sh # in vim, run :set paste before pasting

#!/bin/bash

WORK_DIR=$(dirname $(readlink -f $0))

[ $? -eq 0 ] && cd $WORK_DIR || exit

/usr/local/bin/kube-apiserver \

--v=2 \

--logtostderr=true \

--allow-privileged=true \

--bind-address=0.0.0.0 \

--secure-port=6443 \

--advertise-address=10.4.7.21 \

--service-cluster-ip-range=192.168.0.0/16 \

--feature-gates=IPv6DualStack=true \

--service-node-port-range=30000-32767 \

--etcd-servers=https://10.4.7.11:2379,https://10.4.7.12:2379,https://10.4.7.21:2379 \

--etcd-cafile=/etc/etcd/ssl/etcd-ca.pem \

--etcd-certfile=/etc/etcd/ssl/etcd.pem \

--etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \

--client-ca-file=/etc/kubernetes/pki/ca.pem \

--tls-cert-file=/etc/kubernetes/pki/apiserver.pem \

--tls-private-key-file=/etc/kubernetes/pki/apiserver-key.pem \

--kubelet-client-certificate=/etc/kubernetes/pki/apiserver.pem \

--kubelet-client-key=/etc/kubernetes/pki/apiserver-key.pem \

--service-account-key-file=/etc/kubernetes/pki/sa.pub \

--service-account-signing-key-file=/etc/kubernetes/pki/sa.key \

--service-account-issuer=https://kubernetes.default.svc.cluster.local \

--kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,NodeRestriction,ResourceQuota \

--authorization-mode=Node,RBAC \

--enable-bootstrap-token-auth=true \

--requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem \

--proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.pem \

--proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client-key.pem \

--requestheader-allowed-names=aggregator \

--requestheader-group-headers=X-Remote-Group \

--requestheader-extra-headers-prefix=X-Remote-Extra- \

--requestheader-username-headers=X-Remote-User \

--enable-aggregator-routing=true

chmod +x /opt/kubernetes/kube-apiserver-startup.sh

vim /etc/supervisord.d/kube-apiserver.ini # supervisord configuration file that starts the apiserver

[program:kube-apiserver-7-21]

command=/opt/kubernetes/kube-apiserver-startup.sh

numprocs=1

directory=/opt/kubernetes/

autostart=true

autorestart=true

startsecs=30

startretries=3

exitcodes=0,2

stopsignal=QUIT

stopwaitsecs=10

user=root

redirect_stderr=true

stdout_logfile=/data/logs/kubernetes/kube-apiserver/apiserver.stdout.log

stdout_logfile_maxbytes=64MB

stdout_logfile_backups=5

stdout_capture_maxbytes=1MB

stdout_events_enabled=false

6.2.4 Start the apiserver (all master nodes)

[root@k8s-master01 ~]# supervisorctl update

[root@k8s-master02 ~]# supervisorctl update

[root@k8s-master03 ~]# supervisorctl update

[root@k8s-master01 ~]# supervisorctl status

kube-apiserver-7-11 RUNNING pid 1421, uptime 16:33:20

[root@k8s-master02 ~]# supervisorctl status

kube-apiserver-7-12 RUNNING pid 1989, uptime 0:36:19

[root@k8s-master03 ~]# supervisorctl status

kube-apiserver-7-21 RUNNING pid 1989, uptime 0:36:11

If supervisorctl update reports the following error:

error:, [Errno 2] No such file or directory: file: /usr/lib/python3.6/site-packages/supervisor/xmlrpc.py line: 560

the cause is that supervisord was not started with the commands below:

sudo supervisord -c /etc/supervisord.conf

sudo supervisorctl -c /etc/supervisord.conf
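The three per-node configurations in 6.2 differ only in the --advertise-address value and in the program name suffix, which follows the last two octets of the node IP (10.4.7.11 gives 7-11). Because copying the files between nodes makes a stale address easy to miss, the sketch below renders the ini file from the node IP and checks the startup script. The function names are hypothetical helpers introduced for illustration; the ini contents are the ones listed above.

```shell
#!/usr/bin/env bash
# program_suffix IP: 10.4.7.11 -> 7-11 (last two octets of the node IP)
program_suffix() {
  local ip=$1 a b c d
  IFS=. read -r a b c d <<< "$ip"
  printf '%s-%s\n' "$c" "$d"
}

# render_apiserver_ini IP: print the supervisord program section for that node
render_apiserver_ini() {
  local ip=$1
  cat <<EOF
[program:kube-apiserver-$(program_suffix "$ip")]
command=/opt/kubernetes/kube-apiserver-startup.sh
numprocs=1
directory=/opt/kubernetes/
autostart=true
autorestart=true
startsecs=30
startretries=3
exitcodes=0,2
stopsignal=QUIT
stopwaitsecs=10
user=root
redirect_stderr=true
stdout_logfile=/data/logs/kubernetes/kube-apiserver/apiserver.stdout.log
stdout_logfile_maxbytes=64MB
stdout_logfile_backups=5
stdout_capture_maxbytes=1MB
stdout_events_enabled=false
EOF
}

# advertises SCRIPT IP: succeed only if SCRIPT passes --advertise-address=IP
advertises() {
  grep -qF -- "--advertise-address=$2 " "$1"
}

render_apiserver_ini 10.4.7.21 | head -n 1   # prints [program:kube-apiserver-7-21]
```

On each master, something like `render_apiserver_ini <node IP> > /etc/supervisord.d/kube-apiserver.ini` followed by `advertises /opt/kubernetes/kube-apiserver-startup.sh <node IP>` would confirm that both files refer to the same node before running supervisorctl update.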

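6.3. Configure the kube-controller-manager service

# Configure on all master nodes; the startup script is identical on each

# 172.7.0.0/16 is the pod CIDR; set it to your own range as needed

vim /opt/kubernetes/kube-controller-manager-startup.sh # in vim, run :set paste before pasting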

#!/bin/sh

WORK_DIR=$(dirname $(readlink -f $0))

[ $? -eq 0 ] && cd $WORK_DIR || exit

/usr/local/bin/kube-controller-manager \

--v=2 \

--logtostderr=true \

--bind-address=127.0.0.1 \

--root-ca-file=/etc/kubernetes/pki/ca.pem \

--cluster-signing-cert-file=/etc/kubernetes/pki/ca.pem \

--cluster-signing-key-file=/etc/kubernetes/pki/ca-key.pem \

--service-account-private-key-file=/etc/kubernetes/pki/sa.key \

--kubeconfig=/etc/kubernetes/controller-manager.kubeconfig \

--leader-elect=true \

--use-service-account-credentials=true \

--node-monitor-grace-period=40s \

--node-monitor-period=5s \

--pod-eviction-timeout=2m0s \

--controllers=*,bootstrapsigner,tokencleaner \

--allocate-node-cidrs=true \

--feature-gates=IPv6DualStack=true \

--service-cluster-ip-range=192.168.0.0/16 \

--cluster-cidr=172.7.0.0/16 \

--node-cidr-mask-size-ipv4=24 \

--requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem

mkdir -p /data/logs/kubernetes/kube-controller-manager

chmod u+x /opt/kubernetes/kube-controller-manager-startup.sh

touch /data/logs/kubernetes/kube-controller-manager/controller.stdout.log

vim /etc/supervisord.d/kube-controller-manager.ini # supervisord configuration file that starts controller-manager; change the 7-11 suffix of the program name to match each node

[program:kube-controller-manager-7-11]

command=/opt/kubernetes/kube-controller-manager-startup.sh ; the program (relative uses PATH, can take args)

numprocs=1 ; number of processes copies to start (def 1)

directory=/opt/kubernetes/ ; directory to cwd to before exec (def no cwd)

autostart=true ; start at supervisord start (default: true)

autorestart=true ; restart at unexpected quit (default: true)

startsecs=30 ; number of secs prog must stay running (def. 1)

startretries=3 ; max # of serial start failures (default 3)

exitcodes=0,2 ; 'expected' exit codes for process (default 0,2)

stopsignal=QUIT ; signal used to kill process (default TERM)

stopwaitsecs=10 ; max num secs to wait b4 SIGKILL (default 10)

user=root ; setuid to this UNIX account to run the program

redirect_stderr=true ; redirect proc stderr to stdout (default false)

stdout_logfile=/data/logs/kubernetes/kube-controller-manager/controller.stdout.log ; stdout log path, NONE for none; default AUTO

stdout_logfile_maxbytes=64MB ; max # logfile bytes b4 rotation (default 50MB)

stdout_logfile_backups=4 ; # of stdout logfile backups (default 10)

stdout_capture_maxbytes=1MB ; number of bytes in 'capturemode' (default 0)

stdout_events_enabled=false

6.3.1 Start kube-controller-manager and check its status

[root@k8s-master01 ~]# supervisorctl update

[root@k8s-master02 ~]# supervisorctl update

[root@k8s-master03 ~]# supervisorctl update

[root@k8s-master01 ~]# supervisorctl status

kube-apiserver-7-11 RUNNING pid 2225, uptime 0:58:44

kube-controller-manager-7-11 RUNNING pid 2711, uptime 0:00:38

[root@k8s-master02 ~]# supervisorctl status

kube-apiserver-7-12 RUNNING pid 1989, uptime 1:00:42

kube-controller-manager-7-12 RUNNING pid 2092, uptime 0:02:06

[root@k8s-master03 ~]# supervisorctl status

kube-apiserver-7-21 RUNNING pid 1578, uptime 0:52:27

kube-controller-manager-7-21 RUNNING pid 1657, uptime 0:00:32

6.3. Configure the kube-scheduler service

6.3.1 Configure on all master nodes; the startup script is identical on each

mkdir -p /data/logs/kubernetes/kube-scheduler

touch /data/logs/kubernetes/kube-scheduler/scheduler.stdout.log

vim /opt/kubernetes/kube-scheduler-startup.sh # in vim, run :set paste before pasting

#!/bin/sh

WORK_DIR=$(dirname $(readlink -f $0))

[ $? -eq 0 ] && cd $WORK_DIR || exit

/usr/local/bin/kube-scheduler \

--v=2 \

--logtostderr=true \

--bind-address=127.0.0.1 \

--leader-elect=true \

--kubeconfig=/etc/kubernetes/scheduler.kubeconfig

chmod u+x /opt/kubernetes/kube-scheduler-startup.sh

vim /etc/supervisord.d/kube-scheduler.ini # supervisord configuration file that starts kube-scheduler; change the 7-11 suffix of the program name to match each node

[program:kube-scheduler-7-11]

command=/opt/kubernetes/kube-scheduler-startup.sh

numprocs=1

directory=/opt/kubernetes/

autostart=true

autorestart=true

startsecs=30

startretries=3

exitcodes=0,2

stopsignal=QUIT

stopwaitsecs=10

user=root

redirect_stderr=true

stdout_logfile=/data/logs/kubernetes/kube-scheduler/scheduler.stdout.log

stdout_logfile_maxbytes=64MB

stdout_logfile_backups=4

stdout_capture_maxbytes=1MB

stdout_events_enabled=false

6.3.2 Start the service and check its status

[root@k8s-master01 ~]# supervisorctl update

[root@k8s-master02 ~]# supervisorctl update

[root@k8s-master03 ~]# supervisorctl update

[root@k8s-master01 ~]# supervisorctl status

kube-apiserver-7-11 RUNNING pid 2225, uptime 2:38:21

kube-controller-manager-7-11 RUNNING pid 2711, uptime 1:40:15

kube-scheduler-7-11 RUNNING pid 2829, uptime 0:01:43

[root@k8s-master02 ~]# supervisorctl status

kube-apiserver-7-12 RUNNING pid 1989, uptime 2:35:56

kube-controller-manager-7-12 RUNNING pid 2092, uptime 1:37:20

kube-scheduler-7-12 RUNNING pid 2201, uptime 0:02:00

[root@k8s-master03 ~]# supervisorctl status

kube-apiserver-7-21 RUNNING pid 1578, uptime 2:27:49

kube-controller-manager-7-21 RUNNING pid 1657, uptime 1:35:54

kube-scheduler-7-21 RUNNING pid 1757, uptime 0:02:11

7. TLS Bootstrapping configuration

7.1 Configure on master01

cd /root/bootstrap

kubectl config set-cluster kubernetes \

--certificate-authority=/etc/kubernetes/pki/ca.pem \

--embed-certs=true --server=https://10.4.7.10:8443 \

--kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig

kubectl config set-credentials tls-bootstrap-token-user \

--token=c8ad9c.2e4d610cf3e7426e \

--kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig

kubectl config set-context tls-bootstrap-token-user@kubernetes \

--cluster=kubernetes \

--user=tls-bootstrap-token-user \

--kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig

kubectl config use-context tls-bootstrap-token-user@kubernetes \

--kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig

# The token is defined in bootstrap.secret.yaml; if you change it, change it in that file as well
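Kubernetes bootstrap tokens have the form `<token-id>.<token-secret>`, with a 6-character id and a 16-character secret, both lowercase alphanumeric, and the matching Secret is named bootstrap-token-<token-id>. If you replace the token, a quick format check like the sketch below can catch typos before they end up in two files. The function name `valid_bootstrap_token` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# valid_bootstrap_token TOKEN: succeed if TOKEN matches [a-z0-9]{6}.[a-z0-9]{16}
# (hypothetical helper name used only for this sketch)
valid_bootstrap_token() {
  [[ $1 =~ ^[a-z0-9]{6}\.[a-z0-9]{16}$ ]]
}

token=c8ad9c.2e4d610cf3e7426e   # the token used in this article
if valid_bootstrap_token "$token"; then
  echo "token id: ${token%%.*}"
  echo "secret name: bootstrap-token-${token%%.*}"
else
  echo "invalid bootstrap token format: $token" >&2
fi
```

For the token above this reports the id c8ad9c, which is the suffix of the Secret created from bootstrap.secret.yaml.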

执行 kubectl get cs 发现会报错 The connection to the server localhost:8080 was refused - did you specify the right host or port?

原因是kubectl 默认使用/root/.kube/config文件中,配置的集群角色的账户,跟api通信,config文件其实就是我们上述配置的bootstrap-kubelet.kubeconfig

mkdir -p /root/.kube ; cp /etc/kubernetes/admin.kubeconfig /root/.kube/config[root@k8s-master01 ~]# cd /root/.kube/ [root@k8s-master01 .kube]# kubectl config view apiVersion: v1 clusters: - cluster: certificate-authority-data: DATA+OMITTED server: https://10.4.7.10:8443 name: kubernetes contexts: - context: cluster: kubernetes user: kubernetes-admin name: kubernetes-admin@kubernetes current-context: kubernetes-admin@kubernetes kind: Config preferences: {} users: - name: kubernetes-admin user: client-certificate-data: REDACTED client-key-data: REDACTED [root@k8s-master01 .kube]#

7.2 Check the cluster status; if everything is healthy, continue with the following steps

[root@k8s-master01 .kube]# kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-1 Healthy {"health":"true","reason":""}

etcd-2 Healthy {"health":"true","reason":""}

etcd-0 Healthy {"health":"true","reason":""}

[root@k8s-master01 .kube]# cd /root/bootstrap/

[root@k8s-master01 bootstrap]# kubectl create -f bootstrap.secret.yaml

secret/bootstrap-token-c8ad9c created

clusterrolebinding.rbac.authorization.k8s.io/kubelet-bootstrap created

clusterrolebinding.rbac.authorization.k8s.io/node-autoapprove-bootstrap created

clusterrolebinding.rbac.authorization.k8s.io/node-autoapprove-certificate-rotation created

clusterrole.rbac.authorization.k8s.io/system:kube-apiserver-to-kubelet created

clusterrolebinding.rbac.authorization.k8s.io/system:kube-apiserver created

8. Node configuration

8.1. Copy the certificates from master01 to the other nodes

cd /etc/kubernetes/

for NODE in k8s-master02 k8s-master03 k8s-node01 k8s-node02; do ssh $NODE mkdir -p /etc/kubernetes/pki; for FILE in pki/ca.pem pki/ca-key.pem pki/front-proxy-ca.pem bootstrap-kubelet.kubeconfig; do scp /etc/kubernetes/$FILE $NODE:/etc/kubernetes/${FILE}; done; done

8.2. kubelet configuration

8.2.1 Create the required directories on all k8s nodes

mkdir -p /var/lib/kubelet /var/log/kubernetes /etc/systemd/system/kubelet.service.d /etc/kubernetes/manifests/

vi /usr/lib/systemd/system/kubelet.service # configure the kubelet service on all k8s nodes; use :set paste in vi before pasting

[Unit]

Description=Kubernetes Kubelet

Documentation=https://github.com/kubernetes/kubernetes

After=containerd.service

Requires=containerd.service

[Service]

ExecStart=/usr/local/bin/kubelet \

--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig \

--kubeconfig=/etc/kubernetes/kubelet.kubeconfig \

--config=/etc/kubernetes/kubelet-conf.yml \

--container-runtime=remote \

--runtime-request-timeout=15m \

--container-runtime-endpoint=unix:///run/containerd/containerd.sock \

--cgroup-driver=systemd \

--node-labels=node.kubernetes.io/node=''

# --feature-gates=IPv6DualStack=true

StandardOutput=syslog

StandardError=syslog

SyslogIdentifier=kubelet

[Install]

WantedBy=multi-user.target
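Several flags in the unit file above point at files on disk. One way to see which of them already exist is to pull the absolute paths out of the unit and test each one. This is a rough sketch: `unit_flag_paths` is a hypothetical helper, and its pattern only catches values of the form `--flag=/absolute/path`. Note that /etc/kubernetes/kubelet.kubeconfig is expected to be missing before the first start, because kubelet writes it itself once TLS bootstrapping with bootstrap-kubelet.kubeconfig succeeds.

```shell
# Hypothetical helper: list "--flag=/path" values found in a unit file ($1),
# one "flag path" pair per line. Values such as unix:///... are not matched.
unit_flag_paths() {
  grep -Eo -- '--[a-z-]+=/[^ \\]+' "$1" | sed -e 's/^--//' -e 's/=/ /'
}

unit=/usr/lib/systemd/system/kubelet.service
if [ -r "$unit" ]; then
  unit_flag_paths "$unit" | while read -r flag path; do
    if [ -e "$path" ]; then state=present; else state=missing; fi
    printf '%-22s %-50s %s\n' "$flag" "$path" "$state"
  done
fi
```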

vi /etc/rsyslog.d/kubelet.conf

if $programname == 'kubelet' then {

/data/logs/kubernetes/kube-kubelet/kubelet.stdout.log

}

mkdir -p /data/logs/kubernetes/kube-kubelet/

touch /data/logs/kubernetes/kube-kubelet/kubelet.stdout.log

sudo systemctl restart rsyslog

8.2.2 Create the kubelet configuration file on all k8s nodes

vi /etc/kubernetes/kubelet-conf.yml # set paste

apiVersion: kubelet.config.k8s.io/v1beta1

kind: KubeletConfiguration

address: 0.0.0.0

port: 10250

readOnlyPort: 10255

authentication:

  anonymous:

    enabled: false

  webhook:

    cacheTTL: 2m0s

    enabled: true

  x509:

    clientCAFile: /etc/kubernetes/pki/ca.pem

authorization:

  mode: Webhook

  webhook:

    cacheAuthorizedTTL: 5m0s

    cacheUnauthorizedTTL: 30s

cgroupDriver: systemd

cgroupsPerQOS: true

clusterDNS:

- 192.168.0.2

clusterDomain: cluster.local

containerLogMaxFiles: 5

containerLogMaxSize: 10Mi

contentType: application/vnd.kubernetes.protobuf

cpuCFSQuota: true

cpuManagerPolicy: none

cpuManagerReconcilePeriod: 10s

enableControllerAttachDetach: true

enableDebuggingHandlers: true

enforceNodeAllocatable:

- pods

eventBurst: 10

eventRecordQPS: 5

evictionHard:

  imagefs.available: 15%

  memory.available: 100Mi

  nodefs.available: 10%

  nodefs.inodesFree: 5%

evictionPressureTransitionPeriod: 5m0s

failSwapOn: true

fileCheckFrequency: 20s

hairpinMode: promiscuous-bridge

healthzBindAddress: 127.0.0.1

healthzPort: 10248

httpCheckFrequency: 20s

imageGCHighThresholdPercent: 85

imageGCLowThresholdPercent: 80

imageMinimumGCAge: 2m0s

iptablesDropBit: 15

iptablesMasqueradeBit: 14

kubeAPIBurst: 10

kubeAPIQPS: 5

makeIPTablesUtilChains: true

maxOpenFiles: 1000000

maxPods: 110

nodeStatusUpdateFrequency: 10s

oomScoreAdj: -999

podPidsLimit: -1

registryBurst: 10

registryPullQPS: 5

resolvConf: /etc/resolv.conf

rotateCertificates: true

runtimeRequestTimeout: 2m0s

serializeImagePulls: true

staticPodPath: /etc/kubernetes/manifests

streamingConnectionIdleTimeout: 4h0m0s

syncFrequency: 1m0s

volumeStatsAggPeriod: 1m0s

8.2.3 Start kubelet
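Before starting kubelet, it can be worth confirming that the `clusterDNS` address from the config above (192.168.0.2) lies inside the service network used in this article (192.168.0.0/16), since that address is normally the ClusterIP of the cluster DNS Service. A minimal sketch of the check in plain shell arithmetic; `ip_to_int` and `ip_in_cidr` are hypothetical helpers and handle IPv4 only:

```shell
# Hypothetical helpers, IPv4 only.

# Convert the dotted-quad address $1 to a 32-bit integer.
ip_to_int() {
  old_ifs=$IFS; IFS=.
  set -- $1            # split the address into its four octets
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeeds if address $1 is inside CIDR $2 (for example 192.168.0.0/16).
ip_in_cidr() {
  net=${2%/*}
  bits=${2#*/}
  mask=$(( (4294967295 << (32 - bits)) & 4294967295 ))
  [ $(( $(ip_to_int "$1") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}

if ip_in_cidr 192.168.0.2 192.168.0.0/16; then
  echo "clusterDNS is inside the service CIDR"
else
  echo "clusterDNS is outside the service CIDR" >&2
fi
```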

systemctl daemon-reload

systemctl restart kubelet

systemctl enable --now kubelet

8.2.4 View the cluster
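When checking the cluster, the STATUS column is the part to watch. The sketch below lists the nodes that are not `Ready`, reading `kubectl get node --no-headers` output; `not_ready_nodes` is a hypothetical helper. At this stage every node is expected to be listed, which is typically the case until a CNI network plugin has been deployed.

```shell
# Hypothetical helper: read `kubectl get node --no-headers` output on stdin
# and print the names of nodes whose STATUS is not exactly "Ready"
# (so "Ready,SchedulingDisabled" is reported as well).
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}

if command -v kubectl >/dev/null 2>&1; then
  kubectl get node --no-headers | not_ready_nodes
fi
```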

[root@k8s-master01 rsyslog.d]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master01 NotReady <none> 108m v1.24.0

k8s-master02 NotReady <none> 10m v1.24.0

k8s-master03 NotReady <none> 7m5s v1.24.0

k8s-node01 NotReady <none> 4m8s v1.24.0

k8s-node02 NotReady <none> 11s v1.24.0

8.3. kube-proxy configuration

8.3.1 Perform this step on master01 only

cp /etc/kubernetes/admin.kubeconfig /etc/kubernetes/kube-proxy.kubeconfig

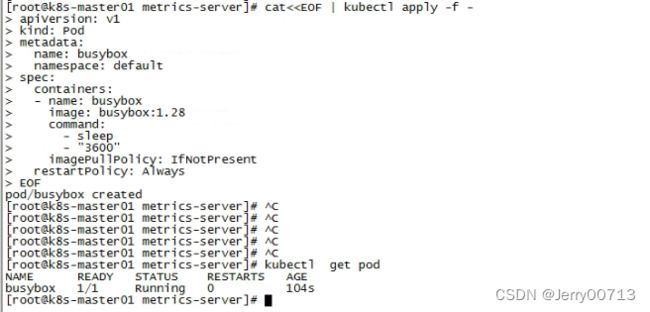

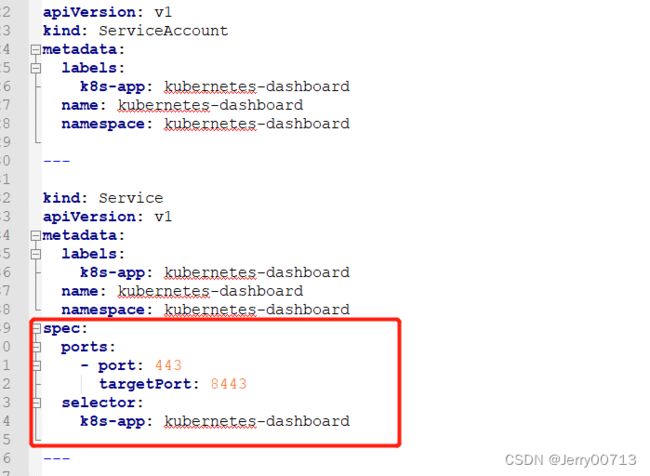

8.3.2 Send the kubeconfig to the other nodes