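Deploying k8s with kubeadm (1)

2 K8s Installation and Deployment

2.1 Installation Methods

2.1.1 Deployment Tools

Kubernetes can be installed with batch deployment tools (ansible / saltstack), from manually deployed binaries, with kubeadm, or with apt-get/yum. The components then run on the host as daemons, started by service scripts in the same way as Nginx.

Binary deployment: the best compatibility. It is like running an ordinary service on the host, and that service can use many features of the host kernel directly.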

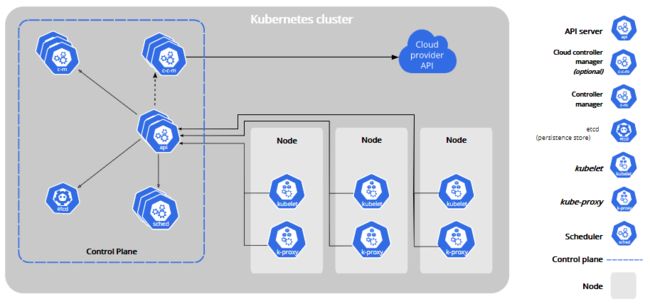

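kubeadm deployment: the components run as containers. Many containers are started on the host, for example an api-server container and a controller-manager container. As a result the components run in a restricted container environment: only the commands available inside the container can be used, and many host kernel features are not available.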

2.1.2 kubeadm

Note: k8s deployed with kubeadm is used here only for test environments, not for production; production Kubernetes clusters are deployed from binaries.

https://kubernetes.io/zh/docs/setup/production-environment/tools/kubeadm/create-cluster-kubeadm/

https://v1-18.docs.kubernetes.io/zh/docs/setup/independent/create-cluster-kubeadm/

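Use kubeadm, the official k8s deployment tool, to install automatically: install docker and the other components on the master and node machines, then initialize the cluster. Both the control-plane services on the masters and the services on the nodes run as pods.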

2.1.3 Installation Notes

Points to note

- Disable swap

swapoff -a

If swap is not disabled, kubeadm fails with the following error:

[root@K8s-node5 ~]# kubeadm join 172.18.8.111:6443 --token e1zb26.ujwhegxxi452w53m \

> --discovery-token-ca-cert-hash sha256:3fe215f2b665c40659a06ab1a874e44b64bf784fe78a3d7f2cbb792f26b34ada

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR Swap]: running with swap on is not supported. Please disable swap

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

To see the stack trace of this error execute with --v=5 or higher

[root@K8s-node5 ~]#
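swapoff -a only lasts until the next reboot. A minimal sketch for making it persistent, assuming the swap entries live in /etc/fstab; the helper name disable_swap_in_fstab is hypothetical. It comments out every active swap line and keeps a .bak copy next to the file.

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out every active swap entry in an fstab file
# so swap stays off after a reboot (swapoff -a alone does not persist).
# $1 = fstab path (default /etc/fstab); a backup is written to "$1.bak".
disable_swap_in_fstab() {
  local fstab="${1:-/etc/fstab}"
  cp -p "$fstab" "${fstab}.bak"
  # Lines that are not comments and whose 3rd field (fs type) is "swap"
  # get a leading "#"; everything else is printed unchanged.
  awk '!/^[ \t]*#/ && $3 == "swap" { print "#" $0; next } { print }' \
    "${fstab}.bak" > "$fstab"
}
```

Typical use on each node: `swapoff -a && disable_swap_in_fstab`.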

- Disable SELinux

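# First switch it off temporarily (setenforce 0 puts SELinux into permissive mode immediately, no reboot needed)

setenforce 0

# Then disable SELinux permanently so that it does not return to enforcing mode after a reboot

vim /etc/sysconfig/selinux

SELINUX=disabled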
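The vim edit can also be done non-interactively. A sketch under the assumption that the file contains a standard SELINUX=... line; the helper name is hypothetical. It defaults to /etc/selinux/config because on CentOS 7 /etc/sysconfig/selinux is only a symlink to it, and `sed -i` on the symlink would replace the link with a regular file.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set SELINUX=disabled in the SELinux config file.
# $1 = config path (default /etc/selinux/config, the real file behind the
# /etc/sysconfig/selinux symlink on CentOS 7).
disable_selinux_config() {
  local conf="${1:-/etc/selinux/config}"
  # Only the SELINUX= line matches; SELINUXTYPE= is left untouched.
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$conf"
}
```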

- Disable the firewall (iptables / firewalld)

systemctl stop iptables

systemctl stop firewalld

systemctl disable firewalld

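- Tune kernel parameters and resource limits

# With these settings, packets forwarded by a layer-2 bridge are matched by the host's iptables FORWARD rules

# Note: if these keys cannot be found, look for them again after docker is installed: sysctl -a | grep bridge-nf-call-iptables

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1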

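- Enable IP forwarding (needed on CentOS; on Ubuntu it is normally already on, for example Docker enables it when it starts)

# /etc/sysctl.conf should contain the following line

cat /etc/sysctl.conf

net.ipv4.ip_forward = 1

# Apply the settings

sysctl -p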
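The bridge-nf-call keys only exist once the br_netfilter kernel module is loaded, which is why they may be missing before Docker is installed. A minimal sketch that persists the module and all three settings as drop-in files, assuming a systemd-based system that reads /etc/modules-load.d and /etc/sysctl.d; the file name k8s.conf and the helper are hypothetical, and the directories are parameters so it can be tried on a scratch directory first.

```shell
#!/usr/bin/env bash
# Hypothetical helper: persist the kernel settings from the notes above.
#   $1 = modules-load.d directory (default /etc/modules-load.d)
#   $2 = sysctl.d directory       (default /etc/sysctl.d)
write_k8s_kernel_conf() {
  local modules_dir="${1:-/etc/modules-load.d}"
  local sysctl_dir="${2:-/etc/sysctl.d}"
  mkdir -p "$modules_dir" "$sysctl_dir"
  # Load br_netfilter at boot; without it the bridge-nf-call-* keys do not exist.
  echo "br_netfilter" > "$modules_dir/k8s.conf"
  cat > "$sysctl_dir/k8s.conf" <<'EOF'
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward = 1
EOF
}
```

On a real host this would be followed by `modprobe br_netfilter` and `sysctl --system`, which loads every file under the sysctl.d directories.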

2.2 Deployment Process

Component planning and version selection

# Choosing a CRI runtime

https://kubernetes.io/zh/docs/setup/production-environment/container-runtimes/

# Choosing a CNI plugin

https://kubernetes.io/zh/docs/concepts/cluster-administration/addons/

2.2.1 Steps

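- Prepare the base environment

- Deploy the highly available haproxy reverse proxy and Harbor; the proxy gives the control-plane API a highly available entry point

- Install specific versions of kubeadm, kubelet, kubectl and docker on all master nodes

- Install specific versions of kubeadm, kubelet and docker on all node (worker) nodes; kubectl is optional on nodes (not recommended for security reasons) and only needed if you want to run kubectl on a node to manage the cluster and pods

- Run kubeadm init on a master node

- Verify the master node status

- On each node, run kubeadm join to join the k8s master (this needs a token generated on the master)

- Verify the node status

- Create pods and test network connectivity

- Deploy the Dashboard web UI

- k8s cluster upgrade example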

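The latest official release is currently 1.21.3. Because a version upgrade is demonstrated later, installing 1.21.x would leave nothing newer to upgrade to, so 1.20.x is installed here.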

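2.2.2 Base Environment Preparation

Server environment

Install a minimal base system. On CentOS, disable the firewall, SELinux and swap; then update the package sources, set up time synchronization, install common tools, and reboot to verify the base configuration. CentOS 7.5 or later is recommended; for Ubuntu, 18.04 or a later stable release.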

| Role | IP address | Software version | OS version | Recommended production spec |
|---|---|---|---|---|
| K8s-master1 | 172.18.8.9 | kubeadm-1.20 | Ubuntu 18.04 | 8 CPU + 16G memory + 100G disk |
| K8s-master2 | 172.18.8.19 | kubeadm-1.20 | Ubuntu 18.04 | 8 CPU + 16G memory + 100G disk |
| K8s-master3 | 172.18.8.29 | kubeadm-1.20 | Ubuntu 18.04 | 8 CPU + 16G memory + 100G disk |
| K8s-node1 | 172.18.8.39 | | Ubuntu 18.04 | 96 CPU + 386G memory + SSD + 10 GbE |
| K8s-node2 | 172.18.8.49 | | Ubuntu 18.04 | 96 CPU + 386G memory + SSD + 10 GbE |
| K8s-node3 | 172.18.8.59 | | Ubuntu 18.04 | 96 CPU + 386G memory + SSD + 10 GbE |
| K8s-node4 | 172.18.8.69 | | Ubuntu 18.04 | 96 CPU + 386G memory + SSD + 10 GbE |
| Harbor1 | 172.18.8.214 (VIP 172.18.8.9) | | Ubuntu 18.04 | 8 CPU + 16G memory + 2T disk |
| Harbor2 | 172.18.8.215 (VIP 172.18.8.9) | | Ubuntu 18.04 | 8 CPU + 16G memory + 2T disk |
| Ha+keepalived1 | 172.18.8.79 | | Ubuntu 18.04 | 8 CPU + 16G memory + 100G disk |
| Ha+keepalived2 | 172.18.8.89 | | Ubuntu 18.04 | 8 CPU + 16G memory + 100G disk |

# Set the hostname on each server (the server's IP is given in the comment)
hostnamectl set-hostname K8s-master1     # 172.18.8.9
hostnamectl set-hostname K8s-master2     # 172.18.8.19
hostnamectl set-hostname K8s-master3     # 172.18.8.29
hostnamectl set-hostname K8s-node1       # 172.18.8.39
hostnamectl set-hostname K8s-node2       # 172.18.8.49
hostnamectl set-hostname K8s-node3       # 172.18.8.59
hostnamectl set-hostname K8s-node4       # 172.18.8.69
hostnamectl set-hostname ha_keepalive1   # 172.18.8.79
hostnamectl set-hostname ha_keepalive2   # 172.18.8.89

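2.3 Highly Available Reverse Proxy

Use keepalived and haproxy to build a highly available reverse proxy in front of the k8s apiserver.

2.3.1 Keepalived Installation and Configuration

Install and configure keepalived, then test failover of the VIP.

Node 1: install and configure keepalived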

[root@ha_keepalive1 ~]# apt -y install keepalived

[root@ha_keepalive1 ~]# cp /usr/share/doc/keepalived/samples/keepalived.conf.vrrp /etc/keepalived/keepalived.conf

[root@ha_keepalive1 ~]# grep -Ev "#|^$" /etc/keepalived/keepalived.conf

! Configuration File for keepalived
global_defs {
   notification_email {
     acassen
   }
   notification_email_from [email protected]
   smtp_server 192.168.200.1
   smtp_connect_timeout 30
   router_id LVS_DEVEL
}
vrrp_instance VI_1 {
    state MASTER
    interface eth0
    garp_master_delay 10
    smtp_alert
    virtual_router_id 51
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        172.18.8.111/24 dev eth0 label eth0:1 # haproxy:bind 172.18.8.111:6443
        172.18.8.222/24 dev eth0 label eth0:2 # haproxy:bind 172.18.8.222:80
    }
}

[root@ha_keepalive1 ~]# systemctl enable --now keepalived

Node 2: install and configure keepalived

[root@ha_keepalive2 ~]# apt -y install keepalived

[root@ha_keepalive2 ~]# cp /usr/share/doc/keepalived/samples/keepalived.conf.vrrp /etc/keepalived/keepalived.conf

[root@ha_keepalive2 ~]# grep -Ev "#|^$" /etc/keepalived/keepalived.conf

! Configuration File for keepalived
global_defs {
   notification_email {
     acassen
   }
   notification_email_from [email protected]
   smtp_server 192.168.200.1
   smtp_connect_timeout 30
   router_id LVS_DEVEL
}
vrrp_instance VI_1 {
    state BACKUP              # changed to the backup role
    interface eth0
    garp_master_delay 10
    smtp_alert
    virtual_router_id 51      # must match the MASTER, otherwise both nodes take the VIP (split brain)
    priority 80               # lower priority than the MASTER
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111        # must match the MASTER, otherwise both nodes take the VIP (split brain)
    }
    virtual_ipaddress {
        172.18.8.111/24 dev eth0 label eth0:1 # haproxy:bind 172.18.8.111:6443
        172.18.8.222/24 dev eth0 label eth0:2 # haproxy:bind 172.18.8.222:80
    }
}

[root@ha_keepalive2 ~]# systemctl enable --now keepalived
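To test VIP failover, check which node holds 172.18.8.111, stop keepalived on that node, and confirm the address moves to the other one. A hedged sketch of the check itself: the hypothetical helper has_vip reads `ip -o -4 addr show` output on stdin and succeeds only if the exact VIP is present.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if the given IPv4 address appears in
# "ip -o -4 addr show" output read from stdin.
# Example: ip -o -4 addr show dev eth0 | has_vip 172.18.8.111
has_vip() {
  local vip="$1"
  # In one-line (-o) output the 4th field is ADDRESS/PREFIX, e.g. 172.18.8.111/24.
  # Compare the address part exactly, so 172.18.8.11 does not match .111.
  awk -v vip="$vip" '{ split($4, a, "/"); if (a[1] == vip) found = 1 } END { exit !found }'
}
```

Run it on both ha_keepalive1 and ha_keepalive2 before and after `systemctl stop keepalived` on the MASTER; exactly one node should report the VIP at a time.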

2.3.2 HAProxy Installation and Configuration

Node 1: install and configure haproxy

[root@ha_keepalive1 ~]# apt -y install haproxy

[root@ha_keepalive1 ~]# grep -Ev "#|^$" /etc/haproxy/haproxy.cfg

global
    log /dev/log local0
    log /dev/log local1 notice
    chroot /var/lib/haproxy
    stats socket /run/haproxy/admin.sock mode 660 level admin expose-fd listeners
    stats timeout 30s
    user haproxy
    group haproxy
    daemon
    ca-base /etc/ssl/certs
    crt-base /etc/ssl/private
    ssl-default-bind-ciphers ECDH+AESGCM:DH+AESGCM:ECDH+AES256:DH+AES256:ECDH+AES128:DH+AES:RSA+AESGCM:RSA+AES:!aNULL:!MD5:!DSS
    ssl-default-bind-options no-sslv3
defaults
    log global
    mode http
    option httplog
    option dontlognull
    timeout connect 5000
    timeout client 50000
    timeout server 50000
    errorfile 400 /etc/haproxy/errors/400.http
    errorfile 403 /etc/haproxy/errors/403.http
    errorfile 408 /etc/haproxy/errors/408.http
    errorfile 500 /etc/haproxy/errors/500.http
    errorfile 502 /etc/haproxy/errors/502.http
    errorfile 503 /etc/haproxy/errors/503.http
    errorfile 504 /etc/haproxy/errors/504.http
listen stats
    mode http
    bind 0.0.0.0:9999
    stats enable
    log global
    stats uri /haproxy-status
    stats auth haadmin:123456
listen k8s-6443
    bind 172.18.8.111:6443
    mode tcp
    balance roundrobin
    server 172.18.8.9 172.18.8.9:6443 check inter 3s fall 3 rise 5 # k8s-master1
    server 172.18.8.19 172.18.8.19:6443 check inter 3s fall 3 rise 5 # k8s-master2
    server 172.18.8.29 172.18.8.29:6443 check inter 3s fall 3 rise 5 # k8s-master3
listen nginx-80
    bind 172.18.8.222:80
    mode tcp
    balance roundrobin
    server 172.18.8.39 172.18.8.39:30004 check inter 3s fall 3 rise 5 # k8s-node1
    server 172.18.8.49 172.18.8.49:30004 check inter 3s fall 3 rise 5 # k8s-node2
    server 172.18.8.59 172.18.8.59:30004 check inter 3s fall 3 rise 5 # k8s-node3
    server 172.18.8.69 172.18.8.69:30004 check inter 3s fall 3 rise 5 # k8s-node4

[root@ha_keepalive1 ~]# systemctl enable --now haproxy

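# Without the following kernel parameter, haproxy cannot bind to the VIP while the VIP is not on this host, so its ports do not show up as listening; net.ipv4.ip_nonlocal_bind = 1 allows binding to a non-local address

[root@ha_keepalive1 ~]# vim /etc/sysctl.conf

[root@ha_keepalive1 ~]# sysctl -p

net.ipv4.ip_nonlocal_bind = 1

net.ipv4.ip_forward = 1

[root@ha_keepalive1 ~]# systemctl restart haproxy.service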
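Editing /etc/sysctl.conf by hand on both proxy hosts is easy to get wrong when repeated. A sketch of an idempotent alternative; set_sysctl is a hypothetical helper that rewrites an existing uncommented entry for the key or appends one if none exists, so running it twice leaves a single line. Apply the result with sysctl -p as above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set "KEY = VALUE" in a sysctl config file.
#   set_sysctl net.ipv4.ip_nonlocal_bind 1 [/etc/sysctl.conf]
set_sysctl() {
  local key="$1" value="$2" file="${3:-/etc/sysctl.conf}"
  touch "$file"
  # Escape the dots in the key so the regex matches them literally.
  local esc
  esc="$(printf '%s' "$key" | sed 's/\./\\./g')"
  local re="^[[:space:]]*${esc}[[:space:]]*="
  if grep -q "$re" "$file"; then
    # Replace the existing (uncommented) entry in place.
    sed -i "s|${re}.*|${key} = ${value}|" "$file"
  else
    echo "${key} = ${value}" >> "$file"
  fi
}
```

Typical use: `set_sysctl net.ipv4.ip_nonlocal_bind 1 && set_sysctl net.ipv4.ip_forward 1 && sysctl -p`.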

Node 2: install and configure haproxy

[root@ha_keepalive2 ~]# apt -y install haproxy

[root@ha_keepalive2 ~]# grep -Ev "#|^$" /etc/haproxy/haproxy.cfg

global
    log /dev/log local0
    log /dev/log local1 notice
    chroot /var/lib/haproxy
    stats socket /run/haproxy/admin.sock mode 660 level admin expose-fd listeners
    stats timeout 30s
    user haproxy
    group haproxy
    daemon
    ca-base /etc/ssl/certs
    crt-base /etc/ssl/private
    ssl-default-bind-ciphers ECDH+AESGCM:DH+AESGCM:ECDH+AES256:DH+AES256:ECDH+AES128:DH+AES:RSA+AESGCM:RSA+AES:!aNULL:!MD5:!DSS
    ssl-default-bind-options no-sslv3
defaults
    log global
    mode http
    option httplog
    option dontlognull
    timeout connect 5000
    timeout client 50000
    timeout server 50000
    errorfile 400 /etc/haproxy/errors/400.http
    errorfile 403 /etc/haproxy/errors/403.http
    errorfile 408 /etc/haproxy/errors/408.http
    errorfile 500 /etc/haproxy/errors/500.http
    errorfile 502 /etc/haproxy/errors/502.http
    errorfile 503 /etc/haproxy/errors/503.http
    errorfile 504 /etc/haproxy/errors/504.http
listen stats
    mode http
    bind 0.0.0.0:9999
    stats enable
    log global
    stats uri /haproxy-status
    stats auth haadmin:123456
listen k8s-6443
    bind 172.18.8.111:6443
    mode tcp
    balance roundrobin
    server 172.18.8.9 172.18.8.9:6443 check inter 3s fall 3 rise 5 # k8s-master1
    server 172.18.8.19 172.18.8.19:6443 check inter 3s fall 3 rise 5 # k8s-master2
    server 172.18.8.29 172.18.8.29:6443 check inter 3s fall 3 rise 5 # k8s-master3
listen nginx-80
    bind 172.18.8.222:80
    mode tcp
    balance roundrobin
    server 172.18.8.39 172.18.8.39:30004 check inter 3s fall 3 rise 5 # k8s-node1
    server 172.18.8.49 172.18.8.49:30004 check inter 3s fall 3 rise 5 # k8s-node2
    server 172.18.8.59 172.18.8.59:30004 check inter 3s fall 3 rise 5 # k8s-node3
    server 172.18.8.69 172.18.8.69:30004 check inter 3s fall 3 rise 5 # k8s-node4

[root@ha_keepalive2 ~]# systemctl enable --now haproxy

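# Without the following kernel parameter, haproxy cannot bind to the VIP while the VIP is not on this host, so its ports do not show up as listening; net.ipv4.ip_nonlocal_bind = 1 allows binding to a non-local address

[root@ha_keepalive2 ~]# vim /etc/sysctl.conf

[root@ha_keepalive2 ~]# sysctl -p

net.ipv4.ip_nonlocal_bind = 1

net.ipv4.ip_forward = 1

[root@ha_keepalive2 ~]# systemctl restart haproxy.service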

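2.4 Install Harbor

See the separate Harbor installation notes.

2.5 Install kubeadm and Other Components

Install kubeadm, kubelet, kubectl, docker and related components on the master and node machines; the load-balancer servers do not need them.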

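2.5.1 Version Selection

Install docker on every master node and every node. The Kubernetes 1.17 changelog raises the latest validated Docker version to 19.03:

https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/CHANGELOG-1.17.md#v11711

Update the latest validated version of Docker to 19.03 (#84476, @neolit123)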

2.5.2 Install docker

# Remove old docker packages

sudo apt-get -y remove docker docker-engine docker.io containerd runc

# Install the required system tools

sudo apt-get update

sudo apt -y install apt-transport-https ca-certificates curl gnupg2 software-properties-common

# Import the GPG key

curl -fsSL http://mirrors.aliyun.com/docker-ce/linux/ubuntu/gpg | sudo apt-key add -

# Add the repository

sudo add-apt-repository \
"deb [arch=amd64] https://mirrors.tuna.tsinghua.edu.cn/docker-ce/linux/ubuntu \
$(lsb_release -cs) \
stable"

# Refresh the package index

apt-get update

# List the docker versions available for installation

apt-cache madison docker-ce docker-ce-cli

# Install docker 19.03.x

apt install -y docker-ce=5:19.03.15~3-0~ubuntu-bionic docker-ce-cli=5:19.03.15~3-0~ubuntu-bionic

# Start docker and enable it at boot

systemctl enable --now docker

# Check the docker version

docker version

# Show detailed docker information

docker info
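The kubeadm preflight warning shown in 2.1.3 ([WARNING IsDockerSystemdCheck]) recommends the systemd cgroup driver instead of cgroupfs. A minimal sketch, assuming Docker reads /etc/docker/daemon.json (its default location); the helper name is hypothetical. Doing this before kubeadm init/join is simplest, since Docker and kubelet must use the same cgroup driver. Section 2.6.8 later rewrites this same file with `cat >`, so the exec-opts entry has to be carried into that version too.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a daemon.json that switches Docker to the
# systemd cgroup driver recommended by the kubeadm preflight check.
# Note: this overwrites the file; merge by hand if it already has settings.
write_docker_daemon_json() {
  local path="${1:-/etc/docker/daemon.json}"
  mkdir -p "$(dirname "$path")"
  cat > "$path" <<'EOF'
{
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
}
```

After `write_docker_daemon_json && systemctl restart docker`, `docker info | grep -i "cgroup driver"` should report systemd.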

2.5.3 Install kubelet, kubeadm and kubectl on All Nodes

On every master and node, configure the Aliyun package mirror and install the components; kubectl is optional on nodes.

Configure a Kubernetes apt mirror (used to install kubelet, kubeadm and kubectl).

The Tsinghua (TUNA) Kubernetes mirror can be used as well; the commands below import the GPG key from Aliyun and use the TUNA repository.

apt-get update && apt -y install apt-transport-https

curl -s https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | sudo apt-key add -

echo "deb https://mirrors.tuna.tsinghua.edu.cn/kubernetes/apt kubernetes-xenial main" >> /etc/apt/sources.list.d/kubernetes.list

# Install kubeadm, kubelet and kubectl

apt update

apt-cache madison kubeadm

apt-cache madison kubelet

apt-cache madison kubectl

apt -y install kubelet=1.20.5-00 kubeadm=1.20.5-00 kubectl=1.20.5-00

# Verify the version

[root@K8s-master1 ~]# kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"20", GitVersion:"v1.20.5", GitCommit:"6b1d87acf3c8253c123756b9e61dac642678305f", GitTreeState:"clean", BuildDate:"2021-03-18T01:08:27Z", GoVersion:"go1.15.8", Compiler:"gc", Platform:"linux/amd64"}

[root@K8s-master1 ~]#
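Pinning the version at install time does not stop a later `apt upgrade` from moving kubelet, kubeadm or kubectl to a newer release, which matters because a controlled upgrade is planned later. A sketch that installs the pinned packages and holds them with `apt-mark hold`; the helper names and the DRY_RUN switch are hypothetical, added only so the commands can be previewed before running them.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: install pinned Kubernetes packages and hold them.
# DRY_RUN=1 prints the commands instead of running them.
K8S_VERSION="${K8S_VERSION:-1.20.5-00}"

run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# $1 = "master" (default) also installs kubectl; "node" skips it,
# since kubectl is optional on worker nodes.
install_k8s_packages() {
  local role="${1:-master}"
  local names=(kubelet kubeadm)
  if [ "$role" = "master" ]; then
    names+=(kubectl)
  fi
  local pkgs=() n
  for n in "${names[@]}"; do
    pkgs+=("${n}=${K8S_VERSION}")
  done
  run apt-get install -y "${pkgs[@]}"
  # Keep "apt upgrade" from changing these versions until they are unheld.
  run apt-mark hold "${names[@]}"
}
```

Preview with `DRY_RUN=1 install_k8s_packages node`. Before the upgrade example, release the hold with `apt-mark unhold kubelet kubeadm kubectl`.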

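2.5.4 Verify the kubelet Service on the master Node

At this point kubelet fails to start and systemd keeps restarting it. This is expected before kubeadm init: kubelet has no configuration yet (kubeadm writes /var/lib/kubelet/config.yaml during init or join), so it exits and is restarted every few seconds. The details are in /var/log/syslog.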

[root@K8s-master1 ~]# systemctl start kubelet

[root@K8s-master1 ~]# systemctl status kubelet

● kubelet.service - kubelet: The Kubernetes Node Agent

Loaded: loaded (/lib/systemd/system/kubelet.service; enabled; vendor preset: enabled)

Drop-In: /etc/systemd/system/kubelet.service.d

└─10-kubeadm.conf

Active: activating (auto-restart) (Result: exit-code) since Sat 2021-07-24 03:39:38 UTC; 759ms ago

Docs: https://kubernetes.io/docs/home/

Process: 12191 ExecStart=/usr/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_CONFIG_ARGS $KUBELET_KUBEADM_ARGS $K

Main PID: 12191 (code=exited, status=255)


[root@K8s-master1 ~]# vim /var/log/syslog

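2.6 Run kubeadm init on a master Node

Initialize the cluster on any one of the three masters; the cluster only needs to be initialized once.

2.6.1 Using the kubeadm Command

# Official documentation

https://kubernetes.io/zh/docs/reference/setup-tools/kubeadm/

View the help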

[root@K8s-master1 ~]# kubeadm --help

┌──────────────────────────────────────────────────────────┐

│ KUBEADM │

│ Easily bootstrap a secure Kubernetes cluster │

│ │

│ Please give us feedback at: │

│ https://github.com/kubernetes/kubeadm/issues │

└──────────────────────────────────────────────────────────┘

Example usage:

Create a two-machine cluster with one control-plane node

(which controls the cluster), and one worker node

(where your workloads, like Pods and Deployments run).

┌──────────────────────────────────────────────────────────┐

│ On the first machine: │

├──────────────────────────────────────────────────────────┤

│ control-plane# kubeadm init │

└──────────────────────────────────────────────────────────┘

┌──────────────────────────────────────────────────────────┐

│ On the second machine: │

├──────────────────────────────────────────────────────────┤

│ worker# kubeadm join │

└──────────────────────────────────────────────────────────┘

You can then repeat the second step on as many other machines as you like.

Usage:

kubeadm [command]

Available Commands:

alpha Kubeadm experimental sub-commands

certs Commands related to handling kubernetes certificates

completion Output shell completion code for the specified shell (bash or zsh)

config Manage configuration for a kubeadm cluster persisted in a ConfigMap in the cluster

help Help about any command

init Run this command in order to set up the Kubernetes control plane

join Run this on any machine you wish to join an existing cluster

reset Performs a best effort revert of changes made to this host by 'kubeadm init' or 'kubeadm join'

token Manage bootstrap tokens

upgrade Upgrade your cluster smoothly to a newer version with this command

version Print the version of kubeadm

Flags:

--add-dir-header If true, adds the file directory to the header of the log messages

-h, --help help for kubeadm

--log-file string If non-empty, use this log file

--log-file-max-size uint Defines the maximum size a log file can grow to. Unit is megabytes. If the value is 0, the maximum file size is unlimited. (default 1800)

--one-output If true, only write logs to their native severity level (vs also writing to each lower severity level

--rootfs string [EXPERIMENTAL] The path to the 'real' host root filesystem.

--skip-headers If true, avoid header prefixes in the log messages

--skip-log-headers If true, avoid headers when opening log files

-v, --v Level number for the log level verbosity

Use "kubeadm [command] --help" for more information about a command.

[root@K8s-master1 ~]#

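| Command | Description |
|---|---|
| alpha | Experimental kubeadm commands |
| completion | Output shell completion code for bash (requires bash-completion) |
| config | Manage the kubeadm cluster configuration persisted in a ConfigMap in the cluster, e.g. kubeadm config print init-defaults |
| help | Help |
| init | Initialize a Kubernetes control plane |
| join | Join a node to an existing k8s cluster |
| reset | Revert, on a best-effort basis, any changes made to this host by kubeadm init or kubeadm join |
| token | Manage bootstrap tokens |
| upgrade | Upgrade the k8s version |
| version | Show version information |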

Bash command completion (completion)

mkdir -p /data/scripts

kubeadm completion bash > /data/scripts/kubeadm_completion.sh

source /data/scripts/kubeadm_completion.sh

# To load it at every login, add the following line to /etc/profile

vim /etc/profile

source /data/scripts/kubeadm_completion.sh

2.6.2 Introduction to kubeadm init

https://kubernetes.io/zh/docs/reference/setup-tools/kubeadm/kubeadm-init/

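| Option | Description |
|---|---|
| --apiserver-advertise-address string | The local IP address the K8s API server advertises it is listening on |
| --apiserver-bind-port int32 | Port the API server binds to; default 6443 |
| --apiserver-cert-extra-sans stringSlice | Optional extra Subject Alternative Names for the API server serving certificate; can be IP addresses or DNS names |
| --cert-dir string | Path where certificates are stored; default "/etc/kubernetes/pki" |
| --certificate-key string | Key used to encrypt the control-plane certificates in the kubeadm-certs Secret |
| --config string | Path to a kubeadm configuration file |
| --control-plane-endpoint string | A stable IP address or DNS name for the control plane, i.e. a long-lived, highly available VIP or domain name; K8s multi-master HA is built on this option |
| --cri-socket string | Path of the CRI (Container Runtime Interface) socket to connect to. If empty, kubeadm tries to detect it; only needed when several CRIs are installed or the CRI socket is non-standard |
| --dry-run | Do not apply any changes; only print what would be done (a test run) |
| --experimental-patches string | Path to a directory containing patches for the static Pod manifests generated by kubeadm |
| --feature-gates string | A set of key=value pairs describing feature gates. Options: IPv6DualStack=true/false (ALPHA, default=false) |
| --ignore-preflight-errors stringSlice | Preflight errors to show as warnings instead of failing, e.g. swap; the value 'all' ignores errors from all checks |
| --image-repository string | Registry to pull the control-plane images from; default "k8s.gcr.io" |
| --kubernetes-version string | Kubernetes version to install; default "stable-1" |
| --node-name string | Name of this node |
| --pod-network-cidr string | IP address range for the pod network. If set, the control plane automatically allocates CIDRs to every node |
| --service-cidr string | IP address range for Services; default "10.96.0.0/12" |
| --service-dns-domain string | Internal k8s domain; default "cluster.local". The cluster DNS service (kube-dns/coredns) resolves the records under this domain |
| --skip-certificate-key-print | Do not print the key used to encrypt the control-plane certificates |
| --skip-phases stringSlice | List of phases to skip |
| --skip-token-print | Do not print the default bootstrap token generated by 'kubeadm init' |
| --token string | Specify the bootstrap token |
| --token-ttl duration | Token lifetime, 24 hours by default; '0' means the token never expires |
| --upload-certs | Upload the control-plane certificates to the kubeadm-certs Secret |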

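Global options

| Option | Description |
|---|---|
| --add-dir-header | If true, adds the file directory to the header of log messages |
| --log-file string | If non-empty, use this log file |
| --log-file-max-size uint | Maximum size of a log file in megabytes; default 1800, 0 means unlimited |
| --rootfs string | [EXPERIMENTAL] Path to the real host root filesystem |
| --skip-headers | If true, omit the header prefixes in log messages |
| --skip-log-headers | If true, omit headers when opening log files |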

2.6.3 Verify the Current kubeadm Version

[root@K8s-master1 ~]# kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"20", GitVersion:"v1.20.5", GitCommit:"6b1d87acf3c8253c123756b9e61dac642678305f", GitTreeState:"clean", BuildDate:"2021-03-18T01:08:27Z", GoVersion:"go1.15.8", Compiler:"gc", Platform:"linux/amd64"}

[root@K8s-master1 ~]#

2.6.4 Prepare the Images

[root@K8s-master1 ~]# kubeadm config images list --kubernetes-version v1.20.5

k8s.gcr.io/kube-apiserver:v1.20.5

k8s.gcr.io/kube-controller-manager:v1.20.5

k8s.gcr.io/kube-scheduler:v1.20.5

k8s.gcr.io/kube-proxy:v1.20.5

k8s.gcr.io/pause:3.2

k8s.gcr.io/etcd:3.4.13-0

k8s.gcr.io/coredns:1.7.0

[root@K8s-master1 ~]#

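2.6.5 Download the Images on the master Node

Pull the images on the master nodes in advance to shorten the wait during initialization. By default the images come from Google's registry, which cannot be reached from mainland China, but they can be downloaded in advance from the Aliyun mirror; this avoids k8s deployment failures caused by image download problems later.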

[root@K8s-master1 ~]# cat images_download.sh

#!/bin/bash

#

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.20.5

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.20.5

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.20.5

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.20.5

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.2

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.4.13-0

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.7.0

[root@K8s-master1 ~]# bash images_download.sh
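The script above hard-codes every image. A hedged alternative derives the mirror names from `kubeadm config images list`, swapping the k8s.gcr.io prefix for registry.cn-hangzhou.aliyuncs.com/google_containers exactly as done by hand above. The helper is hypothetical and only prints the docker pull commands, so they can be reviewed before being piped to bash. It keeps only the part after the last "/", which fits the single-level image names of v1.20.5.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read image names (one per line, as printed by
# "kubeadm config images list") on stdin and print a "docker pull" command
# for the matching image in the Aliyun google_containers mirror.
MIRROR="${MIRROR:-registry.cn-hangzhou.aliyuncs.com/google_containers}"

print_mirror_pulls() {
  local image
  while IFS= read -r image; do
    if [ -z "$image" ]; then
      continue
    fi
    # Drop the registry prefix (everything up to the last "/") and prepend the mirror.
    echo "docker pull ${MIRROR}/${image##*/}"
  done
}
```

Usage: `kubeadm config images list --kubernetes-version v1.20.5 | print_mirror_pulls | bash`.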

2.6.6 Verify the Current Images

[root@K8s-master1 ~]# docker images|grep aliyuncs

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy v1.20.5 5384b1650507 4 months ago 118MB

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver v1.20.5 d7e24aeb3b10 4 months ago 122MB

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager v1.20.5 6f0c3da8c99e 4 months ago 116MB

registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler v1.20.5 8d13f1db8bfb 4 months ago 47.3MB

registry.cn-hangzhou.aliyuncs.com/google_containers/etcd 3.4.13-0 0369cf4303ff 11 months ago 253MB

registry.cn-hangzhou.aliyuncs.com/google_containers/coredns 1.7.0 bfe3a36ebd25 13 months ago 45.2MB

registry.cn-hangzhou.aliyuncs.com/google_containers/pause 3.2 80d28bedfe5d 17 months ago 683kB

[root@K8s-master1 ~]#

2.6.7 Push the Downloaded Images to the Company Harbor

The images downloaded from the Internet can be pushed to the company's internal Harbor:

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.20.5 harbor.tech.com/baseimages/k8s/kube-proxy:v1.20.5

docker push harbor.tech.com/baseimages/k8s/kube-proxy:v1.20.5

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.20.5 harbor.tech.com/baseimages/k8s/kube-controller-manager:v1.20.5

docker push harbor.tech.com/baseimages/k8s/kube-controller-manager:v1.20.5

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.20.5 harbor.tech.com/baseimages/k8s/kube-scheduler:v1.20.5

docker push harbor.tech.com/baseimages/k8s/kube-scheduler:v1.20.5

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.20.5 harbor.tech.com/baseimages/k8s/kube-apiserver:v1.20.5

docker push harbor.tech.com/baseimages/k8s/kube-apiserver:v1.20.5

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.4.13-0 harbor.tech.com/baseimages/k8s/etcd:3.4.13-0

docker push harbor.tech.com/baseimages/k8s/etcd:3.4.13-0

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.7.0 harbor.tech.com/baseimages/k8s/coredns:1.7.0

docker push harbor.tech.com/baseimages/k8s/coredns:1.7.0

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.2 harbor.tech.com/baseimages/k8s/pause:3.2

docker push harbor.tech.com/baseimages/k8s/pause:3.2
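The tag/push pairs above follow one pattern, so they can be generated. A sketch under the assumption that every image goes into the same Harbor project (harbor.tech.com/baseimages/k8s); the helper is hypothetical and prints the commands rather than running them. Pushing requires a prior `docker login harbor.tech.com`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: for each source image on stdin, print the docker tag
# and docker push commands that copy it into the internal Harbor project.
HARBOR_PREFIX="${HARBOR_PREFIX:-harbor.tech.com/baseimages/k8s}"

print_harbor_sync() {
  local src dst
  while IFS= read -r src; do
    if [ -z "$src" ]; then
      continue
    fi
    # Keep only NAME:TAG and move it under the Harbor project.
    dst="${HARBOR_PREFIX}/${src##*/}"
    echo "docker tag ${src} ${dst}"
    echo "docker push ${dst}"
  done
}
```

Usage: `docker images --format '{{.Repository}}:{{.Tag}}' | grep aliyuncs | print_harbor_sync | bash`.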

2.6.8 Pull the Images from the Company Harbor

Run the following on every master node

# Add a hosts entry for the harbor domain

echo "172.18.8.9 harbor.tech.com" >> /etc/hosts

# Write the image download script

cat > images_download.sh << EOF
#!/bin/bash
#
docker pull harbor.tech.com/baseimages/k8s/kube-proxy:v1.20.5
docker pull harbor.tech.com/baseimages/k8s/kube-controller-manager:v1.20.5
docker pull harbor.tech.com/baseimages/k8s/kube-scheduler:v1.20.5
docker pull harbor.tech.com/baseimages/k8s/kube-apiserver:v1.20.5
docker pull harbor.tech.com/baseimages/k8s/etcd:3.4.13-0
docker pull harbor.tech.com/baseimages/k8s/coredns:1.7.0
docker pull harbor.tech.com/baseimages/k8s/pause:3.2
EOF

# Private Harbor addresses: add a registry mirror and allow insecure (plain HTTP) access to the Harbor hosts. Note that "cat >" overwrites any existing /etc/docker/daemon.json

cat > /etc/docker/daemon.json << EOF
{
  "registry-mirrors":["https://0cde955d3600f3000fe5c004160e0320.mirror.swr.myhuaweicloud.com"],
  "insecure-registries": ["172.18.8.215","172.18.8.214","172.18.8.9","harbor.tech.com"]
}
EOF

# Restart docker

systemctl restart docker

# Pull the images from the private Harbor

bash images_download.sh

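2.8 Highly Available master Initialization

keepalived provides a highly available VIP and haproxy reverse-proxies kube-apiserver, forwarding management requests for kube-apiserver to the several k8s masters so that the control plane is highly available.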

2.8.1 Initializing the HA master from the Command Line (used in this example)

Run this on Master1 (172.18.8.9), because --apiserver-advertise-address=172.18.8.9. To run it on Master2 or Master3 instead, just change the address to 172.18.8.19 or 172.18.8.29.

kubeadm init \
--apiserver-advertise-address=172.18.8.9 \
--control-plane-endpoint=172.18.8.111 \
--apiserver-bind-port=6443 \
--kubernetes-version=v1.20.5 \
--pod-network-cidr=10.100.0.0/16 \
--service-cidr=10.200.0.0/16 \
--service-dns-domain=song.local \
--image-repository=harbor.tech.com/baseimages/k8s \
--ignore-preflight-errors=swap

Initialization process

Initialization result

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

kubeadm join 172.18.8.111:6443 --token 1grr4q.3v09zfh9pl6q9546 \
--discovery-token-ca-cert-hash sha256:3fe215f2b665c40659a06ab1a874e44b64bf784fe78a3d7f2cbb792f26b34ada \
--control-plane

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 172.18.8.111:6443 --token 1grr4q.3v09zfh9pl6q9546 \
--discovery-token-ca-cert-hash sha256:3fe215f2b665c40659a06ab1a874e44b64bf784fe78a3d7f2cbb792f26b34ada

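The token 1grr4q.3v09zfh9pl6q9546 is valid for 24 hours by default. After it expires it can no longer be used to add master or node machines to the cluster, and a new token must be generated as follows: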

# List the valid tokens

[root@K8s-master1 ~]# kubeadm token list

# Create a token and use the new one (e1zb26.ujwhegxxi452w53m) in place of the expired one (1grr4q.3v09zfh9pl6q9546)

[root@K8s-master1 ~]# kubeadm token create

e1zb26.ujwhegxxi452w53m

# List the valid tokens again; the TTL column shows the remaining lifetime

[root@K8s-master1 ~]# kubeadm token list

TOKEN TTL EXPIRES USAGES DESCRIPTION EXTRA GROUPS

e1zb26.ujwhegxxi452w53m 23h 2021-07-28T01:47:40Z authentication,signing <none> system:bootstrappers:kubeadm:default-node-token

[root@K8s-master1 ~]#
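A new token is only half of the join command; `kubeadm join` also needs --discovery-token-ca-cert-hash. `kubeadm token create --print-join-command` prints a complete worker join command, and the kubeadm documentation shows how to compute the hash from the cluster CA with openssl. A sketch of that openssl pipeline as a function (the name ca_cert_hash is hypothetical); it assumes an RSA CA key, which kubeadm generates by default. Joining further control-plane nodes additionally needs --control-plane and --certificate-key, whose key `kubeadm init phase upload-certs --upload-certs` prints.

```shell
#!/usr/bin/env bash
# Hypothetical helper: compute the value for --discovery-token-ca-cert-hash,
# i.e. the sha256 of the CA certificate's DER-encoded public key, using the
# openssl pipeline from the kubeadm documentation. Assumes an RSA CA key.
ca_cert_hash() {
  local ca_crt="${1:-/etc/kubernetes/pki/ca.crt}"
  local hex
  hex="$(openssl x509 -pubkey -in "$ca_crt" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //')"
  echo "sha256:${hex}"
}
```

On K8s-master1, `ca_cert_hash` with no argument reads /etc/kubernetes/pki/ca.crt; for this cluster it should reproduce the sha256:3fe215f2... value shown in the join commands above.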

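2.8.2 Initializing the HA master from a File (not used in this example)

# Print the default init configuration

kubeadm config print init-defaults

# Save the default configuration to a file

kubeadm config print init-defaults > kubeadm-init.yaml

# Contents of the modified init file

[root@K8s-master1 ~]# cat kubeadm-init.yaml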

apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 172.18.8.9
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: k8s-master1
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controlPlaneEndpoint: 172.18.8.111:6443
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: v1.20.5
networking:
  dnsDomain: song.local
  podSubnet: 10.100.0.0/16
  serviceSubnet: 10.200.0.0/16
scheduler: {}

# Initialize the k8s master from the file

[root@K8s-master1 ~]# kubeadm init --config kubeadm-init.yaml

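2.9 Configure the kube-config File and the Network Plugin

Whether the k8s environment was initialized from the command line or from a file, and whether it is a single machine or a cluster, the kube-config file and a network plugin must be configured.

2.9.1 The kube-config File

The kube-config file contains the kube-apiserver address and the related authentication credentials.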

[root@K8s-master1 ~]# mkdir -p $HOME/.kube

[root@K8s-master1 ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@K8s-master1 ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

[root@K8s-master1 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master1 NotReady control-plane,master 69m v1.20.5

[root@K8s-master1 ~]#

Deploy the flannel network plugin

https://github.com/coreos/flannel

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

[root@K8s-master1 ~]# wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

Modify kube-flannel.yml (three changes: the pod network in net-conf.json and the two flannel image addresses)

[root@K8s-master1 ~]# cat kube-flannel.yml

---
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: psp.flannel.unprivileged
  annotations:
    seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default
    seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default
    apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default
    apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default
spec:
  privileged: false
  volumes:
  - configMap
  - secret
  - emptyDir
  - hostPath
  allowedHostPaths:
  - pathPrefix: "/etc/cni/net.d"
  - pathPrefix: "/etc/kube-flannel"
  - pathPrefix: "/run/flannel"
  readOnlyRootFilesystem: false
  # Users and groups
  runAsUser:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
  # Privilege Escalation
  allowPrivilegeEscalation: false
  defaultAllowPrivilegeEscalation: false
  # Capabilities
  allowedCapabilities: ['NET_ADMIN', 'NET_RAW']
  defaultAddCapabilities: []
  requiredDropCapabilities: []
  # Host namespaces
  hostPID: false
  hostIPC: false
  hostNetwork: true
  hostPorts:
  - min: 0
    max: 65535
  # SELinux
  seLinux:
    # SELinux is unused in CaaSP
    rule: 'RunAsAny'
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
rules:
- apiGroups: ['extensions']
  resources: ['podsecuritypolicies']
  verbs: ['use']
  resourceNames: ['psp.flannel.unprivileged']
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-system
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-system
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  # Change "Network" to the custom pod network (the --pod-network-cidr passed to kubeadm init).
  # Keep comments out of the JSON itself: JSON has no comment syntax and flanneld would fail to parse it.
  net-conf.json: |
    {
      "Network": "10.100.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
      hostNetwork: true
      priorityClassName: system-node-critical
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: harbor.tech.com/baseimages/flannel:v0.14.0 # better to download the image and push it to the company Harbor; pulls are faster
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: harbor.tech.com/baseimages/flannel:v0.14.0 # better to download the image and push it to the company Harbor; pulls are faster
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN", "NET_RAW"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg

[root@K8s-master1 ~]#
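The image comment in the manifest recommends pulling the flannel image once and pushing it to the company Harbor. The following is a minimal dry-run sketch of that step. It assumes the upstream image is `quay.io/coreos/flannel:v0.14.0` and the Harbor project is `harbor.tech.com/baseimages`; both are assumptions you should adjust to your registries. The helper `mirror_image` is hypothetical. It only prints the `docker pull` / `docker tag` / `docker push` commands and does not run them.

```shell
#!/usr/bin/env bash
# Hypothetical helper (dry run): print the docker commands that mirror an
# upstream image into a private registry project. Nothing is executed.
# Usage: mirror_image SOURCE_IMAGE TARGET_PREFIX
mirror_image() {
  local src=$1
  local prefix=${2%/}              # drop one trailing slash, if any
  local name_tag=${src##*/}        # keep only the last path part: name:tag
  local dst="$prefix/$name_tag"
  printf 'docker pull %s\n' "$src"
  printf 'docker tag %s %s\n' "$src" "$dst"
  printf 'docker push %s\n' "$dst"
}
```

For example, `mirror_image quay.io/coreos/flannel:v0.14.0 harbor.tech.com/baseimages` prints three commands. You can review them and run them on a host that has already done `docker login harbor.tech.com`.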

Apply the kube-flannel.yml file

[root@K8s-master1 ~]# kubectl apply -f kube-flannel.yml

podsecuritypolicy.policy/psp.flannel.unprivileged created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds created

[root@K8s-master1 ~]#

Check the master node status

# The status changes from NotReady to Ready
[root@K8s-master1 ~]# kubectl get node
NAME          STATUS   ROLES                  AGE   VERSION
k8s-master1   Ready    control-plane,master   85m   v1.20.5
[root@K8s-master1 ~]#

k8s authentication credentials

# By default, kubectl does not work on k8s-master2
root@K8s-master2:~# kubectl get node
The connection to the server localhost:8080 was refused - did you specify the right host or port?

# On k8s-master1, copy the credentials (the /root/.kube directory) to k8s-master2
root@K8s-master1:~# scp -r /root/.kube 172.18.8.19:/root

# Verify on k8s-master2
root@K8s-master2:~# ll /root/.kube/
total 20
drwxr-xr-x 3 root root 4096 Jul 26 01:34 ./
drwx------ 6 root root 4096 Jul 26 01:34 ../
drwxr-x--- 4 root root 4096 Jul 26 01:34 cache/
-rw------- 1 root root 5568 Jul 26 01:34 config
root@K8s-master2:~# kubectl get node
NAME          STATUS   ROLES                  AGE   VERSION
k8s-master1   Ready    control-plane,master   42h   v1.20.5
k8s-master2   Ready    control-plane,master   39h   v1.20.5
k8s-master3   Ready    control-plane,master   39h   v1.20.5
k8s-node1     Ready    <none>                 39h   v1.20.5
k8s-node2     Ready    <none>                 39h   v1.20.5
k8s-node3     Ready    <none>                 39h   v1.20.5
k8s-node4     Ready    <none>                 39h   v1.20.5
root@K8s-master2:~#
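kubectl reads its credentials from `$HOME/.kube/config` or from the file named in the `KUBECONFIG` environment variable. When neither exists, kubectl 1.20 falls back to `localhost:8080`, which is the error shown above.

After copying the config, it is worth confirming which API server address it points to. Here that is presumably the control-plane endpoint `172.18.8.111:6443` used by the `kubeadm join` commands.

The following is a minimal sketch using a hypothetical helper, `kubeconfig_server`. It assumes a plain kubeconfig with one `server:` line per cluster entry and scans for that key with awk rather than parsing YAML.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the API server URL(s) recorded in a kubeconfig.
# Simplification: it scans for "server:" keys with awk instead of parsing YAML.
# Usage: kubeconfig_server [FILE]   (defaults to $HOME/.kube/config)
kubeconfig_server() {
  local file=${1:-$HOME/.kube/config}
  awk '$1 == "server:" { print $2 }' "$file"
}
```

On k8s-master2, `kubeconfig_server /root/.kube/config` should print the same `https://...:6443` address as on k8s-master1.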

2.9.2 Upload the certificates on the current master and generate a certificate key for adding new control-plane nodes

[root@K8s-master1 ~]# kubeadm init phase upload-certs --upload-certs

I0724 08:34:59.116907 7356 version.go:254] remote version is much newer: v1.21.3; falling back to: stable-1.20

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

06caaf47534774d1a9459b47771c3f2792536e5aa1a59bfa8a29bc5b2a5e4a42

[root@K8s-master1 ~]#
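The certificate key printed above encrypts the control-plane certificates stored in the `kubeadm-certs` Secret. It is a hex-encoded 32-byte AES key, i.e. 64 hex characters. kubeadm removes the uploaded certificates after two hours, so if a master joins later, rerun the same `upload-certs` command to get a fresh key.

The following is a small sketch with two hypothetical helpers:
- `extract_cert_key` pulls the key out of the command's output.
- `is_cert_key` checks the key's shape before it goes into `--certificate-key`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubeadm init phase upload-certs --upload-certs`
# output on stdin and print the line that follows "Using certificate key:".
extract_cert_key() {
  awk '/Using certificate key:/ { if ((getline line) > 0) print line; exit }'
}

# Hypothetical helper: succeed only if $1 is exactly 64 hex characters,
# the shape of a hex-encoded 32-byte AES key.
is_cert_key() {
  [[ $1 =~ ^[0-9a-fA-F]{64}$ ]]
}
```

Usage: `key=$(kubeadm init phase upload-certs --upload-certs | extract_cert_key) && is_cert_key "$key" && echo "$key"`.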

2.10 Add nodes to the k8s cluster

Join the other master nodes and the worker nodes to the k8s cluster.

2.10.1 Master node 2

On another master node that already has docker, kubeadm, and kubelet installed, run the following:

# Note: the token 1grr4q.3v09zfh9pl6q9546 is valid for 24 hours; once it expires, a new token must be generated
kubeadm join 172.18.8.111:6443 --token 1grr4q.3v09zfh9pl6q9546 \
--discovery-token-ca-cert-hash sha256:3fe215f2b665c40659a06ab1a874e44b64bf784fe78a3d7f2cbb792f26b34ada \
--control-plane --certificate-key 06caaf47534774d1a9459b47771c3f2792536e5aa1a59bfa8a29bc5b2a5e4a42
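A bootstrap token has the form `<token-id>.<token-secret>`: 6 plus 16 characters from `[a-z0-9]`. The token ID is the public half, and it reappears later as the CSR requestor `system:bootstrap:1grr4q`.

When the 24 hours are up, running `kubeadm token create --print-join-command` on a master prints a fresh worker join command. For a master, append `--control-plane --certificate-key ...` to it.

The following sketch uses hypothetical helpers to validate a token and derive that requestor name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if $1 has the bootstrap token format
# <token-id>.<token-secret>, i.e. [a-z0-9]{6}.[a-z0-9]{16}.
is_bootstrap_token() {
  [[ $1 =~ ^[a-z0-9]{6}\.[a-z0-9]{16}$ ]]
}

# Hypothetical helper: print the user name a kubelet authenticates as while
# bootstrapping with this token (shown in the REQUESTOR column of `kubectl get csr`).
bootstrap_requestor() {
  is_bootstrap_token "$1" || return 1
  printf 'system:bootstrap:%s\n' "${1%%.*}"   # keep only the token ID
}
```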

2.10.2 Master node 3

# Note: the token 1grr4q.3v09zfh9pl6q9546 is valid for 24 hours; once it expires, a new token must be generated
kubeadm join 172.18.8.111:6443 --token 1grr4q.3v09zfh9pl6q9546 \
--discovery-token-ca-cert-hash sha256:3fe215f2b665c40659a06ab1a874e44b64bf784fe78a3d7f2cbb792f26b34ada \
--control-plane --certificate-key 06caaf47534774d1a9459b47771c3f2792536e5aa1a59bfa8a29bc5b2a5e4a42

Check the master node status

[root@K8s-master1 ~]# kubectl get node
NAME          STATUS   ROLES                  AGE     VERSION
k8s-master1   Ready    control-plane,master   164m    v1.20.5
k8s-master2   Ready    control-plane,master   9m23s   v1.20.5
k8s-master3   Ready    control-plane,master   2m42s   v1.20.5
[root@K8s-master1 ~]#

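2.10.3 Add worker nodes

Every node that joins the k8s cluster needs docker, kubeadm, and kubelet. Repeat the installation steps on each node: configure the apt repository, configure the docker registry mirror, install the packages, and start the kubelet service.

The join command is the one printed by kubeadm init on the master once initialization completes.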

# Note: the token 1grr4q.3v09zfh9pl6q9546 is valid for 24 hours; once it expires, a new token must be generated
[root@K8s-node1 ~]# kubeadm join 172.18.8.111:6443 --token 1grr4q.3v09zfh9pl6q9546 \
> --discovery-token-ca-cert-hash sha256:3fe215f2b665c40659a06ab1a874e44b64bf784fe78a3d7f2cbb792f26b34ada

[root@K8s-node2 ~]# kubeadm join 172.18.8.111:6443 --token 1grr4q.3v09zfh9pl6q9546 \
> --discovery-token-ca-cert-hash sha256:3fe215f2b665c40659a06ab1a874e44b64bf784fe78a3d7f2cbb792f26b34ada

[root@K8s-node3 ~]# kubeadm join 172.18.8.111:6443 --token 1grr4q.3v09zfh9pl6q9546 \
> --discovery-token-ca-cert-hash sha256:3fe215f2b665c40659a06ab1a874e44b64bf784fe78a3d7f2cbb792f26b34ada

[root@K8s-node4 ~]# kubeadm join 172.18.8.111:6443 --token 1grr4q.3v09zfh9pl6q9546 \
> --discovery-token-ca-cert-hash sha256:3fe215f2b665c40659a06ab1a874e44b64bf784fe78a3d7f2cbb792f26b34ada
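The worker join commands above and the master join commands in 2.10.1 and 2.10.2 share the same endpoint, token, and CA certificate hash. A control-plane join only adds `--control-plane --certificate-key`.

The following dry-run sketch shows that relationship with a hypothetical helper, `join_cmd`. It prints the command and does not run it.

```shell
#!/usr/bin/env bash
# Hypothetical helper (dry run): print a kubeadm join command.
# Usage: join_cmd ENDPOINT TOKEN CA_HASH [CERT_KEY]
# With CERT_KEY the node joins as a control-plane (master) node,
# without it as a worker node.
join_cmd() {
  local endpoint=$1 token=$2 ca_hash=$3 cert_key=${4:-}
  local cmd="kubeadm join $endpoint --token $token --discovery-token-ca-cert-hash $ca_hash"
  if [[ -n $cert_key ]]; then
    cmd+=" --control-plane --certificate-key $cert_key"
  fi
  printf '%s\n' "$cmd"
}
```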

2.10.4 Verify the current node status

Each node joins the cluster automatically, pulls the images, and starts flannel. Eventually the master shows the node in the Ready state.

[root@K8s-master1 ~]# kubectl get node
NAME          STATUS   ROLES                  AGE     VERSION
k8s-master1   Ready    control-plane,master   3h31m   v1.20.5
k8s-master2   Ready    control-plane,master   57m     v1.20.5
k8s-master3   Ready    control-plane,master   50m     v1.20.5
k8s-node1     Ready    <none>                 8m38s   v1.20.5
k8s-node2     Ready    <none>                 8m33s   v1.20.5
k8s-node3     Ready    <none>                 8m30s   v1.20.5
k8s-node4     Ready    <none>                 8m27s   v1.20.5
[root@K8s-master1 ~]#
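Instead of checking the STATUS column by eye, a script can do it. The following is a minimal sketch using a hypothetical helper, `not_ready_nodes`:
- It reads `kubectl get node --no-headers` output on stdin.
- It prints every node whose STATUS is not exactly `Ready`. A cordoned node (`Ready,SchedulingDisabled`) is therefore reported too.
- It returns non-zero if any such node exists.

The built-in `kubectl wait --for=condition=Ready node --all --timeout=300s` is an alternative that blocks until every node is Ready.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get node --no-headers` output on stdin,
# print the names of nodes whose STATUS column is not exactly "Ready",
# and return 1 if there is at least one such node.
not_ready_nodes() {
  awk '$2 != "Ready" { print $1; bad = 1 } END { exit bad }'
}
```

Usage: `kubectl get node --no-headers | not_ready_nodes && echo "all nodes Ready"`.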

2.10.5 Verify the current certificate signing request (CSR) status

[root@K8s-master1 ~]# kubectl get csr
NAME        AGE     SIGNERNAME                                    REQUESTOR                 CONDITION
csr-2cr92   9m35s   kubernetes.io/kube-apiserver-client-kubelet   system:bootstrap:1grr4q   Approved,Issued
csr-5l6gf   58m     kubernetes.io/kube-apiserver-client-kubelet   system:bootstrap:1grr4q   Approved,Issued
csr-7hnct   9m40s   kubernetes.io/kube-apiserver-client-kubelet   system:bootstrap:1grr4q   Approved,Issued
csr-b9pbb   51m     kubernetes.io/kube-apiserver-client-kubelet   system:bootstrap:1grr4q   Approved,Issued
csr-pxs4q   9m29s   kubernetes.io/kube-apiserver-client-kubelet   system:bootstrap:1grr4q   Approved,Issued
csr-vqhtx   9m32s   kubernetes.io/kube-apiserver-client-kubelet   system:bootstrap:1grr4q   Approved,Issued
[root@K8s-master1 ~]#
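There are six CSRs, one for each node that joined with the token (two masters and four workers). All are `Approved,Issued` because kubeadm sets up automatic approval for kubelet client CSRs requested with a bootstrap token. If one stays `Pending`, you can approve it by hand with `kubectl certificate approve <name>` after checking its requestor.

The following sketch uses a hypothetical helper, `pending_csrs`. It lists the names of CSRs whose CONDITION (the last column) is not `Approved,Issued`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get csr --no-headers` output on stdin and
# print the names of CSRs whose CONDITION (last column) is not "Approved,Issued".
pending_csrs() {
  awk '$NF != "Approved,Issued" { print $1 }'
}
```

Usage: `kubectl get csr --no-headers | pending_csrs` prints nothing when every request has been approved and issued.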

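2.10.6 Create test containers in k8s and test the internal network

Create test containers and check whether they can communicate over the network.

Note: in a single-master cluster, pods must first be allowed to run on the master node: kubectl taint nodes --all node-role.kubernetes.io/master-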

[root@K8s-master1 ~]# kubectl run net-test1 --image=alpine sleep 360000

pod/net-test1 created

[root@K8s-master1 ~]# kubectl run net-test2 --image=alpine sleep 360000

pod/net-test2 created

[root@K8s-master1 ~]# kubectl get pod -o wide
NAME        READY   STATUS              RESTARTS   AGE   IP       NODE        NOMINATED NODE   READINESS GATES
net-test1   0/1     ContainerCreating   0          42s   <none>   k8s-node2   <none>           <none>
net-test2   0/1     ContainerCreating   0          34s   <none>   k8s-node1   <none>           <none>

# Both pods in the Running state means they were created successfully
[root@K8s-master1 ~]# kubectl get pod -o wide
NAME        READY   STATUS    RESTARTS   AGE   IP           NODE        NOMINATED NODE   READINESS GATES
net-test1   1/1     Running   0          72s   10.100.4.2   k8s-node2   <none>           <none>
net-test2   1/1     Running   0          64s   10.100.3.2   k8s-node1   <none>           <none>
[root@K8s-master1 ~]#
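Both pod IPs (10.100.4.2 on k8s-node2 and 10.100.3.2 on k8s-node1) come from the flannel Network 10.100.0.0/16 set in net-conf.json. Each node hands out addresses from its own smaller subnet.

The following sketch does the check in pure bash arithmetic. It assumes IPv4 addresses without leading zeros and the default /24 per-node subnet size. The helpers `ip_to_int`, `ip_in_cidr`, and `node_subnet` are hypothetical.

```shell
#!/usr/bin/env bash
# Convert a dotted IPv4 address (no leading zeros) to a 32-bit integer.
ip_to_int() {
  local IFS=.
  local -a o
  read -r -a o <<< "$1"
  echo "$(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))"
}

# Succeed if IP ($1) lies inside CIDR ($2), e.g. ip_in_cidr 10.100.4.2 10.100.0.0/16.
ip_in_cidr() {
  local ip=$1 net=${2%/*} len=${2#*/}
  local mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$ip") & mask) == ($(ip_to_int "$net") & mask) ))
}

# Print the per-node subnet containing IP ($1), assuming prefix length $2 (default 24).
node_subnet() {
  local len=${2:-24}
  local mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  local n=$(( $(ip_to_int "$1") & mask ))
  printf '%d.%d.%d.%d/%d\n' $(( n >> 24 & 255 )) $(( n >> 16 & 255 )) \
    $(( n >> 8 & 255 )) $(( n & 255 )) "$len"
}
```

With the output above, `node_subnet 10.100.4.2` gives `10.100.4.0/24` and `node_subnet 10.100.3.2` gives `10.100.3.0/24`. The two pods therefore sit in different node subnets of the same flannel network.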

Verify communication between containers

2.10.7 Verify the external network

# docker exec can only enter containers on the local host

# kubectl exec can enter containers on any node in the cluster

[root@K8s-master1 ~]# kubectl exec -it net-test1 sh

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

/ # ping www.baidu.com -c5

PING www.baidu.com (110.242.68.4): 56 data bytes

64 bytes from 110.242.68.4: seq=0 ttl=52 time=38.544 ms

64 bytes from 110.242.68.4: seq=1 ttl=52 time=37.649 ms

64 bytes from 110.242.68.4: seq=2 ttl=52 time=45.911 ms

64 bytes from 110.242.68.4: seq=3 ttl=52 time=37.187 ms

64 bytes from 110.242.68.4: seq=4 ttl=52 time=35.279 ms

--- www.baidu.com ping statistics ---

5 packets transmitted, 5 packets received, 0% packet loss

round-trip min/avg/max = 35.279/38.914/45.911 ms

/ #
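The kubectl warning above points to the non-deprecated form `kubectl exec POD -- COMMAND`. That form also allows a one-shot, non-interactive check.

The following sketch uses two hypothetical helpers:
- `ping_loss` reads ping output (the busybox format shown above or the iputils format) and prints the packet-loss percentage.
- `ping_ok` succeeds only at 0% loss.

Together they let the external test, and the same test against the other pod's IP, run from a script.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read ping output on stdin and print the number
# before "% packet loss" from the summary line (e.g. "0" or "40").
ping_loss() {
  sed -n 's/.* \([0-9.]*\)% packet loss.*/\1/p'
}

# Hypothetical helper: succeed only if the ping output on stdin reports 0% loss.
ping_ok() {
  [[ $(ping_loss) == 0 ]]
}
```

Usage:
- `kubectl exec net-test1 -- ping -c 5 www.baidu.com | ping_ok && echo "external network OK"`
- `kubectl exec net-test1 -- ping -c 5 10.100.3.2 | ping_ok` checks pod-to-pod traffic to net-test2.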