Machine Learning: Fixing Out-of-Memory Errors When Training XGBoost Models

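In machine learning tasks with very large datasets, out-of-memory errors tend to show up in two places:

- Data loading and preprocessing

- Model training

I covered the data loading and preprocessing side in an earlier article (the core idea is to process the data in batches with a generator).

See that article: "机器学习预处理效率及内存优化(多进程协程优化)" (preprocessing efficiency and memory optimization with multiprocessing and coroutines).

This article covers how to deal with out-of-memory errors during XGBoost model training itself.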

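1. Converting in-memory data to libsvm files

The approach: turn the data into a generator, then write it out batch by batch to libsvm files, a sparse text format that, like a csr_matrix, stores only the non-zero entries.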
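As a rough illustration of the batching idea, the sketch below slices any iterable into fixed-size batches with a generator, so that only one batch is held in memory at a time. The name `iter_batches` is made up for this example; the article's own pandas version follows further down.

```python
from itertools import islice
from typing import Iterable, List, TypeVar

T = TypeVar("T")


def iter_batches(items: Iterable[T], batch_size: int) -> Iterable[List[T]]:
    """Yield lists of up to batch_size items; the last one may be shorter."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    source = iter(items)
    while True:
        batch = list(islice(source, batch_size))
        if not batch:  # source exhausted, so stop without an empty batch
            return
        yield batch


# Only one batch is materialized at a time while iterating.
for batch in iter_batches(range(7), 3):
    print(batch)
# [0, 1, 2]
# [3, 4, 5]
# [6]
```

Stopping as soon as the source is exhausted means an input whose length is an exact multiple of the batch size does not produce a trailing empty batch.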

import xgboost as xgb
import os
from typing import List, Callable
import pandas as pd
import numpy as np
from sklearn.datasets import load_svmlight_file, dump_svmlight_file
# Note: load_boston was removed in scikit-learn 1.2, so this import needs an older version.
from sklearn.datasets import load_boston
from scipy.sparse import csc_matrix, csr_matrix

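def pandas_iter(df, chunksize):
    """Yield successive row slices of df with at most chunksize rows each."""
    # Step over start offsets so that no empty trailing chunk is produced
    # when the row count is an exact multiple of chunksize.
    for start in range(0, df.shape[0], chunksize):
        yield df.iloc[start : start + chunksize, :]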

def file2svm(pandas_chunk, train_columns, target_column='target',
             out_prefix='smvlight',
             out_afterfix_start_num=0):
    """Write each chunk to its own libsvm file; return the paths and the next file index."""
    out_files = []
    # Iterate over the generator directly; the loop ends when it is exhausted.
    for tmp in pandas_chunk:
        X, y = tmp[train_columns], tmp[target_column]
        print(X.shape, y.shape)
        file_name_tmp = f'{out_prefix}_{out_afterfix_start_num}.dat'
        # zero_based=False writes 1-based feature indices.
        dump_svmlight_file(X, y, file_name_tmp, zero_based=False, multilabel=False)
        out_afterfix_start_num += 1
        out_files.append(file_name_tmp)
    return out_files, out_afterfix_start_num

bst = load_boston()
df = pd.DataFrame(bst.data, columns=bst.feature_names)
df['target'] = bst.target

# 1. Turn df into a generator. For a very large file, read it in chunks instead:
#    pd_chunk = pd.read_csv('', chunksize=500000)
pd_chunk = pandas_iter(df, chunksize=100)

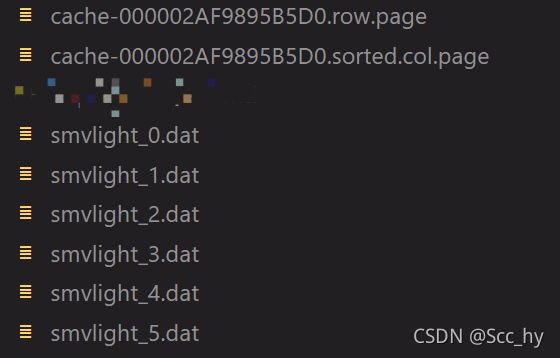

out_files, out_afterfix_start_num = file2svm(pd_chunk, train_columns=bst.feature_names, target_column='target',
                                             out_prefix='smvlight',
                                             out_afterfix_start_num=0)
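Each file written this way is plain text in libsvm format: one sample per line, the label first, then `index:value` pairs for the non-zero features. The sketch below is a simplified pure-Python illustration of that layout with 1-based indices (matching `zero_based=False`). `to_libsvm_line` is a hypothetical helper, not scikit-learn's implementation, and the exact number formatting that `dump_svmlight_file` produces may differ.

```python
from typing import Sequence


def to_libsvm_line(label: float, row: Sequence[float]) -> str:
    """Format one sample as '<label> <index>:<value> ...' with 1-based indices."""
    # Zero entries are skipped, which is what keeps sparse data compact on disk.
    pairs = [f"{i}:{v}" for i, v in enumerate(row, start=1) if v != 0]
    return " ".join([str(label)] + pairs)


line = to_libsvm_line(24.0, [0.0, 18.0, 0.0, 2.31])
print(line)  # 24.0 2:18.0 4:2.31
```

Because zeros are omitted, a chunk in which the last feature happens to be zero everywhere carries no trace of that column, which is worth keeping in mind when the files are read back one at a time.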

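2. Building the DMatrix and training the model

Reference: https://xgboost.readthedocs.io/en/latest/tutorials/external_memory.html

When I followed the official tutorial, the return values in its `next` method appeared to be swapped, and using them as written raised an error. The version below returns 0 once every file has been consumed and 1 otherwise.

Loading data through an iterator does slow training down, though. That is the price of the lower memory footprint.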

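class Iterator(xgb.DataIter):
    def __init__(self, svm_file_paths: List[str]):
        self._file_paths = svm_file_paths
        self._it = 0
        # cache_prefix tells XGBoost where to put its on-disk cache files.
        super().__init__(cache_prefix=os.path.join('.', 'cache'))  # 'D:\\work'

    def next(self, input_data: Callable):
        # Return 0 once every file has been passed to XGBoost.
        if self._it == len(self._file_paths):
            return 0
        # If a chunk could be missing the highest-numbered features (all zeros),
        # pass n_features=... to load_svmlight_file so every batch has the same width.
        X, y = load_svmlight_file(self._file_paths[self._it])
        # Hand the current batch to XGBoost using keyword arguments.
        input_data(data=X, label=y)
        self._it += 1
        # Return 1 to signal that a batch was provided.
        return 1

    def reset(self):
        # Rewind to the first file.
        self._it = 0


it = Iterator(out_files)
Xy = xgb.DMatrix(it)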
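To make the `next`/`reset` contract of the `Iterator` class easier to see, here is a stand-in that mimics how a consumer might drive such an iterator: call `reset`, then call `next` until it returns 0. This is only a mock written for illustration. `ListBatchIter` and `consume` are hypothetical names, and XGBoost's real internals (on-disk caching, repeated passes over the data) are more involved.

```python
from typing import Callable, List


class ListBatchIter:
    """Mock iterator that follows the same next/reset convention."""

    def __init__(self, batches: List[list]):
        self._batches = batches
        self._it = 0

    def next(self, input_data: Callable) -> int:
        if self._it == len(self._batches):
            return 0  # nothing left to hand over
        input_data(data=self._batches[self._it])
        self._it += 1
        return 1  # a batch was handed over

    def reset(self) -> None:
        self._it = 0


def consume(batch_iter: ListBatchIter) -> List[list]:
    """Hypothetical consumer: perform one full pass over the iterator."""
    seen: List[list] = []

    def input_data(data: list) -> None:
        seen.append(data)

    batch_iter.reset()
    while batch_iter.next(input_data) == 1:
        pass
    return seen


it_demo = ListBatchIter([[1, 2], [3]])
print(consume(it_demo))  # [[1, 2], [3]]
print(consume(it_demo))  # [[1, 2], [3]] again, because reset() rewinds
```

Swapping the two return values in `next` would make the loop stop before the first batch is read, which is one way a reversed convention can surface as an error.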

xgb_params = {
    'max_depth': 8,
    'learning_rate': 0.1,
    'subsample': 0.9,
    'colsample_bytree': 0.8,
    'objective': 'reg:squarederror'
}

xgb_model = xgb.train(xgb_params, Xy, evals=[(Xy, 'train')], num_boost_round=100, verbose_eval=20)

"""
[0] train-rmse:21.60054
[20] train-rmse:3.50944
[40] train-rmse:0.97840
[60] train-rmse:0.47697
[80] train-rmse:0.28121
[99] train-rmse:0.17366
"""