My Kubernetes (k8s) study notes

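k8s

- Basic concepts
  - k8s architecture
    - API server: the single entry point for all service access
    - replication controller: keeps the number of replicas at the desired count
    - scheduler
    - etcd: key-value database that persists all important cluster state
    - kubelet: talks directly to the container engine to manage containers
    - kube-proxy: writes iptables/ipvs rules to implement Service mapping and access
    - Other components
  - What is a Pod
    - Controller types (controllers manage Pods)
  - k8s network communication model
    - Communication rules
    - Communication rules in detail
- Building a k8s cluster
  - VMware virtual machines used while learning
    - VMware configuration
      - Configuration notes
    - CentOS 7 virtual machine
      - CentOS 7 installation
      - CentOS 7 network configuration
      - CentOS 7 environment configuration
      - Docker installation
      - k8s master component installation
  - Production environment
- Resource manifests
  - Resource categories
    - Namespace level
      - Workload resources
      - Service discovery and load balancing resources
      - Configuration and storage resources
      - Special volume types
    - Cluster-level resources
    - Metadata resources
  - Resource manifests
    - Manifest definition
    - Common YAML fields
    - Inspecting fields
    - Viewing logs and common basic operations
  - Pod lifecycle
    - Diagram
    - initC
      - initC template
      - initC explained
    - Probes
      - Three types of handlers
      - livenessProbe (liveness check)
      - readinessProbe (readiness check)
    - start / stop
      - Example
- Pod controllers
  - ReplicationController and ReplicaSet
    - Use cases
  - Deployment
    - Features
    - How a Deployment relates to ReplicaSets
    - Example
    - Create
    - Scale out
    - Update the image
    - Roll back
    - Other operations
  - DaemonSet
    - Use cases
    - Example
  - Job
    - Use cases
    - Example
  - CronJob
    - Use cases
    - Concurrency policy
    - Example
  - StatefulSet
  - Horizontal Pod Autoscaling
- Service discovery
  - The Service concept
  - Service types
    - ClusterIP
      - Description
      - Diagram
      - Example
    - NodePort
      - How it works
      - Example
    - LoadBalancer
    - ExternalName
      - Example
  - VIP and Service proxies
    - Details
    - Proxy modes
  - ingress
    - Purpose
    - Official site
    - Experiment 1: HTTP proxy
    - Experiment 2
    - Experiment 3: HTTPS
- Storage
  - configMap
    - Explanation
    - Creating a ConfigMap
      - 1. From a directory
      - 2. From a file
      - 3. From literal values
      - 4. From YAML
    - Using a ConfigMap in a Pod
      - 1. Using a ConfigMap for environment variables
      - 2. Using a ConfigMap to set command-line arguments
      - 3. Mounting a ConfigMap as files or a directory through a volume
    - Hot-updating a ConfigMap
      - Editing the configMap
      - Rolling Pods after a ConfigMap update
  - Secret
    - The three Secret types
      - Service Account
      - Opaque
      - kubernetes.io/dockerconfigjson
  - Volume
    - Currently supported types
    - emptyDir
      - Use cases
      - Example
    - hostPath
      - Use cases
      - Example
- Cluster scheduling
  - Scheduler
  - Scheduler affinity
    - Node affinity
      - Soft policy
      - Hard policy
      - Soft and hard combined
      - Operators
    - Pod affinity
  - Taints and tolerations (Taint and Toleration)
    - Taints
    - Viewing and removing taints
    - Tolerations
  - Pinning Pods to nodes
    - Pod.spec.nodeName
    - Pod.spec.nodeSelector
- Cluster security
  - Authentication
  - Authorization
  - Admission control: principles and flow
- HELM
  - Principles
  - Custom templates
- Deploying common add-ons
- kubeadm: extending certificate validity to 100 years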

基础概念

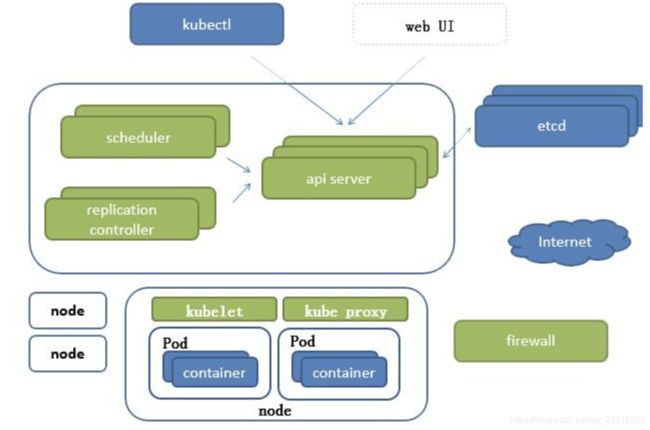

k8s架构

api server 所有服务访问统一入口

replication controller 维持副本期望数目

scheduler

etcd 键值对数据库 存储k8s集群所有重要信息(持久化)

- etcd是一个可信赖分布式键值存储服务,使用http

- 版本

- v2写入到内存

- v3 写入到db (k8s v1.11之前不支持)

kublet 直接跟容器引擎进行交互,实现容器的管理

kube-proxy 负责写入规则之iptables ipvs 实现服务映射访问

其它组件

- coreDNS 可以为集群中的SVC创建一个域名IP的对应关系解析

- dashboard 给 K8S 集群提供一个 B/S 结构访问体系

- ingress controller 官方只能实现四层代理,INGRESS 可以实现七层代理

- federation 提供一个可以跨集群中心多K8S统一管理功能

- prometheus 提供K8S集群的监控能力

- elk 提供 K8S 集群日志统一分析介入平台

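What is a Pod

Controller types (controllers manage Pods)

- ReplicationController

  Ensures that the number of replicas of a containerized application always matches the user-defined count: if a container exits abnormally, a new Pod is created to replace it, and any surplus containers are reclaimed automatically.

- ReplicaSet

  ReplicaSet is not fundamentally different from ReplicationController; apart from the name, ReplicaSet additionally supports set-based selectors. Although a ReplicaSet can be used on its own, it is generally recommended to let a Deployment manage it automatically, which avoids incompatibilities with other mechanisms (for example, ReplicaSet does not support rolling-update, but Deployment does).

- Deployment

  Deployment provides a declarative way to define Pods and ReplicaSets, replacing the older ReplicationController for convenient application management. Typical use cases:

  - Define a Deployment to create Pods and a ReplicaSet
  - Roll out upgrades and roll back applications
  - Scale out and scale in
  - Pause and resume a Deployment

- DaemonSet

- CronJob

  Manages time-based Jobs, that is:

  - Run once at a given point in time
  - Run periodically at given points in time

Service discovery (a Service proxies Pods and can be thought of as a microservice), using round-robin

- A Service placed in front of the Pods that a Deployment creates acts as the proxy that provides service discovery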

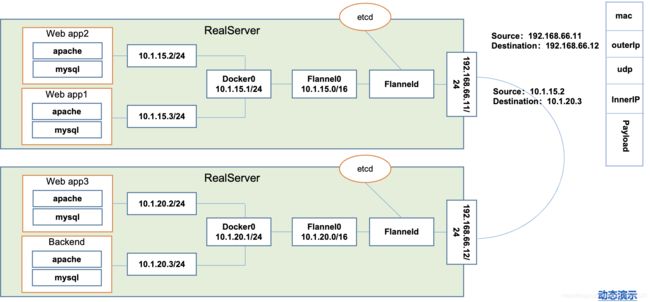

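k8s network communication model

- The network model assumes that all Pods live in one flat network space in which they can reach each other directly

Communication rules

- Between containers in the same Pod: localhost
- Between a Pod and a Service: iptables rules on each node
- Between Pods: Overlay Network

Flannel overview

Flannel is a network planning service designed by the CoreOS team for Kubernetes. Put simply, it gives the Docker containers created on different node hosts in the cluster virtual IP addresses that are unique across the whole cluster. It also builds an overlay network between these IP addresses, and through that overlay network packets are delivered unmodified to the target container.

Communication rules in detail

- Within one Pod: the containers of a Pod share the same network namespace and the same Linux protocol stack
- Pod1 to Pod2
  - Pod1 and Pod2 are on different hosts: a Pod's address is in the same subnet as docker0, but the docker0 subnet and the host NIC are two completely different IP subnets, and traffic between Nodes can only go through the hosts' physical NICs. Each Pod IP is therefore associated with the IP of the Node it runs on, and this association lets Pods reach each other
  - Pod1 and Pod2 are on the same host: the docker0 bridge forwards the request directly to Pod2 without going through Flannel
- Pod to Service: for performance reasons, currently maintained and forwarded entirely by iptables
- Pod to the external network: the Pod sends a request to the external network, the routing table is consulted, and the packet is forwarded to the host NIC. After the host NIC completes route selection, iptables performs Masquerade, rewriting the source IP to the host NIC's IP, and the request is then sent to the external server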
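
The first rule above (containers in one Pod share a network namespace and talk over localhost) can be sketched with a two-container Pod. This is only an illustration under assumptions: the Pod name, the container names and the `nginx`/`busybox` images are not from these notes.

```yaml
# Hypothetical example: names and images are illustrative only.
apiVersion: v1
kind: Pod
metadata:
  name: shared-net-demo
spec:
  containers:
  - name: web
    image: nginx
    ports:
    - containerPort: 80
  - name: probe
    image: busybox
    # Both containers share the Pod's network namespace,
    # so the web container is reachable on localhost.
    command: ['sh', '-c', 'while true; do wget -q -O- http://localhost:80 >/dev/null && echo ok; sleep 5; done']
```

If the sketch behaves as intended, `kubectl logs shared-net-demo -c probe` should show the probe container reaching nginx without any Service in between.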

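Building a k8s cluster

VMware virtual machines used while learning

VMware configuration

Configuration notes

1. Find a suitable VMware version yourself
2. Configure the IP, subnet mask and gateway for NAT network mode
3. Use static IPs rather than DHCP

CentOS 7 virtual machine

CentOS 7 installation

1. The image can be downloaded from a domestic mirror: https://mirrors.163.com/centos/7.8.2003/isos/x86_64/
2. Run through the installer
3. Installation complete

CentOS 7 network configuration

1. Use NAT network mode
2. Screenshots are omitted here; this article covers the same steps: https://blog.csdn.net/a785975139/article/details/53023590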

CentOS 7 environment configuration

1. Install the basic packages

    yum install -y conntrack ntpdate ntp ipvsadm ipset jq iptables curl sysstat libseccomp wget vim net-tools git lrzsz

lrzsz is used to transfer files through Xshell.

Send a file from the server to the client:

    sz filename

Upload a file from the client to the server:

    rz

2. Stop the firewall

    systemctl stop firewalld && systemctl disable firewalld

3. Switch the firewall to iptables and start with empty rules

    yum -y install iptables-services && systemctl start iptables && systemctl enable iptables && iptables -F && service iptables save

4. Set the host name (run the matching command on each machine)

    hostnamectl set-hostname k8s-node01
    hostnamectl set-hostname k8s-node02
    hostnamectl set-hostname k8s-master01

5. Add the IPs to /etc/hosts

    192.168.66.10 k8s-master01
    192.168.66.20 k8s-node01
    192.168.66.21 k8s-node02

6. Disable the swap partition, /dev/mapper/centos-root

    swapoff -a && sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

Disable SELinux (for background on what SELinux does, see http://cn.linux.vbird.org/linux_basic/0440processcontrol_5.php)

    setenforce 0 && sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

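7. Tune the kernel parameters for K8S

    cat > kubernetes.conf << EOF
    net.bridge.bridge-nf-call-iptables=1
    net.bridge.bridge-nf-call-ip6tables=1
    net.ipv4.ip_forward=1
    net.ipv4.tcp_tw_recycle=0
    # Avoid using swap; it is only allowed when the system is about to OOM
    vm.swappiness=0
    # Do not check whether enough physical memory is available
    vm.overcommit_memory=1
    # Do not panic on OOM; let the OOM killer run
    vm.panic_on_oom=0
    fs.inotify.max_user_instances=8192
    fs.inotify.max_user_watches=1048576
    fs.file-max=52706963
    fs.nr_open=52706963
    net.ipv6.conf.all.disable_ipv6=1
    net.netfilter.nf_conntrack_max=2310720
    EOF
    cp kubernetes.conf /etc/sysctl.d/kubernetes.conf
    sysctl -p /etc/sysctl.d/kubernetes.conf

Note: the last command may fail with "sysctl: cannot stat /proc/sys/net/netfilter/nf_conntrack_max: No such file or directory".

Cause and workaround: the conntrack module is not loaded yet. It is loaded automatically after the kernel is upgraded to 4.x, so the error can be ignored if the kernel is upgraded later; otherwise load the module by hand.

Load the module:

    modprobe ip_conntrack

Check that it is loaded:

    lsmod | grep conntrack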

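8. Set the system time zone

    # Set the system time zone to Asia/Shanghai
    timedatectl set-timezone Asia/Shanghai
    # Keep the hardware clock in UTC
    timedatectl set-local-rtc 0
    # Restart the services that depend on the system time
    systemctl restart rsyslog && systemctl restart crond

9. Stop services the system does not need (the mail service)

    systemctl stop postfix && systemctl disable postfix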

10. Configure rsyslogd and systemd journald logging

    # Directory for persistent log storage
    mkdir -p /var/log/journal
    mkdir -p /etc/systemd/journald.conf.d
    cat > /etc/systemd/journald.conf.d/99-prophet.conf <<EOF
    [Journal]
    # Persist logs to disk
    Storage=persistent
    # Compress historical logs
    Compress=yes
    SyncIntervalSec=5m
    RateLimitInterval=30s
    RateLimitBurst=1000
    # Use at most 10G of disk space
    SystemMaxUse=10G
    # Limit a single log file to 200M
    SystemMaxFileSize=200M
    # Keep logs for 2 weeks
    MaxRetentionSec=2week
    # Do not forward logs to syslog
    ForwardToSyslog=no
    EOF
    systemctl restart systemd-journald

11. Upgrade the kernel to 4.4 (it seems to work without the upgrade as well)

    rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-3.el7.elrepo.noarch.rpm
    # After installing, check that the kernel's menuentry in /boot/grub2/grub.cfg contains an initrd16 line; if not, install again
    yum --enablerepo=elrepo-kernel install -y kernel-lt
    # Boot from the new kernel by default
    grub2-set-default 'CentOS Linux (4.4.189-1.el7.elrepo.x86_64) 7 (Core)'

Docker installation

1. Prerequisites for enabling ipvs in kube-proxy

    modprobe br_netfilter
    cat > /etc/sysconfig/modules/ipvs.modules <<EOF
    #!/bin/bash
    modprobe -- ip_vs
    modprobe -- ip_vs_rr
    modprobe -- ip_vs_wrr
    modprobe -- ip_vs_sh
    modprobe -- nf_conntrack_ipv4
    EOF
    chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack_ipv4

2. Install Docker from the Alibaba Cloud mirror for CentOS 7

    # Step 1: install the required system tools
    yum install -y yum-utils device-mapper-persistent-data lvm2
    # Step 2: add the package repository
    yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
    # Step 3: update and install Docker CE
    yum update -y && yum -y install docker-ce
    # Step 4: reboot
    reboot
    systemctl start docker && systemctl enable docker
    # Create the /etc/docker directory
    mkdir -p /etc/docker
    # Configure the daemon
    cat > /etc/docker/daemon.json <<EOF
    {
      "exec-opts": ["native.cgroupdriver=systemd"],
      "log-driver": "json-file",
      "log-opts": {"max-size": "100m"}
    }
    EOF
    mkdir -p /etc/systemd/system/docker.service.d
    # Restart the Docker service
    systemctl daemon-reload && systemctl restart docker && systemctl enable docker

k8s master component installation

1. Install kubeadm (master and worker nodes)

    cat <<EOF > /etc/yum.repos.d/kubernetes.repo
    [kubernetes]
    name=Kubernetes
    baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
    enabled=1
    gpgcheck=0
    repo_gpgcheck=0
    gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
           http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
    EOF
    yum -y install kubeadm-1.15.1 kubectl-1.15.1 kubelet-1.15.1
    systemctl enable kubelet.service

2. Initialize the master node

2.1 Generate the configuration file

    kubeadm config print init-defaults > kubeadm-config.yaml

2.2 Edit the configuration file

    apiVersion: kubeadm.k8s.io/v1beta2
    bootstrapTokens:
    - groups:
      - system:bootstrappers:kubeadm:default-node-token
      token: abcdef.0123456789abcdef
      ttl: 24h0m0s
      usages:
      - signing
      - authentication
    kind: InitConfiguration
    localAPIEndpoint:
      advertiseAddress: 192.168.66.10
      bindPort: 6443
    nodeRegistration:
      criSocket: /var/run/dockershim.sock
      name: k8s-master01
      taints:
      - effect: NoSchedule
        key: node-role.kubernetes.io/master
    ---
    apiServer:
      timeoutForControlPlane: 4m0s
    apiVersion: kubeadm.k8s.io/v1beta2
    certificatesDir: /etc/kubernetes/pki
    clusterName: kubernetes
    controllerManager: {}
    dns:
      type: CoreDNS
    etcd:
      local:
        dataDir: /var/lib/etcd
    imageRepository: k8s.gcr.io
    kind: ClusterConfiguration
    kubernetesVersion: v1.15.1
    networking:
      dnsDomain: cluster.local
      podSubnet: "10.244.0.0/16"
      serviceSubnet: 10.96.0.0/12
    scheduler: {}
    ---
    apiVersion: kubeproxy.config.k8s.io/v1alpha1
    kind: KubeProxyConfiguration
    featureGates:
      SupportIPVSProxyMode: true
    mode: ipvs

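2.3 Initialize the master node (the virtual machine needs two CPUs)

The required images have to be downloaded first.

If k8s.gcr.io is reachable (this usually requires a proxy):

    kubeadm init --config=kubeadm-config.yaml --experimental-upload-certs | tee kubeadm-init.log

Using the Alibaba Cloud registry instead:

    kubeadm init --image-repository registry.aliyuncs.com/google_containers --kubernetes-version v1.15.1 --apiserver-advertise-address 192.168.66.10 --pod-network-cidr=10.244.0.0/16 | tee kubeadm-init.log

3. Join additional master nodes and the remaining worker nodes

The join command is printed in the installation log (kubeadm-init.log from step 2.3).

Remove a node (run on the master):

    kubectl delete node k8s-node01

Join a node to the cluster:

    kubeadm reset && kubeadm join ...

The join token generated by the master is valid for 24h. After it has expired:

    # Generate a token
    kubeadm token generate
    # Print the join command for that token
    kubeadm token create <token> --print-join-command --ttl=0

4. Deploy the network: the flannel network plugin

    kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

5. List the system Pods

    kubectl get pod -n kube-system

6. If flannel on a node reports "Error from server: Get https://192.168.66.21:10250/containerLogs/kube-system/kube-flannel-ds-jj7fz/kube-flannel: dial tcp 192.168.66.21:10250: connect: no route to host"

Fix that worked here: remove that node from the cluster, delete the container images on it, then join the node to the cluster again.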

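Production environment

Resource manifests

Resource categories

Namespace level

Workload resources

Pod, ReplicaSet, Deployment, StatefulSet, DaemonSet, Job, CronJob (ReplicationController is the legacy form and has been superseded by ReplicaSet)

Service discovery and load balancing resources (ServiceDiscoveryLoadBalance)

Service, Ingress, ...

Configuration and storage resources

- Volume (storage volumes)
- CSI (Container Storage Interface, which allows all kinds of third-party storage volumes to be plugged in)

Special volume types

- ConfigMap (a resource type used as a configuration center)
- Secret (stores sensitive data)
- DownwardAPI (exposes information about the Pod and its environment to the containers)

Cluster-level resources

Namespace, Node, Role, ClusterRole, RoleBinding, ClusterRoleBinding (note that Role and RoleBinding objects themselves are namespace-scoped)

Metadata resources

- HPA
- PodTemplate
- LimitRange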

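Resource manifests

Manifest definition

- In k8s, Pods that match our expectations are usually created from YAML files; such a YAML file is called a resource manifest

Common YAML fields

| Field | Type | Description |
|---|---|---|
| apiVersion | string | The k8s API version, commonly v1; the available versions can be listed with kubectl api-versions |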
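
To make the field table more concrete, here is a minimal manifest skeleton showing where the top-level fields sit. It is a sketch only: the names `demo-pod` and `demo` and the `busybox` image are assumptions chosen for illustration.

```yaml
apiVersion: v1            # API version, see `kubectl api-versions`
kind: Pod                 # resource type
metadata:                 # identifying data
  name: demo-pod          # hypothetical name
  namespace: default
  labels:
    app: demo             # hypothetical label
spec:                     # desired state of the resource
  containers:
  - name: demo
    image: busybox
    command: ['sh', '-c', 'sleep 3600']
```

The exact fields allowed under each key can be looked up with `kubectl explain pod.spec` and similar paths.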

Inspecting fields

- kubectl explain pod (the same information is in the documentation)
- kubectl explain pod.xxxxx

Viewing logs and common basic operations

- kubectl get node --show-labels
- kubectl get pod --show-labels [-w] [-o wide]
- kubectl describe pod xxx-pod
- kubectl logs xxx-pod -c <container-name>
- Delete a Pod

    kubectl delete pod xxx-pod

- Delete all Pods

    kubectl delete pod --all

- Open a shell in a container of a Pod

    kubectl exec xxx-pod [-c <container>] -it -- /bin/sh

- Show Pod labels

    kubectl get pod --show-labels

- Change a label

    kubectl label pod xxx-pod label=newlabel --overwrite=True

- Check which API version applies to a resource

    kubectl explain rs/deployment/pod/job

- Add a label

    kubectl label pod you-pod app=hello --overwrite=true

Pod lifecycle

Diagram

initC

initC template

    # init template
    apiVersion: v1
    kind: Pod
    metadata:
      name: myapp-pod
      labels:
        app: myapp
    spec:
      containers:
      - name: myapp-container
        image: busybox
        command: ['sh','-c','echo The app is running! && sleep 3600']
      initContainers:
      - name: init-myservice
        image: busybox
        command: ['sh','-c','until nslookup myservice; do echo waiting for myservice; sleep 2;done;']
      - name: init-mydb
        image: busybox
        command: ['sh','-c','until nslookup mydb; do echo waiting for mydb; sleep 2;done;']

Example Service templates:

    # svc template example
    kind: Service
    apiVersion: v1
    metadata:
      name: myservice
    spec:
      ports:
      - protocol: TCP
        port: 80
        targetPort: 9376
    ---
    kind: Service
    apiVersion: v1
    metadata:
      name: mydb
    spec:
      ports:
      - protocol: TCP
        port: 80
        targetPort: 9377

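initC explained

A Pod can have multiple init containers (initC).

Init containers are very similar to regular containers, except for two points:

- An init container always runs to successful completion
- Each init container must complete successfully before the next one starts

If an init container of a Pod fails, Kubernetes keeps restarting the Pod until the init container succeeds. However, if the Pod's restartPolicy is Never, it is not restarted.

Init containers start in order, after the network and data volumes (the pause container) have been initialized.

Probes

- A probe is a periodic diagnostic that the kubelet performs on a container

There are three types of handlers:

- ExecAction: runs the given command inside the container. The diagnostic succeeds if the command exits with return code 0.
- TCPSocketAction: performs a TCP check against the container's IP address on the given port. The diagnostic succeeds if the port is open.
- HTTPGetAction: performs an HTTP GET request against the container's IP address on the given port and path. The diagnostic succeeds if the response status code is at least 200 and below 400.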

livenessProbe (liveness check)

- exec

    apiVersion: v1
    kind: Pod
    metadata:
      name: liveness-exec-pod
      namespace: default
    spec:
      containers:
      - name: liveness-exec-container
        image: busybox
        imagePullPolicy: IfNotPresent
        command: ["/bin/sh","-c","touch /tmp/live ; sleep 60; rm -rf /tmp/live; sleep 3600"]
        livenessProbe:
          exec:
            command: ["test","-e","/tmp/live"]
          initialDelaySeconds: 1
          periodSeconds: 3

- HTTP GET

    apiVersion: v1
    kind: Pod
    metadata:
      name: liveness-httpget-pod
      namespace: default
    spec:
      containers:
      - name: liveness-httpget-container
        image: liuqt:v1
        imagePullPolicy: IfNotPresent
        ports:
        - name: http
          containerPort: 80
        livenessProbe:
          httpGet:
            port: http
            path: /hostname
          initialDelaySeconds: 1
          periodSeconds: 3
          timeoutSeconds: 10

- TCP

    apiVersion: v1
    kind: Pod
    metadata:
      name: probe-tcp
    spec:
      containers:
      - name: nginx
        image: liuqt:v1
        livenessProbe:
          initialDelaySeconds: 5
          timeoutSeconds: 1
          tcpSocket:
            port: 80

readinessProbe (readiness check)

- HTTP GET

    apiVersion: v1
    kind: Pod
    metadata:
      name: readiness-httpget-pod
      namespace: default
    spec:
      containers:
      - name: readiness-httpget-container
        image: liuqt:v1
        imagePullPolicy: IfNotPresent
        readinessProbe:
          httpGet:
            port: 80
            path: /hostname
          initialDelaySeconds: 1
          periodSeconds: 3

start / stop

Example

    apiVersion: v1
    kind: Pod
    metadata:
      name: lifecycle-demo
    spec:
      containers:
      - name: lifecycle-demo-container
        image: nginx
        lifecycle:
          postStart:
            exec:
              command: ["/bin/sh", "-c", "echo Hello from the postStart handler >/usr/share/message"]
          preStop:
            exec:
              command: ["/bin/sh", "-c", "echo Hello from the preStop handler >/usr/share/message"]

Pod controllers

ReplicationController and ReplicaSet

Use cases

- rs supports set-based selectors (label matching); rc has been superseded by rs
- Ensures that the specified number of Pod replicas is running
- Example

    apiVersion: extensions/v1beta1
    kind: ReplicaSet
    metadata:
      name: frontend
    spec:
      replicas: 3
      selector:
        matchLabels:
          tier: frontend
      template:
        metadata:
          labels:
            tier: frontend
        spec:
          containers:
          - name: liuqt
            image: liuqt:v1
            env:
            - name: GET_HOSTS_FROM
              value: dns
            ports:
            - containerPort: 80

Deployment

Features

- Supports rollback, scaling and image updates
- Creates the corresponding rs to manage the Pods

How a Deployment relates to ReplicaSets

Example

    apiVersion: extensions/v1beta1
    kind: Deployment
    metadata:
      name: nginx-deployment
    spec:
      replicas: 3
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: liuqt
            image: liuqt:v1
            ports:
            - containerPort: 80
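
The Deployment above uses `extensions/v1beta1`, which the 1.15.1 cluster in these notes still serves. On Kubernetes 1.16 and later that API group no longer serves Deployments, so the same object would have to be written against `apps/v1`, which requires an explicit selector. A sketch of the equivalent manifest, reusing the names from the example above:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 3
  selector:               # required in apps/v1; must match the template labels
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: liuqt
        image: liuqt:v1
        ports:
        - containerPort: 80
```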

Create

    # deployment.yaml is the manifest above
    kubectl create -f deployment.yaml --record
    # --record stores the command with each revision, which makes it easy to see what changed per revision
    # (in practice it is rarely needed)

Scale out

    kubectl scale deployment nginx-deployment --replicas 10

Update the image

    kubectl set image deployment/nginx-deployment liuqt=liuqt:v2

Roll back

    # Roll back to the previous revision
    kubectl rollout undo deployment/nginx-deployment
    # Check the rollout status
    kubectl rollout status deployment/nginx-deployment
    # List the historical ReplicaSets
    kubectl get rs

Other operations

    kubectl set image deployment/nginx-deployment nginx=nginx:1.91
    kubectl rollout status deployments nginx-deployment
    # Roll back to the previous revision
    kubectl rollout undo deployment/nginx-deployment
    # Roll back to a specific revision: list the history, then pick one
    kubectl rollout history deployment/nginx-deployment
    kubectl rollout undo deployment/nginx-deployment --to-revision=2
    # rollout history also accepts --revision=N to show a single historical revision
    # Pause the Deployment's rollout
    kubectl rollout pause deployment/nginx-deployment

DaemonSet

Use cases

- Exactly one replica runs on every node, for example log collection or monitoring

Example

    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: daemonset-example
      labels:
        app: daemonset
    spec:
      selector:
        matchLabels:
          name: daemonset-example
      template:
        metadata:
          labels:
            name: daemonset-example
        spec:
          containers:
          - name: daemonset-example
            image: liuqt:v1

Job

Use cases

- One-off tasks, such as a computation

Example

    # Compute pi to 2000 digits
    apiVersion: batch/v1
    kind: Job
    metadata:
      name: pi
    spec:
      template:
        metadata:
          name: pi
        spec:
          containers:
          - name: pi
            image: perl
            command: ["perl","-Mbignum=bpi","-wle","print bpi(2000)"]
          restartPolicy: Never

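CronJob

Use cases

- Scheduled tasks: database backups, sending email
- Creates Jobs on a schedule

Concurrency policy

- Allow (default): Jobs may run concurrently
- Forbid: concurrent runs are not allowed; if the previous run has not finished, the next one is skipped
- Replace: cancels the currently running Job and replaces it with a new one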
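
The policy is set through `spec.concurrencyPolicy`. The sketch below is a hypothetical variant of the CronJob in these notes (the name `hello-forbid` and the 90-second sleep are assumptions): each run deliberately lasts longer than the one-minute schedule, so with `Forbid` the overlapping run should be skipped.

```yaml
apiVersion: batch/v1beta1
kind: CronJob
metadata:
  name: hello-forbid            # hypothetical name
spec:
  schedule: "*/1 * * * *"
  concurrencyPolicy: Forbid     # Allow (default) | Forbid | Replace
  jobTemplate:
    spec:
      template:
        spec:
          containers:
          - name: hello
            image: busybox
            # Runs longer than the schedule interval on purpose
            command: ['sh', '-c', 'date; sleep 90']
          restartPolicy: OnFailure
```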

Example

    # Print a message on a schedule
    apiVersion: batch/v1beta1
    kind: CronJob
    metadata:
      name: hello
    spec:
      schedule: "*/1 * * * *"
      jobTemplate:
        spec:
          template:
            spec:
              containers:
              - name: hello
                image: busybox
                args:
                - /bin/sh
                - -c
                - date; echo Hello from the Kubernetes cluster
              restartPolicy: OnFailure

StatefulSet

- For stateful services such as MySQL or MongoDB
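
These notes give no StatefulSet manifest, so the following is only a rough sketch of what one for MySQL might look like. Everything in it is an assumption: the names, the `mysql:5.7` image, the placeholder password and the 1Gi volume size. It also assumes the cluster can provision a PersistentVolume for the claim, which the lab cluster described here may not be able to do without extra setup.

```yaml
# Headless Service that gives each replica a stable DNS name
apiVersion: v1
kind: Service
metadata:
  name: mysql-headless          # hypothetical name
spec:
  clusterIP: None
  selector:
    app: mysql
  ports:
  - port: 3306
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: mysql
spec:
  serviceName: mysql-headless   # must reference the headless Service
  replicas: 1
  selector:
    matchLabels:
      app: mysql
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
      - name: mysql
        image: mysql:5.7
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: "change-me"    # placeholder; a Secret would be the better place
        ports:
        - containerPort: 3306
        volumeMounts:
        - name: data
          mountPath: /var/lib/mysql
  volumeClaimTemplates:         # one PersistentVolumeClaim per replica
  - metadata:
      name: data
    spec:
      accessModes: ["ReadWriteOnce"]
      resources:
        requests:
          storage: 1Gi
```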

Horizontal Pod Autoscaling

- Drives another controller (for example a Deployment) to scale its replica count automatically
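
As a sketch of how that looks in a manifest, the HorizontalPodAutoscaler below targets the `nginx-deployment` Deployment from the earlier example. The HPA name and the replica and CPU numbers are assumptions, and the sketch presumes that a metrics source such as metrics-server is installed and that the containers declare CPU requests, neither of which is covered in these notes.

```yaml
apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  name: nginx-deployment-hpa    # hypothetical name
spec:
  scaleTargetRef:               # the controller that the HPA scales
    apiVersion: apps/v1
    kind: Deployment
    name: nginx-deployment
  minReplicas: 2
  maxReplicas: 10
  targetCPUUtilizationPercentage: 50
```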

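Service discovery

The Service concept

- Comparable to a microservice

Service types

ClusterIP

- The default type; automatically allocates a virtual IP that is reachable only from inside the cluster

Description

clusterIP mainly relies on iptables on every node to forward traffic sent to the clusterIP and its port to kube-proxy.

kube-proxy then applies its own load-balancing method, looks up the addresses and ports of the Pods behind the Service, and forwards the traffic to the address and port of the chosen Pod.

Diagram

The following components work together to provide the behavior shown in the diagram:

1. apiserver: the user sends a create-service command to the apiserver through kubectl; the apiserver receives the request and stores the data in etcd
2. kube-proxy: every node runs a process called kube-proxy, which watches for changes to Services and Pods and writes those changes into the local iptables rules
3. iptables: uses NAT and related techniques to redirect traffic for the virtual IP to the endpoints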

Example

- deployment

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: myapp-deploy
      namespace: default
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: myapp
          release: stabel
      template:
        metadata:
          labels:
            app: myapp
            release: stabel
            env: test
        spec:
          containers:
          - name: myapp
            image: liuqt:v1
            imagePullPolicy: IfNotPresent
            ports:
            - name: http
              containerPort: 80

- svc

    apiVersion: v1
    kind: Service
    metadata:
      name: myapp
      namespace: default
    spec:
      type: ClusterIP
      selector:
        app: myapp
        release: stabel
      ports:
      - name: http
        port: 80
        targetPort: 80

NodePort

How it works

nodePort opens a port on each node; traffic arriving at that port is handed to kube-proxy, which passes it on to the corresponding Pod.

Example

- deployment: reuse the one from the ClusterIP example above
- svc

    # Opens a random port on every node and master for external access
    apiVersion: v1
    kind: Service
    metadata:
      name: myapp
      namespace: default
    spec:
      type: NodePort
      selector:
        app: myapp
        release: stabel
      ports:
      - name: http
        port: 80
        targetPort: 80
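
When a predictable port is preferable to a random one, the port can be pinned with the `nodePort` field. The following is a sketch under assumptions: the Service name `myapp-fixed-port` and the port 30080 are illustrative, and the value has to fall inside the cluster's NodePort range (30000-32767 unless the API server is configured otherwise).

```yaml
apiVersion: v1
kind: Service
metadata:
  name: myapp-fixed-port   # hypothetical name
  namespace: default
spec:
  type: NodePort
  selector:
    app: myapp
    release: stabel
  ports:
  - name: http
    port: 80
    targetPort: 80
    nodePort: 30080        # fixed port opened on every node
```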

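LoadBalancer

- Needs to run on cloud servers with a cloud provider, so it is of little use in a local lab
- Builds on NodePort: a cloud provider creates an external load balancer that forwards requests to the NodePort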
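
For completeness, a LoadBalancer Service differs from the NodePort example only in its `type`. The sketch below reuses the `myapp` selector from these notes; the Service name is an assumption. On a cluster without a cloud provider integration, such as the VMware lab described here, the external IP is expected to stay pending.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: myapp-lb           # hypothetical name
  namespace: default
spec:
  type: LoadBalancer       # the cloud provider provisions the external load balancer
  selector:
    app: myapp
    release: stabel
  ports:
  - name: http
    port: 80
    targetPort: 80
```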

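ExternalName

- Brings a service that lives outside the cluster into the cluster so it can be used directly from inside. No proxy of any kind is created; this is only supported by kube-dns in Kubernetes 1.7 or later
- For example, when Pods need to reach an external MySQL, define a mysql Service for them to use

Example

    kind: Service
    apiVersion: v1
    metadata:
      name: mysql-service
      namespace: default
    spec:
      type: ExternalName
      # Replace with the DNS name of your MySQL host; externalName is a host name, not an IP address
      externalName: mysql.example.com
    # mysql-service.default.svc.cluster.local then resolves to your MySQL host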

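VIP and Service proxies

Details

In a Kubernetes cluster, every Node runs a kube-proxy process.

kube-proxy implements a form of VIP (virtual IP) for Services of every type other than ExternalName.

In Kubernetes v1.0, the proxy ran entirely in userspace.

Kubernetes v1.1 added the iptables proxy, but it was not the default mode.

Since Kubernetes v1.2, the iptables proxy is the default.

Kubernetes v1.8.0-beta.0 added the ipvs proxy. It has to be enabled explicitly (mode: ipvs in the kube-proxy configuration); otherwise kube-proxy keeps using iptables.

In Kubernetes v1.0, a Service is a "layer 4" (TCP/UDP over IP) concept.

Kubernetes v1.1 added the Ingress API (beta) to represent "layer 7" (HTTP) services.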

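Proxy modes

- userspace proxy mode
- iptables proxy mode
- ipvs proxy mode, with these scheduling algorithms:
  - rr: round-robin
  - lc: least connections
  - dh: destination hashing
  - sh: source hashing
  - sed: shortest expected delay
  - nq: never queue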
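
The ipvs algorithm is chosen in the kube-proxy configuration. As a sketch, the KubeProxyConfiguration from the kubeadm config in these notes could be extended with an `ipvs.scheduler` entry; the choice of `rr` here is an assumption made for illustration.

```yaml
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
ipvs:
  scheduler: rr   # one of: rr, lc, dh, sh, sed, nq
```

Once kube-proxy runs in ipvs mode, `ipvsadm -Ln` on a node lists the virtual servers together with the scheduler in use (ipvsadm is among the packages installed in the environment setup).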

ingress

Purpose

- Ingress provides layer-7 proxying for services inside the cluster

Official site

- ingress
- NodePort mode is used here

    kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v0.40.2/deploy/static/provider/baremetal/deploy.yaml

- One pitfall in the YAML above

    # This fails outright on my cluster, which runs k8s 1.15.1
    apiVersion: admissionregistration.k8s.io/v1
    kind: ValidatingWebhookConfiguration
    # According to kubectl explain ValidatingWebhookConfiguration,
    # apiVersion: admissionregistration.k8s.io/v1 should be changed to
    apiVersion: admissionregistration.k8s.io/v1beta1

- Other notes

  Applying the single deploy.yaml is enough to enable the ingress proxy.

  The images that deploy.yaml needs may fail to download. They can be pulled through the Alibaba Cloud Container Registry service and then re-tagged manually.

Experiment 1: HTTP proxy

- deployment and service

    apiVersion: extensions/v1beta1
    kind: Deployment
    metadata:
      name: nginx-deplyment1
    spec:
      replicas: 3
      template:
        metadata:
          labels:
            name: nginx1
        spec:
          containers:
          - name: nginx
            image: liuqt:v1
            imagePullPolicy: IfNotPresent
            ports:
            - containerPort: 80
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: nginx-svc1
    spec:
      ports:
      - port: 80
        targetPort: 80
        protocol: TCP
      selector:
        name: nginx1

- ingress

    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: nginx-test
    spec:
      rules:
      - host: www1.liuqt.com
        http:
          paths:
          - path: /
            backend:
              serviceName: nginx-svc1
              servicePort: 80

- Access: kubectl get svc -n ingress-nginx shows the port that is exposed externally
- Add the host name to the hosts file on your own machine; the service above is then reachable at www.xxx.com:11111 (the host name plus the exposed port)

Experiment 2

    apiVersion: extensions/v1beta1
    kind: Deployment
    metadata:
      name: deplyment1
    spec:
      replicas: 3
      template:
        metadata:
          labels:
            name: nginx1
        spec:
          containers:
          - name: nginx1
            image: liuqt:v1
            imagePullPolicy: IfNotPresent
            ports:
            - containerPort: 80
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: svc1
    spec:
      ports:
      - port: 80
        targetPort: 80
        protocol: TCP
      selector:
        name: nginx1
    ---
    apiVersion: extensions/v1beta1
    kind: Deployment
    metadata:
      name: deplyment2
    spec:
      replicas: 3
      template:
        metadata:
          labels:
            name: nginx2
        spec:
          containers:
          - name: nginx2
            image: liuqt:v2
            imagePullPolicy: IfNotPresent
            ports:
            - containerPort: 80
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: svc2
    spec:
      ports:
      - port: 80
        targetPort: 80
        protocol: TCP
      selector:
        name: nginx2

- ingress

    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: nginx-test
    spec:
      rules:
      - host: www1.liuqt.com
        http:
          paths:
          - path: /
            backend:
              serviceName: svc1
              servicePort: 80
      - host: www2.liuqt.com
        http:
          paths:
          - path: /
            backend:
              serviceName: svc2
              servicePort: 80

- Access it the same way as in experiment 1

Experiment 3: HTTPS

- Reference: example
- Create the certificate

    openssl req -x509 -sha256 -nodes -days 365 -newkey rsa:2048 -keyout tls.key -out tls.crt -subj "/CN=nginxsvc/O=nginxsvc"
    kubectl create secret tls tls-secret --key tls.key --cert tls.crt

- deployment
- ingress

    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: nginx-test
    spec:
      tls:
      - hosts:
        - www2.liuqt.com
        secretName: tls-secret
      rules:
      - host: www2.liuqt.com
        http:
          paths:
          - path: /
            backend:
              serviceName: nginx-svc
              servicePort: 80

Storage

configMap

Explanation

Many applications read their configuration from configuration files, command-line arguments or environment variables. The ConfigMap API provides a mechanism for injecting configuration into containers. A ConfigMap can hold a single property as well as an entire configuration file or a JSON blob.

Creating a ConfigMap

1. From a directory

    # Create from a directory
    mkdir config
    echo a>config/a
    echo b>config/b
    kubectl create configmap special-config --from-file=config/
    kubectl get configmap special-config -o go-template='{{.data}}'

2. From a file

    echo -e "a=b\nc=d" | tee config.env
    # Either store the whole file under one key ...
    kubectl create configmap special-config --from-file=config.env
    # ... or turn every line of the file into its own key
    kubectl create configmap special-config --from-env-file=config.env
    kubectl get configmap special-config -o go-template='{{.data}}'
    kubectl get configmap special-config -o yaml

3. From literal values

    kubectl create configmap special-config --from-literal=special_how=very --from-literal=special_type=charm
    kubectl get configmaps special-config -o yaml

4. From YAML

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: special-config
      namespace: default
    data:
      special.how: very
      special.type: charm

Using a ConfigMap in a Pod

1. Using a ConfigMap for environment variables

- Example

    apiVersion: v1
    kind: Pod
    metadata:
      name: test-pod
    spec:
      containers:
      - name: test-container
        image: busybox
        command: [ "/bin/sh", "-c", "env" ]
        env:
        - name: SPECIAL_LEVEL_KEY
          valueFrom:
            configMapKeyRef:
              name: special-config
              key: special.how
        - name: SPECIAL_TYPE_KEY
          valueFrom:
            configMapKeyRef:
              name: special-config
              key: special.type
        envFrom:
        - configMapRef:
            name: env-config
      restartPolicy: Never
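
The Pod above also pulls in a ConfigMap named `env-config` through `envFrom`, which these notes do not define. A minimal sketch of such a ConfigMap follows; the `log_level: INFO` entry is an assumption. Every key becomes an environment variable of the same name in the container.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: env-config
  namespace: default
data:
  log_level: INFO   # assumed key and value; exposed as the env var log_level
```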

2. Using a ConfigMap to set command-line arguments

- Example

    # To use ConfigMap data as command-line arguments, first store it in environment variables,
    # then reference those variables with the $(VAR_NAME) syntax.
    apiVersion: v1
    kind: Pod
    metadata:
      name: dapi-test-pod
    spec:
      containers:
      - name: test-container
        image: gcr.io/google_containers/busybox
        command: [ "/bin/sh", "-c", "echo $(SPECIAL_LEVEL_KEY) $(SPECIAL_TYPE_KEY)" ]
        env:
        - name: SPECIAL_LEVEL_KEY
          valueFrom:
            configMapKeyRef:
              name: special-config
              key: special.how
        - name: SPECIAL_TYPE_KEY
          valueFrom:
            configMapKeyRef:
              name: special-config
              key: special.type
      restartPolicy: Never

3. Mounting a ConfigMap as files or a directory through a volume

- Example

    # Mounts the ConfigMap at /etc/config in the Pod. Every key-value pair becomes a file:
    # the key is the file name and the value is the file content.
    apiVersion: v1
    kind: Pod
    metadata:
      name: vol-test-pod
    spec:
      containers:
      - name: test-container
        image: gcr.io/google_containers/busybox
        command: [ "/bin/sh", "-c", "cat /etc/config/special.how" ]
        volumeMounts:
        - name: config-volume
          mountPath: /etc/config
      volumes:
      - name: config-volume
        configMap:
          name: special-config
      restartPolicy: Never

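Hot-updating a ConfigMap

Editing the configMap

- kubectl edit configmap log-config
- After changing the value of a key, wait about 10 seconds and then check the value inside the Pod again

Rolling Pods after a ConfigMap update

- Updating a ConfigMap does not currently trigger a rolling update of the Pods that use it; a rolling update can be forced by changing the Pod annotations

    kubectl patch deployment my-nginx --patch '{"spec": {"template": {"metadata": {"annotations":{"version/config": "20190411" }}}}}'

After a ConfigMap is updated:

- Environment variables (Env) populated from the ConfigMap are not updated
- Data in a Volume mounted from the ConfigMap is updated after a delay (about 10 seconds in my tests)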
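
The `log-config` ConfigMap and the `my-nginx` Deployment used by the commands above are not shown in these notes. A sketch of what they might look like, so that the hot-update behavior can be tried out; the `log_level` key, the `run: my-nginx` label and the mount path are assumptions.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: log-config
data:
  log_level: INFO             # assumed key; edit it to observe the hot update
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      run: my-nginx
  template:
    metadata:
      labels:
        run: my-nginx
    spec:
      containers:
      - name: my-nginx
        image: liuqt:v1
        ports:
        - containerPort: 80
        volumeMounts:
        - name: config-volume
          mountPath: /etc/config   # log_level appears as the file /etc/config/log_level
      volumes:
      - name: config-volume
        configMap:
          name: log-config
```

After `kubectl edit configmap log-config`, reading `/etc/config/log_level` inside the Pod with `kubectl exec` should show the new value once the volume has been refreshed.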

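Secret

- Solves the problem of configuring sensitive data such as passwords, tokens and keys

The three Secret types

Service Account:

- Used to access the Kubernetes API. It is created automatically by Kubernetes and mounted automatically into the Pod at /run/secrets/kubernetes.io/serviceaccount
- Inspect it

    kubectl exec xxx-pod-xxx ls /run/secrets/kubernetes.io/serviceaccount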

Opaque

- A Secret whose values are base64-encoded, used to store passwords, keys and the like
- Example

    echo -n "admin" | base64
    YWRtaW4=
    echo -n "1f2d1e2e67df" | base64
    MWYyZDFlMmU2N2Rm

- YAML example

    apiVersion: v1
    kind: Secret
    metadata:
      name: mysecret
    type: Opaque
    data:
      password: MWYyZDFlMmU2N2Rm # pa
      username: YWRtaW4=

- Create the secret

    kubectl create -f secrets.yml

- Usage 1: as a Volume

    apiVersion: v1
    kind: Pod
    metadata:
      labels:
        name: db
      name: db
    spec:
      volumes:
      - name: secrets
        secret:
          secretName: mysecret
      containers:
      - image: nginx:v1
        name: db
        volumeMounts:
        - name: secrets
          mountPath: "/etc/secrets"
          readOnly: true
        ports:
        - name: cp
          containerPort: 80
          hostPort: 80

- Usage 2: exporting the Secret into environment variables

    apiVersion: extensions/v1beta1
    kind: Deployment
    metadata:
      name: wordpress-deployment
    spec:
      replicas: 2
      strategy:
        type: RollingUpdate
      template:
        metadata:
          labels:
            app: wordpress
            visualize: "true"
        spec:
          containers:
          - name: "wordpress"
            image: "wordpress"
            ports:
            - containerPort: 80
            env:
            - name: WORDPRESS_DB_USER
              valueFrom:
                secretKeyRef:
                  name: mysecret
                  key: username
            - name: WORDPRESS_DB_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: mysecret
                  key: password

kubernetes.io/dockerconfigjson

- Stores the credentials for a private docker registry (automatic login for images that require authentication)
- Example

    kubectl create secret docker-registry myregistrykey \
      --docker-server=DOCKER_REGISTRY_SERVER --docker-username=DOCKER_USER \
      --docker-password=DOCKER_PASSWORD --docker-email=DOCKER_EMAIL

- Use it when creating a Pod

    # imagePullSecrets references the myregistrykey secret created above
    apiVersion: v1
    kind: Pod
    metadata:
      name: foo
    spec:
      containers:
      - name: foo
        image: app:v1
      imagePullSecrets:
      - name: myregistrykey

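Volume

- See the Chinese Kubernetes documentation for reference

Currently supported types

emptyDir, hostPath, gcePersistentDisk, awsElasticBlockStore, nfs, iscsi, glusterfs, rbd, gitRepo, secret, persistentVolumeClaim

emptyDir

Use cases

What an emptyDir volume is used for:

- Scratch space, for example disk-based data processing
- Checkpoints for recovering from a crash
- Holding files that one container produces while another (for example a web server) serves them. Note that emptyDir data is deleted together with the Pod, so it is not suited to data that must outlive the Pod

Example

    apiVersion: v1
    kind: Pod
    metadata:
      name: test-pd
    spec:
      containers:
      - image: liuqt:v1
        name: test-container
        volumeMounts:
        - mountPath: /cache
          name: cache-volume
      volumes:
      - name: cache-volume
        emptyDir: {}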

hostPath

Use cases

A hostPath volume mounts a file or directory from the host into the Pod. It is not needed very often, but for some applications it provides a powerful escape hatch.

For example, hostPath may be needed in the following cases:

- An application needs access to Docker internals; mount the host's /var/lib/docker as a hostPath
- Running cAdvisor in a container; mount /dev/cgroups

Example

    apiVersion: v1
    kind: Pod
    metadata:
      name: test-pd
    spec:
      containers:
      - image: k8s.gcr.io/test-webserver
        name: test-container
        volumeMounts:
        - mountPath: /test-pd
          name: test-volume
      volumes:
      - name: test-volume
        hostPath:
          # directory location on host
          path: /data
          # this field is optional
          type: Directory

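Cluster scheduling

Scheduler

- Its main task is to assign the defined Pods to nodes in the cluster

Scheduler affinity

Node affinity

- Explanation

  Node affinity (affinity between a Pod and a node)

  - Soft policy: classes are being assigned, and I would like to be in teacher Zhang San's class
  - Hard policy: I must be in teacher Zhang San's class

  Pod affinity

  - Soft policy: classes are being assigned; I get on well with my deskmate and would prefer to end up in the same class
  - Hard policy: classes are being assigned; I get on well with my deskmate and must be in the same class

- pod.spec.affinity.nodeAffinity

Soft policy

- preferredDuringSchedulingIgnoredDuringExecution
- Example

    apiVersion: v1
    kind: Pod
    metadata:
      name: affinity
      labels:
        app: node-affinity-pod
    spec:
      containers:
      - name: with-node-affinity
        image: liuqt:v1
      affinity:
        nodeAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 1
            preference:
              matchExpressions:
              - key: kubernetes.io/hostname
                operator: In
                values:
                - k8s-node100    # soft policy: the node does not exist, so the Pod is scheduled anywhere
                # - k8s-node02   # soft policy: the Pod is preferably scheduled on node02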

Hard policy

- requiredDuringSchedulingIgnoredDuringExecution
- Example

    apiVersion: v1
    kind: Pod
    metadata:
      name: affinity
      labels:                 # labels matter: they make it easy to associate the Pod from a deployment
        app: node-affinity-pod
    spec:
      containers:
      - name: with-node-affinity
        image: liuqt:v1
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/hostname
                operator: NotIn
                values:
                - k8s-node02    # hard policy: never schedule on node02
                # With operator In and a node that does not exist (for example k8s-node100),
                # the Pod stays Pending forever

Soft and hard combined

    apiVersion: v1
    kind: Pod
    metadata:
      name: affinity
      labels:                 # labels matter: they make it easy to associate the Pod from a deployment
        app: node-affinity-pod
    spec:
      containers:
      - name: with-node-affinity
        image: liuqt:v1
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/hostname
                operator: NotIn
                values:
                - k8s-node02    # hard policy: never schedule on node02
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 1
            preference:
              matchExpressions:
              - key: kubernetes.io/hostname
                operator: In
                values:
                - k8s-node01    # soft policy: if the node does not exist, the Pod is scheduled anywhere allowed

Operators

- In: the label's value is in a given list
- NotIn: the label's value is not in a given list
- Gt: the label's value is greater than a given value
- Lt: the label's value is less than a given value
- Exists: a given label exists
- DoesNotExist: a given label does not exist
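
The examples in these notes only use In and NotIn. As a sketch of the remaining operators, the Pod below requires a node that carries a `disk` label with any value (Exists) and whose `cpu-cores` label is greater than 4 (Gt). Both label names and the Pod name are hypothetical. Gt and Lt take a single value that is written as a string and compared as an integer.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: affinity-operators        # hypothetical name
spec:
  containers:
  - name: with-node-affinity
    image: liuqt:v1
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          - key: disk             # hypothetical label; only its presence matters
            operator: Exists
          - key: cpu-cores        # hypothetical numeric label
            operator: Gt
            values:
            - "4"
```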

Pod affinity

- Example

    apiVersion: v1
    kind: Pod
    metadata:
      name: pod-3
      labels:
        app: pod-3
    spec:
      containers:
      - name: pod-3
        image: hub.atguigu.com/library/myapp:v1
      affinity:
        podAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - pod1
            # A Pod labeled app=pod1 must already run in the same topology domain
            # topologyKey defines the topology domain
            topologyKey: kubernetes.io/hostname
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 1
            podAffinityTerm:
              labelSelector:
                matchExpressions:
                - key: app
                  operator: In
                  values:
                  - pod2
              topologyKey: kubernetes.io/hostname

- Summary

| Scheduling policy | Matches labels of | Operators | Topology domain support | Scheduling target |
|---|---|---|---|---|
| nodeAffinity | Node | In, NotIn, Exists, DoesNotExist, Gt, Lt | No | The specified node |
| podAffinity | Pod | In, NotIn, Exists, DoesNotExist | Yes | Same topology domain as the specified Pod |
| podAntiAffinity | Pod | In, NotIn, Exists, DoesNotExist | Yes | Different topology domain from the specified Pod |

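Taints and tolerations (Taint and Toleration)

- Analogy: a roommate who snores (the taint), and whether you can put up with it (the toleration)

Taints

- Set a taint on a node

    kubectl taint nodes k8s-node01 key=value:effect

- Every taint has a key and a value that act as its label (the value may be empty), and an effect that describes what the taint does
- effect supports three options
  - NoSchedule: k8s does not schedule Pods onto a Node with this taint
  - PreferNoSchedule: k8s tries to avoid scheduling Pods onto a Node with this taint
  - NoExecute: k8s does not schedule Pods onto a Node with this taint and also evicts the Pods already running on the Node

Viewing and removing taints

    # Set a taint
    kubectl taint nodes node1 key1=value1:NoSchedule
    # Look for the Taints field in the node description
    kubectl describe node node-name
    # Remove the taint
    kubectl taint nodes node1 key1:NoSchedule-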

Tolerations

- Set in pod.spec.tolerations
- Example

    apiVersion: v1
    kind: Pod
    metadata:
      name: pod-3
      labels:
        app: pod-3
    spec:
      containers:
      - name: pod-3
        image: hub.atguigu.com/library/myapp:v1
      tolerations:
      - key: "key1"
        operator: "Equal"
        value: "value1"
        effect: "NoSchedule"
        # tolerationSeconds: 3600   # only valid with effect NoExecute: the Pod is evicted after 3600s
      # key, value and effect must match the taint set on the Node

- When no key is given, all taint keys are tolerated

    tolerations:
    - operator: "Exists"

- When no effect is given, all effects of the taint are tolerated

    tolerations:
    - key: "key"
      operator: "Exists"

- With multiple masters, the following setting avoids wasting their resources

    kubectl taint nodes k8s-master01 node-role.kubernetes.io/master=:PreferNoSchedule

Pinning Pods to nodes

Pod.spec.nodeName

- Example

    apiVersion: extensions/v1beta1
    kind: Deployment
    metadata:
      name: myweb
    spec:
      replicas: 7
      template:
        metadata:
          labels:
            app: myweb
        spec:
          nodeName: k8s-node01    # the Pods only run on node01
          containers:
          - name: myweb
            image: app:v1
            ports:
            - containerPort: 80

Pod.spec.nodeSelector

- Example

    apiVersion: extensions/v1beta1
    kind: Deployment
    metadata:
      name: myweb
    spec:
      replicas: 2
      template:
        metadata:
          labels:
            app: myweb
        spec:
          nodeSelector:
            disk: ssd    # selects nodes that carry the label disk=ssd
          containers:
          - name: myweb
            image: app:v1
            ports:
            - containerPort: 80

- Label a node

    kubectl label node k8s-node01 disk=ssd

- Change the Deployment's replica count

    kubectl edit deployment xxx-deployment