9.17 hive高级语法01

hive高级语法

数据库(Database)

-

表的集合,HDFS中表现为一个文件夹

- 默认在hive.metastore.warehouse.dir属性目录下

-

如果没有指定数据库,默认使用default数据库

create database if not exists myhivebook; use myhivebook; show databases; describe database default; --more details than ’show’, such as location alter database myhivebook set owner user dayongd; drop database if exists myhivebook cascade;

数据表(Tables)

- 分为内部表和外部表

- 内部表(管理表)

- HDFS中为所属数据库目录下的子文件夹

- 数据完全由Hive管理,删除表(元数据)会删除数据

- 外部表(External Tables)

- 数据保存在指定位置的HDFS路径中

- Hive不完全管理数据,删除表(元数据)不会删除数据

- 面试题

- Hive内部表和外部表概念?区别?最适合的应用场景?

Hive建表语句

CREATE EXTERNAL TABLE IF NOT EXISTS employee_external (

name string,

work_place ARRAY,

sex_age STRUCT,

skills_score MAP,

depart_title MAP>

)

COMMENT 'This is an external table'

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '|'

COLLECTION ITEMS TERMINATED BY ','

MAP KEYS TERMINATED BY ':'

STORED AS TEXTFILE

LOCATION '/user/root/employee';

Hive建表 - 分隔符

- Hive中默认分隔符:

- 字段:Ctrl+A或^A(\001)

- 集合:Ctrl+B或^B(\002)

- 映射:Ctrl+C或^C(\003)

- 注意:

- 建表时指定分隔符只能用于非嵌套类型

- 嵌套类型由嵌套级别决定

- 数组中嵌套数组-外部数组是B,内部数组是C

- 映射中嵌套数组-外部映射是C,内部数组是D

Hive建表 - Storage SerDe

- SerDe:Serializer and Deserializer

- Hive支持不同类型的Storage SerDe

- LazySimpleSerDe: TEXTFILE

- BinarySerializerDeserializer: SEQUENCEFILE

- ColumnarSerDe: ORC, RCFILE

- ParquetHiveSerDe: PARQUET

- AvroSerDe: AVRO

- OpenCSVSerDe: for CST/TSV

- JSONSerDe

- RegExSerDe

- HBaseSerDe

CREATE TABLE test_serde_hb(

id string,

name string,

sex string,

age string

)

ROW FORMAT SERDE

'org.apache.hadoop.hive.hbase.HBaseSerDe'

STORED BY

'org.apache.hadoop.hive.hbase. HBaseStorageHandler'

WITH SERDEPROPERTIES (

"hbase.columns.mapping"=

":key,info:name,info:sex,info:age"

) TBLPROPERTIES("hbase.table.name" = "test_serde");

Hive建表高阶语句 - CTAS and WITH

- CTAS – as select方式建表

- CTAS不能创建partition, external, bucket table

CREATE TABLE ctas_employee as SELECT * FROM employee;

- CTE (CTAS with Common Table Expression)

CREATE TABLE cte_employee AS

WITH

r1 AS (SELECT name FROM r2 WHERE name = 'Michael'),

r2 AS (SELECT name FROM employee WHERE sex_age.sex= 'Male'),

r3 AS (SELECT name FROM employee WHERE sex_age.sex= 'Female')

SELECT * FROM r1 UNION ALL SELECT * FROM r3;

- Like

CREATE TABLE employee_like LIKE employee;

创建临时表

- 临时表是应用程序自动管理在复杂查询期间生成的中间数据的方法

- 表只对当前session有效,session退出后自动删除

- 表空间位于/tmp/hive-

(安全考虑) - 如果创建的临时表表名已存在,实际用的是临时表

CREATE TEMPORARY TABLE tmp_table_name1 (c1 string);

CREATE TEMPORARY TABLE tmp_table_name2 AS..

CREATE TEMPORARY TABLE tmp_table_name3 LIKE..

表操作-删除/修改表

- 删除表

DROP TABLE IF EXISTS employee [With PERGE];

TRUNCATE TABLE employee; -- 清空表数据

- 修改表(Alter针对元数据)

ALTER TABLE employee RENAME TO new_employee;

ALTER TABLE c_employee SET TBLPROPERTIES ('comment'='New name, comments');

ALTER TABLE employee_internal SET SERDEPROPERTIES ('field.delim' = '$’);

ALTER TABLE c_employee SET FILEFORMAT RCFILE; -- 修正表文件格式

-- 修改表的列操作

ALTER TABLE employee_internal CHANGE old_name new_name STRING;

-- 修改列名

ALTER TABLE c_employee ADD COLUMNS (work string); -- 添加列

ALTER TABLE c_employee REPLACE COLUMNS (name string); -- 替换列

Hive分区(Partitions)

- 分区主要用于提高性能

- 分区列的值将表划分为segments(文件夹)

- 查询时使用“分区”列和常规列类似

- 查询时Hive自动过滤掉不用于提高性能的分区

- 分为静态分区和动态分区

Hive分区操作 - 定义分区

CREATE TABLE employee_partitioned(

name string,

work_place ARRAY,

sex_age STRUCT,

skills_score MAP,

depart_title MAP> )

PARTITIONED BY (year INT, month INT)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '|'

COLLECTION ITEMS TERMINATED BY ','

MAP KEYS TERMINATED BY ':';

- 静态分区操作

ALTER TABLE employee_partitioned ADD

PARTITION (year=2019,month=3) PARTITION (year=2019,month=4);

ALTER TABLE employee_partitioned DROP PARTITION (year=2019, month=4);

Hive分区操作 - 动态分区

- 使用动态分区需设定属性

set hive.exec.dynamic.partition=true;

set hive.exec.dynamic.partition.modenonstrict;

- 动态分区设置方法

insert into table employee_partitioned partition(year, month)

select name,array('Toronto') as work_place,

named_struct("sex","male","age",30) as sex_age,

map("python",90) as skills_score,

map("r&d", array('developer')) as depart_title,

year(start_date) as year,month(start_date) as month

from employee_hr eh ;

分桶(Buckets)

- 分桶对应于HDFS中的文件

- 更高的查询处理效率

- 使抽样(sampling)更高效

- 根据“桶列”的哈希函数将数据进行分桶

- 分桶只有动态分桶

- SET hive.enforce.bucketing = true;

- 定义分桶

- CLUSTERED BY (employee_id) INTO 2 BUCKETS (最好是2的n次方)

- 必须使用INSERT方式加载数据

分桶抽样(Sampling)

-

随机抽样基于整行数据

SELECT * FROM table_name TABLESAMPLE(BUCKET 3 OUT OF 32 ON rand()) s; -

随机抽样基于指定列(使用分桶列更高效)

SELECT * FROM table_name TABLESAMPLE(BUCKET 3 OUT OF 32 ON id) s; -

随机抽样基于block size

SELECT * FROM table_name TABLESAMPLE(10 PERCENT) s; SELECT * FROM table_name TABLESAMPLE(1M) s; SELECT * FROM table_name TABLESAMPLE(10 rows) s;

Hive视图(Views)

- 视图概述

- 通过隐藏子查询、连接和函数来简化查询的逻辑结构

- 虚拟表,从真实表中选取数据

- 只保存定义,不存储数据

- 如果删除或更改基础表,则查询视图将失败

- 视图是只读的,不能插入或装载数据

应

- 用场景

- 将特定的列提供给用户,保护数据隐私

- 查询语句复杂的场景

Hive视图操作

-

视图操作命令:CREATE、SHOW、DROP、ALTER

CREATE VIEW view_name AS SELECT statement; -- 创建视图 -- 创建视图支持 CTE, ORDER BY, LIMIT, JOIN, etc. SHOW TABLES; -- 查找视图 (SHOW VIEWS 在 hive v2.2.0之后) SHOW CREATE TABLE view_name; -- 查看视图定义 DROP view_name; -- 删除视图 ALTER VIEW view_name SET TBLPROPERTIES ('comment' = 'This is a view'); --更改视图属性 ALTER VIEW view_name AS SELECT statement; -- 更改视图定义,

Hive测试图(Lateral View)

-

常与表生成函数结合使用,将函数的输入和输出连接

-

OUTER关键字:即使output为空也会生成结果

select name,work_place,loc from employee lateral view outer explode(split(null,',')) a as loc; -

支持多层级

select name,wps,skill,score from employee lateral view explode(work_place) work_place_single as wps lateral view explode(skills_score) sks as skill,score; -

通常用于规范化行或解析JSON

Hive查询 - SELECT基础

-

SELECT用于映射符合指定查询条件的行

-

Hive SELECT是数据库标准SQL的子集

-

使用方法类似于MySQL

SELECT 1; SELECT [DISTINCT] column_nam_list FROM table_name; SELECT * FROM table_name; SELECT * FROM employee WHERE name!='Lucy' LIMIT 5;

-

Hive查询 - CTE和嵌套查询

-

CTE(Common Table Expression)

-- CTE语法 WITH t1 AS (SELECT …) SELECT * FROM t1 -

嵌套查询

-- 嵌套查询示例 SELECT * FROM (SELECT * FROM employee) a;

Hive查询 - 进阶语句

-

列匹配正则表达式

SET hive.support.quoted.identifiers = none; SELECT `^o.*` FROM offers; -

虚拟列(Virtual Columns)

- 两个连续下划线,用于数据验证

- INPUT__FILE__NAME:Mapper Task的输入文件名称

- BLOCK__OFFSET__INSIDE__FILE:当前全局文件位置

- 两个连续下划线,用于数据验证

Hive JOIN - 关联查询

- 指对多表进行联合查询

- JOIN用于将两个或多个表中的行组合在一起查询

- 类似于SQL JOIN,但是Hive仅支持等值连接

- 内连接:INNER JOIN

- 外连接:OUTER JOIN

- RIGHT JOIN, LEFT JOIN, FULL OUTER JOIN

- 交叉连接:CROSS JOIN

- 隐式连接:Implicit JOIN

- JOIN发生在WHERE子句之前

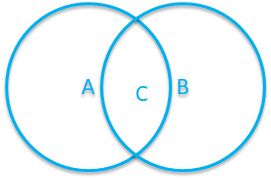

Area C = Circle1 JOIN Circle2

Area A = Circle1 LEFT OUTER JOIN Circle2

Area B = Circle1 RIGHT OUTER JOIN Circle2

AUBUC = Circle1 FULL OUTER JOIN Circle2

Hive JOIN - MAPJOIN

- MapJoin操作在Map端完成

- 小表关联大表

- 可进行不等值连接

- 开启join操作

- set hive.auto.convert.join = true(默认值)

- 运行时自动将连接转换为MAPJOIN

- MAPJOIN操作不支持:

- 在UNION ALL, LATERAL VIEW, GROUP BY/JOIN/SORT BY/CLUSTER BY/DISTRIBUTE BY等操作后面

- 在UNION, JOIN 以及其他 MAPJOIN之前

课后习题

文件employee.txt

Michael|Montreal,Toronto|Male,30|DB:80|Product:DeveloperLead

Will|Montreal|Male,35|Perl:85|Product:Lead,Test:Lead

Shelley|New York|Female,27|Python:80|Test:Lead,COE:Architect

Lucy|Vancouver|Female,57|Sales:89,HR:94|Sales:Lead

文件employee_id.txt

Michael|100|Montreal,Toronto|Male,30|DB:80|Product:DeveloperLead

Will|101|Montreal|Male,35|Perl:85|Product:Lead,Test:Lead

Steven|102|New York|Female,27|Python:80|Test:Lead,COE:Architect

Lucy|103|Vancouver|Female,57|Sales:89,HR:94|Sales:Lead

Mike|104|Montreal|Male,35|Perl:85|Product:Lead,Test:Lead

Shelley|105|New York|Female,27|Python:80|Test:Lead,COE:Architect

Luly|106|Vancouver|Female,57|Sales:89,HR:94|Sales:Lead

Lily|107|Montreal|Male,35|Perl:85|Product:Lead,Test:Lead

Shell|108|New York|Female,27|Python:80|Test:Lead,COE:Architect

Mich|109|Vancouver|Female,57|Sales:89,HR:94|Sales:Lead

Dayong|110|Montreal|Male,35|Perl:85|Product:Lead,Test:Lead

Sara|111|New York|Female,27|Python:80|Test:Lead,COE:Architect

Roman|112|Vancouver|Female,57|Sales:89,HR:94|Sales:Lead

Christine|113|Montreal|Male,35|Perl:85|Product:Lead,Test:Lead

Eman|114|New York|Female,27|Python:80|Test:Lead,COE:Architect

Alex|115|Vancouver|Female,57|Sales:89,HR:94|Sales:Lead

Alan|116|Montreal|Male,35|Perl:85|Product:Lead,Test:Lead

Andy|117|New York|Female,27|Python:80|Test:Lead,COE:Architect

Ryan|118|Vancouver|Female,57|Sales:89,HR:94|Sales:Lead

Rome|119|Montreal|Male,35|Perl:85|Product:Lead,Test:Lead

Lym|120|New York|Female,27|Python:80|Test:Lead,COE:Architect

Linm|121|Vancouver|Female,57|Sales:89,HR:94|Sales:Lead

Dach|122|Montreal|Male,35|Perl:85|Product:Lead,Test:Lead

Ilon|123|New York|Female,27|Python:80|Test:Lead,COE:Architect

Elaine|124|Vancouver|Female,57|Sales:89,HR:94|Sales:Lead

文件employee_hr.txt

Matias McGrirl|1|945-639-8596|2011-11-24

Gabriela Feldheim|2|706-232-4166|2017-12-16

Billy O'Driscoll|3|660-841-7326|2017-02-17

Kevina Rawet|4|955-643-0317|2012-01-05

Patty Entreis|5|571-792-2285|2013-06-11

Claudetta Sanderson|6|350-766-4559|2016-11-04

Bentley Oddie|7|446-519-0975|2016-05-02

Theressa Dowker|8|864-330-9976|2012-09-26

Jenica Belcham|9|347-248-4379|2011-05-02

Reube Preskett|10|918-740-2357|2015-03-26

Mary Skeldon|11|361-159-8710|2016-03-09

Ethelred Divisek|12|995-145-7392|2016-10-18

Field McGraith|13|149-133-9607|2015-10-06

Andeee Wiskar|14|315-207-5431|2012-05-10

Lloyd Nayshe|15|366-495-5398|2014-06-28

Mike Luipold|16|692-803-9373|2011-05-14

Tallie Swaine|17|570-709-6561|2011-08-06

Worth Ledbetter|18|905-586-2348|2012-09-25

Reine Leyborne|19|322-644-5798|2015-01-05

Norby Bellson|20|736-881-5785|2012-12-31

Nellie Jewar|21|551-505-3957|2017-06-18

Hoebart Deeth|22|780-240-0213|2011-09-19

Shel Haddrill|23|623-169-5495|2014-02-04

Christalle Cervantes|24|275-309-7794|2017-01-01

Dorita Miche|25|476-242-9769|2014-10-26

Conny Bowmen|26|398-181-4961|2011-10-21

Sabra O' Donohoe|27|327-773-8515|2015-01-28

Rahal Ashbe|28|561-777-0202|2012-12-13

Tye Greenstreet|29|499-510-1700|2012-01-17

Gordy Cristoforetti|30|955-110-7073|2015-10-09

Marsha Sharkey|31|221-696-5744|2017-01-29

Corbie Cruden|32|979-583-4252|2011-08-20

Anya Easen|33|428-602-5117|2011-08-16

Clea Brereton|34|909-198-4992|2018-01-08

Kimberley Pinnijar|35|608-177-4402|2015-06-03

Wilma Mackriell|36|637-304-3580|2012-06-23

Mitzi Gorman|37|134-675-2460|2017-07-16

Ashlin Rennick|38|816-635-9974|2014-04-20

Whitaker Shedd|39|614-792-6663|2016-05-19

Mandi Stronack|40|753-688-2327|2016-04-24

Niki Driffield|41|225-867-0712|2014-02-15

Regine Agirre|42|784-395-9982|2017-05-01

Evelina Craddy|43|274-850-6569|2017-06-14

Yasmin Ubsdall|44|679-739-9660|2012-03-10

Vivianna Shoreman|45|873-271-7100|2014-09-06

Chance Murra|46|248-160-3759|2017-12-31

Ferdy Adriano|47|735-447-2642|2013-11-11

Nikolos Tichner|48|869-871-9057|2014-02-15

Doro Rushman|49|861-337-3364|2011-08-27

Lela Hinzer|50|147-386-3735|2011-06-03

Hoyt Winspar|51|120-561-6266|2016-05-05

Vicki Rimington|52|257-204-8227|2014-11-21

Louis Dalwood|53|735-885-8087|2014-02-17

Joseph Zohrer|54|178-152-4726|2015-11-04

Kennett Senussi|55|182-904-2652|2017-05-20

Letta Musk|56|534-353-2038|2013-11-04

Giulietta Glentz|57|761-390-2806|2011-09-08

Wright Frostdyke|58|932-838-9710|2015-07-15

Bat Hannay|59|404-841-2981|2015-04-04

Devlen Hutsby|60|830-520-6401|2015-07-12

Lynnea Bembrigg|61|408-264-4116|2013-02-24

Udall Nelle|62|485-420-4327|2011-07-01

Kyle Matheson|63|153-149-2140|2011-07-03

Jarid Sprowell|64|848-408-9569|2017-11-08

Jeanie Griffitt|65|442-599-1231|2018-03-09

Joana Sleith|66|264-979-0388|2017-02-13

Doris Ilyushkin|67|877-472-3918|2015-08-03

Michaelina Rennels|68|949-522-9333|2012-07-05

Onofredo Butchard|69|392-833-3926|2017-11-05

Beatrice Amis|70|963-487-6585|2015-01-24

Joyan O'Hanlon|71|952-969-7279|2017-09-22

Mikaela Cardoo|72|960-275-3958|2015-01-24

Lori Dale|73|530-116-2773|2017-07-05

Stevena Roloff|74|241-314-8328|2015-12-21

Fayth Carayol|75|907-502-3752|2012-12-04

Carita Bruun|76|117-771-8056|2017-05-31

Darnell Hardwell|77|718-247-8505|2012-05-09

Jonathon Grealy|78|136-515-3637|2014-03-29

Laurice Rosini|79|352-594-3238|2017-02-15

Emelia Auten|80|311-899-1782|2014-09-10

Trace Fontelles|81|414-607-8366|2016-03-09

Hope Sket|82|461-595-7667|2017-09-30

Cilka Heijne|83|772-704-7366|2011-08-27

Maurise Gallico|84|546-158-7983|2011-12-21

Casey Greenfield|85|204-108-7707|2012-03-18

Wes Jaffrey|86|848-465-5131|2016-02-14

Jilly Eisikowitz|87|431-355-2777|2017-02-18

Auguste Kobel|88|562-494-1360|2012-02-29

Zackariah Pietrusiak|89|810-738-9846|2012-02-25

Pearline Marcq|90|200-835-9497|2016-02-10

Sayre Osbaldeston|91|340-132-2361|2011-11-30

Floyd Cano|92|133-768-6535|2016-02-27

Ciro Arendt|93|792-967-0588|2015-11-07

Auguste Kares|94|230-184-3438|2014-03-13

Skipp Spurden|95|747-133-1382|2012-03-15

Alyssa Prydden|96|963-170-0545|2014-11-07

Orlando Pallatina|97|354-125-1208|2012-07-12

Zoe Adacot|98|704-987-0702|2015-09-29

Blaine Fawdry|99|477-109-9014|2012-07-14

Cleon Haresnape|100|625-338-3965|2014-12-04

-- 1)Create an internal table employee_internal, same structure with employee

create table employee_internal(

name string,

address array<string>,

info struct<sex:string,age:int>,

technol map<string,int>,

jobs map<string,string>)

row format delimited

fields terminated by '|'

collection items terminated by ','

map keys terminated by ':'

lines terminated by '\n';

-- 2)Load data set 1 into this internal table (now, can use hdfs dfs –put)

load data local inpath '/root/employee.txt' into table employee;

-- 3)用 CTAS 建表 ctas_employee by copying data from employee_internal

create table cats_employee as select * from employee_internal;

-- 4)用 CTE 建表 cte_employee with male named Michael and all females

create table cte_employee as with

a1 as (select * from employee_internal where info.sex='Male'),

a2 as (select * from a1 where name='Michael'),

a3 as (select * from employee_internal where info.sex='Female')

select * from a2 union all select * from a3;

-- 5)用两种方法建立一个空表 by copying schema of employee_internal

create table emp_01 as select * from employee_internal where 1=0;

create table emp_02 like employee_internal;

-- 6)Rename cte_employee to c_employee

alter table cte_employee rename to c_employee;

-- 7)Rename column name to employee_name in employee_internal table and

-- also change column type

alter table employee_internal change name employee_name varchar(100);

-- 8)Add another column called work in c_employee table

insert into c_employee select * from employee_internal where employee_name<>'Michael' and info.sex='Male';

-- 9)Create empty partition table employee_partitioned using partition (year

-- int, month int)

create table employee_partitioned (

name string,

address array<string>,

info struct<sex:string,age:int>,

technol map<string,int>,

jobs map<string,string>)

partitioned by (year int,month int)

row format delimited

fields terminated by '|'

collection items terminated by ','

map keys terminated by ':'

lines terminated by '\n';

-- 10)Add two static partition to the table

alter table employee_partitioned add partition(year=2019,month=4) partition(year=2019,month=2);

-- 11)Load employee data into this partition table in one partition

load data local inpath '/root/employee.txt' into table employee_partitioned partition(year=2019,month=2);

-- 12)Create another external table employee_hr with data set 2

create external table employee_hr(

name string,

id int,

phone string,

date date)

row format delimited

fields terminated by '|'

lines terminated by '\n'

stored as textfile location '/data/2';

-- 13)Load more data from employee_hr into this employee_partitioned with dynamic

-- partition enabled partition by the onboard date (last column)

insert into employee_partitioned partition(year,month)

select name, array('Toronto') as work_place,

named_struct("sex","male","age",30) as sex_age,

map("python",90) as skills_score,

map("r&d", 'developer') as depart_title,

year(date),month(date) from employee_hr eh;

-- 14)Create another external table employee_id using data set 3

create external table employee_id(

name string,

id int,

address array<string>,

info struct<sex:string,age:int>,

technol map<string,int>,

jobs map<string,string>)

row format delimited

fields terminated by '|'

collection items terminated by ','

map keys terminated by ':'

lines terminated by '\n'

stored as textfile

location '/data/3';

-- 15)Create a bucket table employee_id_buckets cluster by employee_id

create external table employee_id_buckets(

name string,

id int,

address array<string>,

info struct<sex:string,age:int>,

technol map<string,int>,

jobs map<string,string>)

clustered by(id) into 3 buckets

row format delimited

fields terminated by '|'

collection items terminated by ','

map keys terminated by ':'

lines terminated by '\n';

set hive.enfore.bucketing=true;

-- 16)Load the bucket table from employee_id

load data local inpath '/root/employee_id.txt' into table employee_id_buckets;

-- 17)彻底清空 employee_partitioned(提示:数据和分区)

drop table employee_partitioned;