Using local-path-provisioner for local storage in k8s

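Traditional web projects usually store files, images and the like on the disk of the server the site is deployed on, so they are still accessible after the site restarts.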

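In k8s, however, a stateless pod that restarts can be scheduled onto any worker, and whatever it wrote inside its own container is discarded with the old container, so previously saved files may no longer be found.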

Prepare a test project:

Scenario 1: the file is stored inside the pod. Is it still there after the pod restarts?

For setting up the project and the pipeline, see: Completing your first CI/CD project on k8s with Rancher

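Create a TextController with two endpoints:

SaveText: takes a piece of text, saves it to a .txt file on the server and returns the file path.

GetText: takes a file name and returns that file as a download.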

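```csharp
using System;
using System.IO;
using System.Text;
using Microsoft.AspNetCore.Http;
using Microsoft.AspNetCore.Mvc;

namespace K8sStorageDemo.Controllers
{
    [Route("api/[controller]/[action]")]
    [ApiController]
    public class TextController : ControllerBase
    {
        // Directory inside the container that the text files are written to
        private readonly string basePath = "/home/admin/files";

        // GET api/Text/SaveText?text=...
        // Saves the text to a new <guid>.txt file and returns its full path
        [HttpGet]
        public string SaveText(string text)
        {
            return SaveFile(text);
        }

        // GET api/Text/GetText?filename=<guid>
        // Returns <guid>.txt as a download
        [HttpGet]
        public ActionResult GetText(string filename)
        {
            // Path.GetFileName drops any directory part, so "../" cannot escape basePath
            var path = Path.Combine(basePath, Path.GetFileName(filename) + ".txt");
            if (!System.IO.File.Exists(path))
            {
                return NotFound();
            }

            byte[] byteArr = System.IO.File.ReadAllBytes(path);
            string mimeType = "application/octet-stream";
            return new FileContentResult(byteArr, mimeType)
            {
                FileDownloadName = Path.GetFileName(path)
            };
        }

        private string SaveFile(string text)
        {
            // Creates basePath if needed; does nothing if it already exists
            Directory.CreateDirectory(basePath);

            // A fresh GUID per call, so an existing file is practically never overwritten
            var path = Path.Combine(basePath, $"{Guid.NewGuid()}.txt");

            // Create the file and write the text to it
            string createText = text + Environment.NewLine;
            System.IO.File.WriteAllText(path, createText, Encoding.UTF8);

            return path;
        }
    }
}
```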

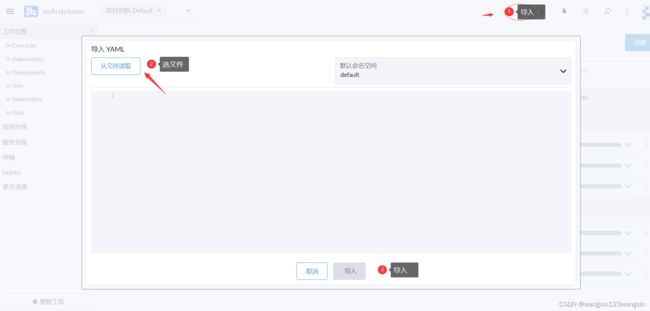

Prepare the ingress, K8sStorageDemo_ingress.yaml, and import it into the cluster:

```yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: k8sstoragedemo
  namespace: default
spec:
  rules:
    - host: www.k8sstoragedemo.cn
      http:
        paths:
          - path: /
            backend:
              serviceName: k8sstoragedemo
              servicePort: 80
```

After the Rancher pipeline has finished deploying, add an entry to your local hosts file (see: Service discovery and load balancing in k8s with ingress-nginx):

192.168.21.233 www.k8sstoragedemo.cn
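The extensions/v1beta1 Ingress API used above was removed in Kubernetes 1.22. On newer clusters the same rule would be written against networking.k8s.io/v1, roughly as sketched below; the ingressClassName value nginx is an assumption that depends on how ingress-nginx was installed in your cluster.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: k8sstoragedemo
  namespace: default
spec:
  ingressClassName: nginx        # assumption: name of the ingress-nginx IngressClass
  rules:
    - host: www.k8sstoragedemo.cn
      http:
        paths:
          - path: /
            pathType: Prefix     # required in v1
            backend:
              service:           # replaces serviceName/servicePort
                name: k8sstoragedemo
                port:
                  number: 80
```

If the cluster has a default IngressClass, the ingressClassName line can be dropped.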

Then request: http://www.k8sstoragedemo.cn/api/Text/SaveText?text=hello%20k8s

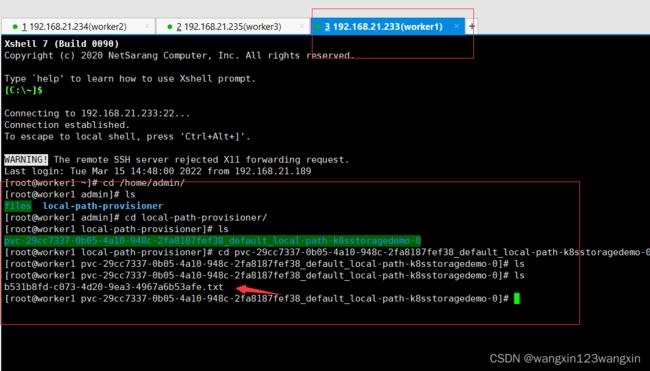

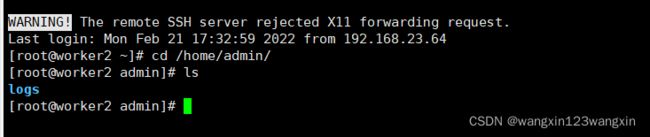

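Then look inside the container.

The file was created under /home/admin/files. The pod landed on the worker2 host, so SSH into worker2 and look for it there:

The folder does not exist on the node. And after restarting the service you will find that the previously generated file is gone as well, because it only lived in the container's own writable filesystem, which is thrown away when the container is recreated.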

Scenario 2: map the pod's folders onto the worker node. Slightly modify the deployment file deployment.yaml:

apiVersion: v1

kind: Service

metadata:

name: k8sstoragedemo

namespace: default

labels:

app: k8sstoragedemo

service: k8sstoragedemo

spec:

ports:

- port: 80

name: http

selector:

app: k8sstoragedemo

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: k8sstoragedemo

namespace: default

labels:

app: k8sstoragedemo

version: v1

spec:

replicas: 1

selector:

matchLabels:

app: k8sstoragedemo

version: v1

template:

metadata:

labels:

app: k8sstoragedemo

version: v1

spec:

containers:

- name: k8sstoragedemo

image: 192.168.21.4:8081/test/k8sstoragedemo:${CICD_GIT_BRANCH}-${CICD_GIT_COMMIT}

lifecycle:

postStart:

exec:

command:

- /bin/sh

- '-c'

- >-

mkdir -p /home/mount/${HOSTNAME}/files /home/mount/${HOSTNAME}/logs /home/admin/files /home/admin/logs

&& ln -s /home/mount/${HOSTNAME}/files /home/admin/files/k8sstoragedemo

&& ln -s /home/mount/${HOSTNAME}/logs /home/admin/logs/k8sstoragedemo

&& echo ${HOSTNAME}

volumeMounts:

- name: tz-config

mountPath: /etc/localtime

- name: files-volume

mountPath: /home/mount

- name: log-volume

mountPath: /home/mount

readinessProbe:

httpGet:

path: /health/status

port: 80

initialDelaySeconds: 10

periodSeconds: 5

ports:

- containerPort: 80

volumes:

- name: tz-config

hostPath:

path: /etc/localtime

- name: log-volume

hostPath:

path: /home/admin/logs

type: DirectoryOrCreate

- name: files-volume

hostPath:

path: /home/admin/files

type: DirectoryOrCreate

imagePullSecrets:

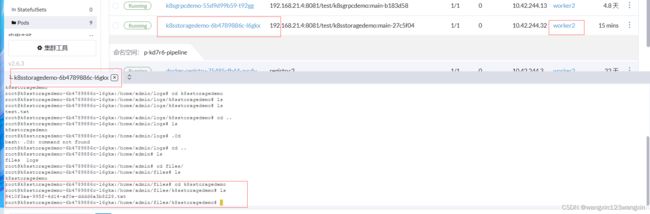

- name: mydockerhub重新部署后, 再次请求:http://www.k8sstoragedemo.cn/api/Text/SaveText?text=hello%20k8s'

However, if the service next starts on a node other than worker2, the file can no longer be read. That leads to scenario 3.
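The most direct way to keep the scenario-2 pod on worker2 is to pin it there with a nodeSelector on the built-in kubernetes.io/hostname label. A minimal sketch of the fragment to add under spec.template.spec of the Deployment above, assuming the node's hostname label value is worker2 (check with kubectl get nodes --show-labels):

```yaml
# Fragment for spec.template.spec of the scenario-2 Deployment, not a full manifest.
# Assumption: the node's kubernetes.io/hostname label is "worker2".
nodeSelector:
  kubernetes.io/hostname: worker2
```

The drawback is that the node name is hard-coded in the manifest: if worker2 goes down, the pod stays Pending rather than moving elsewhere. Scenario 3 gets the same binding without naming a node, because the node is chosen at first scheduling and recorded in the volume.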

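Scenario 3: after the pod restarts, it can still read the files it stored before (unless the node they were stored on, e.g. worker2, is gone for good).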

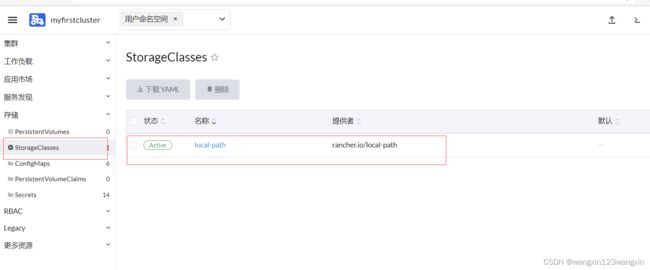

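Install local-path-provisioner. Prepare local-path-storage.yaml and apply it to the cluster (for example with kubectl apply -f local-path-storage.yaml):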

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: local-path-storage
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: local-path-provisioner-service-account
  namespace: local-path-storage
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: local-path-provisioner-role
rules:
  - apiGroups: [ "" ]
    resources: [ "nodes", "persistentvolumeclaims", "configmaps" ]
    verbs: [ "get", "list", "watch" ]
  - apiGroups: [ "" ]
    resources: [ "endpoints", "persistentvolumes", "pods" ]
    verbs: [ "*" ]
  - apiGroups: [ "" ]
    resources: [ "events" ]
    verbs: [ "create", "patch" ]
  - apiGroups: [ "storage.k8s.io" ]
    resources: [ "storageclasses" ]
    verbs: [ "get", "list", "watch" ]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: local-path-provisioner-bind
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: local-path-provisioner-role
subjects:
  - kind: ServiceAccount
    name: local-path-provisioner-service-account
    namespace: local-path-storage
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: local-path-provisioner
  namespace: local-path-storage
spec:
  replicas: 1
  selector:
    matchLabels:
      app: local-path-provisioner
  template:
    metadata:
      labels:
        app: local-path-provisioner
    spec:
      serviceAccountName: local-path-provisioner-service-account
      containers:
        - name: local-path-provisioner
          image: rancher/local-path-provisioner:v0.0.21
          imagePullPolicy: IfNotPresent
          command:
            - local-path-provisioner
            - --debug
            - start
            - --config
            - /etc/config/config.json
          volumeMounts:
            - name: config-volume
              mountPath: /etc/config/
          env:
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
      volumes:
        - name: config-volume
          configMap:
            name: local-path-config
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: local-path
provisioner: rancher.io/local-path
volumeBindingMode: WaitForFirstConsumer
reclaimPolicy: Delete
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: local-path-config
  namespace: local-path-storage
data:
  config.json: |-
    {
      "nodePathMap":[
        {
          "node":"DEFAULT_PATH_FOR_NON_LISTED_NODES",
          "paths":["/home/admin/local-path-provisioner"]
        }
      ]
    }
  setup: |-
    #!/bin/sh
    while getopts "m:s:p:" opt
    do
        case $opt in
            p)
            absolutePath=$OPTARG
            ;;
            s)
            sizeInBytes=$OPTARG
            ;;
            m)
            volMode=$OPTARG
            ;;
        esac
    done
    mkdir -m 0777 -p ${absolutePath}
  teardown: |-
    #!/bin/sh
    while getopts "m:s:p:" opt
    do
        case $opt in
            p)
            absolutePath=$OPTARG
            ;;
            s)
            sizeInBytes=$OPTARG
            ;;
            m)
            volMode=$OPTARG
            ;;
        esac
    done
    rm -rf ${absolutePath}
  helperPod.yaml: |-
    apiVersion: v1
    kind: Pod
    metadata:
      name: helper-pod
    spec:
      containers:
      - name: helper-pod
        image: busybox
```
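Before wiring the StorageClass into the application, a throwaway PVC plus a pod that uses it is a quick way to check that the provisioner works. A minimal sketch; the names local-path-pvc and volume-test and the 128Mi size are placeholders chosen for this example:

```yaml
# Hypothetical test objects; delete them once the check has passed.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: local-path-pvc
  namespace: default
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: local-path
  resources:
    requests:
      storage: 128Mi
---
apiVersion: v1
kind: Pod
metadata:
  name: volume-test
  namespace: default
spec:
  containers:
    - name: volume-test
      image: busybox
      # write a file into the provisioned volume, then keep the pod alive
      command: ["sh", "-c", "echo hello > /data/test.txt && sleep 3600"]
      volumeMounts:
        - name: data
          mountPath: /data
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: local-path-pvc
```

Because the StorageClass uses volumeBindingMode: WaitForFirstConsumer, the PVC stays Pending until the pod is scheduled. After that, kubectl get pv should list a new volume, and a directory for it should appear under /home/admin/local-path-provisioner on the pod's node. With reclaimPolicy: Delete, deleting the PVC removes that directory again.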

Modify the deployment file deployment.yaml; the parts that differ are marked with comments:

apiVersion: v1

kind: Service

metadata:

name: k8sstoragedemo

namespace: default

labels:

app: k8sstoragedemo

service: k8sstoragedemo

spec:

ports:

- port: 80

name: http

selector:

app: k8sstoragedemo

---

apiVersion: apps/v1

kind: StatefulSet #有状态

metadata:

name: k8sstoragedemo

namespace: default

labels:

app: k8sstoragedemo

version: v1

spec:

replicas: 1

selector:

matchLabels:

app: k8sstoragedemo

version: v1

template:

metadata:

labels:

app: k8sstoragedemo

version: v1

spec:

affinity: #POD亲和性 为了重启pod找到原来的主机(除非原来的主机挂了)

podAntiAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

- labelSelector:

matchExpressions:

- key: app

operator: In

values:

- k8sstoragedemo

topologyKey: kubernetes.io/hostname

containers:

- name: k8sstoragedemo

image: 192.168.21.4:8081/test/k8sstoragedemo:${CICD_GIT_BRANCH}-${CICD_GIT_COMMIT}

lifecycle:

postStart:

exec:

command:

- /bin/sh

- '-c'

- >-

mkdir -p /home/mount/${HOSTNAME}/files /home/mount/${HOSTNAME}/logs /home/admin/files /home/admin/logs

&& ln -s /home/mount/${HOSTNAME}/files /home/admin/files/k8sstoragedemo

&& ln -s /home/mount/${HOSTNAME}/logs /home/admin/logs/k8sstoragedemo

&& echo ${HOSTNAME}

volumeMounts:

- name: tz-config

mountPath: /etc/localtime

- name: files-volume

mountPath: /home/mount

- name: log-volume

mountPath: /home/mount

- name: local-path #使用local-path-storage

mountPath: /home/admin/loaclfiles #目录。主机上也有这样的目录

readinessProbe:

httpGet:

path: /health/status

port: 80

initialDelaySeconds: 10

periodSeconds: 5

ports:

- containerPort: 80

volumes:

- name: tz-config

hostPath:

path: /etc/localtime

- name: log-volume

hostPath:

path: /home/admin/logs

type: DirectoryOrCreate

- name: files-volume

hostPath:

path: /home/admin/files

type: DirectoryOrCreate

imagePullSecrets:

- name: mydockerhub

volumeClaimTemplates: #定义pv

- metadata:

name: local-path

spec:

accessModes: [ "ReadWriteOnce" ]

storageClassName: "local-path"

resources:

requests:

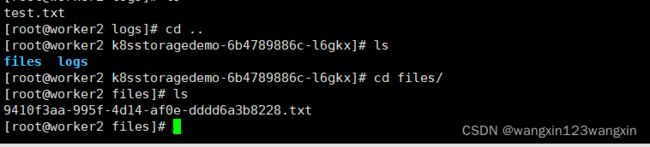

storage: 50Gi修改代码,把文件存新地址:/home/admin/loaclfiles

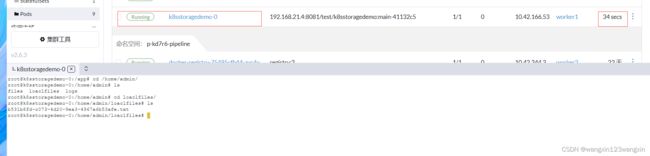

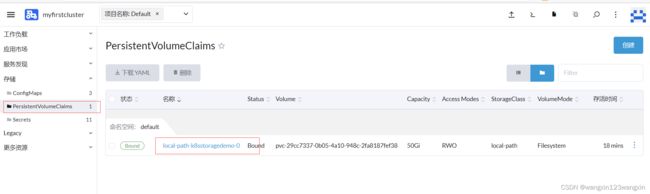

Commit the code, let the Rancher pipeline deploy it, and wait for the deployment to finish.

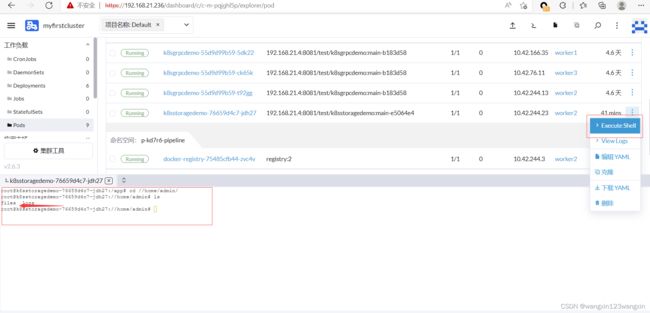

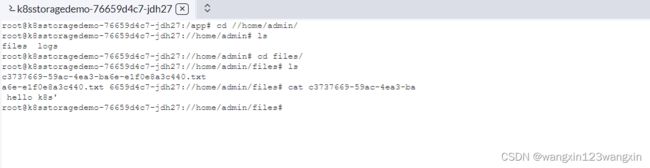

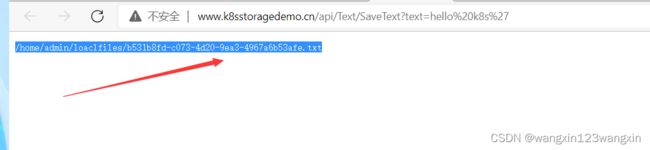

Request http://www.k8sstoragedemo.cn/api/Text/SaveText?text=hello%20k8s again to generate a file.

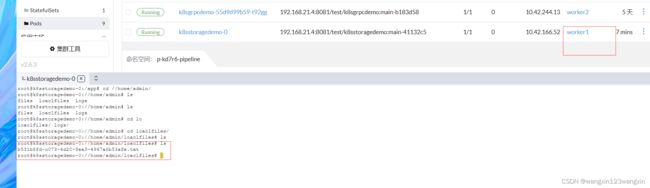

The file landed on worker1. Now delete the pod so that it gets recreated.

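Call the download endpoint to check whether the file can still be downloaded: http://www.k8sstoragedemo.cn/api/Text/GetText?filename=xxxxx, where xxxxx is the name of the file returned by the save endpoint (only the GUID, without the directory or the .txt extension, since GetText appends .txt itself). After the restart the file is still in its directory, and the download works normally.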
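Why does the recreated pod come back to worker1? The StatefulSet's PVC, named after the volumeClaimTemplates entry, the StatefulSet and the ordinal (here local-path-k8sstoragedemo-0), survives pod deletion, and the PV that local-path-provisioner created for it carries a node affinity for the node it was provisioned on, so the scheduler can only place the pod there. Below is a trimmed sketch of the relevant part of such a PV, assuming it was provisioned on worker1; the exact fields depend on the provisioner version, so inspect the real object with kubectl get pv -o yaml.

```yaml
# Trimmed excerpt, not a complete PersistentVolume.
# Assumption: the volume was provisioned on the node named worker1.
spec:
  storageClassName: local-path
  claimRef:
    namespace: default
    name: local-path-k8sstoragedemo-0
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - worker1
```

The same node affinity is why the data becomes unreachable if that node is lost for good: the pod then stays Pending until the node returns or the PVC is removed.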