k8s Deployment-32-Cluster Resource Management in k8s: CPU and Memory (Part 1)

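We have looked at how namespaces provide isolation. But can CPU and memory actually be controlled, and if so, how is that done in k8s? How are each pod's memory, CPU and disk kept in check?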

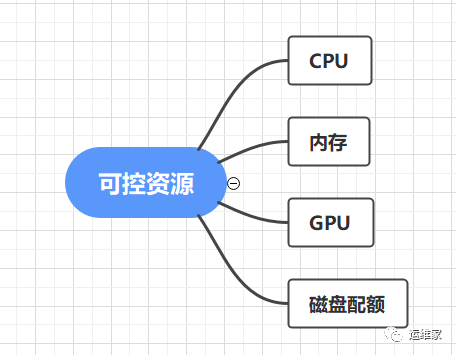

Controllable resources

First, we need to know which resources k8s can control, as shown in the figure:

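How do we allocate these resources? We need to plan the values in advance. In k8s there are two values to care about: requests and limits.

requests: the amount of a resource the container asks for. The scheduler only places the pod on a node that still has at least this much unreserved capacity.

limits: the maximum amount the container may use. A container that goes over its memory limit is killed; CPU usage above the limit is throttled.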
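To compare requests and limits it helps to express both in the same unit. The following is a minimal bash sketch that is not part of the original post; cpu_to_millicores and request_within_limit are hypothetical helpers. It converts CPU quantities such as 100m, 0.5 or 2 into millicores and checks the rule Kubernetes enforces for every container: a request may not be larger than the corresponding limit.

```shell
#!/usr/bin/env bash
# Hypothetical helper: convert a Kubernetes CPU quantity to millicores.
# "100m" is already in millicores; "0.5" or "2" are whole CPUs (x1000).
cpu_to_millicores() {
  local q="$1"
  case "$q" in
    *m) echo "${q%m}" ;;                                         # "100m" -> 100
    *)  awk -v v="$q" 'BEGIN { printf "%.0f\n", v * 1000 }' ;;  # "0.5" -> 500
  esac
}

# Hypothetical helper: succeed only if request <= limit (both CPU quantities).
request_within_limit() {
  local req lim
  req=$(cpu_to_millicores "$1")
  lim=$(cpu_to_millicores "$2")
  [ "$req" -le "$lim" ]
}

cpu_to_millicores 100m   # prints 100
cpu_to_millicores 0.5    # prints 500
request_within_limit 100m 200m && echo "ok: request <= limit"
```

Millicores are used because the CPU values in this post (100m, 200m, 20000m) are all written that way.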

Now that we have a rough idea, let's try it out.

Verifying resource allocation

1. First, check how many resources the cluster currently has:

```shell
[root@node1 ~]# kubectl get node
NAME    STATUS   ROLES    AGE   VERSION
node2   Ready    <none>   18d   v1.20.2
node3   Ready    <none>   18d   v1.20.2
[root@node1 ~]# kubectl describe node node2
# (part of the output omitted)
# Shows how much of the node's CPU, memory and other resources is already reserved by requests and limits
Allocated resources:
  (Total limits may be over 100 percent, i.e., overcommitted.)
  Resource           Requests     Limits
  --------           --------     ------
  cpu                550m (68%)   100m (12%)
  memory             190Mi (15%)  390Mi (31%)
  ephemeral-storage  0 (0%)       0 (0%)
  hugepages-1Gi      0 (0%)       0 (0%)
  hugepages-2Mi      0 (0%)       0 (0%)
Events:              <none>
[root@node1 ~]#
```
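The percentages under Allocated resources are the summed requests (or limits) divided by the node's allocatable capacity. The bash sketch below reproduces that arithmetic; alloc_percent and remaining are hypothetical helpers, and node2's allocatable CPU of 800m is an assumption: it is not shown in the output above, but it is consistent with both 550m giving 68% and 100m giving 12%. Since 550/800 is 68.75%, the output suggests kubectl drops the fraction instead of rounding.

```shell
#!/usr/bin/env bash
# Hypothetical helper: share of allocatable capacity, fraction dropped.
alloc_percent() {
  local used="$1" allocatable="$2"
  echo $(( used * 100 / allocatable ))   # integer division truncates
}

# Hypothetical helper: capacity still free for new requests.
remaining() {
  local requested="$1" allocatable="$2"
  echo $(( allocatable - requested ))
}

node2_cpu_alloc_m=800                      # ASSUMPTION: not in the output above
alloc_percent 550 "$node2_cpu_alloc_m"     # prints 68 (requests)
alloc_percent 100 "$node2_cpu_alloc_m"     # prints 12 (limits)
remaining 550 "$node2_cpu_alloc_m"         # prints 250 (millicores left)
```

Under this assumption only 250m of CPU would remain unreserved on node2, so a new pod requesting more than that could not be placed there.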

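2. Create a pod with CPU and memory settings. Here is its YAML file; compared with the previous post, the only change is the resources section (requests and limits) added to the Deployment.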

```shell
[root@node1 ~]# cd namespace/
[root@node1 namespace]# mkdir requests
[root@node1 namespace]# cd requests/
[root@node1 requests]# vim request-web-demo.yaml
```

```yaml
#deploy
apiVersion: apps/v1
kind: Deployment
metadata:
  name: tomcat-demo
spec:
  selector:
    matchLabels:
      app: tomcat-demo
  replicas: 1
  template:
    metadata:
      labels:
        app: tomcat-demo
    spec:
      containers:
      - name: tomcat-demo
        image: registry.cn-hangzhou.aliyuncs.com/liuyi01/tomcat:8.0.51-alpine
        ports:
        - containerPort: 8080
        # requests: used for scheduling; limits: hard upper bound at runtime
        resources:
          requests:
            memory: 100Mi
            cpu: 100m
          limits:
            memory: 100Mi
            cpu: 200m
---
#service
apiVersion: v1
kind: Service
metadata:
  name: tomcat-demo
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 8080
  selector:
    app: tomcat-demo
---
#ingress
# extensions/v1beta1 still works on v1.20 but is removed in v1.22 (use networking.k8s.io/v1 there)
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: tomcat-demo
spec:
  rules:
  - host: tomcat.yunweijia.com
    http:
      paths:
      - path: /
        backend:
          serviceName: tomcat-demo
          servicePort: 80
```

```shell
[root@node1 requests]# kubectl apply -f request-web-demo.yaml
deployment.apps/tomcat-demo configured
service/tomcat-demo unchanged
ingress.extensions/tomcat-demo configured
[root@node1 requests]#
```

3. Verify that the settings took effect:

```shell
[root@node1 requests]# kubectl get pods -o wide | grep tomcat
tomcat-demo-577d557cb4-s8rv9   1/1   Running   3   2m   10.200.104.49   node2
[root@node1 requests]#
# The output above shows the pod runs on node2, so log in to node2
[root@node2 yunweijia]# crictl ps
CONTAINER       IMAGE           CREATED              STATE     NAME                ATTEMPT   POD ID
b9de73c75753c   2304e340e2384   About a minute ago   Running   tomcat-demo         3         c0b7dd83c1639
cf5d8452030ba   67da37a9a360e   6 minutes ago        Running   coredns             22        218c789a30d84
bc99cc78dd418   2673066df2c99   6 minutes ago        Running   deploy-springboot   2         321a93fee22d5
9a1ca81b1eb60   f2f70adc5d89a   6 minutes ago        Running   my-nginx            23        a1ae445fa4226
4edbc35f14aff   7a71aca7b60fc   6 minutes ago        Running   calico-node         22        da37be9dbe48b
e8daddbca434d   90f9d984ec9a3   6 minutes ago        Running   node-cache          22        cda9b13a11528
[root@node2 yunweijia]# crictl inspect b9de73c75753c
# Part of the output omitted; focus on the "linux" section
"linux": {
  "resources": {
    "devices": [
      {
        "allow": false,
        "access": "rwm"
      }
    ],
    "memory": {
      "limit": 104857600
    },
    "cpu": {
      "shares": 102,
      "quota": 20000,
      "period": 100000
    }
  },
# memory.limit is 104857600 bytes, which is limits.memory (100Mi) in bytes:
# 100*1024*1024=104857600
# cpu.shares is 102 and comes from requests.cpu (100m):
# 100/1000=0.1
# 1024*0.1≈102. shares is a relative weight that only matters when containers
# compete for CPU; it decides the proportion of CPU each one gets.
# cpu.quota/cpu.period comes from limits.cpu (200m): in every 100000µs period
# the container may run for at most 20000µs, i.e. 20000/100000=0.2 CPU.
```
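The same conversions can be written down as a small bash sketch. It roughly follows how the kubelet turns requests and limits into cgroup values (1000m per CPU, 1024 shares per CPU with a floor of 2, and the default CFS period of 100000µs) but leaves out edge cases such as the minimum quota; mem_limit_bytes, cpu_shares and cpu_quota are hypothetical helper names, not kubelet APIs.

```shell
#!/usr/bin/env bash
# Hypothetical helpers reproducing the crictl inspect values above.

# limits.memory in Mi -> memory.limit in bytes
mem_limit_bytes() {
  echo $(( $1 * 1024 * 1024 ))
}

# requests.cpu in millicores -> cpu.shares (1024 shares per CPU, minimum 2)
cpu_shares() {
  local shares=$(( $1 * 1024 / 1000 ))
  if (( shares < 2 )); then
    shares=2
  fi
  echo "$shares"
}

# limits.cpu in millicores -> cpu.quota for a CFS period (default 100000us)
cpu_quota() {
  local period="${2:-100000}"
  echo $(( $1 * period / 1000 ))
}

mem_limit_bytes 100   # prints 104857600 (limits.memory: 100Mi)
cpu_shares 100        # prints 102 (requests.cpu: 100m)
cpu_quota 200         # prints 20000 (limits.cpu: 200m)
```

Integer division is why 100m turns into 102 shares rather than 102.4.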

4. Simulate what happens when a process inside the container uses more memory than the limit:

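```shell
[root@node1 requests]# kubectl exec -it tomcat-demo-577d557cb4-s8rv9 -- bash
bash-4.4# vi test.sh    # write a test script that keeps eating memory
#!/bin/bash
str="woshi yunweijia, nihaoya, nihaoya, nihaoya."
# [ TRUE ] tests a non-empty string, so it is always true: an endless loop
while [ TRUE ]
do
  # concatenating the string with itself doubles its size on every iteration
  str="$str$str"
  echo "++++"
  sleep 0.1
done
```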
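Before running it, a rough estimate of how quickly the script hits the limit. This bash sketch counts how many doublings the 43-byte starting string needs before it alone exceeds 100Mi; doublings_to_exceed is a hypothetical helper. It ignores the memory the Tomcat JVM already uses inside the same container and any temporary copies the shell makes while concatenating, so in practice the container is likely killed after fewer iterations than this.

```shell
#!/usr/bin/env bash
# Hypothetical helper: number of doublings until size exceeds limit.
doublings_to_exceed() {
  local size="$1" limit="$2" n=0
  while (( size <= limit )); do
    size=$(( size * 2 ))       # str="$str$str" doubles the length
    n=$(( n + 1 ))
  done
  echo "$n"
}

str="woshi yunweijia, nihaoya, nihaoya, nihaoya."   # 43 ASCII bytes
limit=$(( 100 * 1024 * 1024 ))                      # limits.memory: 100Mi
doublings_to_exceed "${#str}" "$limit"              # prints 22
```

At 0.1s of sleep per iteration that is only a couple of seconds, even before counting the JVM's own footprint.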

```shell
bash-4.4# sh test.sh
++++
++++
++++
++++
++++
++++
++++
++++
++++
command terminated with exit code 137
[root@node1 requests]# kubectl get pods
NAME                                 READY   STATUS      RESTARTS   AGE
deploy-springboot-5dbfc55f78-mpg69   1/1     Running     2          2d7h
nginx-ds-q2pjt                       1/1     Running     18         13d
nginx-ds-zc5qt                       1/1     Running     23         19d
tomcat-demo-577d557cb4-s8rv9         0/1     OOMKilled   5          16m
[root@node1 requests]#
# The session exited on its own: exit code 137 and the OOMKilled status mean
# the container was killed for going over its 100Mi memory limit
```
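Exit code 137 follows the convention that a process killed by signal N exits with 128 + N. A minimal bash sketch to decode it; signal_from_exit_code is a hypothetical helper, while kill -l is the shell builtin that maps a signal number to its name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: recover the signal number from an exit code > 128.
signal_from_exit_code() {
  local code="$1"
  if (( code > 128 )); then
    echo $(( code - 128 ))
  else
    echo "not a signal exit: $code" >&2
    return 1
  fi
}

sig=$(signal_from_exit_code 137)
echo "$sig"          # prints 9
kill -l "$sig"       # prints KILL
```

SIGKILL cannot be caught or handled, which is why the script never got a chance to print anything before it died.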

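Wait a few minutes without doing anything, and the pod recovers on its own: the kubelet restarts the killed container (pods created by a Deployment always use restartPolicy: Always).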

```shell
[root@node1 requests]# kubectl get pods
NAME                                 READY   STATUS    RESTARTS   AGE
deploy-springboot-5dbfc55f78-mpg69   1/1     Running   2          2d8h
nginx-ds-q2pjt                       1/1     Running   18         13d
nginx-ds-zc5qt                       1/1     Running   23         19d
tomcat-demo-577d557cb4-s8rv9         1/1     Running   7          25m
[root@node1 requests]#
```
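The few minutes of waiting come from the kubelet's restart back-off. The Kubernetes documentation describes it as an exponential delay (10s, 20s, 40s, …) capped at five minutes and reset once the container has run without problems for 10 minutes. The bash sketch below models only the doubling and the cap; backoff_seconds is a hypothetical helper, not a kubelet API.

```shell
#!/usr/bin/env bash
# Hypothetical helper: back-off before the next restart, given how many
# restarts have already happened (10s, doubled each time, capped at 300s).
backoff_seconds() {
  local delay=10 i
  for (( i = 0; i < $1; i++ )); do
    delay=$(( delay * 2 ))
    if (( delay >= 300 )); then
      delay=300
      break
    fi
  done
  echo "$delay"
}

for n in 0 1 2 3 4 5 6; do
  echo "restart #$(( n + 1 )) waits $(backoff_seconds "$n")s"
done
```

Under this model, with RESTARTS already at 5 the next restart waits the full 300s, which fits the "wait a few minutes" observation.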

Now log in to the container again: was the memory-hogging program simply killed?

```shell
[root@node1 requests]# kubectl exec -it tomcat-demo-577d557cb4-s8rv9 -- bash
bash-4.4# cat test.sh
cat: can't open 'test.sh': No such file or directory
bash-4.4#
# Not only was the program killed, test.sh itself is gone too. Was a new pod started?
```

Let's check once more. Before running test.sh, create a new file, to find out whether a new pod was created or only the misbehaving program was removed:

```shell
[root@node1 requests]# kubectl exec -it tomcat-demo-577d557cb4-s8rv9 -- bash
bash-4.4# ls
LICENSE  RELEASE-NOTES  bin  include  logs  temp  work
NOTICE   RUNNING.txt    conf  lib  native-jni-lib  webapps
bash-4.4# echo "123" > a.txt
bash-4.4# ls
LICENSE  RELEASE-NOTES  a.txt  conf  lib  native-jni-lib  webapps
NOTICE   RUNNING.txt    bin  include  logs  temp  work
bash-4.4# vi test.sh
bash-4.4# ls
LICENSE  RELEASE-NOTES  a.txt  conf  lib  native-jni-lib  test.sh  work
NOTICE   RUNNING.txt    bin  include  logs  temp  webapps
bash-4.4# sh test.sh
++++
++++
++++
++++
++++
++++
++++
++++
++++
++++
++++
++++
++++
++++
command terminated with exit code 137
[root@node1 requests]#
[root@node1 requests]# kubectl get pods
NAME                                 READY   STATUS      RESTARTS   AGE
deploy-springboot-5dbfc55f78-mpg69   1/1     Running     2          2d8h
nginx-ds-q2pjt                       1/1     Running     18         13d
nginx-ds-zc5qt                       1/1     Running     23         19d
tomcat-demo-577d557cb4-s8rv9         0/1     OOMKilled   8          34m
[root@node1 requests]#
```

Wait a few more minutes; once it has recovered on its own, go in and take a look.

```shell
[root@node1 requests]# kubectl exec -it tomcat-demo-577d557cb4-s8rv9 -- bash
bash-4.4# ls
LICENSE  RELEASE-NOTES  bin  include  logs  temp  work
NOTICE   RUNNING.txt    conf  lib  native-jni-lib  webapps
bash-4.4#
[root@node1 requests]#
```

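The file we created is gone as well. Note, however, that the pod name is still tomcat-demo-577d557cb4-s8rv9 and only its RESTARTS count went up, so the pod itself was not replaced. The kubelet restarted the container, and a restarted container starts again from the image's filesystem, so anything written inside the container at runtime is lost. Keep this in mind; the files that are part of the image itself are of course unaffected.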
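Whether a pod was replaced or only its container restarted can be read off two kubectl get pods snapshots: a pod recreated by the Deployment gets a new name suffix, while a restarted container keeps the pod name and raises RESTARTS. A hypothetical bash helper expressing that rule, fed with the values from the outputs above (RESTARTS 5 while OOMKilled, 7 after recovery):

```shell
#!/usr/bin/env bash
# Hypothetical helper: compare two snapshots of (pod name, RESTARTS).
classify_recovery() {
  local old_name="$1" old_restarts="$2" new_name="$3" new_restarts="$4"
  if [ "$old_name" != "$new_name" ]; then
    echo "pod replaced"
  elif (( new_restarts > old_restarts )); then
    echo "container restarted"
  else
    echo "no restart"
  fi
}

classify_recovery tomcat-demo-577d557cb4-s8rv9 5 tomcat-demo-577d557cb4-s8rv9 7
# prints: container restarted
```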

5. Next, what happens if limits is set very large?

```yaml
# Same file as above; only the following part changes
resources:
  requests:
    memory: 100Mi
    cpu: 100m
  limits:
    memory: 100Gi
    cpu: 20000m
# Both the memory and the CPU limits are now far higher than anything my servers actually have
```

```shell
# Check the result
[root@node1 requests]# kubectl apply -f request-web-demo.yaml
[root@node1 requests]# kubectl get pod | grep tomcat
tomcat-demo-78787c7885-hxcm7   1/1   Running   0   71s
[root@node1 requests]#
```

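The new pod was scheduled and started as well. What does this tell us? Limits play no part in deciding whether a pod can start: the scheduler only checks requests against the node's capacity, which is also why a node's total limits may exceed 100% (the "overcommitted" note in the kubectl describe node output).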
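A minimal bash sketch of the scheduler's fit check for a single resource, under simplifying assumptions: the real scheduler checks every requested resource plus other constraints, and the 800m and 550m figures are illustrative rather than node2's measured capacity. The point is the signature: fits_node (a hypothetical helper) takes the request, the node's allocatable amount and what other pods already request, and no limit at all.

```shell
#!/usr/bin/env bash
# Hypothetical helper: does a request fit into what the node has left?
# Limits are deliberately not a parameter: they are not part of this check.
fits_node() {
  local request="$1" allocatable="$2" already_requested="$3"
  (( request <= allocatable - already_requested ))
}

# CPU in millicores on a hypothetical node: 800m allocatable, 550m requested.
if fits_node 100 800 550; then echo "schedulable"; else echo "Pending"; fi
```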

6. Now what happens if the memory request is set very large?

```yaml
# Same file as above; only the following part changes
resources:
  requests:
    memory: 10000Mi
    cpu: 100m
  limits:
    memory: 100Gi
    cpu: 20000m
# My servers do not have 10G of memory; let's see what happens
```

```shell
[root@node1 requests]# kubectl get pod | grep tomcat
tomcat-demo-685d47f966-kjcfg   0/1   Pending   0   13s
tomcat-demo-78787c7885-hxcm7   1/1   Running   0   3m43s
[root@node1 requests]#
```

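The old pod keeps running normally, while the new pod stays Pending and never starts. When the requests exceed what a node still has available (its allocatable capacity minus what other pods already request), the scheduler cannot place the pod. The old pod survives because the Deployment's rolling update only removes it once the new pod is ready.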
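To see how far 10000Mi is from what a small node offers, the binary suffixes can be converted to bytes. In this bash sketch mem_to_bytes is a hypothetical helper that covers only Ki, Mi and Gi (Kubernetes also accepts decimal suffixes such as M and G, which it does not handle), and the 2Gi allocatable value is an assumption for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: convert a binary-suffixed memory quantity to bytes.
mem_to_bytes() {
  local q="$1"
  case "$q" in
    *Ki) echo $(( ${q%Ki} * 1024 )) ;;
    *Mi) echo $(( ${q%Mi} * 1024 * 1024 )) ;;
    *Gi) echo $(( ${q%Gi} * 1024 * 1024 * 1024 )) ;;
    *)   echo "$q" ;;                      # assume plain bytes
  esac
}

request=$(mem_to_bytes 10000Mi)            # 10485760000 bytes, about 9.77Gi
allocatable=$(mem_to_bytes 2Gi)            # ASSUMPTION: hypothetical node
if (( request > allocatable )); then
  echo "request exceeds allocatable memory: pod stays Pending"
fi
```

Note that 10000Mi is slightly less than 10Gi; the point is only that it is far more than the node can offer.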

7. What if the CPU request is too large?

The rest of this post is available on the WeChat official account “运维家”; reply “139” to read it.