Learning ZooKeeper: Docker Setup, Installation, and Clustering

Learning ZooKeeper

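- Learning ZooKeeper: Docker Setup, Installation, and Clustering
  - 1. ZooKeeper + Docker basic setup
    - 1.1. Download the Docker image
    - 1.2. Start the container
    - 1.3. Check that the container is running
    - 1.4. View the container logs
    - 1.5. Check status inside the ZK container
    - 1.6. Connect to the ZK server with the ZK command line
    - 1.7. Check status with ncat
  - 2. ZK cluster configuration
    - 2.1. Install docker-compose
    - 2.2. Prepare the YML file
    - 2.3. Start the ZK cluster
    - 2.4. Check the ZK cluster status
    - 2.5. Connect to the ZK server with the ZK command line
    - 2.6. Check status with ncat
  - 3. Appendix
    - 3.1. docker-compose file explained
    - 3.2. ZooKeeper's client-server architecture
    - 3.3. Monitor
    - 3.4. Tools
    - 3.5. Troubleshooting
    - 3.6. References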


Study notes on installing and configuring ZooKeeper with Docker, including a cluster setup.

OS environment:

LSB Version: :core-4.1-amd64:core-4.1-noarch

Distributor ID: CentOS

Description: CentOS Linux release 8.1.1911 (Core)

Release: 8.1.1911

Codename: Core

Docker environment:

Client:

Version: 18.09.1

API version: 1.39

Go version: go1.10.6

Git commit: 4c52b90

Built: Wed Jan 9 19:35:01 2019

OS/Arch: linux/amd64

Experimental: false

Server: Docker Engine - Community

Engine:

Version: 18.09.1

API version: 1.39 (minimum version 1.12)

Go version: go1.10.6

Git commit: 4c52b90

Built: Wed Jan 9 19:06:30 2019

OS/Arch: linux/amd64

Experimental: false

Java environment:

openjdk version "1.8.0_252"

OpenJDK Runtime Environment (build 1.8.0_252-b09)

OpenJDK 64-Bit Server VM (build 25.252-b09, mixed mode)

1. ZooKeeper + Docker basic setup

1.1. Download the Docker image

Pull the image:

docker pull zookeeper

1.2. Start the container

Start the container.

With the client port published to the host:

docker run --privileged=true -d --name zookeeper --publish 2181:2181 -d zookeeper:latest

Without publishing any port:

docker run --privileged=true -d --name zookeeper -d zookeeper:latest

Output:

[root@cloudhost ~]# docker run --privileged=true -d --name zookeeper -d zookeeper:latest

6e28336e74d904cfa76c2ab3988cbbd370aa875d7950035e977eb87d83f55468

1.3. Check that the container is running

List the running containers:

docker ps

Output (this sample was captured with the three-node cluster from section 2 running, hence the zookeeper_zooN_1 names):

CONTAINER ID   IMAGE       COMMAND                  CREATED          STATUS          PORTS                                                  NAMES
862bfff58c8f   zookeeper   "/docker-entrypoint.…"   19 minutes ago   Up 19 minutes   2888/tcp, 3888/tcp, 8080/tcp, 0.0.0.0:2183->2181/tcp   zookeeper_zoo3_1
3096c8f566b7   zookeeper   "/docker-entrypoint.…"   19 minutes ago   Up 19 minutes   2888/tcp, 3888/tcp, 0.0.0.0:2181->2181/tcp, 8080/tcp   zookeeper_zoo1_1
d1b528538db1   zookeeper   "/docker-entrypoint.…"   19 minutes ago   Up 19 minutes   2888/tcp, 3888/tcp, 8080/tcp, 0.0.0.0:2182->2181/tcp   zookeeper_zoo2_1

1.4. View the container logs

Follow the container's log output by container name:

docker logs -f zookeeper_zoo3_1

or by container ID:

docker logs -f 862bfff58c8f

Output:

ZooKeeper JMX enabled by default

Using config: /conf/zoo.cfg

2020-06-08 15:33:05,695 [myid:] - INFO [main:QuorumPeerConfig@173] - Reading configuration from: /conf/zoo.cfg

2020-06-08 15:33:05,716 [myid:] - INFO [main:QuorumPeerConfig@450] - clientPort is not set

2020-06-08 15:33:05,717 [myid:] - INFO [main:QuorumPeerConfig@463] - secureClientPort is not set

2020-06-08 15:33:05,717 [myid:] - INFO [main:QuorumPeerConfig@479] - observerMasterPort is not set

2020-06-08 15:33:05,726 [myid:] - INFO [main:QuorumPeerConfig@496] - metricsProvider.className is org.apache.zookeeper.metrics.impl.DefaultMetricsProvider

2020-06-08 15:33:05,790 [myid:3] - INFO [main:DatadirCleanupManager@78] -

…
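
When the log is long, it can help to filter it by level. The sketch below assumes the log layout shown above, where the level (INFO, WARN, ERROR) is the fifth whitespace-separated field. zk_log_level is a hypothetical helper name, not part of ZooKeeper or Docker.

```shell
# Hypothetical helper: keep only the log lines whose level equals $1.
# Assumes the layout "DATE TIME [myid:N] - LEVEL [thread] - message".
zk_log_level() {
  awk -v level="$1" '$5 == level'
}
```

Possible usage (docker logs relays the container's stderr on stderr, hence the 2>&1): docker logs zookeeper_zoo3_1 2>&1 | zk_log_level WARN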

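1.5. Check status inside the ZK container

Open a shell in the container and verify that the service started successfully.

Method 1: run the following in the container's shell

docker exec -it zookeeper_zoo1_1 /bin/bash

cd bin

zkServer.sh status

Output:

[root@cloudhost ~]# docker exec -it zookeeper_zoo1_1 /bin/bash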

root@zoo1:/apache-zookeeper-3.6.1-bin# cd bin

root@zoo1:/apache-zookeeper-3.6.1-bin/bin# zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /conf/zoo.cfg

Client port found: 2181. Client address: localhost.

Mode: leader

1.6. Connect to the ZK server with the ZK command line

Start the ZK command-line client and connect to the ZK server, either with the zkCli.sh of a local ZooKeeper installation or from a throwaway client container:

docker run -it --rm --link zookeeper:zookeeper zookeeper zkCli.sh -server zookeeper

or, with the port given explicitly (the host name must match the alias set by --link):

docker run -it --rm --link zookeeper:zookeeper zookeeper zkCli.sh -server zookeeper:2181

Output:

[root@cloudhost bin]# sh zkCli.sh -server -server zookeeper

/usr/bin/java

Connecting to localhost:2181

2020-06-09 00:11:39,734 [myid:] - INFO [main:Environment@98] - Client environment:zookeeper.version=3.6.1--104dcb3e3fb464b30c5186d229e00af9f332524b, built on 04/21/2020 15:01 GMT

2020-06-09 00:11:39,737 [myid:] - INFO [main:Environment@98] - Client environment:host.name=localhost

2020-06-09 00:11:39,737 [myid:] - INFO [main:En

...

2020-06-09 00:11:39,928 [myid:localhost:2181] - INFO [main-SendThread(localhost:2181):ClientCnxn$SendThread@986] - Socket connection established, initiating session, client: /0:0:0:0:0:0:0:1:40044, server: localhost/0:0:0:0:0:0:0:1:2181

2020-06-09 00:11:39,961 [myid:localhost:2181] - INFO [main-SendThread(localhost:2181):ClientCnxn$SendThread@1420] - Session establishment complete on server localhost/0:0:0:0:0:0:0:1:2181, session id = 0x100028bcc670000, negotiated timeout = 30000

WATCHER::

WatchedEvent state:SyncConnected type:None path:null

[zk: localhost:2181(CONNECTED) 0]

1.7. Check status with ncat

Use nc (netcat) to connect to a given ZK server and send stat to view the server's status, for example:

echo stat | nc 127.0.0.1 2181

Output:

[root@cloudhost bin]# echo stat | nc 127.0.0.1 2181

Zookeeper version: 3.6.1--104dcb3e3fb464b30c5186d229e00af9f332524b, built on 04/21/2020 15:01 GMT

Clients:

/172.23.0.1:438580

Latency min/avg/max: 0/2.375/13

Received: 12

Sent: 11

Connections: 1

Outstanding: 0

Zxid: 0x700000004

Mode: follower

Node count: 5
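
For scripting, usually only the Mode line of this output matters. A minimal sketch, assuming the "Key: value" layout shown above; zk_mode is a hypothetical helper name:

```shell
# Hypothetical helper: read `stat` output on stdin and print the value of
# its "Mode:" line. Returns non-zero if no such line is present.
zk_mode() {
  awk -F': ' '$1 == "Mode" { print $2; found = 1 } END { exit !found }'
}
```

Possible usage: echo stat | nc 127.0.0.1 2181 | zk_mode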

2. ZK cluster configuration

2.1. Install docker-compose

The cluster is started with docker-compose, which must be installed first.

Download the binary:

curl -L "https://github.com/docker/compose/releases/download/1.24.1/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose

Note: to install a different version of Compose, replace 1.24.1.

Make the binary executable:

chmod +x /usr/local/bin/docker-compose

Create a symlink:

ln -s /usr/local/bin/docker-compose /usr/bin/docker-compose

Check the installation:

docker-compose --version

docker-compose version 1.24.1, build 4667896b
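
The download URL is assembled from the Compose version plus the output of uname -s and uname -m. The sketch below only builds that URL so the version can be swapped in one place. compose_url is a hypothetical helper name, and the URL pattern is an assumption that holds for 1.x releases such as 1.24.1; asset naming may differ for other releases.

```shell
# Hypothetical helper: print the download URL for the Compose version in $1,
# using this machine's kernel name (uname -s) and architecture (uname -m).
compose_url() {
  printf 'https://github.com/docker/compose/releases/download/%s/docker-compose-%s-%s\n' \
    "$1" "$(uname -s)" "$(uname -m)"
}
```

Possible usage: curl -L "$(compose_url 1.24.1)" -o /usr/local/bin/docker-compose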

2.2. Prepare the YML file

Create a file named docker-compose.yml with the following content:

version: '3.1'

services:
  zoo1:
    image: zookeeper
    restart: always
    hostname: zoo1
    networks:
      - zoo-net
    ports:
      - 2181:2181
    volumes:
      - zoo1-data:/data
      - zoo1-log:/datalog
    environment:
      ZOO_MY_ID: 1
      ZOO_SERVERS: server.1=0.0.0.0:2888:3888;2181 server.2=zoo2:2888:3888;2181 server.3=zoo3:2888:3888;2181
      ZOO_4LW_COMMANDS_WHITELIST: "*"

  zoo2:
    image: zookeeper
    restart: always
    hostname: zoo2
    networks:
      - zoo-net
    ports:
      - 2182:2181
    environment:
      ZOO_MY_ID: 2
      ZOO_SERVERS: server.1=zoo1:2888:3888;2181 server.2=0.0.0.0:2888:3888;2181 server.3=zoo3:2888:3888;2181
      ZOO_4LW_COMMANDS_WHITELIST: "*"

  zoo3:
    image: zookeeper
    restart: always
    hostname: zoo3
    networks:
      - zoo-net
    ports:
      - 2183:2181
    environment:
      ZOO_MY_ID: 3
      ZOO_SERVERS: server.1=zoo1:2888:3888;2181 server.2=zoo2:2888:3888;2181 server.3=0.0.0.0:2888:3888;2181
      ZOO_4LW_COMMANDS_WHITELIST: "*"

volumes:
  zoo1-data:
    external: false
  zoo1-log:
    external: false

networks:
  zoo-net:

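The file defines three ZooKeeper containers and binds host ports 2181, 2182, and 2183 to port 2181 of the respective container.

ZOO_MY_ID and ZOO_SERVERS are the two environment variables a ZK cluster must set. ZOO_MY_ID is the id of the ZK server: an integer between 1 and 255 that must be unique within the cluster. ZOO_SERVERS is the list of servers in the ZK cluster.

ZOO_4LW_COMMANDS_WHITELIST is an optional environment variable. It whitelists the four-letter-word commands, so that a stat sent through nc is executed and can report the server's status.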
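
The two constraints on ZOO_MY_ID (an integer from 1 to 255, unique within the cluster) can be checked before the compose file is written. A minimal sketch; check_zoo_ids is a hypothetical helper name and is not something the zookeeper image provides:

```shell
# Hypothetical helper: succeed only if every argument is an integer in
# 1..255 and no id is listed twice.
check_zoo_ids() {
  for id in "$@"; do
    case $id in
      ''|*[!0-9]*) return 1 ;;          # empty or not purely digits
    esac
    [ "$id" -ge 1 ] && [ "$id" -le 255 ] || return 1
  done
  # awk exits non-zero as soon as any id has been seen before.
  printf '%s\n' "$@" | awk 'seen[$0]++ { dup = 1 } END { exit dup }'
}
```

Possible usage: check_zoo_ids 1 2 3 && echo "ids look valid"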

2.3. Start the ZK cluster

In the directory that contains docker-compose.yml, run the following to start the cluster. By default the command looks for a file named docker-compose.yml:

docker-compose up

Or name the file explicitly with -f:

docker-compose -f stack.yml up

Output:

[root@cloudhost zookeeper]# docker-compose -f stack.yml up

Creating network "zookeeper_zoo-net" with the default driver

Creating volume "zookeeper_zoo1-data" with default driver

Creating volume "zookeeper_zoo1-log" with default driver

Recreating zookeeper_zoo2_1 …

Recreating zookeeper_zoo1_1 …

Recreating zookeeper_zoo3_1 …

WARNING: Service "zoo1" is using volume "/datalog" from the previous container. Host mapping "zookeeper_zoo1-log" has no effect. Remove the existing containers (with docker-compose rm zoo1) to use the host volume mapping.

Recreating zookeeper_zoo2_1 … done

Recreating zookeeper_zoo1_1 … done

Recreating zookeeper_zoo3_1 … done

Attaching to zookeeper_zoo2_1, zookeeper_zoo1_1, zookeeper_zoo3_1

zoo2_1 | ZooKeeper JMX enabled by default

zoo2_1 | Using config: /conf/zoo.cfg

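...

Note: this command stays in the foreground and streams the server logs, so do not close or interrupt the terminal. To start the cluster in the background instead, run docker-compose up -d.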

2.4. Check the ZK cluster status

Once the command above has succeeded, run docker-compose ps in another terminal to list the ZK containers that were started:

docker-compose ps

Name   Command                         State   Ports
------------------------------------------------------------------------------------------------
zoo1   /docker-entrypoint.sh zkSe …    Up      0.0.0.0:2181->2181/tcp, 2888/tcp, 3888/tcp, 8080/tcp
zoo2   /docker-entrypoint.sh zkSe …    Up      0.0.0.0:2182->2181/tcp, 2888/tcp, 3888/tcp, 8080/tcp
zoo3   /docker-entrypoint.sh zkSe …    Up      0.0.0.0:2183->2181/tcp, 2888/tcp, 3888/tcp, 8080/tcp

Note: both docker-compose up and docker-compose ps can be prefixed with the environment variable COMPOSE_PROJECT_NAME=zk_test. This gives the compose project a name so that it is not confused with other compose projects.

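2.5. Connect to the ZK server with the ZK command line

If the host ports are bound, as in the compose file above, a local zkCli.sh can reach the cluster with -server localhost:2181,localhost:2182,localhost:2183.

If no host ports were bound at startup, the ZK servers cannot be reached directly from the host. In that case, run a client container attached to the cluster's Docker network, where the service names zoo1, zoo2, and zoo3 resolve:

docker run -it --rm --net zookeeper_zoo-net zookeeper zkCli.sh -server zoo1:2181,zoo2:2181,zoo3:2181

Note that the network name has the form {project_name}_{network_name}. project_name defaults to the directory name, and network_name is the name given in docker-compose.yml (zoo-net in the file above). When the file declares no network, Compose uses the name default, which gives zookeeper_default as in the listing below. docker-compose creates a dedicated network for the cluster when it starts it.

The value for --net can be found with docker network ls:

docker network ls

NETWORK ID     NAME                DRIVER   SCOPE
a3a285347b0f   bridge              bridge   local
0cac385a4171   host                host     local
6f490d6d9f91   none                null     local
f44bab96e95c   zookeeper_default   bridge   local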

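2.6. Check status with ncat

Check the mode of each of the three ZooKeeper servers. The nc output confirms that the ZK cluster is running: zoo1 and zoo2 are in follower mode and zoo3 is the leader.

Use nc (netcat) to connect to a given ZK server and send stat to view the server's status, for example:

echo stat | nc 127.0.0.1 2181

Output: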

Latency min/avg/max: 0/0.0/0

Received: 2

Sent: 1

Connections: 1

Outstanding: 0

Zxid: 0x200000001

Mode: follower

Node count: 5

[root@cloudhost ~]#

[root@cloudhost ~]# echo stat | nc 127.0.0.1 2182

Zookeeper version: 3.6.1--104dcb3e3fb464b30c5186d229e00af9f332524b, built on 04/21/2020 15:01 GMT

Clients:

/172.23.0.1:522520

Latency min/avg/max: 0/0.0/0

Received: 3

Sent: 2

Connections: 1

Outstanding: 0

Zxid: 0x700000000

Mode: follower

Node count: 5

[root@cloudhost ~]# echo stat | nc 127.0.0.1 2183

Zookeeper version: 3.6.1--104dcb3e3fb464b30c5186d229e00af9f332524b, built on 04/21/2020 15:01 GMT

Clients:

/172.23.0.1:515240

Latency min/avg/max: 0/0.0/0

Received: 4

Sent: 3

Connections: 1

Outstanding: 0

Zxid: 0x700000000

Mode: leader

Node count: 5

Proposal sizes last/min/max: -1/-1/-1
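
The manual check above (two followers, one leader) can be turned into a single pass/fail test. A minimal sketch: ensemble_ok is a hypothetical helper that reads one mode per line and applies the rule "exactly one leader and at least one follower". That rule is a simplification chosen for illustration, not an official health criterion.

```shell
# Hypothetical helper: read one server mode per line on stdin and succeed
# only if there is exactly one leader and at least one follower.
ensemble_ok() {
  awk '$1 == "leader"   { leaders++ }
       $1 == "follower" { followers++ }
       END { exit !(leaders == 1 && followers >= 1) }'
}
```

Possible usage, assuming the three published ports from the compose file:

for port in 2181 2182 2183; do echo stat | nc 127.0.0.1 "$port" | awk -F': ' '$1 == "Mode" { print $2 }'; done | ensemble_ok && echo "ensemble looks healthy"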

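3. Appendix

3.1. docker-compose file explained

Notes on the keys used in the docker-compose file:

networks: sets the network name. The final network name has the form "{project_name}_{network_name}".

ports: {p1}:{p2} maps port p1 on the host to port p2 in the container. The mappings are shown by docker-compose ps.

volumes: the zookeeper Docker Hub page states: This image is configured with volumes at /data and /datalog to hold the Zookeeper in-memory database snapshots and the transaction log of updates to the database, respectively.

In short, ZooKeeper needs volumes to store /data and /datalog (in practice each container appears to create three volumes).

The entry "- zoo1-data:/data", together with the top-level "zoo1-data:" entry and its "external: false" further down, mounts /data on the volume zoo1-data. external: false means that a pre-existing volume is not required: if none exists, a volume named {project_name}_zoo1-data is created (zookeeper_zoo1-data here). external: true means that an existing volume must be used.

environment: all three variables used here are documented on the zookeeper Docker Hub page.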

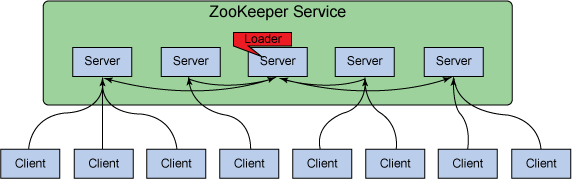

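3.2. ZooKeeper's client-server architecture

Although ZooKeeper is a coordination service for distributed systems, it is itself a distributed application. ZooKeeper follows a simple client-server model in which clients are the nodes (that is, machines) that use the service and servers are the nodes that provide it. A collection of ZooKeeper servers forms a ZooKeeper ensemble. At any given time, a ZooKeeper client is connected to one ZooKeeper server, and each ZooKeeper server can handle a large number of client connections simultaneously. Each client periodically sends a ping to the server it is connected to, letting the server know that it is alive and connected. The server answers with an acknowledgement of the ping, indicating that the server is alive as well. If the client does not receive the acknowledgement within the specified time, it connects to another server in the ensemble, and the client session is transparently transferred to the new ZooKeeper server.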

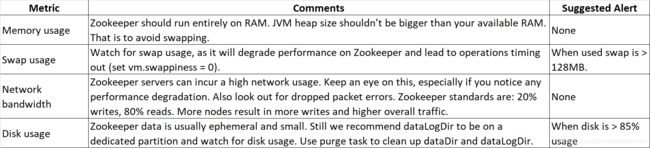

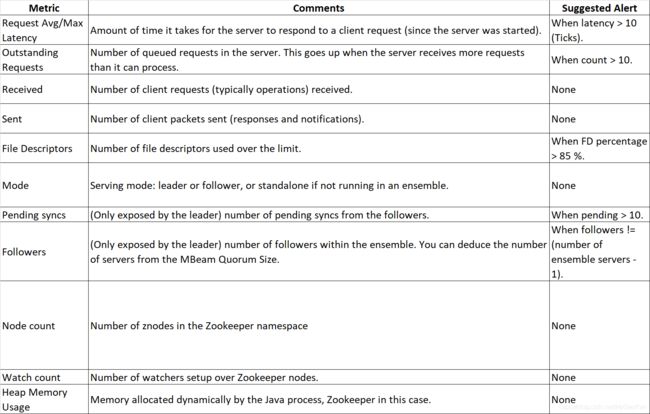
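
The failover idea described above (try another server when the current one does not answer) can be imitated from the shell. This is only a rough sketch of the idea, not how the ZooKeeper client library works: real clients keep a session and move it between servers, whereas this loop merely finds the first server that answers a probe. first_alive and zk_ruok are hypothetical helper names; the probe assumes nc is installed and that ruok is allowed by the four-letter-word whitelist.

```shell
# Hypothetical probe: succeed if host $1, port $2 answers "imok" to "ruok".
zk_ruok() {
  [ "$(echo ruok | nc -w 2 "$1" "$2" 2>/dev/null)" = "imok" ]
}

# Hypothetical helper: print the first host:port in the comma-separated
# list $1 that passes the probe. $2 optionally names another probe function.
first_alive() {
  servers=$1
  probe=${2:-zk_ruok}
  old_ifs=$IFS
  IFS=,
  set -- $servers          # split the list on commas into $1, $2, ...
  IFS=$old_ifs
  for server in "$@"; do
    # ${server%:*} is the host part, ${server##*:} the port part.
    if "$probe" "${server%:*}" "${server##*:}"; then
      printf '%s\n' "$server"
      return 0
    fi
  done
  return 1
}
```

Possible usage: first_alive "localhost:2181,localhost:2182,localhost:2183"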

3.3. Monitor

3.4. Tools

The simplest way to monitor Zookeeper and collect these metrics is by using the commands known as "4 letter words" within the ZK community. You can run these using telnet or netcat directly:

$ echo ruok | nc 127.0.0.1 5111

imok

$ echo mntr | nc localhost 5111

zk_version 3.4.0

zk_avg_latency 0

zk_max_latency 0

zk_min_latency 0

zk_packets_received 70

zk_packets_sent 69

zk_outstanding_requests 0

zk_server_state leader

zk_znode_count 4

zk_watch_count 0

zk_ephemerals_count 0

zk_approximate_data_size 27

zk_followers 4 - only exposed by the Leader

zk_synced_followers 4 - only exposed by the Leader

zk_pending_syncs 0 - only exposed by the Leader

zk_open_file_descriptor_count 23 - only available on Unix platforms

zk_max_file_descriptor_count 1024 - only available on Unix platforms
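
To pull a single metric out of the mntr output, the key/value lines can be filtered with awk. A minimal sketch; mntr_get is a hypothetical helper name, and it returns only the first whitespace-separated token of the value, which is enough for numeric metrics and for zk_server_state.

```shell
# Hypothetical helper: read `mntr` output on stdin and print the value of
# the metric named in $1. Returns non-zero if the metric is absent.
mntr_get() {
  awk -v key="$1" '$1 == key { print $2; found = 1 } END { exit !found }'
}
```

Possible usage: echo mntr | nc localhost 5111 | mntr_get zk_server_state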

We've looked at mytop for MySQL, and memcache-top for Memcached. Well, Zookeeper has one too, zktop:

$ ./zktop.py --servers "localhost:2181,localhost:2182,localhost:2183"

Ensemble -- nodecount:10 zxid:0x1300000001 sessions:4

SERVER      PORT   M   OUTST   RECVD   SENT   CONNS   MINLAT   AVGLAT   MAXLAT
localhost   2181   F       0      93     92       2        2        7       13
localhost   2182   F       0      37     36       1        0        0        0
localhost   2183   L       0      36     35       1        0        0        0

CLIENT      PORT    I   QUEUE   RECVD   SENT
127.0.0.1   34705   1       0      56     56
127.0.0.1   35943   1       0       1      0
127.0.0.1   33999   1       0       1      0
127.0.0.1   37988   1       0       1      0

If you are after more detailed metrics, you can access those through JMX. You could also take the DIY road and go for JMXTrans and Graphite, or use Nagios/Cacti/Ganglia with check_zookeeper.py. Alternatively, you can save time (and preserve your sanity) by choosing a hosted service like Server Density.

If you want to test the quality and performance of your Zookeeper ensemble, then zk-smoketest with zk-smoketest.py and zk-latencies.py are great tools to check out.

Other: ZooKeeper Explorer

Download

Eclipse version 3.6 or higher is required.

Install plugins using the software or update site:

http://people.apache.org/~srimanth/hadoop-eclipse/update-site

3.5. Troubleshooting

a.) Cluster initialization fails with a communication error

The error looks like this:

2020-06-08 13:44:25,696 [myid:3] - INFO [ListenerHandler-zoo3/172.20.0.3:3888:QuorumCnxManager$Listener$ListenerHandler@1070] - Received connection request from /172.20.0.4:56738

2020-06-08 13:44:25,700 [myid:3] - WARN [SendWorker:2:QuorumCnxManager$SendWorker@1281] - Interrupted while waiting for message on queue

java.lang.InterruptedException

at java.base/java.util.concurrent.locks.AbstractQueuedSynchronizer$ConditionObject.reportInterruptAfterWait(Unknown Source)

Fix: adjust the ZOO_SERVERS value in the YML file.

In each node's ZOO_SERVERS value, set the host of that node's own entry to 0.0.0.0.

For server.1:

ZOO_SERVERS: server.1=0.0.0.0:2888:3888;2181 server.2=zoo2:2888:3888;2181 server.3=zoo3:2888:3888;2181

For server.2:

ZOO_SERVERS: server.1=zoo1:2888:3888;2181 server.2=0.0.0.0:2888:3888;2181 server.3=zoo3:2888:3888;2181

For server.3:

ZOO_SERVERS: server.1=zoo1:2888:3888;2181 server.2=zoo2:2888:3888;2181 server.3=0.0.0.0:2888:3888;2181
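
Since the three values differ only in which entry carries 0.0.0.0, they can be generated rather than typed by hand. A minimal sketch: zoo_servers is a hypothetical helper name, and it assumes the zooN host naming and the 2888:3888;2181 ports used in this document.

```shell
# Hypothetical helper: print the ZOO_SERVERS value for node $1 in an
# ensemble of $2 nodes. The node's own entry uses 0.0.0.0, all others zooN.
zoo_servers() {
  my_id=$1
  count=$2
  out=""
  i=1
  while [ "$i" -le "$count" ]; do
    if [ "$i" -eq "$my_id" ]; then
      host="0.0.0.0"
    else
      host="zoo$i"
    fi
    # ${out:+ } adds a separating space only when out is already non-empty.
    out="${out}${out:+ }server.${i}=${host}:2888:3888;2181"
    i=$((i + 1))
  done
  printf '%s\n' "$out"
}
```

Possible usage: zoo_servers 2 3 prints the value shown above for server.2.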

b.) nc fails with a whitelist message

The error looks like this:

echo stat | nc 127.0.0.1 2182

stat is not executed because it is not in the whitelist.

Fix: add the whitelist parameter to the YML file.

ZOO_4LW_COMMANDS_WHITELIST: "*"
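
A script that polls the servers can recognise this situation from the reply text. A minimal sketch, assuming the reply wording shown above; is_whitelist_error is a hypothetical helper name:

```shell
# Hypothetical helper: succeed if stdin contains the reply ZooKeeper sends
# when a four-letter-word command is not in the whitelist.
is_whitelist_error() {
  grep -q 'is not executed because it is not in the whitelist'
}
```

Possible usage: echo stat | nc 127.0.0.1 2182 | is_whitelist_error && echo "set ZOO_4LW_COMMANDS_WHITELIST in the YML file"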

3.6. References

ZooKeeper fundamentals, deployment, and applications

https://www.ibm.com/developerworks/cn/data/library/bd-zookeeper/

Deploying a ZooKeeper cluster with docker-compose

https://cloud.tencent.com/developer/article/1546947

How to Monitor Zookeeper

https://blog.serverdensity.com/how-to-monitor-zookeeper/

Apache Hadoop-Eclipse

http://people.apache.org/~srimanth/hadoop-eclipse/index.html