Configuring a keepalived + LVS + nginx architecture, with LVS in NAT mode

Service layout

| Hostname | IP address | Installed services (role) |
|---|---|---|
| client | 192.168.1.10 | client |
| lvs-0001 (eth1) | 192.168.1.30 | keepalived/lvs/ipvsadm |
| lvs-0001 (eth0) | 192.168.0.30 | keepalived/lvs/ipvsadm |
| lvs-0002 (eth1) | 192.168.1.31 | keepalived/lvs/ipvsadm |
| lvs-0002 (eth0) | 192.168.0.31 | keepalived/lvs/ipvsadm |
| web1 | 192.168.0.20 | nginx |
| web2 | 192.168.0.21 | nginx |

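NAT mode works by rewriting the IP addresses of packets as they pass through the LVS server.

In NAT mode the LVS server needs two network interfaces: one facing the clients and one facing the real servers.

Client request flow

The client sends a request to the LVS VIP. The source address of the request is the client IP and the destination address is the VIP.

LVS receives the request and rewrites the destination address from the VIP to the IP of the selected real server, then forwards the request. In standard LVS NAT mode the source address stays the client IP.

The real server handles the request and sends the reply back through LVS, so its return route must point at the LVS address used to talk to the real servers. The reply has the real server IP as its source and the client IP as its destination.

LVS receives the reply, rewrites the source address from the real server IP to the VIP, and forwards the reply to the client.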

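LVS overview

LVS working modes:

- NAT: network address translation

- DR: direct routing

- TUN: IP tunneling

Terminology:

- Director: the LVS server

- Real Server: a server that actually provides the service

- VIP: virtual IP, the address that users access

- DIP: director IP, the address on the LVS server used to talk to the real servers

- RIP: real IP, the address of a real server

There are 10 scheduling algorithms in total; these 4 are the most commonly used:

- Round robin (rr): the real servers take turns serving requests

- Weighted round robin (wrr): weights are set according to server capacity, and servers with a higher weight receive more requests

- Least connections (lc): requests go to the real server with the fewest connections

- Weighted least connections (wlc): like lc, but the connection count of each server is scaled by its weight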
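The difference between rr and wrr can be illustrated with a small bash sketch. It is not how the kernel implements scheduling (the kernel's wrr interleaves servers more smoothly); the function names `rr_pick` and `wrr_schedule` are made up for this illustration, and the addresses are the web1/web2 addresses used in this article.

```shell
#!/usr/bin/env bash
# Illustration only: which real server would serve each request.
servers=(192.168.0.20 192.168.0.21)

# rr_pick N: print the server chosen for the N-th request (0-based)
# under plain round robin.
rr_pick() {
    local n=$1
    echo "${servers[n % ${#servers[@]}]}"
}

# wrr_schedule SERVER:WEIGHT...: print one naive scheduling cycle in which
# a server with weight W appears W times. This shows only the share of
# requests each server gets, not the exact order the kernel would use.
wrr_schedule() {
    local pair srv w i out=()
    for pair in "$@"; do
        srv=${pair%%:*}
        w=${pair##*:}
        for ((i = 0; i < w; i++)); do
            out+=("$srv")
        done
    done
    echo "${out[*]}"
}

# Four requests under rr alternate between the two servers.
for i in 0 1 2 3; do rr_pick "$i"; done

# With weights 1 and 2, the second server gets two of every three requests.
wrr_schedule 192.168.0.20:1 192.168.0.21:2
```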

Building the architecture

Install nginx and set up the website

Configure web1

Install nginx

[root@web1 ~]# yum -y install gcc make

[root@web1 ~]# yum -y install pcre-devel

[root@web1 ~]# yum -y install openssl openssl-devel

[root@web1 ~]# tar xf nginx-1.17.6.tar.gz

[root@web1 ~]# cd nginx-1.17.6

[root@web1 nginx-1.17.6]# ./configure --with-http_ssl_module

[root@web1 nginx-1.17.6]# make && make install

[root@web1 nginx-1.17.6]# ls /usr/local/nginx/

conf html logs sbin

[root@web1 nginx-1.17.6]# cd /usr/local/nginx/

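# In the default nginx configuration, enable the PHP location block so that dynamic requests are passed to php-fpm (separating static and dynamic content). Note that the include line is changed to fastcgi.conf.

[root@web1 nginx]# vim conf/nginx.conf

location ~ \.php$ {

root html;

fastcgi_pass 127.0.0.1:9000;

fastcgi_index index.php;

# fastcgi_param SCRIPT_FILENAME /scripts$fastcgi_script_name;

include fastcgi.conf;

}

Configure the website

# Prepare the static page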

[root@web1 nginx]# vim html/index.html

<html>

<marquee behavior="alternate">

<font size="12px" color=#00ff00>Hello World web1~~~~

</marquee>

</html>

# Prepare the dynamic page

[root@web1 nginx]# vim html/test.php

<pre>

<?PHP

//phpinfo();

//$arr = array("id" => random_int(0000,9999));

foreach (array("REMOTE_ADDR", "REQUEST_METHOD", "HTTP_USER_AGENT", "REQUEST_URI") as $i) {

$arr[$i] = $_SERVER[$i];

}

if($_SERVER['REQUEST_METHOD']=="POST"){

$arr += $_POST;

}else{

$arr += $_GET;

}

print_R($arr);

print_R("php_host: \t".gethostname()."\n");

$n = 0;

$start = 1;

$end = isset($_GET["id"])? $_GET["id"] : 10000 ;

for($num = $start; $num <= $end; $num++) {

if ( $num == 1 ) continue;

for ($i = 2; $i <= sqrt($num); $i++) {

if ($num % $i == 0) continue 2;

}

$n++;

}

print_R($n."\n");

?>

Install PHP

yum -y install php

yum -y install php-fpm

Start the services

[root@web1 nginx]# systemctl enable php-fpm --now

[root@web1 nginx]# sbin/nginx -t

nginx: the configuration file /usr/local/nginx/conf/nginx.conf syntax is ok

nginx: configuration file /usr/local/nginx/conf/nginx.conf test is successful

[root@web1 nginx]# sbin/nginx

[root@web1 nginx]# ss -utnlp | grep 80

tcp LISTEN 0 511 *:80 *:* users:(("nginx",pid=16056,fd=6),("nginx",pid=16055,fd=6))

[root@web1 nginx]# ss -utnlp | grep 9000

tcp LISTEN 0 128 127.0.0.1:9000 *:* users:(("php-fpm",pid=16052,fd=0),("php-fpm",pid=16051,fd=0),("php-fpm",pid=16050,fd=0),("php-fpm",pid=16049,fd=0),("php-fpm",pid=16048,fd=0),("php-fpm",pid=16047,fd=6))

# Stop nginx

[root@web1 ~]# /usr/local/nginx/sbin/nginx -s stop

Start nginx at boot

Register nginx as a system service controlled by systemctl (the method the author uses)

# Write a unit file so that systemctl can control nginx

[root@web1 ~]# vim /usr/lib/systemd/system/nginx.service

[Unit]

Description=The Nginx HTTP Server

After=network.target remote-fs.target nss-lookup.target

[Service]

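# nginx runs as multiple processes (a master that forks workers), so the type is forking

Type=forking

# Command run by systemctl start nginx

ExecStart=/usr/local/nginx/sbin/nginx

# Command run by systemctl reload nginx

ExecReload=/usr/local/nginx/sbin/nginx -s reload

# Command run by systemctl stop nginx: kill sends the QUIT signal to the nginx main process, which stops the service. $MAINPID is a variable holding the PID of the nginx main process

ExecStop=/bin/kill -s QUIT ${MAINPID}

[Install]

# Allows the service to start at boot

WantedBy=multi-user.target

# Reload systemd so that it picks up the new nginx.service file. If systemctl still cannot control nginx afterwards, rebooting the system and restarting the service may help

[root@web1 ~]# systemctl daemon-reload

# Start the service and enable it at boot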

[root@web1 ~]# systemctl enable nginx --now

Created symlink from /etc/systemd/system/multi-user.target.wants/nginx.service to /usr/lib/systemd/system/nginx.service.

[root@web1 ~]# ss -utnlp | grep nginx

tcp LISTEN 0 511 *:80 *:* users:(("nginx",pid=1748,fd=6),("nginx",pid=1747,fd=6))

Alternatively, append the nginx start command to the boot script

echo "/usr/local/nginx/sbin/nginx" >> /etc/rc.local

Verify from the local machine

[root@web1 nginx]# curl localhost

<html>

<marquee behavior="alternate">

<font size="12px" color=#00ff00>Hello World web1~~~

</marquee>

</html>

[root@web1 nginx]# curl localhost/test.php

<pre>

Array

(

[REMOTE_ADDR] => 127.0.0.1

[REQUEST_METHOD] => GET

[HTTP_USER_AGENT] => curl/7.29.0

[REQUEST_URI] => /test.php

)

php_host: web1

1229

Configure web2

Install nginx the same way as on web1

[root@web2 ~]# yum -y install gcc make

[root@web2 ~]# yum -y install pcre-devel

[root@web2 ~]# yum -y install openssl openssl-devel

[root@web2 ~]# tar xf nginx-1.17.6.tar.gz

[root@web2 ~]# cd nginx-1.17.6/

[root@web2 nginx-1.17.6]# ./configure --with-http_ssl_module

[root@web2 nginx-1.17.6]# make && make install

[root@web2 nginx-1.17.6]# cd /usr/local/nginx/

[root@web2 nginx]# vim conf/nginx.conf

location ~ \.php$ {

root html;

fastcgi_pass 127.0.0.1:9000;

fastcgi_index index.php;

# fastcgi_param SCRIPT_FILENAME /scripts$fastcgi_script_name;

include fastcgi.conf;

}

Configure the website

[root@web2 nginx]# vim html/index.html

<html>

<marquee behavior="alternate">

<font size="12px" color=#00ff00>Hello World web2~~~~

</marquee>

</html>

# Prepare the dynamic page

[root@web2 nginx]# vim html/test.php

<pre>

<?PHP

//phpinfo();

//$arr = array("id" => random_int(0000,9999));

foreach (array("REMOTE_ADDR", "REQUEST_METHOD", "HTTP_USER_AGENT", "REQUEST_URI") as $i) {

$arr[$i] = $_SERVER[$i];

}

if($_SERVER['REQUEST_METHOD']=="POST"){

$arr += $_POST;

}else{

$arr += $_GET;

}

print_R($arr);

print_R("php_host: \t".gethostname()."\n");

$n = 0;

$start = 1;

$end = isset($_GET["id"])? $_GET["id"] : 10000 ;

for($num = $start; $num <= $end; $num++) {

if ( $num == 1 ) continue;

for ($i = 2; $i <= sqrt($num); $i++) {

if ($num % $i == 0) continue 2;

}

$n++;

}

print_R($n."\n");

?>

Install PHP

yum -y install php

yum -y install php-fpm

Start the services

[root@web2 nginx]# systemctl enable php-fpm --now

[root@web2 nginx]# sbin/nginx -t

nginx: the configuration file /usr/local/nginx/conf/nginx.conf syntax is ok

nginx: configuration file /usr/local/nginx/conf/nginx.conf test is successful

[root@web2 nginx]# sbin/nginx

[root@web2 nginx]# ss -utnlp | grep 80

udp UNCONN 0 0 *:68 *:* users:(("dhclient",pid=580,fd=6))

tcp LISTEN 0 511 *:80 *:* users:(("nginx",pid=13691,fd=6),("nginx",pid=13690,fd=6))

[root@web2 nginx]# ss -utnlp | grep 9000

tcp LISTEN 0 128 127.0.0.1:9000 *:* users:(("php-fpm",pid=13687,fd=0),("php-fpm",pid=13686,fd=0),("php-fpm",pid=13685,fd=0),("php-fpm",pid=13684,fd=0),("php-fpm",pid=13683,fd=0),("php-fpm",pid=13682,fd=6))

# Stop nginx

[root@web2 ~]# /usr/local/nginx/sbin/nginx -s stop

Start nginx at boot

Register nginx as a system service controlled by systemctl (the method the author uses)

# Write a unit file so that systemctl can control nginx

[root@web2 ~]# vim /usr/lib/systemd/system/nginx.service

[Unit]

Description=The Nginx HTTP Server

After=network.target remote-fs.target nss-lookup.target

[Service]

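# nginx runs as multiple processes (a master that forks workers), so the type is forking

Type=forking

# Command run by systemctl start nginx

ExecStart=/usr/local/nginx/sbin/nginx

# Command run by systemctl reload nginx

ExecReload=/usr/local/nginx/sbin/nginx -s reload

# Command run by systemctl stop nginx: kill sends the QUIT signal to the nginx main process, which stops the service. $MAINPID is a variable holding the PID of the nginx main process

ExecStop=/bin/kill -s QUIT ${MAINPID}

[Install]

# Allows the service to start at boot

WantedBy=multi-user.target

# Reload systemd so that it picks up the new nginx.service file. If systemctl still cannot control nginx afterwards, rebooting the system and restarting the service may help

[root@web2 ~]# systemctl daemon-reload

# Start the service and enable it at boot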

[root@web2 ~]# systemctl enable nginx --now

Created symlink from /etc/systemd/system/multi-user.target.wants/nginx.service to /usr/lib/systemd/system/nginx.service.

[root@web2 ~]# ss -utnlp | grep nginx

tcp LISTEN 0 511 *:80 *:* users:(("nginx",pid=1748,fd=6),("nginx",pid=1747,fd=6))

Alternatively, append the nginx start command to the boot script

[root@web2 ~]# echo "/usr/local/nginx/sbin/nginx" >> /etc/rc.local

Verify from the local machine

[root@web2 nginx]# curl localhost

<html>

<marquee behavior="alternate">

<font size="12px" color=#00ff00>Hello World web2~~~~

</marquee>

</html>

[root@web2 nginx]# curl localhost/test.php

<pre>

Array

(

[REMOTE_ADDR] => 127.0.0.1

[REQUEST_METHOD] => GET

[HTTP_USER_AGENT] => curl/7.29.0

[REQUEST_URI] => /test.php

)

php_host: web2

1229

配置lvs服务器双网卡

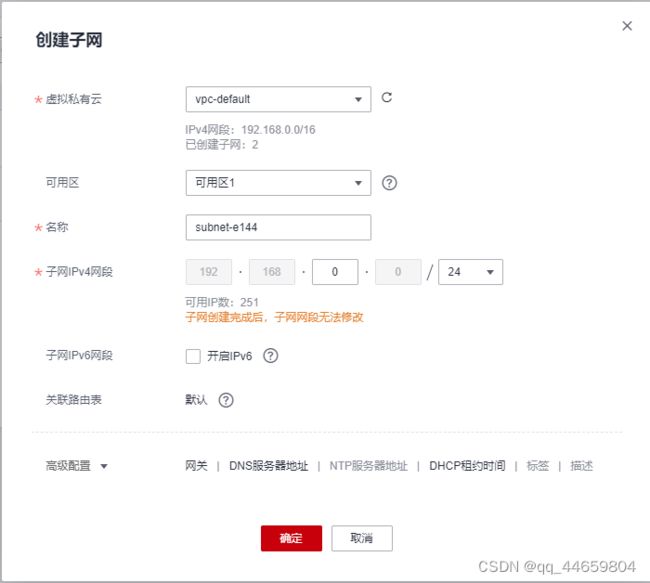

创建子网

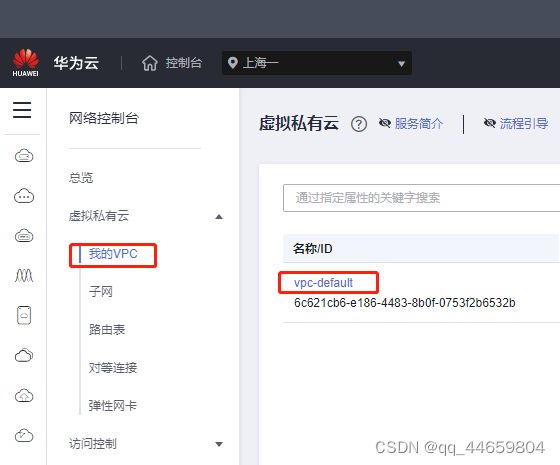

登录控制台,进入虚拟私有云VPC

单击我的VPC,按名称/ID进入vpc

1,点击子网的数字,进入子网,创建子网

配置第二个弹性网卡

lvs-0001配置第二个弹性网卡(图示为已绑定)

为lvs-0001服务器添加新的弹性网卡,并设置新网段的ip地址

选择要新增网卡的服务器

![]()

新增网卡,设置ip

lvs-0002配置第二个弹性网卡(图示为已绑定)

配置策略路由

当云服务器配置了多张网卡时,需要在云服务器内部配置策略路由来实现非主网卡的通信。未设置路由规则,扩展网卡的IP将无法访问

配置路由策略

详情见:https://support.huaweicloud.com/trouble-ecs/ecs_trouble_0110.html

获取信息

获取源端即本机的每个网卡IP地址,网卡名称

获取每个IP地址对应的网关信息,子网网段信息

获取目的端的ip地址

获取lvs-0001的IP地址

服务器终端ifconfig或者华为云查看弹性服务器获取IP地址

[root@lvs-0001 ~]# ifconfig

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.0.30 netmask 255.255.255.0 broadcast 192.168.0.255

inet6 fe80::f816:3eff:fee0:3856 prefixlen 64 scopeid 0x20<link>

ether fa:16:3e:e0:38:56 txqueuelen 1000 (Ethernet)

RX packets 5568 bytes 1699453 (1.6 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 5464 bytes 2347919 (2.2 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

eth1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.1.30 netmask 255.255.255.0 broadcast 192.168.1.255

inet6 fe80::f816:3eff:fe16:6e8b prefixlen 64 scopeid 0x20<link>

ether fa:16:3e:16:6e:8b txqueuelen 1000 (Ethernet)

RX packets 64 bytes 3887 (3.7 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 9 bytes 970 (970.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

Get the IP addresses of lvs-0002

[root@lvs-0002 ~]# ifconfig

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.0.31 netmask 255.255.255.0 broadcast 192.168.0.255

inet6 fe80::f816:3eff:fee0:3857 prefixlen 64 scopeid 0x20<link>

ether fa:16:3e:e0:38:57 txqueuelen 1000 (Ethernet)

RX packets 3427 bytes 1070844 (1.0 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 3490 bytes 1472206 (1.4 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

eth1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.1.31 netmask 255.255.255.0 broadcast 192.168.1.255

inet6 fe80::f816:3eff:fe16:6e8c prefixlen 64 scopeid 0x20<link>

ether fa:16:3e:16:6e:8c txqueuelen 1000 (Ethernet)

RX packets 2 bytes 415 (415.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 9 bytes 970 (970.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

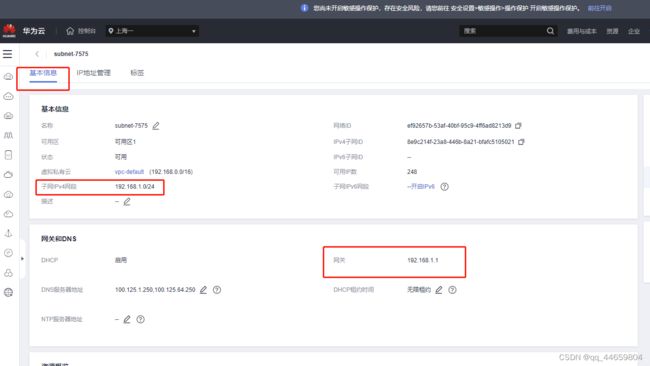

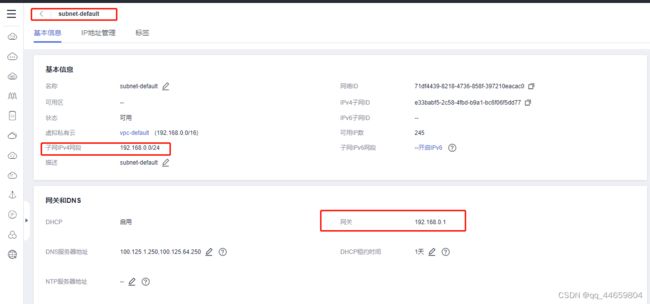

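Get the subnet ranges and gateways

In Huawei Cloud, go to Virtual Private Cloud > Subnets > name/ID > Basic Information to see the subnet range and gateway.

The extension interfaces of lvs-0001 and lvs-0002 are in the same subnet, so looking up one of them is enough.

The primary interfaces of lvs-0001 and lvs-0002 are in the same subnet, so looking up one of them is enough.

Get the subnet range and gateway of the extension interface

Get the subnet range and gateway of the primary interface

Get the IP addresses of the destination

web1 and web2 are in the same subnet, so checking one of them is enough.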

[root@web1 ~]# ifconfig

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.0.20 netmask 255.255.255.0 broadcast 192.168.0.255

inet6 fe80::f816:3eff:fee0:384c prefixlen 64 scopeid 0x20<link>

ether fa:16:3e:e0:38:4c txqueuelen 1000 (Ethernet)

RX packets 2131 bytes 618430 (603.9 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 2220 bytes 847737 (827.8 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

[root@web2 ~]# ifconfig

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.0.21 netmask 255.255.255.0 broadcast 192.168.0.255

inet6 fe80::f816:3eff:fee0:384d prefixlen 64 scopeid 0x20<link>

ether fa:16:3e:e0:38:4d txqueuelen 1000 (Ethernet)

RX packets 2212 bytes 629393 (614.6 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 2255 bytes 850290 (830.3 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

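The collected information is as follows

| Side | Server | Interface | IP address | Subnet | Subnet gateway |
|---|---|---|---|---|---|
| Source | lvs-0001 | eth0 | 192.168.0.30 | 192.168.0.0/24 | 192.168.0.1 |
| Source | lvs-0001 | eth1 | 192.168.1.30 | 192.168.1.0/24 | 192.168.1.1 |
| Source | lvs-0002 | eth0 | 192.168.0.31 | 192.168.0.0/24 | 192.168.0.1 |
| Source | lvs-0002 | eth1 | 192.168.1.31 | 192.168.1.0/24 | 192.168.1.1 |
| Destination | web1 | eth0 | 192.168.0.20 | / | / |
| Destination | web2 | eth0 | 192.168.0.21 | / | / |

Before configuring policy routing for multiple interfaces, make sure the primary interface of the source server can reach the destination.

Check connectivity between the primary interface of the source server and the destination server

ping -I <primary interface address of the source server> <destination server address>

The primary interface can reach the destination server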

[root@lvs-0001 ~]# ping -I 192.168.0.30 192.168.0.20

PING 192.168.0.20 (192.168.0.20) from 192.168.0.30 : 56(84) bytes of data.

64 bytes from 192.168.0.20: icmp_seq=1 ttl=64 time=1.17 ms

64 bytes from 192.168.0.20: icmp_seq=2 ttl=64 time=0.256 ms

64 bytes from 192.168.0.20: icmp_seq=3 ttl=64 time=0.243 ms

--- 192.168.0.20 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2001ms

rtt min/avg/max/mdev = 0.243/0.557/1.173/0.435 ms

Before configuration, the extension interface cannot reach the destination server

[root@lvs-0001 ~]# ping -I 192.168.1.30 192.168.0.20

PING 192.168.0.20 (192.168.0.20) from 192.168.1.30 : 56(84) bytes of data.

--- 192.168.0.20 ping statistics ---

7 packets transmitted, 0 received, 100% packet loss, time 5999ms

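Command format

Run the following commands in order to add policy routes for the primary and extension interfaces.

Primary interface

ip route add default via <primary subnet gateway> dev <primary interface name> table <custom routing table name>

ip route add <primary subnet range> dev <primary interface name> table <routing table name>

ip rule add from <primary interface address> table <routing table name>

Extension interface

ip route add default via <extension subnet gateway> dev <extension interface name> table <custom routing table name, different from the primary one>

ip route add <extension subnet range> dev <extension interface name> table <routing table name>

ip rule add from <extension interface address> table <routing table name>

Any further extension interfaces are configured the same way. Routing table names can be chosen freely but must be unique.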
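The command format above can be wrapped in a small helper that prints the three commands for one interface. This is a dry-run sketch: the function name `policy_route_cmds` is hypothetical, and nothing is executed until the output is reviewed and piped to a shell as root.

```shell
#!/usr/bin/env bash
# policy_route_cmds GATEWAY SUBNET DEV ADDR TABLE
# Print (do not run) the policy-routing commands for one interface.
policy_route_cmds() {
    local gw=$1 subnet=$2 dev=$3 addr=$4 table=$5
    echo "ip route add default via $gw dev $dev table $table"
    echo "ip route add $subnet dev $dev table $table"
    echo "ip rule add from $addr table $table"
}

# Values taken from the table of collected information for lvs-0001.
policy_route_cmds 192.168.0.1 192.168.0.0/24 eth0 192.168.0.30 10
policy_route_cmds 192.168.1.1 192.168.1.0/24 eth1 192.168.1.30 20
```

Printing first makes it easy to check that each interface gets its own table number before anything touches the routing tables.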

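Run the commands to configure policy routing on lvs-0001

web2 is in the same subnet as web1, so using web1 as the destination is enough.

Configure the primary interface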

[root@lvs-0001 ~]# ip route add default via 192.168.0.1 dev eth0 table 10

[root@lvs-0001 ~]# ip route add 192.168.0.0/24 dev eth0 table 10

[root@lvs-0001 ~]# ip rule add from 192.168.0.30 table 10

Configure the extension interface

[root@lvs-0001 ~]# ip route add default via 192.168.1.1 dev eth1 table 20

[root@lvs-0001 ~]# ip route add 192.168.1.0/24 dev eth1 table 20

[root@lvs-0001 ~]# ip rule add from 192.168.1.30 table 20

Verify the configuration

[root@lvs-0001 ~]# ip rule

0: from all lookup local

32763: from all to 169.254.169.254 lookup main

32764: from 192.168.1.30 lookup 20

32765: from 192.168.0.30 lookup 10

32766: from all lookup main

32767: from all lookup default

[root@lvs-0001 ~]# ip route show table 10

default via 192.168.0.1 dev eth0

192.168.0.0/24 dev eth0 scope link

[root@lvs-0001 ~]# ip route show table 20

default via 192.168.1.1 dev eth1

192.168.1.0/24 dev eth1 scope link

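Test connectivity

ping -I <primary interface address of the source server> <destination server address>

ping -I <extension interface address of the source server> <destination server address>

Both the primary and the extension interface can now reach the destination server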

[root@lvs-0001 ~]# ping -I 192.168.0.30 192.168.0.20

PING 192.168.0.20 (192.168.0.20) from 192.168.0.30 : 56(84) bytes of data.

64 bytes from 192.168.0.20: icmp_seq=1 ttl=64 time=0.384 ms

64 bytes from 192.168.0.20: icmp_seq=2 ttl=64 time=0.249 ms

64 bytes from 192.168.0.20: icmp_seq=3 ttl=64 time=0.218 ms

--- 192.168.0.20 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2000ms

rtt min/avg/max/mdev = 0.218/0.283/0.384/0.074 ms

[root@lvs-0001 ~]# ping -I 192.168.1.30 192.168.0.20

PING 192.168.0.20 (192.168.0.20) from 192.168.1.30 : 56(84) bytes of data.

64 bytes from 192.168.0.20: icmp_seq=1 ttl=64 time=0.722 ms

64 bytes from 192.168.0.20: icmp_seq=2 ttl=64 time=0.264 ms

64 bytes from 192.168.0.20: icmp_seq=3 ttl=64 time=0.219 ms

--- 192.168.0.20 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2000ms

rtt min/avg/max/mdev = 0.219/0.401/0.722/0.228 ms

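Make the policy routes persistent

Routes added on the command line take effect immediately but are lost when the cloud server reboots. They must be made persistent so that the network does not break after a reboot.

Append the routing commands to /etc/rc.local, which runs at boot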

[root@lvs-0001 ~]# vim /etc/rc.local

# wait for nics up

sleep 5

# Add v4 routes for eth0

ip route flush table 10

ip route add default via 192.168.0.1 dev eth0 table 10

ip route add 192.168.0.0/24 dev eth0 table 10

ip rule add from 192.168.0.30 table 10

# Add v4 routes for eth1

ip route flush table 20

ip route add default via 192.168.1.1 dev eth1 table 20

ip route add 192.168.1.0/24 dev eth1 table 20

ip rule add from 192.168.1.30 table 20

# Add v4 routes for cloud-init

ip rule add to 169.254.169.254 table main

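Notes on the file:

- wait for nics up: a delay that gives the interfaces time to come up; keeping the value used in this example is recommended.

- Add v4 routes for eth0: policy routes for the primary interface, the same as configured above.

- Add v4 routes for eth1: policy routes for the extension interface, the same as configured above.

- Add v4 routes for cloud-init: rule for the cloud-init address; keep it exactly as in this example.

Make the file executable

[root@lvs-0001 ~]# chmod +x /etc/rc.local

Reboot the server to confirm that the configuration persists. Make sure the reboot will not disrupt running services first.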

[root@lvs-0001 ~]# reboot

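Repeat the procedure on lvs-0002. First check connectivity between its primary interface and the destination server

ping -I <primary interface address of the source server> <destination server address>

The primary interface can reach the destination server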

[root@lvs-0002 ~]# ping -I 192.168.0.31 192.168.0.20

PING 192.168.0.20 (192.168.0.20) from 192.168.0.31 : 56(84) bytes of data.

64 bytes from 192.168.0.20: icmp_seq=1 ttl=64 time=0.564 ms

64 bytes from 192.168.0.20: icmp_seq=2 ttl=64 time=0.251 ms

--- 192.168.0.20 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1000ms

rtt min/avg/max/mdev = 0.251/0.407/0.564/0.157 ms

Before configuration, the extension interface cannot reach the destination server

[root@lvs-0002 ~]# ping -I 192.168.1.31 192.168.0.20

PING 192.168.0.20 (192.168.0.20) from 192.168.1.31 : 56(84) bytes of data.

--- 192.168.0.20 ping statistics ---

7 packets transmitted, 0 received, 100% packet loss, time 5999ms


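Run the commands to configure policy routing on lvs-0002

web2 is in the same subnet as web1, so using web1 as the destination is enough.

Configure the primary interface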

[root@lvs-0002 ~]# ip route add default via 192.168.0.1 dev eth0 table 100

[root@lvs-0002 ~]# ip route add 192.168.0.0/24 dev eth0 table 100

[root@lvs-0002 ~]# ip rule add from 192.168.0.31 table 100

Configure the extension interface

[root@lvs-0002 ~]# ip route add default via 192.168.1.1 dev eth1 table 200

[root@lvs-0002 ~]# ip route add 192.168.1.0/24 dev eth1 table 200

[root@lvs-0002 ~]# ip rule add from 192.168.1.31 table 200

Verify the configuration

[root@lvs-0002 ~]# ip rule

0: from all lookup local

32764: from 192.168.1.31 lookup 200

32765: from 192.168.0.31 lookup 100

32766: from all lookup main

32767: from all lookup default

[root@lvs-0002 ~]# ip route show table 100

default via 192.168.0.1 dev eth0

192.168.0.0/24 dev eth0 scope link

[root@lvs-0002 ~]# ip route show table 200

default via 192.168.1.1 dev eth1

192.168.1.0/24 dev eth1 scope link

Test connectivity

Both the primary and the extension interface can now reach the destination server

[root@lvs-0002 ~]# ping -I 192.168.0.31 192.168.0.20

PING 192.168.0.20 (192.168.0.20) from 192.168.0.31 : 56(84) bytes of data.

64 bytes from 192.168.0.20: icmp_seq=1 ttl=64 time=0.713 ms

64 bytes from 192.168.0.20: icmp_seq=2 ttl=64 time=0.303 ms

--- 192.168.0.20 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1000ms

rtt min/avg/max/mdev = 0.303/0.508/0.713/0.205 ms

[root@lvs-0002 ~]# ping -I 192.168.1.31 192.168.0.20

PING 192.168.0.20 (192.168.0.20) from 192.168.1.31 : 56(84) bytes of data.

64 bytes from 192.168.0.20: icmp_seq=1 ttl=64 time=0.440 ms

64 bytes from 192.168.0.20: icmp_seq=2 ttl=64 time=0.264 ms

--- 192.168.0.20 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 999ms

rtt min/avg/max/mdev = 0.264/0.352/0.440/0.088 ms

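Make the policy routes persistent

Routes added on the command line take effect immediately but are lost when the cloud server reboots. They must be made persistent so that the network does not break after a reboot.

Append the routing commands to /etc/rc.local, which runs at boot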

[root@lvs-0002 ~]# vim /etc/rc.local

# wait for nics up

sleep 5

# Add v4 routes for eth0

ip route flush table 100

ip route add default via 192.168.0.1 dev eth0 table 100

ip route add 192.168.0.0/24 dev eth0 table 100

ip rule add from 192.168.0.31 table 100

# Add v4 routes for eth1

ip route flush table 200

ip route add default via 192.168.1.1 dev eth1 table 200

ip route add 192.168.1.0/24 dev eth1 table 200

ip rule add from 192.168.1.31 table 200

# Add v4 routes for cloud-init

ip rule add to 169.254.169.254 table main

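Notes on the file:

- wait for nics up: a delay that gives the interfaces time to come up; keeping the value used in this example is recommended.

- Add v4 routes for eth0: policy routes for the primary interface, the same as configured above.

- Add v4 routes for eth1: policy routes for the extension interface, the same as configured above.

- Add v4 routes for cloud-init: rule for the cloud-init address; keep it exactly as in this example.

Make the file executable

[root@lvs-0002 ~]# chmod +x /etc/rc.local

Reboot the server to confirm that the configuration persists. Make sure the reboot will not disrupt running services first.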

[root@lvs-0002 ~]# reboot

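Configure LVS NAT mode

Configure LVS NAT mode on lvs-0001

Enable kernel IP forwarding

LVS is built into the Linux kernel as a module

# The setting is in effect once net.ipv4.ip_forward = 1

[root@lvs-0001 ~]# echo 'net.ipv4.ip_forward = 1' >> /etc/sysctl.conf

# Apply the setting

[root@lvs-0001 ~]# sysctl -p

# Verify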

[root@lvs-0001 ~]# sysctl -a | grep ip_forward

net.ipv4.ip_forward = 1

net.ipv4.ip_forward_use_pmtu = 0

sysctl: reading key "net.ipv6.conf.all.stable_secret"

sysctl: reading key "net.ipv6.conf.default.stable_secret"

sysctl: reading key "net.ipv6.conf.eth0.stable_secret"

sysctl: reading key "net.ipv6.conf.eth1.stable_secret"

sysctl: reading key "net.ipv6.conf.lo.stable_secret"
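The same check can be scripted by reading the kernel's runtime value directly. A minimal sketch, assuming the standard `/proc/sys/net/ipv4/ip_forward` path on Linux; the function name `check_ip_forward` and its optional path argument are made up here so the logic can be tried against a test file.

```shell
#!/usr/bin/env bash
# check_ip_forward [FILE]
# Report whether IPv4 forwarding is enabled. FILE defaults to the
# kernel's runtime setting; returns non-zero when forwarding is off.
check_ip_forward() {
    local f=${1:-/proc/sys/net/ipv4/ip_forward}
    if [ "$(cat "$f" 2>/dev/null)" = "1" ]; then
        echo "ip_forward enabled"
    else
        echo "ip_forward disabled"
        return 1
    fi
}

# Check the running system; "|| true" keeps the script going either way.
check_ip_forward || true
```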

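Install ipvsadm

ipvsadm is the user-space command for managing LVS. It is used to create, change and inspect the LVS scheduling rules.

The package also provides an ipvsadm system service, which turns the LVS rules on and off as a service.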

[root@lvs-0001 ~]# yum install -y ipvsadm

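ipvsadm options

-A: add a virtual server

-E: edit a virtual server

-D: delete a virtual server

-t: the virtual server is a TCP service (address:port)

-u: the virtual server is a UDP service (address:port)

-s: scheduling algorithm, for example rr, wrr, lc or wlc

-a: add a real server to an existing virtual server

-r: the real server address

-w: weight

-m: use NAT mode

-g: use DR mode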
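Put together, the options above form one `-A` command for the virtual server and one `-a` command per real server. The following dry-run sketch only prints those commands; the helper name `lvs_nat_cmds` and its argument layout are assumptions made for this illustration.

```shell
#!/usr/bin/env bash
# lvs_nat_cmds VIP:PORT SCHEDULER RIP[:WEIGHT]...
# Print (do not run) the ipvsadm commands for a NAT-mode TCP service.
lvs_nat_cmds() {
    local vs=$1 sched=$2
    shift 2
    echo "ipvsadm -A -t $vs -s $sched"
    local rs rip w
    for rs in "$@"; do
        rip=${rs%%:*}
        w=1                        # default weight when none is given
        if [ "$rs" != "$rip" ]; then
            w=${rs##*:}
        fi
        echo "ipvsadm -a -t $vs -r $rip -w $w -m"
    done
}

# The virtual service and real servers used for lvs-0001 in this article.
lvs_nat_cmds 192.168.1.30:80 rr 192.168.0.20:1 192.168.0.21:2
```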

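Create the rules

Rules created with ipvsadm take effect immediately

# Schedule web1 and web2 with round robin

[root@lvs-0001 ~]# ipvsadm -A -t 192.168.1.30:80 -s rr

# The scheduler is plain round robin, not weighted round robin, so the weights set below have no effect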

[root@lvs-0001 ~]# ipvsadm -a -t 192.168.1.30:80 -r 192.168.0.20 -w 1 -m

[root@lvs-0001 ~]# ipvsadm -a -t 192.168.1.30:80 -r 192.168.0.21 -w 2 -m

# Show the scheduling rules

[root@lvs-0001 ~]# ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.1.30:80 rr

-> 192.168.0.20:80 Masq 1 0 0

-> 192.168.0.21:80 Masq 2 0 0

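Save the rules to /etc/sysconfig/ipvsadm, the file the ipvsadm service reads when it starts

Add name resolution for the eth1 address (needed when using the ipvsadm service)

Without the -n option, ipvsadm-save writes host names instead of IP addresses. When the rules are loaded back from the file, the host name resolves to the eth0 address by default. Adding an /etc/hosts entry that maps the host name to the eth1 address makes the restored rules use the eth1 address, which is what we need.

[root@lvs-0001 ~]# vim /etc/hosts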

192.168.1.30 lvs-0001
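A quick way to see whether a saved rules file depends on name resolution is to check that every address in it is numeric. The sketch below assumes the rules are in the usual ipvsadm-save format (lines starting with `-A` or `-a`); the function name `rules_are_numeric` and the sample lines are made up for illustration.

```shell
#!/usr/bin/env bash
# rules_are_numeric: read ipvsadm-save style rules on stdin and succeed
# only if every -t/-u/-r argument looks like a.b.c.d:port.
rules_are_numeric() {
    awk '
        {
            for (i = 1; i < NF; i++)
                if ($i == "-t" || $i == "-u" || $i == "-r")
                    if ($(i + 1) !~ /^[0-9]+\.[0-9]+\.[0-9]+\.[0-9]+:[0-9]+$/)
                        bad = 1
        }
        END { exit (bad ? 1 : 0) }
    '
}

# Sample input with a host name in place of the virtual server address.
if printf '%s\n' '-A -t lvs-0001:http -s rr' | rules_are_numeric; then
    echo "rules are numeric"
else
    echo "rules contain host names"
fi
```

On a real server the function could be fed from the saved file, for example `rules_are_numeric < /etc/sysconfig/ipvsadm`.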

List the files installed by the ipvsadm package

[root@lvs-0001 ~]# rpm -ql ipvsadm

/etc/sysconfig/ipvsadm-config

/usr/lib/systemd/system/ipvsadm.service

/usr/sbin/ipvsadm

/usr/sbin/ipvsadm-restore

/usr/sbin/ipvsadm-save

/usr/share/doc/ipvsadm-1.27

/usr/share/doc/ipvsadm-1.27/README

/usr/share/man/man8/ipvsadm-restore.8.gz

/usr/share/man/man8/ipvsadm-save.8.gz

/usr/share/man/man8/ipvsadm.8.gz

Inspect the service file /usr/lib/systemd/system/ipvsadm.service

# When the service stops, the rules are saved to /etc/sysconfig/ipvsadm; when it starts, the rules are loaded from /etc/sysconfig/ipvsadm

[root@lvs-0001 ~]# cat /usr/lib/systemd/system/ipvsadm.service

[Unit]

Description=Initialise the Linux Virtual Server

After=syslog.target network.target

[Service]

Type=oneshot

ExecStart=/bin/bash -c "exec /sbin/ipvsadm-restore < /etc/sysconfig/ipvsadm"

ExecStop=/bin/bash -c "exec /sbin/ipvsadm-save -n > /etc/sysconfig/ipvsadm"

ExecStop=/sbin/ipvsadm -C

RemainAfterExit=yes

[Install]

WantedBy=multi-user.target

Save the rules to /etc/sysconfig/ipvsadm

[root@lvs-0001 ~]# ipvsadm-save > /etc/sysconfig/ipvsadm

Start the ipvsadm service and enable it at boot

[root@lvs-0001 ~]# systemctl daemon-reload

[root@lvs-0001 ~]# systemctl enable ipvsadm.service --now

[root@lvs-0001 ~]# systemctl status ipvsadm.service

Disable the source/destination check on the elastic network interface

Test access to the web service

[root@lvs-0001 ~]# curl 192.168.1.30

<html>

<marquee behavior="alternate">

<font size="12px" color=#00ff00>Hello World web2~~~~

</marquee>

</html>

[root@lvs-0001 ~]# curl 192.168.1.30

<html>

<marquee behavior="alternate">

<font size="12px" color=#00ff00>Hello World web1~~~~

</marquee>

</html>

Configure LVS NAT mode on lvs-0002

Enable kernel IP forwarding

LVS is built into the Linux kernel as a module

[root@lvs-0002 ~]# echo 'net.ipv4.ip_forward = 1' >> /etc/sysctl.conf

[root@lvs-0002 ~]# sysctl -p

vm.swappiness = 0

net.core.somaxconn = 1024

net.ipv4.tcp_max_tw_buckets = 5000

net.ipv4.tcp_max_syn_backlog = 1024

net.ipv4.ip_forward = 1

[root@lvs-0002 ~]# sysctl -a | grep ip_forward

net.ipv4.ip_forward = 1

net.ipv4.ip_forward_use_pmtu = 0

sysctl: reading key "net.ipv6.conf.all.stable_secret"

sysctl: reading key "net.ipv6.conf.default.stable_secret"

sysctl: reading key "net.ipv6.conf.eth0.stable_secret"

sysctl: reading key "net.ipv6.conf.eth1.stable_secret"

sysctl: reading key "net.ipv6.conf.lo.stable_secret"

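Install ipvsadm

ipvsadm is the user-space command for managing LVS. It is used to create, change and inspect the LVS scheduling rules.

The package also provides an ipvsadm system service, which turns the LVS rules on and off as a service.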

[root@lvs-0002 ~]# yum -y install ipvsadm.x86_64

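ipvsadm options

-A: add a virtual server

-E: edit a virtual server

-D: delete a virtual server

-t: the virtual server is a TCP service (address:port)

-u: the virtual server is a UDP service (address:port)

-s: scheduling algorithm, for example rr, wrr, lc or wlc

-a: add a real server to an existing virtual server

-r: the real server address

-w: weight

-m: use NAT mode

-g: use DR mode

Create the rules

Rules created with ipvsadm take effect immediately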

[root@lvs-0002 ~]# ipvsadm -A -t 192.168.1.31:80 -s rr

[root@lvs-0002 ~]# ipvsadm -a -t 192.168.1.31:80 -r 192.168.0.20 -w 1 -m

[root@lvs-0002 ~]# ipvsadm -a -t 192.168.1.31:80 -r 192.168.0.21 -w 2 -m

[root@lvs-0002 ~]# ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.1.31:80 rr

-> 192.168.0.20:80 Masq 1 0 0

-> 192.168.0.21:80 Masq 2 0 0

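Save the rules to /etc/sysconfig/ipvsadm, the file the ipvsadm service reads when it starts

Add name resolution for the eth1 address (needed when using the ipvsadm service)

Without the -n option, ipvsadm-save writes host names instead of IP addresses. When the rules are loaded back from the file, the host name resolves to the eth0 address by default. Adding an /etc/hosts entry that maps the host name to the eth1 address makes the restored rules use the eth1 address, which is what we need.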

[root@lvs-0002 ~]# vim /etc/hosts

192.168.1.31 lvs-0002

Save the rules to /etc/sysconfig/ipvsadm

[root@lvs-0002 ~]# ipvsadm-save > /etc/sysconfig/ipvsadm

Start the ipvsadm service and enable it at boot

[root@lvs-0002 ~]# systemctl daemon-reload

[root@lvs-0002 ~]# systemctl enable ipvsadm.service --now

Created symlink from /etc/systemd/system/multi-user.target.wants/ipvsadm.service to /usr/lib/systemd/system/ipvsadm.service.

[root@lvs-0002 ~]# systemctl status ipvsadm.service

Disable the source/destination check on the elastic network interface

Test access to the web service

[root@lvs-0002 ~]# curl 192.168.1.31

<html>

<marquee behavior="alternate">

<font size="12px" color=#00ff00>Hello World web1~~~~

</marquee>

</html>

[root@lvs-0002 ~]# curl 192.168.1.31

<html>

<marquee behavior="alternate">

<font size="12px" color=#00ff00>Hello World web2~~~~

</marquee>

</html>
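Repeating curl by hand shows the alternation, but counting the responses makes the distribution easier to read. A small sketch, assuming the pages contain the "Hello World webN" marker used in this article; the function name `count_backends` is hypothetical.

```shell
#!/usr/bin/env bash
# count_backends: read page bodies on stdin and print how many responses
# came from each backend, one "webN COUNT" line per backend.
count_backends() {
    grep -o 'Hello World web[0-9]*' | sort | uniq -c | awk '{print $4, $1}'
}

# Demonstration with canned responses. On an LVS server the input could
# come from something like:
#   for i in $(seq 10); do curl -s 192.168.1.31; done | count_backends
printf '%s\n' \
    'Hello World web1~~~~' \
    'Hello World web2~~~~' \
    'Hello World web1~~~~' | count_backends
```

With the rr scheduler and both real servers healthy, the two counts should differ by at most one.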

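Configure keepalived

keepalived was originally designed for LVS. It can create the LVS rules itself, and it adds health checks and failure isolation for the back-end servers.

Install and configure keepalived on lvs-0001

yum -y install keepalived.x86_64

vim /etc/keepalived/keepalived.conf

# The listing starts inside the existing global_defs block

router_id lvs-0001 # unique identifier of this machine within the cluster

vrrp_iptables # configure the iptables allow rules automatically

vrrp_skip_check_adv_addr

vrrp_strict

vrrp_garp_interval 0

vrrp_gna_interval 0

}

vrrp_instance VI_1 {

state MASTER # state: MASTER on the primary, BACKUP on the standby

interface eth1 # the interface that faces the clients

virtual_router_id 51 # virtual router ID; must be the same on master and backup

priority 100 # priority; the higher number wins

advert_int 1 # interval between heartbeat messages, in seconds

authentication {

auth_type PASS # authentication by shared password

auth_pass 1111 # machines must use the same password to form a cluster

}

virtual_ipaddress {

192.168.1.200/24 # the VIP; more than one can be configured

}

}

# The rest configures the LVS rules and health checks through keepalived

virtual_server 192.168.1.200 80 { # virtual server address and port

delay_loop 6 # health-check polling interval, in seconds

lb_algo rr # scheduling algorithm: rr

lb_kind NAT # working mode: NAT

#persistence_timeout 50 # send the same client to the same server for 50 seconds; commented out so the rotation is visible when testing

protocol TCP # protocol: TCP

real_server 192.168.0.20 80 { # a real server

weight 1 # weight; has no effect with rr

TCP_CHECK { # health-check the real server over TCP

connect_timeout 3 # connection timeout: 3 seconds

nb_get_retry 3 # after 3 failed attempts the real server is considered down

delay_before_retry 3 # wait 3 seconds before each retry

}

}

real_server 192.168.0.21 80 {

weight 2

TCP_CHECK {

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}

# Delete all remaining lines below this point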

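Install and configure keepalived on lvs-0002

yum -y install keepalived.x86_64

vim /etc/keepalived/keepalived.conf

# The listing starts inside the existing global_defs block

router_id lvs-0002 # unique identifier of this machine within the cluster

vrrp_iptables # configure the iptables allow rules automatically

vrrp_skip_check_adv_addr

vrrp_strict

vrrp_garp_interval 0

vrrp_gna_interval 0

}

vrrp_instance VI_1 {

state BACKUP # state: MASTER on the primary, BACKUP on the standby

interface eth1 # the interface that faces the clients

virtual_router_id 51 # virtual router ID; must be the same on master and backup

priority 80 # priority; the higher number wins

advert_int 1 # interval between heartbeat messages, in seconds

authentication {

auth_type PASS # authentication by shared password

auth_pass 1111 # machines must use the same password to form a cluster

}

virtual_ipaddress {

192.168.1.200/24 # the VIP; more than one can be configured

}

}

# The rest configures the LVS rules and health checks through keepalived

virtual_server 192.168.1.200 80 { # virtual server address and port

delay_loop 6 # health-check polling interval, in seconds

lb_algo rr # scheduling algorithm: rr

lb_kind NAT # working mode: NAT

#persistence_timeout 50 # send the same client to the same server for 50 seconds; commented out so the rotation is visible when testing

protocol TCP # protocol: TCP

real_server 192.168.0.20 80 { # a real server

weight 1 # weight; has no effect with rr

TCP_CHECK { # health-check the real server over TCP

connect_timeout 3 # connection timeout: 3 seconds

nb_get_retry 3 # after 3 failed attempts the real server is considered down

delay_before_retry 3 # wait 3 seconds before each retry

}

}

real_server 192.168.0.21 80 {

weight 2

TCP_CHECK {

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}

# Delete all remaining lines below this point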
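The two real_server blocks differ only in address and weight, so they can be generated rather than typed twice. This is a sketch under that assumption: the function name `real_server_block` is made up, and the TCP_CHECK keywords are copied from the configuration in this article rather than checked against any particular keepalived version.

```shell
#!/usr/bin/env bash
# real_server_block IP PORT WEIGHT
# Print one real_server stanza with the TCP health check used in this
# article, ready to paste inside a virtual_server block.
real_server_block() {
    local ip=$1 port=$2 weight=$3
    cat <<EOF
    real_server $ip $port {
        weight $weight
        TCP_CHECK {
            connect_timeout 3
            nb_get_retry 3
            delay_before_retry 3
        }
    }
EOF
}

# The two web servers from this article.
real_server_block 192.168.0.20 80 1
real_server_block 192.168.0.21 80 2
```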

Start the keepalived service

The keepalived virtual IP comes up on lvs-0001

Start keepalived on lvs-0001

[root@lvs-0001 ~]# systemctl enable keepalived.service --now

[root@lvs-0001 ~]# ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.1.30:80 rr

-> 192.168.0.20:80 Masq 1 0 0

-> 192.168.0.21:80 Masq 2 0 0

TCP 192.168.1.200:80 rr persistent 50

-> 192.168.0.20:80 Masq 1 0 0

-> 192.168.0.21:80 Masq 2 0 0

# The keepalived virtual IP is on lvs-0001

[root@lvs-0001 ~]# ip a s

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether fa:16:3e:e0:38:56 brd ff:ff:ff:ff:ff:ff

inet 192.168.0.30/24 brd 192.168.0.255 scope global noprefixroute dynamic eth0

valid_lft 74083sec preferred_lft 74083sec

inet6 fe80::f816:3eff:fee0:3856/64 scope link

valid_lft forever preferred_lft forever

3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether fa:16:3e:16:6e:8b brd ff:ff:ff:ff:ff:ff

inet 192.168.1.30/24 brd 192.168.1.255 scope global noprefixroute eth1

valid_lft forever preferred_lft forever

inet 192.168.1.200/24 scope global secondary eth1

valid_lft forever preferred_lft forever

inet6 fe80::f816:3eff:fe16:6e8b/64 scope link

valid_lft forever preferred_lft forever

Start keepalived on lvs-0002

[root@lvs-0002 ~]# systemctl enable keepalived.service --now

[root@lvs-0002 ~]# ipvsadm -Ln

IP Virtual Server version 1.2.1 (size=4096)

Prot LocalAddress:Port Scheduler Flags

-> RemoteAddress:Port Forward Weight ActiveConn InActConn

TCP 192.168.1.31:80 rr

-> 192.168.0.20:80 Masq 1 0 0

-> 192.168.0.21:80 Masq 2 0 0

TCP 192.168.1.200:80 rr

-> 192.168.0.20:80 Masq 1 0 0

-> 192.168.0.21:80 Masq 2 0 0

[root@lvs-0002 ~]# ip a s

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether fa:16:3e:e0:38:57 brd ff:ff:ff:ff:ff:ff

inet 192.168.0.31/24 brd 192.168.0.255 scope global noprefixroute dynamic eth0

valid_lft 74218sec preferred_lft 74218sec

inet6 fe80::f816:3eff:fee0:3857/64 scope link

valid_lft forever preferred_lft forever

3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether fa:16:3e:16:6e:8c brd ff:ff:ff:ff:ff:ff

inet 192.168.1.31/24 brd 192.168.1.255 scope global noprefixroute eth1

valid_lft forever preferred_lft forever

inet6 fe80::f816:3eff:fe16:6e8c/64 scope link

valid_lft forever preferred_lft forever

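Request a virtual IP and bind it

Console –> Subnets –> the subnet that the eth1 IP belongs to –> IP address management –> Request virtual IP –> bind it to the eth1 NICs of instances lvs-0001 and lvs-0002 –> bind an elastic public IP

Configure policy routing for the virtual IP

Configure policy routing for the virtual IP on lvs-0001

#Configure policy routing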

[root@lvs-0001 ~]# ip route add default via 192.168.1.1 dev eth1 table 201

[root@lvs-0001 ~]# ip route add 192.168.1.0/24 dev eth1 table 201

[root@lvs-0001 ~]# ip rule add from 192.168.1.200 table 201

#Make the configuration persistent

[root@lvs-0001 ~]# vim /etc/rc.local

# wait for nics up

sleep 5

# Add v4 routes for eth0

ip route flush table 100

ip route add default via 192.168.0.1 dev eth0 table 100

ip route add 192.168.0.0/24 dev eth0 table 100

ip rule add from 192.168.0.30 table 100

# Add v4 routes for eth1

ip route flush table 200

ip route add default via 192.168.1.1 dev eth1 table 200

ip route add 192.168.1.0/24 dev eth1 table 200

ip rule add from 192.168.1.30 table 200

# Add v4 routes for eth1 vip

ip route flush table 201

ip route add default via 192.168.1.1 dev eth1 table 201

ip route add 192.168.1.0/24 dev eth1 table 201

ip rule add from 192.168.1.200 table 201

# Add v4 routes for cloud-init

ip rule add to 169.254.169.254 table main

#ping test: connectivity is normal

[root@lvs-0001 ~]# ping -I 192.168.1.200 192.168.0.20

PING 192.168.0.20 (192.168.0.20) from 192.168.1.200 : 56(84) bytes of data.

64 bytes from 192.168.0.20: icmp_seq=1 ttl=63 time=0.305 ms

64 bytes from 192.168.0.20: icmp_seq=2 ttl=63 time=0.287 ms

64 bytes from 192.168.0.20: icmp_seq=3 ttl=63 time=0.274 ms

--- 192.168.0.20 ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 1999ms

rtt min/avg/max/mdev = 0.274/0.288/0.305/0.023 ms

Configure policy routing for the virtual IP on lvs-0002

#Configure policy routing

[root@lvs-0002 ~]# ip route add default via 192.168.1.1 dev eth1 table 201

[root@lvs-0002 ~]# ip route add 192.168.1.0/24 dev eth1 table 201

[root@lvs-0002 ~]# ip rule add from 192.168.1.200 table 201

#Make the configuration persistent

[root@lvs-0002 ~]# vim /etc/rc.local

# wait for nics up

sleep 5

# Add v4 routes for eth0

ip route flush table 100

ip route add default via 192.168.0.1 dev eth0 table 100

ip route add 192.168.0.0/24 dev eth0 table 100

ip rule add from 192.168.0.31 table 100

# Add v4 routes for eth1

ip route flush table 200

ip route add default via 192.168.1.1 dev eth1 table 200

ip route add 192.168.1.0/24 dev eth1 table 200

ip rule add from 192.168.1.31 table 200

# Add v4 routes for eth1 vip

ip route flush table 201

ip route add default via 192.168.1.1 dev eth1 table 201

ip route add 192.168.1.0/24 dev eth1 table 201

ip rule add from 192.168.1.200 table 201

# Add v4 routes for cloud-init

ip rule add to 169.254.169.254 table main
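Both rc.local files above repeat the same commands for every table, and `ip rule add` appends a duplicate rule each time it is run again by hand. The sketch below wraps the sequence in one function; `add_policy_route`, `run` and the `DRY_RUN` variable are hypothetical names introduced here for illustration, not part of the original configuration.

```shell
# run: execute a command, or only print it when DRY_RUN=1 is set
# (hypothetical helper for previewing the commands)
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# add_policy_route <source ip> <gateway> <subnet> <device> <table>
# Rebuilds one routing table and the rule that selects it by source address.
add_policy_route() {
    local src="$1" gw="$2" subnet="$3" dev="$4" table="$5"
    # Empty the table first so routes from an earlier run do not linger.
    run ip route flush table "$table"
    run ip route add default via "$gw" dev "$dev" table "$table"
    run ip route add "$subnet" dev "$dev" table "$table"
    # Delete one matching rule if present, so the add below does not
    # leave a duplicate behind; a missing rule is not treated as an error.
    run ip rule del from "$src" table "$table" 2>/dev/null || true
    run ip rule add from "$src" table "$table"
}
```

With this in place, the VIP block in rc.local could shrink to `add_policy_route 192.168.1.200 192.168.1.1 192.168.1.0/24 eth1 201`, and `DRY_RUN=1 add_policy_route ...` prints the five commands without touching the routing tables. `ip rule show` and `ip route show table 201` can then be used to confirm the result. On systemd-based distributions such as CentOS 7, rc.local typically has to be executable before it runs at boot.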

#Stop the keepalived service on lvs-0001; the VIP floats to lvs-0002

[root@lvs-0001 ~]# systemctl stop keepalived.service

[root@lvs-0002 ~]# ip a s

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether fa:16:3e:e0:38:57 brd ff:ff:ff:ff:ff:ff

inet 192.168.0.31/24 brd 192.168.0.255 scope global noprefixroute dynamic eth0

valid_lft 85177sec preferred_lft 85177sec

inet6 fe80::f816:3eff:fee0:3857/64 scope link

valid_lft forever preferred_lft forever

3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether fa:16:3e:16:6e:8c brd ff:ff:ff:ff:ff:ff

inet 192.168.1.31/24 brd 192.168.1.255 scope global noprefixroute eth1

valid_lft forever preferred_lft forever

inet 192.168.1.200/24 scope global secondary eth1

valid_lft forever preferred_lft forever

inet6 fe80::f816:3eff:fe16:6e8c/64 scope link

valid_lft forever preferred_lft forever

[root@lvs-0002 ~]# ping -I 192.168.1.200 192.168.0.20

PING 192.168.0.20 (192.168.0.20) from 192.168.1.200 : 56(84) bytes of data.

64 bytes from 192.168.0.20: icmp_seq=1 ttl=64 time=0.747 ms

64 bytes from 192.168.0.20: icmp_seq=2 ttl=64 time=0.278 ms

--- 192.168.0.20 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 999ms

rtt min/avg/max/mdev = 0.278/0.512/0.747/0.235 ms
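The failover above was confirmed by reading the full `ip a s` output by eye. As a sketch of how the same check could be scripted, the function below reports whether an address appears in the one-line-per-address output of `ip -4 -o addr show`; `has_vip` is a hypothetical helper name, and the VIP 192.168.1.200 and interface eth1 are taken from this setup.

```shell
# has_vip <address>: read "ip -4 -o addr show" output on stdin and succeed
# if the address is configured (hypothetical helper).
# -F matches the string literally, so the dots are not regex wildcards;
# the trailing "/" keeps 192.168.1.20 from matching 192.168.1.200.
has_vip() {
    grep -qF "inet $1/"
}
```

On either node, `ip -4 -o addr show dev eth1 | has_vip 192.168.1.200 && echo "VIP is on this node"` prints the message only on the server that currently holds the VIP.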

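Unresolved issues:

The VIP can now reach the web servers. When the VIP is on the LVS master node, every LVS server can reach the web servers through the VIP. When the VIP is not on the master node, only the LVS server that currently holds the VIP can reach the web servers through it; the other LVS servers cannot access the VIP and so cannot reach the web servers. The web servers also cannot be reached through the elastic public IP bound to the VIP.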