Android Codec2 Video Framework: Input Buffers

Contents

- Input-side memory management

- Input packet buffer structure conversion

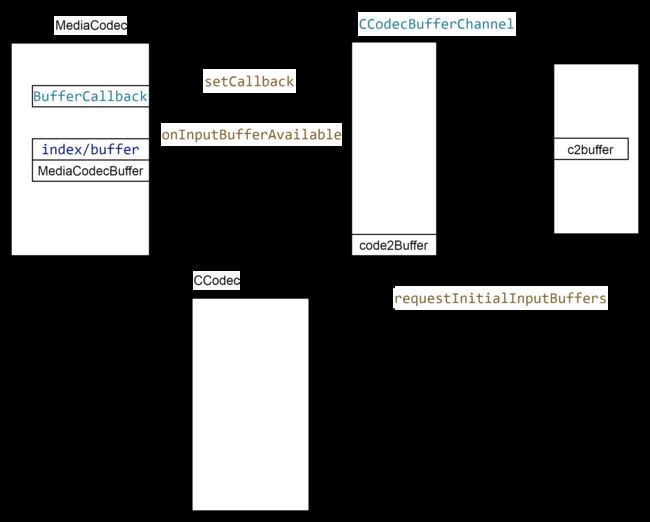

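The main flow is shown above. CCodecBufferChannel allocates the input buffers and reports them to MediaCodec through a callback. The application then obtains a buffer from MediaCodec and fills it with the data to be decoded, and CCodecBufferChannel passes that buffer on to the component module.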

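Input-side memory management

- Number of internal decoder input buffers and how they are obtained

The MediaCodec stack requests a set of input buffers up front for data awaiting decoding (4 by default).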

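    status_t CCodecBufferChannel::requestInitialInputBuffers() {
        if (mInputSurface) {
            // With an input surface there are no client-visible input buffers.
            return OK;
        }
        size_t numInputSlots = mInput.lock()->numSlots;
        struct ClientInputBuffer {
            size_t index;
            sp<MediaCodecBuffer> buffer;
            size_t capacity;
        };
        std::list<ClientInputBuffer> clientInputBuffers;
        {
            Mutexed<Input>::Locked input(mInput);
            // Request buffers until every input slot has one.
            while (clientInputBuffers.size() < numInputSlots) {
                ClientInputBuffer clientInputBuffer;
                if (!input->buffers->requestNewBuffer(&clientInputBuffer.index,
                                                      &clientInputBuffer.buffer)) {
                    break;
                }
                // ... (the buffer is then appended to clientInputBuffers)
            }
        }
        // ... (rest of the function omitted)
    }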

The default comes from the following definitions; numSlots is assigned in the constructor:

    constexpr size_t kSmoothnessFactor = 4;
    input->numSlots = kSmoothnessFactor;
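The request loop can be tried out in isolation with a small model. This is only a sketch: `ToyInputBuffers`, `requestInitial`, and the use of `std::vector<uint8_t>` as the buffer type are assumptions made for illustration and are not part of the Codec2 sources. Only the loop shape and `kSmoothnessFactor` follow the excerpt above.

```cpp
#include <cstddef>
#include <cstdint>
#include <list>
#include <memory>
#include <utility>
#include <vector>

using ToyBuffer = std::shared_ptr<std::vector<uint8_t>>;

// Hypothetical stand-in for the container behind input->buffers.
class ToyInputBuffers {
public:
    ToyInputBuffers(size_t maxSlots, size_t capacity)
        : mSlots(maxSlots), mCapacity(capacity) {}

    // Hands out the first free slot; returns false when no slot is left.
    bool requestNewBuffer(size_t *index, ToyBuffer *buffer) {
        for (size_t i = 0; i < mSlots.size(); ++i) {
            if (!mSlots[i]) {
                mSlots[i] = std::make_shared<std::vector<uint8_t>>(mCapacity);
                *index = i;
                *buffer = mSlots[i];
                return true;
            }
        }
        return false;
    }

private:
    std::vector<ToyBuffer> mSlots;  // the pool keeps a reference to every buffer
    size_t mCapacity;
};

struct ClientInputBuffer {
    size_t index;
    ToyBuffer buffer;
};

constexpr size_t kSmoothnessFactor = 4;

// Same loop shape as the excerpt: stop at numSlots or when the pool runs dry.
std::list<ClientInputBuffer> requestInitial(ToyInputBuffers &buffers, size_t numSlots) {
    std::list<ClientInputBuffer> out;
    while (out.size() < numSlots) {
        ClientInputBuffer b;
        if (!buffers.requestNewBuffer(&b.index, &b.buffer)) {
            break;
        }
        out.push_back(std::move(b));
    }
    return out;
}
```

Calling `requestInitial(pool, kSmoothnessFactor)` on a pool with at least four free slots yields four index/buffer pairs, and the pool still holds a reference to each buffer, which matches the point that the buffers stay stored on the channel side.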

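External clients can obtain these buffers in two ways:

- Call dequeueInputBuffer directly.

- Register a callback with MediaCodec, which is invoked whenever a buffer becomes available.

Both input and output can be handled this way. NuPlayer registers a callback with MediaCodec and is called back by it. When MediaCodec has an input buffer available, NuPlayer's handleAnInputBuffer reads data from the source into a new ABuffer. Once the data has been read, it is copied from the ABuffer into the MediaCodecBuffer.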

    sp<AMessage> reply = new AMessage(kWhatCodecNotify, this);
    mCodec->setCallback(reply);
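The difference between the two access modes can be illustrated with a small model. This is a sketch under assumptions: `ToyCodec` and all of its members are hypothetical and do not reproduce the MediaCodec API, which works with messages and status codes rather than `std::optional`.

```cpp
#include <cstddef>
#include <deque>
#include <functional>
#include <optional>
#include <utility>

// Hypothetical model of a codec front end; not the MediaCodec API.
class ToyCodec {
public:
    using Callback = std::function<void(size_t index)>;

    // Asynchronous mode: register a callback for available input buffers.
    void setCallback(Callback cb) { mCallback = std::move(cb); }

    // Called by the buffer channel when input slot `index` becomes available.
    void onInputBufferAvailable(size_t index) {
        if (mCallback) {
            mCallback(index);             // hand the index to the client at once
        } else {
            mAvailable.push_back(index);  // keep it until the client polls
        }
    }

    // Synchronous mode: poll for an available input buffer index.
    std::optional<size_t> dequeueInputBuffer() {
        if (mAvailable.empty()) {
            return std::nullopt;
        }
        size_t index = mAvailable.front();
        mAvailable.pop_front();
        return index;
    }

private:
    Callback mCallback;
    std::deque<size_t> mAvailable;
};
```

In this model a client that has set a callback never sees indices through `dequeueInputBuffer`, which reflects the NuPlayer style described above: it waits to be notified, then fills the buffer with the given index.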

- Requesting and storing input buffers

In CCodecBufferChannel, requestInitialInputBuffers calls input->buffers->requestNewBuffer to obtain an index and a buffer. These buffers are also kept in input->buffers. A callback then delivers them to MediaCodec as kWhatFillThisBuffer, and the handler's updateBuffers call stores the buffer in mPortBuffers and the index in mAvailPortBuffers. If a callback has been set, the index is returned to whoever registered it. If getInputBuffer is used instead, the buffer returned is the one held in CCodecBufferChannel's input->buffers.

This callback is triggered from two places:

- requestInitialInputBuffers, at start-up.

- feedInputBufferIfAvailable, which is itself called from onWorkDone, discardBuffer, renderOutputBuffer, onInputBufferDone, and others.

MediaCodec.cpp

    status_t MediaCodec::init(const AString &name) {
        // ...
        // Register the callback through which the channel reports buffers.
        mBufferChannel->setCallback(
                std::unique_ptr<CodecBase::BufferCallback>(
                        new BufferCallback(new AMessage(kWhatCodecNotify, this))));
        // ...
    }

CCodec.cpp

    void CCodec::start() {
        // ...
        (void)mChannel->requestInitialInputBuffers();
    }

MediaCodec.cpp

    void BufferCallback::onInputBufferAvailable(
            size_t index, const sp<MediaCodecBuffer> &buffer) {
        // Forward the index and buffer to MediaCodec as kWhatFillThisBuffer.
        sp<AMessage> notify(mNotify->dup());
        notify->setInt32("what", kWhatFillThisBuffer);
        notify->setSize("index", index);
        notify->setObject("buffer", buffer);
        notify->post();
    }

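- What happens when the allocated buffer is too small?

When NuPlayer copies the data to be decoded into the MediaCodec buffer, it checks whether the buffer taken from the codec is large enough and reports an error if it is not. The buffer size is itself configured from outside, usually from the maximum sample size learned while parsing the container. In the MP4 parsing code below, for example, the extractor reads the maximum sample size from the sample table and uses it to set the maximum input buffer size.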

    // NuPlayer: reject an access unit that does not fit into the codec buffer.
    bool NuPlayer::Decoder::onInputBufferFetched(const sp<AMessage> &msg) {
        // ...
        size_t bufferIx;
        CHECK(msg->findSize("buffer-ix", &bufferIx));
        CHECK_LT(bufferIx, mInputBuffers.size());
        sp<MediaCodecBuffer> codecBuffer = mInputBuffers[bufferIx];
        sp<ABuffer> buffer;
        bool hasBuffer = msg->findBuffer("buffer", &buffer);
        // ...
        if (needsCopy) {
            if (buffer->size() > codecBuffer->capacity()) {
                handleError(ERROR_BUFFER_TOO_SMALL);
                mDequeuedInputBuffers.push_back(bufferIx);
                return false;
            }
            // ...
        }
        // ...
    }

    // NuPlayer: the access unit read from the source is the ABuffer checked above.
    status_t NuPlayer::Decoder::fetchInputData(sp<AMessage> &reply) {
        // ...
        status_t err = mSource->dequeueAccessUnit(mIsAudio, &accessUnit);
        // ...
        reply->setBuffer("buffer", accessUnit);
        // ...
    }

    // Codec2: KEY_MAX_INPUT_SIZE, if present, overrides the default capacity.
    sp<Codec2Buffer> LinearInputBuffers::Alloc(
            const std::shared_ptr<C2BlockPool> &pool, const sp<AMessage> &format) {
        int32_t capacity = kLinearBufferSize;
        (void)format->findInt32(KEY_MAX_INPUT_SIZE, &capacity);
        // ...
    }

    // MP4 extractor: derive the maximum input size from the largest sample.
    size_t max_size;
    err = mLastTrack->sampleTable->getMaxSampleSize(&max_size);
    // ...
    if (max_size != 0) {
        if (max_size > SIZE_MAX - 10 * 2) {
            ALOGE("max sample size too big: %zu", max_size);
            return ERROR_MALFORMED;
        }
        AMediaFormat_setInt32(mLastTrack->meta,
                AMEDIAFORMAT_KEY_MAX_INPUT_SIZE, max_size + 10 * 2);
    }
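The two checks in these excerpts, the overflow guard when padding the maximum sample size and the capacity test before the copy, can be sketched as plain functions. `maxInputSizeFor`, `ToyCodecBuffer`, and `copyAccessUnit` are hypothetical names introduced for illustration. The sketch also ignores the case where the maximum sample size is 0.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <optional>
#include <vector>

// Mirrors the extractor excerpt: pad the largest sample by 10 * 2 bytes and
// reject sizes for which the addition would overflow size_t.
std::optional<size_t> maxInputSizeFor(size_t maxSampleSize) {
    constexpr size_t kPadding = 10 * 2;
    if (maxSampleSize > SIZE_MAX - kPadding) {
        return std::nullopt;  // the excerpt returns ERROR_MALFORMED here
    }
    return maxSampleSize + kPadding;
}

// Hypothetical codec-side buffer with a fixed capacity.
struct ToyCodecBuffer {
    explicit ToyCodecBuffer(size_t capacity) : storage(capacity), size(0) {}
    size_t capacity() const { return storage.size(); }
    std::vector<uint8_t> storage;
    size_t size;  // number of valid bytes after a copy
};

// Mirrors the NuPlayer check: refuse the copy if the access unit does not fit.
bool copyAccessUnit(const std::vector<uint8_t> &accessUnit, ToyCodecBuffer &dst) {
    if (accessUnit.size() > dst.capacity()) {
        return false;  // the excerpt reports ERROR_BUFFER_TOO_SMALL here
    }
    if (!accessUnit.empty()) {
        std::memcpy(dst.storage.data(), accessUnit.data(), accessUnit.size());
    }
    dst.size = accessUnit.size();
    return true;
}
```

A buffer sized with `maxInputSizeFor` accepts every sample up to the padded maximum, so the too-small error should only occur when the container reports a maximum that is lower than the samples it actually holds.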

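- PipelineWatcher throttles how fast external input buffers arrive

PipelineWatcher monitors the input buffers. When a buffer is sent to the decoder, mFramesInPipeline records the buffer, its frame index, and the queue time. After the component has finished processing it, onWorkDone removes the entry from the queue. The size of mFramesInPipeline may not exceed mInputDelay + mPipelineDelay + mOutputDelay + mSmoothnessFactor, which defaults to 4. In other words, at most 4 input buffers are held by default. Once that limit is reached, no further callbacks are made to ask the client for more data.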

    // Queueing input: register every work item with the watcher first.
    if (!items.empty()) {
        {
            Mutexed<PipelineWatcher>::Locked watcher(mPipelineWatcher);
            PipelineWatcher::Clock::time_point now = PipelineWatcher::Clock::now();
            for (const std::unique_ptr<C2Work> &work : items) {
                watcher->onWorkQueued(
                        work->input.ordinal.frameIndex.peeku(),
                        std::vector<std::shared_ptr<C2Buffer>>(work->input.buffers),
                        now);
            }
        }
        err = mComponent->queue(&items);
    }

    // Feeding input: offer new buffers to the client only while the pipeline has room.
    while (!mPipelineWatcher.lock()->pipelineFull()) {
        sp<MediaCodecBuffer> inBuffer;
        size_t index;
        {
            Mutexed<Input>::Locked input(mInput);
            numActiveSlots = input->buffers->numActiveSlots();
            ALOGD("active:%d, numslot:%d", (int)numActiveSlots, (int)input->numSlots);
            if (numActiveSlots >= input->numSlots) {
                break;
            }
            if (!input->buffers->requestNewBuffer(&index, &inBuffer)) {
                ALOGE("[%s] no new buffer available", mName);
                break;
            }
        }
        ALOGE("[%s] new input index = %zu [%p]", mName, index, inBuffer.get());
        mCallback->onInputBufferAvailable(index, inBuffer);
    }
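The throttling rule can be modelled in a few lines. This is a simplified sketch, not the PipelineWatcher source: `ToyPipelineWatcher` keeps only the frame index and queue time (the buffers themselves are left out), and the member names are borrowed from the description above.

```cpp
#include <chrono>
#include <cstddef>
#include <cstdint>
#include <map>

// Toy model of the pipeline bookkeeping; member names follow the text.
class ToyPipelineWatcher {
public:
    using Clock = std::chrono::steady_clock;

    ToyPipelineWatcher(size_t inputDelay, size_t pipelineDelay,
                       size_t outputDelay, size_t smoothnessFactor)
        : mInputDelay(inputDelay), mPipelineDelay(pipelineDelay),
          mOutputDelay(outputDelay), mSmoothnessFactor(smoothnessFactor) {}

    // Record a frame when its work item is queued to the component.
    void onWorkQueued(uint64_t frameIndex, Clock::time_point queuedAt) {
        mFramesInPipeline[frameIndex] = queuedAt;
    }

    // Forget the frame once the component reports the work as done.
    void onWorkDone(uint64_t frameIndex) { mFramesInPipeline.erase(frameIndex); }

    // True when no further input should be requested from the client.
    bool pipelineFull() const {
        return mFramesInPipeline.size() >=
               mInputDelay + mPipelineDelay + mOutputDelay + mSmoothnessFactor;
    }

private:
    size_t mInputDelay;
    size_t mPipelineDelay;
    size_t mOutputDelay;
    size_t mSmoothnessFactor;
    std::map<uint64_t, Clock::time_point> mFramesInPipeline;
};
```

With all three delays at 0 and a smoothness factor of 4, the fourth queued frame makes `pipelineFull()` return true, and a single `onWorkDone` makes room again, which is the point at which a feed loop like the one above would resume offering input buffers.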

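Input packet buffer structure conversion

- MediaCodec layer

ABuffer (NuPlayer) -> MediaCodecBuffer -> C2Buffer

- NuPlayer: copies the data to be decoded into the Codec2Buffer (whose base class is MediaCodecBuffer) requested earlier by requestInitialInputBuffers.

- MediaCodec: NuPlayer passes the filled buffer to MediaCodec with queueInputBuffer. To hand the buffer to the underlying component, it has to be converted into a C2Buffer. That C2Buffer is wrapped in a C2Work, which is queued to the component.

- Component layer: the call lands in SimpleC2Component through queue_nb. The SimpleC2Component implementation posts a process message to its looper, which runs processQueue. processQueue in turn calls the process method of the concrete decoder component.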

    std::unique_ptr<C2Work> work(new C2Work);
    work->input.ordinal.timestamp = timeUs;
    work->input.ordinal.frameIndex = mFrameIndex++;
    // WORKAROUND: until codecs support handling work after EOS and max output sizing, use timestamp
    // manipulation to achieve image encoding via video codec, and to constrain encoded output.
    // Keep client timestamp in customOrdinal
    work->input.ordinal.customOrdinal = timeUs;
    work->input.buffers.clear();
    sp<Codec2Buffer> copy;
    bool usesFrameReassembler = false;
    if (buffer->size() > 0u) {
        Mutexed<Input>::Locked input(mInput);
        std::shared_ptr<C2Buffer> c2buffer;
        // Convert the client-facing MediaCodecBuffer into the underlying C2Buffer.
        if (!input->buffers->releaseBuffer(buffer, &c2buffer, false)) {
            return -ENOENT;
        }
        // ...
    }
    // ...
    err = mComponent->queue(&items);
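The hand-off from a filled client buffer to the component can be sketched with toy types. Everything here is an assumption made for illustration: `ToyC2Buffer`, `ToyWork`, `makeWork`, and `ToyComponent` are hypothetical, and `processQueue` is called directly instead of being driven by a looper thread as in SimpleC2Component.

```cpp
#include <cstddef>
#include <cstdint>
#include <deque>
#include <memory>
#include <utility>
#include <vector>

// Hypothetical stand-ins for C2Buffer and C2Work.
struct ToyC2Buffer {
    std::vector<uint8_t> data;
};

struct ToyWork {
    int64_t timestampUs = 0;
    uint64_t frameIndex = 0;
    std::vector<std::shared_ptr<ToyC2Buffer>> buffers;
};

// Wraps a filled client buffer into a work item; an empty buffer yields
// a work item without input buffers, as in the excerpt above.
std::unique_ptr<ToyWork> makeWork(const std::vector<uint8_t> &clientBuffer,
                                  int64_t timeUs, uint64_t frameIndex) {
    auto work = std::make_unique<ToyWork>();
    work->timestampUs = timeUs;
    work->frameIndex = frameIndex;
    if (!clientBuffer.empty()) {
        work->buffers.push_back(
                std::make_shared<ToyC2Buffer>(ToyC2Buffer{clientBuffer}));
    }
    return work;
}

// Toy component: queue_nb only enqueues, processQueue does the actual work.
class ToyComponent {
public:
    virtual ~ToyComponent() = default;

    void queue_nb(std::unique_ptr<ToyWork> work) {
        mQueue.push_back(std::move(work));
    }

    // Processes one queued work item; returns false if the queue is empty.
    bool processQueue() {
        if (mQueue.empty()) {
            return false;
        }
        std::unique_ptr<ToyWork> work = std::move(mQueue.front());
        mQueue.pop_front();
        process(*work);
        return true;
    }

    size_t bytesProcessed() const { return mBytes; }

protected:
    // A concrete decoder would override this with real decoding.
    virtual void process(const ToyWork &work) {
        for (const auto &b : work.buffers) {
            mBytes += b->data.size();
        }
    }

private:
    std::deque<std::unique_ptr<ToyWork>> mQueue;
    size_t mBytes = 0;
};
```

Separating `queue_nb` from `processQueue` keeps the caller from blocking on decoding: the channel only enqueues, and the component consumes work at its own pace.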