AlphaFold-Multimer installation and usage (a hand-holding tutorial)

Linux users can follow this guide as-is; Windows users can use it as a reference.

1. Required packages (highlighted in red)

```bash
(mm) $ conda list
```

Output of `conda list` in the `mm` environment. Packages whose channel is `main` were installed from the BFSU (Beijing Foreign Studies University) Open Source Mirror of `anaconda/pkgs/main`.

| Name | Version | Build | Channel |
|---|---|---|---|
| _libgcc_mutex | 0.1 | main | main |
| _openmp_mutex | 5.1 | 1_gnu | main |
| absl-py | 0.15.0 | pypi_0 | pypi |
| astunparse | 1.6.3 | pypi_0 | pypi |
| biopython | 1.79 | pypi_0 | pypi |
| blas | 1.0 | mkl | main |
| brotlipy | 0.7.0 | py38h27cfd23_1003 | main |
| bzip2 | 1.0.8 | h7b6447c_0 | main |
| ca-certificates | 2023.7.22 | hbcca054_0 | conda-forge |
| cachetools | 5.3.1 | pypi_0 | pypi |
| certifi | 2023.7.22 | pyhd8ed1ab_0 | conda-forge |
| cffi | 1.15.0 | py38h7f8727e_0 | main |
| charset-normalizer | 2.0.4 | pyhd3eb1b0_0 | main |
| clang | 5.0 | pypi_0 | pypi |
| contextlib2 | 21.6.0 | pypi_0 | pypi |
| cryptography | 41.0.2 | py38h774aba0_0 | main |
| cuda-cudart | 11.8.89 | 0 | nvidia |
| cuda-cupti | 11.8.87 | 0 | nvidia |
| cuda-libraries | 11.8.0 | 0 | nvidia |
| cuda-nvrtc | 11.8.89 | 0 | nvidia |
| cuda-nvtx | 11.8.86 | 0 | nvidia |
| cuda-runtime | 11.8.0 | 0 | nvidia |
| cudatoolkit | 11.8.0 | h6a678d5_0 | main |
| cudnn | 8.9.2.26 | cuda11_0 | main |
| dm-haiku | 0.0.4 | pypi_0 | pypi |
| dm-tree | 0.1.6 | pypi_0 | pypi |
| ffmpeg | 4.3 | hf484d3e_0 | pytorch |
| fftw | 3.3.9 | h27cfd23_1 | main |
| filelock | 3.9.0 | py38h06a4308_0 | main |
| flatbuffers | 1.12 | pypi_0 | pypi |
| freetype | 2.12.1 | h4a9f257_0 | main |
| gast | 0.4.0 | pypi_0 | pypi |
| giflib | 5.2.1 | h5eee18b_3 | main |
| gmp | 6.2.1 | h295c915_3 | main |
| gmpy2 | 2.1.2 | py38heeb90bb_0 | main |
| gnutls | 3.6.15 | he1e5248_0 | main |
| google-auth | 2.22.0 | pypi_0 | pypi |
| google-auth-oauthlib | 0.4.6 | pypi_0 | pypi |
| google-pasta | 0.2.0 | pypi_0 | pypi |
| grpcio | 1.34.1 | pypi_0 | pypi |
| h5py | 3.1.0 | pypi_0 | pypi |
| hhsuite | 3.3.0 | py38pl5321h8ded8fe_5 | bioconda |
| hmmer | 3.3.2 | h87f3376_2 | bioconda |
| idna | 3.4 | py38h06a4308_0 | main |
| immutabledict | 2.0.0 | pypi_0 | pypi |
| importlib-metadata | 6.8.0 | pypi_0 | pypi |
| intel-openmp | 2021.4.0 | h06a4308_3561 | main |
| jax | 0.2.14 | pypi_0 | pypi |
| jaxlib | 0.1.69+cuda111 | pypi_0 | pypi |
| jinja2 | 3.1.2 | py38h06a4308_0 | main |
| jpeg | 9e | h5eee18b_1 | main |
| kalign2 | 2.04 | hec16e2b_3 | bioconda |
| keras | 2.13.1 | pypi_0 | pypi |
| keras-nightly | 2.5.0.dev2021032900 | pypi_0 | pypi |
| keras-preprocessing | 1.1.2 | pypi_0 | pypi |
| lame | 3.100 | h7b6447c_0 | main |
| lcms2 | 2.12 | h3be6417_0 | main |
| lerc | 3.0 | h295c915_0 | main |
| libblas | 3.9.0 | 12_linux64_mkl | conda-forge |
| libcblas | 3.9.0 | 12_linux64_mkl | conda-forge |
| libcublas | 11.11.3.6 | 0 | nvidia |
| libcufft | 10.9.0.58 | 0 | nvidia |
| libcufile | 1.7.2.10 | 0 | nvidia |
| libcurand | 10.3.3.141 | 0 | nvidia |
| libcusolver | 11.4.1.48 | 0 | nvidia |
| libcusparse | 11.7.5.86 | 0 | nvidia |
| libdeflate | 1.17 | h5eee18b_0 | main |
| libedit | 3.1.20221030 | h5eee18b_0 | main |
| libffi | 3.2.1 | hf484d3e_1007 | main |
| libgcc-ng | 11.2.0 | h1234567_1 | main |
| libgfortran-ng | 13.1.0 | h69a702a_0 | conda-forge |
| libgfortran5 | 13.1.0 | h15d22d2_0 | conda-forge |
| libgomp | 11.2.0 | h1234567_1 | main |
| libiconv | 1.16 | h7f8727e_2 | main |
| libidn2 | 2.3.4 | h5eee18b_0 | main |
| liblapack | 3.9.0 | 12_linux64_mkl | conda-forge |
| libnpp | 11.8.0.86 | 0 | nvidia |
| libnsl | 2.0.0 | h5eee18b_0 | main |
| libnvjpeg | 11.9.0.86 | 0 | nvidia |
| libopenblas | 0.3.20 | pthreads_h78a6416_0 | conda-forge |
| libpng | 1.6.39 | h5eee18b_0 | main |
| libprotobuf | 3.20.3 | he621ea3_0 | main |
| libstdcxx-ng | 11.2.0 | h1234567_1 | main |
| libtasn1 | 4.19.0 | h5eee18b_0 | main |
| libtiff | 4.5.1 | h6a678d5_0 | main |
| libunistring | 0.9.10 | h27cfd23_0 | main |
| libwebp | 1.2.4 | h11a3e52_1 | main |
| libwebp-base | 1.2.4 | h5eee18b_1 | main |
| lz4-c | 1.9.4 | h6a678d5_0 | main |
| markdown | 3.4.4 | pypi_0 | pypi |
| markupsafe | 2.1.1 | py38h7f8727e_0 | main |
| mkl | 2021.4.0 | h06a4308_640 | main |
| mkl-service | 2.4.0 | py38h7f8727e_0 | main |
| mkl_fft | 1.3.1 | py38hd3c417c_0 | main |
| mkl_random | 1.2.2 | py38h51133e4_0 | main |
| ml-collections | 0.1.0 | pypi_0 | pypi |
| mpc | 1.1.0 | h10f8cd9_1 | main |
| mpfr | 4.0.2 | hb69a4c5_1 | main |
| mpmath | 1.3.0 | py38h06a4308_0 | main |
| ncurses | 6.4 | h6a678d5_0 | main |
| nettle | 3.7.3 | hbbd107a_1 | main |
| networkx | 3.1 | py38h06a4308_0 | main |
| ninja | 1.10.2 | h06a4308_5 | main |
| ninja-base | 1.10.2 | hd09550d_5 | main |
| numpy | 1.21.0 | py38h9894fe3_0 | conda-forge |
| oauthlib | 3.2.2 | pypi_0 | pypi |
| ocl-icd | 2.3.1 | h7f98852_0 | conda-forge |
| ocl-icd-system | 1.0.0 | 1 | conda-forge |
| openh264 | 2.1.1 | h4ff587b_0 | main |
| openmm | 7.5.1 | py38ha082873_1 | conda-forge |
| openssl | 1.1.1v | h7f8727e_0 | main |
| opt-einsum | 3.3.0 | pypi_0 | pypi |
| pandas | 1.3.4 | pypi_0 | pypi |
| pdbfixer | 1.7 | pyhd3deb0d_0 | conda-forge |
| perl | 5.32.1 | 2_h7f98852_perl5 | conda-forge |
| pillow | 9.4.0 | py38h6a678d5_0 | main |
| pip | 23.2.1 | py38h06a4308_0 | main |
| protobuf | 3.20.3 | pypi_0 | pypi |
| pyasn1 | 0.5.0 | pypi_0 | pypi |
| pyasn1-modules | 0.3.0 | pypi_0 | pypi |
| pycparser | 2.21 | pyhd3eb1b0_0 | main |
| pyopenssl | 23.2.0 | py38h06a4308_0 | main |
| pysocks | 1.7.1 | py38h06a4308_0 | main |
| python | 3.8.0 | h0371630_2 | main |
| python-dateutil | 2.8.2 | pypi_0 | pypi |
| python_abi | 3.8 | 2_cp38 | conda-forge |
| pytorch | 2.0.1 | py3.8_cuda11.8_cudnn8.7.0_0 | pytorch |
| pytorch-cuda | 11.8 | h7e8668a_5 | pytorch |
| pytorch-mutex | 1.0 | cuda | pytorch |
| pytz | 2023.3.post1 | pypi_0 | pypi |
| pyyaml | 6.0.1 | pypi_0 | pypi |
| readline | 7.0 | h7b6447c_5 | main |
| requests | 2.31.0 | py38h06a4308_0 | main |
| requests-oauthlib | 1.3.1 | pypi_0 | pypi |
| rsa | 4.9 | pypi_0 | pypi |
| scipy | 1.7.0 | pypi_0 | pypi |
| setuptools | 68.0.0 | py38h06a4308_0 | main |
| six | 1.15.0 | pypi_0 | pypi |
| sqlite | 3.33.0 | h62c20be_0 | main |
| sympy | 1.11.1 | py38h06a4308_0 | main |
| tabulate | 0.9.0 | pypi_0 | pypi |
| tensorboard | 2.11.2 | pypi_0 | pypi |
| tensorboard-data-server | 0.6.1 | pypi_0 | pypi |
| tensorboard-plugin-wit | 1.8.1 | pypi_0 | pypi |
| tensorflow | 2.5.0 | pypi_0 | pypi |
| tensorflow-estimator | 2.5.0 | pypi_0 | pypi |
| termcolor | 1.1.0 | pypi_0 | pypi |
| tk | 8.6.12 | h1ccaba5_0 | main |
| torchaudio | 2.0.2 | py38_cu118 | pytorch |
| torchtriton | 2.0.0 | py38 | pytorch |
| torchvision | 0.15.2 | py38_cu118 | pytorch |
| typing-extensions | 3.7.4.3 | pypi_0 | pypi |
| typing_extensions | 4.7.1 | py38h06a4308_0 | main |
| urllib3 | 1.26.16 | py38h06a4308_0 | main |
| werkzeug | 2.3.7 | pypi_0 | pypi |
| wheel | 0.38.4 | py38h06a4308_0 | main |
| wrapt | 1.12.1 | pypi_0 | pypi |
| xz | 5.4.2 | h5eee18b_0 | main |
| zipp | 3.16.2 | pypi_0 | pypi |
| zlib | 1.2.13 | h5eee18b_0 | main |
| zstd | 1.5.5 | hc292b87_0 | main |

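Note: the packages highlighted in red are required, together with the versions shown. Pay close attention to the installation order, which is described in the next steps, and take it one step at a time (get the order wrong and you will be reinstalling over and over).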

2. System configuration

Before installing anything, check the system configuration; then build the conda virtual environment.

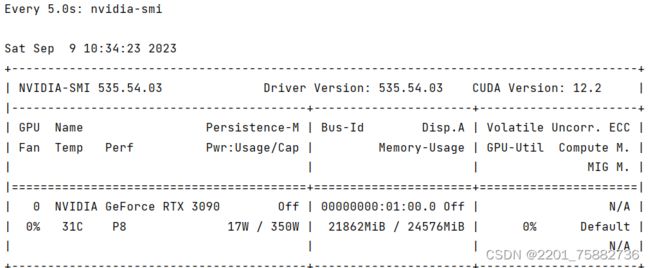

2.1 CUDA version

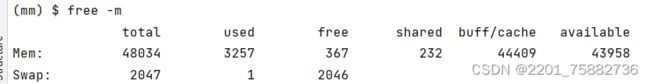

```bash
nvidia-smi
```

2.2 Memory

```bash
free -m
```

2.3 cuDNN version

```bash
dpkg -l | grep cudnn
```
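The three checks above can also be collected in one Python script. The sketch below is a hypothetical helper, not part of AlphaFold; it assumes `nvidia-smi` prints a header containing `CUDA Version: X.Y` and that `free -m` prints a `Mem:` row whose first number is the total memory in MiB.

```python
import re
import subprocess


def parse_cuda_version(nvidia_smi_text):
    """Return the driver's CUDA version as (major, minor), or None if not found."""
    match = re.search(r"CUDA Version:\s*(\d+)\.(\d+)", nvidia_smi_text)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def parse_total_memory_mib(free_m_text):
    """Return total RAM in MiB from the 'Mem:' row of `free -m`, or None."""
    for line in free_m_text.splitlines():
        if line.startswith("Mem:"):
            return int(line.split()[1])
    return None


def run(cmd):
    """Run a shell command and return its stdout (empty if the command is missing)."""
    try:
        return subprocess.run(cmd, shell=True, capture_output=True, text=True).stdout
    except OSError:
        return ""


if __name__ == "__main__":
    print("CUDA (driver):", parse_cuda_version(run("nvidia-smi")))
    print("Total RAM (MiB):", parse_total_memory_mib(run("free -m")))
    print(run("dpkg -l | grep cudnn") or "no cudnn package found via dpkg")
```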

3. Create a Python 3.8 virtual environment with conda

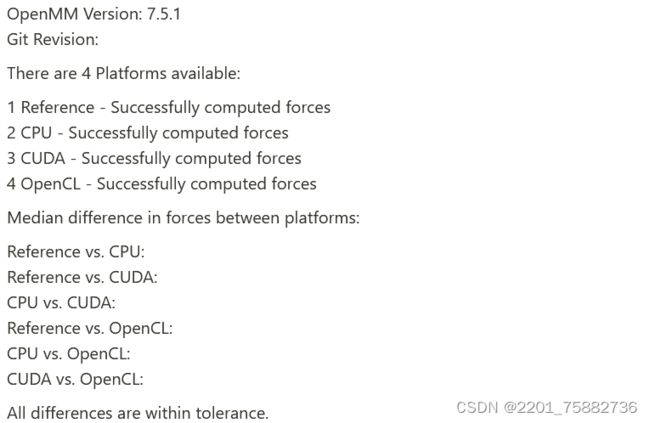

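3.1 First install openmm and cudatoolkit, then check that the cudatoolkit version inside the virtual environment is compatible with the system CUDA version:

3.1.1 `conda install cudatoolkit==<version>`; I installed 11.8.0. The version must not be higher than the system CUDA version (my system has CUDA 12.2). Use `conda search cudatoolkit` to list the cudatoolkit versions available in the conda repository.

3.1.2 `conda install -c conda-forge openmm=7.5.1` (AlphaFold requires OpenMM 7.5.1; other versions cause errors).

3.1.3 Check whether cudatoolkit is compatible by running `python -m simtk.testInstallation`. Running `python -m openmm.testInstallation` instead may fail because the `openmm` module cannot be found (OpenMM 7.5.1 still ships its Python API under the `simtk` namespace). If the CUDA platform reports that it computed forces successfully, cudatoolkit is compatible with the system CUDA version.

If the output contains `CUDA - Error computing forces with CUDA platform`, the cudatoolkit version is wrong. Check the CUDA Version with `nvidia-smi`, then run `conda install -c conda-forge cudatoolkit=<matching version>` to fix it. Everything also runs without CUDA, only much more slowly. Source: "(Pitfall guide) AlphaFold non-Docker installation guide", Zqinstarking's CSDN blog.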
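The version rule in 3.1.1 and the platform check in 3.1.3 can be sketched in Python as follows. `toolkit_fits_driver` and `openmm_platforms` are hypothetical helper names; the import fallback assumes OpenMM 7.5.x exposes its API as `simtk.openmm` and newer releases as `openmm`. A `CUDA` entry in the platform list is only a quick first check; `python -m simtk.testInstallation` remains the real test, because it actually computes forces.

```python
def version_tuple(version):
    """'11.8.0' -> (11, 8); only major.minor matter for this check."""
    parts = version.split(".")
    major = int(parts[0])
    minor = int(parts[1]) if len(parts) > 1 else 0
    return major, minor


def toolkit_fits_driver(toolkit_version, driver_cuda_version):
    """True if the conda cudatoolkit is not newer than the driver's CUDA version."""
    return version_tuple(toolkit_version) <= version_tuple(driver_cuda_version)


def openmm_platforms():
    """List OpenMM platform names (e.g. 'Reference', 'CPU', 'CUDA'); [] if OpenMM is missing."""
    try:
        from simtk import openmm  # OpenMM 7.5.x layout
    except ImportError:
        try:
            import openmm  # layout of newer OpenMM releases
        except ImportError:
            return []
    platform = openmm.Platform
    return [platform.getPlatform(i).getName() for i in range(platform.getNumPlatforms())]


if __name__ == "__main__":
    print(toolkit_fits_driver("11.8.0", "12.2"))  # the author's setup -> True
    print(openmm_platforms())
```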

3.2 Installing PyTorch

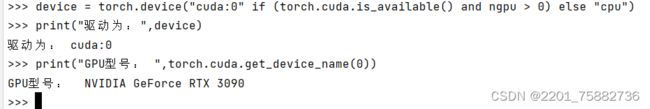

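Install from the official "Previous PyTorch Versions" page, choosing the build that matches your cudatoolkit version. Then check whether torch can run on the GPU (related link: https://blog.csdn.net/weixin_42788078/article/details/103116903); if it can, GPU information is returned. In the Python REPL:

```python
import torch

print(torch.__version__)
print(torch.cuda.is_available())  # True when the GPU build works
```

or (also in the Python REPL):

```python
import torch

ngpu = 1  # number of GPUs to use; set to 0 to force the CPU
device = torch.device("cuda:0" if (torch.cuda.is_available() and ngpu > 0) else "cpu")
print("Device:", device)
print("GPU model:", torch.cuda.get_device_name(0))
```

If the check fails after installing with conda, run `conda list` and see whether pytorch is the CPU build (the GPU build is highlighted in red in the package list). If it is the CPU build, uninstall it. Reinstalling with pip is often suggested online, but the download breaks easily; uninstalling and reinstalling with conda will very likely give you the GPU build (╮(╯▽╰)╭).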
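To tell the two PyTorch builds apart, look at the Build column of `conda list pytorch`: in the package list in section 1 the GPU build is `py3.8_cuda11.8_cudnn8.7.0_0` and `pytorch-mutex` has build `cuda`. The sketch below classifies such a build string and also reads `torch.version.cuda`, which is `None` for CPU-only builds. It assumes CPU builds carry `cpu` in their build string (for example `py3.8_cpu_0`); the function names are hypothetical.

```python
def pytorch_build_kind(build_string):
    """Classify a conda pytorch build string as 'cuda', 'cpu' or 'unknown'."""
    build = build_string.lower()
    if "cuda" in build:
        return "cuda"
    if "cpu" in build:
        return "cpu"
    return "unknown"


def runtime_cuda_version():
    """CUDA version torch was built with; None for CPU builds or if torch is missing."""
    try:
        import torch
    except ImportError:
        return None
    return torch.version.cuda


if __name__ == "__main__":
    print(pytorch_build_kind("py3.8_cuda11.8_cudnn8.7.0_0"))
    print(runtime_cuda_version())
```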

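3.3 Installing TensorFlow

Install:

```bash
pip install --upgrade tensorflow==2.5.0
```

To check whether TensorFlow supports the GPU, enter the following in the Python REPL:

```python
import tensorflow as tf

print(tf.test.is_built_with_cuda())
print(tf.test.is_gpu_available())  # deprecated in TF 2.x, but still available in 2.5
print(tf.config.list_physical_devices('GPU'))
```

The check succeeds if the first two lines print `True` and the last one lists at least one GPU device.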
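Since `tf.test.is_gpu_available()` is deprecated, a sketch of the same check built only on `tf.config.list_physical_devices` and `tf.test.is_built_with_cuda` might look like this. `tensorflow_gpu_report` is a hypothetical name; it returns `{"installed": False}` when TensorFlow cannot be imported.

```python
def tensorflow_gpu_report():
    """Summarise TensorFlow's GPU support without the deprecated is_gpu_available()."""
    try:
        import tensorflow as tf
    except ImportError:
        return {"installed": False}
    gpus = tf.config.list_physical_devices("GPU")
    return {
        "installed": True,
        "version": tf.__version__,
        "built_with_cuda": tf.test.is_built_with_cuda(),
        "gpus": [gpu.name for gpu in gpus],  # e.g. '/physical_device:GPU:0'
    }


if __name__ == "__main__":
    report = tensorflow_gpu_report()
    print(report)
    if report.get("installed") and not report["gpus"]:
        print("TensorFlow sees no GPU: check the CUDA/cuDNN libraries it tries to load.")
```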

3.4 Installing the remaining packages

Install the packages below; add others later if something fails at runtime.

```bash
conda install -y -c conda-forge pdbfixer==1.7
conda install -y -c bioconda hmmer==3.3.2 hhsuite==3.3.0 kalign2==2.04
pip install absl-py==0.13.0 biopython==1.79 dm-haiku==0.0.4 dm-tree==0.1.6 immutabledict==2.0.0 jax==0.2.14 ml-collections==0.1.0 scipy==1.7.0 pandas==1.3.4
pip install --upgrade jax==0.2.14 jaxlib==0.1.69+cuda111 -f https://storage.googleapis.com/jax-releases/jax_cuda_releases.html
```
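Later installs can silently move a pinned version (in the package list in section 1, for example, absl-py ends up at 0.15.0 rather than the 0.13.0 pinned above). The sketch below compares the pip pins from this step with what `importlib.metadata` (Python 3.8+) reports; `PINS`, `installed_version` and `check_pins` are hypothetical names, and only the pip pins are checked.

```python
from importlib import metadata

# Versions pinned by the pip commands in 3.4.
PINS = {
    "absl-py": "0.13.0",
    "biopython": "1.79",
    "dm-haiku": "0.0.4",
    "dm-tree": "0.1.6",
    "immutabledict": "2.0.0",
    "jax": "0.2.14",
    "jaxlib": "0.1.69+cuda111",
    "ml-collections": "0.1.0",
    "scipy": "1.7.0",
    "pandas": "1.3.4",
}


def installed_version(name):
    """Installed distribution version, or None if the package is not installed."""
    try:
        return metadata.version(name)
    except metadata.PackageNotFoundError:
        return None


def check_pins(pins, get_version=installed_version):
    """Return {package: (wanted, found)} for every package whose version differs."""
    mismatches = {}
    for name, wanted in pins.items():
        found = get_version(name)
        if found != wanted:
            mismatches[name] = (wanted, found)
    return mismatches


if __name__ == "__main__":
    for name, (wanted, found) in check_pins(PINS).items():
        print(f"{name}: wanted {wanted}, found {found}")
```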

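3.5 Installing JAX

JAX (installed by the last command in 3.4) needs to be tested. To check whether JAX can run on the GPU, enter the following in the Python REPL:

```python
import torch
import jax

print(torch.cuda.is_available())  # call the function; without () this prints the function object
print(jax.devices()[0])
```

On success the output is:

```text
True
gpu:0
```

To check whether jax.numpy runs on the GPU, enter the following in the Python REPL:

```python
import jax
import jax.numpy as jnp
from jax import random

key = random.PRNGKey(0)
x = random.normal(key, (1000, 1000))
y = jnp.dot(x, x.T).block_until_ready()  # wait until the computation has finished on the device
print(y.shape, jax.devices()[0])
```

If this finishes without errors and prints `(1000, 1000) gpu:0`, jax.numpy is running on the GPU.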
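If `jax.devices()` reports a CPU device instead, one thing to check is jaxlib's CUDA build tag. The wheel installed in 3.4 is `jaxlib==0.1.69+cuda111`, whose local version tag names the CUDA release it was built for. The sketch below parses that tag, assuming the naming used here in which the last digit is the minor version (`cuda111` = 11.1, `cuda102` = 10.2); other tag formats are returned as `None`. The helper names are hypothetical.

```python
import re


def jaxlib_cuda_tag(jaxlib_version):
    """'0.1.69+cuda111' -> (11, 1); None if there is no +cudaNNN tag."""
    match = re.search(r"\+cuda(\d+)$", jaxlib_version)
    if match is None:
        return None
    digits = match.group(1)
    return int(digits[:-1]), int(digits[-1])


def jaxlib_fits_driver(jaxlib_version, driver_cuda):
    """True if the wheel's CUDA build is not newer than the driver's (major, minor)."""
    tag = jaxlib_cuda_tag(jaxlib_version)
    return tag is not None and tag <= driver_cuda


if __name__ == "__main__":
    print(jaxlib_cuda_tag("0.1.69+cuda111"))
    print(jaxlib_fits_driver("0.1.69+cuda111", (12, 2)))
```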

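4. Using AlphaFold-Multimer

Note: this section assumes AlphaFold is already installed and all of its databases have been downloaded; here we only update it.

The databases AlphaFold-Multimer needs can be downloaded from the official AlphaFold repository, https://github.com/google-deepmind/alphafold. Use the latest databases and download all of them; after that you only need to replace the model parameter files, described in the next step.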

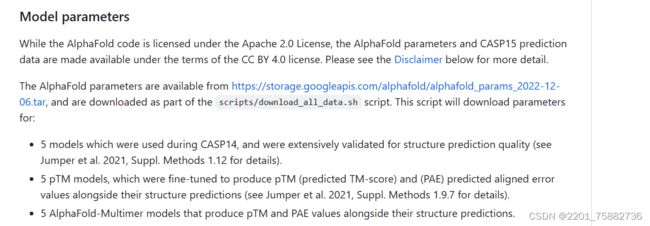
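Before running anything, it can help to confirm that the database directory has the layout the commands in section 5 expect. The sketch below uses the subdirectory names from the author's paths (`uniref90`, `mgnify`, `pdb_mmcif`, `bfd`, `uniprot`, `pdb_seqres`, `uniref30`, `small_bfd`, plus `params` from 4.1); your layout may differ, and `missing_database_dirs` is a hypothetical helper.

```python
from pathlib import Path

# Subdirectories used by the full_dbs command in section 5 (author's layout; adjust to yours).
FULL_DBS_DIRS = ["params", "uniref90", "mgnify", "pdb_mmcif", "bfd", "uniprot", "pdb_seqres", "uniref30"]
# reduced_dbs replaces bfd and uniref30 with small_bfd.
REDUCED_DBS_DIRS = ["params", "uniref90", "mgnify", "pdb_mmcif", "small_bfd", "uniprot", "pdb_seqres"]


def missing_database_dirs(data_dir, preset="full_dbs"):
    """Return the expected subdirectory names that do not exist under data_dir."""
    expected = FULL_DBS_DIRS if preset == "full_dbs" else REDUCED_DBS_DIRS
    root = Path(data_dir)
    return [name for name in expected if not (root / name).is_dir()]


if __name__ == "__main__":
    print(missing_database_dirs("/path/to/AlphaFoldDataRes"))
```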

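4.1 Update the model parameter files

Download the model parameters from https://github.com/jcheongs/alphafold-multimer or https://github.com/google-deepmind/alphafold:

```bash
# Download the model parameters
wget https://storage.googleapis.com/alphafold/alphafold_params_2022-12-06.tar
# Extract the archive
tar -xf alphafold_params_2022-12-06.tar
```

After extraction: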

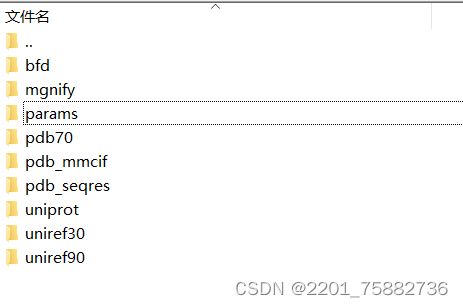

Put the extracted files into the database storage directory as shown below; the params folder holds the model parameters:

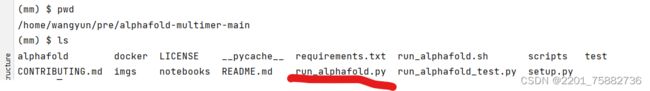
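The extraction and a quick check of the result can also be done in Python. The sketch below extracts the archive into `<data_dir>/params` and lists the multimer weights; it assumes the multimer files follow the pattern `params_model_*_multimer*.npz` (for example `params_model_1_multimer_v3.npz`), so check the names in your own archive. The helper names are hypothetical.

```python
import tarfile
from pathlib import Path


def extract_params(tar_path, data_dir):
    """Extract the parameter archive into <data_dir>/params and return that directory."""
    params_dir = Path(data_dir) / "params"
    params_dir.mkdir(parents=True, exist_ok=True)
    with tarfile.open(tar_path) as archive:
        archive.extractall(params_dir)  # the archive is trusted (downloaded from the official URL)
    return params_dir


def multimer_param_files(params_dir):
    """Sorted names of the multimer weight files in params_dir."""
    return sorted(p.name for p in Path(params_dir).glob("params_model_*_multimer*.npz"))


if __name__ == "__main__":
    out = extract_params("alphafold_params_2022-12-06.tar", "/path/to/AlphaFoldDataRes")
    print(multimer_param_files(out))
```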

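4.2 Getting run_alphafold.py for AlphaFold-Multimer

The run_alphafold.py of alphafold-multimer is not the same as AlphaFold's: its code has been updated.

Download it from the alphafold-multimer repository, https://github.com/jcheongs/alphafold-multimer, then extract it:

```bash
unzip <downloaded archive>
```

After extracting, enter the resulting directory and verify that it runs, as described in the next step.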

4.3 Verify that AlphaFold-Multimer runs

Run:

```bash
python run_alphafold.py --help
```

Output like the following means it works:

```text
2023-09-11 15:12:48.147643: I tensorflow/stream_executor/platform/default/dso_loader.cc:53] Successfully opened dynamic library libcudart.so.11.0
2023-09-11 15:12:48.588580: I tensorflow/stream_executor/platform/default/dso_loader.cc:53] Successfully opened dynamic library libcuda.so.1
2023-09-11 15:12:48.607655: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:937] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero
2023-09-11 15:12:48.607793: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1733] Found device 0 with properties:
pciBusID: 0000:01:00.0 name: NVIDIA GeForce RTX 3090 computeCapability: 8.6
coreClock: 1.695GHz coreCount: 82 deviceMemorySize: 23.69GiB deviceMemoryBandwidth: 871.81GiB/s
2023-09-11 15:12:48.607852: I tensorflow/stream_executor/platform/default/dso_loader.cc:53] Successfully opened dynamic library libcudart.so.11.0
2023-09-11 15:12:48.621122: I tensorflow/stream_executor/platform/default/dso_loader.cc:53] Successfully opened dynamic library libcublas.so.11
2023-09-11 15:12:48.621198: I tensorflow/stream_executor/platform/default/dso_loader.cc:53] Successfully opened dynamic library libcublasLt.so.11
2023-09-11 15:12:48.623171: I tensorflow/stream_executor/platform/default/dso_loader.cc:53] Successfully opened dynamic library libcufft.so.10
2023-09-11 15:12:48.623382: I tensorflow/stream_executor/platform/default/dso_loader.cc:53] Successfully opened dynamic library libcurand.so.10
2023-09-11 15:12:48.623839: I tensorflow/stream_executor/platform/default/dso_loader.cc:53] Successfully opened dynamic library libcusolver.so.11
2023-09-11 15:12:48.624399: I tensorflow/stream_executor/platform/default/dso_loader.cc:53] Successfully opened dynamic library libcusparse.so.11
2023-09-11 15:12:48.624489: I tensorflow/stream_executor/platform/default/dso_loader.cc:53] Successfully opened dynamic library libcudnn.so.8
2023-09-11 15:12:48.624560: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:937] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero
2023-09-11 15:12:48.624663: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:937] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero
2023-09-11 15:12:48.624729: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1871] Adding visible gpu devices: 0
2023-09-11 15:12:48.645313: I tensorflow/core/platform/cpu_feature_guard.cc:142] This TensorFlow binary is optimized with oneAPI Deep Neural Network Library (oneDNN) to use the following CPU instructions in performance-critical operations: AVX2 FMA
To enable them in other operations, rebuild TensorFlow with the appropriate compiler flags.
2023-09-11 15:12:48.645752: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:937] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero
2023-09-11 15:12:48.645845: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1733] Found device 0 with properties:
pciBusID: 0000:01:00.0 name: NVIDIA GeForce RTX 3090 computeCapability: 8.6
coreClock: 1.695GHz coreCount: 82 deviceMemorySize: 23.69GiB deviceMemoryBandwidth: 871.81GiB/s
2023-09-11 15:12:48.645917: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:937] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero
2023-09-11 15:12:48.645984: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:937] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero
2023-09-11 15:12:48.646039: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1871] Adding visible gpu devices: 0
2023-09-11 15:12:48.646075: I tensorflow/stream_executor/platform/default/dso_loader.cc:53] Successfully opened dynamic library libcudart.so.11.0
2023-09-11 15:12:48.783877: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1258] Device interconnect StreamExecutor with strength 1 edge matrix:
2023-09-11 15:12:48.783899: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1264]      0
2023-09-11 15:12:48.783902: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1277] 0:   N
2023-09-11 15:12:48.783980: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:937] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero
2023-09-11 15:12:48.784044: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:937] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero
2023-09-11 15:12:48.784090: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:937] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero
2023-09-11 15:12:48.784141: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1418] Created TensorFlow device (/job:localhost/replica:0/task:0/device:GPU:0 with 556 MB memory) -> physical GPU (device: 0, name: NVIDIA GeForce RTX 3090, pci bus id: 0000:01:00.0, compute capability: 8.6)
Full AlphaFold protein structure prediction script.
flags:

run_alphafold.py:
  --[no]benchmark: Run multiple JAX model evaluations to obtain a timing that excludes the compilation time, which should be more indicative of the time required for inferencing many proteins.
    (default: 'false')
  --bfd_database_path: Path to the BFD database for use by HHblits.
  --data_dir: Path to directory of supporting data.
  --db_preset: <full_dbs|reduced_dbs>: Choose preset MSA database configuration - smaller genetic database config (reduced_dbs) or full genetic database config (full_dbs)
    (default: 'full_dbs')
  --fasta_paths: Paths to FASTA files, each containing a prediction target that will be folded one after another. If a FASTA file contains multiple sequences, then it will be folded as a multimer. Paths should be separated by commas. All FASTA paths must have a unique basename as the basename is used to name the output directories for each prediction.
    (a comma separated list)
  --hhblits_binary_path: Path to the HHblits executable.
    (default: '/home/wangyun/miniconda3/envs/mm/bin/hhblits')
  --hhsearch_binary_path: Path to the HHsearch executable.
    (default: '/home/wangyun/miniconda3/envs/mm/bin/hhsearch')
  --hmmbuild_binary_path: Path to the hmmbuild executable.
    (default: '/home/wangyun/miniconda3/envs/mm/bin/hmmbuild')
  --hmmsearch_binary_path: Path to the hmmsearch executable.
    (default: '/home/wangyun/miniconda3/envs/mm/bin/hmmsearch')
  --is_prokaryote_list: Optional for multimer system, not used by the single chain system. This list should contain a boolean for each fasta specifying true where the target complex is from a prokaryote, and false where it is not, or where the origin is unknown. These values determine the pairing method for the MSA.
    (a comma separated list)
  --jackhmmer_binary_path: Path to the JackHMMER executable.
    (default: '/home/wangyun/miniconda3/envs/mm/bin/jackhmmer')
  --kalign_binary_path: Path to the Kalign executable.
    (default: '/home/wangyun/miniconda3/envs/mm/bin/kalign')
  --max_template_date: Maximum template release date to consider. Important if folding historical test sets.
  --mgnify_database_path: Path to the MGnify database for use by JackHMMER.
  --model_preset: <monomer|monomer_casp14|monomer_ptm|multimer>: Choose preset model configuration - the monomer model, the monomer model with extra ensembling, monomer model with pTM head, or multimer model
    (default: 'monomer')
  --obsolete_pdbs_path: Path to file containing a mapping from obsolete PDB IDs to the PDB IDs of their replacements.
  --output_dir: Path to a directory that will store the results.
  --pdb70_database_path: Path to the PDB70 database for use by HHsearch.
  --pdb_seqres_database_path: Path to the PDB seqres database for use by hmmsearch.
  --random_seed: The random seed for the data pipeline. By default, this is randomly generated. Note that even if this is set, Alphafold may still not be deterministic, because processes like GPU inference are nondeterministic.
    (an integer)
  --[no]run_relax: Whether to run the final relaxation step on the predicted models. Turning relax off might result in predictions with distracting stereochemical violations but might help in case you are having issues with the relaxation stage.
    (default: 'true')
  --small_bfd_database_path: Path to the small version of BFD used with the "reduced_dbs" preset.
  --template_mmcif_dir: Path to a directory with template mmCIF structures, each named <pdb_id>.cif
  --uniclust30_database_path: Path to the Uniclust30 database for use by HHblits.
  --uniprot_database_path: Path to the Uniprot database for use by JackHMMer.
  --uniref90_database_path: Path to the Uniref90 database for use by JackHMMER.
  --[no]use_gpu_relax: Whether to relax on GPU. Relax on GPU can be much faster than CPU, so it is recommended to enable if possible. GPUs must be available if this setting is enabled.
  --[no]use_precomputed_msas: Whether to read MSAs that have been written to disk instead of running the MSA tools. The MSA files are looked up in the output directory, so it must stay the same between multiple runs that are to reuse the MSAs. WARNING: This will not check if the sequence, database or configuration have changed.
    (default: 'false')

Try --helpfull to get a list of all flags.
```
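Since the multimer-specific flags are what distinguish this run_alphafold.py from a monomer-only one, a short sketch can check the `--help` output for them. `flags_in_help`, `missing_flags` and `check_script` are hypothetical helpers; the regex assumes the absl help layout shown above, where each flag starts a line with `--` or `--[no]`.

```python
import re
import subprocess
import sys


def flags_in_help(help_text):
    """Return the set of flag names (without -- or --[no]) listed in absl --help output."""
    return set(re.findall(r"^\s*--(?:\[no\])?([A-Za-z0-9_]+)", help_text, flags=re.MULTILINE))


def missing_flags(help_text, required):
    """Sorted list of flags from `required` that the help text does not list."""
    return sorted(set(required) - flags_in_help(help_text))


def check_script(script_path,
                 required=("is_prokaryote_list", "uniprot_database_path", "pdb_seqres_database_path")):
    """Run `python <script_path> --help` and return the required flags it lacks."""
    result = subprocess.run([sys.executable, script_path, "--help"], capture_output=True, text=True)
    return missing_flags(result.stdout + result.stderr, required)
```

For example, `check_script("run_alphafold.py")` run inside the extracted directory should return an empty list when all three flags are present.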

5. Running AlphaFold-Multimer with its flags
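The commands in 5.1 and 5.2 below share most flags and differ mainly in the database preset: `full_dbs` uses `--bfd_database_path` and `--uniclust30_database_path`, while `reduced_dbs` uses `--small_bfd_database_path`. The sketch below builds either flag list from one data directory. `alphafold_multimer_args` is a hypothetical helper that assumes the author's subdirectory and file names, places small_bfd under the same data directory (5.2 uses a different parent directory), and leaves out the optional `--benchmark` and `--use_precomputed_msas`.

```python
from pathlib import Path


def alphafold_multimer_args(data_dir, fasta_path, output_dir, db_preset="full_dbs"):
    """Build the run_alphafold.py flag list for the full_dbs or reduced_dbs preset."""
    d = Path(data_dir)
    args = [
        f"--data_dir={d}",
        f"--uniref90_database_path={d / 'uniref90' / 'uniref90.fasta'}",
        f"--mgnify_database_path={d / 'mgnify' / 'mgy_clusters_2022_05.fa'}",
        f"--template_mmcif_dir={d / 'pdb_mmcif' / 'mmcif_files'}",
        f"--obsolete_pdbs_path={d / 'pdb_mmcif' / 'obsolete.dat'}",
        f"--uniprot_database_path={d / 'uniprot' / 'uniprot.fasta'}",
        f"--pdb_seqres_database_path={d / 'pdb_seqres' / 'pdb_seqres.txt'}",
        "--max_template_date=2021-11-01",
        "--model_preset=multimer",
        f"--db_preset={db_preset}",
        f"--fasta_paths={fasta_path}",
        "--is_prokaryote_list=false",
        f"--output_dir={output_dir}",
        "--use_gpu_relax=true",
    ]
    if db_preset == "full_dbs":
        args += [
            f"--bfd_database_path={d / 'bfd' / 'bfd_metaclust_clu_complete_id30_c90_final_seq.sorted_opt'}",
            f"--uniclust30_database_path={d / 'uniref30' / 'UniRef30_2021_03'}",
        ]
    else:
        args.append(f"--small_bfd_database_path={d / 'small_bfd' / 'bfd-first_non_consensus_sequences.fasta'}")
    return args
```

For example, `subprocess.run([sys.executable, "run_alphafold.py", *alphafold_multimer_args("/path/to/db", "complex.fasta", "out")], check=True)` would launch a full_dbs run.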

5.1 Using the full databases

```bash
# bash allows a comment after each element of a multi-line array
args=(
  --data_dir=/data/luping/alphaFold/alphaFold2/alphafold/scripts/AlphaFoldDataRes  # top-level directory holding all databases
  --uniref90_database_path=/data/luping/alphaFold/alphaFold2/alphafold/scripts/AlphaFoldDataRes/uniref90/uniref90.fasta  # uniref90 under the database directory
  --mgnify_database_path=/data/luping/alphaFold/alphaFold2/alphafold/scripts/AlphaFoldDataRes/mgnify/mgy_clusters_2022_05.fa  # mgnify under the database directory
  --template_mmcif_dir=/data/luping/alphaFold/alphaFold2/alphafold/scripts/AlphaFoldDataRes/pdb_mmcif/mmcif_files  # mmCIF templates under the database directory
  --max_template_date=2021-11-01  # only templates released up to this date are considered
  --obsolete_pdbs_path=/data/luping/alphaFold/alphaFold2/alphafold/scripts/AlphaFoldDataRes/pdb_mmcif/obsolete.dat  # obsolete.dat under the database directory
  --bfd_database_path=/data/luping/alphaFold/alphaFold2/alphafold/scripts/AlphaFoldDataRes/bfd/bfd_metaclust_clu_complete_id30_c90_final_seq.sorted_opt  # BFD under the database directory
  --model_preset=multimer  # the target is a multimer (protein complex)
  --uniprot_database_path=/data/luping/alphaFold/alphaFold2/alphafold/scripts/AlphaFoldDataRes/uniprot/uniprot.fasta  # uniprot under the database directory
  --pdb_seqres_database_path=/data/luping/alphaFold/alphaFold2/alphafold/scripts/AlphaFoldDataRes/pdb_seqres/pdb_seqres.txt  # pdb_seqres under the database directory
  --db_preset=full_dbs  # search the full databases for MSAs and templates
  --uniclust30_database_path=/data/luping/alphaFold/alphaFold2/alphafold/scripts/AlphaFoldDataRes/uniref30/UniRef30_2021_03  # UniRef30 under the database directory
  --fasta_paths=/home/wangyun/pre/proin/mutimer1  # input FASTA with two or more sequences (one per chain)
  --is_prokaryote_list=false  # true if the complex comes from a prokaryote; false for eukaryotes or unknown origin
  --output_dir=/home/wangyun/pre/outbig-m  # directory for the results
  --use_gpu_relax=true  # run the final relaxation (removes stereochemical violations) on the GPU, much faster than CPU
  --benchmark=true  # extra model evaluations for timing only; does not speed up prediction, can be set to false
  --use_precomputed_msas=true  # reuse MSAs already in output_dir; handy when testing several settings on the same FASTA
)
python run_alphafold.py "${args[@]}"
```

5.2 Using the reduced databases

```bash
args=(
  --data_dir=/data/luping/alphaFold/alphaFold2/alphafold/scripts/AlphaFoldDataRes  # as in 5.1
  --uniref90_database_path=/data/luping/alphaFold/alphaFold2/alphafold/scripts/AlphaFoldDataRes/uniref90/uniref90.fasta  # as in 5.1
  --mgnify_database_path=/data/luping/alphaFold/alphaFold2/alphafold/scripts/AlphaFoldDataRes/mgnify/mgy_clusters_2022_05.fa  # as in 5.1
  --template_mmcif_dir=/data/luping/alphaFold/alphaFold2/alphafold/scripts/AlphaFoldDataRes/pdb_mmcif/mmcif_files  # as in 5.1
  --is_prokaryote_list=false  # as in 5.1
  --max_template_date=2021-11-01  # as in 5.1
  --obsolete_pdbs_path=/data/luping/alphaFold/alphaFold2/alphafold/scripts/AlphaFoldDataRes/pdb_mmcif/obsolete.dat  # as in 5.1
  --model_preset=multimer  # as in 5.1
  --uniprot_database_path=/data/luping/alphaFold/alphaFold2/alphafold/scripts/AlphaFoldDataRes/uniprot/uniprot.fasta  # as in 5.1
  --pdb_seqres_database_path=/data/luping/alphaFold/alphaFold2/alphafold/scripts/AlphaFoldDataRes/pdb_seqres/pdb_seqres.txt  # as in 5.1
  --db_preset=reduced_dbs  # use the reduced databases
  --small_bfd_database_path=/data/luping/alphaFold/alphaFold2/alphafold/scripts/AlphaFoldData/small_bfd/bfd-first_non_consensus_sequences.fasta  # small BFD, used only with reduced_dbs
  --fasta_paths=/home/wangyun/pre/proin/mutimer1  # as in 5.1
  --output_dir=/home/wangyun/pre/out-m  # as in 5.1
  --use_gpu_relax=true  # as in 5.1
  --benchmark=true  # as in 5.1
  --use_precomputed_msas=true  # as in 5.1
)
python run_alphafold.py "${args[@]}"
```

6. Results

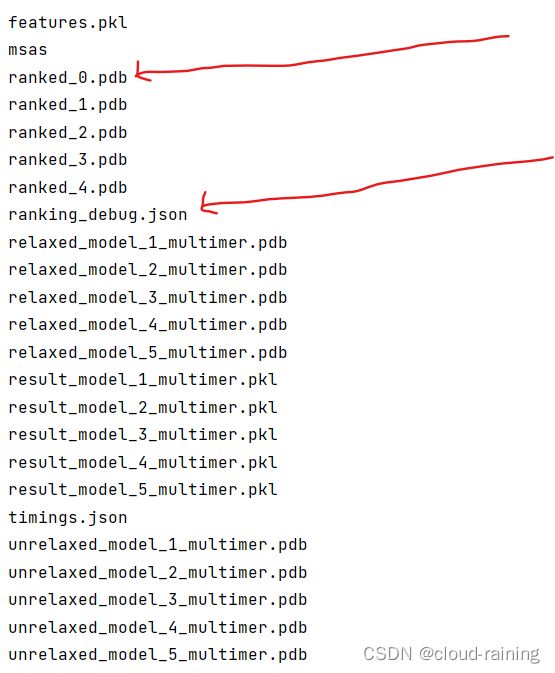

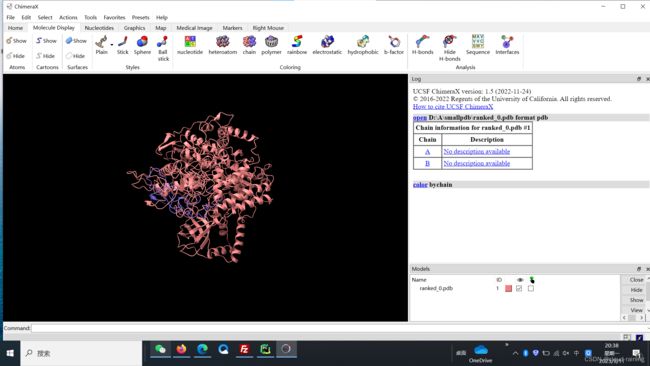

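Normally 5 models are produced, and ranked_0.pdb is the one with the highest score. ranking_debug.json contains each model's "iptm+ptm" score, i.e. the model confidence 0.8 · ipTM + 0.2 · pTM; the higher it is, the more the model can be trusted. This confidence is not DockQ: in the reference paper, DockQ measures accuracy against a known structure, and predictions are graded as successful (DockQ ≥ 0.23), medium accuracy (DockQ ≥ 0.49) or high accuracy (DockQ ≥ 0.80). Reference: Protein complex prediction with AlphaFold-Multimer (deepmind.com). The .pdb files can then be opened in UCSF ChimeraX, downloadable from its home page.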
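A sketch for reading the scores: it loads `ranking_debug.json`, sorts the models by their `"iptm+ptm"` entry and spells out the confidence formula. It assumes the file holds an `"iptm+ptm"` mapping from model name to score, as described above; `model_confidence` and `ranked_models` are hypothetical helpers. DockQ cannot be computed from this file, since it needs a reference structure.

```python
import json


def model_confidence(iptm, ptm):
    """AlphaFold-Multimer model confidence: 0.8 * ipTM + 0.2 * pTM."""
    return 0.8 * iptm + 0.2 * ptm


def ranked_models(ranking_debug_path):
    """Return (model_name, iptm+ptm score) pairs from ranking_debug.json, best first."""
    with open(ranking_debug_path) as handle:
        ranking = json.load(handle)
    scores = ranking["iptm+ptm"]
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    for name, score in ranked_models("/home/wangyun/pre/outbig-m/mutimer1/ranking_debug.json"):
        print(f"{name}: {score:.3f}")
```

The first entry corresponds to ranked_0.pdb. The output path in the `__main__` block is only an illustration; point it at your own output directory.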


I came to deep learning from another field, so please point out anything I got wrong; every blunt correction helps me improve. Thank you all.