[Kubernetes Series] Deleting Resources Stuck in the Terminating State in a k8s Cluster

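When you are starting out with Kubernetes, you will often find that deleting a resource does not complete and the resource stays in the Terminating state. This post covers how to remove three commonly affected kinds of resource in that state: namespace, pod, and pv.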

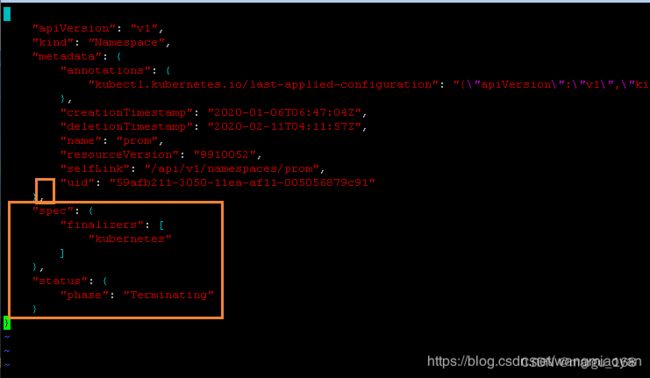

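Deleting a namespace stuck in Terminating

1. kubectl get ns monitoring -o json > monitor.json

2. vim monitor.json (remove the entries under spec.finalizers so that the list is empty, then save)

3. Start the proxy (it listens on port 8001 by default)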

kubectl proxy

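4. Apply the change from a second terminal, since kubectl proxy keeps running in the foreground

curl -k -H "Content-Type: application/json" -X PUT --data-binary @monitor.json http://127.0.0.1:8001/api/v1/namespaces/monitoring/finalize

Once the request succeeds, the namespace is removed.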
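Steps 1 to 4 can also be collapsed into a single pipeline. The following is a minimal sketch under stated assumptions, not the procedure used above: the function names `strip_ns_finalizers` and `finalize_namespace` are made up for illustration, python3 is assumed to be available for the JSON edit (jq would do the same job), and `kubectl replace --raw` stands in for the `kubectl proxy` plus `curl` pair.

```shell
#!/bin/sh
# Read a Namespace object as JSON on stdin and write it back with an empty
# spec.finalizers list. This is the edit that step 2 makes by hand in vim.
strip_ns_finalizers() {
  python3 -c '
import json, sys
ns = json.load(sys.stdin)
ns.setdefault("spec", {})["finalizers"] = []
json.dump(ns, sys.stdout)
'
}

# Send the edited object to the namespace "finalize" subresource.
# "kubectl replace --raw" talks to the API server directly, so no proxy is needed.
finalize_namespace() {
  ns="$1"
  kubectl get namespace "$ns" -o json \
    | strip_ns_finalizers \
    | kubectl replace --raw "/api/v1/namespaces/${ns}/finalize" -f -
}

# Usage (namespace name taken from the steps above):
#   finalize_namespace monitoring
```

Keeping the JSON edit in its own function makes it easy to check what will be sent before anything is written to the cluster, for example with `kubectl get ns monitoring -o json | strip_ns_finalizers`.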

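Deleting a Pod stuck in Terminating

Force-delete it manually. A forced delete removes the pod object from the API server without waiting for the kubelet to confirm that the containers have stopped:

kubectl delete pod xxx --force --grace-period=0

Alternatively, restart the kubelet service on the node where the pod is running. If neither works, the last resort is to delete the entire namespace.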
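When several pods are stuck at once, the force delete can be looped. This is a rough sketch rather than a vetted tool: the function names are hypothetical, and it assumes that STATUS is the fourth column of `kubectl get pods --all-namespaces --no-headers`, which holds for the default output format.

```shell
#!/bin/sh
# Print "namespace name" for every pod whose STATUS column reads Terminating.
list_terminating_pods() {
  kubectl get pods --all-namespaces --no-headers \
    | awk '$4 == "Terminating" { print $1, $2 }'
}

# Force-delete each pod found above, one kubectl call per pod.
force_delete_terminating_pods() {
  list_terminating_pods | while read -r ns name; do
    kubectl delete pod "$name" -n "$ns" --force --grace-period=0
  done
}

# Usage: review the list first, then delete.
#   list_terminating_pods
#   force_delete_terminating_pods
```

Running `list_terminating_pods` on its own first is the safer habit, since the delete step does not ask for confirmation.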

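An aside:

How to move a pv from the Released state back to Available (clear spec.claimRef; removing finalizers alone does not change the phase):

kubectl patch pv xxx -p '{"spec":{"claimRef":null}}'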

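The key to making a PV Available again is its spec.claimRef field, which records the binding to the original PVC. Removing that binding information releases the PV so that it returns to Available.

In a test environment you can simply edit the pv and delete the spec.claimRef block by hand, then check the PV again:

kubectl edit pv pvc-069c4486-d773-11e8-bd12-000c2931d938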
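Clearing spec.claimRef can also be scripted with a guard, so that a PV which is still Bound is not released by accident. The sketch below is only an illustration: `release_pv` is a hypothetical helper name, and the check relies on `.status.phase` reporting Released for the volumes you want to recycle.

```shell
#!/bin/sh
# Clear spec.claimRef on one PV, but only if it is currently Released.
release_pv() {
  pv="$1"
  phase=$(kubectl get pv "$pv" -o jsonpath='{.status.phase}')
  if [ "$phase" != "Released" ]; then
    echo "skip $pv: phase is ${phase:-unknown}, not Released" >&2
    return 1
  fi
  # Setting claimRef to null drops the stale binding to the old PVC.
  kubectl patch pv "$pv" -p '{"spec":{"claimRef":null}}'
}

# Usage (PV name taken from the edit command above):
#   release_pv pvc-069c4486-d773-11e8-bd12-000c2931d938
```

With the Retain reclaim policy the data on the volume is left untouched, so clean it up first if the next claim should start from an empty volume.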

Deleting a pv stuck in Terminating

[root@k8s-m1 k8s-volumes]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pv002 2Gi RWO Retain Terminating zookeeper/datadir-zk-1 2d6h

pv003 2Gi RWO,RWX Retain Terminating zookeeper/datadir-zk-0 2d6h

pv004 4Gi RWO,RWX Retain Terminating zookeeper/datadir-zk-3 2d1h

[root@k8s-m1 k8s-volumes]# kubectl patch pv pv002 -p '{"metadata":{"finalizers":null}}'

persistentvolume/pv002 patched

[root@k8s-m1 k8s-volumes]# kubectl patch pv pv003 -p '{"metadata":{"finalizers":null}}'

persistentvolume/pv003 patched

[root@k8s-m1 k8s-volumes]# kubectl patch pv pv004 -p '{"metadata":{"finalizers":null}}'

persistentvolume/pv004 patched

[root@k8s-m1 k8s-volumes]# kubectl get pv

No resources found

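As the output above shows, the pvs that were stuck in Terminating are gone. Setting metadata.finalizers to null removes the finalizer (for a pv, typically kubernetes.io/pv-protection) that was holding the deletion back.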
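The three patch commands above follow the same pattern, so they can be generated from the `kubectl get pv` listing. A small sketch, with hypothetical function names, assuming STATUS is the fifth column of `kubectl get pv --no-headers` as in the output shown earlier:

```shell
#!/bin/sh
# Print the name of every PV whose STATUS column reads Terminating.
list_terminating_pvs() {
  kubectl get pv --no-headers | awk '$5 == "Terminating" { print $1 }'
}

# Remove the finalizers from each of those PVs so the pending delete can finish.
clear_pv_finalizers() {
  list_terminating_pvs | while read -r pv; do
    kubectl patch pv "$pv" -p '{"metadata":{"finalizers":null}}'
  done
}

# Usage:
#   list_terminating_pvs      # check which volumes would be touched
#   clear_pv_finalizers
```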

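Deleting a pod, namespace, or pv through etcd

Deleting keys directly in etcd bypasses the API server and every finalizer, so treat it as a last resort.

How to get a shell in the etcd container (when etcd is deployed as a pod):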

kubectl exec -it etcd-host01 -n kube-system -- /bin/sh

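Check the health of the etcd cluster. This command uses the etcdctl v2 API (the --ca-file, --cert-file, and --key-file flags and the cluster-health subcommand); with ETCDCTL_API=3 the equivalent is endpoint health with --cacert, --cert, and --key:

etcdctl --endpoints=https://172.37.2.11:2379 --ca-file=/etc/kubernetes/pki/etcd/ca.crt --cert-file=/etc/kubernetes/pki/etcd/server.crt --key-file=/etc/kubernetes/pki/etcd/server.key cluster-health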

List the keys

etcdctl --endpoints=https://172.37.2.11:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/server.crt --key=/etc/kubernetes/pki/etcd/server.key get / --prefix --keys-only

Force-delete a pod

Select the v3 API first:

export ETCDCTL_API=3

etcdctl --endpoints=https://172.37.2.11:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/server.crt --key=/etc/kubernetes/pki/etcd/server.key del /registry/pods/fixbug-service/eureka2-fixbug-deploy-54b49d7784-lvrnt

Force-delete a namespace

etcdctl --endpoints=https://172.37.2.11:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/server.crt --key=/etc/kubernetes/pki/etcd/server.key del /registry/namespaces/fixbug-service

Force-delete a pv

etcdctl --endpoints=https://172.37.2.11:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/server.crt --key=/etc/kubernetes/pki/etcd/server.key get /registry/persistentvolumes/pv001 --prefix

/registry/persistentvolumes/pv001

(The value is a protobuf-encoded PersistentVolume object, so most of it prints as unreadable binary. The readable fragments show the name pv001, the kubernetes.io/pv-protection finalizer, access modes ReadWriteMany and ReadWriteOnce, a capacity of 1Gi, the NFS path /data/volumes/v1 on server 192.168.2.140, reclaim policy Retain, volume mode Filesystem, and phase Available.)

etcdctl --endpoints=https://172.37.2.11:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/server.crt --key=/etc/kubernetes/pki/etcd/server.key del /registry/persistentvolumes/pv001

1

etcdctl --endpoints=https://172.37.2.11:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/server.crt --key=/etc/kubernetes/pki/etcd/server.key get /registry/persistentvolumes/pv001 --prefix

# kubectl get pv pv001

Error from server (NotFound): persistentvolumes "pv001" not found
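Every etcdctl call above repeats the same endpoint and TLS flags, and the keys follow a regular pattern. The sketch below wraps both. It is an illustration only: `etcd` and `registry_key` are hypothetical helper names, the endpoint and certificate paths are copied from the commands above, and the key layout is assumed to match the three keys shown in this post (other resource types can use different prefixes, so confirm with `get / --prefix --keys-only` first).

```shell
#!/bin/sh
export ETCDCTL_API=3

# Connection settings copied from the commands above; adjust for your cluster.
ETCD_ENDPOINT="https://172.37.2.11:2379"
ETCD_PKI="/etc/kubernetes/pki/etcd"

# Run etcdctl with the endpoint and TLS flags filled in.
etcd() {
  etcdctl --endpoints="$ETCD_ENDPOINT" \
    --cacert="$ETCD_PKI/ca.crt" \
    --cert="$ETCD_PKI/server.crt" \
    --key="$ETCD_PKI/server.key" "$@"
}

# Build the etcd key for an object:
#   /registry/<resource>/<name>              for cluster-scoped objects
#   /registry/<resource>/<namespace>/<name>  for namespaced objects
registry_key() {
  resource="$1"
  name="$2"
  namespace="${3:-}"
  if [ -n "$namespace" ]; then
    printf '/registry/%s/%s/%s\n' "$resource" "$namespace" "$name"
  else
    printf '/registry/%s/%s\n' "$resource" "$name"
  fi
}

# Usage:
#   etcd get "$(registry_key persistentvolumes pv001)" --keys-only
#   etcd del "$(registry_key pods eureka2-fixbug-deploy-54b49d7784-lvrnt fixbug-service)"
```

Checking a key with `get --keys-only` before running `del` costs one extra command and avoids deleting the wrong object.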

For more Kubernetes notes, please visit the blog home page. Mistakes are hard to avoid when writing, so corrections are welcome.