Understanding Dynamic Tables in Flink Table & SQL (Updating and Append-Only Streams) and the Types of Dynamic Tables

Contents

- 1. Dynamic tables
  - 1.1 Continuous queries on updating streams (upsert, retract)
  - 1.2 Continuous queries on append-only streams
- 2. Types of dynamic tables in Flink Table

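Note: if a time attribute column is used in a calculation, the result is materialized as an ordinary timestamp, so the downstream table no longer has a time attribute or a watermark. For example, suppose the time attribute column of table1 is timestamp1 and table1 has a watermark. After executing the following statement, table2 has neither a time attribute nor a watermark:

```scala
val table2 = tEnv.sqlQuery("select (timestamp1 + interval '3' second) as timestamp1 from table1")
```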

1. Dynamic tables

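For a dynamic table, whenever the upstream base table produces a changelog stream of insert, update, and delete events, the continuous SQL query (which acts like a materialized view) immediately updates the dynamic table. The materialized view caches its data in state. The changes of a dynamic table can be propagated in append-only, retract, or upsert mode.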


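Note:

- A dynamic table is only a logical concept that makes the semantics easier to understand; it is not stored as a physical table.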
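To make the idea of keeping a materialized view up to date from a changelog more concrete, here is a minimal plain-Scala sketch with no Flink dependency. It replays insert/update/delete changes in order against an in-memory key-value table. The names `Kind`, `Change`, and `MaterializedView` are hypothetical; the short names only mimic the `+I`/`-U`/`+U`/`-D` row kinds Flink prints in its `op` column. This is not how Flink implements it, since Flink keeps such data in managed state.

```scala
// Hypothetical model of changelog row kinds; the short names match the
// "op" column Flink prints (+I, -U, +U, -D).
sealed trait Kind { def short: String }
case object Insert extends Kind { val short = "+I" }
case object UpdateBefore extends Kind { val short = "-U" }
case object UpdateAfter extends Kind { val short = "+U" }
case object Delete extends Kind { val short = "-D" }

// One change to the table: its kind, the row key, and the row value.
final case class Change(kind: Kind, key: String, value: Long)

object MaterializedView {
  // Replays the changes in order against an initially empty table.
  // +I and +U write the new value; -U and -D remove the old row.
  def materialize(changes: Seq[Change]): Map[String, Long] =
    changes.foldLeft(Map.empty[String, Long]) { (table, c) =>
      c.kind match {
        case Insert | UpdateAfter  => table.updated(c.key, c.value)
        case UpdateBefore | Delete => table - c.key
      }
    }
}
```

Replaying the changelog printed by the click-count query in section 1.1 (`+I Mary 1`, `+I Bob 1`, `-U Mary 1`, `+U Mary 2`, `+I Liz 1`) through `materialize` yields the final table `Mary -> 2, Bob -> 1, Liz -> 1`.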

1.1 Continuous queries on updating streams (upsert, retract)

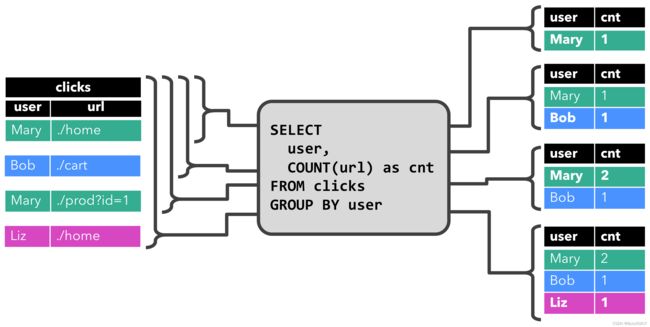

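The following example counts how many times each user clicks on the website; every new record immediately updates the dynamic table.

Updating queries usually need to maintain more state, because the current result for every key (here, every user seen so far) must be kept so that it can be updated later.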

Install netcat and start a socket server on port 9000:

```shell
[root@flink1 ~]# yum install -y nc
[root@flink1 ~]# nc -lk 9000
```

The example code is as follows:

```scala
import org.apache.flink.streaming.api.scala.{StreamExecutionEnvironment, createTypeInformation}
import org.apache.flink.table.api.bridge.scala.{StreamTableEnvironment, dataStreamConversions}
import org.apache.flink.table.api.{EnvironmentSettings, Schema}

object flink_test {

  def main(args: Array[String]): Unit = {
    val senv = StreamExecutionEnvironment.getExecutionEnvironment

    // Table environment in streaming mode
    val settings = EnvironmentSettings
      .newInstance()
      .inStreamingMode()
      .build()
    val tEnv = StreamTableEnvironment.create(senv, settings)

    // Read lines such as "Mary,./home" from the socket. The third parameter of
    // socketTextStream is the record delimiter (default '\n'), so each line is one record.
    val socket_stream = senv.socketTextStream("192.168.23.101", 9000)
      .map(x => {
        val cols = x.split(",")
        (cols(0), cols(1))
      })

    // Convert to a Table, append the processing-time attribute cTime,
    // then rename the columns to user, url, cTime
    val socket_table = socket_stream.toTable(tEnv,
      Schema.newBuilder()
        .columnByExpression("cTime", "proctime()")
        .build()
    ).as("user", "url", "cTime")

    tEnv.createTemporaryView("clicks", socket_table)

    // USER is a reserved keyword in Flink SQL, so the column name is quoted with backticks
    tEnv.executeSql("select `user`, count(url) as cnt from clicks group by `user`").print()
  }
}
```

Start the program, then send messages to the socket:

```text
[root@flink1 ~]# nc -lk 9000
Mary,./home
Bob,./cart
Mary,./prod?id=1
Liz,./home
```

The program prints:

```text
+----+------+-----+
| op | user | cnt |
+----+------+-----+
| +I | Mary |   1 |
| +I | Bob  |   1 |
| -U | Mary |   1 |
| +U | Mary |   2 |
| +I | Liz  |   1 |
```
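The changelog above can be reproduced with a small plain-Scala model of the same query. It also shows where the extra state of an updating query goes: the count of every user seen so far is kept, so that the old row can be retracted (`-U`) before the new one is emitted (`+U`). `ClickCount` and `run` are hypothetical names; this sketches the semantics only and is not how Flink's aggregation operator is implemented.

```scala
import scala.collection.mutable

// Hypothetical, dependency-free model of the continuous query
//   select user, count(url) as cnt from clicks group by user
object ClickCount {
  // Each emitted row: (op, user, cnt), with op in {"+I", "-U", "+U"}.
  type Out = (String, String, Long)

  def run(clicks: Seq[(String, String)]): Seq[Out] = {
    // "State": the latest count for every user seen so far.
    val counts = mutable.Map.empty[String, Long]
    val out = mutable.ArrayBuffer.empty[Out]
    for ((user, _) <- clicks) {
      counts.get(user) match {
        case None =>
          // First click of this user: insert a new result row.
          counts(user) = 1L
          out += (("+I", user, 1L))
        case Some(old) =>
          // Later clicks: retract the old row, then emit the updated one.
          counts(user) = old + 1
          out += (("-U", user, old))
          out += (("+U", user, old + 1))
      }
    }
    out.toList
  }
}
```

Calling `ClickCount.run` with the four clicks sent above returns the same five rows, in the same order, as the Flink output.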

The results are sent to downstream streams or external systems in real time as this same kind of changelog.
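How such a changelog reaches a downstream system depends on its encoding. Following the definitions in the Flink documentation on dynamic tables, a retract stream encodes an update as a retraction of the old row plus an addition of the new row, while an upsert stream (which needs a unique key, here the user) sends only the new row for inserts and updates and a delete message for deletions. The sketch below is a hypothetical plain-Scala model of both encodings; `StreamEncodings`, `toRetract`, and `toUpsert` are made-up names. The `(Boolean, row)` shape of the retract output mirrors Flink's legacy `toRetractStream`, where `true` means add and `false` means retract.

```scala
// Hypothetical model: encode a changelog as a retract or an upsert stream.
// A change is (op, key, value) with op in {"+I", "-U", "+U", "-D"}.
object StreamEncodings {
  type Change = (String, String, Long)

  // Retract stream: every change becomes an add (true) or a retract (false).
  // -U and -D retract the old row; +I and +U add the new row.
  def toRetract(changes: Seq[Change]): Seq[(Boolean, String, Long)] =
    changes.map { case (op, key, value) =>
      (op == "+I" || op == "+U", key, value)
    }

  // Upsert stream keyed by `key`: -U is dropped because the following +U
  // overwrites the row with the same key; -D becomes a delete (None).
  def toUpsert(changes: Seq[Change]): Seq[(String, Option[Long])] =
    changes.collect {
      case ("+I" | "+U", key, value) => (key, Some(value))
      case ("-D", key, _)            => (key, None)
    }
}
```

For the click-count changelog, the retract encoding keeps all five messages, while the upsert encoding needs only four because the `-U Mary 1` row is implied by `+U Mary 2` for the same key.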

1.2 Continuous queries on append-only streams

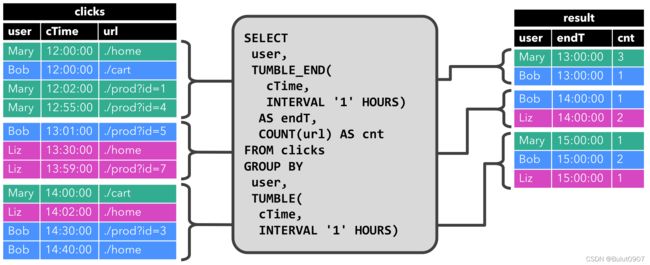

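The following query counts each user's clicks per minute. A tumbling window is evaluated only when its one-minute period ends: the result for the window is emitted once, at the end of the window, instead of being updated for every incoming record. Because an emitted window result is never changed afterwards, only insert rows are appended to the dynamic result table.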

```scala
import org.apache.flink.streaming.api.scala.{StreamExecutionEnvironment, createTypeInformation}
import org.apache.flink.table.api.bridge.scala.{StreamTableEnvironment, dataStreamConversions}
import org.apache.flink.table.api.{EnvironmentSettings, Schema}

object flink_test {

  def main(args: Array[String]): Unit = {
    val senv = StreamExecutionEnvironment.getExecutionEnvironment

    // Table environment in streaming mode
    val settings = EnvironmentSettings
      .newInstance()
      .inStreamingMode()
      .build()
    val tEnv = StreamTableEnvironment.create(senv, settings)

    // One record per line (default '\n' delimiter), e.g. "Mary,./home"
    val socket_stream = senv.socketTextStream("192.168.23.101", 9000)
      .map(x => {
        val cols = x.split(",")
        (cols(0), cols(1))
      })

    val socket_table = socket_stream.toTable(tEnv,
      Schema.newBuilder()
        // Equivalent SQL DDL column definition: cTime AS PROCTIME()
        .columnByExpression("cTime", "proctime()")
        .build()
    ).as("user", "url", "cTime")

    tEnv.createTemporaryView("clicks", socket_table)

    // One-minute processing-time tumbling window per user;
    // USER is a reserved keyword in Flink SQL, so it is quoted with backticks
    val querySql =
      """select `user`,
        |       tumble_end(cTime, interval '1' minutes) as endT,
        |       count(url) as cnt
        |from clicks
        |group by `user`, tumble(cTime, interval '1' minutes)""".stripMargin

    tEnv.executeSql(querySql).print()
  }
}
```

Start the program, then send messages to the socket. Between 2022-01-24 21:10:00 (inclusive) and 2022-01-24 21:11:00 (exclusive), send:

```text
[root@flink1 ~]# nc -lk 9000
Mary,./home
Bob,./cart
Mary,./prod?id=1
Mary,./prod?id=4
```

Between 2022-01-24 21:11:00 (inclusive) and 2022-01-24 21:12:00 (exclusive), send:

```text
Bob,./prod?id=5
Liz,./home
Liz,./prod?id=7
```

Between 2022-01-24 21:12:00 (inclusive) and 2022-01-24 21:13:00 (exclusive), send:

```text
Mary,./cart
Liz,./home
Bob,./prod?id=3
Bob,./home
```

The program prints:

```text
+----+------+-------------------------+-----+
| op | user | endT                    | cnt |
+----+------+-------------------------+-----+
| +I | Mary | 2022-01-24 21:11:00.000 |   3 |
| +I | Bob  | 2022-01-24 21:11:00.000 |   1 |
| +I | Bob  | 2022-01-24 21:12:00.000 |   1 |
| +I | Liz  | 2022-01-24 21:12:00.000 |   2 |
| +I | Mary | 2022-01-24 21:13:00.000 |   1 |
| +I | Liz  | 2022-01-24 21:13:00.000 |   1 |
| +I | Bob  | 2022-01-24 21:13:00.000 |   2 |
```
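The append-only behavior of the windowed query can be illustrated with a plain-Scala model that assigns each click to a one-minute tumbling window `[start, start + 1 min)` and only reports a window once it has ended. This is a hypothetical sketch: it uses plain epoch-millisecond timestamps instead of `PROCTIME()`, `TumblingCount` and `fire` are made-up names, and rows within one window are sorted by user here, whereas the Flink output above lists them in a different order.

```scala
// Hypothetical model of
//   select user, tumble_end(cTime, interval '1' minutes) as endT, count(url) as cnt
//   from clicks group by user, tumble(cTime, interval '1' minutes)
// Timestamps are plain epoch milliseconds; nothing here is Flink code.
object TumblingCount {
  val WindowMs: Long = 60 * 1000L

  // Start of the tumbling window that contains ts.
  def windowStart(ts: Long): Long = ts - Math.floorMod(ts, WindowMs)

  // Returns (user, windowEnd, count) for every window that has ended by `now`.
  // A processing-time window receives no more rows after it ends, so these
  // rows are final: they are only ever inserted (+I), never updated.
  def fire(clicks: Seq[(Long, String)], now: Long): Seq[(String, Long, Int)] =
    clicks
      .groupBy { case (ts, user) => (user, windowStart(ts) + WindowMs) }
      .toSeq
      .collect { case ((user, end), rows) if end <= now => (user, end, rows.size) }
      .sortBy { case (user, end, _) => (end, user) }
}
```

With the three batches of clicks above placed in three consecutive minutes, `fire` returns nothing before the first minute ends, then the first window's rows (`Bob 1`, `Mary 3`), and so on; earlier rows never change.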

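2. Types of dynamic tables in Flink Table

- Regular tables, e.g. a table read from a MySQL database
- Versioned tables, e.g. real-time data read from Kafka

2.1. Append-only tables, e.g. users' orders data

2.2. Upsert tables: a primary key and an event-time timestamp column must be defined. An example is product data whose price changes over time; Flink maintains the versions of such a table internally. If the product data arrives in append-only form, you need to create a versioned view manually, as shown below: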

```sql
create view versioned_products as
select product_id, price, update_time
from (
    select *,
           row_number() over (partition by product_id order by update_time desc) as row_no
    from products
)
where row_no = 1;
```
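The `row_number()` deduplication in this view keeps, for each `product_id`, only the row with the most recent `update_time`. A plain-Scala sketch of that selection logic follows. `ProductRow`, `VersionedProducts`, and `latest` are hypothetical names, and the sketch only computes a final snapshot, whereas Flink evaluates the view continuously and updates a product's row whenever a newer version arrives.

```scala
// Hypothetical model of the versioned view above:
//   row_number() over (partition by product_id order by update_time desc) ... where row_no = 1
final case class ProductRow(productId: String, price: BigDecimal, updateTime: Long)

object VersionedProducts {
  // For every product_id, keep only the row with the latest update_time.
  def latest(rows: Seq[ProductRow]): Map[String, ProductRow] =
    rows
      .groupBy(_.productId)          // partition by product_id
      .map { case (id, versions) =>  // order by update_time desc, keep row_no = 1
        id -> versions.maxBy(_.updateTime)
      }
}
```

Feeding three price changes of one product in any arrival order leaves only the one with the largest `update_time`, which is the row a downstream query sees for that product.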