Android Audio/Video Hardware Encoding and Decoding

Preface

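Before the Chinese New Year I was researching audio/video intercom. After quite a few twists and turns it finally paid off, and since the Spring Festival Gala had nothing much worth watching, I decided to write down the pitfalls I ran into along the way.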

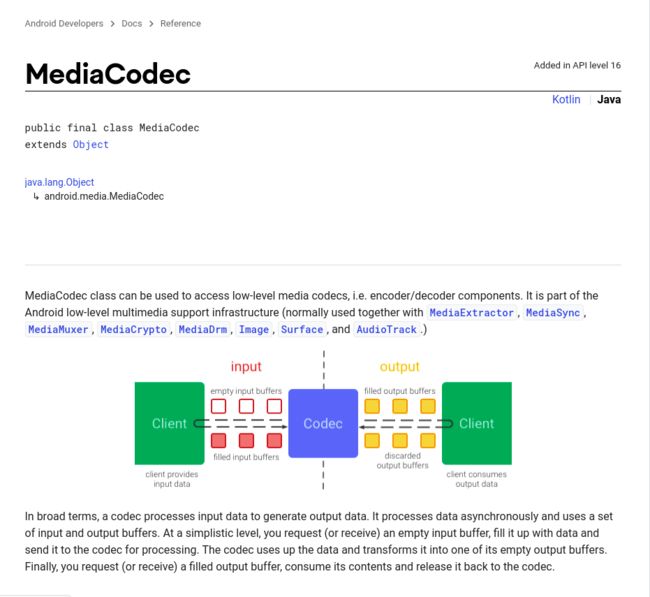

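An audio/video intercom has to encode both the camera's real-time preview frames and the microphone's audio data. Encoding can be done in software or in hardware, and hardware encoding is clearly preferable whenever it is available; on Android, hardware encoding means MediaCodec.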

MediaCodec

The official documentation covers MediaCodec in detail, so I won't repeat it here.

Video hardware encoding

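Here I need to encode the YUV data from the camera's real-time preview into H.264. Before encoding starts, the encoder must first be created and configured: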

MediaFormat mediaFormat = MediaFormat.createVideoFormat(MIMETYPE_VIDEO_AVC, width, height);
// Flexible: the concrete YUV layout of the input buffers is device-specific
mediaFormat.setInteger(MediaFormat.KEY_COLOR_FORMAT, MediaCodecInfo.CodecCapabilities.COLOR_FormatYUV420Flexible);
// Bit rate in bits per second
mediaFormat.setInteger(MediaFormat.KEY_BIT_RATE, width * height * 5);
mediaFormat.setInteger(MediaFormat.KEY_FRAME_RATE, 30);
// One key frame (I frame) every second
mediaFormat.setInteger(MediaFormat.KEY_I_FRAME_INTERVAL, 1);
try {
    mMediaCodec = MediaCodec.createEncoderByType(MIMETYPE_VIDEO_AVC);
    mMediaCodec.configure(mediaFormat, null, null, MediaCodec.CONFIGURE_FLAG_ENCODE);
    mMediaCodec.start();
} catch (Exception e) {
    e.printStackTrace();
}
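Camera preview frames from the legacy camera API are typically NV21 (a Y plane followed by interleaved V/U bytes), whereas with COLOR_FormatYUV420Flexible the input layout the encoder actually expects is up to the device (MediaCodec.getInputImage() describes it). When the two layouts disagree, the colors of the encoded image can come out wrong. The sketch below is an assumption-laden illustration: it presumes the encoder treats its input as semi-planar NV12 and that width and height are even, so it only needs to swap the chroma bytes. The class and method names are hypothetical.

```java
// Hypothetical helper (not from the original article): converts one NV21 frame
// (Y plane, then interleaved V/U) into NV12 (Y plane, then interleaved U/V).
// Assumes the encoder expects NV12 input and that width and height are even.
final class YuvConvert {
    private YuvConvert() {}

    static byte[] nv21ToNv12(byte[] nv21, int width, int height) {
        int ySize = width * height;
        int frameSize = ySize * 3 / 2; // 4:2:0 sampling: chroma adds half the luma size
        if (nv21.length < frameSize) {
            throw new IllegalArgumentException("frame too small: " + nv21.length);
        }
        byte[] nv12 = new byte[frameSize];
        // The luma (Y) plane is identical in both layouts
        System.arraycopy(nv21, 0, nv12, 0, ySize);
        // Swap each V/U pair into U/V order
        for (int i = ySize; i < frameSize; i += 2) {
            nv12[i] = nv21[i + 1]; // U
            nv12[i + 1] = nv21[i]; // V
        }
        return nv12;
    }
}
```

In a preview callback this would be used as encodeBuffer(YuvConvert.nv21ToNv12(data, width, height), pts). If the colors are still off, the encoder may expect a different layout, such as planar I420.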

Next, feed data into the encoder:

private void encodeBuffer(@NonNull byte[] buffer, long pts) {
    // MediaCodec dequeue timeouts are in microseconds
    int inputBufferIndex = mMediaCodec.dequeueInputBuffer(TIMEOUT_S);
    if (inputBufferIndex >= 0) {
        ByteBuffer inputBuffer = mMediaCodec.getInputBuffer(inputBufferIndex);
        inputBuffer.clear();
        inputBuffer.put(buffer);
        mMediaCodec.queueInputBuffer(inputBufferIndex, 0, buffer.length, pts, 0);
    }
    MediaCodec.BufferInfo bufferInfo = new MediaCodec.BufferInfo();
    int outputBufferIndex = mMediaCodec.dequeueOutputBuffer(bufferInfo, TIMEOUT_S);
    while (outputBufferIndex >= 0) {
        ByteBuffer outputBuffer = mMediaCodec.getOutputBuffer(outputBufferIndex);
        // flags is a bit field: test the bit instead of comparing with ==
        if ((bufferInfo.flags & MediaCodec.BUFFER_FLAG_CODEC_CONFIG) != 0) {
            // Codec config data (SPS/PPS) is skipped here
            bufferInfo.size = 0;
        }
        if (bufferInfo.size > 0) {
            outputBuffer.position(bufferInfo.offset);
            outputBuffer.limit(bufferInfo.offset + bufferInfo.size);
            bufferInfo.presentationTimeUs = pts;
            // todo: hand the encoded data to a callback...
        }
        mMediaCodec.releaseOutputBuffer(outputBufferIndex, false);
        bufferInfo = new MediaCodec.BufferInfo();
        outputBufferIndex = mMediaCodec.dequeueOutputBuffer(bufferInfo, TIMEOUT_S);
    }
}
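Why the codec-config check should be a bitwise test: BufferInfo.flags is a bit field, so comparing it with == only matches when CODEC_CONFIG is the only bit set. The values below are mirrored from android.media.MediaCodec (BUFFER_FLAG_KEY_FRAME = 1, BUFFER_FLAG_CODEC_CONFIG = 2, BUFFER_FLAG_END_OF_STREAM = 4) so the check can run on a plain JVM; the BufferFlags class itself is hypothetical.

```java
// Flag values mirrored from android.media.MediaCodec for illustration on a plain JVM.
final class BufferFlags {
    static final int BUFFER_FLAG_KEY_FRAME = 1;
    static final int BUFFER_FLAG_CODEC_CONFIG = 2;
    static final int BUFFER_FLAG_END_OF_STREAM = 4;

    private BufferFlags() {}

    // Equality only works when CODEC_CONFIG is the sole bit set
    static boolean isCodecConfigByEquality(int flags) {
        return flags == BUFFER_FLAG_CODEC_CONFIG;
    }

    // A bitwise test still works when several flags are combined
    static boolean isCodecConfig(int flags) {
        return (flags & BUFFER_FLAG_CODEC_CONFIG) != 0;
    }

    static boolean isKeyFrame(int flags) {
        return (flags & BUFFER_FLAG_KEY_FRAME) != 0;
    }
}
```

With flags = CODEC_CONFIG | END_OF_STREAM (6), the equality check returns false while the bitwise check returns true.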

Audio hardware encoding

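At the same time, the PCM data recorded by the microphone is encoded into AAC. Likewise, before encoding starts, the encoder must be configured: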

int channelCount = channelConfig == AudioFormat.CHANNEL_IN_MONO ? 1 : 2;
// createAudioFormat already sets KEY_CHANNEL_COUNT
MediaFormat mediaFormat = MediaFormat.createAudioFormat(MIMETYPE_AUDIO_AAC, sampleRateInHz, channelCount);
// AAC LC, the profile the ADTS header below also assumes
mediaFormat.setInteger(MediaFormat.KEY_AAC_PROFILE, MediaCodecInfo.CodecProfileLevel.AACObjectLC);
mediaFormat.setInteger(MediaFormat.KEY_BIT_RATE, 64000);
// Size the input buffers from the same recording parameters as the format above
mediaFormat.setInteger(MediaFormat.KEY_MAX_INPUT_SIZE, AudioRecord.getMinBufferSize(sampleRateInHz, channelConfig, DEFAULT_ENCODING) * 3);
try {
    mMediaCodec = MediaCodec.createEncoderByType(MIMETYPE_AUDIO_AAC);
    mMediaCodec.configure(mediaFormat, null, null, MediaCodec.CONFIGURE_FLAG_ENCODE);
    mMediaCodec.start();
} catch (Exception e) {
    e.printStackTrace();
}

Likewise, data can then be fed into the encoder:

private void encodeBuffer(@NonNull byte[] buffer, long pts) {
    // MediaCodec dequeue timeouts are in microseconds
    int inputBufferIndex = mMediaCodec.dequeueInputBuffer(TIMEOUT_S);
    if (inputBufferIndex >= 0) {
        ByteBuffer inputBuffer = mMediaCodec.getInputBuffer(inputBufferIndex);
        inputBuffer.clear();
        inputBuffer.limit(buffer.length);
        inputBuffer.put(buffer);
        mMediaCodec.queueInputBuffer(inputBufferIndex, 0, buffer.length, pts, 0);
    }
    MediaCodec.BufferInfo bufferInfo = new MediaCodec.BufferInfo();
    int outputBufferIndex = mMediaCodec.dequeueOutputBuffer(bufferInfo, TIMEOUT_S);
    while (outputBufferIndex >= 0) {
        ByteBuffer outputBuffer = mMediaCodec.getOutputBuffer(outputBufferIndex);
        // flags is a bit field: test the bit instead of comparing with ==
        if ((bufferInfo.flags & MediaCodec.BUFFER_FLAG_CODEC_CONFIG) != 0) {
            // Skip the codec config (AudioSpecificConfig), it carries no audio
            bufferInfo.size = 0;
        }
        if (bufferInfo.size > 0) {
            outputBuffer.position(bufferInfo.offset);
            outputBuffer.limit(bufferInfo.offset + bufferInfo.size);
            bufferInfo.presentationTimeUs = pts;
            // todo: raw AAC frame (no ADTS header), hand it to a callback...
        }
        mMediaCodec.releaseOutputBuffer(outputBufferIndex, false);
        bufferInfo = new MediaCodec.BufferInfo();
        outputBufferIndex = mMediaCodec.dequeueOutputBuffer(bufferInfo, TIMEOUT_S);
    }
}
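encodeBuffer takes pts in microseconds, but the article does not show how it is computed. One option, assuming 16-bit PCM (2 bytes per sample per channel), is to derive it from the amount of audio already queued, so timestamps advance exactly with the audio instead of with the jitter of AudioRecord.read() calls. PcmClock is a hypothetical name used only for this sketch.

```java
// Hypothetical helper: presentation timestamps (microseconds) for 16-bit PCM,
// derived from how many bytes have already been handed to the encoder.
final class PcmClock {
    private final int sampleRate;
    private final int channelCount;
    private long totalBytes;

    PcmClock(int sampleRate, int channelCount) {
        this.sampleRate = sampleRate;
        this.channelCount = channelCount;
    }

    // Returns the PTS of the chunk about to be queued, then advances the clock.
    long nextPtsUs(int chunkBytes) {
        long frames = totalBytes / (2L * channelCount); // 2 bytes per 16-bit sample
        long ptsUs = frames * 1_000_000L / sampleRate;
        totalBytes += chunkBytes;
        return ptsUs;
    }
}
```

For 44100 Hz stereo and 4096-byte chunks, the first three calls return 0, 23219 and 46439.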

As you can see, audio and video encoding follow exactly the same flow. With the steps above, encoding appears to be done; next comes decoding, which also uses MediaCodec.

Video hardware decoding

Before decoding, set up the decoder:

try {
    mMediaCodec = MediaCodec.createDecoderByType(MediaFormat.MIMETYPE_VIDEO_AVC);
    MediaFormat mediaFormat = MediaFormat.createVideoFormat(MediaFormat.MIMETYPE_VIDEO_AVC, width, height);
    // Decoded frames are rendered directly to this Surface
    mMediaCodec.configure(mediaFormat, surface, null, 0);
    mMediaCodec.start();
} catch (Exception e) {
    throw new RuntimeException(e);
}

Next, decode the encoded H.264 frames and render them to the Surface:

public void decodeAndRenderV(byte[] in, int offset, int length, long pts) {
    // -1 blocks until an input buffer becomes available
    int inputBufferIndex = mMediaCodec.dequeueInputBuffer(-1);
    if (inputBufferIndex >= 0) {
        ByteBuffer inputBuffer = mMediaCodec.getInputBuffer(inputBufferIndex);
        inputBuffer.clear();
        inputBuffer.put(in, offset, length);
        mMediaCodec.queueInputBuffer(inputBufferIndex, 0, length, pts, 0);
    }
    MediaCodec.BufferInfo bufferInfo = new MediaCodec.BufferInfo();
    int outputBufferIndex = mMediaCodec.dequeueOutputBuffer(bufferInfo, TIMEOUT_US);
    while (outputBufferIndex >= 0) {
        // true: render the frame to the Surface passed to configure()
        mMediaCodec.releaseOutputBuffer(outputBufferIndex, true);
        outputBufferIndex = mMediaCodec.dequeueOutputBuffer(bufferInfo, TIMEOUT_US);
    }
}

- What if you want to save the complete YUV data of every frame?

Audio hardware decoding

As before, start with initialization:

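try {
    mMediaCodec = MediaCodec.createDecoderByType(MIMETYPE_AUDIO_AAC);
    // Sample rate and channel count must match the incoming stream
    // (sampleRateInHz and channelCount are assumed to be known here)
    MediaFormat mediaFormat = MediaFormat.createAudioFormat(MIMETYPE_AUDIO_AAC, sampleRateInHz, channelCount);
    // For raw AAC frames, csd-0 must also be set before configure() (see SPS and PPS below)
    mMediaCodec.configure(mediaFormat, null, null, 0);
    // Without start(), dequeueInputBuffer() throws IllegalStateException
    mMediaCodec.start();
} catch (IOException e) {
    throw new RuntimeException(e);
}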

Finally, decode the audio frames and play them (an AudioTrack must be set up first):

public void decodeAndRenderA(byte[] in, int offset, int length, long pts) {
    int inputBufIndex = mMediaCodec.dequeueInputBuffer(TIMEOUT_US);
    if (inputBufIndex >= 0) {
        ByteBuffer dstBuf = mMediaCodec.getInputBuffer(inputBufIndex);
        dstBuf.clear();
        dstBuf.put(in, offset, length);
        mMediaCodec.queueInputBuffer(inputBufIndex, 0, length, pts, 0);
    }
    MediaCodec.BufferInfo info = new MediaCodec.BufferInfo();
    int outputBufferIndex = mMediaCodec.dequeueOutputBuffer(info, TIMEOUT_US);
    while (outputBufferIndex >= 0) {
        ByteBuffer outputBuffer = mMediaCodec.getOutputBuffer(outputBufferIndex);
        // Read only the valid PCM range described by info
        outputBuffer.position(info.offset);
        outputBuffer.limit(info.offset + info.size);
        byte[] outData = new byte[info.size];
        outputBuffer.get(outData);
        if (mAudioTrack != null) {
            mAudioTrack.write(outData, 0, info.size);
        }
        mMediaCodec.releaseOutputBuffer(outputBufferIndex, false);
        outputBufferIndex = mMediaCodec.dequeueOutputBuffer(info, TIMEOUT_US);
    }
}

Where the pitfalls begin

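Now for the important part. Using MediaCodec as above, you can hardware-encode and decode well-formed audio and video frames. In real applications, however, both pushing and pulling streams hide plenty of pitfalls, for example: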

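- The encoded image comes out in black and white
- ffmpeg reports a "non-existing PPS 0 referenced" error when pushing the video stream
- After frames with an ADTS header are pushed successfully with ffmpeg, pushing the video stream keeps returning error code -1094995529
- AAC hardware decoding keeps throwing IllegalStateException
- When hardware-decoding AAC frames, dequeueOutputBuffer always returns -1 (MediaCodec.INFO_TRY_AGAIN_LATER)
- The header of the data received when pulling a stream changes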
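About the -1094995529 above: FFmpeg builds its error codes by negating a four-character tag (its MKTAG and FFERRTAG macros), and this value decodes to "INDA", which FFmpeg defines as AVERROR_INVALIDDATA. The small Java mirror below (class name hypothetical) only reproduces that arithmetic; it tells you FFmpeg rejected the input as invalid data, not why.

```java
// Mirrors FFmpeg's MKTAG/FFERRTAG macros: error codes are negated four-character tags.
final class FfmpegErr {
    private FfmpegErr() {}

    static int mkTag(char a, char b, char c, char d) {
        return a | (b << 8) | (c << 16) | (d << 24);
    }

    static int ffErrTag(char a, char b, char c, char d) {
        return -mkTag(a, b, c, d);
    }

    // Turns a negative FFmpeg error code back into its four-character tag.
    static String tagOf(int errorCode) {
        int tag = -errorCode;
        return new String(new char[] {
            (char) (tag & 0xFF), (char) ((tag >> 8) & 0xFF),
            (char) ((tag >> 16) & 0xFF), (char) ((tag >>> 24) & 0xFF)
        });
    }
}
```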

These are only the ones that left the deepest impression; I won't list the rest. Before tackling any of them, you need to understand SPS, PPS, and ADTS.

SPS and PPS

Getting the SPS and PPS when encoding H.264 with MediaCodec in synchronous mode:

int outputBufferIndex = mMediaCodec.dequeueOutputBuffer(bufferInfo, TIMEOUT_S);
if (outputBufferIndex == MediaCodec.INFO_OUTPUT_FORMAT_CHANGED) {
    MediaFormat mediaFormat = mMediaCodec.getOutputFormat();
    // csd-0 holds the SPS
    ByteBuffer spsb = mediaFormat.getByteBuffer("csd-0");
    byte[] sps = new byte[spsb.remaining()];
    spsb.get(sps, 0, sps.length);
    // csd-1 holds the PPS
    ByteBuffer ppsb = mediaFormat.getByteBuffer("csd-1");
    byte[] pps = new byte[ppsb.remaining()];
    ppsb.get(pps, 0, pps.length);
    // SPS followed by PPS in a single array
    byte[] sps_pps = new byte[sps.length + pps.length];
    System.arraycopy(sps, 0, sps_pps, 0, sps.length);
    System.arraycopy(pps, 0, sps_pps, sps.length, pps.length);
}
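With sps_pps in hand, one common way to avoid errors like ffmpeg's "non-existing PPS 0 referenced" is to make sure every key frame is preceded by the parameter sets, so a receiver that joins mid-stream never sees a slice before its PPS. This is a sketch under that assumption, not necessarily what your server needs; ParameterSetCache and its methods are hypothetical names.

```java
// Hypothetical helper: caches SPS + PPS (e.g. the sps_pps array above) and prepends
// them to every IDR frame so late-joining receivers get the parameter sets first.
final class ParameterSetCache {
    private byte[] spsPps = new byte[0];

    void update(byte[] spsPps) {
        this.spsPps = spsPps.clone();
    }

    // keyFrame: whether the encoded frame is an IDR frame (e.g. BUFFER_FLAG_KEY_FRAME set).
    byte[] wrap(byte[] frame, boolean keyFrame) {
        if (!keyFrame || spsPps.length == 0) {
            return frame; // non-key frames (or no cached config) pass through untouched
        }
        byte[] out = new byte[spsPps.length + frame.length];
        System.arraycopy(spsPps, 0, out, 0, spsPps.length);
        System.arraycopy(frame, 0, out, spsPps.length, frame.length);
        return out;
    }
}
```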

Conversely, how does the H.264 decoding side get the SPS and PPS?

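Encoded H.264 frame data normally begins with a start code, either the three bytes 00 00 01 or the four bytes 00 00 00 01. The byte right after the start code (the NAL unit header, called code below) identifies the type of the frame data: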

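| code | nalu type | Description |
|---|---|---|
| | 0 | Undefined |
| 0x01 | 1 | Coded slice of a non-IDR picture, no data partitioning (typically a B frame) |
| 0x41 | 1 | Coded slice of a non-IDR picture, no data partitioning (typically a P frame) |
| | 2 | Slice data partition A |
| | 3 | Slice data partition B |
| | 4 | Slice data partition C |
| 0x65 | 5 | Coded slice of an IDR picture (key frame, I frame) |
| 0x06 | 6 | Supplemental enhancement information (SEI) |
| 0x67 | 7 | SPS |
| 0x68 | 8 | PPS |
| | 9 | Access unit delimiter |
| | 10 | End of sequence |
| | 11 | End of stream |
| | 12 | Filler data |
| | 13-23 | Reserved |
| | 24 | STAP-A, single-time aggregation packet |
| | 25 | STAP-B, single-time aggregation packet |
| | 26 | MTAP16, multi-time aggregation packet |
| | 27 | MTAP24, multi-time aggregation packet |
| | 28 | FU-A, fragmentation unit |
| | 29 | FU-B, fragmentation unit |
| | 30-31 | Undefined |

Types 24-29 are not produced by the encoder; they are RTP packetization types defined in RFC 6184.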

nalu type = code & 0x1F

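Because device manufacturers differ, the code right after the start code may show up as the bare type number (the decimal values in the table) on some devices and as the full header byte (hexadecimal values such as 0x67) on others; I fell into this trap myself. Masking with 0x1F yields the nalu type in both cases.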
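A minimal sketch of the decoding-side lookup: scan for both start-code forms and take the low 5 bits of the byte that follows, which gives the right type whatever the upper bits of the header byte are (an SPS written as 0x67 or as 0x27 both yield 7). The class is hypothetical and assumes the buffer is an Annex B byte stream (start codes, not length prefixes).

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical Annex B scanner: finds 00 00 01 / 00 00 00 01 start codes and reports
// each NAL unit's offset, length and type (header byte & 0x1F).
final class AnnexBParser {
    static final class Nal {
        final int offset; // index of the NAL header byte, just after the start code
        final int length; // bytes up to the next start code or the end of the buffer
        final int type;   // nal_unit_type: 5 = IDR slice, 7 = SPS, 8 = PPS, ...

        Nal(int offset, int length, int type) {
            this.offset = offset;
            this.length = length;
            this.type = type;
        }
    }

    private AnnexBParser() {}

    static List<Nal> parse(byte[] data) {
        List<Integer> headers = new ArrayList<>();  // offsets of NAL header bytes
        List<Integer> prefixes = new ArrayList<>(); // offsets where start codes begin
        int i = 0;
        while (i + 2 < data.length) {
            // Emulation prevention guarantees 00 00 01 never occurs inside a NAL unit
            if (data[i] == 0 && data[i + 1] == 0 && data[i + 2] == 1) {
                // A preceding zero byte makes this the 4-byte form
                prefixes.add(i > 0 && data[i - 1] == 0 ? i - 1 : i);
                headers.add(i + 3);
                i += 3;
            } else {
                i++;
            }
        }
        List<Nal> nals = new ArrayList<>();
        for (int k = 0; k < headers.size(); k++) {
            int start = headers.get(k);
            int end = k + 1 < headers.size() ? prefixes.get(k + 1) : data.length;
            if (start < end) {
                nals.add(new Nal(start, end - start, data[start] & 0x1F));
            }
        }
        return nals;
    }
}
```

To pick out the parameter sets, keep the NAL units whose type is 7 (SPS) or 8 (PPS).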

Before decoding AAC frames, MediaCodec likewise needs its codec-specific data, the AAC counterpart of the SPS, set as csd-0:

int sampleIndex = 4;   // sampling frequency index 4 = 44100 Hz
int chanCfgIndex = 2;  // channel configuration 2 = stereo
int profileIndex = 1;  // profile index 1 = AAC LC (audio object type 2)
// 2-byte AudioSpecificConfig used as csd-0
byte[] adtsAudioHeader = new byte[2];
adtsAudioHeader[0] = (byte) (((profileIndex + 1) << 3) | (sampleIndex >> 1));
adtsAudioHeader[1] = (byte) ((byte) ((sampleIndex << 7) & 0x80) | (chanCfgIndex << 3));
ByteBuffer byteBuffer = ByteBuffer.allocate(adtsAudioHeader.length);
byteBuffer.put(adtsAudioHeader);
byteBuffer.flip();
mediaFormat.setByteBuffer("csd-0", byteBuffer);
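The two bytes above form the AAC AudioSpecificConfig: 5 bits of audio object type (profileIndex + 1, so 2 = AAC LC), 4 bits of sampling frequency index and 4 bits of channel configuration, followed by 3 zero bits. The hypothetical helper below computes the same bytes from an actual sample rate instead of a hardcoded index; its rate table follows the MPEG-4 sampling frequency index table later in this article.

```java
// Hypothetical helper: builds the 2-byte AAC AudioSpecificConfig (csd-0):
// 5 bits object type | 4 bits sampling frequency index | 4 bits channel config | 3 zero bits.
final class AacConfig {
    // MPEG-4 sampling frequency table; the array index is the sampling frequency index
    private static final int[] SAMPLE_RATES = {
        96000, 88200, 64000, 48000, 44100, 32000,
        24000, 22050, 16000, 12000, 11025, 8000, 7350
    };
    static final int AOT_AAC_LC = 2; // audio object type 2 = AAC LC

    private AacConfig() {}

    static int sampleRateIndex(int sampleRate) {
        for (int i = 0; i < SAMPLE_RATES.length; i++) {
            if (SAMPLE_RATES[i] == sampleRate) {
                return i;
            }
        }
        throw new IllegalArgumentException("unsupported sample rate: " + sampleRate);
    }

    static byte[] audioSpecificConfig(int audioObjectType, int sampleRate, int channelConfig) {
        int freqIndex = sampleRateIndex(sampleRate);
        byte[] csd = new byte[2];
        csd[0] = (byte) ((audioObjectType << 3) | (freqIndex >> 1));
        csd[1] = (byte) (((freqIndex & 1) << 7) | (channelConfig << 3));
        return csd;
    }
}
```

For 44100 Hz stereo LC this gives 0x12 0x10, the same bytes as the hardcoded version; on Android the result would be passed as mediaFormat.setByteBuffer("csd-0", ByteBuffer.wrap(csd)) before configure().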

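Of course, hardcoding these values is inflexible; instead, csd-0 can be set when the first frame carrying an ADTS header arrives, using the values from that header.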
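Setting csd-0 from the first ADTS frame means reading three header fields: profile (2 bits), sampling frequency index (4 bits) and channel configuration (3 bits, split across data[2] and data[3]). A sketch assuming the 7-byte ADTS header described in the next section; the class and method names are hypothetical.

```java
// Hypothetical helper: derives csd-0 (AudioSpecificConfig) from a 7-byte ADTS header.
final class AdtsToCsd {
    private AdtsToCsd() {}

    static boolean hasAdtsSync(byte[] frame) {
        return frame.length >= 7
                && (frame[0] & 0xFF) == 0xFF
                && (frame[1] & 0xF0) == 0xF0; // 12-bit syncword 0xFFF
    }

    static byte[] csd0FromAdts(byte[] frame) {
        if (!hasAdtsSync(frame)) {
            throw new IllegalArgumentException("no ADTS header");
        }
        int profile = (frame[2] & 0xC0) >> 6;   // ADTS profile index (1 = LC)
        int freqIndex = (frame[2] & 0x3C) >> 2; // sampling frequency index
        int chanCfg = ((frame[2] & 0x01) << 2) | ((frame[3] & 0xC0) >> 6);
        int audioObjectType = profile + 1;      // profile index -> audio object type
        byte[] csd = new byte[2];
        csd[0] = (byte) ((audioObjectType << 3) | (freqIndex >> 1));
        csd[1] = (byte) (((freqIndex & 1) << 7) | (chanCfg << 3));
        return csd;
    }

    // Whole ADTS frame length in bytes, header included (13 bits spread over data[3..5]).
    static int frameLength(byte[] frame) {
        return ((frame[3] & 0x03) << 11) | ((frame[4] & 0xFF) << 3) | ((frame[5] & 0xE0) >> 5);
    }
}
```

Once csd-0 is set, decoders are generally fed raw AAC frames, so one option is to skip the header (7 bytes when protection_absent is 1) before queueing; setting MediaFormat.KEY_IS_ADTS to 1 is the alternative when the decoder should consume the headers itself.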

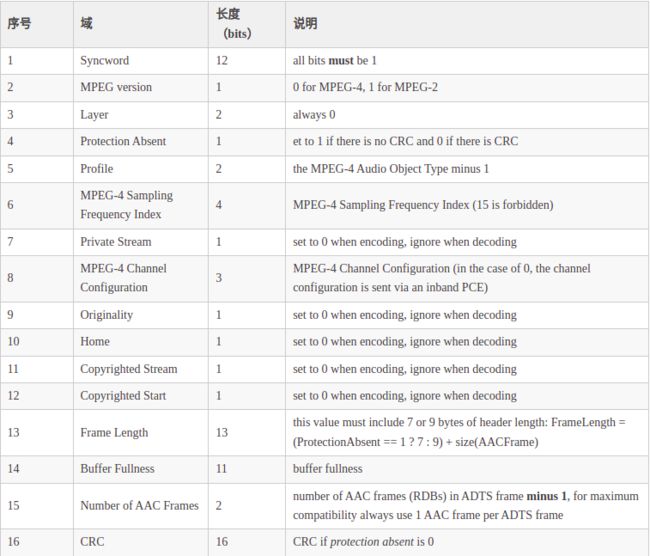

ADTS

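Encoded AAC data commonly has an ADTS header prepended to each frame. The method below writes a 7-byte ADTS header into the start of data: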

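// Writes a 7-byte ADTS header into data[0..6]; packetLen = raw AAC length + 7
private void addADTStoData(byte[] data, int packetLen) {
    int profile = 2;  // AAC LC (audio object type 2)
    int freqIdx = 4;  // 44100 Hz
    int chanCfg = 2;  // stereo
    data[0] = (byte) 0xFF;  // syncword 0xFFF, high 8 bits
    data[1] = (byte) 0xF9;  // rest of syncword, MPEG-2, layer 0, no CRC
    data[2] = (byte) (((profile - 1) << 6) + (freqIdx << 2) + (chanCfg >> 2));
    data[3] = (byte) (((chanCfg & 3) << 6) + (packetLen >> 11));
    data[4] = (byte) ((packetLen & 0x7FF) >> 3);
    data[5] = (byte) (((packetLen & 7) << 5) + 0x1F);
    data[6] = (byte) 0xFC;  // buffer fullness 0x7FF (VBR), one raw data block
}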
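A detail that is easy to get wrong with addADTStoData: packetLen is the length of the whole ADTS frame, that is the raw AAC payload plus the 7 header bytes, and data must have room for both. The hypothetical wrapper below applies the same bit layout but allocates the array itself, taking profile, frequency index and channel configuration as parameters instead of hardcoding them.

```java
// Hypothetical wrapper around the addADTStoData bit layout: returns header + payload.
final class AdtsPacker {
    static final int ADTS_HEADER_SIZE = 7;

    private AdtsPacker() {}

    // profile: audio object type (2 = AAC LC); freqIdx and chanCfg as in the tables below
    static byte[] pack(byte[] rawAac, int profile, int freqIdx, int chanCfg) {
        int packetLen = rawAac.length + ADTS_HEADER_SIZE; // header counts toward the length
        byte[] packet = new byte[packetLen];
        packet[0] = (byte) 0xFF;
        packet[1] = (byte) 0xF9; // syncword, MPEG-2, layer 0, no CRC
        packet[2] = (byte) (((profile - 1) << 6) + (freqIdx << 2) + (chanCfg >> 2));
        packet[3] = (byte) (((chanCfg & 3) << 6) + (packetLen >> 11));
        packet[4] = (byte) ((packetLen & 0x7FF) >> 3);
        packet[5] = (byte) (((packetLen & 7) << 5) + 0x1F);
        packet[6] = (byte) 0xFC;
        System.arraycopy(rawAac, 0, packet, ADTS_HEADER_SIZE, rawAac.length);
        return packet;
    }
}
```

For a 100-byte AAC LC frame at 44100 Hz stereo, the header comes out as FF F9 50 80 0D 7F FC and the packet is 107 bytes long.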

The algorithm above follows the ADTS header format.

Several of its fields take values defined by the specification:

- [5] Profile

| index | profile |
|---|---|
| 0 | main profile |
| 1 | low complexity profile (LC) |
| 2 | scalable sampling rate profile (SSR) |
| 3 | (reserved) |

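This explains why the profile variable in the algorithm above is decremented by 1 when writing the third byte: profile = 2 is the MPEG-4 audio object type for AAC LC, and subtracting 1 gives its index (1) in this table.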

- [6] MPEG-4 Sampling Frequency Index

| index | sample rate |
|---|---|
| 0 | 96000 Hz |
| 1 | 88200 Hz |
| 2 | 64000 Hz |
| 3 | 48000 Hz |
| 4 | 44100 Hz |
| 5 | 32000 Hz |
| 6 | 24000 Hz |
| 7 | 22050 Hz |
| 8 | 16000 Hz |
| 9 | 12000 Hz |
| 10 | 11025 Hz |
| 11 | 8000 Hz |
| 12 | 7350 Hz |
| 13 | Reserved |
| 14 | Reserved |
| 15 | frequency is written explicitly |

- [8] MPEG-4 Channel Configuration

| index | channel |
|---|---|
| 0 | Defined in AOT Specific Config |
| 1 | 1 channel: front-center |
| 2 | 2 channels: front-left, front-right |
| 3 | 3 channels: front-center, front-left, front-right |
| 4 | 4 channels: front-center, front-left, front-right, back-center |
| 5 | 5 channels: front-center, front-left, front-right, back-left, back-right |
| 6 | 6 channels: front-center, front-left, front-right, back-left, back-right, LFE-channel |
| 7 | 8 channels: front-center, front-left, front-right, side-left, side-right, back-left, back-right, LFE-channel |
| 8-15 | Reserved |