Real-time incremental sync with Canal + Kafka + Flink, Part 1: mysqlTokafka code implementation

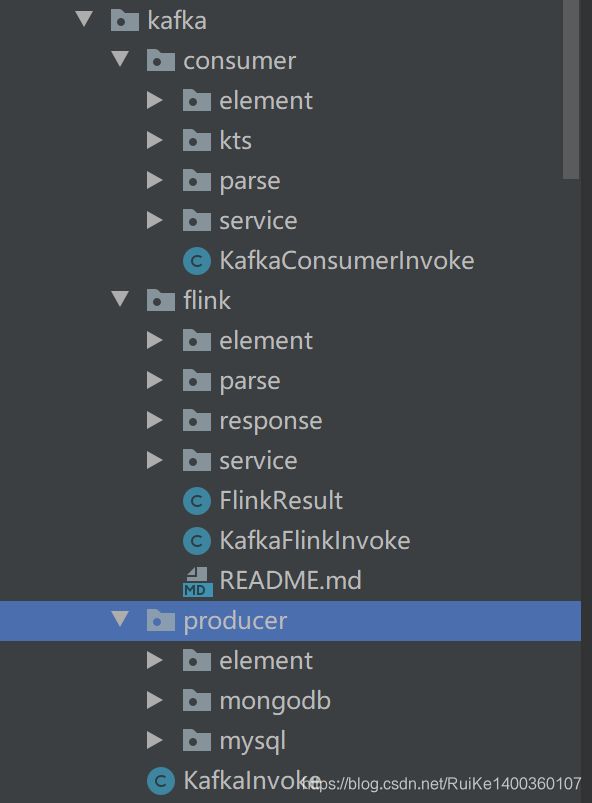

我们先来看一个代码架构图:

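Feature overview:

The goal is a configurable, monitorable feature that listens for changes to MySQL databases and tables in real time, pushes the changed data to Kafka, and writes it into new databases and tables in real time. It uses the Canal sync middleware, the Kafka message queue, the Flink real-time stream-processing engine, and concurrent programming. The series is split into two parts, mysqlTokafka and kafkaTomysql; this article implements the first part: pushing MySQL data to Kafka.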

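I. Overview of the Kafka producer-side code

(1) Kafka invoker

This class decides whether to call the mysql2kafka producer or the kafka2mysql consumer; the consumer works together with Flink to process messages and write them to the database. If the configured engine type is Flink, it hands off to KafkaFlinkInvoke; otherwise it chooses the invoker from the reader and writer plugin types.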
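
To make the routing rules easier to follow, here is a minimal, self-contained sketch of the decision `loadInvoke()` makes. It is not the project's code: the Spring bean lookup is replaced by returning the bean's simple name, and the constant values (`"flink"`, `"kafka"`, `"dts"`, `"mysql-kafka"`, `"mongodb-kafka"`) are assumptions standing in for `Common.KafkaEngineType.FLINK`, `AgentCommon.PluginType.*` and `Common.ComponentType.*`, whose real values the article does not show.

```java
public class InvokerRoutingSketch {

    // Assumed stand-ins for the project's constants (real values are not shown in the article).
    static final String ENGINE_FLINK = "flink";
    static final String PLUGIN_KAFKA = "kafka";
    static final String PLUGIN_DTS = "dts";
    static final String MYSQL_KAFKA = "mysql-kafka";
    static final String MONGODB_KAFKA = "mongodb-kafka";

    /** Returns the simple class name of the invoker that would be selected. */
    public static String route(String engineType, String readerType, String writerType) {
        // 1. The Flink engine takes precedence over the reader/writer pair.
        if (ENGINE_FLINK.equalsIgnoreCase(engineType)) {
            return "KafkaFlinkInvoke";
        }
        String pluginType = readerType + "-" + writerType;
        // 2. Kafka or DTS as the source: the consumer side (kafka2mysql).
        if (PLUGIN_KAFKA.equalsIgnoreCase(readerType) || PLUGIN_DTS.equalsIgnoreCase(readerType)) {
            return "KafkaConsumerInvoke";
        }
        // 3. Kafka as the target: pick the producer by an exact match on "reader-writer".
        if (PLUGIN_KAFKA.equals(writerType)) {
            if (MONGODB_KAFKA.equals(pluginType)) {
                return "Mongodb2KafkaInvoke";
            }
            if (MYSQL_KAFKA.equals(pluginType)) {
                return "Mysql2KafkaInvoke";
            }
        }
        throw new IllegalArgumentException("Unknown kafka pluginType:" + pluginType);
    }

    public static void main(String[] args) {
        System.out.println(route(null, "mysql", "kafka"));    // Mysql2KafkaInvoke
        System.out.println(route("FLINK", "kafka", "mysql")); // KafkaFlinkInvoke
        System.out.println(route("", "kafka", "mysql"));      // KafkaConsumerInvoke
    }
}
```

Matching the full `reader-writer` string exactly keeps a new plugin pair from being routed to the wrong invoker by accident; anything unrecognized fails fast with an exception, as in the original.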

```java
@Component("KafkaInvoke")
@Scope("prototype")
public class KafkaInvoke extends AbstractMonitorComponentInvoke {

    @Override
    public ComponentResult invoke() throws SyDevOpsRuntimeException {
        return loadInvoke().invoke();
    }

    @Override
    public ComponentResult monitor() throws SyDevOpsRuntimeException {
        return loadInvoke().monitor();
    }

    @Override
    public ComponentResult stop() throws SyDevOpsRuntimeException {
        return loadInvoke().stop();
    }

    @Override
    public ComponentResult restart() throws SyDevOpsRuntimeException {
        return loadInvoke().restart();
    }

    /**
     * Picks the concrete invoker from the component spec: the Flink engine, a Kafka/DTS
     * consumer, or a producer selected by the "reader-writer" plugin pair.
     */
    private AbstractMonitorComponentInvoke loadInvoke() {
        AbstractMonitorComponentInvoke invokerBean;
        try {
            Map componentSpec = JsonUtil.fromJson(parameters.getComponentSpec(), Map.class);
            Map configuration = (Map) componentSpec.get(Common.ComponentKey.CONFIGURATION);
            Map readerStr = (Map) configuration.get(Common.ComponentKey.READER);
            Map writerStr = (Map) configuration.get(Common.ComponentKey.WRITER);
            Map setting = (Map) configuration.get(Common.ComponentKey.SETTING);
            String engineType = MapParamUtil.getStringValue(setting, Common.ComponentKey.ENGINE_TYPE);

            if (Common.KafkaEngineType.FLINK.equalsIgnoreCase(engineType)) {
                invokerBean = SpringContextUtil.getBean(KafkaFlinkInvoke.class.getSimpleName(),
                        KafkaFlinkInvoke.class);
            }
            else {
                String readerType = readerStr.get(Common.ComponentKey.PLUGIN).toString();
                String writerType = writerStr.get(Common.ComponentKey.PLUGIN).toString();
                String pluginType = readerType + "-" + writerType;
                if (StringUtils.equalsAnyIgnoreCase(readerType, AgentCommon.PluginType.KAFKA, AgentCommon.PluginType.DTS)) {
                    // Kafka/DTS is the source: consumer side
                    invokerBean = SpringContextUtil.getBean(KafkaConsumerInvoke.class.getSimpleName(),
                            KafkaConsumerInvoke.class);
                }
                else if (AgentCommon.PluginType.KAFKA.equals(writerType)) {
                    // Kafka is the target: producer side, chosen by an exact "reader-writer" match
                    if (Common.ComponentType.COMPONENT_MONGODB_KAFKA.equals(pluginType)) {
                        invokerBean = SpringContextUtil.getBean(Mongodb2KafkaInvoke.class.getSimpleName(),
                                Mongodb2KafkaInvoke.class);
                    }
                    else if (Common.ComponentType.COMPONENT_MYSQL_KAFKA.equals(pluginType)) {
                        invokerBean = SpringContextUtil.getBean(Mysql2KafkaInvoke.class.getSimpleName(),
                                Mysql2KafkaInvoke.class);
                    }
                    else {
                        throw new SyDevOpsRuntimeException("Unknown kafka pluginType:" + pluginType);
                    }
                }
                else {
                    throw new SyDevOpsRuntimeException("Unknown kafka pluginType:" + pluginType);
                }
            }
            invokerBean.init(parameters);
        }
        catch (Exception e) {
            throw new SyDevOpsRuntimeException(e.getMessage(), e);
        }
        return invokerBean;
    }
}
```

(2) Producer: Mysql2KafkaInvoke implementation

```java
@Component("Mysql2KafkaInvoke")
@Scope("prototype")
public class Mysql2KafkaInvoke extends AbstractMonitorComponentInvoke {

    private static final Logger PRINT = LoggerFactory.getLogger(Mysql2KafkaInvoke.class);

    @Autowired
    private Mysql2KafkaElementParser mysql2KafkaElementParser;

    @Autowired
    private Mysql2KafkaService mysql2KafkaService;

    /**
     * Concrete invoke() that KafkaInvoke dispatches to: parses the configuration,
     * initializes the sync information, and starts Canal synchronization.
     *
     * @return the invocation result (RUNNING on success)
     * @throws SyDevOpsRuntimeException if parsing or startup fails
     */
    @Override
    public ComponentResult invoke() throws SyDevOpsRuntimeException {
        ComponentResult result = new ComponentResult();
        result.setInvokeResult(ComponentResult.RUNNING);
        try {
            CommonContext.getLogger().makeRunLog(LoggerLevel.INFO, "The mysql2kafka job is starting ...", PRINT);
            // Parse the spec: data sources, default Kafka topic, Canal install path, Canal settings, task IDs, etc.
            mysql2KafkaElementParser.parse(getParameters());
            mysql2KafkaService.init(mysql2KafkaElementParser.getMysql2KafkaElement());
            mysql2KafkaService.start();
            result.setInvokeMsg("Execute mysql2KafkaInvoke success");
            CommonContext.getLogger().makeRunLog(LoggerLevel.INFO, "The mysql2kafka job is started", PRINT);
        }
        catch (Exception e) {
            throw new SyDevOpsRuntimeException(e.getMessage(), e);
        }
        return result;
    }

    /**
     * Implements the parent's monitor hook, which the scheduler calls for the whole
     * lifetime of the job: RUNNING while the Canal process is alive, FAIL otherwise.
     *
     * @return the monitor result
     * @throws SyDevOpsRuntimeException if parsing or initialization fails
     */
    @Override
    public ComponentResult monitor() throws SyDevOpsRuntimeException {
        ComponentResult result = new ComponentResult();
        try {
            mysql2KafkaElementParser.parse(getParameters());
            mysql2KafkaService.init(mysql2KafkaElementParser.getMysql2KafkaElement());
            Map monitor = mysql2KafkaService.monitor();
            if (MapParamUtil.getIntValue(monitor, "result") == 0) {
                result.setInvokeResult(ComponentResult.RUNNING);
                result.setInvokeMsg("Execute monitor success");
            }
            else {
                result.setInvokeResult(ComponentResult.FAIL);
                result.setInvokeMsg("Execute monitor fail");
            }
        }
        catch (Exception e) {
            throw new SyDevOpsRuntimeException(e.getMessage(), e);
        }
        return result;
    }

    /**
     * Workflow: stop the task.
     *
     * @return SUCCESS if the Canal instance was removed, FAIL otherwise
     * @throws SyDevOpsRuntimeException if parsing or initialization fails
     */
    @Override
    public ComponentResult stop() throws SyDevOpsRuntimeException {
        ComponentResult result = new ComponentResult();
        try {
            CommonContext.getLogger().makeRunLog(LoggerLevel.INFO, "The mysql2kafka job is stopping...", PRINT);
            mysql2KafkaElementParser.parse(getParameters());
            mysql2KafkaService.init(mysql2KafkaElementParser.getMysql2KafkaElement());
            if (mysql2KafkaService.stop()) {
                result.setInvokeResult(ComponentResult.SUCCESS);
                result.setInvokeMsg("Execute mysql2kafka stop success");
            }
            else {
                result.setInvokeResult(ComponentResult.FAIL);
                result.setInvokeMsg("Execute mysql2kafka stop fail");
            }
            CommonContext.getLogger().makeRunLog(LoggerLevel.INFO, "The mysql2kafka job is stopped", PRINT);
        }
        catch (Exception e) {
            throw new SyDevOpsRuntimeException(e.getMessage(), e);
        }
        return result;
    }

    /**
     * Workflow: restart the task (stop, then start again).
     *
     * @return the invocation result (RUNNING on success)
     * @throws SyDevOpsRuntimeException if the restart fails
     */
    @Override
    public ComponentResult restart() throws SyDevOpsRuntimeException {
        ComponentResult result = new ComponentResult();
        result.setInvokeResult(ComponentResult.RUNNING);
        CommonContext.getLogger().makeRunLog(LoggerLevel.INFO, "The mysql2kafka job is restarting...", PRINT);
        try {
            mysql2KafkaElementParser.parse(getParameters());
            mysql2KafkaService.init(mysql2KafkaElementParser.getMysql2KafkaElement());
            mysql2KafkaService.restart();
            result.setInvokeMsg("Execute mysql2kafka restart success");
            CommonContext.getLogger().makeRunLog(LoggerLevel.INFO, "The mysql2kafka job is started", PRINT);
        }
        catch (Exception e) {
            throw new SyDevOpsRuntimeException(e.getMessage(), e);
        }
        return result;
    }
}
```
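
monitor() ultimately relies on `Mysql2KafkaService.checkCanalStatus()`, which reads `bin/canal.pid` on the Canal host over SSH and treats a `ps -f -p $PID | wc -l` result of 1 (only the header line) as a dead process. When the check can run on the same host as Canal, the JDK's `ProcessHandle` API (Java 9+) answers the same question without shelling out. This is a minimal sketch under that assumption (local access to the pid file); the class and method names are hypothetical, and unlike the original it does not delete a stale pid file.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Optional;

public class CanalPidCheckSketch {

    /**
     * Returns true if the pid file exists, holds a numeric pid, and that process is alive.
     * A missing or malformed pid file counts as "not running", like the original's catch-all.
     */
    public static boolean isAlive(Path pidFile) {
        try {
            String content = new String(Files.readAllBytes(pidFile), StandardCharsets.UTF_8).trim();
            long pid = Long.parseLong(content);
            Optional<ProcessHandle> handle = ProcessHandle.of(pid);
            return handle.map(ProcessHandle::isAlive).orElse(false);
        } catch (IOException | NumberFormatException e) {
            return false;
        }
    }

    public static void main(String[] args) throws IOException {
        Path pidFile = Files.createTempFile("canal", ".pid");
        // Write this JVM's own pid so the check has a process that is certainly alive.
        Files.write(pidFile, String.valueOf(ProcessHandle.current().pid()).getBytes(StandardCharsets.UTF_8));
        System.out.println(isAlive(pidFile)); // true
        Files.delete(pidFile);
        System.out.println(isAlive(pidFile)); // false: the file no longer exists
    }
}
```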

(3) Producer configuration parsing

Parses the mysql2kafka task configuration during initialization.
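
The format-related rules spread across `parseDataFormatType`, `parseDataFormat` and `parseSetting` combine into a small decision table. The sketch below restates them as one pure function so the outcomes are easy to check. It is a simplification under assumptions: the writer and setting sections are plain `Map<String, String>` with hypothetical keys (`dataFormatType`, `colDelimiter`, `dataFormat`, `operaType`) standing in for the `Common.ComponentKey` constants, and the `AscIITransform` step is replaced by a plain empty check.

```java
import java.util.HashMap;
import java.util.Map;

public class FormatRulesSketch {

    /** The three values the parser derives from the writer and setting sections. */
    public static final class FormatSettings {
        public final String colDelimiter;
        public final String dataFormat;
        public final String operaType;

        FormatSettings(String colDelimiter, String dataFormat, String operaType) {
            this.colDelimiter = colDelimiter;
            this.dataFormat = dataFormat;
            this.operaType = operaType;
        }
    }

    public static FormatSettings resolve(Map<String, String> writer, Map<String, String> setting) {
        String type = writer.get("dataFormatType");
        if ("STRING".equalsIgnoreCase(type)) {
            // Delimited text: configured delimiter (default ","), OGG layout, operaType fixed to "I".
            String delimiter = writer.get("colDelimiter");
            String col = (delimiter == null || delimiter.isEmpty()) ? "," : delimiter;
            return new FormatSettings(col, "OGG", "I");
        }
        if ("JSON".equalsIgnoreCase(type)) {
            // JSON: delimiter is always ","; dataFormat falls back to a single space when empty.
            String format = writer.get("dataFormat");
            String dataFormat = (format == null || format.isEmpty()) ? " " : format;
            return new FormatSettings(",", dataFormat, setting.get("operaType"));
        }
        throw new IllegalArgumentException("Unknown dataFormatType:" + type);
    }

    public static void main(String[] args) {
        Map<String, String> writer = new HashMap<>();
        writer.put("dataFormatType", "string");
        FormatSettings s = resolve(writer, new HashMap<>());
        System.out.println(s.colDelimiter + " " + s.dataFormat + " " + s.operaType); // , OGG I
    }
}
```

Seen this way, only the JSON format carries a user-chosen operaType, which matches the original comment that JSON is the only format that can be audited.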

```java
@Component
@Scope("prototype")
public class Mysql2KafkaElementParser extends AbstractElementParser {

    private Mysql2KafkaElement mysql2KafkaElement;
    private Map reader;
    private Map writer;
    private Map setting;

    private static final String KAFKA_DATATYPE_STRING = "STRING";
    private static final String KAFKA_DATATYPE_JSON = "JSON";
    private static final String KAFKA_DATATYPE_OGG = "OGG";

    public Mysql2KafkaElement getMysql2KafkaElement() {
        return mysql2KafkaElement;
    }

    public void parse(ComponentParameter parameters) {
        init(parameters);
        mysql2KafkaElement = Mysql2KafkaElement.getInstance();
        scriptParse();
        parseDataSource();
        parseDefaultKafkaTopic();
        parseCanalPath();
        parseTaskInstId();
        parseWorkitemId();
        parseCanalInstanceName();
        parseDataFormatType();
        parseDataFormat();
        parseSetting();
        paseTables();
        parseDatabasesAndTopic();
    }

    /**
     * Parse the component spec into its setting, reader and writer sections.
     */
    private void scriptParse() {
        try {
            Map componentSpec = JsonUtil.fromJson(parameters.getComponentSpec(), Map.class);
            Map configuration = (Map) componentSpec.get(Common.ComponentKey.CONFIGURATION);
            setting = (Map) configuration.get(Common.ComponentKey.SETTING);
            Map origintStr = (Map) configuration.get(Common.ComponentKey.READER);
            reader = (Map) origintStr.get(Common.ComponentKey.PARAMETER);
            Map targetStr = (Map) configuration.get(Common.ComponentKey.WRITER);
            writer = (Map) targetStr.get(Common.ComponentKey.PARAMETER);
        }
        catch (Exception e) {
            throw new SyDevOpsRuntimeException("Failed to parse script:" + e.getMessage(), e);
        }
    }

    /**
     * Parse the data sources: source MySQL, target Kafka, and the Canal host.
     */
    private void parseDataSource() {
        try {
            DataSource oriDatasource = getDataSource(reader.get(Common.ComponentKey.DATASOURCE).toString());
            mysql2KafkaElement.setOriDatasource(oriDatasource);
            DataSource tarDatasource = getDataSource(writer.get(Common.ComponentKey.DATASOURCE).toString());
            mysql2KafkaElement.setTarDatasource(tarDatasource);
            DataSource canalDatasource = getDataSource(writer.get(Common.ComponentKey.CANALDATASOURCE).toString());
            mysql2KafkaElement.setCanalDatasouce(canalDatasource);
        }
        catch (Exception e) {
            throw new SyDevOpsRuntimeException("Failed to parse datasource:" + e.getMessage(), e);
        }
    }

    /**
     * Parse the default Kafka topic.
     */
    private void parseDefaultKafkaTopic() {
        try {
            mysql2KafkaElement.setDefaultKafkaTopic(writer.get(Common.ComponentKey.DEFAULTKAFKATOPIC).toString());
        }
        catch (Exception e) {
            throw new SyDevOpsRuntimeException("Failed to parse kafkaTopic:" + e.getMessage(), e);
        }
    }

    /**
     * Parse the Canal install path (a trailing "/" is removed).
     */
    private void parseCanalPath() {
        try {
            String canalPath = MapParamUtil.getStringValue(writer, Common.ComponentKey.CANALPATH);
            if (canalPath.endsWith("/")) {
                canalPath = canalPath.substring(0, canalPath.length() - 1);
            }
            mysql2KafkaElement.setCanalPath(canalPath);
        }
        catch (Exception e) {
            throw new SyDevOpsRuntimeException("Failed to parse canalPath:" + e.getMessage(), e);
        }
    }

    /**
     * Parse the task instance ID (required).
     */
    private void parseTaskInstId() {
        try {
            String taskInstId = parameters.getTaskInstId();
            if (StringUtils.isNotEmpty(taskInstId)) {
                mysql2KafkaElement.setTaskInstId(taskInstId);
            }
            else {
                throw new SyDevOpsRuntimeException("TaskInstId == null");
            }
        }
        catch (Exception e) {
            throw new SyDevOpsRuntimeException("Failed to parse taskInstId:" + e.getMessage(), e);
        }
    }

    /**
     * Parse the work item ID (required).
     */
    private void parseWorkitemId() {
        try {
            String workitemId = parameters.getWorkitemId();
            if (StringUtils.isNotEmpty(workitemId)) {
                mysql2KafkaElement.setWorkitemId(workitemId);
            }
            else {
                throw new SyDevOpsRuntimeException("workitemId == null");
            }
        }
        catch (Exception e) {
            throw new SyDevOpsRuntimeException("Failed to parse workitemId:" + e.getMessage(), e);
        }
    }

    /**
     * Build the Canal instance name: always "MYSQL_KAFKA_" + taskInstId.
     */
    private void parseCanalInstanceName() {
        try {
            String taskInstId = parameters.getTaskInstId();
            mysql2KafkaElement.setInstanceName("MYSQL_KAFKA_" + taskInstId);
        }
        catch (Exception e) {
            throw new SyDevOpsRuntimeException("Failed to parse parseCanalInstanceName:" + e.getMessage(), e);
        }
    }

    /**
     * Parse dataFormatType (STRING/JSON) and the column delimiter.
     */
    private void parseDataFormatType() {
        try {
            String dataFormatType = writer.get(Common.ComponentKey.DATAFORMATTYPE).toString();
            mysql2KafkaElement.setDataFormatType(dataFormatType);
            if (KAFKA_DATATYPE_STRING.equalsIgnoreCase(dataFormatType)) {
                String colDelimiter = writer.get(Common.ComponentKey.COL_DELIMITER).toString();
                String transform = AscIITransform.transform(colDelimiter);
                if (StringUtils.isNotEmpty(transform)) {
                    mysql2KafkaElement.setColDelimiter(colDelimiter);
                }
                else {
                    mysql2KafkaElement.setColDelimiter(",");
                }
            }
            else if (KAFKA_DATATYPE_JSON.equalsIgnoreCase(dataFormatType)) {
                mysql2KafkaElement.setColDelimiter(",");
            }
            else {
                throw new SyDevOpsRuntimeException("Unknown dataFormatType:" + dataFormatType);
            }
        }
        catch (Exception e) {
            throw new SyDevOpsRuntimeException("Failed to parse dataFormatType:" + e.getMessage(), e);
        }
    }

    /**
     * Parse dataFormat: "OGG" for STRING, otherwise the configured value (" " when empty).
     */
    private void parseDataFormat() {
        try {
            String dataFormatType = writer.get(Common.ComponentKey.DATAFORMATTYPE).toString();
            String dataFormat = (String) writer.get(Common.ComponentKey.DATAFORMAT);
            if (StringUtils.isEmpty(dataFormat)) {
                dataFormat = " ";
            }
            if (KAFKA_DATATYPE_STRING.equalsIgnoreCase(dataFormatType)) {
                mysql2KafkaElement.setDataFormat(KAFKA_DATATYPE_OGG);
            }
            else {
                mysql2KafkaElement.setDataFormat(dataFormat);
            }
        }
        catch (Exception e) {
            throw new SyDevOpsRuntimeException("Failed to parse parseDataFormat:" + e.getMessage(), e);
        }
    }

    /**
     * Parse the channel control parameters.
     */
    private void parseSetting() {
        try {
            String dataFormatType = writer.get(Common.ComponentKey.DATAFORMATTYPE).toString();
            if (KAFKA_DATATYPE_STRING.equalsIgnoreCase(dataFormatType)) {
                // For STRING, operaType is fixed to "I"; only the JSON format can be audited
                mysql2KafkaElement.setOperaType("I");
            }
            else {
                mysql2KafkaElement.setOperaType(setting.get(Common.ComponentKey.OPERATYPE).toString());
            }
        }
        catch (Exception e) {
            throw new SyDevOpsRuntimeException("Failed to parse settingParams:" + e.getMessage(), e);
        }
    }

    /**
     * Parse the tables.
     */
    private void paseTables() {
        try {
            List
```

(4) Producer: Mysql2KafkaService implementation

```java
@Component
@Scope("prototype")
public class Mysql2KafkaService extends AbstractInvokeService {

    private static final Logger PRINT = LoggerFactory.getLogger(Mysql2KafkaService.class);

    @Autowired
    private DataIntegWorkitemMapper dataIntegWorkitemMapper;

    private Mysql2KafkaElement element;
    private DataSource oriDatasource;
    // private DataSource tarDatasource;
    private DataSource canalDatasouce;
    private String instancePath;

    public void init(Mysql2KafkaElement mysql2KafkaElement) {
        this.element = mysql2KafkaElement;
        this.oriDatasource = element.getOriDatasource();
        // this.tarDatasource = element.getTarDatasource();
        this.canalDatasouce = element.getCanalDatasouce();
        // Instance directories live under <canalPath>/conf/<instanceName>_<i>
        this.instancePath = element.getCanalPath().concat("/conf/").concat(element.getInstanceName());
    }

    /**
     * Re-uploads the Canal server jar on every start (this jar does the actual Kafka handling).
     *
     * @throws SyDevOpsRuntimeException if the upload fails
     */
    public void replaceCanalServerJar() throws SyDevOpsRuntimeException {
        // Tell the uploader whether this task is split into several work items (shards),
        // so that the shards do not all upload the jar at the same time
        String taskInstId = element.getTaskInstId();
        DataIntegWorkitemExample example = new DataIntegWorkitemExample();
        example.createCriteria().andTaskInstIdEqualTo(Integer.parseInt(taskInstId));
        List dataIntegWorkitems = dataIntegWorkitemMapper.selectByExample(example);
        boolean isSplitWorkitem = dataIntegWorkitems.size() > 1;
        SSHHelper.uploadResource(canalDatasouce, "kafka/mysql", PlugInvokeConstant.CANALSERVER_JAR,
                element.getCanalPath() + "/lib", isSplitWorkitem, element.getWorkitemId(), PRINT);
    }

    /**
     * Starts the Canal task: creates folders at the designated location on the Canal server
     * to hold the instance configuration and related files.
     *
     * @throws SyDevOpsRuntimeException if the source is unreachable or an upload fails
     * @throws InterruptedException if interrupted while running remote commands
     */
    public void start() throws SyDevOpsRuntimeException, InterruptedException {
        replaceCanalServerJar();
        if (!checkCanalStatus()) {
            startCanal();
        }
        // Check connectivity to the source MySQL data source
        String url = oriDatasource.getParamValue(DataSource.Database.URL);
        String username = oriDatasource.getParamValue(DataSource.Database.USERNAME);
        String password = oriDatasource.getParamValue(DataSource.Database.PASSCODE);
        try {
            DatabaseUtil.isValid(DataSource.MYSQL, url, username, password);
        }
        catch (Exception e) {
            throw new SyDevOpsRuntimeException("oriDatasource[" + oriDatasource.getDatasourceName() + "] is not valid",
                    e);
        }
        // Create the instance folder(s) under Canal's conf directory
        String mkdirCmd = buildMkdirCmd();
        SAScriptHelper.mkdir(canalDatasouce, PRINT, mkdirCmd);
        // Upload the instance.properties file(s)
        uploadInstanceProperties(instancePath);
    }

    // "result": "0" = running (success), "1" = error (failure)
    public Map monitor() throws SyDevOpsRuntimeException {
        Map monitorResult = new HashMap();
        try {
            if (!checkCanalStatus()) {
                monitorResult.put("result", "1");
                PRINT.info("Monitor result: mysql2Kafka job " + element.getInstanceName() + " is error");
            }
            else {
                monitorResult.put("result", "0");
                PRINT.info("Monitor result: mysql2Kafka job " + element.getInstanceName() + " is running");
            }
        }
        catch (Exception e) {
            PRINT.warn("Monitor result: mysql2Kafka job " + element.getInstanceName() + " is error: " + e.getMessage(),
                    e);
            monitorResult.put("result", "1");
        }
        return monitorResult;
    }

    /**
     * Stops the Canal task.
     *
     * @return true if the instance folders and logs were removed
     */
    public boolean stop() {
        try {
            // Deleting the instance folders created under Canal's conf directory stops the instance
            String rmInstanceCmd = instancePath + "_*/";
            SAScriptHelper.remove(canalDatasouce, PRINT, rmInstanceCmd);
            // Delete the Canal instance log directories
            String rmLogCmd = element.getCanalPath() + "/logs/" + element.getInstanceName() + "_*/";
            SAScriptHelper.remove(canalDatasouce, PRINT, rmLogCmd);
        }
        catch (Exception e) {
            PRINT.warn("Fail to stop mysql2Kafka:" + e.getMessage(), e);
            return false;
        }
        return true;
    }

    public void restart() throws SyDevOpsRuntimeException, InterruptedException {
        stop();
        start();
    }

    /**
     * Checks whether the Canal server process is alive. "ps -f -p $PID" prints a header line
     * plus the matching process, so a line count of 1 means the process is gone; in that case
     * the stale canal.pid file is removed.
     *
     * @return true if the process recorded in bin/canal.pid is running
     */
    private boolean checkCanalStatus() throws SyDevOpsRuntimeException {
        try {
            String psCmd = "PID=$(cat " + element.getCanalPath() + "/bin/canal.pid) \n" + "ps -f -p $PID |wc -l";
            String result = SAScriptHelper.shell(canalDatasouce, PRINT, psCmd);
            if (Integer.parseInt(result.trim()) == 1) {
                CommonContext.getLogger().makeRunLog(LoggerLevel.INFO, "The canal process is over", PRINT);
                String rmCmd = element.getCanalPath() + "/bin/canal.pid";
                SAScriptHelper.remove(canalDatasouce, PRINT, rmCmd);
                return false;
            }
        }
        catch (Exception e) {
            return false;
        }
        return true;
    }

    /**
     * Starts the Canal server with bin/startup.sh when no running process matches <canalPath>/bin.
     */
    private void startCanal() throws SyDevOpsRuntimeException, InterruptedException {
        String psCmd = "ps -ef|grep jar | grep ".concat(element.getCanalPath().concat("/bin")).concat(" |wc -l");
        String result = SAScriptHelper.shell(canalDatasouce, PRINT, psCmd);
        // A count of 1 is treated as "no Canal process running"
        if (Integer.parseInt(result.trim()) == 1) {
            String startCmd = "sh ".concat(element.getCanalPath().concat("/bin/startup.sh"));
            SAScriptHelper.shell(canalDatasouce, PRINT, startCmd);
        }
    }

    /**
     * Builds the directory list that will hold instance.properties: one directory per
     * database, so there may be several (<instancePath>_0, <instancePath>_1, ...).
     *
     * @return the space-separated directory list passed to mkdir
     */
    private String buildMkdirCmd() {
        String mkdirCmd;
        if (1 == element.getDatabases().length) {
            mkdirCmd = instancePath + "_0";
        }
        else {
            StringBuilder mkdirBuffer = new StringBuilder();
            for (int i = 0; i < element.getDatabases().length; i++) {
                mkdirBuffer.append(" ").append(instancePath).append("_").append(i);
            }
            mkdirCmd = mkdirBuffer.toString();
        }
        return mkdirCmd;
    }

    /**
     * Uploads one instance.properties file per database. Example content:
     * <pre>
     * canal.instance.mysql.slaveId=1235
     * canal.instance.gtidon=false
     * canal.instance.master.address=XX:3306
     * canal.instance.tsdb.enable=false
     * canal.instance.dbUsername=XX
     * canal.instance.dbPassword=XX
     * canal.instance.connectionCharset=UTF-8
     * canal.instance.enableDruid=false
     * canal.instance.filter.regex=test\\.testfang
     * canal.mq.topic=testTopic
     * canal.mq.partition=0
     * </pre>
     *
     * @param instancePath base path of the instance directories (without the _i suffix)
     */
    private void uploadInstanceProperties(String instancePath) {
        for (int i = 0; i < element.getDatabases().length; i++) {
            String uploadPath = instancePath.concat("_").concat(String.valueOf(i));
            CommonContext.getLogger().makeRunLog(LoggerLevel.INFO,
                    "Upload instance.properties to " + uploadPath + " ...", PRINT);
            StringBuilder instanceProperties = buildProperties(i);
            try {
                if (!SSHHelper.transferFile(instanceProperties.toString().getBytes("UTF-8"),
                        PlugInvokeConstant.CANAL_INSTANCE_PROPERTIES, uploadPath, canalDatasouce, null)) {
                    throw new SyDevOpsRuntimeException("Failed to upload canal instance.properties");
                }
            }
            catch (UnsupportedEncodingException e) {
                throw new SyDevOpsRuntimeException(e.getMessage(), e);
            }
        }
        CommonContext.getLogger().makeRunLog(LoggerLevel.INFO, "Upload instance.properties success", PRINT);
    }

    private StringBuilder buildProperties(int i) {
        // Extract the MySQL ip:port from the JDBC URL
        String mysqlinfo;
        String url = oriDatasource.getParamValue("url");
        try {
            mysqlinfo = url.substring(url.indexOf("mysql://") + 8, url.lastIndexOf("/"));
        }
        catch (Exception e) {
            throw new SyDevOpsRuntimeException("url is illegal: " + e.getMessage(), e);
        }
        String username = oriDatasource.getParamValue("username");
        String password = oriDatasource.getParamValue("password");
        StringBuilder instanceProperties = new StringBuilder();
        // slaveId is taskInstId with the database index appended as text
        instanceProperties.append("canal.instance.mysql.slaveId=")
                .append(element.getTaskInstId().concat(String.valueOf(i))).append("\n");
        instanceProperties.append("canal.instance.gtidon=false").append("\n");
        instanceProperties.append("canal.instance.master.address=").append(mysqlinfo).append("\n");
        instanceProperties.append("canal.instance.tsdb.enable=false").append("\n");
        instanceProperties.append("canal.instance.dbUsername=").append(username).append("\n");
        instanceProperties.append("canal.instance.dbPassword=").append(password).append("\n");
        instanceProperties.append("canal.instance.connectionCharset=UTF-8").append("\n");
        instanceProperties.append("canal.instance.enableDruid=false").append("\n");
        instanceProperties.append("canal.instance.filter.regex=").append(element.getDatabases()[i]).append("\n");
        instanceProperties.append("canal.mq.topic=").append(element.getTopicName()[i]).append("\n");
        instanceProperties.append("canal.mq.partition=").append(element.getPartition()[i]).append("\n");
        return instanceProperties;
    }
}
```
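
`buildMkdirCmd()` and `buildProperties()` together produce one Canal instance per database: a directory `<canalPath>/conf/MYSQL_KAFKA_<taskInstId>_<i>` holding its own instance.properties. The sketch below reproduces that text generation as standalone functions, so the output for a given configuration can be inspected without SSH. It is a sketch under assumptions: all inputs are passed in directly (in the article they come from `Mysql2KafkaElement` and the source `DataSource`), the JDBC URL is assumed to look like `jdbc:mysql://host:port/db`, the partition is assumed to be an int, and the class name and sample values are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

public class CanalInstancePropertiesSketch {

    /** Extracts "host:port" from a URL such as jdbc:mysql://10.0.0.1:3306/test. */
    public static String hostPort(String jdbcUrl) {
        int start = jdbcUrl.indexOf("mysql://");
        int end = jdbcUrl.lastIndexOf('/');
        if (start < 0 || end <= start + 8) {
            throw new IllegalArgumentException("url is illegal: " + jdbcUrl);
        }
        return jdbcUrl.substring(start + 8, end);
    }

    /** Builds the instance.properties text for the i-th database filter. */
    public static String build(String taskInstId, int i, String jdbcUrl, String user, String password,
                               String filterRegex, String topic, int partition) {
        StringBuilder sb = new StringBuilder();
        // slaveId is taskInstId with the index appended as text, e.g. "42" + 0 -> "420"
        sb.append("canal.instance.mysql.slaveId=").append(taskInstId).append(i).append('\n');
        sb.append("canal.instance.gtidon=false\n");
        sb.append("canal.instance.master.address=").append(hostPort(jdbcUrl)).append('\n');
        sb.append("canal.instance.tsdb.enable=false\n");
        sb.append("canal.instance.dbUsername=").append(user).append('\n');
        sb.append("canal.instance.dbPassword=").append(password).append('\n');
        sb.append("canal.instance.connectionCharset=UTF-8\n");
        sb.append("canal.instance.enableDruid=false\n");
        sb.append("canal.instance.filter.regex=").append(filterRegex).append('\n');
        sb.append("canal.mq.topic=").append(topic).append('\n');
        sb.append("canal.mq.partition=").append(partition).append('\n');
        return sb.toString();
    }

    /** Lists the per-database instance directories: <instancePath>_0, <instancePath>_1, ... */
    public static List<String> instanceDirs(String instancePath, int databaseCount) {
        List<String> dirs = new ArrayList<>();
        for (int i = 0; i < databaseCount; i++) {
            dirs.add(instancePath + "_" + i);
        }
        return dirs;
    }

    public static void main(String[] args) {
        String props = build("42", 0, "jdbc:mysql://10.0.0.1:3306/test", "canal", "secret",
                "test\\.orders", "testTopic", 0);
        System.out.print(props);
        System.out.println(instanceDirs("/opt/canal/conf/MYSQL_KAFKA_42", 2));
    }
}
```

Because every database gets its own instance, each one also gets its own slaveId, filter regex, topic and partition, which is why the article's element keeps `databases`, `topicName` and `partition` as parallel arrays indexed by `i`.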