Apache Atlas: errors when running the Hive metadata import script import-hive.sh

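Contents

- I. Problem 1

- 1 Problem

- 2 Solution

- II. Problem 2

- 1 Problem

- 2 Solution

- 2.1 Check whether Hive is working

- 2.2 Fix the Hive CLI failing to start

- 2.3 Fix Hive being unable to query databases

- 2.4 Modify hive-site.xml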

I. Problem 1

1 Problem

Running the Hive metadata import script import-hive.sh for Apache Atlas fails:

bash import-hive.sh

Caused by: com.ctc.wstx.exc.WstxParsingException: Illegal character entity: expansion character (code 0x8 at [row,col,system-id]: [3223,96,"file:/opt/soft/apache-hive-3.1.2-bin/conf/hive-site.xml"]

Error log:

2021-07-28T10:00:32,343 ERROR [main] org.apache.hadoop.conf.Configuration - error parsing conf file:/opt/soft/apache-hive-3.1.2-bin/conf/hive-site.xml

com.ctc.wstx.exc.WstxParsingException: Illegal character entity: expansion character (code 0x8

at [row,col,system-id]: [3223,96,"file:/opt/soft/apache-hive-3.1.2-bin/conf/hive-site.xml"]

at com.ctc.wstx.sr.StreamScanner.constructWfcException(StreamScanner.java:621) ~[woodstox-core-5.0.3.jar:5.0.3]

at com.ctc.wstx.sr.StreamScanner.throwParseError(StreamScanner.java:491) ~[woodstox-core-5.0.3.jar:5.0.3]

at com.ctc.wstx.sr.StreamScanner.reportIllegalChar(StreamScanner.java:2456) ~[woodstox-core-5.0.3.jar:5.0.3]

at com.ctc.wstx.sr.StreamScanner.validateChar(StreamScanner.java:2403) ~[woodstox-core-5.0.3.jar:5.0.3]

at com.ctc.wstx.sr.StreamScanner.resolveCharEnt(StreamScanner.java:2369) ~[woodstox-core-5.0.3.jar:5.0.3]

at com.ctc.wstx.sr.StreamScanner.fullyResolveEntity(StreamScanner.java:1515) ~[woodstox-core-5.0.3.jar:5.0.3]

at com.ctc.wstx.sr.BasicStreamReader.nextFromTree(BasicStreamReader.java:2828) ~[woodstox-core-5.0.3.jar:5.0.3]

at com.ctc.wstx.sr.BasicStreamReader.next(BasicStreamReader.java:1123) ~[woodstox-core-5.0.3.jar:5.0.3]

at org.apache.hadoop.conf.Configuration$Parser.parseNext(Configuration.java:3257) ~[hadoop-common-3.1.1.jar:?]

at org.apache.hadoop.conf.Configuration$Parser.parse(Configuration.java:3063) ~[hadoop-common-3.1.1.jar:?]

at org.apache.hadoop.conf.Configuration.loadResource(Configuration.java:2986) [hadoop-common-3.1.1.jar:?]

at org.apache.hadoop.conf.Configuration.loadResources(Configuration.java:2931) [hadoop-common-3.1.1.jar:?]

at org.apache.hadoop.conf.Configuration.getProps(Configuration.java:2806) [hadoop-common-3.1.1.jar:?]

at org.apache.hadoop.conf.Configuration.get(Configuration.java:1460) [hadoop-common-3.1.1.jar:?]

at org.apache.hadoop.hive.conf.HiveConf.getVar(HiveConf.java:4996) [hive-common-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.conf.HiveConf.getVar(HiveConf.java:5069) [hive-common-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.conf.HiveConf.initialize(HiveConf.java:5156) [hive-common-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.conf.HiveConf.<init>(HiveConf.java:5099) [hive-common-3.1.2.jar:3.1.2]

at org.apache.atlas.hive.bridge.HiveMetaStoreBridge.main(HiveMetaStoreBridge.java:141) [hive-bridge-2.1.0.jar:2.1.0]

2021-07-28T10:00:32,345 ERROR [main] org.apache.atlas.hive.bridge.HiveMetaStoreBridge - Import failed

java.lang.RuntimeException: com.ctc.wstx.exc.WstxParsingException: Illegal character entity: expansion character (code 0x8

at [row,col,system-id]: [3223,96,"file:/opt/soft/apache-hive-3.1.2-bin/conf/hive-site.xml"]

at org.apache.hadoop.conf.Configuration.loadResource(Configuration.java:3003) ~[hadoop-common-3.1.1.jar:?]

at org.apache.hadoop.conf.Configuration.loadResources(Configuration.java:2931) ~[hadoop-common-3.1.1.jar:?]

at org.apache.hadoop.conf.Configuration.getProps(Configuration.java:2806) ~[hadoop-common-3.1.1.jar:?]

at org.apache.hadoop.conf.Configuration.get(Configuration.java:1460) ~[hadoop-common-3.1.1.jar:?]

at org.apache.hadoop.hive.conf.HiveConf.getVar(HiveConf.java:4996) ~[hive-common-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.conf.HiveConf.getVar(HiveConf.java:5069) ~[hive-common-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.conf.HiveConf.initialize(HiveConf.java:5156) ~[hive-common-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.conf.HiveConf.<init>(HiveConf.java:5099) ~[hive-common-3.1.2.jar:3.1.2]

at org.apache.atlas.hive.bridge.HiveMetaStoreBridge.main(HiveMetaStoreBridge.java:141) [hive-bridge-2.1.0.jar:2.1.0]

Caused by: com.ctc.wstx.exc.WstxParsingException: Illegal character entity: expansion character (code 0x8

at [row,col,system-id]: [3223,96,"file:/opt/soft/apache-hive-3.1.2-bin/conf/hive-site.xml"]

at com.ctc.wstx.sr.StreamScanner.constructWfcException(StreamScanner.java:621) ~[woodstox-core-5.0.3.jar:5.0.3]

at com.ctc.wstx.sr.StreamScanner.throwParseError(StreamScanner.java:491) ~[woodstox-core-5.0.3.jar:5.0.3]

at com.ctc.wstx.sr.StreamScanner.reportIllegalChar(StreamScanner.java:2456) ~[woodstox-core-5.0.3.jar:5.0.3]

at com.ctc.wstx.sr.StreamScanner.validateChar(StreamScanner.java:2403) ~[woodstox-core-5.0.3.jar:5.0.3]

at com.ctc.wstx.sr.StreamScanner.resolveCharEnt(StreamScanner.java:2369) ~[woodstox-core-5.0.3.jar:5.0.3]

at com.ctc.wstx.sr.StreamScanner.fullyResolveEntity(StreamScanner.java:1515) ~[woodstox-core-5.0.3.jar:5.0.3]

at com.ctc.wstx.sr.BasicStreamReader.nextFromTree(BasicStreamReader.java:2828) ~[woodstox-core-5.0.3.jar:5.0.3]

at com.ctc.wstx.sr.BasicStreamReader.next(BasicStreamReader.java:1123) ~[woodstox-core-5.0.3.jar:5.0.3]

at org.apache.hadoop.conf.Configuration$Parser.parseNext(Configuration.java:3257) ~[hadoop-common-3.1.1.jar:?]

at org.apache.hadoop.conf.Configuration$Parser.parse(Configuration.java:3063) ~[hadoop-common-3.1.1.jar:?]

at org.apache.hadoop.conf.Configuration.loadResource(Configuration.java:2986) ~[hadoop-common-3.1.1.jar:?]

... 8 more

Failed to import Hive Meta Data!!!

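2 Solution

The error says that hive-site.xml contains an illegal character: a character entity that expands to code 0x8, at row 3223, column 96.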
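Hunting for the character by eye can be tedious in a file of several thousand lines. As a rough aid, the sketch below scans text for numeric character references (for example `&#8;`) whose code point XML 1.0 does not allow, and returns the row and column of each one. The function names are made up for this illustration. The assumption that the offending entity is written as a numeric reference comes from the wording of the error ("Illegal character entity"). The column printed here points at the leading `&`, so it may differ by a few characters from the one the parser reports.

```python
import re

# Numeric character references: decimal (&#8;) or hexadecimal (&#x8;).
CHAR_REF = re.compile(r"&#(?:[xX]([0-9a-fA-F]+)|([0-9]+));")


def is_legal_xml_char(code):
    """True if the code point is allowed by the XML 1.0 Char production."""
    return (
        code in (0x9, 0xA, 0xD)
        or 0x20 <= code <= 0xD7FF
        or 0xE000 <= code <= 0xFFFD
        or 0x10000 <= code <= 0x10FFFF
    )


def find_illegal_char_refs(text):
    """Return a list of (row, col, code) for each forbidden character reference.

    row and col are 1-based; col is the position of the '&' that starts
    the reference.
    """
    hits = []
    for row, line in enumerate(text.split("\n"), start=1):
        for match in CHAR_REF.finditer(line):
            hex_digits, dec_digits = match.groups()
            code = int(hex_digits, 16) if hex_digits else int(dec_digits)
            if not is_legal_xml_char(code):
                hits.append((row, match.start() + 1, code))
    return hits
```

To use it on the real file, read hive-site.xml into a string (for example with `open(path, encoding="utf-8").read()`) and pass it to `find_illegal_char_refs`; an empty list means no forbidden references were found.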

Open the file in vim:

vim hive-site.xml

# Show line numbers

:set nu

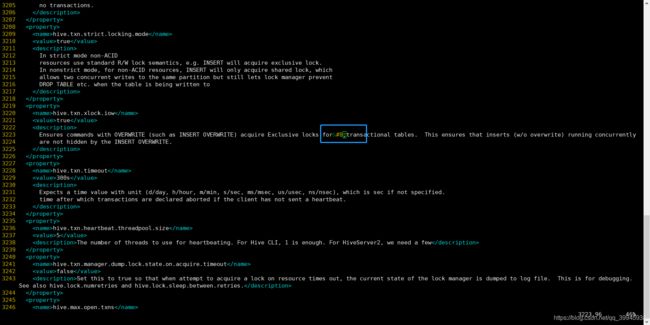

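Go to character 96 of line 3223, the position reported in the error (typing :3223 in vim jumps to that line).

Try deleting the special character:

Press i to enter insert mode.

Delete the special character.

Press Esc to leave insert mode.

Type :wq to save and quit.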
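The same deletion can be scripted, which helps when the file has to be fixed on several hosts. The following is a minimal sketch, not the only way to do it: it assumes the illegal character is written as a numeric character reference (such as `&#8;`), keeps a copy of the original next to it with a `.bak` suffix, and removes only references that XML 1.0 forbids. The names `strip_illegal_char_refs` and `clean_file` are hypothetical.

```python
import re
import shutil

# Numeric character references: decimal (&#8;) or hexadecimal (&#x8;).
CHAR_REF = re.compile(r"&#(?:[xX]([0-9a-fA-F]+)|([0-9]+));")


def _is_legal_xml_char(code):
    """True if the code point is allowed by the XML 1.0 Char production."""
    return (
        code in (0x9, 0xA, 0xD)
        or 0x20 <= code <= 0xD7FF
        or 0xE000 <= code <= 0xFFFD
        or 0x10000 <= code <= 0x10FFFF
    )


def strip_illegal_char_refs(text):
    """Return text with forbidden character references removed."""
    def replace(match):
        hex_digits, dec_digits = match.groups()
        code = int(hex_digits, 16) if hex_digits else int(dec_digits)
        # Legal references (e.g. &#10;) are left exactly as they were.
        return match.group(0) if _is_legal_xml_char(code) else ""
    return CHAR_REF.sub(replace, text)


def clean_file(path):
    """Rewrite path without forbidden references.

    A backup is written to path + '.bak' before the file is changed.
    Returns the number of characters removed (0 means nothing was touched).
    """
    # newline="" keeps the file's original line endings untouched.
    with open(path, encoding="utf-8", newline="") as fh:
        original = fh.read()
    cleaned = strip_illegal_char_refs(original)
    if cleaned != original:
        shutil.copy2(path, path + ".bak")
        with open(path, "w", encoding="utf-8", newline="") as fh:
            fh.write(cleaned)
    return len(original) - len(cleaned)
```

Calling `clean_file` with the path from the error message would apply the fix in place; comparing the file against the `.bak` copy afterwards shows exactly what was removed.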

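Run import-hive.sh again:

bash import-hive.sh

The illegal-character error no longer appears, but the script now fails with a different error, covered in Problem 2.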

II. Problem 2

1 Problem

Running import-hive.sh again now fails with a different set of errors:

SQLSyntaxErrorException: Table/View 'DBS' does not exist.

StandardException: Table/View 'DBS' does not exist.

MetaException: Version information not found in metastore.

RuntimeException: Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient
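The stack trace for these errors passes through Derby classes (derby-10.14.1.0.jar), which suggests the import is talking to an embedded Derby metastore that has no schema tables. One quick way to see which database hive-site.xml points at is to read the standard Hive property `javax.jdo.option.ConnectionURL`. The sketch below is only a diagnostic aid under that assumption; the helper names `read_hive_properties` and `metastore_backend` are hypothetical.

```python
import xml.etree.ElementTree as ET


def read_hive_properties(xml_text):
    """Parse Hadoop-style configuration XML into a {name: value} dict."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.iter("property"):
        name = prop.findtext("name")
        if name is not None:
            props[name.strip()] = (prop.findtext("value") or "").strip()
    return props


def metastore_backend(props):
    """Return the JDBC sub-protocol of the metastore URL.

    Examples of return values: 'derby', 'mysql'. Returns 'unset' when the
    property is missing or empty, and 'unknown' when the value is not a
    JDBC URL.
    """
    url = props.get("javax.jdo.option.ConnectionURL", "")
    if not url:
        return "unset"
    parts = url.split(":")
    if len(parts) > 1 and parts[0] == "jdbc":
        return parts[1]
    return "unknown"
```

A result of `derby`, or `unset` (in which case stock Hive falls back to its embedded Derby default), would be consistent with the Derby frames in the log that follows.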

2021-07-28T10:16:46,502 WARN [main] org.apache.hadoop.hive.metastore.MetaStoreDirectSql - Self-test query [select "DB_ID" from "DBS"] failed; direct SQL is disabled

javax.jdo.JDODataStoreException: Error executing SQL query "select "DB_ID" from "DBS"".

at org.datanucleus.api.jdo.NucleusJDOHelper.getJDOExceptionForNucleusException(NucleusJDOHelper.java:543) ~[datanucleus-api-jdo-4.2.4.jar:?]

at org.datanucleus.api.jdo.JDOQuery.executeInternal(JDOQuery.java:391) ~[datanucleus-api-jdo-4.2.4.jar:?]

at org.datanucleus.api.jdo.JDOQuery.execute(JDOQuery.java:216) ~[datanucleus-api-jdo-4.2.4.jar:?]

at org.apache.hadoop.hive.metastore.MetaStoreDirectSql.runTestQuery(MetaStoreDirectSql.java:276) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.MetaStoreDirectSql.<init>(MetaStoreDirectSql.java:184) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.ObjectStore.initializeHelper(ObjectStore.java:498) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.ObjectStore.initialize(ObjectStore.java:420) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.ObjectStore.setConf(ObjectStore.java:375) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.util.ReflectionUtils.setConf(ReflectionUtils.java:77) [hadoop-common-3.1.1.jar:?]

at org.apache.hadoop.util.ReflectionUtils.newInstance(ReflectionUtils.java:137) [hadoop-common-3.1.1.jar:?]

at org.apache.hadoop.hive.metastore.RawStoreProxy.<init>(RawStoreProxy.java:59) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RawStoreProxy.getProxy(RawStoreProxy.java:67) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.newRawStoreForConf(HiveMetaStore.java:718) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.getMSForConf(HiveMetaStore.java:696) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.getMS(HiveMetaStore.java:690) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.createDefaultDB(HiveMetaStore.java:767) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.init(HiveMetaStore.java:538) [hive-exec-3.1.2.jar:3.1.2]

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) ~[?:1.8.0_291]

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) ~[?:1.8.0_291]

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[?:1.8.0_291]

at java.lang.reflect.Method.invoke(Method.java:498) ~[?:1.8.0_291]

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invokeInternal(RetryingHMSHandler.java:147) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invoke(RetryingHMSHandler.java:108) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.<init>(RetryingHMSHandler.java:80) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.getProxy(RetryingHMSHandler.java:93) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.HiveMetaStore.newRetryingHMSHandler(HiveMetaStore.java:8667) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.<init>(HiveMetaStoreClient.java:169) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient.<init>(SessionHiveMetaStoreClient.java:94) [hive-exec-3.1.2.jar:3.1.2]

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method) [?:1.8.0_291]

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62) [?:1.8.0_291]

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45) [?:1.8.0_291]

at java.lang.reflect.Constructor.newInstance(Constructor.java:423) [?:1.8.0_291]

at org.apache.hadoop.hive.metastore.utils.JavaUtils.newInstance(JavaUtils.java:84) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.<init>(RetryingMetaStoreClient.java:95) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:148) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:119) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.createMetaStoreClient(Hive.java:4299) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:4367) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:4347) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.getAllFunctions(Hive.java:4603) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.reloadFunctions(Hive.java:291) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.registerAllFunctionsOnce(Hive.java:274) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.<init>(Hive.java:435) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.create(Hive.java:375) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.getInternal(Hive.java:355) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.hadoop.hive.ql.metadata.Hive.get(Hive.java:331) [hive-exec-3.1.2.jar:3.1.2]

at org.apache.atlas.hive.bridge.HiveMetaStoreBridge.<init>(HiveMetaStoreBridge.java:216) [hive-bridge-2.1.0.jar:2.1.0]

at org.apache.atlas.hive.bridge.HiveMetaStoreBridge.main(HiveMetaStoreBridge.java:141) [hive-bridge-2.1.0.jar:2.1.0]

Caused by: java.sql.SQLSyntaxErrorException: Table/View 'DBS' does not exist.

at org.apache.derby.impl.jdbc.SQLExceptionFactory.getSQLException(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.impl.jdbc.Util.generateCsSQLException(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.impl.jdbc.TransactionResourceImpl.wrapInSQLException(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.impl.jdbc.TransactionResourceImpl.handleException(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.impl.jdbc.EmbedConnection.handleException(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.impl.jdbc.ConnectionChild.handleException(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.impl.jdbc.EmbedPreparedStatement.<init>(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.impl.jdbc.EmbedPreparedStatement42.<init>(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.jdbc.Driver42.newEmbedPreparedStatement(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.impl.jdbc.EmbedConnection.prepareStatement(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.impl.jdbc.EmbedConnection.prepareStatement(Unknown Source) ~[derby-10.14.1.0.jar:?]

at com.zaxxer.hikari.pool.ProxyConnection.prepareStatement(ProxyConnection.java:325) ~[HikariCP-2.6.1.jar:?]

at com.zaxxer.hikari.pool.HikariProxyConnection.prepareStatement(HikariProxyConnection.java) ~[HikariCP-2.6.1.jar:?]

at org.datanucleus.store.rdbms.SQLController.getStatementForQuery(SQLController.java:345) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.rdbms.query.RDBMSQueryUtils.getPreparedStatementForQuery(RDBMSQueryUtils.java:211) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.rdbms.query.SQLQuery.performExecute(SQLQuery.java:633) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.query.Query.executeQuery(Query.java:1855) ~[datanucleus-core-4.1.17.jar:?]

at org.datanucleus.store.rdbms.query.SQLQuery.executeWithArray(SQLQuery.java:807) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.query.Query.execute(Query.java:1726) ~[datanucleus-core-4.1.17.jar:?]

at org.datanucleus.api.jdo.JDOQuery.executeInternal(JDOQuery.java:374) ~[datanucleus-api-jdo-4.2.4.jar:?]

... 46 more

Caused by: org.apache.derby.iapi.error.StandardException: Table/View 'DBS' does not exist.

at org.apache.derby.iapi.error.StandardException.newException(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.iapi.error.StandardException.newException(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.impl.sql.compile.FromBaseTable.bindTableDescriptor(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.impl.sql.compile.FromBaseTable.bindNonVTITables(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.impl.sql.compile.FromList.bindTables(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.impl.sql.compile.SelectNode.bindNonVTITables(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.impl.sql.compile.DMLStatementNode.bindTables(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.impl.sql.compile.DMLStatementNode.bind(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.impl.sql.compile.CursorNode.bindStatement(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.impl.sql.GenericStatement.prepMinion(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.impl.sql.GenericStatement.prepare(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.impl.sql.conn.GenericLanguageConnectionContext.prepareInternalStatement(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.impl.jdbc.EmbedPreparedStatement.<init>(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.impl.jdbc.EmbedPreparedStatement42.<init>(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.jdbc.Driver42.newEmbedPreparedStatement(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.impl.jdbc.EmbedConnection.prepareStatement(Unknown Source) ~[derby-10.14.1.0.jar:?]

at org.apache.derby.impl.jdbc.EmbedConnection.prepareStatement(Unknown Source) ~[derby-10.14.1.0.jar:?]

at com.zaxxer.hikari.pool.ProxyConnection.prepareStatement(ProxyConnection.java:325) ~[HikariCP-2.6.1.jar:?]

at com.zaxxer.hikari.pool.HikariProxyConnection.prepareStatement(HikariProxyConnection.java) ~[HikariCP-2.6.1.jar:?]

at org.datanucleus.store.rdbms.SQLController.getStatementForQuery(SQLController.java:345) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.rdbms.query.RDBMSQueryUtils.getPreparedStatementForQuery(RDBMSQueryUtils.java:211) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.rdbms.query.SQLQuery.performExecute(SQLQuery.java:633) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.query.Query.executeQuery(Query.java:1855) ~[datanucleus-core-4.1.17.jar:?]

at org.datanucleus.store.rdbms.query.SQLQuery.executeWithArray(SQLQuery.java:807) ~[datanucleus-rdbms-4.1.19.jar:?]

at org.datanucleus.store.query.Query.execute(Query.java:1726) ~[datanucleus-core-4.1.17.jar:?]

at org.datanucleus.api.jdo.JDOQuery.executeInternal(JDOQuery.java:374) ~[datanucleus-api-jdo-4.2.4.jar:?]

... 46 more

2021-07-28T10:16:46,509 INFO [main] org.apache.hadoop.hive.metastore.ObjectStore - Initialized ObjectStore

2021-07-28T10:16:46,618 WARN [main] DataNucleus.MetaData - Metadata has jdbc-type of null yet this is not valid. Ignored

2021-07-28T10:16:46,618 WARN [main] DataNucleus.MetaData - Metadata has jdbc-type of null yet this is not valid. Ignored

2021-07-28T10:16:46,618 WARN [main] DataNucleus.MetaData - Metadata has jdbc-type of null yet this is not valid. Ignored

2021-07-28T10:16:46,619 WARN [main] DataNucleus.MetaData - Metadata has jdbc-type of null yet this is not valid. Ignored

2021-07-28T10:16:46,619 WARN [main] DataNucleus.MetaData - Metadata has jdbc-type of null yet this is not valid. Ignored

2021-07-28T10:16:46,619 WARN [main] DataNucleus.MetaData - Metadata has jdbc-type of null yet this is not valid. Ignored

2021-07-28T10:16:47,981 WARN [main] DataNucleus.MetaData - Metadata has jdbc-type of null yet this is not valid. Ignored

2021-07-28T10:16:47,981 WARN [main] DataNucleus.MetaData - Metadata has jdbc-type of null yet this is not valid. Ignored

2021-07-28T10:16:47,981 WARN [main] DataNucleus.MetaData - Metadata has jdbc-type of null yet this is not valid. Ignored

2021-07-28T10:16:47,982 WARN [main] DataNucleus.MetaData - Metadata has jdbc-type of null yet this is not valid. Ignored

2021-07-28T10:16:47,982 WARN [main] DataNucleus.MetaData - Metadata has jdbc-type