Spring Boot + Docker + ELK + Filebeat: Log Collection and Visualization

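Table of Contents

- 1. ELKB architecture
- 2. Local environment
- 3. Setting up the ELKB environment
  - 3.1 Create a custom network
  - 3.2 Elasticsearch configuration
    - 3.2.1 Create the Elasticsearch directories
    - 3.2.2 Configure elasticsearch.yml
  - 3.3 Kibana configuration
    - 3.3.1 Create the Kibana directory
    - 3.3.2 Configure kibana.yml
  - 3.4 Logstash configuration
    - 3.4.1 Create the Logstash directories
    - 3.4.2 Configure logstash.yml and logstash.conf
  - 3.5 Filebeat configuration
    - 3.5.1 Create the Filebeat directory
    - 3.5.2 Configure filebeat.yml
  - 3.6 docker-compose configuration
    - 3.6.1 Create the docker-compose.yml file
    - 3.6.2 docker-compose.yml contents
    - 3.6.3 Start ELKB with docker-compose
- 4. Building the Spring Boot projects
  - 4.1 Microservice 1 (elk_test)
  - 4.2 Microservice 2 (elk_test2)
- 5. Dockerizing the microservices
  - 5.1 Packaging the microservices
  - 5.2 Create a docker directory for each microservice's Dockerfile, jar and mounted logs
  - 5.3 Build the images, create the containers and start the services
- 6. Viewing the logs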

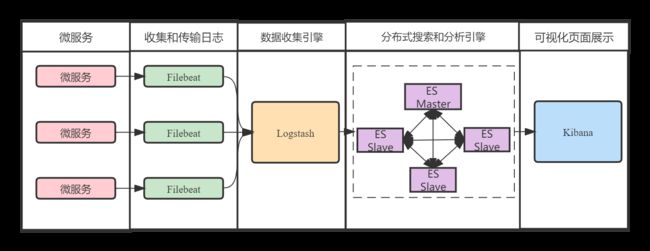

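1. ELKB Architecture

The roles of the ELKB components are:

- Filebeat: collects data such as log files;
- Logstash: processes and filters the log data;
- Elasticsearch: stores and indexes the logs;
- Kibana: visualization UI.

Logs therefore flow Filebeat -> Logstash -> Elasticsearch -> Kibana.

If you only need the plain ELK stack (without Filebeat), see this blog post: https://blog.csdn.net/hansome_hong/article/details/124585026?spm=1001.2014.3001.5502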

2. Local environment

Linux:

```
pikaqiu@pikaqiu-virtual-machine:~$ uname -a
Linux pikaqiu-virtual-machine 5.11.0-27-generic #29~20.04.1-Ubuntu SMP Wed Aug 11 15:58:17 UTC 2021 x86_64 x86_64 x86_64 GNU/Linux
```

Docker:

```
pikaqiu@pikaqiu-virtual-machine:~$ docker version
Client: Docker Engine - Community
 Version: 20.10.0
 API version: 1.41
 Go version: go1.13.15
 Git commit: 7287ab3
 Built: Tue Dec 8 18:59:53 2020
 OS/Arch: linux/amd64
 Context: default
 Experimental: true

Server: Docker Engine - Community
 Engine:
  Version: 20.10.0
  API version: 1.41 (minimum version 1.12)
  Go version: go1.13.15
  Git commit: eeddea2
  Built: Tue Dec 8 18:57:44 2020
  OS/Arch: linux/amd64
  Experimental: false
 containerd:
  Version: 1.4.3
  GitCommit: 269548fa27e0089a8b8278fc4fc781d7f65a939b
 runc:
  Version: 1.0.0-rc92
  GitCommit: ff819c7e9184c13b7c2607fe6c30ae19403a7aff
 docker-init:
  Version: 0.19.0
  GitCommit: de40ad0
```

Docker-compose:

```
pikaqiu@pikaqiu-virtual-machine:~$ docker-compose version
docker-compose version 1.24.1, build 4667896b
docker-py version: 3.7.3
CPython version: 3.6.8
OpenSSL version: OpenSSL 1.1.0j 20 Nov 2018
```

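3. Setting up the ELKB environment

3.1 Create a custom network

The network name must match the external network declared at the end of docker-compose.yml (somenetwork):

```shell
docker network create somenetwork
```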

3.2 Elasticsearch configuration

3.2.1 Create the Elasticsearch directories

```shell
mkdir -p /home/pikaqiu/elk/elasticsearch/conf
mkdir -p /home/pikaqiu/elk/elasticsearch/logs
mkdir -p /home/pikaqiu/elk/elasticsearch/data
# Open up the data directory so the Elasticsearch process inside the container can read and write it
chmod 777 /home/pikaqiu/elk/elasticsearch/data
```

3.2.2 Configure elasticsearch.yml

File location: /home/pikaqiu/elk/elasticsearch/conf/elasticsearch.yml

```yaml
# default configuration in docker
cluster.name: "elasticsearch"  # cluster name
network.host: 0.0.0.0          # address to bind to (all interfaces)
#network.bind_host: 0.0.0.0
#cluster.routing.allocation.disk.threshold_enabled: false
#node.name: es-master
#node.master: true
#node.data: true
http.cors.enabled: true        # allow cross-origin (CORS) requests
http.cors.allow-origin: "*"    # origins allowed to make cross-origin requests
#http.port: 9200
#transport.tcp.port: 9300
```

3.3 Kibana configuration

3.3.1 Create the Kibana directory

```shell
mkdir -p /home/pikaqiu/elk/kibana/conf
touch /home/pikaqiu/elk/kibana/conf/kibana.yml
```

3.3.2 Configure kibana.yml

File location: /home/pikaqiu/elk/kibana/conf/kibana.yml

```yaml
server.name: "kibana"
server.host: "0.0.0.0"
# Replace with your own host IP
elasticsearch.hosts: ["http://192.168.88.158:9200"]
```

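3.4 Logstash configuration

3.4.1 Create the Logstash directories

```shell
# Create the directories first: touch does not create missing parent directories
mkdir -p /home/pikaqiu/elk/logstash/conf
touch /home/pikaqiu/elk/logstash/logstash.yml
touch /home/pikaqiu/elk/logstash/conf/logstash.conf
```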

3.4.2 Configure logstash.yml and logstash.conf

1) logstash.yml

File location: /home/pikaqiu/elk/logstash/logstash.yml

```yaml
http.host: "0.0.0.0"
# Elasticsearch cluster address
xpack.monitoring.elasticsearch.hosts: [ "http://192.168.88.158:9200" ]
# X-Pack monitoring switch (disabled here)
xpack.monitoring.enabled: false
# Note: this is the path of the mounted directory inside the container
path.config: /usr/share/logstash/conf/logstash.conf
```

2) logstash.conf

File location: /home/pikaqiu/elk/logstash/conf/logstash.conf

```conf
# Receive events from Filebeat
input {
  beats {
    port => 5044
    client_inactivity_timeout => 36000   # seconds
  }
}

# Route each event by the tag set in filebeat.yml; one index per month
output {
  if "elk-test1" in [tags] {
    elasticsearch {
      hosts => ["http://192.168.88.158:9200"]
      index => "elk-test1-%{+YYYY.MM}"
    }
  }
  if "elk-test2" in [tags] {
    elasticsearch {
      hosts => ["http://192.168.88.158:9200"]
      index => "elk-test2-%{+YYYY.MM}"
    }
  }
}
```
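The output block sends each event to every index whose tag condition matches (the two `if` blocks are independent), and `%{+YYYY.MM}` is a Joda-style date pattern evaluated against the event's `@timestamp` in UTC. The Java sketch below only models that routing decision so the resulting index names are concrete; the class and method names are hypothetical and this is not Logstash code.

```java
import java.time.Instant;
import java.time.ZoneOffset;
import java.time.format.DateTimeFormatter;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Hypothetical model of the tag-based output routing in logstash.conf (not Logstash itself). */
final class LogstashRouting {
    // Logstash's %{+YYYY.MM} is a Joda pattern applied to @timestamp in UTC.
    // In java.time "YYYY" means week-based year, so "yyyy" is the correct equivalent here.
    private static final DateTimeFormatter MONTH_SUFFIX =
            DateTimeFormatter.ofPattern("yyyy.MM").withZone(ZoneOffset.UTC);

    /** Returns every index the event is written to; each "if" in the output block is checked separately. */
    static List<String> indicesFor(List<String> tags, Instant timestamp) {
        List<String> indices = new ArrayList<>();
        String suffix = MONTH_SUFFIX.format(timestamp);
        if (tags.contains("elk-test1")) {
            indices.add("elk-test1-" + suffix);
        }
        if (tags.contains("elk-test2")) {
            indices.add("elk-test2-" + suffix);
        }
        return indices; // empty: no output condition matches, so the event is not indexed
    }

    public static void main(String[] args) {
        Instant ts = Instant.parse("2022-05-10T08:30:00Z");
        System.out.println(indicesFor(Arrays.asList("elk-test1"), ts)); // [elk-test1-2022.05]
        System.out.println(indicesFor(Arrays.asList("other"), ts));     // []
    }
}
```

Because the month is taken in UTC, an event logged shortly after local midnight on the 1st (Asia/Shanghai, UTC+8) still lands in the previous month's index.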

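3.5 Filebeat configuration

3.5.1 Create the Filebeat directory

```shell
mkdir -p /home/pikaqiu/elk/filebeat/conf
touch /home/pikaqiu/elk/filebeat/conf/filebeat.yml
```

3.5.2 Configure filebeat.yml

Note: filebeat.yml must be writable only by its owner, so use mode 755 rather than 777; otherwise Filebeat fails with the following error:

```
config file ("filebeat.yml") can only be writable by the owner but the permissions are "-rwxrwxrwx"
```

```shell
chmod 755 /home/pikaqiu/elk/filebeat/conf/filebeat.yml
```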
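The rule behind that error is simply that neither the group nor others may have write permission. The Java sketch below reproduces it with `java.nio.file` so a file can be checked before it is mounted; it is an approximation only (Filebeat additionally checks that the file is owned by root or by the user running Filebeat, which this ignores), it works only on POSIX file systems, and the class name is hypothetical.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.nio.file.attribute.PosixFilePermission;
import java.util.Collections;
import java.util.EnumSet;
import java.util.Set;

/** Hypothetical pre-flight check mirroring Filebeat's "writable only by the owner" rule. */
final class ConfigPermissionCheck {
    private static final Set<PosixFilePermission> FORBIDDEN =
            EnumSet.of(PosixFilePermission.GROUP_WRITE, PosixFilePermission.OTHERS_WRITE);

    /** True if neither group nor others can write the file (e.g. 755 or 644, but not 777). */
    static boolean onlyOwnerWritable(Set<PosixFilePermission> perms) {
        return Collections.disjoint(perms, FORBIDDEN);
    }

    public static void main(String[] args) throws IOException {
        Path config = Paths.get(args.length > 0 ? args[0] : "/home/pikaqiu/elk/filebeat/conf/filebeat.yml");
        Set<PosixFilePermission> perms = Files.getPosixFilePermissions(config);
        System.out.println(config + (onlyOwnerWritable(perms)
                ? ": OK"
                : ": group/others can write it, Filebeat will refuse this file"));
    }
}
```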

File location: /home/pikaqiu/elk/filebeat/conf/filebeat.yml

```yaml
# Inputs: the type and log file paths of each application
filebeat.inputs:
  - type: log
    enabled: true
    paths:
      - /home/pikaqiu/docker/elktest1/log/*.log
    tags: ["elk-test1"]   # used by logstash.conf to choose the index
    multiline:            # lines not starting with a 4-digit year are joined to the previous event
      pattern: '^[0-9]{4}'
      negate: true
      match: after
      timeout: 3s
  - type: log
    enabled: true
    paths:
      - /home/pikaqiu/docker/elktest2/log/*.log
    tags: ["elk-test2"]
    multiline:
      pattern: '^[0-9]{4}'
      negate: true
      match: after
      timeout: 3s

#============================= Filebeat modules ===============================
filebeat.config.modules:
  # Glob pattern for configuration loading
  path: ${path.config}/modules.d/*.yml
  # Set to true to enable config reloading
  reload.enabled: true

# ============================== logstash =====================================
output.logstash:
  hosts: ["192.168.88.158:5044"] # 192.168.88.158 is the IP of the server running Logstash
  enabled: true

#============================== Kibana =====================================
setup.kibana:
  host: "192.168.88.158:5601"

#============================== elasticsearch =====================================
#output.elasticsearch:
#  hosts: ["192.168.88.158:9200"]
#  enabled: true
```
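With `pattern: '^[0-9]{4}'`, `negate: true` and `match: after`, every line that does not start with four digits (for example a Java stack-trace line) is appended to the preceding line that does, so one exception becomes one event in Elasticsearch. The Java sketch below models only that grouping rule; it ignores `timeout` and Filebeat's other multiline limits, and the class name and sample lines are made up for illustration.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.regex.Pattern;

/** Hypothetical model of Filebeat's multiline grouping with negate: true, match: after. */
final class MultilineGrouper {
    private static final Pattern EVENT_START = Pattern.compile("^[0-9]{4}");

    static List<String> group(List<String> lines) {
        List<String> events = new ArrayList<>();
        StringBuilder current = null;
        for (String line : lines) {
            boolean startsEvent = EVENT_START.matcher(line).find();
            if (startsEvent || current == null) {
                // A line beginning with a 4-digit year opens a new event
                // (so does the very first line, since there is nothing to append it to).
                if (current != null) {
                    events.add(current.toString());
                }
                current = new StringBuilder(line);
            } else {
                // negate: true + match: after -> a non-matching line is appended to the previous event.
                current.append('\n').append(line);
            }
        }
        if (current != null) {
            events.add(current.toString());
        }
        return events;
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList(
                "2022-05-10 10:00:00.000 [http-nio-8080-exec-1] ERROR com.pikaqiu.controller.TestController - boom",
                "java.lang.IllegalStateException: boom",
                "\tat com.pikaqiu.controller.TestController.testElk(TestController.java:20)",
                "2022-05-10 10:00:01.000 [http-nio-8080-exec-1] INFO  com.pikaqiu.controller.TestController - ok");
        System.out.println(group(lines).size()); // 2
    }
}
```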

3.6 docker-compose configuration

3.6.1 Create the docker-compose.yml file

```shell
touch /home/pikaqiu/elk/docker-compose.yml
```

3.6.2 docker-compose.yml contents

```yaml
version: "3.4" # Compose file format version

services:
  ########## ELK log stack (keep all image versions identical) ##########
  elasticsearch:                  # service name
    container_name: elasticsearch # container name
    # Elasticsearch: distributed search and analytics engine (search, analysis and data storage)
    image: elasticsearch:7.16.1
    # Restart policy: "no" (default) never restarts the container; "always" always restarts it;
    # "on-failure" restarts it only when it exits abnormally (non-zero exit status)
    restart: on-failure
    ports:                        # quote port mappings as strings to avoid YAML parsing surprises
      - "9200:9200"
      - "9300:9300"
    environment:                  # can also be set in elasticsearch.yml; environment takes precedence
      - discovery.type=single-node          # single-node mode
      - bootstrap.memory_lock=true          # lock the JVM heap in RAM so it is never swapped out
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"    # initial / maximum JVM heap size
    ulimits:                      # unlimited memlock is needed for bootstrap.memory_lock
      memlock:
        soft: -1
        hard: -1
    volumes:                      # bind mounts
      - /home/pikaqiu/elk/elasticsearch/data:/usr/share/elasticsearch/data
      - /home/pikaqiu/elk/elasticsearch/conf/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml
      - /home/pikaqiu/elk/elasticsearch/logs:/usr/share/elasticsearch/logs
    networks:                     # network used to isolate the services
      - somenetwork

  kibana:
    container_name: kibana
    image: kibana:7.16.1          # Kibana: data analysis and visualization platform
    depends_on:
      - elasticsearch
    restart: on-failure
    ports:
      - "5601:5601"
    volumes:
      - /home/pikaqiu/elk/kibana/conf/kibana.yml:/usr/share/kibana/config/kibana.yml
    networks:
      - somenetwork

  logstash:
    container_name: logstash
    image: logstash:7.16.1        # Logstash: log processing
    command: logstash -f /usr/share/logstash/conf/logstash.conf
    depends_on:
      - elasticsearch
    restart: on-failure
    ports:
      - "9600:9600"
      - "5044:5044"
    volumes:                      # logstash.conf holds the log-processing pipeline
      - /home/pikaqiu/elk/logstash/logstash.yml:/usr/share/logstash/config/logstash.yml
      - /home/pikaqiu/elk/logstash/conf/:/usr/share/logstash/conf/
    networks:
      - somenetwork

  filebeat:
    container_name: filebeat
    image: elastic/filebeat:7.16.1 # Filebeat: lightweight log shipper from the Elastic Beats family
    depends_on:
      - elasticsearch
      - logstash
      - kibana
    restart: on-failure
    volumes:                      # filebeat.yml ships the .log files to Logstash; log dirs keep their host paths
      - /home/pikaqiu/elk/filebeat/conf/filebeat.yml:/usr/share/filebeat/filebeat.yml
      - /home/pikaqiu/docker/elktest1/log:/home/pikaqiu/docker/elktest1/log
      - /home/pikaqiu/docker/elktest2/log:/home/pikaqiu/docker/elktest2/log
    networks:
      - somenetwork

# Use the custom bridge network by name; it must already exist (docker network create somenetwork)
networks:
  somenetwork:
    external: true
```

3.6.3 Start ELKB with docker-compose

1) Full directory layout

```
pikaqiu@pikaqiu-virtual-machine:~/elk$ tree
.
├── docker-compose.yml
├── elasticsearch
│   ├── conf
│   │   └── elasticsearch.yml
│   ├── data
│   └── logs
├── filebeat
│   └── conf
│       └── filebeat.yml
├── kibana
│   └── conf
│       └── kibana.yml
└── logstash
    ├── conf
    │   └── logstash.conf
    └── logstash.yml
```

2) Build and start the ELKB containers

```shell
cd /home/pikaqiu/elk
docker-compose up -d
```

3) Check the running containers

```
pikaqiu@pikaqiu-virtual-machine:~/docker/elktest1/log$ docker ps -a
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
84114233f532 elastic/filebeat:7.16.1 "/usr/bin/tini -- /u…" 22 minutes ago Up 22 minutes filebeat
02ca759a68ce kibana:7.16.1 "/bin/tini -- /usr/l…" 22 minutes ago Up 22 minutes 0.0.0.0:5601->5601/tcp kibana
6f7d8758dab6 logstash:7.16.1 "/usr/local/bin/dock…" 22 minutes ago Up 22 minutes 0.0.0.0:5044->5044/tcp, 0.0.0.0:9600->9600/tcp logstash
5544905dc359 elasticsearch:7.16.1 "/bin/tini -- /usr/l…" 22 minutes ago Up 22 minutes 0.0.0.0:9200->9200/tcp, 0.0.0.0:9300->9300/tcp elasticsearch
```
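"Up" in `docker ps` does not guarantee the published ports already accept connections (Elasticsearch and Kibana take a while to start). A plain TCP connect is a quick extra check; the Java sketch below tries the ports published in docker-compose.yml (9200 Elasticsearch, 5601 Kibana, 5044 Beats input, 9600 Logstash API) on this article's host IP. It only proves that something is listening, not that the service is healthy, and the class name is hypothetical.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical TCP reachability check for the ports published by docker-compose.yml. */
final class PortCheck {
    /** Returns true if a TCP connection to host:port succeeds within timeoutMillis. */
    static boolean isOpen(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            return false; // refused, unreachable or timed out
        }
    }

    public static void main(String[] args) {
        String host = args.length > 0 ? args[0] : "192.168.88.158";
        Map<String, Integer> ports = new LinkedHashMap<>();
        ports.put("elasticsearch", 9200);
        ports.put("kibana", 5601);
        ports.put("logstash beats input", 5044);
        ports.put("logstash API", 9600);
        for (Map.Entry<String, Integer> e : ports.entrySet()) {
            System.out.printf("%-22s %s:%d -> %s%n", e.getKey(), host, e.getValue(),
                    isOpen(host, e.getValue(), 1000) ? "open" : "closed");
        }
    }
}
```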

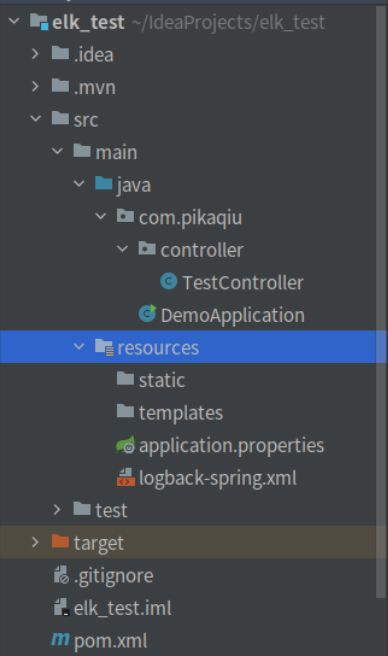

4. Building the Spring Boot projects

Two microservices are used here to simulate a multi-service setup.

4.1 Microservice 1 (elk_test)

2. pom.xml

```xml
<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>
    <parent>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-parent</artifactId>
        <version>2.6.7</version>
        <relativePath/>
    </parent>
    <groupId>com.pikaqiu</groupId>
    <artifactId>elk_test</artifactId>
    <version>0.0.1-SNAPSHOT</version>
    <name>demo</name>
    <description>Demo project for Spring Boot</description>
    <properties>
        <java.version>1.8</java.version>
    </properties>
    <dependencies>
        <dependency>
            <groupId>net.logstash.logback</groupId>
            <artifactId>logstash-logback-encoder</artifactId>
            <version>5.3</version>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-test</artifactId>
            <scope>test</scope>
        </dependency>
    </dependencies>
    <build>
        <plugins>
            <plugin>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-maven-plugin</artifactId>
            </plugin>
        </plugins>
    </build>
</project>
```

3. logback-spring.xml

```xml
<configuration>
    <include resource="org/springframework/boot/logging/logback/defaults.xml"/>
    <include resource="org/springframework/boot/logging/logback/console-appender.xml"/>

    <!-- Log directory inside the container -->
    <property name="LOG_FILE_PATH" value="/home/pikaqiu/logs"/>
    <property name="APP_NAME" value="elk1-log"/>
    <property name="LOG_PATTERN" value="%d{yyyy-MM-dd HH:mm:ss.SSS} [%thread] %-5level %logger{50} - %msg %n"/>

    <contextName>${APP_NAME}</contextName>

    <appender name="CONSOLE" class="ch.qos.logback.core.ConsoleAppender">
        <filter class="ch.qos.logback.classic.filter.ThresholdFilter">
            <level>debug</level>
        </filter>
        <encoder>
            <Pattern>${LOG_PATTERN}</Pattern>
            <charset>UTF-8</charset>
        </encoder>
    </appender>

    <!-- One file per day under ${LOG_FILE_PATH}/${APP_NAME}/, keeping 30 days of history -->
    <appender name="FILE" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
            <fileNamePattern>${LOG_FILE_PATH}/${APP_NAME}/log-info-%d{yyyy-MM-dd}.log</fileNamePattern>
            <maxHistory>30</maxHistory>
        </rollingPolicy>
        <encoder>
            <pattern>${LOG_PATTERN}</pattern>
        </encoder>
    </appender>

    <root level="info">
        <appender-ref ref="CONSOLE"/>
        <appender-ref ref="FILE"/>
    </root>
</configuration>
```
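This configuration has to line up with the rest of the pipeline in two places: `TimeBasedRollingPolicy` writes to `${LOG_FILE_PATH}/${APP_NAME}/log-info-<date>.log`, and every entry begins with the `%d{yyyy-MM-dd ...}` timestamp, which is what Filebeat's `^[0-9]{4}` multiline pattern keys on. The Java sketch below reproduces both strings with `java.time` for a given time; it imitates logback's output for illustration and is not how logback computes it internally. The class name and sample message are hypothetical.

```java
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;
import java.util.regex.Pattern;

/** Hypothetical illustration of the file name and line prefix produced by logback-spring.xml. */
final class LogbackLayoutDemo {
    static final String LOG_FILE_PATH = "/home/pikaqiu/logs";
    static final String APP_NAME = "elk1-log";
    private static final DateTimeFormatter FILE_DATE = DateTimeFormatter.ofPattern("yyyy-MM-dd");
    private static final DateTimeFormatter LINE_DATE = DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss.SSS");

    /** Mirrors fileNamePattern ${LOG_FILE_PATH}/${APP_NAME}/log-info-%d{yyyy-MM-dd}.log */
    static String activeFile(LocalDateTime now) {
        return LOG_FILE_PATH + "/" + APP_NAME + "/log-info-" + FILE_DATE.format(now) + ".log";
    }

    /** Mirrors LOG_PATTERN "%d{...} [%thread] %-5level %logger{50} - %msg %n", without the line break. */
    static String formatLine(LocalDateTime now, String thread, String level, String logger, String msg) {
        return String.format("%s [%s] %-5s %s - %s ", LINE_DATE.format(now), thread, level, logger, msg);
    }

    public static void main(String[] args) {
        LocalDateTime t = LocalDateTime.of(2022, 5, 10, 9, 15, 30, 123_000_000);
        System.out.println(activeFile(t)); // /home/pikaqiu/logs/elk1-log/log-info-2022-05-10.log
        String line = formatLine(t, "main", "INFO", "com.pikaqiu.controller.TestController", "hello");
        System.out.println(line);
        // Filebeat's multiline pattern treats a line like this as the start of a new event.
        System.out.println(Pattern.compile("^[0-9]{4}").matcher(line).find()); // true
    }
}
```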

4. application.properties

```properties
server.port=8080
```

5. TestController.java

```java
package com.pikaqiu.controller;

import org.apache.logging.log4j.LogManager;
import org.apache.logging.log4j.Logger;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RestController;

@RestController
public class TestController {

    // Log4j 2 API; with spring-boot-starter-logging these calls are bridged to Logback (log4j-to-slf4j)
    private static final Logger logger = LogManager.getLogger(TestController.class);

    @RequestMapping("/index1")
    public void testElk() {
        logger.debug("======================= elk1 test ================");
        logger.info("======================= elk1 test ================");
        logger.warn("======================= elk1 test ================");
        logger.error("======================= elk1 test ================");
    }
}
```
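When looking for these messages in Kibana, only three of the four will appear: `<root level="info">` in logback-spring.xml discards events below INFO before any appender sees them, so the CONSOLE appender's `ThresholdFilter` at `debug` cannot bring the `debug` call back. The Java sketch below models that level comparison; the enum and method are hypothetical stand-ins, not logback classes.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical model of the logger-level check applied before any appender runs. */
final class LevelGate {
    enum Level { TRACE, DEBUG, INFO, WARN, ERROR } // declared in increasing severity

    /** An event is logged only if its level is at least the logger's effective level. */
    static boolean isEnabled(Level effective, Level event) {
        return event.compareTo(effective) >= 0;
    }

    public static void main(String[] args) {
        Level root = Level.INFO; // <root level="info">
        List<Level> written = new ArrayList<>();
        for (Level call : new Level[] {Level.DEBUG, Level.INFO, Level.WARN, Level.ERROR}) {
            if (isEnabled(root, call)) {
                written.add(call);
            }
        }
        System.out.println(written); // [INFO, WARN, ERROR]
    }
}
```

To see the debug line as well, the root (or the `com.pikaqiu` logger) level would have to be lowered to `debug`.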

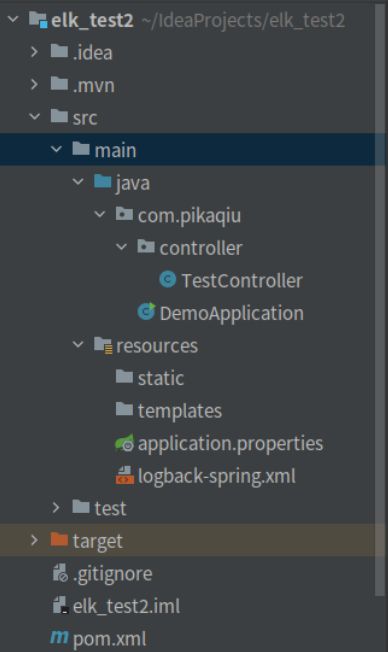

4.2 Microservice 2 (elk_test2)

2. pom.xml

```xml
<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>
    <parent>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-parent</artifactId>
        <version>2.6.7</version>
        <relativePath/>
    </parent>
    <groupId>com.pikaqiu</groupId>
    <!-- elk_test2, so that the packaged jar is elk_test2-0.0.1-SNAPSHOT.jar -->
    <artifactId>elk_test2</artifactId>
    <version>0.0.1-SNAPSHOT</version>
    <name>demo</name>
    <description>Demo project for Spring Boot</description>
    <properties>
        <java.version>1.8</java.version>
    </properties>
    <dependencies>
        <dependency>
            <groupId>net.logstash.logback</groupId>
            <artifactId>logstash-logback-encoder</artifactId>
            <version>5.3</version>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-web</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-test</artifactId>
            <scope>test</scope>
        </dependency>
    </dependencies>
    <build>
        <plugins>
            <plugin>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-maven-plugin</artifactId>
            </plugin>
        </plugins>
    </build>
</project>
```

3. logback-spring.xml

```xml
<configuration>
    <include resource="org/springframework/boot/logging/logback/defaults.xml"/>
    <include resource="org/springframework/boot/logging/logback/console-appender.xml"/>

    <!-- Log directory inside the container -->
    <property name="LOG_FILE_PATH" value="/home/pikaqiu/logs"/>
    <!-- Differs from microservice 1 (elk1-log), so each service writes to its own directory -->
    <property name="APP_NAME" value="elk2-log"/>
    <property name="LOG_PATTERN" value="%d{yyyy-MM-dd HH:mm:ss.SSS} [%thread] %-5level %logger{50} - %msg %n"/>

    <contextName>${APP_NAME}</contextName>

    <appender name="CONSOLE" class="ch.qos.logback.core.ConsoleAppender">
        <filter class="ch.qos.logback.classic.filter.ThresholdFilter">
            <level>debug</level>
        </filter>
        <encoder>
            <Pattern>${LOG_PATTERN}</Pattern>
            <charset>UTF-8</charset>
        </encoder>
    </appender>

    <!-- One file per day under ${LOG_FILE_PATH}/${APP_NAME}/, keeping 30 days of history -->
    <appender name="FILE" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
            <fileNamePattern>${LOG_FILE_PATH}/${APP_NAME}/log-info-%d{yyyy-MM-dd}.log</fileNamePattern>
            <maxHistory>30</maxHistory>
        </rollingPolicy>
        <encoder>
            <pattern>${LOG_PATTERN}</pattern>
        </encoder>
    </appender>

    <root level="info">
        <appender-ref ref="CONSOLE"/>
        <appender-ref ref="FILE"/>
    </root>
</configuration>
```

4. application.properties

```properties
server.port=8081
```

5. TestController.java

```java
package com.pikaqiu.controller;

import org.apache.logging.log4j.LogManager;
import org.apache.logging.log4j.Logger;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RestController;

@RestController
public class TestController {

    // Log4j 2 API; with spring-boot-starter-logging these calls are bridged to Logback (log4j-to-slf4j)
    private static final Logger logger = LogManager.getLogger(TestController.class);

    @RequestMapping("/index2")
    public void testElk() {
        logger.debug("======================= elk2 test ================");
        logger.info("======================= elk2 test ================");
        logger.warn("======================= elk2 test ================");
        logger.error("======================= elk2 test ================");
    }
}
```

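Note: the logback-spring.xml files of microservice 1 and microservice 2 write to different log paths (APP_NAME is elk1-log and elk2-log respectively), so each container can mount its own log directory.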

5. Dockerizing the microservices

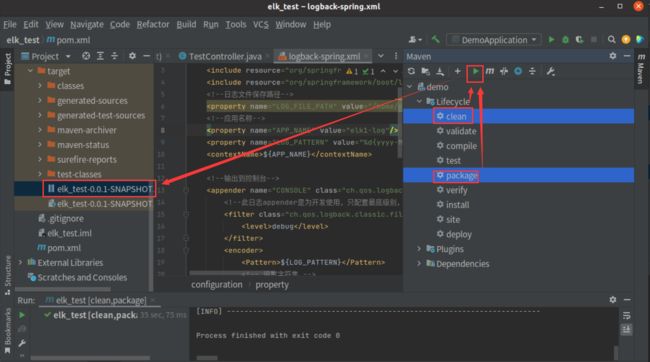

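5.1 Packaging the microservices

Package each project with Maven (for example `mvn clean package`); the spring-boot-maven-plugin declared in pom.xml produces an executable jar under target/.

5.2 Create a docker directory holding each microservice's Dockerfile, jar and mounted logs

1) View the docker directory layout with `tree`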

```
pikaqiu@pikaqiu-virtual-machine:~/docker$ tree
.
├── elktest1
│   ├── Dockerfile
│   └── elk_test-0.0.1-SNAPSHOT.jar
└── elktest2
    ├── Dockerfile
    └── elk_test2-0.0.1-SNAPSHOT.jar
```

2) Dockerfile for elktest1

```dockerfile
# The "java" image on Docker Hub is deprecated; an image such as eclipse-temurin is the usual replacement
FROM java
RUN mkdir -p /home/app
# Set the time zone
RUN /bin/cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime && echo 'Asia/Shanghai' >/etc/timezone
# The jar is not copied into the image: /home/app is bind-mounted from the host at run time.
# -Dlogging.file does not affect the FILE appender, whose path is set in logback-spring.xml.
ENTRYPOINT ["java","-jar","-Dlogging.file=/home/app/elk_test.log","/home/app/elk_test-0.0.1-SNAPSHOT.jar"]
# Run with: docker run -d -v /home/pikaqiu/docker/elktest1/log:/home/pikaqiu/logs/elk1-log --net=host -v /home/pikaqiu/docker/elktest1:/home/app --name elktest1 elktest1
```

3) Dockerfile for elktest2

```dockerfile
FROM java
RUN mkdir -p /home/app
# Set the time zone
RUN /bin/cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime && echo 'Asia/Shanghai' >/etc/timezone
ENTRYPOINT ["java","-jar","-Dlogging.file=/home/app/elk_test2.log","/home/app/elk_test2-0.0.1-SNAPSHOT.jar"]
# Run with: docker run -d -v /home/pikaqiu/docker/elktest2/log:/home/pikaqiu/logs/elk2-log --net=host -v /home/pikaqiu/docker/elktest2:/home/app --name elktest2 elktest2
```

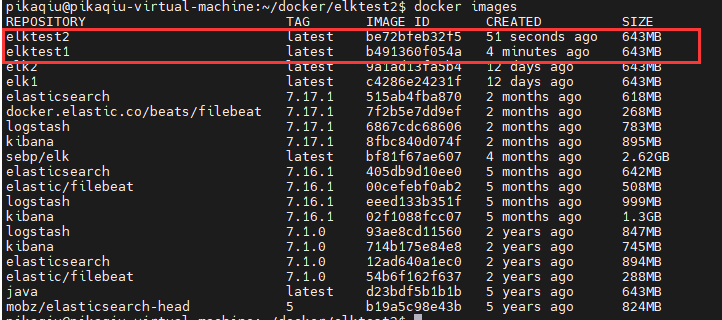

5.3 Build the images, create the containers and start the services

1) In the elktest1 and elktest2 directories respectively, run `docker build -t *** .`

```
pikaqiu@pikaqiu-virtual-machine:~/docker/elktest1$ docker build -t elktest1 .
Sending build context to Docker daemon 18.07MB
Step 1/4 : FROM java
 ---> d23bdf5b1b1b
Step 2/4 : RUN mkdir -p /home/app
 ---> Using cache
 ---> ab7b6c3ff6f5
Step 3/4 : RUN /bin/cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime && echo 'Asia/Shanghai' >/etc/timezone
 ---> Using cache
 ---> 299a8c3dde10
Step 4/4 : ENTRYPOINT ["java","-jar","-Dlogging.file=/home/app/elk_test.log","/home/app/elk_test-0.0.1-SNAPSHOT.jar"]
 ---> Running in 54286ea24055
Removing intermediate container 54286ea24055
 ---> b491360f054a
Successfully built b491360f054a
Successfully tagged elktest1:latest
```

```
pikaqiu@pikaqiu-virtual-machine:~/docker/elktest2$ docker build -t elktest2 .
Sending build context to Docker daemon 18.07MB
Step 1/4 : FROM java
 ---> d23bdf5b1b1b
Step 2/4 : RUN mkdir -p /home/app
 ---> Using cache
 ---> ab7b6c3ff6f5
Step 3/4 : RUN /bin/cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime && echo 'Asia/Shanghai' >/etc/timezone
 ---> Using cache
 ---> 299a8c3dde10
Step 4/4 : ENTRYPOINT ["java","-jar","-Dlogging.file=/home/app/elk_test2.log","/home/app/elk_test2-0.0.1-SNAPSHOT.jar"]
 ---> Running in 2739a124dd52
Removing intermediate container 2739a124dd52
 ---> be72bfeb32f5
Successfully built be72bfeb32f5
Successfully tagged elktest2:latest
```

2) Create the containers and start the services

```
# Create the elktest1 container and start the service
pikaqiu@pikaqiu-virtual-machine:~/docker/elktest1$ docker run -d -v /home/pikaqiu/docker/elktest1/log:/home/pikaqiu/logs/elk1-log --net=host -v /home/pikaqiu/docker/elktest1:/home/app --name elktest1 elktest1
ec11c1a72ea47f47c8c13ce8dd91933252ffdc0a389779ba05369e21c3f4a107

# Create the elktest2 container and start the service
pikaqiu@pikaqiu-virtual-machine:~/docker/elktest2$ docker run -d -v /home/pikaqiu/docker/elktest2/log:/home/pikaqiu/logs/elk2-log --net=host -v /home/pikaqiu/docker/elktest2:/home/app --name elktest2 elktest2
0eb4eb719e16e2a7a32ef5cd0bca833bae216a0024a7be098e82cf32a915c407

# elktest1 and elktest2 now appear among the running containers
pikaqiu@pikaqiu-virtual-machine:~/docker/elktest2$ docker ps -a
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
0eb4eb719e16 elktest2 "java -jar -Dlogging…" 6 seconds ago Up 5 seconds elktest2
ec11c1a72ea4 elktest1 "java -jar -Dlogging…" About a minute ago Up About a minute elktest1
5073703f3449 elastic/filebeat:7.16.1 "/usr/bin/tini -- /u…" 12 hours ago Up 22 minutes filebeat
c550979367d8 kibana:7.16.1 "/bin/tini -- /usr/l…" 12 hours ago Up 22 minutes 0.0.0.0:5601->5601/tcp kibana
453254a8e7d4 logstash:7.16.1 "/usr/local/bin/dock…" 12 hours ago Up 22 minutes 0.0.0.0:5044->5044/tcp, 0.0.0.0:9600->9600/tcp logstash
d29feceb5bc8 elasticsearch:7.16.1 "/bin/tini -- /usr/l…" 12 hours ago Up 22 minutes 0.0.0.0:9200->9200/tcp, 0.0.0.0:9300->9300/tcp elasticsearch
```

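Note: running `tree` at this point shows a new log directory, containing a .log file, under both elktest1 and elktest2. They come from the `-v` bind mounts: Docker creates the host directory when the container starts and maps it to the log directory inside the container (think of it as a directory mapping), and the service writes its log file there, so nothing has to be created by hand. This persists container data such as the service logs on the host and lets ELKB read the log files from the corresponding host paths.

```
pikaqiu@pikaqiu-virtual-machine:~/docker$ tree
.
├── elktest1
│   ├── Dockerfile
│   ├── elk_test1.jar
│   └── log
│       └── log-info-2022-05-10.log
└── elktest2
    ├── Dockerfile
    ├── elk_test2.jar
    └── log
        └── log-info-2022-05-10.log
```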
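The path of a log file therefore changes twice on its way to Filebeat: the service writes inside the container under /home/pikaqiu/logs/elk1-log/, the `-v` bind mount exposes that directory on the host as /home/pikaqiu/docker/elktest1/log, and Filebeat (which has the same host path mounted) picks it up with the glob `/home/pikaqiu/docker/elktest1/log/*.log`. The Java sketch below traces one file through both steps using `java.nio.file` path operations and a glob `PathMatcher`; the helper names are hypothetical, and Java's glob syntax is close to, but not guaranteed identical to, the Go glob Filebeat uses.

```java
import java.nio.file.FileSystems;
import java.nio.file.Path;
import java.nio.file.PathMatcher;
import java.nio.file.Paths;

/** Hypothetical trace of a log file through the elktest1 bind mount to Filebeat's input glob. */
final class MountTrace {
    // docker run -v <hostDir>:<containerDir>, values taken from the elktest1 command
    static final Path HOST_DIR = Paths.get("/home/pikaqiu/docker/elktest1/log");
    static final Path CONTAINER_DIR = Paths.get("/home/pikaqiu/logs/elk1-log");

    /** Maps a path inside the container to the corresponding host path. */
    static Path toHostPath(Path containerFile) {
        if (!containerFile.startsWith(CONTAINER_DIR)) {
            throw new IllegalArgumentException(containerFile + " is not under the mounted directory");
        }
        return HOST_DIR.resolve(CONTAINER_DIR.relativize(containerFile));
    }

    /** Checks a host path against the filebeat.yml input glob ("*" does not cross directories). */
    static boolean matchedByFilebeat(Path hostFile) {
        PathMatcher matcher = FileSystems.getDefault()
                .getPathMatcher("glob:/home/pikaqiu/docker/elktest1/log/*.log");
        return matcher.matches(hostFile);
    }

    public static void main(String[] args) {
        Path inContainer = Paths.get("/home/pikaqiu/logs/elk1-log/log-info-2022-05-10.log");
        Path onHost = toHostPath(inContainer);
        System.out.println(onHost);                    // /home/pikaqiu/docker/elktest1/log/log-info-2022-05-10.log
        System.out.println(matchedByFilebeat(onHost)); // true
    }
}
```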

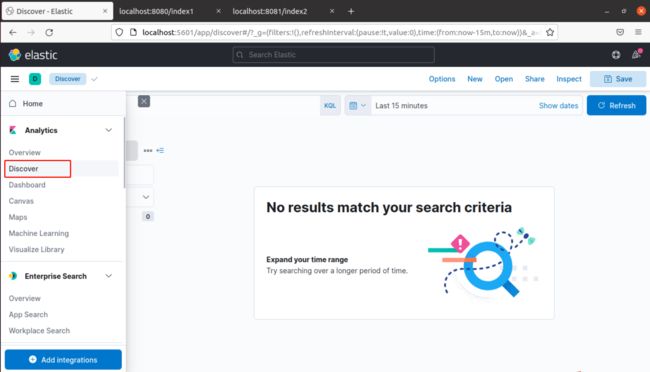

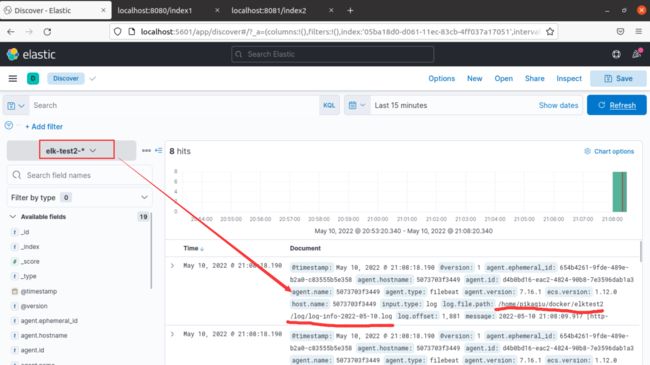
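Before switching to Kibana, each controller should be called at least once so the "elk1 test" and "elk2 test" messages are actually written. Opening http://192.168.88.158:8080/index1 and http://192.168.88.158:8081/index2 in a browser is enough; because the containers run with `--net=host`, the services listen directly on the host's ports 8080 and 8081. The Java sketch below does the same with `HttpURLConnection` (Java 8 compatible); the host IP comes from this article's environment and the class name is hypothetical.

```java
import java.io.IOException;
import java.net.HttpURLConnection;
import java.net.URI;

/** Hypothetical helper that calls the demo endpoints so both services write their test messages. */
final class TriggerLogs {
    /** Sends a GET request to url and returns the HTTP status code. */
    static int get(String url) throws IOException {
        HttpURLConnection conn = (HttpURLConnection) URI.create(url).toURL().openConnection();
        conn.setConnectTimeout(2000); // milliseconds
        conn.setReadTimeout(5000);
        try {
            return conn.getResponseCode();
        } finally {
            conn.disconnect();
        }
    }

    public static void main(String[] args) throws IOException {
        // Host IP from this article's environment; pass another one as the first argument.
        String host = args.length > 0 ? args[0] : "192.168.88.158";
        System.out.println("index1 -> HTTP " + get("http://" + host + ":8080/index1"));
        System.out.println("index2 -> HTTP " + get("http://" + host + ":8081/index2"));
    }
}
```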

6. Viewing the logs

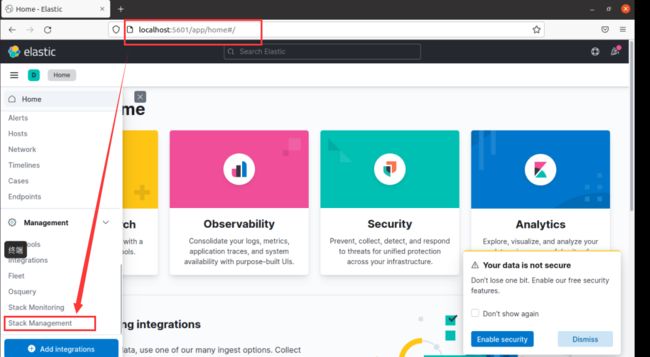

1. 输入http://192.168.88.158:5601,访问Kibana web界面。点击左侧设置,进入Management界面

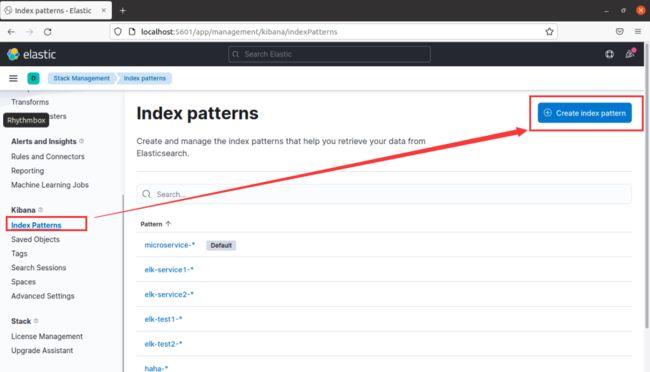

2. 选择索引模式,点击创建索引模式

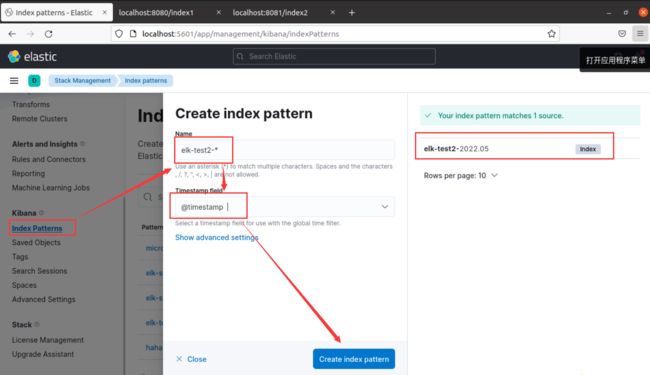

3. 填写名称和时间戳字段,并创建该索引模式

4. 选择Discover,可以看到刚刚创建的两个索引模式

5. 选择elk-test1-索引模式,可以看到微服务1生成的日志

6. 选择elk-test2-索引模式,可以看到微服务2生成的日志
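If Discover shows no data, it helps to confirm that Logstash actually created the indices. Elasticsearch's `_cat/indices` API with `h=index` returns one index name per line as plain text, which can be read without a JSON library. The Java sketch below requests `/_cat/indices/elk-test*?h=index` on this article's Elasticsearch address and lists what it finds; given the `index` setting in logstash.conf, names such as `elk-test1-2022.05` are expected. The class name is hypothetical.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.net.HttpURLConnection;
import java.net.URI;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical check that the elk-test* indices exist in Elasticsearch. */
final class ListIndices {
    /** Parses the plain-text body of _cat/indices?h=index: one index name per line. */
    static List<String> parse(String body) {
        List<String> names = new ArrayList<>();
        for (String line : body.split("\n")) {
            String name = line.trim();
            if (!name.isEmpty()) {
                names.add(name);
            }
        }
        return names;
    }

    /** Performs a GET request and returns the response body as text. */
    static String fetch(String url) throws IOException {
        HttpURLConnection conn = (HttpURLConnection) URI.create(url).toURL().openConnection();
        conn.setConnectTimeout(2000);
        conn.setReadTimeout(5000);
        StringBuilder body = new StringBuilder();
        try (BufferedReader in = new BufferedReader(
                new InputStreamReader(conn.getInputStream(), StandardCharsets.UTF_8))) {
            String line;
            while ((line = in.readLine()) != null) {
                body.append(line).append('\n');
            }
        } finally {
            conn.disconnect();
        }
        return body.toString();
    }

    public static void main(String[] args) throws IOException {
        String es = args.length > 0 ? args[0] : "http://192.168.88.158:9200";
        List<String> indices = parse(fetch(es + "/_cat/indices/elk-test*?h=index"));
        System.out.println(indices.isEmpty() ? "no elk-test* indices yet" : indices.toString());
    }
}
```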