Introduction to Batch Normalization in Deep Neural Networks, with Implementations

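In the earlier post "Introduction to the classic network DenseNet" (fengbingchun's CSDN blog), we saw that DenseNet contains BN layers (Batch Normalization) and that BN takes part in the computation of every Dense Block. This post introduces BN and gives C++ and PyTorch implementations.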

Batch Normalization was proposed by Sergey Ioffe et al. in 2015 in the paper "Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift", available at https://arxiv.org/pdf/1502.03167.pdf .

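Batch Normalization is an algorithmic technique that makes training deep neural networks faster and more stable. It can be applied either before or after the activation function (the original paper inserts it immediately before the nonlinearity). Because its statistics are computed over the mini-batch, it depends on the batch size, and its performance degrades severely when the batch size is small. It is also computed differently during training and during testing (inference).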
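The batch-size dependence is easiest to see on a fully connected layer, where each feature is normalized with statistics taken over the N samples of the batch. The pure-Python sketch below (the helper `bn_fc` is hypothetical and omits gamma and beta) shows that with N = 1 every value is its own batch mean, so the output collapses to zero, and with N = 2 each feature can only end up close to -1 or +1.

```python
def bn_fc(batch, eps=1e-5):
    """Training-mode BN for a fully connected layer (no gamma/beta).

    batch is a list of N samples, each a list of D features; each feature is
    normalized with the mean and biased variance of that feature over the batch.
    """
    n, d = len(batch), len(batch[0])
    out = [[0.0] * d for _ in range(n)]
    for j in range(d):
        col = [row[j] for row in batch]
        mean = sum(col) / n
        var = sum((v - mean) ** 2 for v in col) / n
        for i in range(n):
            out[i][j] = (batch[i][j] - mean) / (var + eps) ** 0.5
    return out


# N = 1: every feature equals its own batch mean, so all information is lost
print(bn_fc([[3.0, -1.0, 7.5]]))  # [[0.0, 0.0, 0.0]]
# N = 2: each feature is pushed to roughly -1 / +1 regardless of its actual values
print(bn_fc([[3.0, 10.0], [5.0, -2.0]]))
```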

As a rule of thumb, BN works better for CNNs, while LN (Layer Normalization) works better for RNNs.
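BN and LN differ mainly in which values share one mean and variance. In the minimal pure-Python sketch below for a 2-D input of shape [N, D] (gamma and beta omitted; `normalize`, `batch_norm_2d` and `layer_norm_2d` are hypothetical helpers), BN groups values per feature across the batch, while LN groups them per sample across its features. LN's output for a sample therefore does not depend on the rest of the batch, which is one commonly cited reason it fits RNNs better.

```python
from statistics import fmean, pvariance


def normalize(values, eps=1e-5):
    """Zero-mean, unit-variance normalization of one group of values."""
    m, var = fmean(values), pvariance(values)
    return [(v - m) / (var + eps) ** 0.5 for v in values]


def batch_norm_2d(x):
    """BN on an [N][D] input: one group per feature j, taken across the batch."""
    cols = [normalize([row[j] for row in x]) for j in range(len(x[0]))]
    return [list(r) for r in zip(*cols)]


def layer_norm_2d(x):
    """LN on an [N][D] input: one group per sample i, taken across its features."""
    return [normalize(row) for row in x]


x = [[1.0, 2.0, 3.0], [4.0, 6.0, 8.0]]
# LN of a sample does not depend on the other samples in the batch ...
print(layer_norm_2d(x)[0] == layer_norm_2d([x[0]])[0])  # True
# ... whereas BN of the same sample changes with the batch it belongs to
print(batch_norm_2d(x)[0] == batch_norm_2d([x[0]])[0])  # False
```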

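During training, the distribution of each layer's inputs changes whenever the parameters of the preceding layers change, which makes training deep neural networks complicated. It slows training down by requiring lower learning rates and careful parameter initialization, and it makes models with saturating nonlinearities notoriously hard to train. The paper calls this phenomenon internal covariate shift and addresses it by normalizing the layer inputs.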

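Batch Normalization is used with mini-batch training. It allows much higher learning rates and makes training less sensitive to initialization. It also acts as a regularizer, in some cases eliminating the need for Dropout.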

The Batch Normalization algorithm is shown below (screenshot from the original paper):
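For reference, Algorithm 1 of the paper (the Batch Normalizing Transform) takes the values $x_1, \dots, x_m$ of one activation over a mini-batch $\mathcal{B}$, together with the learnable parameters $\gamma$ and $\beta$, and computes:

$$
\begin{aligned}
\mu_{\mathcal{B}} &\leftarrow \frac{1}{m}\sum_{i=1}^{m} x_i &&\text{(mini-batch mean)}\\
\sigma_{\mathcal{B}}^{2} &\leftarrow \frac{1}{m}\sum_{i=1}^{m} \left(x_i - \mu_{\mathcal{B}}\right)^{2} &&\text{(mini-batch variance)}\\
\hat{x}_i &\leftarrow \frac{x_i - \mu_{\mathcal{B}}}{\sqrt{\sigma_{\mathcal{B}}^{2} + \epsilon}} &&\text{(normalize)}\\
y_i &\leftarrow \gamma \hat{x}_i + \beta \equiv \mathrm{BN}_{\gamma,\beta}(x_i) &&\text{(scale and shift)}
\end{aligned}
$$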

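Within a mini-batch, each BN layer computes, for every channel, the mean and variance of that channel over all samples (and, for convolutional layers, over all spatial positions), and normalizes the data to zero mean and unit variance. Finally, two learnable parameters, gamma and beta, scale and shift the normalized data. In addition, during training the mean and variance of each BN layer are recorded for every mini-batch, and their expectations over all mini-batches are used as that BN layer's mean and variance at inference time (for the variance the paper uses the unbiased estimate m/(m-1) times the expected mini-batch variance; in practice, frameworks usually keep a moving average instead).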
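The training/inference split described above can be sketched in pure Python for an input laid out as nested lists [N][C][H*W]. This is an illustrative sketch, not the author's code: the helper names `channel_values`, `bn_train`, `population_stats` and `bn_infer` are hypothetical, gamma and beta are per-channel lists as in the paper (the C++ class below uses a single scalar pair), and the population statistics follow the paper's averaging rule rather than a moving average.

```python
from statistics import fmean


def channel_values(x, c):
    """All N*H*W values of channel c; x is a nested list laid out as [N][C][H*W]."""
    return [v for sample in x for v in sample[c]]


def bn_train(x, gamma, beta, eps=1e-5):
    """Training mode: normalize each channel with the statistics of this mini-batch."""
    num_c = len(x[0])
    means, variances = [], []
    for c in range(num_c):
        vals = channel_values(x, c)
        mu = fmean(vals)
        means.append(mu)
        variances.append(fmean([(v - mu) ** 2 for v in vals]))  # biased, divisor m
    y = [[[gamma[c] * (v - means[c]) / (variances[c] + eps) ** 0.5 + beta[c]
           for v in sample[c]] for c in range(num_c)] for sample in x]
    return y, means, variances


def population_stats(batch_means, batch_vars, m):
    """Inference statistics as in the paper: E[x] = average of the mini-batch means,
    Var[x] = m / (m - 1) * average of the mini-batch variances (m values per channel)."""
    num_c = len(batch_means[0])
    mean = [fmean([bm[c] for bm in batch_means]) for c in range(num_c)]
    var = [m / (m - 1) * fmean([bv[c] for bv in batch_vars]) for c in range(num_c)]
    return mean, var


def bn_infer(x, gamma, beta, mean, var, eps=1e-5):
    """Inference mode: fixed statistics, so each sample is processed independently."""
    return [[[gamma[c] * (v - mean[c]) / (var[c] + eps) ** 0.5 + beta[c]
              for v in sample[c]] for c in range(len(sample))] for sample in x]


gamma, beta = [1.0], [0.0]                 # one (gamma, beta) pair per channel, C = 1
batches = [[[[1.0, 2.0]], [[3.0, 4.0]]],   # mini-batch 1: N = 2, C = 1, H*W = 2
           [[[0.0, 2.0]], [[4.0, 6.0]]]]   # mini-batch 2
seen_means, seen_vars = [], []
for b in batches:
    _, mu, var = bn_train(b, gamma, beta)
    seen_means.append(mu)
    seen_vars.append(var)
mean, var = population_stats(seen_means, seen_vars, m=4)  # m = N * H * W
print(mean, var)  # mean == [2.75]; var is 4/3 * (1.25 + 5) / 2, about 4.1667
```

The m/(m-1) factor turns the average of the biased mini-batch variances into an unbiased estimate, where m counts the values per channel in one mini-batch (N*H*W for convolutional layers).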

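Advantages of Batch Normalization:

(1). Larger learning rates can be used without hurting convergence, making training faster and more stable;

(2). It has a regularizing effect that helps prevent overfitting, so Dropout and Local Response Normalization (LRN) can often be removed;

(3). Because the training data is shuffled, the mini-batches differ from epoch to epoch, so normalizing with the statistics of different mini-batches has an effect similar to data augmentation;

(4). It alleviates exploding and vanishing gradients.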
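Advantage (1) is related to a property noted in the paper: for a scalar a, BN(Wu) = BN((aW)u), so scaling the weights does not change the layer output, while the gradient with respect to the scaled weights shrinks by a factor of 1/a, which stabilizes parameter growth. A small pure-Python check of the invariance (the helper `bn` is hypothetical; epsilon defaults to 0 so the equality holds up to rounding):

```python
from statistics import fmean, pvariance


def bn(values, eps=0.0):
    """Normalize one unit's pre-activations over a mini-batch (no gamma/beta)."""
    mu, var = fmean(values), pvariance(values)
    return [(v - mu) / (var + eps) ** 0.5 for v in values]


# Pre-activations Wu of one unit over a mini-batch, and the same with W scaled by a
wu = [0.5, -1.2, 2.0, 0.3]
a = 10.0
scaled = [a * v for v in wu]
print(bn(wu))
print(bn(scaled))  # same values (up to floating-point rounding)
```

With a nonzero epsilon the invariance is only approximate, because epsilon is not scaled along with the variance.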

The C++ implementation is as follows:

batch_normalization.hpp:

```cpp
#ifndef FBC_SRC_NN_BATCH_NORM_HPP_
#define FBC_SRC_NN_BATCH_NORM_HPP_

#include <algorithm>
#include <memory>
#include <vector>

namespace ANN {

class BatchNorm {
public:
    BatchNorm(int number, int channels, int height, int width) : number_(number), channels_(channels), height_(height), width_(width)
    {
        mean_.resize(channels_);
        std::fill(mean_.begin(), mean_.end(), 0.f);
        variance_.resize(channels_);
        std::fill(variance_.begin(), variance_.end(), 0.f);
    }

    int LoadData(const float* data, int length);
    std::unique_ptr<float[]> Run();

    void SetGamma(float gamma) { gamma_ = gamma; }
    float GetGamma() const { return gamma_; }
    void SetBeta(float beta) { beta_ = beta; }
    float GetBeta() const { return beta_; }
    void SetMean(std::vector<float> mean) { mean_ = mean; }
    std::vector<float> GetMean() const { return mean_; }
    void SetVariance(std::vector<float> variance) { variance_ = variance; }
    std::vector<float> GetVariance() const { return variance_; }
    void SetEpsilon(float epsilon) { epsilon_ = epsilon; }

private:
    int number_; // mini-batch size
    int channels_;
    int height_;
    int width_;
    std::vector<float> mean_;     // per-channel mean computed by the last Run()
    std::vector<float> variance_; // per-channel (biased) variance computed by the last Run()
    float gamma_ = 1.f;     // scale
    float beta_ = 0.f;      // shift
    float epsilon_ = 1e-5f; // small positive value to avoid division by zero
    std::vector<float> data_;
};

} // namespace ANN

#endif // FBC_SRC_NN_BATCH_NORM_HPP_
```

batch_normalization.cpp:

```cpp
#include "batch_normalization.hpp"
#include <algorithm>
#include <cmath>
#include <cstring>
#include "common.hpp" // provides the CHECK macro

namespace ANN {

int BatchNorm::LoadData(const float* data, int length)
{
    CHECK(number_ * channels_ * height_ * width_ == length);
    data_.resize(length);
    memcpy(data_.data(), data, length * sizeof(float));
    return 0;
}

std::unique_ptr<float[]> BatchNorm::Run()
{
    int spatial_size = height_ * width_;

    // per-channel mean over all N*H*W values; reset first so repeated calls do not accumulate
    std::fill(mean_.begin(), mean_.end(), 0.f);
    for (int n = 0; n < number_; ++n) {
        int offset = n * (channels_ * spatial_size);
        for (int c = 0; c < channels_; ++c) {
            const float* p = data_.data() + offset + (c * spatial_size);
            for (int k = 0; k < spatial_size; ++k) {
                mean_[c] += *p++;
            }
        }
    }
    std::transform(mean_.begin(), mean_.end(), mean_.begin(), [=](float x) { return x / (number_ * spatial_size); });

    // per-channel biased variance (divisor N*H*W), as in the paper's training-time formula
    std::fill(variance_.begin(), variance_.end(), 0.f);
    for (int n = 0; n < number_; ++n) {
        int offset = n * (channels_ * spatial_size);
        for (int c = 0; c < channels_; ++c) {
            const float* p = data_.data() + offset + (c * spatial_size);
            for (int k = 0; k < spatial_size; ++k) {
                float d = *p++ - mean_[c];
                variance_[c] += d * d;
            }
        }
    }
    std::transform(variance_.begin(), variance_.end(), variance_.begin(), [=](float x) { return x / std::max(1, number_ * spatial_size); });

    std::vector<float> stddev(channels_);
    for (int c = 0; c < channels_; ++c) {
        stddev[c] = std::sqrt(variance_[c] + epsilon_);
    }

    // normalize, then scale and shift: y = gamma * x_hat + beta
    std::unique_ptr<float[]> output(new float[number_ * channels_ * spatial_size]);
    for (int n = 0; n < number_; ++n) {
        const float* p1 = data_.data() + n * (channels_ * spatial_size);
        float* p2 = output.get() + n * (channels_ * spatial_size);
        for (int c = 0; c < channels_; ++c) {
            for (int k = 0; k < spatial_size; ++k) {
                *p2++ = gamma_ * (*p1++ - mean_[c]) / stddev[c] + beta_;
            }
        }
    }

    return output;
}

} // namespace ANN
```

funset.cpp:

int test_batch_normalization()

{

const std::vector data = { 11.1, -2.2, 23.3, 54.4, 58.5, -16.6,

-97.7, -28.8, 49.9, -61.3, 52.6, -33.9,

-2.45, -15.7, 72.4, 9.1, 47.2, 21.7};

const int number = 3, channels = 1, height = 1, width = 6;

ANN::BatchNorm bn(number, channels, height, width);

bn.LoadData(data.data(), data.size());

std::unique_ptr output = bn.Run();

fprintf(stdout, "result:\n");

for (int n = 0; n < number; ++n) {

const float* p = output.get() + n * (channels * height * width);

for (int c = 0; c < channels; ++c) {

for (int h = 0; h < height; ++h) {

for (int w = 0; w < width; ++w) {

fprintf(stdout, "%f, ", p[c * (height * width) + h * width + w]);

}

fprintf(stdout, "\n");

}

}

}

return 0;

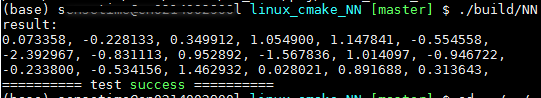

} 执行结果如下:

The following implementation uses the PyTorch API; the source code comes from https://zh.d2l.ai/chapter_convolutional-modern/batch-norm.html

import torch

from torch import nn

import numpy as np

# reference: https://zh.d2l.ai/chapter_convolutional-modern/batch-norm.html

# BatchNorm reimplementation

def batch_norm(X, gamma, beta, moving_mean, moving_var, eps, momentum):

# 通过is_grad_enabled来判断当前模式是训练模式还是预测模式

if not torch.is_grad_enabled():

# 如果是在预测模式下,直接使用传入的移动平均所得的均值和方差

X_hat = (X - moving_mean) / torch.sqrt(moving_var + eps)

else:

assert len(X.shape) in (2, 4)

if len(X.shape) == 2:

# 使用全连接层的情况,计算特征维上的均值和方差

mean = X.mean(dim=0)

var = ((X - mean) ** 2).mean(dim=0)

else:

# 使用二维卷积层的情况,计算通道维上(axis=1)的均值和方差。

# 这里我们需要保持X的形状以便后面可以做广播运算

mean = X.mean(dim=(0, 2, 3), keepdim=True)

var = ((X - mean) ** 2).mean(dim=(0, 2, 3), keepdim=True)

# 训练模式下,用当前的均值和方差做标准化

X_hat = (X - mean) / torch.sqrt(var + eps)

# 更新移动平均的均值和方差

moving_mean = momentum * moving_mean + (1.0 - momentum) * mean

moving_var = momentum * moving_var + (1.0 - momentum) * var

Y = gamma * X_hat + beta # 缩放和移位

return Y, moving_mean.data, moving_var.data

class BatchNorm(nn.Module):

# num_features:完全连接层的输出数量或卷积层的输出通道数。

# num_dims:2表示完全连接层,4表示卷积层

def __init__(self, num_features, num_dims):

super().__init__()

if num_dims == 2:

shape = (1, num_features)

else:

shape = (1, num_features, 1, 1)

# 参与求梯度和迭代的拉伸和偏移参数,分别初始化成1和0

self.gamma = nn.Parameter(torch.ones(shape))

self.beta = nn.Parameter(torch.zeros(shape))

# 非模型参数的变量初始化为0和1

self.moving_mean = torch.zeros(shape)

self.moving_var = torch.ones(shape)

def forward(self, X):

# 如果X不在内存上,将moving_mean和moving_var复制到X所在显存上

if self.moving_mean.device != X.device:

self.moving_mean = self.moving_mean.to(X.device)

self.moving_var = self.moving_var.to(X.device)

# 保存更新过的moving_mean和moving_var

Y, self.moving_mean, self.moving_var = batch_norm(

X, self.gamma, self.beta, self.moving_mean,

self.moving_var, eps=1e-5, momentum=0.9)

return Y

# N = 3, C = 1, H = 1, W = 6

data = [[[[11.1, -2.2, 23.3, 54.4, 58.5, -16.6]]],

[[[-97.7, -28.8, 49.9, -61.3, 52.6, -33.9]]],

[[[-2.45, -15.7, 72.4, 9.1, 47.2, 21.7]]]]

input = torch.FloatTensor(data) # [N, C, H, W]

print("input shape:", input.shape)

model = BatchNorm(1, 2)

output = model(input)

print("output:", output)

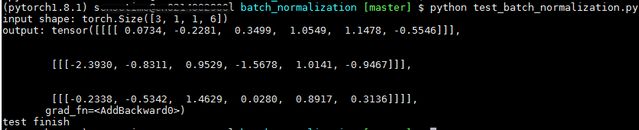

print("test finish")执行结果如下:可见,C++和PyTorch实现结果相同
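Note that `momentum` in the d2l code weights the old moving average, whereas the `momentum` of `torch.nn.BatchNorm2d` (default 0.1) weights the new batch statistic, so momentum=0.9 here corresponds to momentum=0.1 there. A pure-Python sketch of the two update rules applied to the test data above (the function names are hypothetical):

```python
from statistics import fmean


def d2l_update(moving, batch_stat, momentum=0.9):
    """Convention of batch_norm() above: momentum weights the OLD value."""
    return momentum * moving + (1.0 - momentum) * batch_stat


def torch_style_update(running, batch_stat, momentum=0.1):
    """Convention documented for torch.nn.BatchNorm2d: momentum weights the NEW value."""
    return (1.0 - momentum) * running + momentum * batch_stat


data = [11.1, -2.2, 23.3, 54.4, 58.5, -16.6,
        -97.7, -28.8, 49.9, -61.3, 52.6, -33.9,
        -2.45, -15.7, 72.4, 9.1, 47.2, 21.7]
batch_mean = fmean(data)  # C = 1, so the channel mean covers all N*H*W = 18 values

# Both start from moving_mean = 0, as in the BatchNorm class above
m1 = d2l_update(0.0, batch_mean)
m2 = torch_style_update(0.0, batch_mean)
print(batch_mean, m1, m2)  # m1 and m2 are both 0.1 * batch_mean, about 0.786
```

So after the single training-mode forward call in the test above, `model.moving_mean` should hold roughly 0.786.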

The following is test code that calls the tiny-dnn API:

```cpp
#include <algorithm>
#include <cstdio>
#include <cstring>
#include <vector>
#include "tiny_dnn/tiny_dnn.h"

int test_dnn_batch_normalization()
{
    const std::vector<float> data = { 11.1, -2.2, 23.3, 54.4, 58.5, -16.6,
        -97.7, -28.8, 49.9, -61.3, 52.6, -33.9,
        -2.45, -15.7, 72.4, 9.1, 47.2, 21.7 };
    const int number = 3, channels = 1, height = 1, width = 6;
    const int spatial_size = height * width;

    // tensor_t holds one vec_t per sample; each vec_t stores C*H*W values
    tiny_dnn::tensor_t in_data(number), out_data(number);
    for (int n = 0; n < number; ++n) {
        in_data[n].resize(spatial_size * channels);
        out_data[n].resize(spatial_size * channels);
        int offset = n * (spatial_size * channels);
        // assumes tiny_dnn::float_t is float
        memcpy(in_data[n].data(), data.data() + offset, sizeof(float) * spatial_size * channels);
        std::fill(out_data[n].begin(), out_data[n].end(), 0.f);
    }

    std::vector<tiny_dnn::tensor_t*> in(1), out(1);
    in[0] = &in_data;
    out[0] = &out_data;

    tiny_dnn::batch_normalization_layer bn(spatial_size, channels);
    bn.forward_propagation(in, out);

    fprintf(stdout, "tiny_dnn result:\n");
    for (int n = 0; n < number; ++n) {
        for (int s = 0; s < spatial_size * channels; ++s)
            fprintf(stdout, "%f ", out_data[n][s]);
        fprintf(stdout, "\n");
    }

    return 0;
}
```

If the result differs from that of the C++ and PyTorch code above, the reason is that in tiny-dnn's source file math_functions.h the variance is computed with the divisor num_examples*spatial_dim-1.0f (the unbiased estimate) rather than num_examples*spatial_dim.
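The size of that difference can be estimated. With m = N*H*W = 18 values per channel, dividing by m - 1 instead of m inflates the variance by 18/17, so (ignoring epsilon) every normalized output is scaled by sqrt(17/18), about 0.972. A pure-Python sketch, assuming tiny-dnn otherwise applies the same (x - mean) / sqrt(var + eps) formula with eps = 1e-5:

```python
from statistics import fmean, pvariance, variance

data = [11.1, -2.2, 23.3, 54.4, 58.5, -16.6,
        -97.7, -28.8, 49.9, -61.3, 52.6, -33.9,
        -2.45, -15.7, 72.4, 9.1, 47.2, 21.7]  # N = 3, C = 1, H*W = 6
eps = 1e-5
mu = fmean(data)
biased = pvariance(data)   # divisor N*H*W = 18, as in the C++ and PyTorch code
unbiased = variance(data)  # divisor N*H*W - 1 = 17, as described for tiny-dnn

ours = [(v - mu) / (biased + eps) ** 0.5 for v in data]
tiny = [(v - mu) / (unbiased + eps) ** 0.5 for v in data]
ratios = [t / o for t, o in zip(tiny, ours)]
print(ratios[0], (17 / 18) ** 0.5)  # both about 0.972
```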

GitHub:

https://github.com/fengbingchun/NN_Test

https://github.com/fengbingchun/PyTorch_Test