Reading MySQL data with Spark and inserting it into a Hive table

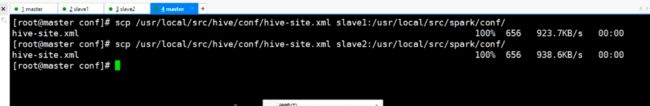

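First, distribute the hive-site.xml file to the Spark conf directory on every machine; otherwise Spark will not be able to find the Hive databases later.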

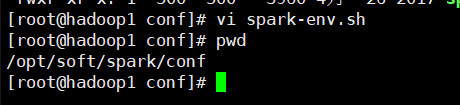
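Before submitting a job, it can help to confirm on each node that the file really landed in the Spark conf directory. The following is a minimal sketch, not part of the original setup: the object `HiveSiteCheck` and its methods are hypothetical names, and the default directory is assumed to be the `SPARK_CONF_DIR` value used in this post.

```scala
import java.io.File

// Hypothetical helper for illustration; not part of the original post.
object HiveSiteCheck {
  // Assumed default, taken from the SPARK_CONF_DIR value used in this post.
  val DefaultConfDir = "/opt/soft/spark/conf"

  // Resolve the conf directory from the environment, falling back to the default.
  def confDir(env: Map[String, String] = sys.env): String =
    env.getOrElse("SPARK_CONF_DIR", DefaultConfDir)

  // True when hive-site.xml exists as a regular file in the given directory.
  def hasHiveSite(dir: String): Boolean =
    new File(dir, "hive-site.xml").isFile

  def main(args: Array[String]): Unit = {
    val dir = confDir()
    if (hasHiveSite(dir)) println(s"hive-site.xml found in $dir")
    else println(s"hive-site.xml is missing from $dir")
  }
}
```

Running `HiveSiteCheck.main` on each machine only reports whether the file is present; it does not validate the contents of hive-site.xml.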

Edit Spark's configuration file spark-env.sh in the conf directory:

export JAVA_HOME=/opt/soft/jdk
export SPARK_MASTER_HOST=hadoop1
export SPARK_MASTER_PORT=7077
export SPARK_WORKER_CORES=1
export SPARK_WORKER_MEMORY=1g
export SPARK_CONF_DIR=/opt/soft/spark/conf
export HADOOP_CONF_DIR=/opt/soft/hadoop/etc/hadoop

Package the code as a jar and run it on Spark

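Create the corresponding Hive table first. The programs below insert into ods_orders5 and ods_orders2 in the odsl database, so those tables must already exist with a matching schema: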

create table ods3(id string,name string,classinfo string,birthday string,phone string,sex string) partitioned by(nian int) row format delimited fields terminated by ',' lines terminated by '\n' ;

package spark

import org.apache.spark.sql.SparkSession

import java.util.Properties

object spark {

  def main(args: Array[String]): Unit = {
    val sparkSession = SparkSession.builder()
      .appName("Task1")
      .master("spark://hadoop1:7077")
      .enableHiveSupport()
      .getOrCreate()

    // Connection properties; used only by the commented-out read.jdbc variant below
    val prop = new Properties()
    prop.setProperty("user", "root")
    prop.setProperty("password", "123456")

    // MySQL connection URL
    val url = "jdbc:mysql://192.168.10.101:3306/test"

    // Read the data over JDBC and return it as a DataFrame
    // val df = sparkSession.read.jdbc(url, "(SELECT * FROM tb_students) as mytable", prop)
    val df = sparkSession.read.format("jdbc")
      .option("user", "root")
      .option("password", "123456")
      .option("url", url)
      .option("dbtable", "(SELECT * FROM tb_students) as mytable")
      .load()

    // Register the DataFrame as a temporary view (it lives only in this session);
    // createOrReplaceTempView replaces the deprecated registerTempTable
    df.createOrReplaceTempView("orders")

    // Run the Hive statements that insert the rows into the target table
    sparkSession.sql("use odsl")
    sparkSession.sql("insert into ods_orders5 select * from orders")

    sparkSession.stop()
  }
}
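The program above repeats the connection settings and embeds the password as a literal. One possible way to tidy this, shown here only as a sketch, is to build the reader options in a single place. The object `JdbcSource`, its method names, and the `MYSQL_PASSWORD` environment variable mentioned afterwards are assumptions for illustration, not something the original code defines.

```scala
// Hypothetical helper; the object and method names are illustrative only.
object JdbcSource {
  // Wrap a SELECT statement so it can be passed as the "dbtable" option,
  // which accepts a table name or a parenthesised subquery with an alias.
  def asSubquery(sql: String, alias: String = "mytable"): String =
    s"(${sql.trim}) AS $alias"

  // Collect the reader options in one map. The password is supplied by the
  // caller (for example from an environment variable) instead of a literal.
  def options(url: String, user: String, password: String, sql: String): Map[String, String] =
    Map(
      "url" -> url,
      "user" -> user,
      "password" -> password,
      "dbtable" -> asSubquery(sql)
    )
}
```

With this in place, the read could be written as `sparkSession.read.format("jdbc").options(opts).load()`, where `opts` comes from `JdbcSource.options(url, "root", sys.env.getOrElse("MYSQL_PASSWORD", ""), "SELECT * FROM tb_students")`. `DataFrameReader.options` accepts a `Map[String, String]`, so the rest of the program stays unchanged.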

Inserting with a static partition

package spark

import org.apache.spark.sql.SparkSession

object spark2 {

  def main(args: Array[String]): Unit = {
    val sparkSession = SparkSession.builder()
      .appName("spark2")
      .enableHiveSupport()
      .getOrCreate()

    // Read only the rows for the partition being loaded (students born in 1999)
    val df = sparkSession.read.format("jdbc")
      .option("url", "jdbc:mysql://192.168.10.101:3306/test")
      .option("user", "root")
      .option("password", "123456")
      .option("dbtable", "(SELECT * FROM tb_students WHERE year(birthday)=1999) as mytable")
      .load()

    // Put the retrieved data into the Hive partitioned table
    // Temporary view; createOrReplaceTempView replaces the deprecated registerTempTable
    df.createOrReplaceTempView("orders")

    sparkSession.sql("use odsl")
    sparkSession.sql("insert into ods_orders2 partition(nian=1999) select * from orders")

    sparkSession.stop()
  }
}
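In the static-partition job, the year 1999 appears twice: once in the MySQL filter and once in the Hive partition clause, and the two must agree. A small sketch of how both strings could be derived from one value is given below. The object `StaticPartition` and its methods are hypothetical, and the column names `birthday` and `nian` are taken from the code and table definition in this post.

```scala
// Hypothetical helper; derives both statements from a single year value.
object StaticPartition {
  // Subquery for the JDBC "dbtable" option, filtered to one birth year.
  def sourceQuery(year: Int): String =
    s"(SELECT * FROM tb_students WHERE year(birthday)=$year) AS mytable"

  // Hive INSERT that writes the temporary view into the matching static partition.
  def insertSql(table: String, year: Int, view: String = "orders"): String =
    s"INSERT INTO $table PARTITION(nian=$year) SELECT * FROM $view"
}
```

For example, `StaticPartition.sourceQuery(1999)` can be passed to `.option("dbtable", ...)` and `StaticPartition.insertSql("ods_orders2", 1999)` to `sparkSession.sql(...)`, so loading another year only requires changing one argument.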

Run the packaged jar

spark-submit --class spark.spark /opt/Mainscala.jar

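If the job fails with the error Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient, try the following fixes:

1. Reinitialize the Hive metastore: drop the existing Hive metastore tables, then initialize the schema again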

schematool -dbType mysql -initSchema

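2. Check whether the schema version recorded in the Hive metastore matches the Hive version currently installed, and update it if it does not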

select * from version;

update version set SCHEMA_VERSION='2.1.0' where VER_ID=1;

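3. Edit hive-site.xml and set hive.metastore.schema.verification to false

    <property>
      <name>hive.metastore.schema.verification</name>
      <value>false</value>
    </property>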