ClickHouse Deployment

目录

- 前置要求

- 注意事项

- 单机搭建

-

- 1 创建目录

- 2 启动docker镜像服务

- 3 使用client连接clickhouse服务

- 4 查询clickhouse状态

- 集群搭建

-

- 1添加配置文件

-

- 配置文件目录结构

- conf目录下文件

- 文件内容

-

- docker-compose.yaml

- config.xml(clickhouse配置文件)

- metrika1.xml、metrika2.xml、metrika3.xml(集群节点配置)

- users.xml(用户信息)

- 2启动项目

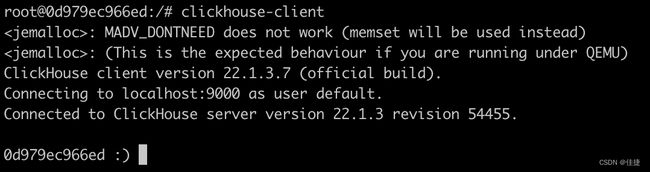

- 3查询容器id

- 4进入容器

- 5连接clickhouse

- 6查询集群状态

Prerequisites

A single-node setup requires Docker. The cluster setup additionally requires Docker Compose.

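Notes

The commands below are written as used on macOS, where Compose is invoked as docker compose. On Linux systems that only have the standalone Compose binary, use docker-compose instead.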
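The choice between the two spellings can also be made by a script. The following is a minimal Python sketch, not part of the original procedure: it probes for docker compose first and falls back to docker-compose. The function names compose_command and _succeeds are hypothetical, and the probe parameter exists only so the logic can be exercised without Docker installed.

```python
import subprocess
from typing import Callable, List, Sequence


def _succeeds(argv: Sequence[str]) -> bool:
    """Return True if the command can be started and exits with status 0."""
    try:
        result = subprocess.run(
            list(argv),
            stdout=subprocess.DEVNULL,
            stderr=subprocess.DEVNULL,
            check=False,
        )
    except OSError:
        # The binary is not installed or cannot be executed.
        return False
    return result.returncode == 0


def compose_command(probe: Callable[[Sequence[str]], bool] = _succeeds) -> List[str]:
    """Pick the Compose invocation that is available on this machine."""
    if probe(["docker", "compose", "version"]):
        return ["docker", "compose"]   # Compose plugin of the docker CLI
    if probe(["docker-compose", "version"]):
        return ["docker-compose"]      # standalone binary
    raise RuntimeError("neither 'docker compose' nor 'docker-compose' is available")
```

A caller would then run, for example, compose_command() + ["up", "-d"] through subprocess.run.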

Single-node setup

This setup uses Docker.

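1 Create the directory

/Users/jiajie/docker/clickhouse/clickhouse_test_db is a directory created on the host machine. Create such a directory first, then replace the path in the command below with the actual path on your machine.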

2 Start the Docker container

docker run -d -p 8123:8123 -p 9000:9000 --name clickhouse-test-server --ulimit nofile=262144:262144 --volume=/Users/jiajie/docker/clickhouse/clickhouse_test_db:/var/lib/clickhouse yandex/clickhouse-server
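Steps 1 and 2 can be combined so that the host path is written only once. The sketch below is one possible way to do that in Python: it creates the data directory and returns the same docker run arguments as above. The function name build_run_command and its parameters are hypothetical. The function only builds the argument list and does not start the container.

```python
from pathlib import Path
from typing import List


def build_run_command(data_dir: str, name: str = "clickhouse-test-server") -> List[str]:
    """Create the host data directory and return the docker run argv from step 2."""
    host_dir = Path(data_dir).expanduser().resolve()
    host_dir.mkdir(parents=True, exist_ok=True)  # step 1: create the directory
    return [
        "docker", "run", "-d",
        "-p", "8123:8123",   # HTTP interface
        "-p", "9000:9000",   # native TCP interface
        "--name", name,
        "--ulimit", "nofile=262144:262144",
        f"--volume={host_dir}:/var/lib/clickhouse",
        "yandex/clickhouse-server",
    ]
```

Passing the returned list to subprocess.run(..., check=True) would start the container in the same way as the command above.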

3 Connect to the ClickHouse server with the client

docker run -it --rm --link clickhouse-test-server:clickhouse-server yandex/clickhouse-client --host clickhouse-server

4 Query the ClickHouse status

select * from system.clusters;
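Because step 2 also publishes port 8123, the same query can be sent over the ClickHouse HTTP interface instead of the client container. The following Python sketch assumes the server is reachable on localhost:8123 and that the default user has no password, which is an assumption about the stock image. The function names query_url and run_query are hypothetical.

```python
from urllib.parse import quote
from urllib.request import urlopen


def query_url(query: str, host: str = "localhost", port: int = 8123) -> str:
    """Build a URL for the HTTP interface with the query as a URL parameter."""
    return f"http://{host}:{port}/?query={quote(query, safe='')}"


def run_query(query: str, host: str = "localhost", port: int = 8123,
              timeout: float = 5.0) -> str:
    """Send the query with an HTTP GET and return the response body as text."""
    with urlopen(query_url(query, host, port), timeout=timeout) as response:
        return response.read().decode("utf-8")
```

For example, print(run_query("select * from system.clusters")) would print the result as tab-separated text once the container is running.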

Cluster setup

This setup uses Docker Compose.

1 Add configuration files

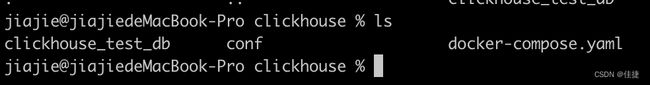

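Configuration directory structure

The root directory mainly contains the conf directory and the docker-compose.yaml file.

Files in the conf directory

File contents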

docker-compose.yaml

version: "3.7"
services:
  ck1:
    image: yandex/clickhouse-server
    ulimits:
      nofile:
        soft: 300001
        hard: 300002
    ports:
      - 9001:9000
    volumes:
      - ./conf/config.xml:/etc/clickhouse-server/config.xml
      - ./conf/users.xml:/etc/clickhouse-server/users.xml
      - ./conf/metrika1.xml:/etc/metrika.xml
    links:
      - "zk1"
    depends_on:
      - zk1
  ck2:
    image: yandex/clickhouse-server
    ulimits:
      nofile:
        soft: 300001
        hard: 300002
    volumes:
      - ./conf/metrika2.xml:/etc/metrika.xml
      - ./conf/config.xml:/etc/clickhouse-server/config.xml
      - ./conf/users.xml:/etc/clickhouse-server/users.xml
    ports:
      - 9002:9000
    depends_on:
      - zk1
  ck3:
    image: yandex/clickhouse-server
    ulimits:
      nofile:
        soft: 300001
        hard: 300002
    volumes:
      - ./conf/metrika3.xml:/etc/metrika.xml
      - ./conf/config.xml:/etc/clickhouse-server/config.xml
      - ./conf/users.xml:/etc/clickhouse-server/users.xml
    ports:
      - 9003:9000
    depends_on:
      - zk1
  zk1:
    image: zookeeper
    restart: always
    hostname: zk1
    expose:
      - "2181"
    ports:
      - 2181:2181
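In the compose file, each ClickHouse node listens on 9000 inside its container and is published on a different host port. The Python sketch below restates those mappings and checks that no host port is used twice. The PORTS table is copied by hand from the file above rather than parsed from it, and the function names are hypothetical.

```python
from typing import Dict, Tuple

# "host:container" port mappings copied from docker-compose.yaml.
PORTS: Dict[str, str] = {
    "ck1": "9001:9000",
    "ck2": "9002:9000",
    "ck3": "9003:9000",
    "zk1": "2181:2181",
}


def parse_mapping(mapping: str) -> Tuple[int, int]:
    """Split a "host:container" mapping into two integers."""
    host_port, container_port = mapping.split(":")
    return int(host_port), int(container_port)


def host_endpoints(ports: Dict[str, str]) -> Dict[str, int]:
    """Return the host port of each service and reject duplicate host ports."""
    seen: Dict[int, str] = {}
    result: Dict[str, int] = {}
    for service, mapping in ports.items():
        host_port, _ = parse_mapping(mapping)
        if host_port in seen:
            raise ValueError(
                f"host port {host_port} is used by both {seen[host_port]} and {service}"
            )
        seen[host_port] = service
        result[service] = host_port
    return result
```

From the host, ck1 is therefore reached on port 9001, ck2 on 9002 and ck3 on 9003, while the nodes address each other by service name on port 9000.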

config.xml (ClickHouse configuration file)

<clickhouse>
    <logger>
        <level>trace</level>
        <log>/var/log/clickhouse-server/clickhouse-server.log</log>
        <errorlog>/var/log/clickhouse-server/clickhouse-server.err.log</errorlog>
        <size>1000M</size>
        <count>10</count>
    </logger>
    <http_port>8123</http_port>
    <tcp_port>9000</tcp_port>
    <mysql_port>9004</mysql_port>
    <postgresql_port>9005</postgresql_port>
    <interserver_http_port>9009</interserver_http_port>
    <max_connections>4096</max_connections>
    <keep_alive_timeout>3</keep_alive_timeout>
    <grpc>
        <enable_ssl>false</enable_ssl>
        <ssl_cert_file>/path/to/ssl_cert_file</ssl_cert_file>
        <ssl_key_file>/path/to/ssl_key_file</ssl_key_file>
        <ssl_require_client_auth>false</ssl_require_client_auth>
        <ssl_ca_cert_file>/path/to/ssl_ca_cert_file</ssl_ca_cert_file>
        <compression>deflate</compression>
        <compression_level>medium</compression_level>
        <max_send_message_size>-1</max_send_message_size>
        <max_receive_message_size>-1</max_receive_message_size>
        <verbose_logs>false</verbose_logs>
    </grpc>

    <openSSL>
        <server>
            <certificateFile>/etc/clickhouse-server/server.crt</certificateFile>
            <privateKeyFile>/etc/clickhouse-server/server.key</privateKeyFile>
            <dhParamsFile>/etc/clickhouse-server/dhparam.pem</dhParamsFile>
            <verificationMode>none</verificationMode>
            <loadDefaultCAFile>true</loadDefaultCAFile>
            <cacheSessions>true</cacheSessions>
            <disableProtocols>sslv2,sslv3</disableProtocols>
            <preferServerCiphers>true</preferServerCiphers>
        </server>
        <client>
            <loadDefaultCAFile>true</loadDefaultCAFile>
            <cacheSessions>true</cacheSessions>
            <disableProtocols>sslv2,sslv3</disableProtocols>
            <preferServerCiphers>true</preferServerCiphers>
            <invalidCertificateHandler>
                <name>RejectCertificateHandler</name>
            </invalidCertificateHandler>
        </client>
    </openSSL>

    <max_concurrent_queries>100</max_concurrent_queries>
    <max_server_memory_usage>0</max_server_memory_usage>
    <max_thread_pool_size>10000</max_thread_pool_size>
    <max_server_memory_usage_to_ram_ratio>0.9</max_server_memory_usage_to_ram_ratio>
    <total_memory_profiler_step>4194304</total_memory_profiler_step>
    <total_memory_tracker_sample_probability>0</total_memory_tracker_sample_probability>
    <uncompressed_cache_size>8589934592</uncompressed_cache_size>
    <mark_cache_size>5368709120</mark_cache_size>
    <mmap_cache_size>1000</mmap_cache_size>
    <compiled_expression_cache_size>134217728</compiled_expression_cache_size>
    <compiled_expression_cache_elements_size>10000</compiled_expression_cache_elements_size>
    <path>/var/lib/clickhouse/</path>
    <tmp_path>/var/lib/clickhouse/tmp/</tmp_path>
    <user_files_path>/var/lib/clickhouse/user_files/</user_files_path>
    <ldap_servers>
    </ldap_servers>
    <user_directories>
        <users_xml>
            <path>users.xml</path>
        </users_xml>
        <local_directory>
            <path>/var/lib/clickhouse/access/</path>
        </local_directory>
    </user_directories>
    <default_profile>default</default_profile>
    <custom_settings_prefixes></custom_settings_prefixes>
    <default_database>default</default_database>
    <mlock_executable>true</mlock_executable>
    <remap_executable>false</remap_executable>


    <remote_servers incl="clickhouse_remote_servers">
        <test_unavailable_shard>
            <shard>
                <replica>
                    <host>localhost</host>
                    <port>9000</port>
                </replica>
            </shard>
            <shard>
                <replica>
                    <host>localhost</host>
                    <port>1</port>
                </replica>
            </shard>
        </test_unavailable_shard>
    </remote_servers>
    <zookeeper incl="zookeeper-servers">
    </zookeeper>
    <builtin_dictionaries_reload_interval>3600</builtin_dictionaries_reload_interval>
    <max_session_timeout>3600</max_session_timeout>
    <default_session_timeout>60</default_session_timeout>

    <query_log>
        <database>system</database>
        <table>query_log</table>
        <partition_by>toYYYYMM(event_date)</partition_by>
        <flush_interval_milliseconds>7500</flush_interval_milliseconds>
    </query_log>
    <trace_log>
        <database>system</database>
        <table>trace_log</table>
        <partition_by>toYYYYMM(event_date)</partition_by>
        <flush_interval_milliseconds>7500</flush_interval_milliseconds>
    </trace_log>
    <query_thread_log>
        <database>system</database>
        <table>query_thread_log</table>
        <partition_by>toYYYYMM(event_date)</partition_by>
        <flush_interval_milliseconds>7500</flush_interval_milliseconds>
    </query_thread_log>
    <query_views_log>
        <database>system</database>
        <table>query_views_log</table>
        <partition_by>toYYYYMM(event_date)</partition_by>
        <flush_interval_milliseconds>7500</flush_interval_milliseconds>
    </query_views_log>
    <part_log>
        <database>system</database>
        <table>part_log</table>
        <partition_by>toYYYYMM(event_date)</partition_by>
        <flush_interval_milliseconds>7500</flush_interval_milliseconds>
    </part_log>
    <metric_log>
        <database>system</database>
        <table>metric_log</table>
        <flush_interval_milliseconds>7500</flush_interval_milliseconds>
        <collect_interval_milliseconds>1000</collect_interval_milliseconds>
    </metric_log>
    <asynchronous_metric_log>
        <database>system</database>
        <table>asynchronous_metric_log</table>
        <flush_interval_milliseconds>7000</flush_interval_milliseconds>
    </asynchronous_metric_log>
    <opentelemetry_span_log>
        <engine>
            engine MergeTree
            partition by toYYYYMM(finish_date)
            order by (finish_date, finish_time_us, trace_id)
        </engine>
        <database>system</database>
        <table>opentelemetry_span_log</table>
        <flush_interval_milliseconds>7500</flush_interval_milliseconds>
    </opentelemetry_span_log>
    <crash_log>
        <database>system</database>
        <table>crash_log</table>
        <partition_by />
        <flush_interval_milliseconds>1000</flush_interval_milliseconds>
    </crash_log>
    <session_log>
        <database>system</database>
        <table>session_log</table>
        <partition_by>toYYYYMM(event_date)</partition_by>
        <flush_interval_milliseconds>7500</flush_interval_milliseconds>
    </session_log>

    <top_level_domains_lists>
    </top_level_domains_lists>
    <dictionaries_config>*_dictionary.xml</dictionaries_config>
    <user_defined_executable_functions_config>*_function.xml</user_defined_executable_functions_config>
    <encryption_codecs>
    </encryption_codecs>
    <distributed_ddl>
        <path>/clickhouse/task_queue/ddl</path>
    </distributed_ddl>
    <graphite_rollup_example>
        <pattern>
            <regexp>click_cost</regexp>
            <function>any</function>
            <retention>
                <age>0</age>
                <precision>3600</precision>
            </retention>
            <retention>
                <age>86400</age>
                <precision>60</precision>
            </retention>
        </pattern>
        <default>
            <function>max</function>
            <retention>
                <age>0</age>
                <precision>60</precision>
            </retention>
            <retention>
                <age>3600</age>
                <precision>300</precision>
            </retention>
            <retention>
                <age>86400</age>
                <precision>3600</precision>
            </retention>
        </default>
    </graphite_rollup_example>
    <format_schema_path>/var/lib/clickhouse/format_schemas/</format_schema_path>
    <query_masking_rules>
        <rule>
            <name>hide encrypt/decrypt arguments</name>
            <regexp>((?:aes_)?(?:encrypt|decrypt)(?:_mysql)?)\s*\(\s*(?:'(?:\\'|.)+'|.*?)\s*\)</regexp>
            <replace>\1(???)</replace>
        </rule>
    </query_masking_rules>
    <send_crash_reports>
        <enabled>false</enabled>
        <anonymize>false</anonymize>
        <endpoint>https://[email protected]/5226277</endpoint>
    </send_crash_reports>
    <include_from>/etc/metrika.xml</include_from>
</clickhouse>
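The config.xml above links to the per-node file in two places: include_from names /etc/metrika.xml (which the compose file mounts from metrika1.xml, metrika2.xml or metrika3.xml), and the incl attributes on remote_servers and zookeeper name elements inside that file. The Python sketch below is a simplified model of that substitution and not ClickHouse's actual implementation. It assumes that the children of the named element are appended to the element carrying incl, and it uses shortened sample documents. The function name resolve_includes is hypothetical.

```python
import copy
import xml.etree.ElementTree as ET

# Shortened stand-ins for config.xml and /etc/metrika.xml.
MAIN = """
<clickhouse>
    <remote_servers incl="clickhouse_remote_servers">
        <test_unavailable_shard />
    </remote_servers>
    <zookeeper incl="zookeeper-servers" />
</clickhouse>
"""

SUBSTITUTIONS = """
<yandex>
    <clickhouse_remote_servers>
        <test_cluster />
    </clickhouse_remote_servers>
    <zookeeper-servers>
        <node index="1"><host>zk1</host><port>2181</port></node>
    </zookeeper-servers>
</yandex>
"""


def resolve_includes(main_xml: str, substitutions_xml: str) -> ET.Element:
    """Append the children of <name> from the substitutions document to every
    element that carries incl="name" (simplified model, see assumptions above)."""
    root = ET.fromstring(main_xml)
    subs = ET.fromstring(substitutions_xml)
    for element in list(root.iter()):
        name = element.attrib.pop("incl", None)
        if name is None:
            continue
        source = next((child for child in subs if child.tag == name), None)
        if source is None:
            continue  # no matching substitution: leave the element as written
        for child in source:
            element.append(copy.deepcopy(child))
    return root
```

Under this model, the resolved remote_servers element holds both test_unavailable_shard from config.xml and test_cluster from the metrika file.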

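metrika1.xml, metrika2.xml, metrika3.xml (cluster node configuration)

The weight tag sets the write weight of a shard: the larger the weight, the larger the share of written data that shard receives.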

<yandex>
    <clickhouse_remote_servers>
        <test_cluster>
            <shard>
                <weight>1</weight>
                <internal_replication>true</internal_replication>
                <replica>
                    <host>ck1</host>
                    <port>9000</port>
                </replica>
            </shard>
            <shard>
                <weight>1</weight>
                <internal_replication>true</internal_replication>
                <replica>
                    <host>ck2</host>
                    <port>9000</port>
                </replica>
            </shard>
            <shard>
                <weight>1</weight>
                <internal_replication>true</internal_replication>
                <replica>
                    <host>ck3</host>
                    <port>9000</port>
                </replica>
            </shard>
        </test_cluster>
    </clickhouse_remote_servers>
    <zookeeper-servers>
        <node index="1">
            <host>zk1</host>
            <port>2181</port>
        </node>
    </zookeeper-servers>
</yandex>
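To make the effect of the weight tag concrete, the following Python sketch models how a write through a Distributed table is routed: the value of the sharding expression is taken modulo the total weight, and the shard whose half-open interval contains the remainder receives the row. This mirrors the rule described in the ClickHouse documentation but is only an illustration. The function name pick_shard is hypothetical.

```python
from typing import Sequence


def pick_shard(sharding_value: int, weights: Sequence[int]) -> int:
    """Return the 0-based index of the shard that receives a row.

    Shard i owns the remainders in [sum(weights[:i]), sum(weights[:i + 1])).
    """
    total = sum(weights)
    if total <= 0:
        raise ValueError("total weight must be positive")
    remainder = sharding_value % total
    upper = 0
    for index, weight in enumerate(weights):
        upper += weight
        if remainder < upper:
            return index
    raise AssertionError("unreachable: the remainder is always below the total weight")
```

With the three weights of 1 configured above, consecutive sharding values rotate evenly over ck1, ck2 and ck3. With weights such as [2, 1], the first shard would receive two thirds of the rows.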

users.xml (user information)

<clickhouse>
    <profiles>
        <default>
            <max_memory_usage>10000000000</max_memory_usage>
            <load_balancing>random</load_balancing>
            <allow_ddl>1</allow_ddl>
            <readonly>0</readonly>
        </default>
        <readonly>
            <readonly>1</readonly>
        </readonly>
    </profiles>
    <users>
        <default>
            <access_management>1</access_management>
            <password></password>
            <networks>
                <ip>::/0</ip>
            </networks>
            <profile>default</profile>
            <quota>default</quota>
        </default>

<test>

            <password></password>
            <quota>default</quota>
            <profile>default</profile>
            <allow_databases>
                <database>default</database>
                <database>test_dictionaries</database>
            </allow_databases>
            <allow_dictionaries>
                <dictionary>replicaTest_all</dictionary>
            </allow_dictionaries>
        </test>
    </users>
    <quotas>
        <default>
            <interval>
                <duration>3600</duration>
                <queries>0</queries>
                <errors>0</errors>
                <result_rows>0</result_rows>
                <read_rows>0</read_rows>
                <execution_time>0</execution_time>
            </interval>
        </default>
    </quotas>
</clickhouse>

2 Start the project

Run the following command in the directory that contains docker-compose.yaml.

docker compose up -d

3 Find the container ID

docker ps -a

4 Enter the container

docker exec -it <container-id> /bin/bash

5 Connect to ClickHouse

clickhouse-client

6 Query the cluster status

select * from system.clusters;
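Steps 4 to 6 can also be run non-interactively from the host. The Python sketch below is one way to do that, assuming the container name or ID from step 3 is passed in and the cluster is named test_cluster as in the metrika files. It runs clickhouse-client inside the container with --query and parses the tab-separated output. The names QUERY, parse_clusters and cluster_rows are hypothetical.

```python
import subprocess
from typing import List, Tuple

Row = Tuple[str, int, str, int]

# Restrict the output to the cluster defined in the metrika files.
QUERY = (
    "SELECT cluster, shard_num, host_name, port "
    "FROM system.clusters WHERE cluster = 'test_cluster' "
    "ORDER BY shard_num FORMAT TSV"
)


def parse_clusters(tsv: str) -> List[Row]:
    """Parse tab-separated rows of (cluster, shard_num, host_name, port)."""
    rows: List[Row] = []
    for line in tsv.splitlines():
        if not line.strip():
            continue
        cluster, shard_num, host_name, port = line.split("\t")
        rows.append((cluster, int(shard_num), host_name, int(port)))
    return rows


def cluster_rows(container: str) -> List[Row]:
    """Run the query inside the given container and return the parsed rows."""
    completed = subprocess.run(
        ["docker", "exec", container, "clickhouse-client", "--query", QUERY],
        check=True,
        capture_output=True,
        text=True,
    )
    return parse_clusters(completed.stdout)
```

If the cluster is configured as described, cluster_rows should return one row per shard, with hosts ck1, ck2 and ck3 on port 9000.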