Installing and Using Logstash

Official site: https://www.elastic.co/cn/logstash/

[root@VM-4-10-centos logstash]# tar -zxvf logstash-8.11.1-linux-x86_64.tar.gz -C ../software/

[root@VM-4-10-centos logstash]# mv logstash-8.11.1/ logstash

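3. Startup test

Run the most basic Logstash pipeline. It reads from the console (stdin) and prints to the console (stdout), which makes it convenient for testing.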

[root@VM-4-10-centos logstash]# bin/logstash -e 'input { stdin { } } output { stdout {} }'

4. Common data collection examples

Input plugin configuration

Official input plugins: https://www.elastic.co/guide/en/logstash/current/input-plugins.html

Collect Logstash heartbeats and print them to the console

heartbeat.conf

input {

heartbeat {

# emit a heartbeat event every 10 seconds

interval => 10

type => "heartbeat"

}

}

output {

stdout {

codec => rubydebug

}

}

[root@VM-4-10-centos logstash]# bin/logstash -f confdata/heartbeat.conf

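Go to the Logstash installation directory and edit config/logstash.yml, setting config.reload.automatic to true.

With this setting you no longer have to stop and restart Logstash every time you change a pipeline configuration file. Logstash detects the change and reloads the pipeline automatically.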
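A minimal sketch of making that change from the shell, assuming it is run from the Logstash installation directory. `enable_auto_reload` is a hypothetical helper name, not part of Logstash; it removes any existing `config.reload.automatic` line (including a commented-out one) and appends the setting.

```shell
# Hypothetical helper: set config.reload.automatic to true in a logstash.yml file.
enable_auto_reload() {
  local yml="$1"
  if [ ! -f "$yml" ]; then
    echo "settings file not found: $yml" >&2
    return 1
  fi
  # Delete any existing entry, commented out or not; sed keeps a backup as <file>.bak.
  sed -i.bak '/^#\{0,1\} *config\.reload\.automatic:/d' "$yml"
  # Append the setting so that exactly one entry remains.
  echo 'config.reload.automatic: true' >> "$yml"
}
```

Call it as `enable_auto_reload config/logstash.yml`, then start Logstash again so the new setting is read. For a one-off run, the same behaviour can be requested with the `--config.reload.automatic` command-line flag instead of editing the file.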

Listen on a TCP port and print incoming data to the console

Create a configuration file named weblog.conf with the following content:

input {

tcp {

port => 8848

}

}

output {

stdout { }

}

[root@VM-4-10-centos logstash]# bin/logstash -f confdata/weblog.conf

[root@VM-4-10-centos ~]# echo 'hello logstash' | nc localhost 8848

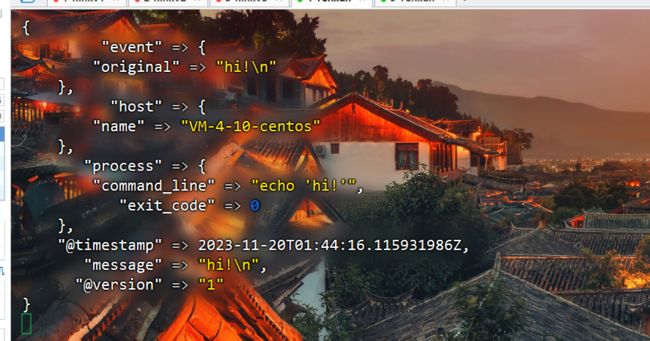

Run a shell command periodically and emit its entire output as an event

input {

exec {

command => "echo 'hi!'"

# run the command every 30 seconds

interval => 30

}

}

output {

stdout { }

}

Monitor a log file with the file input plugin

input {

file {

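# path of the file to watch

path => "/opt/software/logstash/logdata/test.log"

# how often to check the file for changes, in seconds

stat_interval => 30

# read from the beginning of the file (only applies to files Logstash has not seen before)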

start_position => "beginning"

}

}

output{

stdout{}

}
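To see events arrive while this pipeline is running, something has to append lines to the watched file. The sketch below does that from a second terminal; `append_sample_lines` is a hypothetical helper name and the line format is made up for illustration.

```shell
# Hypothetical helper: append COUNT newline-terminated sample lines to a log file.
append_sample_lines() {
  local log_file="$1" count="${2:-3}" i
  # Create the parent directory if it does not exist yet.
  mkdir -p "$(dirname "$log_file")"
  for i in $(seq 1 "$count"); do
    printf '%s INFO sample line %d\n' "$(date '+%Y-%m-%dT%H:%M:%S')" "$i" >> "$log_file"
  done
}
```

For the configuration above, the call would be `append_sample_lines /opt/software/logstash/logdata/test.log 3`. Since `stat_interval` is 30, the new lines may take up to about half a minute to show up on the console.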

Generate random log events

The usual purpose of this is to test plugin performance.

input {

generator {

count => 3

lines => [

"java",

"python",

"helloworld"

]

ecs_compatibility => disabled

}

}

output {

stdout { }

}

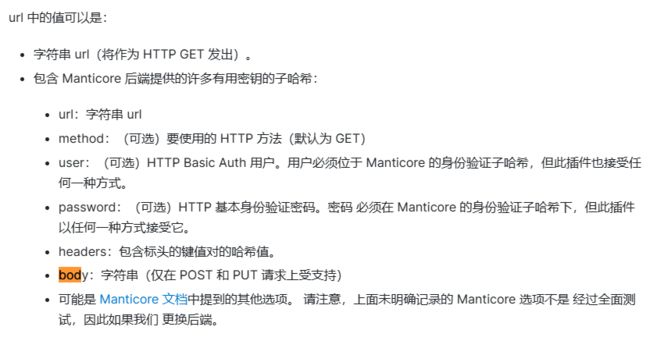

Collect data by calling an HTTP API

input {

http_poller {

urls => {

url => "http://api.openweathermap.org/data/2.5/weather?q=London,uk&APPID=7dbe7341764f682c2242e744c4f167b0&units=metric"

}

request_timeout => 60

schedule => { every => "5s"}

codec => "json"

metadata_target => "http_poller_metadata"

}

}

output {

stdout {

}

}

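JDBC input plugin

First, place the appropriate JDBC driver library where Logstash can read it; the jdbc_driver_library setting below must point to it.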

input {

jdbc {

# location of the JDBC driver JAR

jdbc_driver_library => "/opt/software/logstash/lib/mysql-connector-java-8.0.27.jar"

jdbc_driver_class => "com.mysql.cj.jdbc.Driver"

jdbc_connection_string => "jdbc:mysql://10.0.4.10:3306/metastore"

jdbc_user => "root"

jdbc_password => "123456"

schedule => "* * * * *"

statement => "select * from DBS;"

}

}

output {

stdout {

}

}

Filter plugin configuration

Official filter plugins: https://www.elastic.co/guide/en/logstash/current/filter-plugins.html

Drop filter plugin (drops every event that reaches it)

input {

jdbc {

# location of the JDBC driver JAR

jdbc_driver_library => "/opt/software/logstash/lib/mysql-connector-java-8.0.27.jar"

jdbc_driver_class => "com.mysql.cj.jdbc.Driver"

jdbc_connection_string => "jdbc:mysql://10.0.4.10:3306/metastore"

jdbc_user => "root"

jdbc_password => "123456"

schedule => "* * * * *"

statement => "select * from DBS;"

}

}

filter {

if [name] == "zhangtest" {

drop { }

}

}

output {

stdout {

}

}

With this filter in place, the row whose name is zhangtest is no longer collected.

Grok filter plugin

Grok is a good way to parse unstructured log data into something structured and queryable.

input {

generator {

message => "2019-09-09T13:00:00Z Whose woods these are I think I know."

count => 1

}

}

filter {

grok {

match => [

"message", "%{TIMESTAMP_ISO8601:timestamp_string}%{SPACE}%{GREEDYDATA:line}"

]

}

}

output {

stdout {

codec => rubydebug

}

}

Dissect filter

input {

generator {

message => "<1>Oct 16 20:21:22 www1 1,2016/10/16 20:21:20,3,THREAT,SCAN,6,2016/10/16 20:21:20,8,9,10,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54"

count => 1

}

}

filter {

if [message] =~ "THREAT," {

dissect {

mapping => {

message => "<%{priority}>%{syslog_timestamp} %{+syslog_timestamp} %{+syslog_timestamp} %{logsource} %{pan_fut_use_01},%{pan_rec_time},%{pan_serial_number},%{pan_type},%{pan_subtype},%{pan_fut_use_02},%{pan_gen_time},%{pan_src_ip},%{pan_dst_ip},%{pan_nat_src_ip},%{pan_nat_dst_ip},%{pan_rule_name},%{pan_src_user},%{pan_dst_user},%{pan_app},%{pan_vsys},%{pan_src_zone},%{pan_dst_zone},%{pan_ingress_intf},%{pan_egress_intf},%{pan_log_fwd_profile},%{pan_fut_use_03},%{pan_session_id},%{pan_repeat_cnt},%{pan_src_port},%{pan_dst_port},%{pan_nat_src_port},%{pan_nat_dst_port},%{pan_flags},%{pan_prot},%{pan_action},%{pan_misc},%{pan_threat_id},%{pan_cat},%{pan_severity},%{pan_direction},%{pan_seq_number},%{pan_action_flags},%{pan_src_location},%{pan_dst_location},%{pan_content_type},%{pan_pcap_id},%{pan_filedigest},%{pan_cloud},%{pan_user_agent},%{pan_file_type},%{pan_xff},%{pan_referer},%{pan_sender},%{pan_subject},%{pan_recipient},%{pan_report_id},%{pan_anymore}"

}

}

}

}

output {

stdout {

codec => rubydebug

}

}

KV filter

A convenient way to parse data that consists of key/value pairs.

input {

generator {

message => "pin=12345~0&d=123&e=foo@bar.com&oq=bobo&ss=12345"

count => 1

}

}

filter {

kv {

source => "message"

target => "parsed"

field_split => "&?"

}

}

output {

stdout {

codec => rubydebug

}

}
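To make the effect of `field_split => "&?"` concrete, here is a rough shell stand-in. It is not how the kv filter is implemented; it only mimics the idea of treating each of the characters `&` and `?` as a delimiter and keeping the tokens that look like `key=value`. `parse_kv` is a hypothetical name.

```shell
# Rough imitation of kv with field_split => "&?": split on '&' or '?',
# then print every token of the form key=value as "key => value".
parse_kv() {
  printf '%s\n' "$1" | tr '&?' '\n\n' | while IFS='=' read -r key value; do
    # Tokens without a key or without a value are skipped.
    if [ -n "$key" ] && [ -n "$value" ]; then
      printf '%s => %s\n' "$key" "$value"
    fi
  done
}

parse_kv 'oq=bobo&ss=12345'
```

In the Logstash pipeline above, the corresponding fields are placed under the `parsed` field because of `target => "parsed"`.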

JSON filter

input {

generator {

message => '{"id":2,"timestamp":"2019-08-11T17:55:56Z","paymentType":"Visa","name":"Darby Dacks","gender":"Female","ip_address":"77.72.239.47","purpose":"Shoes","country":"Poland","age":55}'

count => 1

}

}

filter {

json {

source => "message"

}

}

output {

stdout {

codec => rubydebug

}

}

Output plugin configuration

Official output plugins: https://www.elastic.co/guide/en/logstash/current/output-plugins.html

Elasticsearch output plugin

input {

generator {

message => '{"id":2,"timestamp":"2019-08-11T17:55:56Z","paymentType":"Visa","name":"Darby Dacks","gender":"Female","ip_address":"77.72.239.47","purpose":"Shoes","country":"Poland","age":55}'

count => 1

}

}

filter {

json {

source => "message"

}

}

output {

stdout {

codec => rubydebug

}

elasticsearch {

hosts => ["10.0.4.10:9200"]

index => "flinkdata"

workers => 1

}

}
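Once the pipeline has run, one way to check that the event reached Elasticsearch is to ask the index for its document count. This is a sketch under assumptions: Elasticsearch answers over plain HTTP without authentication at the host from the output block, and `curl` is installed. `ES_HOST`, `INDEX`, and `COUNT_URL` are illustrative variable names.

```shell
# Assumed values, taken from the elasticsearch output block above.
ES_HOST="${ES_HOST:-10.0.4.10:9200}"
INDEX="${INDEX:-flinkdata}"

# URL of the _count API for the index.
COUNT_URL="http://${ES_HOST}/${INDEX}/_count?pretty"
echo "$COUNT_URL"

# Query the document count; give up quickly if the host cannot be reached.
curl -s --connect-timeout 3 --max-time 5 "$COUNT_URL" \
  || echo "Elasticsearch not reachable at ${ES_HOST}" >&2
```

If indexing succeeded, the count should grow by one for each run of the pipeline, since the generator emits a single event. Replacing `_count` with `_search` in the URL returns the stored documents themselves.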