bclinux aarch64 ceph 14.2.10 on cloud hosts: performance comparison with FastCFS using vdbench

Deployment references

ceph-deploy bclinux aarch64 ceph 14.2.10 (CSDN blog)

ceph-deploy bclinux aarch64 ceph 14.2.10 [3]: vdbench fsd file system test (CSDN blog)

ceph 14.2.10 aarch64: mounting a block device from a client outside the cluster (CSDN blog)

FastCFS vdbench reference data

bclinux aarch64 openEuler 20.03 LTS SP1: deploying FastCFS (CSDN blog)

Record of the main client operations

Create the storage pool

[root@ceph-0 ~]# ceph osd pool create vdbench 128 128

pool 'vdbench' created

[root@ceph-0 ~]# ceph osd pool application enable vdbench rbd

enabled application 'rbd' on pool 'vdbench'

[root@ceph-0 ~]# rbd create image1 --size 50G --pool vdbench --image-format 2 --image-feature layering
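The PG count of 128 used above matches a common rule of thumb: (number of OSDs × target PGs per OSD) / replica count, rounded to the nearest power of two. A minimal sketch of that arithmetic follows. The replica count of 3 and the target of 100 PGs per OSD are assumptions here (the post does not state the pool size), and `pg_estimate` is a hypothetical helper, not a Ceph command.

```shell
# Rule-of-thumb PG estimate: (OSDs * PGs per OSD) / replicas,
# rounded to the nearest power of two.
# Assumptions: 3 replicas and 100 PGs per OSD unless overridden.
pg_estimate() {
  osds=$1; replicas=$2; per_osd=${3:-100}
  raw=$(( osds * per_osd / replicas ))
  low=1
  # Find the largest power of two that does not exceed raw.
  while [ $(( low * 2 )) -le "$raw" ]; do low=$(( low * 2 )); done
  high=$(( low * 2 ))
  # Pick whichever neighbouring power of two is closer.
  if [ $(( raw - low )) -le $(( high - raw )) ]; then
    echo "$low"
  else
    echo "$high"
  fi
}

# 4 OSDs (as reported by `ceph -s`), assumed 3 replicas
pg_estimate 4 3
```

With 4 OSDs and 3 replicas the raw value is 133, so the helper prints 128.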

Export the key

[root@ceph-0 ~]# ceph auth get-or-create client.blockuser mon 'allow r' osd 'allow * pool=vdbench'

[client.blockuser]

key = AQBvVV1loK0rDhAAbzolwBMO12wT1A40wg7Y9Q==

[root@ceph-0 ~]# ceph auth get client.blockuser

exported keyring for client.blockuser

[client.blockuser]

key = AQBvVV1loK0rDhAAbzolwBMO12wT1A40wg7Y9Q==

caps mon = "allow r"

caps osd = "allow * pool=vdbench"

[root@ceph-0 ~]# ceph auth get client.blockuser -o /etc/ceph/ceph.client.blockuser.keyring

exported keyring for client.blockuser

[root@ceph-0 ~]# ceph --user blockuser -s

cluster:

id: 3c33ee8e-d279-4279-a612-097c00d55734

health: HEALTH_WARN

clock skew detected on mon.ceph-1, mon.ceph-0, mon.ceph-2

mon ceph-0 is low on available space

services:

mon: 4 daemons, quorum ceph-3,ceph-1,ceph-0,ceph-2 (age 21m)

mgr: ceph-0(active, since 18m), standbys: ceph-1, ceph-2, ceph-3

osd: 4 osds: 4 up (since 18m), 4 in (since 18m)

data:

pools: 1 pools, 128 pgs

objects: 4 objects, 35 B

usage: 4.0 GiB used, 396 GiB / 400 GiB avail

pgs: 128 active+clean

[root@ceph-0 ~]# scp /etc/ceph/ceph.client.blockuser.keyring ceph-client:/etc/ceph/
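After copying the keyring, it can be useful to confirm that the file on the client carries the same key as the one on the cluster node. The sketch below extracts the `key = ...` value from a keyring file with `awk`. `keyring_key` is a hypothetical helper, and the demo uses a throwaway file with a placeholder key rather than the real keyring.

```shell
# Print the value of the "key = ..." line from a Ceph keyring file.
keyring_key() {
  awk -F' = ' '$1 ~ /^[ \t]*key$/ { print $2; exit }' "$1"
}

# Demo on a temporary keyring; the key is a placeholder, not a real secret.
demo=$(mktemp)
cat > "$demo" <<'EOF'
[client.example]
    key = PLACEHOLDERKEY==
    caps mon = "allow r"
EOF
keyring_key "$demo"
rm -f "$demo"
```

On the real hosts, one would run `keyring_key /etc/ceph/ceph.client.blockuser.keyring` on both ceph-0 and ceph-client and compare the two values.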

Map the block device

[root@ceph-0 ~]# ssh ceph-client

[root@ceph-client ~]# rbd map vdbench/image1 --user blockuser -m ceph-0,ceph-1,ceph-2,ceph-3

/dev/rbd0
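The `rbd map` above does not survive a reboot. One way to make it persistent, assuming the `rbdmap` service shipped with ceph-common is available on the client, is to add an entry to `/etc/ceph/rbdmap`. The sketch below only prints such an entry; `rbdmap_entry` is a hypothetical helper, and the keyring path follows the naming used earlier in this post.

```shell
# Build one /etc/ceph/rbdmap line of the form:
#   pool/image id=<user>,keyring=<path>
rbdmap_entry() {
  pool=$1; image=$2; user=$3
  printf '%s/%s id=%s,keyring=/etc/ceph/ceph.client.%s.keyring\n' \
    "$pool" "$image" "$user" "$user"
}

rbdmap_entry vdbench image1 blockuser
```

The printed line would be appended to `/etc/ceph/rbdmap`, after which the service can be enabled with `systemctl enable rbdmap.service`. This was not part of the original test run.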

Mount

[root@ceph-client ~]# mkdir /mnt/rbd -p

[root@ceph-client ~]# fdisk -l /dev/rbd0

Disk /dev/rbd0: 50 GiB, 53687091200 bytes, 104857600 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 4194304 bytes / 4194304 bytes

[root@ceph-client ~]# mkfs.xfs /dev/rbd0

log stripe unit (4194304 bytes) is too large (maximum is 256KiB)

log stripe unit adjusted to 32KiB

meta-data=/dev/rbd0 isize=512 agcount=16, agsize=819200 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=1, sparse=1, rmapbt=0

= reflink=1

data = bsize=4096 blocks=13107200, imaxpct=25

= sunit=1024 swidth=1024 blks

naming =version 2 bsize=4096 ascii-ci=0, ftype=1

log =internal log bsize=4096 blocks=6400, version=2

= sectsz=512 sunit=8 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

Discarding blocks...Done.

[root@ceph-client ~]# mount /dev/rbd0 /mnt/rbd

[root@ceph-client ~]# df -h /mnt/rbd

Filesystem Size Used Avail Use% Mounted on

/dev/rbd0 50G 391M 50G 1% /mnt/rbd
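The numbers in the `fdisk` and `mkfs.xfs` output above can be cross-checked with a little arithmetic. The 4194304-byte I/O size corresponds to the default 4 MiB RBD object size, which is also why `mkfs.xfs` picks a data stripe unit of 1024 blocks and then caps the log stripe unit at 32 KiB. A small sketch, using only values copied from the output:

```shell
# Values copied from the fdisk and mkfs.xfs output above.
fdisk_bytes=$(( 104857600 * 512 ))    # sectors * sector size
xfs_bytes=$(( 13107200 * 4096 ))      # data blocks * block size
ag_blocks=$(( 16 * 819200 ))          # agcount * agsize
sunit_bytes=$(( 1024 * 4096 ))        # data sunit in blocks * block size
log_sunit_bytes=$(( 8 * 4096 ))       # log sunit in blocks * block size

echo "fdisk size:      $fdisk_bytes bytes"
echo "xfs data size:   $xfs_bytes bytes"
echo "AG total blocks: $ag_blocks"
echo "data sunit:      $sunit_bytes bytes"
echo "log sunit:       $log_sunit_bytes bytes"
```

Both size calculations give 53687091200 bytes (50 GiB), the allocation groups add up to the 13107200 data blocks, and the data stripe unit equals the 4 MiB optimal I/O size.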

vdbench

ceph-rbd.conf configuration file

hd=default,vdbench=/root/vdbench,user=root,shell=ssh

hd=hd1,system=ceph-client

fsd=fsd1,anchor=/mnt/rbd,depth=2,width=10,files=40,size=4M,shared=yes

fwd=format,threads=24,xfersize=1m

fwd=default,xfersize=1m,fileio=sequential,fileselect=sequential,operation=write,threads=24

fwd=fwd1,fsd=fsd1,host=hd1

rd=rd1,fwd=fwd*,fwdrate=max,format=restart,elapsed=600,interval=1

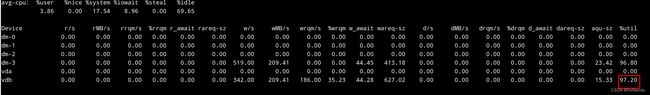
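To get a feel for how much data this `fsd` definition creates: vdbench builds `width` subdirectories at each of `depth` levels and places `files` files in each lowest-level directory. The sketch below works out the totals for the parameters in ceph-rbd.conf; `fsd_layout` is a hypothetical helper, not part of vdbench.

```shell
# Estimate the directory count, file count, and data volume of a vdbench fsd.
# Arguments: depth width files-per-leaf-directory file-size-in-MiB
fsd_layout() {
  depth=$1; width=$2; files=$3; size_mib=$4
  dirs=0; level=1; i=1
  while [ "$i" -le "$depth" ]; do
    level=$(( level * width ))    # directories at this level
    dirs=$(( dirs + level ))
    i=$(( i + 1 ))
  done
  total_files=$(( level * files ))  # files live only in the lowest level
  echo "dirs=$dirs files=$total_files data_mib=$(( total_files * size_mib ))"
}

# depth=2, width=10, files=40, size=4M from ceph-rbd.conf
fsd_layout 2 10 40 4
```

For this configuration that is 110 directories and 4000 files, or 16000 MiB (about 15.6 GiB) of data on the 50 GiB image.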

Run the test

./vdbench -f ceph-rbd.conf

Result

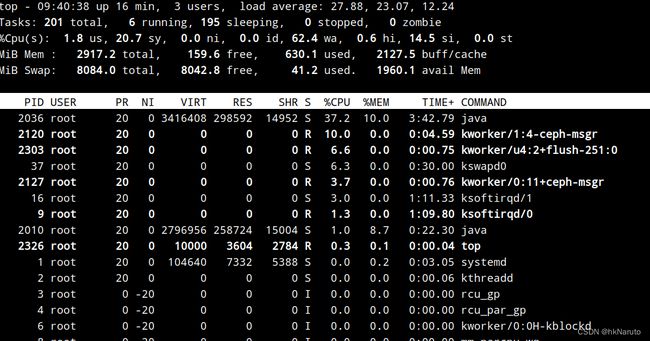

One of the OSDs

The results are roughly on par with FastCFS.

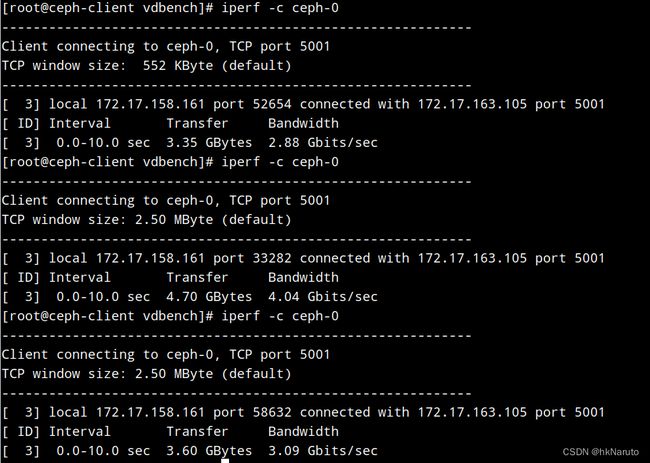

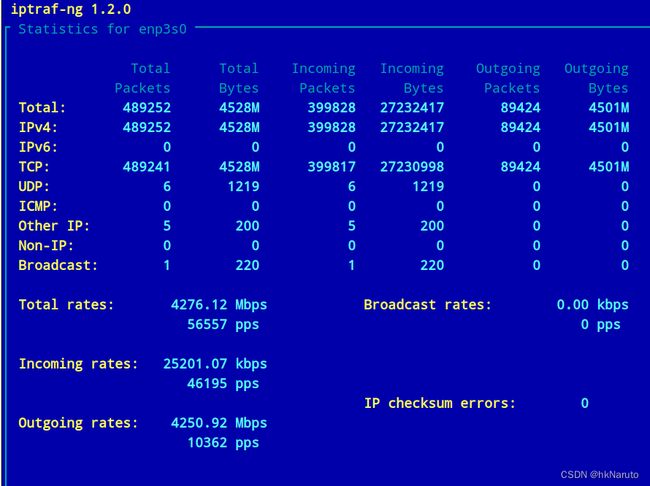

iperf NIC peak throughput test

iperf peaked at 4 Gbits/sec.

This indicates that the storage test did not reach the NIC limit.
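To compare a vdbench throughput figure with the iperf peak, both need to be in the same unit. The sketch below converts Gbits/sec to decimal MB/s and reports what share of the link a given throughput would use. The 300 MB/s input is a made-up illustration, not a measured result from this test, and both helpers are hypothetical.

```shell
# Convert a link speed in Gbits/sec to decimal MB/s (1 Gbit = 1000 Mbit).
gbits_to_mbytes() {
  echo $(( $1 * 1000 / 8 ))
}

# Percentage of the link used by a throughput given in MB/s.
# Arguments: throughput-in-MB/s link-speed-in-Gbits/sec
nic_share_pct() {
  echo $(( $1 * 100 / $(gbits_to_mbytes "$2") ))
}

gbits_to_mbytes 4      # iperf peak from above
nic_share_pct 300 4    # hypothetical 300 MB/s workload
```

A 4 Gbits/sec link carries about 500 MB/s, so the hypothetical 300 MB/s workload would use 60% of it. With a replicated pool, OSD-to-OSD replication traffic adds to the network load on the storage nodes, so the client-side figure alone understates total traffic.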