KVM Virtual Machines and Networking

Lab Environment

Ubuntu 16.04.1

root@dev:/sys/class/net# cat /etc/issue

Ubuntu 16.04.1 LTS \n \l

KVM

root@dev:/sys/class/net# dpkg -l| grep kvm

ii qemu-kvm 1:2.5+dfsg-5ubuntu10.51 amd64 QEMU Full virtualization

root@dev:/sys/class/net# dpkg -L qemu-kvm

/.

/usr

/usr/bin

/usr/bin/kvm

/usr/share

/usr/share/man

/usr/share/man/man1

/usr/share/man/man1/kvm.1.gz

/usr/share/doc

/usr/share/doc/qemu-kvm

/usr/share/doc/qemu-kvm/copyright

/usr/share/doc/qemu-kvm/NEWS.Debian.gz

/usr/bin/kvm-spice

/usr/bin/qemu-system-x86_64-spice

/usr/share/doc/qemu-kvm/changelog.Debian.gz

openvswitch

root@dev:/lib/modules/4.4.36-nn3-server/kernel/net/openvswitch# dpkg -l | grep openvswitch

ii openvswitch-common 2.5.9-0ubuntu0.16.04.3 amd64 Open vSwitch common components

ii openvswitch-switch 2.5.9-0ubuntu0.16.04.3 amd64 Open vSwitch switch implementations

ii python-openvswitch 2.5.9-0ubuntu0.16.04.3 all Python bindings for Open vSwitch

iproute2

root@dev:/lib/modules/4.4.36-nn3-server/kernel/net/openvswitch# dpkg -l | grep iproute2

ii iproute2 4.3.0-1ubuntu3 amd64 networking and traffic control tools

net-tools

root@dev:/lib/modules/4.4.36-nn3-server/kernel/net/openvswitch# dpkg -l | grep net-tools

ii net-tools 1.60-26ubuntu1 amd64 NET-3 networking toolkit

wget https://launchpad.net/ubuntu/+archive/primary/+sourcefiles/net-tools/1.60+git20161116.90da8a0-1ubuntu1/net-tools_1.60+git20161116.90da8a0.orig.tar.gz

Deploying a KVM Virtual Machine

If KVM is not installed, it can be installed with apt-get; there are plenty of tutorials online.

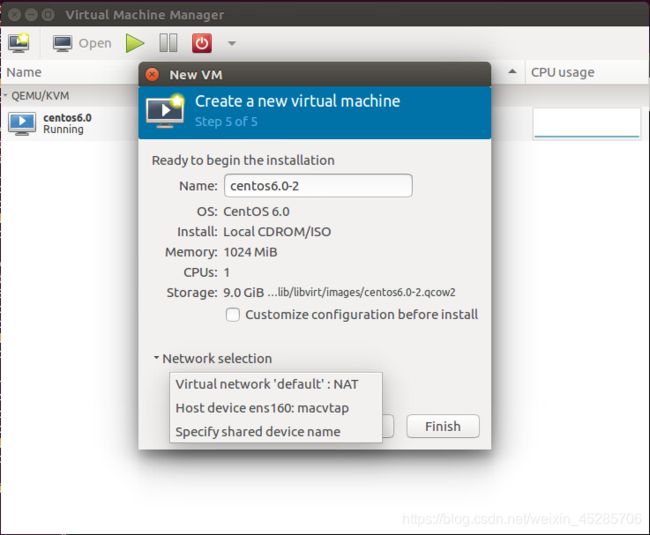

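virt-manager provides a graphical interface for deploying KVM virtual machines. When deploying this VM, I selected the default NAT network.

Location of the KVM VM Configuration File

Like the vmx file in VMware vSphere, a KVM virtual machine has a similar configuration file. It is located here: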

root@dev:/etc/libvirt/qemu# ls

centos6.0.xml networks

The vNIC configuration in this XML file looks like this:

<interface type='network'>

<mac address='52:54:00:ef:c8:d6'/>

<source network='default'/>

<model type='virtio'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x03' function='0x0'/>

</interface>

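As shown, it contains the MAC address and the network to connect to, "default". Next, look at what this "default" network looks like (more precisely, how it is persisted on disk; presumably some service, most likely libvirtd, reads this XML file when it starts and creates the bridge):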

root@dev:/etc/libvirt/qemu/networks# cat default.xml

<!--

WARNING: THIS IS AN AUTO-GENERATED FILE. CHANGES TO IT ARE LIKELY TO BE

OVERWRITTEN AND LOST. Changes to this xml configuration should be made using:

virsh net-edit default

or other application using the libvirt API.

-->

<network>

<name>default</name>

<uuid>ef327d9c-c286-40c0-ad01-110916760008</uuid>

<forward mode='nat'/>

<bridge name='virbr0' stp='on' delay='0'/>

<mac address='52:54:00:51:e7:c7'/>

<ip address='192.168.122.1' netmask='255.255.255.0'>

<dhcp>

<range start='192.168.122.2' end='192.168.122.254'/>

</dhcp>

</ip>

</network>

As the above shows, this style of configuration closely resembles connecting a VM to a standard switch in VMware. Next, look at the runtime state.
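
Before looking at the runtime state, the persisted network definition can also be read programmatically. The following is only a sketch using the Python standard library: the XML string is a copy of the default.xml content shown above, and `summarize_network` is a hypothetical helper name, not part of libvirt.

```python
import ipaddress
import xml.etree.ElementTree as ET

# Copy of the persisted definition shown above (comment header omitted).
DEFAULT_NETWORK_XML = """
<network>
  <name>default</name>
  <uuid>ef327d9c-c286-40c0-ad01-110916760008</uuid>
  <forward mode='nat'/>
  <bridge name='virbr0' stp='on' delay='0'/>
  <mac address='52:54:00:51:e7:c7'/>
  <ip address='192.168.122.1' netmask='255.255.255.0'>
    <dhcp>
      <range start='192.168.122.2' end='192.168.122.254'/>
    </dhcp>
  </ip>
</network>
"""

def summarize_network(xml_text):
    """Return the key facts of a libvirt network definition as a dict."""
    root = ET.fromstring(xml_text)
    ip = root.find("ip")
    dhcp_range = ip.find("dhcp/range")
    # ip_interface accepts "address/netmask" and derives the prefix length.
    iface = ipaddress.ip_interface(f"{ip.get('address')}/{ip.get('netmask')}")
    return {
        "name": root.findtext("name"),
        "forward_mode": root.find("forward").get("mode"),
        "bridge": root.find("bridge").get("name"),
        "gateway": str(iface.ip),
        "subnet": str(iface.network),
        "dhcp_range": (dhcp_range.get("start"), dhcp_range.get("end")),
    }

summary = summarize_network(DEFAULT_NETWORK_XML)
print(summary)
```

The summary makes the layout explicit: a NAT-mode network on bridge virbr0, with the host side at 192.168.122.1 and guests taking DHCP addresses from the rest of the /24.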

Runtime State of the KVM VM Network

Layer 2 Configuration

root@dev:/etc/libvirt/qemu/networks# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 02:00:af:fe:79:67 brd ff:ff:ff:ff:ff:ff

inet 10.182.136.227/20 brd 10.182.143.255 scope global ens160

valid_lft forever preferred_lft forever

inet6 fd01:0:106:201:0:afff:fefe:7967/64 scope global mngtmpaddr dynamic

valid_lft 2591855sec preferred_lft 604655sec

inet6 fe80::afff:fefe:7967/64 scope link

valid_lft forever preferred_lft forever

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:8e:2b:b7:71 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 scope global docker0

valid_lft forever preferred_lft forever

4: virbr0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether fe:54:00:ef:c8:d6 brd ff:ff:ff:ff:ff:ff

inet 192.168.122.1/24 brd 192.168.122.255 scope global virbr0

valid_lft forever preferred_lft forever

5: virbr0-nic: <BROADCAST,MULTICAST> mtu 1500 qdisc pfifo_fast state DOWN group default qlen 1000

link/ether 52:54:00:51:e7:c7 brd ff:ff:ff:ff:ff:ff

8: vnet0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master virbr0 state UNKNOWN group default qlen 1000

link/ether fe:54:00:ef:c8:d6 brd ff:ff:ff:ff:ff:ff

inet6 fe80::fc54:ff:feef:c8d6/64 scope link

valid_lft forever preferred_lft forever

root@dev:/etc/libvirt/qemu/networks# brctl show

bridge name bridge id STP enabled interfaces

docker0 8000.02428e2bb771 no

virbr0 8000.fe5400efc8d6 yes vnet0

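As shown, the VM's virtual NIC is attached to the bridge virbr0 through a port named vnet0. In theory, the ports of a physical switch or a bridge do not need MAC addresses. The MAC address shown on vnet0 here, "fe:54:00:ef:c8:d6", matches the one in the VM's XML configuration file ("52:54:00:ef:c8:d6") except for the first octet, which is fe on the host side. After installing a guest OS and logging in, the NIC inside the guest reports the address from the XML file.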
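
Comparing the two listings, the host-side address on vnet0 (fe:54:00:ef:c8:d6) and the address in the VM's XML (52:54:00:ef:c8:d6) differ only in the first octet. A minimal sketch of that relationship follows; it assumes the pattern observed on this host holds in general, and `tap_mac_for` is a hypothetical helper name.

```python
def tap_mac_for(guest_mac):
    """Return the host-side tap MAC: the guest MAC with its first octet set to fe.

    Assumption: this mirrors what is observed on this host, not a documented API.
    """
    octets = guest_mac.lower().split(":")
    if len(octets) != 6:
        raise ValueError(f"not a MAC address: {guest_mac!r}")
    return ":".join(["fe"] + octets[1:])

host_side = tap_mac_for("52:54:00:ef:c8:d6")
print(host_side)
```

This is handy in the other direction too: given a vnetN device in "ip addr", replacing the leading fe with 52 gives the MAC to search for in the domain XML files.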

One more question: how do I know that vnet0 is connected to the vNIC of the VM I just created? The following commands show it:

root@dev:/etc/libvirt/qemu/networks# virsh list

Id Name State

----------------------------------------------------

3 centos6.0 running

The Id of this VM is 3.

root@dev:/etc/libvirt/qemu/networks# virsh dumpxml 3

....

<interface type='network'>

<mac address='52:54:00:ef:c8:d6'/>

<source network='default' bridge='virbr0'/>

<target dev='vnet0'/>

<model type='virtio'/>

<alias name='net0'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x03' function='0x0'/>

</interface>

This shows that the VM's vNIC is connected to vnet0. The following one-liner does the same for every running VM:

for vm in $(virsh list | grep running | awk '{print $2}'); do echo "$vm: " && virsh dumpxml "$vm" | grep "vnet" | sed "s/[^']*'\([^']*\)'[^']*/\t\1/g"; done
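
The same extraction can be done with an XML parser instead of grep and sed. The sketch below runs on a trimmed sample in the shape of the `virsh dumpxml` output above; the enclosing `<domain>` and `<devices>` elements are filled in only to make the sample well-formed, and `interfaces_of` is a hypothetical helper name.

```python
import xml.etree.ElementTree as ET

# Trimmed sample in the shape of the `virsh dumpxml` output shown above.
SAMPLE_DOMAIN_XML = """
<domain type='kvm' id='3'>
  <name>centos6.0</name>
  <devices>
    <interface type='network'>
      <mac address='52:54:00:ef:c8:d6'/>
      <source network='default' bridge='virbr0'/>
      <target dev='vnet0'/>
      <model type='virtio'/>
    </interface>
  </devices>
</domain>
"""

def interfaces_of(domain_xml):
    """Yield (host_device, guest_mac, bridge) for each interface of a domain."""
    root = ET.fromstring(domain_xml)
    for iface in root.iter("interface"):
        target = iface.find("target")
        mac = iface.find("mac")
        source = iface.find("source")
        # <target> is only present while the VM is running.
        yield (
            target.get("dev") if target is not None else None,
            mac.get("address") if mac is not None else None,
            source.get("bridge") if source is not None else None,
        )

rows = list(interfaces_of(SAMPLE_DOMAIN_XML))
for dev, mac, bridge in rows:
    print(f"{dev}\t{mac}\t{bridge}")
```

On a live host the XML would come from `virsh dumpxml <name>`, for example through `subprocess.run`. `virsh domiflist <name>` prints a similar table directly.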

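This raises another question: the XML file on disk does not mention vnet0, so where does virsh dumpxml get it from? My guess is that this is runtime information: when the VM is powered on, libvirt (or something else) creates vnet0 dynamically. So I tried powering the VM off.

Sure enough, after powering the VM off, vnet0 disappeared from the "ip addr" output!

The result below shows that vnet0 uses the tun driver.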

root@dev:~/script# ethtool -i vnet0

driver: tun

version: 1.6

firmware-version:

expansion-rom-version:

bus-info: tap

supports-statistics: no

supports-test: no

supports-eeprom-access: no

supports-register-dump: no

supports-priv-flags: no
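
Some of this can also be read from /sys/class/net without ethtool. The sketch below relies on my understanding that the kernel exposes a `bridge` directory for bridge devices, a `tun_flags` file for tun/tap devices, and a `master` symlink for bridge ports; treat those as assumptions to verify on the target kernel. `describe_interface` is a hypothetical helper, and the demo builds a throwaway directory with a made-up flag value so that it runs anywhere.

```python
import os
import tempfile

IFF_TUN = 0x0001  # layer-3 tun device (value from linux/if_tun.h)
IFF_TAP = 0x0002  # layer-2 tap device

def describe_interface(name, sysfs_root="/sys/class/net"):
    """Classify an interface using attributes the kernel exposes in sysfs."""
    base = os.path.join(sysfs_root, name)
    info = {"name": name, "kind": "other", "master": None}
    if os.path.isdir(os.path.join(base, "bridge")):
        info["kind"] = "bridge"
    flags_path = os.path.join(base, "tun_flags")
    if os.path.isfile(flags_path):
        with open(flags_path) as f:
            flags = int(f.read().strip(), 16)
        info["kind"] = "tap" if flags & IFF_TAP else "tun"
    master = os.path.join(base, "master")
    if os.path.islink(master):
        # The link target ends in the name of the bridge the port belongs to.
        info["master"] = os.path.basename(os.readlink(master))
    return info

# Demo against a fake sysfs tree; on a real host call
# describe_interface("vnet0") with the default sysfs_root instead.
with tempfile.TemporaryDirectory() as root:
    os.makedirs(os.path.join(root, "virbr0", "bridge"))
    os.makedirs(os.path.join(root, "vnet0"))
    with open(os.path.join(root, "vnet0", "tun_flags"), "w") as f:
        f.write("0x1002\n")  # made-up demo value with the IFF_TAP bit set
    os.symlink("../virbr0", os.path.join(root, "vnet0", "master"))
    bridge_info = describe_interface("virbr0", root)
    tap_info = describe_interface("vnet0", root)

print(bridge_info)
print(tap_info)
```

The "bus-info: tap" line in the ethtool output above and the IFF_TAP bit describe the same thing: vnet0 is a layer-2 tap device, which is why it can be enslaved to a bridge.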

Layer 3 Configuration

Routing Table

First, look at the host's routing table:

root@dev:/etc/libvirt/qemu/networks# route

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

default 10.182.143.254 0.0.0.0 UG 0 0 0 ens160

10.182.128.0 * 255.255.240.0 U 0 0 0 ens160

link-local * 255.255.0.0 U 1000 0 0 ens160

172.17.0.0 * 255.255.0.0 U 0 0 0 docker0

192.168.122.0 * 255.255.255.0 U 0 0 0 virbr0

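As shown, destinations in 192.168.122.0/24 are on-link: packets for them are sent directly out of the virbr0 interface.

How I understand the IP address on virbr0:

From the host's point of view, virbr0 is a virtual NIC on the bridge that can be assigned an IP address, and this virtual NIC lives in the host's network namespace. By analogy with the ESX virtual standard switch (VSS), the bridge virbr0 corresponds to a VSS, and the virbr0 visible in "ip addr" with IP address 192.168.122.1 corresponds to a vmknic attached to that VSS.

The difference is that on ESX, creating a VSS is a separate step and does not automatically create a vmknic on it, whereas on Linux, creating a bridge also implicitly creates a virtual NIC visible to the host.

The routing table shows that the host, acting as a router, connects through virbr0 (the virtual NIC) to the subnet where virbr0 (the bridge) resides. So even without any NAT rules, the host can ping a VM attached to virbr0 (provided the VM is configured with an IP address in the same subnet as virbr0).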
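
To make the route selection concrete, here is a small sketch of longest-prefix matching over the routing table shown above. It is a simplified model (metrics and flags are ignored), the table is transcribed by hand with "link-local" written as 169.254.0.0/16, and `lookup` is a hypothetical helper name.

```python
import ipaddress

# The host routing table shown above; "*" (no gateway) is represented as None.
ROUTES = [
    ("0.0.0.0/0",        "10.182.143.254", "ens160"),
    ("10.182.128.0/20",  None,             "ens160"),
    ("169.254.0.0/16",   None,             "ens160"),
    ("172.17.0.0/16",    None,             "docker0"),
    ("192.168.122.0/24", None,             "virbr0"),
]

def lookup(destination):
    """Return (gateway, interface) of the most specific matching route."""
    addr = ipaddress.ip_address(destination)
    matches = [
        (ipaddress.ip_network(prefix), gateway, iface)
        for prefix, gateway, iface in ROUTES
        if addr in ipaddress.ip_network(prefix)
    ]
    # Longest prefix wins; the default route (prefix length 0) always matches.
    network, gateway, iface = max(matches, key=lambda m: m[0].prefixlen)
    return gateway, iface

print(lookup("192.168.122.50"))  # a guest on virbr0
print(lookup("8.8.8.8"))         # anything else goes via the default gateway
```

A guest address resolves to virbr0 with no gateway, which is exactly the "directly on the link" case described above.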

NAT

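From inside the guest, pinging the outside world works, for example ping www.baidu.com. For this to work, libvirt must have modified the host's iptables rules and configured source NAT (the MASQUERADE rules in the POSTROUTING chain):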

root@dev:/etc/libvirt/qemu/networks# iptables -t nat -L -n

Chain PREROUTING (policy ACCEPT)

target prot opt source destination

DOCKER all -- 0.0.0.0/0 0.0.0.0/0 ADDRTYPE match dst-type LOCAL

Chain INPUT (policy ACCEPT)

target prot opt source destination

Chain OUTPUT (policy ACCEPT)

target prot opt source destination

DOCKER all -- 0.0.0.0/0 !127.0.0.0/8 ADDRTYPE match dst-type LOCAL

Chain POSTROUTING (policy ACCEPT)

target prot opt source destination

RETURN all -- 192.168.122.0/24 224.0.0.0/24

RETURN all -- 192.168.122.0/24 255.255.255.255

MASQUERADE tcp -- 192.168.122.0/24 !192.168.122.0/24 masq ports: 1024-65535

MASQUERADE udp -- 192.168.122.0/24 !192.168.122.0/24 masq ports: 1024-65535

MASQUERADE all -- 192.168.122.0/24 !192.168.122.0/24

MASQUERADE all -- 172.17.0.0/16 0.0.0.0/0

Chain DOCKER (2 references)

target prot opt source destination

RETURN all -- 0.0.0.0/0 0.0.0.0/0

I cannot read iptables rules well yet. To be studied.
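
As a first step toward reading the output, the POSTROUTING chain above can be modelled as an ordered list in which the first matching rule decides. This is a rough sketch, not how netfilter is implemented: it only uses the columns visible in the listing (protocol, source, destination), ignores the "masq ports" option and any interface matches that "-L -n" does not print, and `verdict` is a hypothetical helper name.

```python
import ipaddress

def net(cidr):
    return ipaddress.ip_network(cidr)

VIRT = net("192.168.122.0/24")

# (target, protocol, source, destination, negate_destination), in chain order.
POSTROUTING = [
    ("RETURN",     "all", VIRT, net("224.0.0.0/24"), False),
    ("RETURN",     "all", VIRT, net("255.255.255.255/32"), False),
    ("MASQUERADE", "tcp", VIRT, VIRT, True),
    ("MASQUERADE", "udp", VIRT, VIRT, True),
    ("MASQUERADE", "all", VIRT, VIRT, True),
    ("MASQUERADE", "all", net("172.17.0.0/16"), net("0.0.0.0/0"), False),
]

def verdict(proto, src, dst):
    """Return the target of the first matching rule, else the chain policy."""
    src, dst = ipaddress.ip_address(src), ipaddress.ip_address(dst)
    for target, rule_proto, rule_src, rule_dst, negate in POSTROUTING:
        if rule_proto not in ("all", proto):
            continue
        if src not in rule_src:
            continue
        # A "!" destination matches when the address is outside the network.
        if (dst in rule_dst) == negate:
            continue
        return target
    return "ACCEPT"

print(verdict("icmp", "192.168.122.10", "8.8.8.8"))        # guest to outside
print(verdict("tcp", "192.168.122.10", "192.168.122.20"))  # guest to guest
print(verdict("udp", "192.168.122.10", "224.0.0.251"))     # guest multicast
```

Read this way, the rules say: traffic from the guest subnet to anywhere outside that subnet is masqueraded behind the host's address, while multicast, broadcast, and guest-to-guest traffic is left untouched (RETURN in a built-in chain falls back to the chain policy, ACCEPT, without NAT).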

Open Questions for Further Study

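- I still do not understand tap/tun and related devices thoroughly; study the ifconfig source code.

- How the KVM VM's net0 is created, and what it means to the kernel.

- How to check the driver of each device listed by ifconfig.

- Does ifconfig get its information from /sys/class/net/?

- How the bridge provides DHCP server functionality.

- Study OVS.

- How does a home router make ping work through NAT?

- NAT does some extra work here: it remaps the Identifier field in the ICMP header, so the Identifier can be thought of as playing the role of a port number. The actual operation involves many details; see RFC 5508.

- For protocols that have ports, such as TCP and UDP, a NAT device uses the port to identify a flow. For protocols without ports, such as ICMP and OSPF, some vendors' devices that I know of use a protocol-specific field instead. For details, see the relevant RFCs.

- Find where the tun driver source code lives.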
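
The ICMP note in the list above can be sketched as a toy translation table keyed on the Identifier field instead of a port. This is only an illustration of the idea under simplifying assumptions: no timeouts, no handling of Identifier exhaustion, no checksum updates, and `IcmpNat` with its parameters is a hypothetical class, not taken from any real NAT implementation.

```python
import itertools

class IcmpNat:
    """Toy NAT table keyed on the ICMP query Identifier instead of a port."""

    def __init__(self, public_ip, first_id=1024):
        self.public_ip = public_ip
        self._next_id = itertools.count(first_id)
        self._out = {}   # (private_ip, private_id) -> public_id
        self._back = {}  # public_id -> (private_ip, private_id)

    def outbound(self, private_ip, icmp_id):
        """Translate an echo request leaving the private network."""
        key = (private_ip, icmp_id)
        if key not in self._out:
            public_id = next(self._next_id)
            self._out[key] = public_id
            self._back[public_id] = key
        return self.public_ip, self._out[key]

    def inbound(self, icmp_id):
        """Translate an echo reply back; None if no mapping exists."""
        return self._back.get(icmp_id)

nat = IcmpNat("10.182.136.227")
a = nat.outbound("192.168.122.10", 7)
b = nat.outbound("192.168.122.11", 7)  # same Identifier, different guest
print(a, b, nat.inbound(b[1]))
```

Two guests that happen to pick the same Identifier get distinct public Identifiers, so the echo replies can be routed back to the right guest, in the same way that port remapping disambiguates TCP and UDP flows.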