Kubernetes (K8S): kube-prometheus Overview and Deployment


Host configuration plan

| Hostname | OS version | Specs (CPU/RAM/disk) | Internal IP | External IP (simulated) |
|---|---|---|---|---|
| k8s-master | CentOS 7.7 | 2C/4G/20G | 172.16.1.110 | 10.0.0.110 |
| k8s-node01 | CentOS 7.7 | 2C/4G/20G | 172.16.1.111 | 10.0.0.111 |
| k8s-node02 | CentOS 7.7 | 2C/4G/20G | 172.16.1.112 | 10.0.0.112 |

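Prometheus overview

Prometheus is an open-source systems monitoring and alerting toolkit. Since its creation in 2012, many companies and organizations have adopted it. It is now a standalone open-source project, maintained independently of any company. In 2016, Prometheus joined the Cloud Native Computing Foundation (CNCF) as its second hosted project, after Kubernetes.

Prometheus also performs well enough to monitor clusters on the order of ten thousand machines.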

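Key features of Prometheus

- A multi-dimensional data model
- A flexible query language (PromQL)
- No reliance on distributed storage; single server nodes are autonomous
- Time series are collected with a pull model over HTTP
- Time series can also be pushed through an intermediary gateway
- Targets are discovered through service discovery or static configuration
- Many graphing and dashboarding options, such as Grafana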

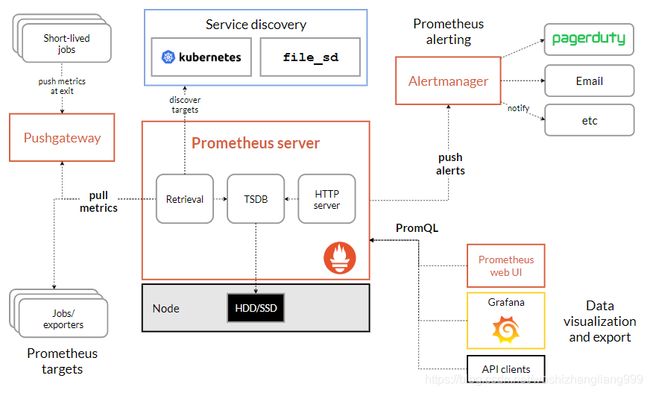

Architecture diagram

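Basic principle

Prometheus works by periodically scraping the state of monitored components over HTTP. Any component can be monitored as long as it exposes a suitable HTTP endpoint; beyond that endpoint, no SDK or other integration work is required.

This makes Prometheus well suited to monitoring virtualized environments such as VMs, Docker, and Kubernetes. An HTTP endpoint that exposes a monitored component's metrics is called an exporter. Ready-made exporters exist for most components that internet companies commonly use, such as Varnish, HAProxy, Nginx, MySQL, and Linux system information (disk, memory, CPU, network, and so on).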
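To make the exporter idea concrete, here is a minimal sketch of what an exporter's HTTP response body looks like: the Prometheus text exposition format, with `# HELP` and `# TYPE` lines followed by a sample. The helper `print_gauge` and the metric name used below are hypothetical; a real exporter would serve this text over HTTP, typically at `/metrics`.

```bash
#!/usr/bin/env bash
# Sketch: render one gauge in the Prometheus text exposition format.
# print_gauge is a hypothetical helper, not part of Prometheus or any exporter.
# Usage: print_gauge NAME HELP VALUE [LABELS]
#   LABELS is an already-formatted label list, e.g.: room="lab",floor="2"
print_gauge() {
  local name="$1" help="$2" value="$3" labels="${4:-}"
  printf '# HELP %s %s\n' "$name" "$help"
  printf '# TYPE %s gauge\n' "$name"
  if [ -n "$labels" ]; then
    printf '%s{%s} %s\n' "$name" "$labels" "$value"
  else
    printf '%s %s\n' "$name" "$value"
  fi
}
```

For example, `print_gauge demo_temperature_celsius "Demo temperature" 21.5 'room="lab"'` prints three lines, the last being `demo_temperature_celsius{room="lab"} 21.5`. Writing such output to a `*.prom` file in the directory passed to node-exporter's `--collector.textfile.directory` flag is one way to expose it without writing an HTTP server; whether that collector is configured in this deployment is not covered here.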

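The three main Prometheus components

- Server: collects and stores the data, and provides the PromQL query language.
- Alertmanager: the alert manager, used to send alerts.
- Push Gateway: an intermediary gateway to which short-lived jobs can actively push metrics.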

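How it works

- The Prometheus daemon periodically scrapes metrics from its targets; each target must expose an HTTP endpoint for it to scrape. Targets can be specified through the configuration file, text files, ZooKeeper, Consul, DNS SRV lookups, and other mechanisms. Prometheus monitors with a pull model: the server either pulls data directly from the targets, or pulls data that clients have pushed to an intermediary gateway.
- Prometheus stores all scraped data locally, applies rules to clean up and aggregate it, and records the results as new time series.
- Prometheus presents the collected data through PromQL and other APIs. It supports many visualization options, such as Grafana, the (now deprecated) PromDash, and its own console template engine. It also offers an HTTP API for queries, so you can shape the output as needed.
- The PushGateway lets clients push metrics to it, while Prometheus simply scrapes the gateway on a schedule.
- Alertmanager is a component separate from Prometheus. Alerting conditions are written as PromQL expressions, and Alertmanager offers very flexible ways of handling and delivering the resulting alerts.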
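The PushGateway flow described above can be sketched with `curl`: a Pushgateway accepts samples in the text format via an HTTP POST to `/metrics/job/<job>`, optionally followed by extra `/<label>/<value>` pairs that form the grouping key. This sketch assumes a Pushgateway you have deployed yourself at a placeholder address (this article does not set one up), and the function names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: push one sample to a Pushgateway, which Prometheus then scrapes.
# pushgateway_url and push_sample are hypothetical helper names.

# Build the grouping URL: <gateway>/metrics/job/<job>[/<label>/<value>]...
# In this simple sketch, label values must not contain "/".
pushgateway_url() {
  local gateway="$1" job="$2"
  shift 2
  local url="${gateway%/}/metrics/job/${job}"
  # Append optional label/value pairs to the grouping key
  while [ "$#" -ge 2 ]; do
    url="${url}/$1/$2"
    shift 2
  done
  printf '%s\n' "$url"
}

# POST "<name> <value>" in the text exposition format to the given URL
push_sample() {
  local url="$1" name="$2" value="$3"
  printf '%s %s\n' "$name" "$value" | curl -fsS --data-binary @- "$url"
}
```

Usage, with a placeholder gateway: `push_sample "$(pushgateway_url http://pushgateway.example:9091 backup_job instance db01)" backup_last_success_timestamp_seconds "$(date +%s)"`.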

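Deploying kube-prometheus

kube-prometheus on GitHub:

https://github.com/coreos/kube-prometheus/

(The repository has since moved to the prometheus-operator GitHub organization.)

This article deliberately uses release-0.2 (tag v0.2.0) rather than any other version.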

Downloading kube-prometheus and modifying the configuration

Download

```
[root@k8s-master prometheus]# pwd
/root/k8s_practice/prometheus
[root@k8s-master prometheus]#
[root@k8s-master prometheus]# wget https://github.com/coreos/kube-prometheus/archive/v0.2.0.tar.gz
[root@k8s-master prometheus]# tar xf v0.2.0.tar.gz
[root@k8s-master prometheus]# ll
total 432
drwxrwxr-x 10 root root   4096 Sep 13  2019 kube-prometheus-0.2.0
-rw-r--r--  1 root root 200048 Jul 19 11:41 v0.2.0.tar.gz
```

Configuration changes

```
# Current directory
[root@k8s-master manifests]# pwd
/root/k8s_practice/prometheus/kube-prometheus-0.2.0/manifests
[root@k8s-master manifests]#
```

```
# Change 1
[root@k8s-master manifests]# vim grafana-service.yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app: grafana
  name: grafana
  namespace: monitoring
spec:
  type: NodePort      # added
  ports:
  - name: http
    port: 3000
    targetPort: http
    nodePort: 30100   # added
  selector:
    app: grafana
[root@k8s-master manifests]#
```

```
# Change 2
[root@k8s-master manifests]# vim prometheus-service.yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    prometheus: k8s
  name: prometheus-k8s
  namespace: monitoring
spec:
  type: NodePort      # added
  ports:
  - name: web
    port: 9090
    targetPort: web
    nodePort: 30200   # added
  selector:
    app: prometheus
    prometheus: k8s
  sessionAffinity: ClientIP
[root@k8s-master manifests]#
```

```
# Change 3
[root@k8s-master manifests]# vim alertmanager-service.yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    alertmanager: main
  name: alertmanager-main
  namespace: monitoring
spec:
  type: NodePort      # added
  ports:
  - name: web
    port: 9093
    targetPort: web
    nodePort: 30300   # added
  selector:
    alertmanager: main
    app: alertmanager
  sessionAffinity: ClientIP
[root@k8s-master manifests]#
```

```
# Change 4
[root@k8s-master manifests]# vim grafana-deployment.yaml
# Change apps/v1beta2 to apps/v1
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: grafana
  name: grafana
  namespace: monitoring
spec:
  replicas: 1
  selector:
………………
```
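Change 4 can also be applied non-interactively. Kubernetes 1.16 and later no longer serve Deployments under `apps/v1beta2`, which is presumably why the edit is needed on newer clusters. The sketch below assumes GNU `sed` (as shipped with CentOS 7) and only rewrites an exact top-level `apiVersion: apps/v1beta2` line; the function name is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: rewrite "apiVersion: apps/v1beta2" to "apiVersion: apps/v1" in place.
# fix_api_version is a hypothetical helper; "sed -i" without a suffix is GNU sed.
fix_api_version() {
  local f
  for f in "$@"; do
    # Only an exact top-level apiVersion line is changed; other lines are untouched
    sed -i 's#^apiVersion: apps/v1beta2$#apiVersion: apps/v1#' "$f"
  done
}
```

Usage, inside `manifests/`: `fix_api_version grafana-deployment.yaml`. Running it twice is harmless. `apps/v1` requires `spec.selector`, which the Grafana Deployment already defines; `grep -l 'apps/v1beta2' *.yaml` lists any other files that might need the same change.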

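Checking and downloading the kube-prometheus image versions

The images are hosted on registries outside China (quay.io, k8s.gcr.io, Docker Hub), so pulls often fail. To download them quickly, we pull copies from a domestic mirror and then re-tag them with the image names used in the manifests.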

Viewing the images kube-prometheus uses

```
# Current working directory
[root@k8s-master manifests]# pwd
/root/k8s_practice/prometheus/kube-prometheus-0.2.0/manifests
[root@k8s-master manifests]#
# All image references
[root@k8s-master manifests]# grep -riE 'quay.io|k8s.gcr|grafana/' *
0prometheus-operator-deployment.yaml: - --config-reloader-image=quay.io/coreos/configmap-reload:v0.0.1
0prometheus-operator-deployment.yaml: - --prometheus-config-reloader=quay.io/coreos/prometheus-config-reloader:v0.33.0
0prometheus-operator-deployment.yaml: image: quay.io/coreos/prometheus-operator:v0.33.0
alertmanager-alertmanager.yaml: baseImage: quay.io/prometheus/alertmanager
grafana-deployment.yaml: - image: grafana/grafana:6.2.2
kube-state-metrics-deployment.yaml: image: quay.io/coreos/kube-rbac-proxy:v0.4.1
kube-state-metrics-deployment.yaml: image: quay.io/coreos/kube-rbac-proxy:v0.4.1
kube-state-metrics-deployment.yaml: image: quay.io/coreos/kube-state-metrics:v1.7.2
kube-state-metrics-deployment.yaml: image: k8s.gcr.io/addon-resizer:1.8.4
node-exporter-daemonset.yaml: image: quay.io/prometheus/node-exporter:v0.18.1
node-exporter-daemonset.yaml: image: quay.io/coreos/kube-rbac-proxy:v0.4.1
prometheus-adapter-deployment.yaml: image: quay.io/coreos/k8s-prometheus-adapter-amd64:v0.4.1
prometheus-prometheus.yaml: baseImage: quay.io/prometheus/prometheus
##### The output above does not show the image versions for alertmanager and prometheus
```

```
### Get the alertmanager image version
[root@k8s-master manifests]# cat alertmanager-alertmanager.yaml
apiVersion: monitoring.coreos.com/v1
kind: Alertmanager
metadata:
  labels:
    alertmanager: main
  name: main
  namespace: monitoring
spec:
  baseImage: quay.io/prometheus/alertmanager
  nodeSelector:
    kubernetes.io/os: linux
  replicas: 3
  securityContext:
    fsGroup: 2000
    runAsNonRoot: true
    runAsUser: 1000
  serviceAccountName: alertmanager-main
  version: v0.18.0
##### So the alertmanager image version is v0.18.0
```

```
### Get the prometheus image version
[root@k8s-master manifests]# cat prometheus-prometheus.yaml
apiVersion: monitoring.coreos.com/v1
kind: Prometheus
metadata:
  labels:
    prometheus: k8s
  name: k8s
  namespace: monitoring
spec:
  alerting:
    alertmanagers:
    - name: alertmanager-main
      namespace: monitoring
      port: web
  baseImage: quay.io/prometheus/prometheus
  nodeSelector:
    kubernetes.io/os: linux
  podMonitorSelector: {}
  replicas: 2
  resources:
    requests:
      memory: 400Mi
  ruleSelector:
    matchLabels:
      prometheus: k8s
      role: alert-rules
  securityContext:
    fsGroup: 2000
    runAsNonRoot: true
    runAsUser: 1000
  serviceAccountName: prometheus-k8s
  serviceMonitorNamespaceSelector: {}
  serviceMonitorSelector: {}
  version: v2.11.0
##### So the prometheus image version is v2.11.0
```
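Because the Alertmanager and Prometheus custom resources store the repository (`baseImage`) and the tag (`version`) separately, the image the operator pulls is presumably `baseImage:version`, which matches the names used in the image download step. A small sketch that extracts it with `awk`, assuming each key appears once as a simple `key: value` line, as in the two files above; the function name is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: print "<baseImage>:<version>" from an Alertmanager/Prometheus manifest.
# cr_image is a hypothetical helper; it assumes simple "key: value" lines.
cr_image() {
  awk '
    $1 == "baseImage:" { base = $2 }   # e.g. quay.io/prometheus/alertmanager
    $1 == "version:"   { ver = $2 }    # e.g. v0.18.0 ("apiVersion:" does not match)
    END { if (base != "" && ver != "") print base ":" ver }
  ' "$1"
}
```

With the files shown above, `cr_image alertmanager-alertmanager.yaml` prints `quay.io/prometheus/alertmanager:v0.18.0` and `cr_image prometheus-prometheus.yaml` prints `quay.io/prometheus/prometheus:v2.11.0`.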

Run the script to download and re-tag the images [on every machine in the cluster]

```
[root@k8s-master software]# vim download_prometheus_image.sh
#!/bin/bash
##### Run on the master node AND on every worker node [all machines]
# bash (not sh) is required because the script uses an array

# Load environment variables
. /etc/profile
. /etc/bashrc

###############################################
# Pull the images Prometheus needs from a domestic mirror and re-tag them
src_registry="registry.cn-beijing.aliyuncs.com/cloud_registry"

# Images that the manifests reference under quay.io/coreos
images=(
  kube-rbac-proxy:v0.4.1
  kube-state-metrics:v1.7.2
  k8s-prometheus-adapter-amd64:v0.4.1
  configmap-reload:v0.0.1
  prometheus-config-reloader:v0.33.0
  prometheus-operator:v0.33.0
)

# Loop over the images
for img in "${images[@]}"; do
  # Pull from the domestic mirror, re-tag with the name the manifests expect,
  # then remove the mirror tag; print OK only if all three steps succeeded
  docker pull "${src_registry}/${img}" \
    && docker tag "${src_registry}/${img}" "quay.io/coreos/${img}" \
    && docker rmi "${src_registry}/${img}" \
    && echo "======== ${img} download OK ========"
done

##### Other images (different target repositories)
image_name="alertmanager:v0.18.0"
docker pull "${src_registry}/${image_name}" && docker tag "${src_registry}/${image_name}" "quay.io/prometheus/${image_name}" && docker rmi "${src_registry}/${image_name}" && echo "======== ${image_name} download OK ========"

image_name="node-exporter:v0.18.1"
docker pull "${src_registry}/${image_name}" && docker tag "${src_registry}/${image_name}" "quay.io/prometheus/${image_name}" && docker rmi "${src_registry}/${image_name}" && echo "======== ${image_name} download OK ========"

image_name="prometheus:v2.11.0"
docker pull "${src_registry}/${image_name}" && docker tag "${src_registry}/${image_name}" "quay.io/prometheus/${image_name}" && docker rmi "${src_registry}/${image_name}" && echo "======== ${image_name} download OK ========"

image_name="grafana:6.2.2"
docker pull "${src_registry}/${image_name}" && docker tag "${src_registry}/${image_name}" "grafana/${image_name}" && docker rmi "${src_registry}/${image_name}" && echo "======== ${image_name} download OK ========"

image_name="addon-resizer:1.8.4"
docker pull "${src_registry}/${image_name}" && docker tag "${src_registry}/${image_name}" "k8s.gcr.io/${image_name}" && docker rmi "${src_registry}/${image_name}" && echo "======== ${image_name} download OK ========"

echo "********** prometheus docker images OK! **********"
```

After running the script, the following images are present:

```
[root@k8s-master software]# docker images | grep 'quay.io/coreos'
quay.io/coreos/kube-rbac-proxy v0.4.1 a9d1a87e4379 6 days ago 41.3MB
quay.io/coreos/flannel v0.12.0-amd64 4e9f801d2217 4 months ago 52.8MB ## already present beforehand
quay.io/coreos/kube-state-metrics v1.7.2 3fd71b84d250 6 months ago 33.1MB
quay.io/coreos/prometheus-config-reloader v0.33.0 64751efb2200 8 months ago 17.6MB
quay.io/coreos/prometheus-operator v0.33.0 8f2f814d33e1 8 months ago 42.1MB
quay.io/coreos/k8s-prometheus-adapter-amd64 v0.4.1 5f0fc84e586c 15 months ago 60.7MB
quay.io/coreos/configmap-reload v0.0.1 3129a2ca29d7 3 years ago 4.79MB
[root@k8s-master software]#
[root@k8s-master software]# docker images | grep 'quay.io/prometheus'
quay.io/prometheus/node-exporter v0.18.1 d7707e6f5e95 11 days ago 22.9MB
quay.io/prometheus/prometheus v2.11.0 de242295e225 2 months ago 126MB
quay.io/prometheus/alertmanager v0.18.0 30594e96cbe8 10 months ago 51.9MB
[root@k8s-master software]#
[root@k8s-master software]# docker images | grep 'grafana'
grafana/grafana 6.2.2 a532fe3b344a 9 months ago 248MB
[root@k8s-node01 software]#
[root@k8s-node01 software]# docker images | grep 'addon-resizer'
k8s.gcr.io/addon-resizer 1.8.4 5ec630648120 20 months ago 38.3MB
```
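Because the script has to run on every node, it helps to confirm on each machine that nothing was missed. A sketch that compares a required list against the output of `docker images --format '{{.Repository}}:{{.Tag}}'`; the function name and the `REQUIRED_IMAGES` variable are hypothetical, and the list is copied from the grep and manifest output above.

```bash
#!/usr/bin/env bash
# Sketch: print every required image that is not present locally.
# missing_images and REQUIRED_IMAGES are hypothetical names.
# $1: newline-separated required images (repository:tag)
# $2: newline-separated images present locally, e.g. from
#     docker images --format '{{.Repository}}:{{.Tag}}'
missing_images() {
  local required="$1" present="$2" img
  while IFS= read -r img; do
    [ -z "$img" ] && continue
    # -F fixed string, -x whole-line match, -q quiet
    printf '%s\n' "$present" | grep -Fxq -- "$img" || printf '%s\n' "$img"
  done <<< "$required"
}

REQUIRED_IMAGES='quay.io/coreos/kube-rbac-proxy:v0.4.1
quay.io/coreos/kube-state-metrics:v1.7.2
quay.io/coreos/k8s-prometheus-adapter-amd64:v0.4.1
quay.io/coreos/configmap-reload:v0.0.1
quay.io/coreos/prometheus-config-reloader:v0.33.0
quay.io/coreos/prometheus-operator:v0.33.0
quay.io/prometheus/alertmanager:v0.18.0
quay.io/prometheus/node-exporter:v0.18.1
quay.io/prometheus/prometheus:v2.11.0
grafana/grafana:6.2.2
k8s.gcr.io/addon-resizer:1.8.4'
```

On each node, `missing_images "$REQUIRED_IMAGES" "$(docker images --format '{{.Repository}}:{{.Tag}}')"` prints nothing when every image is in place.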

Starting kube-prometheus

Start Prometheus

```
[root@k8s-master kube-prometheus-0.2.0]# pwd
/root/k8s_practice/prometheus/kube-prometheus-0.2.0
[root@k8s-master kube-prometheus-0.2.0]#
### If errors occur, run the command again, once or several times
[root@k8s-master kube-prometheus-0.2.0]# kubectl apply -f manifests/
```
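Since the first `kubectl apply` can fail while the custom resource types are still being registered, the "run it again" advice can be scripted. A sketch with a bounded number of attempts and a pause between them; the function name, the default attempt count and delay, and the `KUBECTL` override (there only so the function can be exercised without a cluster) are assumptions, not part of kube-prometheus.

```bash
#!/usr/bin/env bash
# Sketch: run "kubectl apply -f DIR" until it succeeds or attempts run out.
# apply_with_retry and the KUBECTL override are hypothetical.
# Usage: apply_with_retry DIR [ATTEMPTS] [DELAY_SECONDS]
apply_with_retry() {
  local dir="$1" attempts="${2:-3}" delay="${3:-10}" i
  for ((i = 1; i <= attempts; i++)); do
    if "${KUBECTL:-kubectl}" apply -f "$dir"; then
      return 0
    fi
    echo "apply attempt ${i}/${attempts} failed" >&2
    # Wait before the next attempt, but not after the last one
    if ((i < attempts)); then
      sleep "$delay"
    fi
  done
  return 1
}
```

Usage, from the `kube-prometheus-0.2.0` directory: `apply_with_retry manifests/ 5 10`.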

Checking svc and pod status after startup

```
[root@k8s-master ~]# kubectl top node
NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%
k8s-master 152m 7% 1311Mi 35%
k8s-node01 100m 5% 928Mi 54%
k8s-node02 93m 4% 979Mi 56%
[root@k8s-master ~]#
[root@k8s-master ~]# kubectl get svc -n monitoring
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
alertmanager-main NodePort 10.97.249.249 <none> 9093:30300/TCP 7m21s
alertmanager-operated ClusterIP None <none> 9093/TCP,9094/TCP,9094/UDP 7m13s
grafana NodePort 10.101.183.103 <none> 3000:30100/TCP 7m20s
kube-state-metrics ClusterIP None <none> 8443/TCP,9443/TCP 7m20s
node-exporter ClusterIP None <none> 9100/TCP 7m20s
prometheus-adapter ClusterIP 10.105.174.86 <none> 443/TCP 7m19s
prometheus-k8s NodePort 10.109.179.233 <none> 9090:30200/TCP 7m19s
prometheus-operated ClusterIP None <none> 9090/TCP 7m3s
prometheus-operator ClusterIP None <none> 8080/TCP 7m21s
[root@k8s-master ~]#
[root@k8s-master ~]# kubectl get pod -n monitoring -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
alertmanager-main-0 2/2 Running 0 2m11s 10.244.4.164 k8s-node01 <none> <none>
alertmanager-main-1 2/2 Running 0 2m11s 10.244.2.225 k8s-node02 <none> <none>
alertmanager-main-2 2/2 Running 0 2m11s 10.244.4.163 k8s-node01 <none> <none>
grafana-5cd56df4cd-6d75r 1/1 Running 0 29s 10.244.2.227 k8s-node02 <none> <none>
kube-state-metrics-7d4bb66d8d-gx7w4 4/4 Running 0 2m18s 10.244.2.223 k8s-node02 <none> <none>
node-exporter-pl47v 2/2 Running 0 2m17s 172.16.1.110 k8s-master <none> <none>
node-exporter-tmmbw 2/2 Running 0 2m17s 172.16.1.111 k8s-node01 <none> <none>
node-exporter-w8wd9 2/2 Running 0 2m17s 172.16.1.112 k8s-node02 <none> <none>
prometheus-adapter-c676d8764-phj69 1/1 Running 0 2m17s 10.244.2.224 k8s-node02 <none> <none>
prometheus-k8s-0 3/3 Running 1 2m1s 10.244.2.226 k8s-node02 <none> <none>
prometheus-k8s-1 3/3 Running 0 2m1s 10.244.4.165 k8s-node01 <none> <none>
prometheus-operator-7559d67ff-lk86l 1/1 Running 0 2m18s 10.244.4.162 k8s-node01 <none> <none>
```
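Reading the READY and STATUS columns by eye gets tedious as the pod list grows. A sketch that reads `kubectl get pod` output on stdin and prints the pods whose ready count differs from their container count or whose status is not Running; the function name is hypothetical, and pods that legitimately finish (status Completed) would also be listed. For a blocking check, `kubectl wait --for=condition=Ready pods --all -n monitoring --timeout=300s` is the built-in alternative.

```bash
#!/usr/bin/env bash
# Sketch: list pods that are not fully ready, from "kubectl get pod" output.
# not_ready_pods is a hypothetical helper; it reads the table on stdin.
# Column 2 is READY ("ready/total"), column 3 is STATUS; line 1 is the header.
not_ready_pods() {
  awk 'NR > 1 {
    split($2, r, "/")
    if ($3 != "Running" || r[1] != r[2]) print $1
  }'
}
```

Usage: `kubectl get pod -n monitoring | not_ready_pods` prints nothing once every pod in the listing above is healthy.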

Accessing kube-prometheus

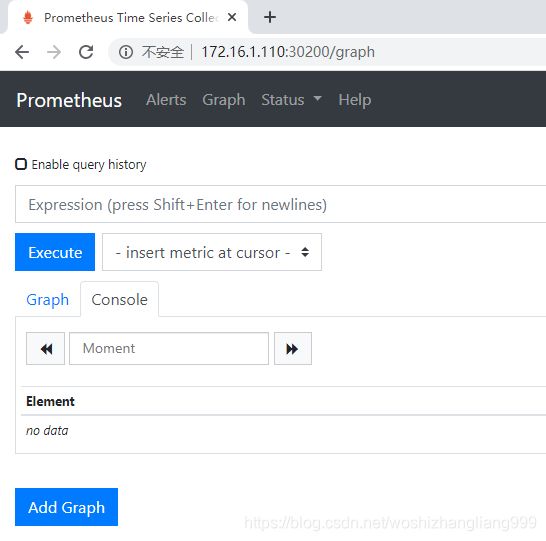

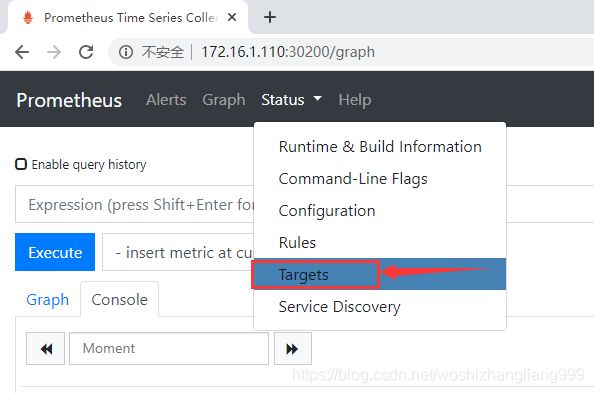

Accessing prometheus-service

URL:

http://172.16.1.110:30200/

The following page shows that Prometheus has successfully connected to the Kubernetes API server:

http://172.16.1.110:30200/targets

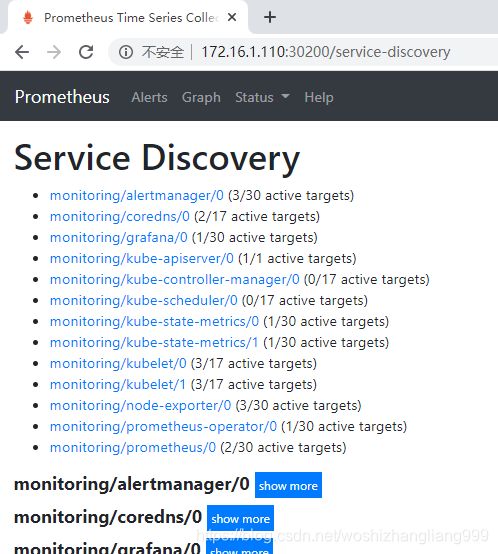

Viewing service discovery

http://172.16.1.110:30200/service-discovery

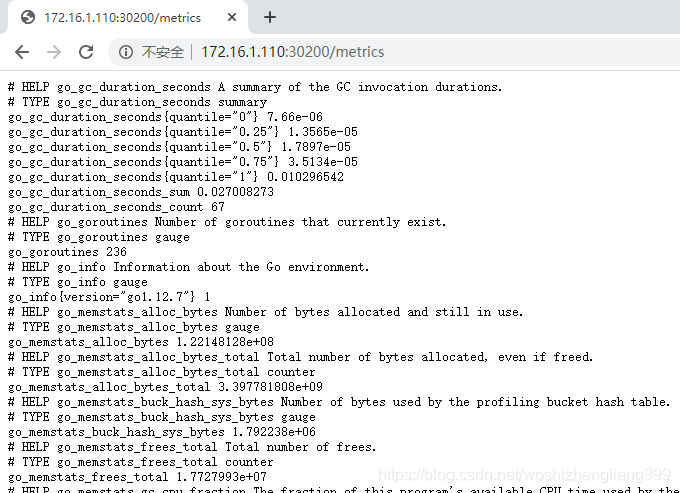

Viewing Prometheus's own metrics

http://172.16.1.110:30200/metrics

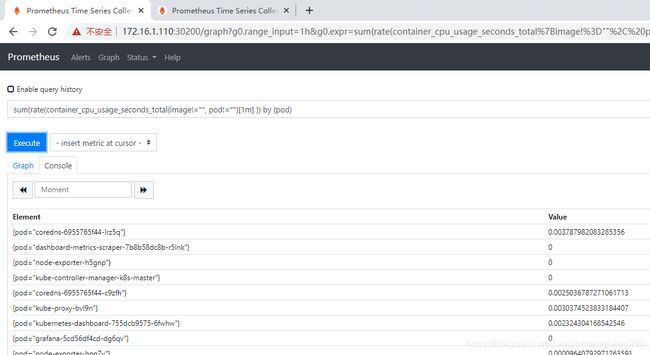

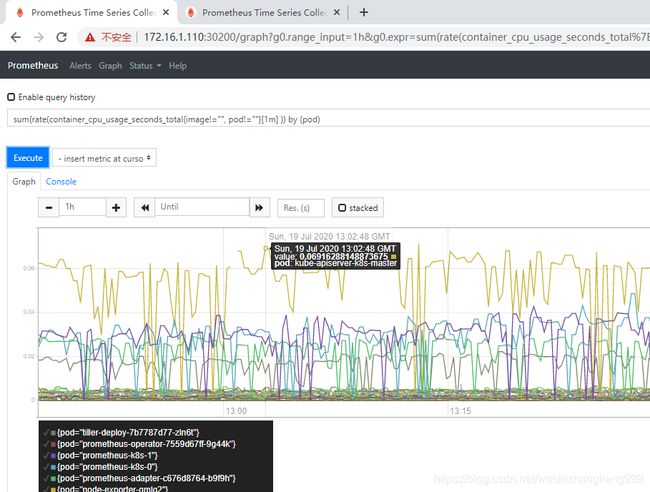

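The Prometheus web UI provides basic querying. For example, to see the CPU usage of every pod in the cluster (in cores, averaged over the last minute), use the following query:

```
# Querying container_cpu_usage_seconds_total on its own shows which labels are available
sum(rate(container_cpu_usage_seconds_total{image!="", pod!=""}[1m])) by (pod)
```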
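The same PromQL expression can be sent to Prometheus's HTTP API instead of the web UI. A sketch using the `/api/v1/query` instant-query endpoint with `curl -G --data-urlencode`, which takes care of escaping the braces and quotes; the base URL is the NodePort address used in this article, and the function name and `CURL` override (there only so the function can be tested without a server) are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: run an instant PromQL query through the Prometheus HTTP API.
# prom_query and the CURL override are hypothetical.
# Usage: prom_query BASE_URL 'PROMQL'
prom_query() {
  local base="$1" query="$2"
  # -G sends the --data-urlencode data as a URL-encoded query string on a GET
  "${CURL:-curl}" -fsS -G "${base%/}/api/v1/query" --data-urlencode "query=${query}"
}
```

Usage: `prom_query http://172.16.1.110:30200 'sum(rate(container_cpu_usage_seconds_total{image!="", pod!=""}[1m])) by (pod)'` returns a JSON document whose `data.result` array holds one entry per pod.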

Table view

Graph view

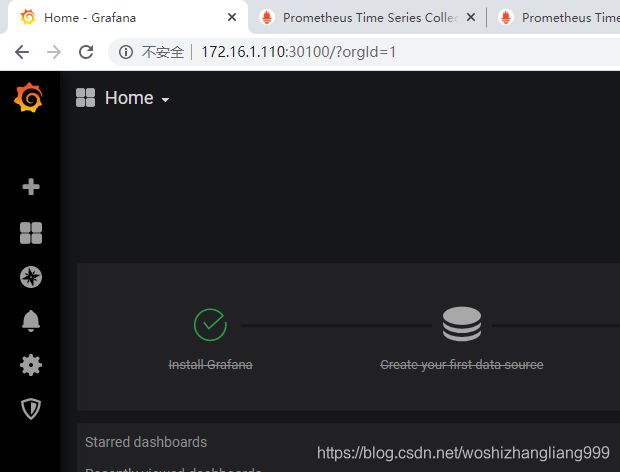

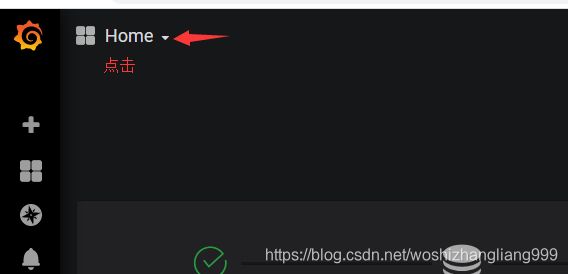

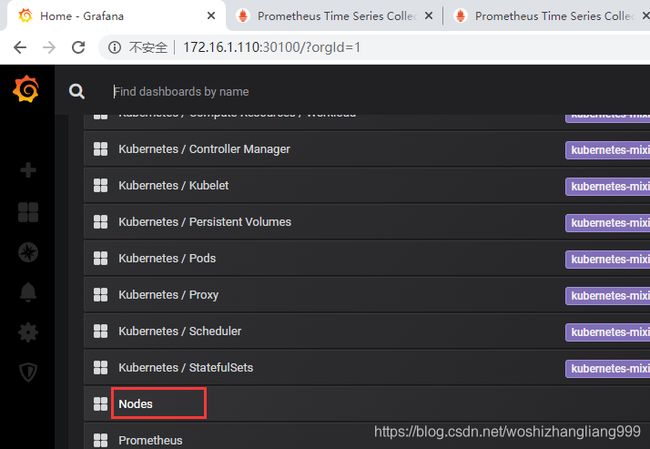

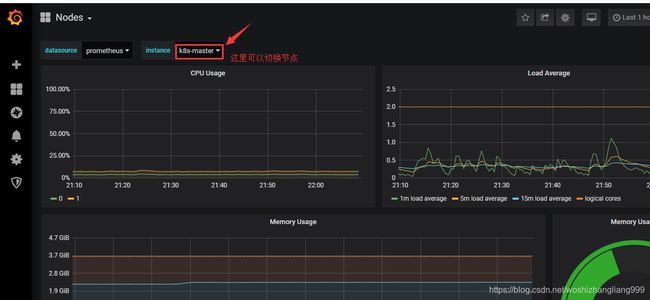

Accessing grafana-service

URL:

http://172.16.1.110:30100/

The default username and password for the first login are admin/admin.

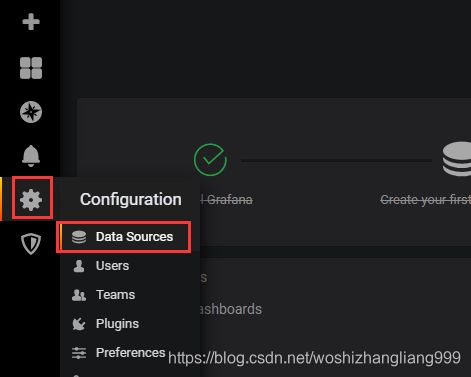

Add a data source

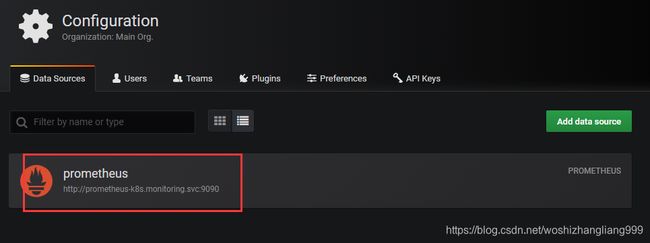

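This opens the following page.

As shown, the data source has already been added by default.

Click into it, scroll to the bottom, and click the Test button to check that the data source works.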

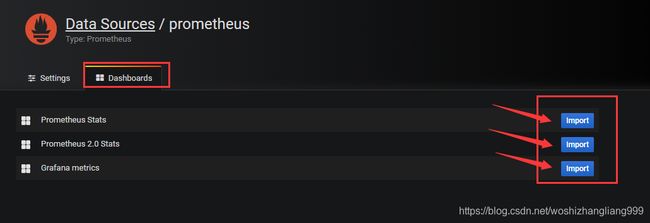
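The data source can also be inspected from the command line through Grafana's HTTP API, which lists configured data sources at `/api/datasources` using basic authentication. This is a sketch: the credentials default to the admin/admin mentioned above and must be replaced if you changed the password at first login, and the function name and `CURL` override (there only for testing without a server) are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: list Grafana data sources via the HTTP API (JSON output).
# grafana_datasources and the CURL override are hypothetical.
# Usage: grafana_datasources BASE_URL [USER] [PASSWORD]
grafana_datasources() {
  local base="$1" user="${2:-admin}" pass="${3:-admin}"
  "${CURL:-curl}" -fsS -u "${user}:${pass}" "${base%/}/api/datasources"
}
```

Usage: `grafana_datasources http://172.16.1.110:30100` should return a JSON array that includes the Prometheus data source kube-prometheus provisions.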

After that, you can import some dashboard templates.

Viewing the data graphically

Troubleshooting

If kubectl apply -f manifests/ prints messages like the following:

```
unable to recognize "manifests/alertmanager-alertmanager.yaml": no matches for kind "Alertmanager" in version "monitoring.coreos.com/v1"
unable to recognize "manifests/alertmanager-serviceMonitor.yaml": no matches for kind "ServiceMonitor" in version "monitoring.coreos.com/v1"
unable to recognize "manifests/grafana-serviceMonitor.yaml": no matches for kind "ServiceMonitor" in version "monitoring.coreos.com/v1"
unable to recognize "manifests/kube-state-metrics-serviceMonitor.yaml": no matches for kind "ServiceMonitor" in version "monitoring.coreos.com/v1"
unable to recognize "manifests/node-exporter-serviceMonitor.yaml": no matches for kind "ServiceMonitor" in version "monitoring.coreos.com/v1"
unable to recognize "manifests/prometheus-operator-serviceMonitor.yaml": no matches for kind "ServiceMonitor" in version "monitoring.coreos.com/v1"
unable to recognize "manifests/prometheus-prometheus.yaml": no matches for kind "Prometheus" in version "monitoring.coreos.com/v1"
unable to recognize "manifests/prometheus-rules.yaml": no matches for kind "PrometheusRule" in version "monitoring.coreos.com/v1"
unable to recognize "manifests/prometheus-serviceMonitor.yaml": no matches for kind "ServiceMonitor" in version "monitoring.coreos.com/v1"
unable to recognize "manifests/prometheus-serviceMonitorApiserver.yaml": no matches for kind "ServiceMonitor" in version "monitoring.coreos.com/v1"
unable to recognize "manifests/prometheus-serviceMonitorCoreDNS.yaml": no matches for kind "ServiceMonitor" in version "monitoring.coreos.com/v1"
unable to recognize "manifests/prometheus-serviceMonitorKubeControllerManager.yaml": no matches for kind "ServiceMonitor" in version "monitoring.coreos.com/v1"
unable to recognize "manifests/prometheus-serviceMonitorKubeScheduler.yaml": no matches for kind "ServiceMonitor" in version "monitoring.coreos.com/v1"
unable to recognize "manifests/prometheus-serviceMonitorKubelet.yaml": no matches for kind "ServiceMonitor" in version "monitoring.coreos.com/v1"
```

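then simply run kubectl apply -f manifests/ again. The errors come from a dependency between the manifests: the Alertmanager, Prometheus, ServiceMonitor, and PrometheusRule objects can only be created once the CustomResourceDefinitions that define those kinds are registered with the API server, and during the first run that has not happened yet.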

However, if you use kube-prometheus v0.3.0, v0.4.0, or v0.5.0 and the messages above keep appearing no matter how many times you rerun kubectl apply -f manifests/, the cause is not yet clear.
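One possible explanation, offered as an assumption rather than something verified here: at least some of those later releases moved the CustomResourceDefinitions into a separate `manifests/setup` directory, and their README switched to `kubectl create`, waiting until the `ServiceMonitor` API is served before creating the rest. `kubectl apply` also stores the whole object in the `kubectl.kubernetes.io/last-applied-configuration` annotation, and a very large CRD can exceed the annotation size limit, in which case the CRD is never created and the "no matches for kind" errors keep repeating. Check the README of the release you use; the sketch below only illustrates that ordering, and its function name, `KUBECTL` override, and `WAIT_SECONDS` variable are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: create the CRDs first, wait until the ServiceMonitor API is served,
# then create everything else. Assumes a manifests/setup directory, which
# release-0.2 (used in this article) does not have.
# install_crds_first, KUBECTL and WAIT_SECONDS are hypothetical.
install_crds_first() {
  local root="$1" k="${KUBECTL:-kubectl}"
  "$k" create -f "${root}/manifests/setup" || return 1
  # Poll until the new kind can be listed; no timeout, interrupt with Ctrl-C
  until "$k" get servicemonitors --all-namespaces >/dev/null 2>&1; do
    sleep "${WAIT_SECONDS:-1}"
  done
  "$k" create -f "${root}/manifests/"
}
```

Usage, from the parent of the extracted release directory: `install_crds_first ./kube-prometheus-0.5.0` (directory name illustrative).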

Done!
